Building a Distributed Crawler in 21 Days: Downloading Autohome Images (Part 9)

9.1. Downloading Autohome images

Create the project

    scrapy startproject bmx
    cd bmx
    scrapy genspider bmx5 "car.autohome.com.cn"

bmx5.py

    # -*- coding: utf-8 -*-
    import scrapy

    from bmx.items import BmxItem


    class Bmx5Spider(scrapy.Spider):
        name = 'bmx5'
        allowed_domains = ['car.autohome.com.cn']
        start_urls = ['https://car.autohome.com.cn/pic/series/159.html']

        def parse(self, response):
            # Skip the first "uibox" match; each remaining block is one image category.
            uiboxs = response.xpath("//div[@class='uibox']")[1:]
            for uibox in uiboxs:
                category = uibox.xpath(".//div[@class='uibox-title']/a/text()").get()
                urls = uibox.xpath(".//ul/li/a/img/@src").getall()
                # for url in urls:
                #     url = "https:" + url
                #     print(url)
                # Turn each src value into an absolute URL.
                urls = list(map(lambda url: response.urljoin(url), urls))
                items = BmxItem(category=category, image_urls=urls)
                yield items
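
The spider relies on `response.urljoin` rather than prepending `"https:"` by hand. As a rough illustration of what that resolution does, here is a standalone sketch built on the standard library's `urllib.parse.urljoin`, which as far as I know follows the same rules against a base URL. The image host and file names are invented for this example and are not taken from the Autohome page.

```python
from urllib.parse import urljoin

# Page URL from the spider's start_urls.
BASE_URL = "https://car.autohome.com.cn/pic/series/159.html"

# Hypothetical src values, made up for illustration only.
protocol_relative = "//img.example.com/cars/1.jpg"
root_relative = "/static/cars/2.jpg"
absolute = "https://img.example.com/cars/3.jpg"

# Resolve each src against the page URL.
resolved = [
    urljoin(BASE_URL, src)
    for src in (protocol_relative, root_relative, absolute)
]

for url in resolved:
    print(url)
```

Joining handles protocol-relative, root-relative, and already absolute values alike, whereas string concatenation only works for the first kind.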

items.py

    # -*- coding: utf-8 -*-
    import scrapy


    class BmxItem(scrapy.Item):
        category = scrapy.Field()
        # Fields used for saving images
        image_urls = scrapy.Field()
        images = scrapy.Field()

pipelines.py

Customize the path where images are saved

    # -*- coding: utf-8 -*-
    import os

    from scrapy.pipelines.images import ImagesPipeline

    from bmx import settings


    class BMXImagesPipeline(ImagesPipeline):

        def get_media_requests(self, item, info):
            # Called before the download requests are sent.
            # Attach the item to each request so file_path() can read its category.
            request_objs = super(BMXImagesPipeline, self).get_media_requests(item, info)
            for request_obj in request_objs:
                request_obj.item = item
            return request_objs

        def file_path(self, request, response=None, info=None):
            # Called when an image is about to be saved; returns the path to store it at.
            path = super(BMXImagesPipeline, self).file_path(request, response, info)
            category = request.item.get('category')
            image_store = settings.IMAGES_STORE
            category_path = os.path.join(image_store, category)
            if not os.path.exists(category_path):
                os.makedirs(category_path)
            # The default path looks like "full/<hash>.jpg"; drop the "full/" prefix.
            image_name = path.replace("full/", "")
            image_path = os.path.join(category_path, image_name)
            return image_path
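
To make the path rewriting in `file_path` easier to follow, the sketch below reproduces it without Scrapy. It assumes the default image pipeline names each file after the SHA-1 hash of its URL under a `full/` directory, which is my understanding of the default behaviour. `default_image_path` and `category_image_path` are hypothetical helpers written for this example, and the URL and category name are invented.

```python
import hashlib
import posixpath


def default_image_path(url):
    # Assumed default layout: "full/<sha1 of the URL>.jpg".
    image_guid = hashlib.sha1(url.encode("utf-8")).hexdigest()
    return "full/{}.jpg".format(image_guid)


def category_image_path(url, category):
    # Keep the hashed file name but store it under the category directory.
    image_name = posixpath.basename(default_image_path(url))
    return posixpath.join(category, image_name)


url = "https://img.example.com/cars/1.jpg"  # hypothetical image URL
print(default_image_path(url))
print(category_image_path(url, "exterior"))  # hypothetical category name
```

The pipeline above does the same substitution, then joins the result onto `IMAGES_STORE` so that each category gets its own folder.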

settings.py

    import os

    ROBOTSTXT_OBEY = False

    DOWNLOAD_DELAY = 1

    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36',
    }

    # Use the custom pipeline
    ITEM_PIPELINES = {
        # 'bmx.pipelines.BmxPipeline': 300,
        'bmx.pipelines.BMXImagesPipeline': 1,
    }

    # Directory where downloaded images are stored
    IMAGES_STORE = os.path.join(os.path.dirname(os.path.dirname(__file__)), 'images')
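
The nested `os.path.dirname` calls in `IMAGES_STORE` climb from `settings.py` to the project root. The short sketch below traces that with a made-up file location standing in for `__file__`; the path is purely hypothetical, and `posixpath` is used so the output looks the same on every platform.

```python
import posixpath

# Hypothetical location of settings.py, standing in for __file__.
settings_file = "/home/user/bmx/bmx/settings.py"

# First dirname: the Python package directory that holds settings.py.
package_dir = posixpath.dirname(settings_file)
# Second dirname: the project root created by "scrapy startproject".
project_root = posixpath.dirname(package_dir)
# Images end up in an "images" folder next to scrapy.cfg.
images_store = posixpath.join(project_root, "images")

print(package_dir)
print(project_root)
print(images_store)
```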

start.py

    from scrapy import cmdline

    cmdline.execute("scrapy crawl bmx5".split())

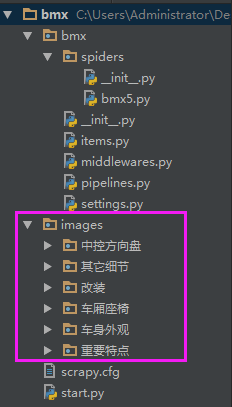

Result

Posted on 2018-08-05 17:00 by zhang_derek