kubeadm部署多master高可用kubernetes集群

高可用简介

kubernetes高可用部署参考:

https://kubernetes.io/docs/setup/independent/high-availability/

https://github.com/kubernetes-sigs/kubespray

https://github.com/wise2c-devops/breeze

https://github.com/cookeem/kubeadm-ha

一、拓扑选择

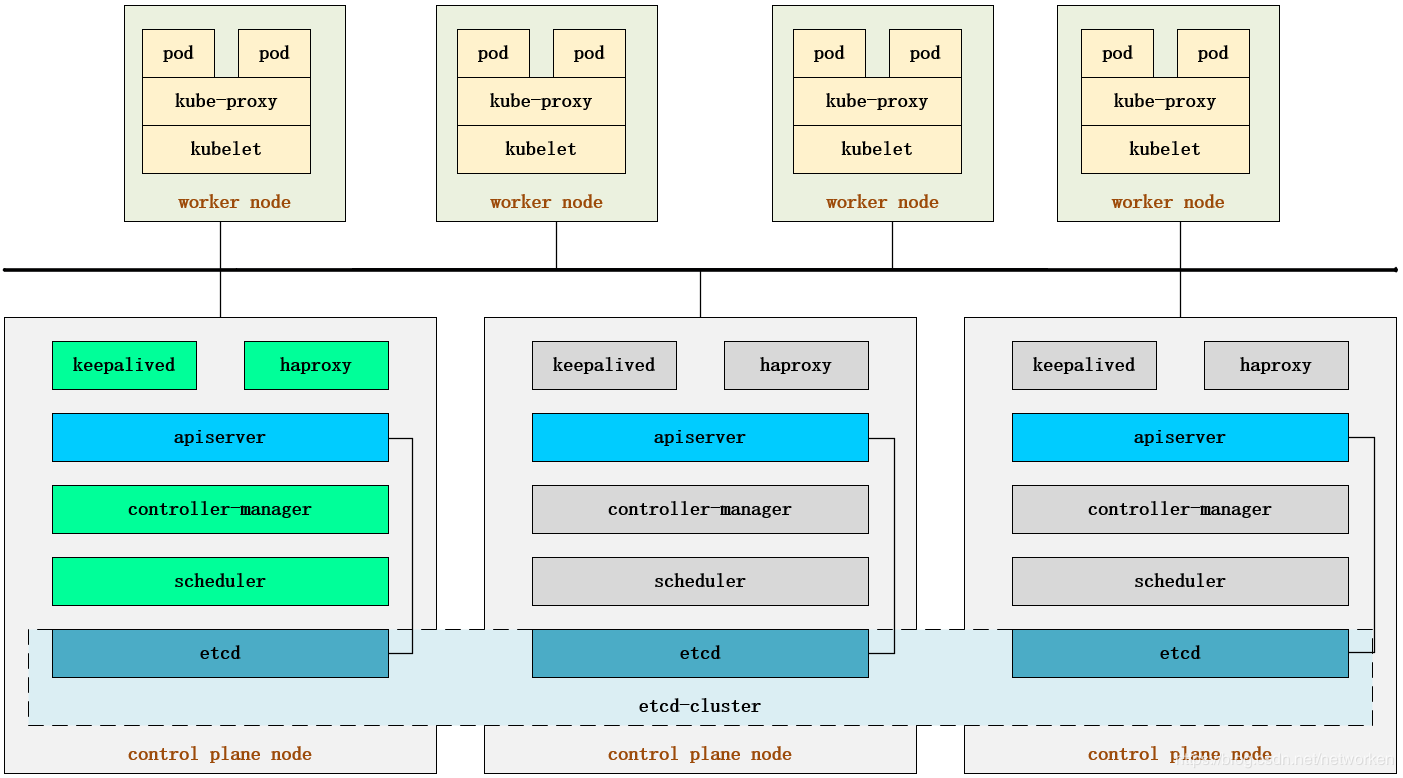

配置高可用(HA)Kubernetes集群,有以下两种可选的etcd拓扑:

- 集群master节点与etcd节点共存,etcd也运行在控制平面节点上

- 使用外部etcd节点,etcd节点与master在不同节点上运行

1、堆叠的etcd拓扑

堆叠HA集群是这样的拓扑,其中etcd提供的分布式数据存储集群与由kubeamd管理的运行master组件的集群节点堆叠部署。

每个master节点运行kube-apiserver,kube-scheduler和kube-controller-manager的一个实例。kube-apiserver使用负载平衡器暴露给工作节点。

每个master节点创建一个本地etcd成员,该etcd成员仅与本节点kube-apiserver通信。这同样适用于本地kube-controller-manager 和kube-scheduler实例。

该拓扑将master和etcd成员耦合在相同节点上。比设置具有外部etcd节点的集群更简单,并且更易于管理复制。

但是,堆叠集群存在耦合失败的风险。如果一个节点发生故障,则etcd成员和master实例都将丢失,并且冗余会受到影响。您可以通过添加更多master节点来降低此风险。

因此,您应该为HA群集运行至少三个堆叠的master节点。

这是kubeadm中的默认拓扑。使用kubeadm init和kubeadm join --experimental-control-plane命令时,在master节点上自动创建本地etcd成员。

2、外部etcd拓扑

具有外部etcd的HA集群是这样的拓扑,其中由etcd提供的分布式数据存储集群部署在运行master组件的节点形成的集群外部。

像堆叠ETCD拓扑结构,在外部ETCD拓扑中的每个master节点运行一个kube-apiserver,kube-scheduler和kube-controller-manager实例。并且kube-apiserver使用负载平衡器暴露给工作节点。但是,etcd成员在不同的主机上运行,每个etcd主机与kube-apiserver每个master节点进行通信。

此拓扑将master节点和etcd成员分离。因此,它提供了HA设置,其中丢失master实例或etcd成员具有较小的影响并且不像堆叠的HA拓扑那样影响集群冗余。

但是,此拓扑需要两倍于堆叠HA拓扑的主机数。具有此拓扑的HA群集至少需要三个用于master节点的主机和三个用于etcd节点的主机。

二、初始化环境

使用kubeadm部署高可用性Kubernetes集群的两种不同方法:

- 使用堆叠master节点。这种方法需要较少的基础设施,etcd成员和master节点位于同一位置。

- 使用外部etcd集群。这种方法需要更多的基础设施, master节点和etcd成员是分开的。

在继续之前,您应该仔细考虑哪种方法最能满足您的应用程序和环境的需求。

部署要求

- 至少3个master节点

- 至少3个worker节点

- 所有节点网络全部互通(公共或私有网络)

- 所有机器都有sudo权限

- 从一个设备到系统中所有节点的SSH访问

- 所有节点安装kubeadm和kubelet,kubectl是可选的。

- 针对外部etcd集群,你需要为etcd成员额外提供3个节点

负载均衡

部署集群前首选需要为kube-apiserver创建负载均衡器。

注意:负载平衡器有许多中配置方式。可以根据你的集群要求选择不同的配置方案。在云环境中,您应将master节点作为负载平衡器TCP转发的后端。此负载平衡器将流量分配到其目标列表中的所有健康master节点。apiserver的运行状况检查是对kube-apiserver侦听的端口的TCP检查(默认值:6443)。

负载均衡器必须能够与apiserver端口上的所有master节点通信。它还必须允许其侦听端口上的传入流量。另外确保负载均衡器的地址始终与kubeadm的ControlPlaneEndpoint地址匹配。

haproxy/nignx+keepalived是其中可选的负载均衡方案,针对公有云环境可以直接使用运营商提供的负载均衡产品。

部署时首先将第一个master节点添加到负载均衡器并使用以下命令测试连接:

# nc -v LOAD_BALANCER_IP PORT

由于apiserver尚未运行,因此预计会出现连接拒绝错误。但是,超时意味着负载均衡器无法与master节点通信。如果发生超时,请重新配置负载平衡器以与master节点通信。将剩余的master节点添加到负载平衡器目标组。

本次使用kubeadm部署kubernetes v1.16.2 高可用集群,包含3个master节点和1个node节点,部署步骤以官方文档为基础,负载均衡部分采用haproxy+keepalived容器方式实现。所有组件版本以kubernetes v1.16.2为准,其他组件以当前最新版本为准。

节点信息:

主机名 IP地址 角色 OS CPU/MEM 磁盘 网卡 k8s-master01 192.168.130.130 master CentOS7.7 2C2G 60G x1 k8s-master02 192.168.130.131 master CentOS7.7 2C2G 60G x1 k8s-master03 192.168.130.132 master CentOS7.7 2C2G 60G x1 K8S VIP 192.168.130.134 – – – - -

以下操作在所有节点执行。

1、配置主机名

hostnamectl set-hostname k8s-master1 hostnamectl set-hostname k8s-master2 hostnamectl set-hostname k8s-master3

2、设置源

cat > /etc/yum.repos.d/docker-ce.repo <<EOF

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/\$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

EOF

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all

yum makecache fast

3、执行初始化安装脚本 chushihua.sh

#!/bin/bash yum install -y vim wget net-tools netstat bash-completion #### 配置host ####

cat >> /etc/hosts << EOF 192.168.130.130 k8s-master1 192.168.130.131 k8s-master2 192.168.130.132 k8s-master3 EOF EOF #### 关闭防火墙和iptables #### sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config && setenforce 0 systemctl stop firewalld && systemctl disable firewalld #### 内核调优 #### cat > /etc/sysctl.d/k8s.conf <<EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 net.ipv4.ip_nonlocal_bind = 1 EOF sysctl -p echo "1" >/proc/sys/net/ipv4/ip_forward #### 关闭swap #### swapoff -a sed -i 's/.*swap.*/#&/' /etc/fstab #### 安装ipvs模块 #### yum install ipset ipvsadm -y cat > /etc/sysconfig/modules/ipvs.modules <<EOF #!/bin/bash modprobe -- ip_vs modprobe -- ip_vs_rr modprobe -- ip_vs_wrr modprobe -- ip_vs_sh modprobe -- nf_conntrack_ipv4 EOF chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4 #### 安装docker #### yum install -y docker-ce-19.03.13-3.el7.x86_64 mkdir -p /data/docker /etc/docker sed -i "s#dockerd#& --graph=/data/docker#" /usr/lib/systemd/system/docker.service cat >/etc/docker/daemon.json <<EOF { "registry-mirrors": ["http://10.2.57.16:5000"], "insecure-registries": ["10.2.57.16:5000","10.2.55.8:5000"] } EOF systemctl daemon-reload && systemctl restart docker.service systemctl enable docker.service #### 安装k8s #### yum install -y kubeadm-1.16.2-0.x86_64 kubelet-1.16.2-0.x86_64 kubectl-1.16.2-0.x86_64

三、安装 haproxy和keepalived

kubernetes master 节点运行如下组件:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

kube-scheduler 和 kube-controller-manager 可以以集群模式运行,通过 leader 选举产生一个工作进程,其它进程处于阻塞模式。

kube-apiserver可以运行多个实例,但对其它组件需要提供统一的访问地址,该地址需要高可用。本次部署使用 keepalived+haproxy 实现 kube-apiserver VIP 高可用和负载均衡。

haproxy+keepalived配置vip,实现了api唯一的访问地址和负载均衡。keepalived 提供 kube-apiserver 对外服务的 VIP。haproxy 监听 VIP,后端连接所有 kube-apiserver 实例,提供健康检查和负载均衡功能。

运行 keepalived 和 haproxy 的节点称为 LB 节点。由于 keepalived 是一主多备运行模式,故至少两个 LB 节点。

本次部署复用 master 节点的三台机器,在所有3个master节点部署haproxy和keepalived组件,以达到更高的可用性,haproxy 监听的端口(6444) 需要与 kube-apiserver的端口 6443 不同,避免冲突。

keepalived 在运行过程中周期检查本机的 haproxy 进程状态,如果检测到 haproxy 进程异常,则触发重新选主的过程,VIP 将飘移到新选出来的主节点,从而实现 VIP 的高可用。

所有组件(如 kubeclt、apiserver、controller-manager、scheduler 等)都通过 VIP +haproxy 监听的6444端口访问 kube-apiserver 服务。

负载均衡架构图如下:

1、部署 haproxy

haproxy提供高可用性,负载均衡,基于TCP和HTTP的代理,支持数以万记的并发连接。https://github.com/haproxy/haproxy

haproxy可安装在主机上,也可使用docker容器实现。文本采用第一种。

安装haproxy

yum install -y haproxy

创建配置文件/etc/haproxy/haproxy.cfg,重要配置以中文注释标出:

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# https://www.haproxy.org/download/2.1/doc/configuration.txt

# https://cbonte.github.io/haproxy-dconv/2.1/configuration.html

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind *:9443 ## 监听9443端口

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server master-192.168.130.130 192.168.130.130:6443 check

server master-192.168.130.131 192.168.130.131:6443 check

server master-192.168.130.132 192.168.130.132:6443 check

分别在三个master节点启动haproxy

systemctl restart haproxy && systemctl status haproxy

systemctl enable haproxy

2、部署keepalived

keepalived是以VRRP(虚拟路由冗余协议)协议为基础, 包括一个master和多个backup。 master劫持vip对外提供服务。master发送组播,backup节点收不到vrrp包时认为master宕机,此时选出剩余优先级最高的节点作为新的master, 劫持vip。keepalived是保证高可用的重要组件。

keepalived可安装在主机上,也可使用docker容器实现。文本采用第一种。(https://github.com/osixia/docker-keepalived)

安装 keepalived

yum install -y keepalived

配置keepalived.conf, 重要部分以中文注释标出:

! Configuration File for keepalived

global_defs {

script_user root

enable_script_security

}

vrrp_script chk_haproxy {

script "/bin/bash -c 'if [[ $(netstat -nlp | grep 9443) ]]; then exit 0; else systemctl stop keepalived;fi'"

interval 2

weight -2

}

vrrp_instance VI_1 {

interface ens33

state BACKUP

virtual_router_id 51

priority 100

nopreempt

unicast_peer {

}

virtual_ipaddress {

192.168.130.133

}

authentication {

auth_type PASS

auth_pass password

}

track_script {

chk_haproxy

}

}

- vrrp_script用于检测haproxy是否正常。如果本机的haproxy挂掉,即使keepalived劫持vip,也无法将流量负载到apiserver。

- 我所查阅的网络教程全部为检测进程, 类似

killall -0 haproxy。这种方式用在主机部署上可以,但容器部署时,在keepalived容器中无法知道另一个容器haproxy的活跃情况,因此我在此处通过检测端口号来判断haproxy的健康状况。 - weight可正可负。为正时检测成功+weight,相当与节点检测失败时本身priority不变,但其他检测成功节点priority增加。为负时检测失败本身priority减少。

- 另外很多文章中没有强调nopreempt参数,意为不可抢占,此时master节点失败后,backup节点也不能接管vip,因此我将此配置删去。

分别在三台节点启动keepalived:

systemctl start keepalived && systemctl status keepalived

systemctl enable keepalived

验证HA状态

也可以在本地执行该nc命令查看结果

[root@k8s-master02 ~]# yum install -y nc [root@k8s-master02 ~]# nc -v -w 2 -z 127.0.0.1 6444 2>&1 | grep 'Connected to' | grep 6444 Ncat: Connected to 127.0.0.1:6444.

四、部署k8s master节点

1、初始化master节点

初始化参考:

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/

https://godoc.org/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta1

2、创建初始化配置文件

可以使用如下命令生成初始化配置文件

kubeadm config print init-defaults > kubeadm-config.yaml

根据实际部署环境修改信息:

apiVersion: kubeadm.k8s.io/v1beta2 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s usages: - signing - authentication kind: InitConfiguration nodeRegistration: criSocket: /var/run/dockershim.sock taints: - effect: NoSchedule key: node-role.kubernetes.io/master --- apiServer: timeoutForControlPlane: 4m0s apiVersion: kubeadm.k8s.io/v1beta2 certificatesDir: /etc/kubernetes/pki clusterName: kubernetes controlPlaneEndpoint: 192.168.130.133:9443 ### 虚拟VIP + haproxy暴露的端口 controllerManager: {} dns: type: CoreDNS etcd: local: dataDir: /data/k8s/etcd ### 修改etcd数据目录,也可以默认 imageRepository: 10.2.55.8:5000/kubernetes ###镜像仓库地址 kind: ClusterConfiguration kubernetesVersion: v1.16.2 ### kubernetes版本 networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 10.244.0.0/16 scheduler: {} --- apiVersion: kubeproxy.config.k8s.io/v1alpha1 kind: KubeProxyConfiguration featureGates: SupportIPVSProxyMode: true mode: ipvs

配置说明:

- controlPlaneEndpoint:为vip地址和haproxy监听端口9443

- imageRepository:由于国内无法访问google镜像仓库k8s.gcr.io,这里指定为私有仓库地址 10.2.55.8:5000/kubernetes

- podSubnet:指定的IP地址段与后续部署的网络插件相匹配,这里需要部署flannel插件,所以配置为10.244.0.0/16

- mode: ipvs:最后追加的配置为开启ipvs模式。

在集群搭建完成后可以使用如下命令查看生效的配置文件:

kubectl -n kube-system get cm kubeadm-config -oyaml

3、执行创建集群

这里追加tee命令将初始化日志输出到kubeadm-init.log中以备用(可选)。

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

该命令指定了初始化时需要使用的配置文件,其中添加–experimental-upload-certs参数可以在后续执行加入节点时自动分发证书文件。

初始化示例

kubeadm init主要执行了以下操作:

- [init]:指定版本进行初始化操作

- [preflight] :初始化前的检查和下载所需要的Docker镜像文件

- [kubelet-start]:生成kubelet的配置文件”/var/lib/kubelet/config.yaml”,没有这个文件kubelet无法启动,所以初始化之前的kubelet实际上启动失败。

- [certificates]:生成Kubernetes使用的证书,存放在/etc/kubernetes/pki目录中。

- [kubeconfig] :生成 KubeConfig 文件,存放在/etc/kubernetes目录中,组件之间通信需要使用对应文件。

- [control-plane]:使用/etc/kubernetes/manifest目录下的YAML文件,安装 Master 组件。

- [etcd]:使用/etc/kubernetes/manifest/etcd.yaml安装Etcd服务。

- [wait-control-plane]:等待control-plan部署的Master组件启动。

- [apiclient]:检查Master组件服务状态。

- [uploadconfig]:更新配置

- [kubelet]:使用configMap配置kubelet。

- [patchnode]:更新CNI信息到Node上,通过注释的方式记录。

- [mark-control-plane]:为当前节点打标签,打了角色Master,和不可调度标签,这样默认就不会使用Master节点来运行Pod。

- [bootstrap-token]:生成token记录下来,后边使用kubeadm join往集群中添加节点时会用到

- [addons]:安装附加组件CoreDNS和kube-proxy

说明:无论是初始化失败或者集群已经完全搭建成功,你都可以直接执行kubeadm reset命令清理集群或节点,然后重新执行kubeadm init或kubeadm join相关操作即可。

4、其他master加入集群

从初始化输出或kubeadm-init.log中获取命令

kubeadm join 192.168.130.133:9443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:9c9303b7f9e2efee5e79de84617642dcb0e62426aa4e82ae75cfd4156a5bf63c --control-plane --certificate-key db64578aa6f76ded25eb0ddcade8cae0326a81e7537539deaedbc8e0b9d4c2d9

依次将k8s-master2和k8s-master3加入到集群中,示例

[root@k8s-master2 ~]# kubeadm join 192.168.130.133:9443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:9c9303b7f9e2efee5e79de84617642dcb0e62426aa4e82ae75cfd4156a5bf63c \

> --control-plane --certificate-key db64578aa6f76ded25eb0ddcade8cae0326a81e7537539deaedbc8e0b9d4c2d9

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.13. Latest validated version: 18.09

[WARNING Hostname]: hostname "k8s-master2" could not be reached

[WARNING Hostname]: hostname "k8s-master2": lookup k8s-master2 on 192.168.130.2:53: server misbehaving

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master2 localhost] and IPs [192.168.130.131 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master2 localhost] and IPs [192.168.130.131 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.130.131 192.168.130.133]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.16" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

{"level":"warn","ts":"2020-12-25T18:55:00.821+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"passthrough:///https://192.168.130.131:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8s-master2 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

5、配置kubectl命令

无论在master节点或node节点,要能够执行kubectl命令必须进行以下配置:

mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config

等集群配置完成后,可以在所有master节点和node节点进行以上配置,以支持kubectl命令。针对node节点复制任意master节点/etc/kubernetes/admin.conf到本地。

查看当前状态

由于未安装网络插件,coredns处于pending状态,node处于notready状态。

6、部署k8s node节点

在三台node上分别执行如下命令:

kubeadm join 192.168.130.133:9443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:9c9303b7f9e2efee5e79de84617642dcb0e62426aa4e82ae75cfd4156a5bf63c

六、部署网络插件和dashboard

1、部署flannel网络

kubernetes支持多种网络方案,这里简单介绍常用的flannel和calico安装方法,选择其中一种方案进行部署即可。

以下操作在master01节点执行即可。

安装flannel网络插件:

由于kube-flannel.yml文件指定的镜像从coreos镜像仓库拉取,可能拉取失败,可以从dockerhub搜索相关镜像进行替换,另外可以看到yml文件中定义的网段地址段为10.244.0.0/16。

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: 10.2.57.16:5000/kubernetes/flannel:v0.12.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: 10.2.57.16:5000/kubernetes/flannel:v0.12.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

执行创建

kubectl apply -f kube-flannel.yml

再次查看node和 Pod状态,全部为Running

[root@k8s-master1 package]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready master 166m v1.16.2

k8s-master2 Ready master 166m v1.16.2

k8s-master3 Ready master 165m v1.16.2

安装calico网络插件(可选):

安装参考:https://docs.projectcalico.org/v3.6/getting-started/kubernetes/

kubectl apply -f \ https://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

注意该yaml文件中默认CIDR为10.244.0.0/16,需要与初始化时kube-config.yaml中的配置一致,如果不同请下载该yaml修改后运行。

2、部署dashboard

创建yaml文件

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30001

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kube-system

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kube-system

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

- apiGroups:

- '*'

resources:

- '*'

verbs:

- '*'

- nonResourceURLs:

- '*'

verbs:

- '*'

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.0.4

#imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kube-system

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kube-system

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kube-system

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.4

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

执行创建

[root@k8s-master1 ~]# kubectl apply -f kubernetes-dashboard.yaml

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

dashboard登录方式

1、使用token登录,获取token方式如下:

kubectl describe secret -n kube-system $(kubectl get secret -n kube-system | grep kubernetes-dashboard-token | awk '{print $1}') | grep -w token | awk 'END {print $2}'

使用上面生成的token即可登录

2、生成config配置文件

TOKEN=$(kubectl describe secret -n kube-system $(kubectl get secret -n kube-system | grep kubernetes-dashboard-token | awk '{print $1}') | grep -w token | awk 'NR==3 {print $2}')

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true --server=10.2.57.17:9443 --kubeconfig=product.kubeconfig

kubectl config set-credentials dashboard_user --token=${TOKEN} --kubeconfig=product.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=dashboard_user --kubeconfig=product.kubeconfig

kubectl config use-context default --kubeconfig=product.kubeconfig

使用生成的 product.kubeconfig 文件即可登录

七、验证集群状态

查看nodes运行情况

[root@k8s-master1 package]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master1 Ready master 169m v1.16.2 192.168.130.130 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.13

k8s-master2 Ready master 169m v1.16.2 192.168.130.131 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.13

k8s-master3 Ready master 168m v1.16.2 192.168.130.132 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://19.3.13

验证IPVS

查看kube-proxy日志,第一行输出Using ipvs Proxier.

[root@k8s-master1 package]# kubectl -n kube-system logs -f pod/kube-proxy-55l7n

W1225 10:54:52.193126 1 feature_gate.go:208] Setting GA feature gate SupportIPVSProxyMode=true. It will be removed in a future release.

E1225 10:54:59.898661 1 node.go:124] Failed to retrieve node info: rpc error: code = Unavailable desc = etcdserver: leader changed

I1225 10:55:06.897612 1 node.go:135] Successfully retrieved node IP: 192.168.130.131

I1225 10:55:06.897651 1 server_others.go:176] Using ipvs Proxier.

W1225 10:55:06.897892 1 proxier.go:420] IPVS scheduler not specified, use rr by default

I1225 10:55:06.898219 1 server.go:529] Version: v1.16.2

I1225 10:55:06.898758 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I1225 10:55:06.899497 1 config.go:313] Starting service config controller

I1225 10:55:06.899561 1 shared_informer.go:197] Waiting for caches to sync for service config

I1225 10:55:06.900251 1 config.go:131] Starting endpoints config controller

I1225 10:55:06.900268 1 shared_informer.go:197] Waiting for caches to sync for endpoints config

I1225 10:55:06.999709 1 shared_informer.go:204] Caches are synced for service config

I1225 10:55:07.000848 1 shared_informer.go:204] Caches are synced for endpoints config

查看代理规则

[root@k8s-master1 package]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.130.130:30001 rr

-> 10.244.1.3:8443 Masq 1 1 0

TCP 192.168.130.133:30001 rr

-> 10.244.1.3:8443 Masq 1 0 0

TCP 10.96.0.1:443 rr

-> 192.168.130.130:6443 Masq 1 0 0

-> 192.168.130.131:6443 Masq 1 1 0

-> 192.168.130.132:6443 Masq 1 2 0

TCP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.0.3:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.2:9153 Masq 1 0 0

-> 10.244.0.3:9153 Masq 1 0 0

TCP 10.106.45.88:443 rr

-> 10.244.1.3:8443 Masq 1 0 0

TCP 10.109.201.42:8000 rr

-> 10.244.2.3:8000 Masq 1 0 0

TCP 10.244.0.0:30001 rr

-> 10.244.1.3:8443 Masq 1 0 0

TCP 10.244.0.1:30001 rr

-> 10.244.1.3:8443 Masq 1 0 0

TCP 127.0.0.1:30001 rr

-> 10.244.1.3:8443 Masq 1 0 0

TCP 172.17.0.1:30001 rr

-> 10.244.1.3:8443 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.0.3:53 Masq 1 0 0

执行以下命令查看etcd集群状态

kubectl -n kube-system exec etcd-k8s-master1 -- etcdctl --endpoints=https://192.168.130.130:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key cluster-health

示例

[root@k8s-master1 package]# kubectl -n kube-system exec etcd-k8s-master1 -- etcdctl --endpoints=https://192.168.130.130:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key cluster-health

member 10d7e869fb290ae4 is healthy: got healthy result from https://192.168.130.132:2379

member 5f903da6ce6e7909 is healthy: got healthy result from https://192.168.130.131:2379

member fb412e80741ff7af is healthy: got healthy result from https://192.168.130.130:2379

cluster is healthy

[root@k8s-master1 package]# kubectl -n kube-system exec etcd-k8s-master1 -- etcdctl --endpoints=https://192.168.130.130:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key member list 10d7e869fb290ae4: name=k8s-master3 peerURLs=https://192.168.130.132:2380 clientURLs=https://192.168.130.132:2379 isLeader=false 5f903da6ce6e7909: name=k8s-master2 peerURLs=https://192.168.130.131:2380 clientURLs=https://192.168.130.131:2379 isLeader=false fb412e80741ff7af: name=k8s-master1 peerURLs=https://192.168.130.130:2380 clientURLs=https://192.168.130.130:2379 isLeader=true

查看 kube-controller-manager 状态

[root@k8s-master1 package]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml apiVersion: v1 kind: Endpoints metadata: annotations: control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"k8s-master1_456327ee-e1d5-4857-8131-e969b411e287","leaseDurationSeconds":15,"acquireTime":"2020-12-25T10:55:28Z","renewTime":"2020-12-25T14:12:51Z","leaderTransitions":1}' creationTimestamp: "2020-12-25T10:54:36Z" name: kube-controller-manager namespace: kube-system resourceVersion: "20672" selfLink: /api/v1/namespaces/kube-system/endpoints/kube-controller-manager uid: fe5d782a-f5ad-4e3c-b146-ee25892b0ad6

查看kube-scheduler状态

[root@k8s-master1 package]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml apiVersion: v1 kind: Endpoints metadata: annotations: control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"k8s-master1_cfbf1bda-431a-428c-960a-da07cdd87130","leaseDurationSeconds":15,"acquireTime":"2020-12-25T10:55:28Z","renewTime":"2020-12-25T14:14:37Z","leaderTransitions":1}' creationTimestamp: "2020-12-25T10:54:34Z" name: kube-scheduler namespace: kube-system resourceVersion: "20849" selfLink: /api/v1/namespaces/kube-system/endpoints/kube-scheduler uid: fe659cb7-0c3e-43fc-9a73-6cc2bd9857c2

原文链接:https://blog.csdn.net/networken/java/article/details/89599004