krping Study Notes (1)

krping

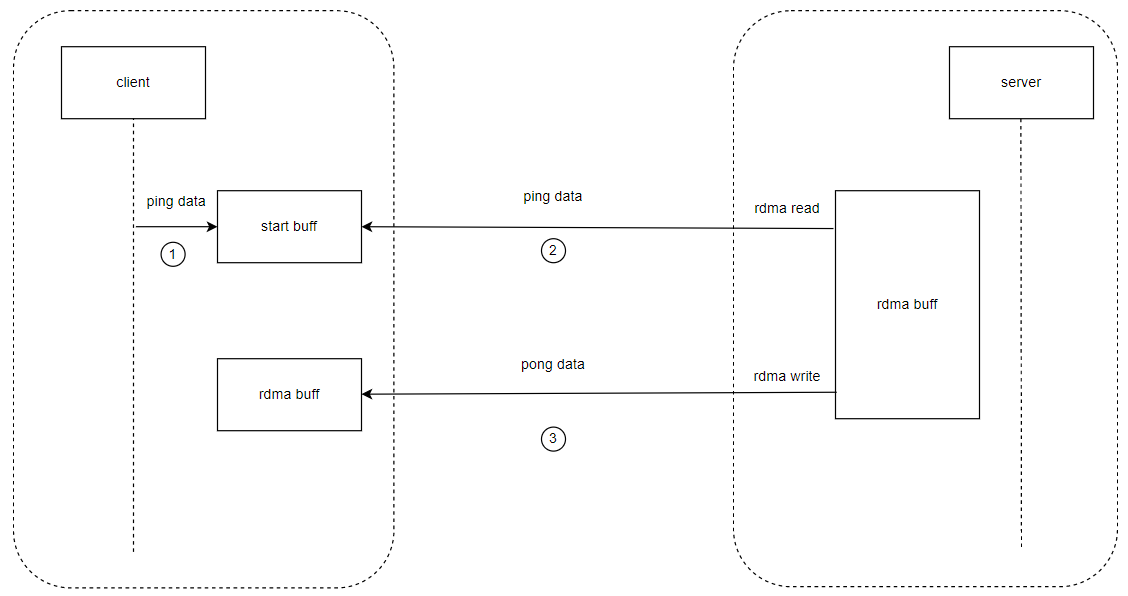

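Krping is a kernel-space RDMA ping-pong program. It uses RDMA reads and writes to pass data back and forth between a server and a client. As shown in the figure below, before any data is transferred, the client writes the ping data into a pre-prepared start buf. In step 2, the server issues an RDMA read to fetch the ping data from the start buf into its own rdma buf. In step 3, the server RDMA-writes the ping data from that buf into the client's rdma buf. This completes one full RDMA ping-pong round trip.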
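The three steps above can be modeled in user space as a minimal sketch, with memcpy standing in for the RDMA read and the RDMA write. The struct and function names (sim_client, sim_server, sim_rdma_read, sim_rdma_write, sim_ping_pong_round) and the fixed 64-byte buffers are hypothetical, and the sketch deliberately ignores memory registration, keys and completions.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical buffer size for this sketch; krping takes the size as a parameter. */
#define SIM_BUF_SIZE 64

/* Hypothetical stand-ins for the buffers named in the description. */
struct sim_client {
	char start_buf[SIM_BUF_SIZE]; /* client writes the ping data here */
	char rdma_buf[SIM_BUF_SIZE];  /* server RDMA-writes the echo here */
};

struct sim_server {
	char rdma_buf[SIM_BUF_SIZE];  /* server RDMA-reads the ping data into here */
};

/* memcpy models a one-sided transfer: only the initiator "runs" code. */
static void sim_rdma_read(void *local, const void *remote, size_t len)
{
	memcpy(local, remote, len);
}

static void sim_rdma_write(void *remote, const void *local, size_t len)
{
	memcpy(remote, local, len);
}

/* One round: (1) client fills start_buf, (2) server reads it, (3) server writes it back. */
static void sim_ping_pong_round(struct sim_client *c, struct sim_server *s,
				const char *ping)
{
	size_t len = strlen(ping) + 1; /* include the terminating NUL */

	assert(len <= SIM_BUF_SIZE);
	memcpy(c->start_buf, ping, len);               /* step 1 */
	sim_rdma_read(s->rdma_buf, c->start_buf, len); /* step 2 */
	sim_rdma_write(c->rdma_buf, s->rdma_buf, len); /* step 3 */
}
```

In the real program both copies are carried out by the server's HCA; the client's CPU only prepares the buffers and waits for messages.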

How It Works

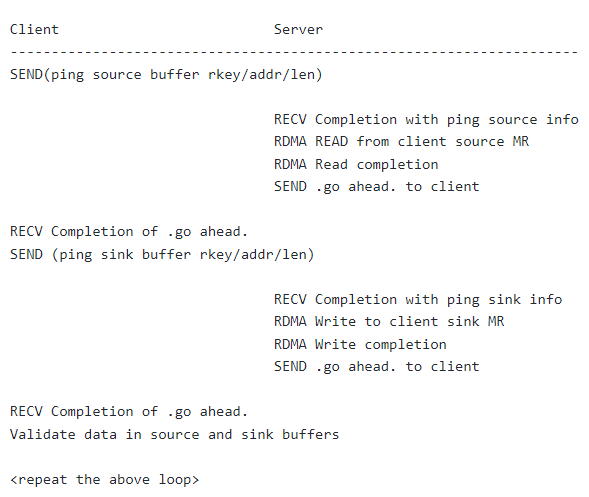

krping works as follows:

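1. Using send/recv, the client sends the server three things: the address of the start buf (into which the client has written the original ping data), the rkey the server needs for its RDMA read, and the size of the payload passed to the peer each time.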

```c
static void krping_format_send(struct krping_cb *cb, u64 buf)
{
	struct krping_rdma_info *info = &cb->send_buf;
	u32 rkey;

	if (!cb->server || cb->wlat || cb->rlat || cb->bw) {
		/* rkey the server will use for its RDMA read */
		rkey = krping_rdma_rkey(cb, buf, !cb->server_invalidate);
		/* address of the start buf (the client wrote the ping data here) */
		info->buf = htonll(buf);
		info->rkey = htonl(rkey);
		/* size of the payload passed to the peer */
		info->size = htonl(cb->size);
		DEBUG_LOG("RDMA addr %llx rkey %x len %d\n",
			  (unsigned long long)buf, rkey, cb->size);
	}
}

/* in the caller: post the send that carries the advertisement */
ret = ib_post_send(cb->qp, &cb->sq_wr, &bad_wr);
```
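The advertisement is a small fixed-layout message. The sketch below assumes its layout mirrors struct krping_rdma_info (a 64-bit address followed by a 32-bit rkey and a 32-bit size, all in network byte order). It shows the encode/decode pair performed by krping_format_send() and server_recv(). The names adv_wire, swap_be64, format_adv and parse_adv are hypothetical. swap_be64 stands in for the htonll/ntohll helpers, because POSIX only defines 16- and 32-bit byte-order conversions.

```c
#include <arpa/inet.h> /* htonl, ntohl */
#include <assert.h>
#include <stdint.h>

/* Assumed to mirror struct krping_rdma_info; all fields big-endian on the wire. */
struct adv_wire {
	uint64_t buf;
	uint32_t rkey;
	uint32_t size;
};

static_assert(sizeof(struct adv_wire) == 16, "unexpected padding in adv_wire");

/* Hypothetical 64-bit host<->network swap; it is its own inverse. */
static uint64_t swap_be64(uint64_t v)
{
	if (htonl(1) == 1) /* big-endian host: already in network order */
		return v;
	return ((uint64_t)htonl((uint32_t)v) << 32) | htonl((uint32_t)(v >> 32));
}

/* Client side: what krping_format_send() fills in before ib_post_send(). */
static void format_adv(struct adv_wire *w, uint64_t buf, uint32_t rkey, uint32_t size)
{
	w->buf = swap_be64(buf);
	w->rkey = htonl(rkey);
	w->size = htonl(size);
}

/* Server side: what server_recv() extracts from recv_buf. */
static void parse_adv(const struct adv_wire *w, uint64_t *buf, uint32_t *rkey,
		      uint32_t *size)
{
	*buf = swap_be64(w->buf);
	*rkey = ntohl(w->rkey);
	*size = ntohl(w->size);
}
```

Converting every field to network order lets the two peers disagree on endianness without corrupting the address or key.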

2. In the completion of the recv operation, the server obtains what the client sent: the DMA (bus) address of the start buf, the rkey for accessing it, and its size. First, the server posts a receive buffer:

```c
P("read from recvq\n");
ret = ib_post_recv(cb->qp, &cb->rq_wr, &bad_wr);
if (ret) {
	printk(KERN_ERR PFX "ib_post_recv failed: %d\n", ret);
	goto err2;
}
```

When the receive completes, it is handled in the IB_WC_RECV case of krping_cq_event_handler:

```c
case IB_WC_RECV:
	DEBUG_LOG("recv completion\n");
	cb->stats.recv_bytes += sizeof(cb->recv_buf);
	cb->stats.recv_msgs++;
	if (cb->wlat || cb->rlat || cb->bw) {
		P("call server_recv\n");
		ret = server_recv(cb, &wc);
	} else {
		P("call client recv\n");
		ret = cb->server ? server_recv(cb, &wc) :
				   client_recv(cb, &wc);
	}
	if (ret) {
		printk(KERN_ERR PFX "recv wc error: %d\n", ret);
		goto error;
	}
	ret = ib_post_recv(cb->qp, &cb->rq_wr, &bad_wr);
	if (ret) {
		printk(KERN_ERR PFX "post recv error: %d\n", ret);
		goto error;
	}
	wake_up_interruptible(&cb->sem);
	break;
```
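The role-dependent dispatch in this branch can be isolated as a small pure function. This is a sketch only; the enum recv_handler and pick_recv_handler names are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for the two receive handlers. */
enum recv_handler { USE_SERVER_RECV, USE_CLIENT_RECV };

/* Mirrors the branch in the IB_WC_RECV case: in the wlat/rlat/bw tests
 * server_recv() is chosen regardless of role; in the ping-pong test
 * the choice follows cb->server. */
static enum recv_handler pick_recv_handler(bool server, bool wlat, bool rlat, bool bw)
{
	if (wlat || rlat || bw)
		return USE_SERVER_RECV;
	return server ? USE_SERVER_RECV : USE_CLIENT_RECV;
}
```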

```c
static int server_recv(struct krping_cb *cb, struct ib_wc *wc)
{
	if (wc->byte_len != sizeof(cb->recv_buf)) {
		printk(KERN_ERR PFX "Received bogus data, size %d\n",
		       wc->byte_len);
		return -1;
	}

	cb->remote_rkey = ntohl(cb->recv_buf.rkey);
	cb->remote_addr = ntohll(cb->recv_buf.buf);
	cb->remote_len = ntohl(cb->recv_buf.size);
	DEBUG_LOG("Received rkey %x addr %llx len %d from peer\n",
		  cb->remote_rkey, (unsigned long long)cb->remote_addr,
		  cb->remote_len);

	if (cb->state <= CONNECTED || cb->state == RDMA_WRITE_COMPLETE)
		cb->state = RDMA_READ_ADV;
	else
		cb->state = RDMA_WRITE_ADV;

	return 0;
}
```
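Apart from decoding the fields, server_recv() is a two-way state transition. The sketch below reproduces that transition outside the kernel. It assumes the state enum follows the declaration order of krping's enum test_state; the order matters because the code compares states with <= and >=. ADV_MSG_SIZE is a hypothetical stand-in for sizeof(cb->recv_buf). The comments on which buffer each advertisement names follow the overview at the top of these notes.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to follow the declaration order of krping's enum test_state. */
enum test_state {
	IDLE = 1, CONNECT_REQUEST, ADDR_RESOLVED, ROUTE_RESOLVED, CONNECTED,
	RDMA_READ_ADV, RDMA_READ_COMPLETE, RDMA_WRITE_ADV, RDMA_WRITE_COMPLETE,
	DISCONNECTED, ERROR
};

#define ADV_MSG_SIZE 16u /* hypothetical sizeof(recv_buf) */

/* Returns the next state, or -1 for a message of the wrong size
 * (server_recv() returns -1 then and leaves cb->state untouched). */
static int server_next_state(enum test_state cur, uint32_t byte_len)
{
	if (byte_len != ADV_MSG_SIZE)
		return -1;
	/* start of a round: the advertised buffer is the client's start buf (to read) */
	if (cur <= CONNECTED || cur == RDMA_WRITE_COMPLETE)
		return RDMA_READ_ADV;
	/* otherwise: the advertised buffer is the client's rdma buf (to write) */
	return RDMA_WRITE_ADV;
}
```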

3. The server builds an RDMA read request and starts reading the ping data from the client's start buf.

```c
P("setup rdma_sq_wr and reset rkey\n");
cb->rdma_sq_wr.rkey = cb->remote_rkey;              /* rkey advertised by the client */
cb->rdma_sq_wr.remote_addr = cb->remote_addr;       /* the client's start buf */
cb->rdma_sq_wr.wr.sg_list->length = cb->remote_len; /* read the advertised length */
/* lkey for rdma_sgl, i.e. the server's local rdma buf */
cb->rdma_sgl.lkey = krping_rdma_rkey(cb, cb->rdma_dma_addr, !cb->read_inv);
cb->rdma_sq_wr.wr.next = NULL;

/* issue a read-with-invalidate if requested */
if (cb->read_inv) {
	P("send read with inv cmd\n");
	cb->rdma_sq_wr.wr.opcode = IB_WR_RDMA_READ_WITH_INV;
} else {
	P("send read cmd\n");
	cb->rdma_sq_wr.wr.opcode = IB_WR_RDMA_READ;
	/* chain a WR with opcode LOCAL_INV after the read */
	cb->rdma_sq_wr.wr.next = &inv;
	memset(&inv, 0, sizeof inv);
	inv.opcode = IB_WR_LOCAL_INV;
	inv.ex.invalidate_rkey = cb->reg_mr->rkey;
	inv.send_flags = IB_SEND_FENCE;
}

/* post the WR list to the QP */
ret = ib_post_send(cb->qp, &cb->rdma_sq_wr.wr, &bad_wr);
if (ret) {
	printk(KERN_ERR PFX "post send error %d\n", ret);
	break;
}
cb->rdma_sq_wr.wr.next = NULL;

wait_event_interruptible(cb->sem, cb->state >= RDMA_READ_COMPLETE);
```
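The two branches differ only in whether one WR or a two-WR chain is posted. The sketch below models that with a hypothetical, cut-down sim_wr struct and SIM_* opcodes, not the kernel's struct ib_send_wr. The fence flag matters because, under InfiniBand semantics, a fenced WR is not started until earlier RDMA read and atomic WRs on the same QP have completed. That ordering keeps the LOCAL_INV from invalidating the local MR while the read is still filling it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-ins for the opcodes and flag used above. */
enum sim_opcode { SIM_RDMA_READ, SIM_RDMA_READ_WITH_INV, SIM_LOCAL_INV };
#define SIM_SEND_FENCE 0x1u

struct sim_wr {
	enum sim_opcode opcode;
	uint64_t remote_addr;
	uint32_t rkey;            /* remote key for the read */
	uint32_t length;
	uint32_t invalidate_rkey; /* only used by SIM_LOCAL_INV */
	unsigned send_flags;
	struct sim_wr *next;
};

/* Builds the read request; without read_inv, a fenced LOCAL_INV is chained
 * after it so the local MR is invalidated only after the read completes. */
static void build_read_chain(struct sim_wr *rd, struct sim_wr *inv, int read_inv,
			     uint64_t remote_addr, uint32_t remote_rkey,
			     uint32_t remote_len, uint32_t local_mr_rkey)
{
	memset(rd, 0, sizeof *rd);
	rd->remote_addr = remote_addr;
	rd->rkey = remote_rkey;
	rd->length = remote_len;
	if (read_inv) {
		rd->opcode = SIM_RDMA_READ_WITH_INV; /* one WR does both jobs */
		return;
	}
	rd->opcode = SIM_RDMA_READ;
	memset(inv, 0, sizeof *inv);
	inv->opcode = SIM_LOCAL_INV;
	inv->invalidate_rkey = local_mr_rkey;
	inv->send_flags = SIM_SEND_FENCE;
	rd->next = inv;
}

/* Counts the WRs a single post would submit. */
static size_t chain_len(const struct sim_wr *wr)
{
	size_t n = 0;

	for (; wr != NULL; wr = wr->next)
		n++;
	return n;
}
```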

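4. When the server receives the WC for the RDMA read, the CQ handler sets the state to RDMA_READ_COMPLETE and wakes up the process sleeping on the wait queue.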

```c
case IB_WC_RDMA_READ:
	DEBUG_LOG("rdma read completion\n");
	cb->stats.read_bytes += cb->rdma_sq_wr.wr.sg_list->length;
	cb->stats.read_msgs++;
	cb->state = RDMA_READ_COMPLETE;
	wake_up_interruptible(&cb->sem);
	break;
```
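The wait_event_interruptible()/wake_up_interruptible() pair used in steps 3 and 4 is the classic condition-wait pattern. Below is a user-space analogue with POSIX threads, offered as a sketch. The mutex and condition variable stand in for the kernel wait queue cb->sem, and a second thread plays the CQ handler. The state values are assumed; only their order matters. Unlike wait_event_interruptible(), this version cannot be interrupted by a signal.

```c
#include <assert.h>
#include <pthread.h>

enum { CONNECTED = 5, RDMA_READ_COMPLETE = 7 }; /* assumed values; order matters */

/* User-space analogue of cb->state plus the cb->sem wait queue. */
struct sim_cb {
	pthread_mutex_t lock;
	pthread_cond_t sem;
	int state;
};

/* Plays the IB_WC_RDMA_READ branch of the CQ handler. */
static void *completion_handler(void *arg)
{
	struct sim_cb *cb = arg;

	pthread_mutex_lock(&cb->lock);
	cb->state = RDMA_READ_COMPLETE;
	pthread_cond_broadcast(&cb->sem); /* ~ wake_up_interruptible(&cb->sem) */
	pthread_mutex_unlock(&cb->lock);
	return NULL;
}

/* Plays wait_event_interruptible(cb->sem, cb->state >= RDMA_READ_COMPLETE). */
static int wait_for_read_complete(void)
{
	struct sim_cb cb;
	pthread_t t;
	int state;

	pthread_mutex_init(&cb.lock, NULL);
	pthread_cond_init(&cb.sem, NULL);
	cb.state = CONNECTED;
	if (pthread_create(&t, NULL, completion_handler, &cb) != 0) {
		pthread_cond_destroy(&cb.sem);
		pthread_mutex_destroy(&cb.lock);
		return -1;
	}
	pthread_mutex_lock(&cb.lock);
	while (cb.state < RDMA_READ_COMPLETE) /* re-check after every wake-up */
		pthread_cond_wait(&cb.sem, &cb.lock);
	state = cb.state;
	pthread_mutex_unlock(&cb.lock);
	pthread_join(t, NULL);
	pthread_cond_destroy(&cb.sem);
	pthread_mutex_destroy(&cb.lock);
	return state;
}
```

Checking the condition in a loop, as wait_event_interruptible() does internally, makes the waiter correct whether the handler runs before or after it starts waiting.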

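5. After the server has RDMA-read the ping data from the client, it again uses send/recv to send a go-ahead message, telling the blocked client to continue. Note that the snippet below first waits for RDMA_WRITE_COMPLETE. It is therefore the go-ahead posted at the end of a round, after the server's RDMA write to the client has completed; the send path is the same.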

```c
/* wait for the WC: the state must reach RDMA_WRITE_COMPLETE */
ret = wait_event_interruptible(cb->sem, cb->state >=
			       RDMA_WRITE_COMPLETE);
if (cb->state != RDMA_WRITE_COMPLETE) {
	printk(KERN_ERR PFX
	       "wait for RDMA_WRITE_COMPLETE state %d\n",
	       cb->state);
	break;
}
/* reset the state to CONNECTED */
cb->state = CONNECTED;

if (cb->server && cb->server_invalidate) {
	cb->sq_wr.ex.invalidate_rkey = cb->remote_rkey;
	cb->sq_wr.opcode = IB_WR_SEND_WITH_INV;
	DEBUG_LOG("send-w-inv rkey 0x%x\n", cb->remote_rkey);
}

/* post the go-ahead send to the QP */
ret = ib_post_send(cb->qp, &cb->sq_wr, &bad_wr);
if (ret) {
	printk(KERN_ERR PFX "post send error %d\n", ret);
	break;
}
DEBUG_LOG("server posted go ahead\n");
```
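The go-ahead differs from a plain send only when server_invalidate is set. In that case IB_WR_SEND_WITH_INV asks the receiving side to invalidate the given rkey (here, the one the client advertised) when the message arrives. The sketch below models just that opcode choice. sim_send_wr and prepare_go_ahead are hypothetical. The sketch assumes, as the snippet implies, that sq_wr already carries a plain send opcode when server_invalidate is not set.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified view of the go-ahead WR (cb->sq_wr). */
enum sim_send_opcode { SIM_SEND, SIM_SEND_WITH_INV };

struct sim_send_wr {
	enum sim_send_opcode opcode;
	uint32_t invalidate_rkey;
};

/* With server_invalidate, the go-ahead also invalidates the client's
 * advertised rkey on arrival; otherwise the WR is left unchanged
 * (assumed to hold a plain SEND set up earlier). */
static void prepare_go_ahead(struct sim_send_wr *wr, bool server,
			     bool server_invalidate, uint32_t remote_rkey)
{
	if (server && server_invalidate) {
		wr->opcode = SIM_SEND_WITH_INV;
		wr->invalidate_rkey = remote_rkey;
	}
}
```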

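6. The client receives the WC for the go-ahead message. It goes through the same IB_WC_RECV case of krping_cq_event_handler, which in the ping-pong test dispatches to client_recv():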

```c
case IB_WC_RECV:
	DEBUG_LOG("recv completion\n");
	cb->stats.recv_bytes += sizeof(cb->recv_buf);
	cb->stats.recv_msgs++;
	if (cb->wlat || cb->rlat || cb->bw) {
		P("call server_recv\n");
		ret = server_recv(cb, &wc);
	} else {
		P("call client recv\n");
		ret = cb->server ? server_recv(cb, &wc) :
				   client_recv(cb, &wc);
	}
	if (ret) {
		printk(KERN_ERR PFX "recv wc error: %d\n", ret);
		goto error;
	}
	/* re-post a receive buffer for the next message */
	ret = ib_post_recv(cb->qp, &cb->rq_wr, &bad_wr);
	if (ret) {
		printk(KERN_ERR PFX "post recv error: %d\n", ret);
		goto error;
	}
	wake_up_interruptible(&cb->sem);
	break;
```

```c
static int client_recv(struct krping_cb *cb, struct ib_wc *wc)
{
	if (wc->byte_len != sizeof(cb->recv_buf)) {
		printk(KERN_ERR PFX "Received bogus data, size %d\n",
		       wc->byte_len);
		return -1;
	}

	if (cb->state == RDMA_READ_ADV)
		cb->state = RDMA_WRITE_ADV;
	else
		cb->state = RDMA_WRITE_COMPLETE;

	return 0;
}
```
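client_recv() moves the client from RDMA_READ_ADV to RDMA_WRITE_ADV on the first go-ahead of a round and to RDMA_WRITE_COMPLETE on the next one. The sketch below traces that sequence. It assumes that the client sets its state to RDMA_READ_ADV before advertising its start buf, as krping's client loop does. It also assumes the enum order mirrors enum test_state. GO_AHEAD_SIZE is a hypothetical stand-in for sizeof(cb->recv_buf).

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to follow the declaration order of krping's enum test_state. */
enum test_state {
	IDLE = 1, CONNECT_REQUEST, ADDR_RESOLVED, ROUTE_RESOLVED, CONNECTED,
	RDMA_READ_ADV, RDMA_READ_COMPLETE, RDMA_WRITE_ADV, RDMA_WRITE_COMPLETE,
	DISCONNECTED, ERROR
};

#define GO_AHEAD_SIZE 16u /* hypothetical sizeof(recv_buf) */

/* Mirrors client_recv(): returns the next state, or -1 on a bogus size. */
static int client_next_state(enum test_state cur, uint32_t byte_len)
{
	if (byte_len != GO_AHEAD_SIZE)
		return -1;
	/* first go-ahead: the read is done; any later one: the write is done */
	return cur == RDMA_READ_ADV ? RDMA_WRITE_ADV : RDMA_WRITE_COMPLETE;
}
```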