Python crawler practice: fetching epidemic data and storing it in a MySQL database

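Last time I built visualization charts of the nationwide epidemic statistics. This time I wanted to see whether I could also update the data in the database. The first thing that came to mind was a Python crawler: it is easy to work with, and Python is something I will have to learn sooner or later anyway.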

After reading up on it online, I ended up with the following code:

```python
import json

import pymysql
import requests


def create():
    # Connect to the database; PyMySQL 1.0+ only accepts keyword arguments
    db = pymysql.connect(host="localhost", user="root", password="0000",
                         database="grabdata_test", charset="utf8")
    cursor = db.cursor()
    # Drop the old table so every run starts from an empty one
    cursor.execute("DROP TABLE IF EXISTS info")
    # Yisi_num holds suspected cases; all counts are stored as varchar
    sql = """CREATE TABLE info (
        Id INT PRIMARY KEY AUTO_INCREMENT,
        Date varchar(255),
        Province varchar(255),
        City varchar(255),
        Confirmed_num varchar(255),
        Yisi_num varchar(255),
        Cured_num varchar(255),
        Dead_num varchar(255),
        Code varchar(255))"""
    cursor.execute(sql)
    db.close()


def insert(value):
    # Opens a new connection for each row: simple, but slow for many rows
    db = pymysql.connect(host="localhost", user="root", password="0000",
                         database="grabdata_test", charset="utf8")
    cursor = db.cursor()
    sql = ("INSERT INTO info(Date,Province,City,Confirmed_num,Yisi_num,Cured_num,Dead_num,Code) "
           "VALUES (%s,%s,%s,%s,%s,%s,%s,%s)")
    try:
        cursor.execute(sql, value)
        db.commit()
        print("Row inserted")
    except pymysql.MySQLError as e:
        db.rollback()
        print("Insert failed:", e)
    finally:
        db.close()


create()  # create the table

url = 'https://raw.githubusercontent.com/BlankerL/DXY-2019-nCoV-Data/master/json/DXYArea.json'
response = requests.get(url, timeout=30)
response.raise_for_status()  # stop early on an HTTP error
versionInfo = response.text  # raw JSON text of the response
# print(versionInfo)  # uncomment to print the scraped data

# json.load() reads from a file object, json.loads() parses a string in memory
jsonData = json.loads(versionInfo)

date = '2020-3-10'  # snapshot date, hard-coded

# Walk every region and insert one row per city
for entry in jsonData['results']:
    if entry['countryName'] != '中国':  # keep only entries for China
        continue
    provinceShortName = entry['provinceName']
    if provinceShortName == "待明确地区":  # skip the "location to be determined" entry
        continue
    for city in entry['cities']:
        insert((date, provinceShortName, city['cityName'],
                city['confirmedCount'],   # confirmed
                city['suspectedCount'],   # suspected
                city['curedCount'],       # cured
                city['deadCount'],        # dead
                city['locationId']))      # region code
```
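The script above opens a fresh connection for every city. One possible restructuring, sketched here under a few assumptions, separates parsing from writing: `extract_rows` turns the DXYArea.json payload into tuples using the same field names the script already reads, and `insert_many` writes them with a single `executemany` call inside one transaction. Both function names are hypothetical, and treating a missing or null `cities` field as empty is a defensive assumption, not something confirmed from the data. `insert_many` accepts any DB-API connection; PyMySQL uses `%s` placeholders, while the demo at the bottom uses Python's built-in `sqlite3` (placeholder `?`) purely as a stand-in so it runs without a MySQL server.

```python
import sqlite3

# Column order of the info table created by create()
COLUMNS = ("Date", "Province", "City", "Confirmed_num", "Yisi_num",
           "Cured_num", "Dead_num", "Code")


def extract_rows(data, date):
    """Flatten a DXYArea.json payload into one tuple per city."""
    rows = []
    for entry in data["results"]:
        if entry.get("countryName") != "中国":  # same China-only filter as the script
            continue
        province = entry["provinceName"]
        if province == "待明确地区":  # skip the "location to be determined" entry
            continue
        # Assumption: a missing or null "cities" field means no city rows
        for city in entry.get("cities") or []:
            rows.append((date, province, city["cityName"],
                         city["confirmedCount"], city["suspectedCount"],
                         city["curedCount"], city["deadCount"],
                         city["locationId"]))
    return rows


def insert_many(conn, rows, placeholder="%s"):
    """Insert all rows in one transaction; roll back everything on failure.

    conn is any DB-API 2.0 connection: pymysql uses "%s", sqlite3 uses "?".
    Returns the number of rows written.
    """
    marks = ",".join([placeholder] * len(COLUMNS))
    sql = f"INSERT INTO info({','.join(COLUMNS)}) VALUES ({marks})"
    cursor = conn.cursor()
    try:
        cursor.executemany(sql, rows)
        conn.commit()
    except Exception:
        conn.rollback()
        raise
    finally:
        cursor.close()
    return len(rows)


if __name__ == "__main__":
    # Made-up sample in the DXYArea.json layout (not real figures)
    sample = {"results": [
        {"countryName": "中国", "provinceName": "湖北省",
         "cities": [{"cityName": "武汉", "confirmedCount": 10,
                     "suspectedCount": 0, "curedCount": 2,
                     "deadCount": 1, "locationId": 420100}]},
        {"countryName": "中国", "provinceName": "待明确地区", "cities": []},
    ]}
    rows = extract_rows(sample, "2020-3-10")
    conn = sqlite3.connect(":memory:")  # stand-in for MySQL
    conn.execute("CREATE TABLE info (Id INTEGER PRIMARY KEY AUTOINCREMENT, "
                 + ", ".join(f"{c} TEXT" for c in COLUMNS) + ")")
    print(insert_many(conn, rows, placeholder="?"))  # 1
    conn.close()
```

With the real data, something like `conn = pymysql.connect(host="localhost", user="root", password="0000", database="grabdata_test", charset="utf8")`, then `insert_many(conn, extract_rows(jsonData, date))` and `conn.close()` after `create()` should do the same job as the per-row loop, keeping the default `%s` placeholder.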

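This time the data comes from https://raw.githubusercontent.com/BlankerL/DXY-2019-nCoV-Data/master/json/DXYArea.json, the DXYArea.json file in the BlankerL/DXY-2019-nCoV-Data repository on GitHub. Output of the run in PyCharm: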

Now let's check what ended up in the database:

The data has been imported into the database successfully!

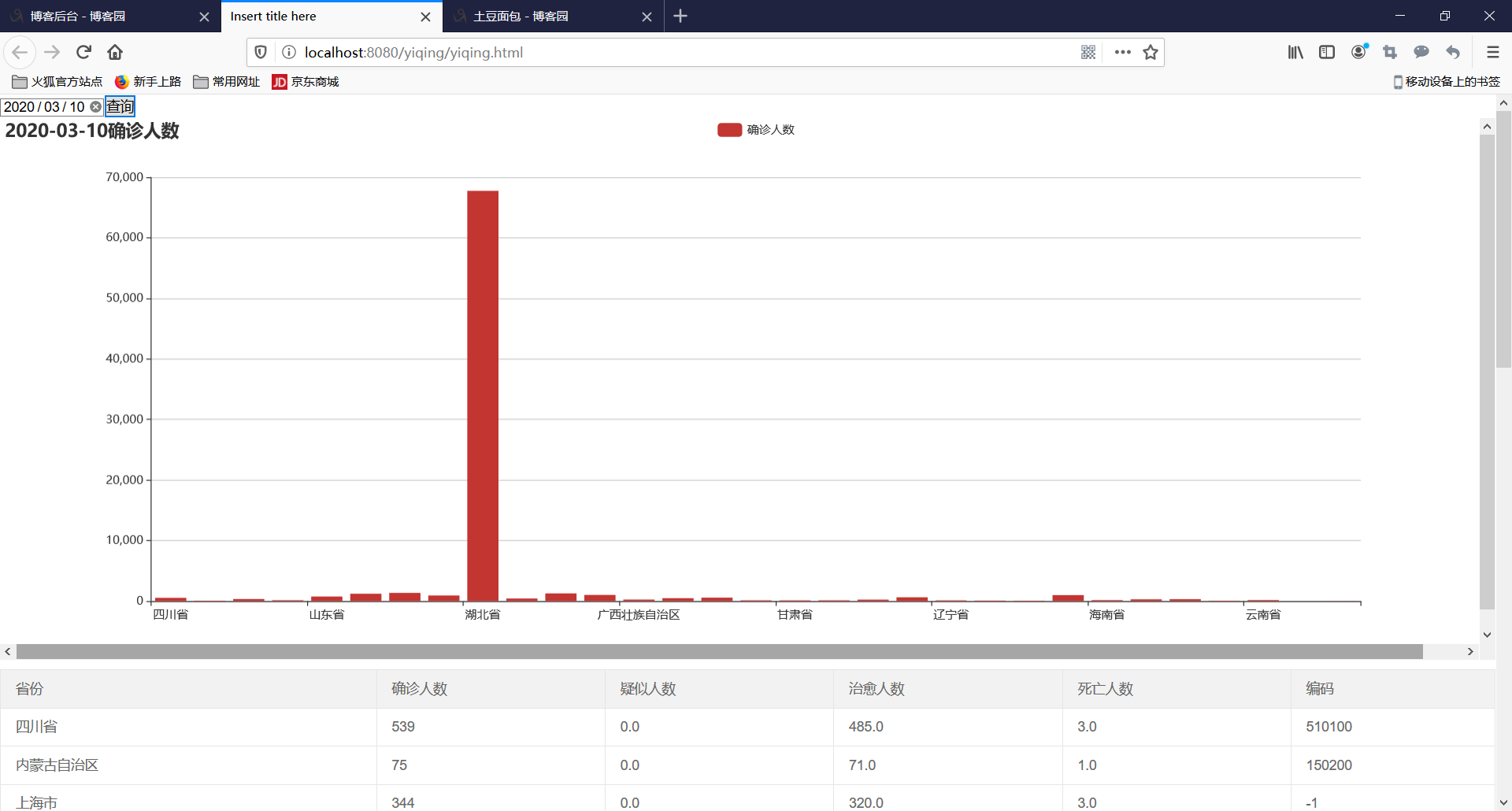
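Besides looking at the table in a GUI client, a short query can confirm the import. Since `create()` drops and recreates `info` on every run, counting the whole table is enough. A minimal sketch follows; `check_import` and `province_totals` are hypothetical helpers, the SQL is plain enough to run on MySQL as written, and the demo uses `sqlite3` only as a server-free stand-in. Because `Confirmed_num` was created as `varchar`, the per-province sum converts each value with `int()` in Python rather than relying on the database's implicit casting; totals like these are roughly what a province-level chart would consume.

```python
import sqlite3
from collections import defaultdict


def check_import(conn):
    """Return (row_count, province_count) for the info table."""
    cursor = conn.cursor()
    try:
        cursor.execute("SELECT COUNT(*), COUNT(DISTINCT Province) FROM info")
        return tuple(cursor.fetchone())
    finally:
        cursor.close()


def province_totals(conn):
    """Sum confirmed cases per province, largest first.

    Confirmed_num is stored as varchar, so each value goes through int().
    """
    cursor = conn.cursor()
    try:
        cursor.execute("SELECT Province, Confirmed_num FROM info")
        totals = defaultdict(int)
        for province, confirmed in cursor.fetchall():
            totals[province] += int(confirmed)
    finally:
        cursor.close()
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the MySQL database
    conn.execute("CREATE TABLE info (Province TEXT, City TEXT, Confirmed_num TEXT)")
    conn.executemany("INSERT INTO info VALUES (?, ?, ?)",
                     [("湖北省", "武汉", "10"), ("湖北省", "孝感", "5"),
                      ("广东省", "广州", "3")])  # made-up sample values
    print(check_import(conn))     # (3, 2)
    print(province_totals(conn))  # [('湖北省', 15), ('广东省', 3)]
    conn.close()
```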

Next I tried visualizing the data, reusing the chart from last time. The result:

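And with that, this Python crawler exercise counts as a success! Tears of joy...

I ran into plenty of problems and bugs while using PyCharm, sob. I'll write up that tale of woe in the next blog post, boohoo~~~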