CCIE DC Multicast Part 2.

Hi Guys! In my last blog post we took a quick look at multicast and a more in-depth look at how PIM works. Since this is a CCIE DC focused blog and the Nexus 7000 uses PIM Sparse Mode, we spent most of our time on Sparse Mode and on the way shared trees and shortest path trees work, including the switchover from the shared tree to the shortest path tree.

Incidentally, before going any further, for a great review of the multicast concepts head to:

http://www.cisco.com/en/US/prod/collateral/iosswrel/ps6537/ps6552/prod_presentation0900aecd8031088a.pdf

If any of the above doesn't make sense to you, or you just want to brush up on your PIM Sparse Mode, I strongly recommend you take a look at my first blog post; the concepts we are about to discuss all depend on understanding PIM Sparse Mode. OK! Let's start!

When we talked about PIM, we looked at what happens when a receiver wants to join a multicast group: the router closest to that receiver (also known as the last hop router) sends a message up the tree to the RP to say "hey, this guy wants to start receiving multicast, so start getting the interfaces ready to forward".

We kind of glossed over how the device itself tells the router it wants to start receiving the multicast, though. This happens via IGMP. In simple terms, an IGMP message tells the router that the host wants to receive the multicast. You can have a router join a multicast group with:

ip igmp join-group 239.1.1.1 (where 239.1.1.1 is the multicast group you want the device to join)

A source can then generate traffic to 239.1.1.1, and the device that joined the group will start receiving the multicast stream.

Everything looking fine and dandy so far? OK, here are a few problems with this approach:

What happens when two sources are transmitting to the same multicast address? The receivers will receive both streams, wasting network bandwidth.

A malicious user could start sending traffic to a multicast address and have it delivered to multiple hosts, potentially knocking them off the network, degrading performance or interfering with the legitimate multicast application.

We need an RP to discover the devices that want to receive multicast traffic, and we also need an RP for the first multicast frame from the source to reach our receiver (after which we switch to a shortest path tree). What if our RP dies?

If I am trying to build multicast applications for the internet (something which, I would like to point out, doesn't really seem to have happened yet unless I am mistaken. Do you know of a multicast application used out on the internet? Let us know in the comments section! 😃), who runs the RP? Which ISP? Why do I trust them? Without an RP, how do I learn about multicast sources and who wants to receive the traffic?

These problems have been solved with IGMPv3 and SSM (Source Specific Multicast). Let's see how.

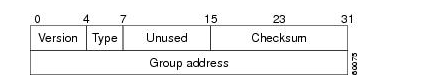

First of all, let's quickly look at an IGMP packet (picture source: http://www.cisco.com/en/US/docs/ios/solutions_docs/ip_multicast/White_papers/mcst_ovr.html#wp1015526):

As you can probably tell from the above picture, with such a small type field, IGMP version 1 (which is what the picture illustrates) is not exactly a complicated protocol. The host sends an IGMP packet requesting to join the group address listed in the packet.

IGMP version 2 added some extra message types that are not relevant to our discussion at the moment. The important thing to see is that the only major field this packet contains is the group address the host wishes to join.

Below is an IGMPv3 Packet:

As you can see, this packet contains not only the group we wish to join but also a source field, which can be used to specify "I want to join this multicast stream from THESE sources".
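To make the extra source field a bit more concrete, here is a rough Python sketch that packs an IGMPv3 membership report carrying one group record with one source, following the layout in RFC 3376. The function names are my own invention for illustration, and this only builds the bytes; it does not send anything on the wire.

```python
import socket
import struct

IGMP_V3_REPORT = 0x22      # IGMPv3 Membership Report message type (RFC 3376)
ALLOW_NEW_SOURCES = 5      # group record type 5, as seen in IOS debugs


def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum, as used by IGMP."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_v3_report(group, sources, record_type=ALLOW_NEW_SOURCES):
    """Hypothetical helper: one report containing one group record."""
    # Group record: type, aux data length, number of sources, group address
    record = struct.pack("!BBH4s", record_type, 0, len(sources),
                         socket.inet_aton(group))
    # ...followed by the list of source addresses (the part IGMPv1/v2 lack)
    record += b"".join(socket.inet_aton(s) for s in sources)
    # Header: type, reserved, checksum (zero for now), reserved, record count
    header = struct.pack("!BBHHH", IGMP_V3_REPORT, 0, 0, 0, 1)
    checksum = internet_checksum(header + record)
    header = struct.pack("!BBHHH", IGMP_V3_REPORT, 0, checksum, 0, 1)
    return header + record


report = build_v3_report("232.1.1.1", ["1.1.1.1"])
print(report.hex())
```

The group address and the source address sit side by side in the record, which is exactly what lets the last hop router learn the (S,G) pair straight from the host.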

Here things get interesting.

Let's assume our host knows what multicast group it wants to join and also knows the source address. This could have been learnt via some sort of external directory of multicast sources, like a webpage or something like that. The important thing to know is that somehow our host has discovered the group it wants to join and which sources it should expect traffic from.

Here is an example of the messages generated when we join a group using IGMPv3 on a router:

Router(config-if)#ip igmp version 3

Router(config-if)#ip igmp join-group 232.1.1.1 source 1.1.1.1

*Feb 3 17:42:52.567: IGMP(0): WAVL Insert group: 232.1.1.1 interface: GigabitEthernet1/0Successful

*Feb 3 17:42:52.571: IGMP(0): Create source 1.1.1.1

*Feb 3 17:42:52.571: IGMP(0): Building v3 Report on GigabitEthernet1/0

*Feb 3 17:42:52.575: IGMP(0): Add Group Record for 232.1.1.1, type 5

*Feb 3 17:42:52.575: IGMP(0): Add Source Record 1.1.1.1

*Feb 3 17:42:52.575: IGMP(0): Add Group Record for 232.1.1.1, type 6

*Feb 3 17:42:52.579: IGMP(0): No sources to add, group record removed from report

*Feb 3 17:42:52.579: IGMP(0): Send unsolicited v3 Report with 1 group records on GigabitEthernet1/0

From the above we can see that an IGMP membership report is being sent out, and that we are specifically saying we want the source to be 1.1.1.1.

IGMPv3 is going to be used for a host of reasons, but our main concern with it for the CCIE DC is its use in SSM (Source Specific Multicast). SSM in combination with IGMPv3 means that we already know the source address of the multicast stream; we don't need an RP to tell us that, because the receiver requesting the stream has told us via IGMPv3! Since we already know the source address, we can just send a normal join request up the tree towards the source. No more shared trees, yet we are still using PIM Sparse Mode. Hooray!

SSM has other advantages too. Because we are now joining multicast groups based on an (S,G) entry, and because the S is always unique, we can avoid multicast collisions. Let's say, for example, you wanted to stream out on the internet: you have this great idea for "the next YouTube" using multicast, so you start sending multicast traffic to 238.0.0.1. What's to stop someone else using that exact same address at some point in time to stream THEIR multicast traffic? Why should the ISP you're using forward this traffic for you? How do they know you have receivers who really want to hear this traffic? With SSM every multicast stream is unique because the multicast route is (S,G), not (*,G).

The 232.0.0.0/8 range has been set aside by the IETF for use on the internet for SSM multicast.

Let's load up our topology files and start checking this out!

If you read my first multicast post, you will know that this is a LIVE follow-along blog post that you can try out yourself; all the configs you need for GNS3 and the topology file are available here.

Here is a diagram of the network:

OK, let's get started.

Once you have enabled PIM sparse mode on all interfaces, the only remaining bit of configuration is to enable SSM for your multicast range with the following command on each router (except the source and receiver routers):

ip pim ssm default

The default keyword tells PIM to treat the range 232.0.0.0/8 as an SSM range; you could specify any range you wanted here, however.
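If it helps, the decision the router makes here boils down to a simple range check. A minimal sketch in Python, assuming the default range unless you pass your own (the function name and the custom range in the last line are purely illustrative):

```python
import ipaddress

# The range that "ip pim ssm default" treats as SSM
DEFAULT_SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")


def is_ssm_group(group, ssm_range=DEFAULT_SSM_RANGE):
    """Return True if the group address falls inside the SSM range."""
    return ipaddress.ip_address(group) in ssm_range


print(is_ssm_group("232.1.1.1"))   # group used in this lab
print(is_ssm_group("239.1.1.1"))   # ordinary (ASM) group
# A made-up custom range, just to show the parameter
print(is_ssm_group("239.232.0.5", ipaddress.ip_network("239.232.0.0/16")))
```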

Let's take a look at what happens when our receiver joins the multicast group.

Receiver1:

interface GigabitEthernet1/0

ip address 2.2.2.1 255.255.255.0

ip pim sparse-mode (translator's note: personally, I don't think the receiver side needs this enabled)

ip igmp join-group 232.1.1.1 source 1.1.1.1

ip igmp version 3

You can see the config....

You see the messages get sent:

debug ip igmp

*Feb 3 18:56:55.539: IGMP(0): Building v3 Report on GigabitEthernet1/0

*Feb 3 18:56:55.543: IGMP(0): Add Group Record for 239.1.1.1, type 5

*Feb 3 18:56:55.547: IGMP(0): No sources to add, group record removed from report

*Feb 3 18:56:55.551: IGMP(0): Add Group Record for 239.1.1.1, type 6

*Feb 3 18:56:55.551: IGMP(0): Add Source Record 1.1.1.1

*Feb 3 18:56:55.551: IGMP(0): Send unsolicited v3 Report with 1 group records on GigabitEthernet1/0

But for some reason... the multicast routing table on PIM2 does not update:

PIM2#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:09:50/00:02:12, RP 0.0.0.0, flags: DL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

GigabitEthernet2/0, Forward/Sparse, 00:09:37/stopped

Let's investigate further. Run debug ip igmp on PIM2 and you will get your answer:

*Feb 3 18:58:41.547: IGMP(0): Received v3 Query on GigabitEthernet2/0 from 2.2.2.1

*Feb 3 18:58:45.535: IGMP(0): v3 report on interface configured for v2, ignored

Ahh, it is now obvious what has happened: you need to specify IGMP version 3 on this interface. This is an example of the kind of troubleshooting you might have to do as part of the CCIE DC, so keep it in mind!

OK, let's enable IGMPv3 on PIM2 and watch what happens...

PIM2(config)#int gi2/0

PIM2(config-if)#ip igmp version 3

*Feb 3 18:59:50.507: IGMP(0): Switching IGMP version from 2 to 3 on GigabitEthernet2/0

*Feb 3 18:59:50.511: IGMP(0): The v3 querier for GigabitEthernet2/0 is this system

*Feb 3 18:59:50.515: IGMP(0): Send v3 init Query on GigabitEthernet2/0

*Feb 3 18:59:51.931: IGMP(0): Received v3 Report for 2 groups on GigabitEthernet2/0 from 2.2.2.1

*Feb 3 18:59:51.931: IGMP(0): Received Group record for group 224.0.1.40, mode 2 from 2.2.2.1 for 0 sources

*Feb 3 18:59:51.931: IGMP(0): WAVL Insert group: 224.0.1.40 interface: GigabitEthernet2/0Successful

*Feb 3 18:59:51.935: IGMP(0): Switching to EXCLUDE mode for 224.0.1.40 on GigabitEthernet2/0

*Feb 3 18:59:51.935: IGMP(0): Updating EXCLUDE group timer for 224.0.1.40

*Feb 3 18:59:51.935: IGMP(0): MRT Add/Update GigabitEthernet2/0 for (*,224.0.1.40) by 0

*Feb 3 18:59:51.939: IGMP(0): Received Group record for group 232.1.1.1, mode 1 from 2.2.2.1 for 1 sources

*Feb 3 18:59:51.943: IGMP(0): WAVL Insert group: 232.1.1.1 interface: GigabitEthernet2/0Successful

*Feb 3 18:59:51.943: IGMP(0): Create source 1.1.1.1

*Feb 3 18:59:51.943: IGMP(0): Updating expiration time on (1.1.1.1,232.1.1.1) to 180 secs

*Feb 3 18:59:51.943: IGMP(0): Setting source flags 4 on (1.1.1.1,232.1.1.1)

*Feb 3 18:59:51.943: IGMP(0): MRT Add/Update GigabitEthernet2/0 for (1.1.1.1,232.1.1.1) by 0

*Feb 3 18:59:51.963: PIM(0): Insert (1.1.1.1,232.1.1.1) join in nbr 10.0.0.1's queue

*Feb 3 18:59:51.979: PIM(0): Building Join/Prune packet for nbr 10.0.0.1

*Feb 3 18:59:51.983: PIM(0): Adding v2 (1.1.1.1/32, 232.1.1.1), S-bit Join

*Feb 3 18:59:51.983: PIM(0): Send v2 join/prune to 10.0.0.1 (GigabitEthernet3/0)

Let's go through these entries, shall we? The first thing we see once we enable IGMPv3 on the interface is that the router basically asks "Hey guys, is anyone out there?". Our receiver router sends a report back: "Hey, I am here, and I am part of a few groups!". The first group is 224.0.1.40, which we don't really care about, but the next one is the one we DO care about: we have received a group record for 232.1.1.1 and, importantly, for 1 source, which is 1.1.1.1. Once the router knows the source, it checks how it would route to reach 1.1.1.1 (remember, next hop reachability! 😃).

PIM2#show ip route 1.1.1.1

Routing entry for 1.1.1.0/24

Known via "ospf 1", distance 110, metric 2, type intra area

Last update from 10.0.0.1 on GigabitEthernet3/0, 00:17:50 ago

Routing Descriptor Blocks:

* 10.0.0.1, from 1.1.1.2, 00:17:50 ago, via GigabitEthernet3/0

Route metric is 2, traffic share count is 1

The router determines that the route to 1.1.1.1 is via Gi3/0, so a PIM join message is sent out of that interface towards the neighbor 10.0.0.1.
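The lookup the router just did is an ordinary longest-prefix match on the unicast routing table, keyed on the source address. Here is a rough sketch of the idea in Python. The 1.1.1.0/24 entry mirrors the show ip route output above; the second entry and the function name are assumptions added only so the table has more than one row.

```python
import ipaddress

# Simplified unicast routing table: (prefix, next hop, outgoing interface).
# Only the first row comes from the output above; the rest is assumed.
ROUTES = [
    ("1.1.1.0/24", "10.0.0.1", "GigabitEthernet3/0"),
    ("2.2.2.0/24", None, "GigabitEthernet2/0"),   # assumed directly connected
]


def rpf_lookup(source, routes=ROUTES):
    """Return (next hop, interface) for the longest prefix matching source."""
    addr = ipaddress.ip_address(source)
    best = None
    for prefix, next_hop, interface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop, interface)
    if best is None:
        return None          # no route to the source, so no join can be sent
    return best[1], best[2]


print(rpf_lookup("1.1.1.1"))
```

Whatever interface comes back is where the (S,G) join goes, and it also becomes the incoming interface for the multicast route.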

Wooo Hoo! Let's check out the multicast routing table of the appropriate routers:

PIM2#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 232.1.1.1), 00:05:27/00:02:23, flags: sTI

Incoming interface: GigabitEthernet3/0, RPF nbr 10.0.0.1

Outgoing interface list:

GigabitEthernet2/0, Forward/Sparse, 00:05:27/00:02:23

We can see from the above output that we now have an entry for the (S,G) pair (1.1.1.1, 232.1.1.1). The flags are quite interesting: a small s for SSM group, T for SPT-bit set (in other words, this is a shortest path tree, not a shared tree, since we have not involved an RP in any way, shape or form) and finally an I flag to say the receiver asked for a particular source!
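If you find yourself squinting at flag strings a lot, a tiny lookup table does the job. This sketch copies a subset of the legend from the show ip mroute output above; the function name is just something I made up.

```python
# Subset of the flag legend printed by "show ip mroute"
MROUTE_FLAGS = {
    "D": "Dense", "S": "Sparse", "B": "Bidir Group", "s": "SSM Group",
    "C": "Connected", "L": "Local", "P": "Pruned", "R": "RP-bit set",
    "F": "Register flag", "T": "SPT-bit set", "J": "Join SPT",
    "I": "Received Source Specific Host Report",
}


def decode_flags(flags):
    """Expand a flag string such as 'sTI' into readable names."""
    return [MROUTE_FLAGS.get(ch, f"unknown flag {ch!r}") for ch in flags]


print(decode_flags("sTI"))
```

Note that the lookup is case sensitive on purpose: a capital S is plain Sparse, while the lowercase s is the one that tells you the group is in the SSM range.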

Let's look at PIM1's multicast routing table:

PIM1#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 232.1.1.1), 00:07:10/00:03:11, flags: sT

Incoming interface: GigabitEthernet1/0, RPF nbr 1.1.1.1

Outgoing interface list:

GigabitEthernet3/0, Forward/Sparse, 00:07:10/00:03:11

The tree has been built and we haven't involved an RP at any point in time! (In my config, I don't even have an RP specified. Go ahead and try it yourself without an RP!)

Let's try a ping:

Source1Receiver2#ping 232.1.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 232.1.1.1, timeout is 2 seconds:

Reply to request 0 from 2.2.2.1, 80 ms

Success! We have a multicast tree from our source to our receiver.

Let's try getting clever here. On Source1, let's create a loopback interface and see if traffic sourced from it can reach the receiver...

Source1Receiver2(config)#int lo1

Source1Receiver2(config-if)#ip add 50.50.50.50 255.255.255.255

Source1Receiver2#ping 2.2.2.1 source lo1

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 2.2.2.1, timeout is 2 seconds:

Packet sent with a source address of 50.50.50.50

!!!!!

Source1Receiver2#ping 232.1.1.1 source lo1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 232.1.1.1, timeout is 2 seconds:

Packet sent with a source address of 50.50.50.50

.

No dice! The source has unicast reachability to 2.2.2.1 from 50.50.50.50, but the receiver never asked for that source, so no multicast tree has been built for it and the ping fails. PIM1 still only has state for 1.1.1.1:
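One way to picture why the second ping dies: for an SSM group the router's multicast state is keyed on the exact (source, group) pair, and there is no wildcard entry to fall back on. A deliberately simplified model in Python (the dictionary stands in for PIM1's mroute table, and the names are hypothetical):

```python
# Toy stand-in for PIM1's multicast routing table: state exists only for
# the (source, group) pairs that a receiver explicitly asked for.
MROUTES = {
    ("1.1.1.1", "232.1.1.1"): ["GigabitEthernet3/0"],
}


def outgoing_interfaces(source, group, mroutes=MROUTES):
    """Return the outgoing interface list, or [] if there is no (S,G) state."""
    return mroutes.get((source, group), [])


print(outgoing_interfaces("1.1.1.1", "232.1.1.1"))      # forwarded
print(outgoing_interfaces("50.50.50.50", "232.1.1.1"))  # nothing to forward to
```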

PIM1#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 232.1.1.1), 00:11:14/00:03:02, flags: sT

Incoming interface: GigabitEthernet1/0, RPF nbr 1.1.1.1

Outgoing interface list:

GigabitEthernet3/0, Forward/Sparse, 00:11:14/00:03:02

The more observant among you might have noticed that there is no (*,G) tree at all: because there is no RP, there is no way to establish a (*,G) tree.

Let's try a few more experiments.

Create a loopback on the RP router and specify it as the RP on PIM1 and PIM2.

RP:

int lo1

ip add 3.3.3.3 255.255.255.255

ip pim rp-address 3.3.3.3

PIM1/PIM2:

ip pim rp-address 3.3.3.3

Now that's done, let's see what happens when we try to source traffic from 50.50.50.50 again.

Source1Receiver2#ping 232.1.1.1 source lo1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 232.1.1.1, timeout is 2 seconds:

Packet sent with a source address of 50.50.50.50

.

Still no luck. Why?

The reason is that we have set aside this range for SSM: the routers will absolutely not forward any multicast traffic for these groups unless they have received a join that explicitly specifies the source.

On the receiver router, we have now removed the source and specified just the group, so there is no specific source listed:

interface GigabitEthernet1/0

ip address 2.2.2.1 255.255.255.0

ip pim sparse-mode

ip igmp join-group 232.1.1.2

ip igmp version 3

!

What happens now? (We changed the group to 232.1.1.2 to make this easier to see.)

The ip mroute table on PIM2 shows that no tree has been built for this group:

PIM2#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:31:56/00:02:15, RP 3.3.3.3, flags: SJCL

Incoming interface: GigabitEthernet1/0, RPF nbr 10.2.0.2

Outgoing interface list:

GigabitEthernet2/0, Forward/Sparse, 00:31:43/00:02:15

No entry in the multicast table? Then the traffic won't be forwarded...

The following IGMP debug messages show up on PIM2:

*Feb 3 19:18:24.323: IGMP(0): Received v2 Query on GigabitEthernet3/0 from 10.0.0.1

*Feb 3 19:18:25.063: IGMP(0): Received v3 Report for 1 group on GigabitEthernet2/0 from 2.2.2.1

*Feb 3 19:18:25.067: IGMP(0): Received Group record for group 232.1.1.2, mode 4 from 2.2.2.1 for 0 sources

*Feb 3 19:18:25.071: IGMP(0): Group Record mode 4 for SSM group 232.1.1.2 from 2.2.2.1 on GigabitEthernet2/0, ignored

The last line tells us all we need to know: our receiver is asking to join (*,232.1.1.2), but 232.0.0.0/8 is an SSM range, so the (*,G), or Any Source Multicast (ASM), IGMP join request is completely ignored.
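The "mode" numbers in these debugs are the IGMPv3 group record types from RFC 3376: 1 and 2 report the current INCLUDE or EXCLUDE state, 3 and 4 are changes to INCLUDE or EXCLUDE mode, and 5 and 6 allow new sources or block old ones. Below is a very rough Python model of the decision we just watched the router make. It is my own simplification, not how IOS is actually implemented, and it ignores things like EXCLUDE records that carry a source list.

```python
import ipaddress

SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")

# IGMPv3 group record types (RFC 3376), grouped by what they ask for
INCLUDE_TYPES = {1: "MODE_IS_INCLUDE", 3: "CHANGE_TO_INCLUDE_MODE",
                 5: "ALLOW_NEW_SOURCES"}
EXCLUDE_TYPES = {2: "MODE_IS_EXCLUDE", 4: "CHANGE_TO_EXCLUDE_MODE"}


def handle_group_record(group, record_type, sources):
    """Return the multicast routes to create; an empty list means ignored."""
    in_ssm_range = ipaddress.ip_address(group) in SSM_RANGE
    if record_type in EXCLUDE_TYPES:
        # EXCLUDE with no sources is the IGMPv3 way of saying "join (*,G)"
        return [] if in_ssm_range else [("*", group)]
    if record_type in INCLUDE_TYPES:
        return [(source, group) for source in sources]
    return []   # e.g. type 6 (BLOCK_OLD_SOURCES) is not modelled here


print(handle_group_record("232.1.1.1", 1, ["1.1.1.1"]))  # the earlier SSM join
print(handle_group_record("232.1.1.2", 4, []))           # the ignored ASM join
print(handle_group_record("224.0.1.40", 2, []))          # normal (*,G) join
```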

If we join a group in a multicast range that is not enabled for SSM...

Source2Receiver1(config)#int gi1/0

Source2Receiver1(config-if)#ip igmp join

Source2Receiver1(config-if)#ip igmp join-group 239.1.1.1

Our multicast table is built as normal, with the tree facing towards the RP.

PIM2#show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.1), 00:00:09/00:02:50, RP 3.3.3.3, flags: SJC

Incoming interface: GigabitEthernet1/0, RPF nbr 10.2.0.2

Outgoing interface list:

GigabitEthernet2/0, Forward/Sparse, 00:00:09/00:02:50

So as you can see, SSM groups and normal (ASM) multicast groups can live together in complete harmony.

I hope SSM has been adequately described to you. In the next blog post we will look at Bi-Directional PIM.
