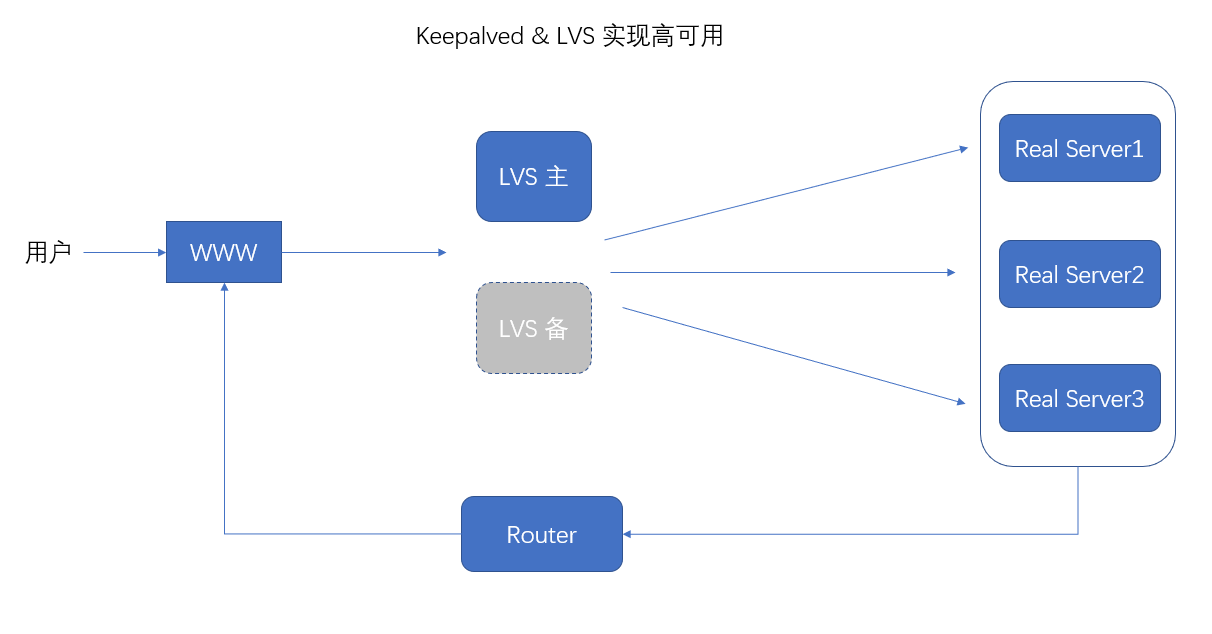

Building a High-Availability Cluster with Keepalived & LVS

Topology:

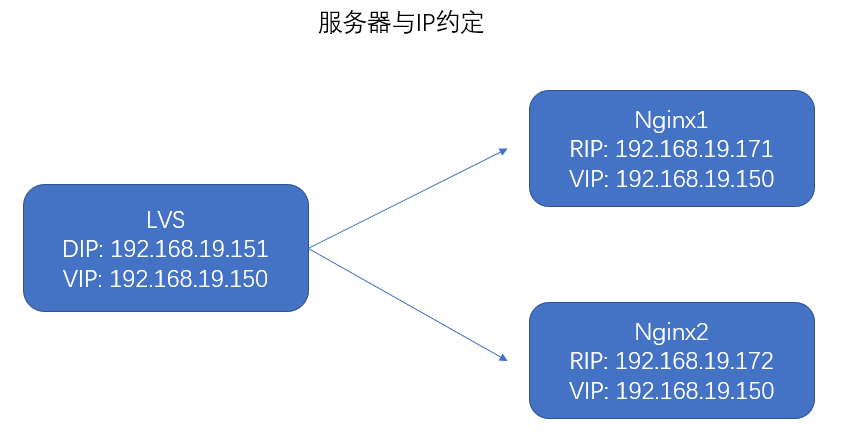

Server and IP conventions:

1. Disable NetworkManager on the LVS nodes and the real server (RS) nodes

systemctl stop NetworkManager

systemctl disable NetworkManager

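2. Configure the virtual IP (VIP)

This manual step applies only to the LVS + nginx setup. With keepalived + LVS, keepalived configures the VIP on the LVS node from keepalived.conf and also adds the IPVS rules for the RS nodes.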

# LVS node

# /etc/sysconfig/network-scripts/ifcfg-ens33

# cp if..-ens33 if..ens33:1 # copy the file under an alias name

# vi if..ens33:1

BOOTPROTO="static"

DEVICE="ens33:1"

ONBOOT="yes"

IPADDR=192.168.19.150 # vip, dip -> 151

NETMASK=255.255.255.0

# service network restart

# ip addr # or ifconfig; ens33:1 should now show 192.168.19.150
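The alias file from this step can also be generated instead of edited by hand. The following is a minimal sketch; `render_vip_alias` is a hypothetical helper name, and it only prints the file content without touching the system.

```shell
#!/bin/sh
# Print an ifcfg alias file for the VIP (hypothetical helper, not part of the original steps).
render_vip_alias() {
    # $1 = alias device, $2 = VIP, $3 = netmask
    cat <<EOF
BOOTPROTO="static"
DEVICE="$1"
ONBOOT="yes"
IPADDR=$2
NETMASK=$3
EOF
}

# Example: print the alias file used in this step to stdout.
render_vip_alias "ens33:1" "192.168.19.150" "255.255.255.0"
```

To apply it, redirect the output as root to /etc/sysconfig/network-scripts/ifcfg-ens33:1 and restart the network service as shown above.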

3. Install the cluster tools

# yum install -y ipvsadm

# ipvsadm -Ln

---

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

4. Configure the RS nodes

# on both RS nodes, 171 and 172

# /etc/sysconfig/networks-../

# cp ifcfg-lo ifcfg-lo:1 # todo

# vi ifcfg-lo:1

---

DEVICE="lo:1"

IPADDR=192.168.19.150

NETMASK=255.255.255.255

ONBOOT="yes"

NETWORK=127.0.0.0

BROADCAST=127.255.255.255

NAME=loopback

# ifup lo # or: service network restart

# ip addr # or ifconfig; lo:1 should now show 192.168.19.150

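5. Configure ARP behavior on the RS nodes (same steps on 171 and 172)

arp_ignore: how the host responds to incoming ARP requests

0: reply to an ARP request for any IP address configured on this host, regardless of which interface holds it

1: reply only when the requested target address is configured on the interface that received the request # value used here

arp_announce: which source address the host uses when it sends ARP announcements

0: any local address on any interface may be announced, so every NIC can end up advertising the address

1: try to avoid announcing addresses that do not belong to the target's subnet on this interface

2: always announce only the best-matching address of the outgoing interface # value used here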

vi /etc/sysctl.conf

---

# append the following

# LVS conf

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

---

sysctl -p # reload the settings
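After reloading, it can help to confirm that the kernel reports the intended values. The sketch below reads them from /proc/sys; the function names and the `SYSCTL_ROOT` override are hypothetical and exist only so the check can also be pointed at a test directory.

```shell
#!/bin/sh
# Compare the ARP settings used in this step with the values the kernel reports.
# SYSCTL_ROOT is a hypothetical override so the check can run against a test tree.
SYSCTL_ROOT="${SYSCTL_ROOT:-/proc/sys}"

check_arp_setting() {
    # $1 = scope (all, default, lo), $2 = parameter name, $3 = expected value
    file="$SYSCTL_ROOT/net/ipv4/conf/$1/$2"
    if [ ! -r "$file" ]; then
        echo "MISSING net.ipv4.conf.$1.$2"
        return 1
    fi
    actual=$(cat "$file")
    if [ "$actual" = "$3" ]; then
        echo "OK net.ipv4.conf.$1.$2 = $actual"
    else
        echo "MISMATCH net.ipv4.conf.$1.$2 = $actual (expected $3)"
        return 1
    fi
}

check_all_arp_settings() {
    # Check the six values appended to /etc/sysctl.conf; non-zero exit on any mismatch.
    status=0
    for scope in all default lo; do
        check_arp_setting "$scope" arp_ignore 1 || status=1
        check_arp_setting "$scope" arp_announce 2 || status=1
    done
    return $status
}
```

Running `check_all_arp_settings` on each RS node prints one line per setting and returns a non-zero status if any value differs.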

route add -host 192.168.19.150 dev lo:1 # add a host route that delivers packets for 192.168.19.150 to lo:1; lost on reboot

route -n # list the routes and confirm the 192.168.19.150 entry on lo

echo "route add -host 192.168.19.150 dev lo:1" >> /etc/rc.local # persist the route across reboots
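Running the `echo ... >>` line more than once leaves duplicate entries in /etc/rc.local. A minimal sketch of an idempotent variant follows; `append_once` is a hypothetical helper name.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
append_once() {
    # $1 = line to add, $2 = target file
    touch "$2"
    if ! grep -qxF -- "$1" "$2"; then
        printf '%s\n' "$1" >> "$2"
    fi
}

# Usage on an RS node (as root):
#   append_once "route add -host 192.168.19.150 dev lo:1" /etc/rc.local
```

On CentOS 7, /etc/rc.d/rc.local is typically not executable by default, so `chmod +x /etc/rc.d/rc.local` may be needed before the entry takes effect at boot.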

# The real servers are now fully configured; next come the IPVS rules on the LVS host

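6. Add the IPVS rules

ipvsadm -A -t 192.168.19.150:80 -s rr # -A adds a virtual service, -t marks a TCP service, -s sets the scheduler (rr, wrr, lc, wlc, ...)

ipvsadm -a -t 192.168.19.150:80 -r 192.168.19.171:80 -g # -a adds a real server, -t marks a TCP service, -r gives the RS ip:port, -g selects gatewaying (direct routing), the default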

ipvsadm -a -t 192.168.19.150:80 -r 192.168.19.172:80 -g
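With more real servers, the three commands above follow a fixed pattern: one `-A` for the virtual service, then one `-a` per RS. The sketch below only prints the commands; `print_dr_rules` is a hypothetical helper name and nothing is executed.

```shell
#!/bin/sh
# Print the ipvsadm commands for one virtual service in DR mode.
# Nothing is executed; pipe the output to `sh` as root to apply it.
print_dr_rules() {
    # $1 = VIP:port, $2 = scheduler, remaining arguments = RS ip:port entries
    vs="$1"
    sched="$2"
    shift 2
    echo "ipvsadm -A -t $vs -s $sched"
    for rs in "$@"; do
        echo "ipvsadm -a -t $vs -r $rs -g"
    done
}

# Reproduces the three commands of this step.
print_dr_rules 192.168.19.150:80 rr 192.168.19.171:80 192.168.19.172:80
```

Printing first makes it easy to review the rules before applying them.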

---

--add-service -A add virtual service with options # add a virtual server

--edit-service -E edit virtual service with options # edit a virtual service

--delete-service -D delete virtual service

--clear -C clear the whole table

--restore -R restore rules from stdin

--save -S save rules to stdout

--add-server -a add real server with options # add a real server (RS)

--edit-server -e edit real server with options

--delete-server -d delete real server

--list -L|-l list the table # list the rules

--zero -Z zero counters in a service or all services

--set tcp tcpfin udp set connection timeout values

--tcp-service -t service-address service-address is host[:port] # TCP service, followed by the virtual service's host:port, i.e. the LVS VIP

--udp-service -u service-address service-address is host[:port]

--fwmark-service -f fwmark fwmark is an integer greater than zero

--ipv6 -6 fwmark entry uses IPv6

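--scheduler -s scheduler one of rr|wrr|lc|wlc|lblc|lblcr|dh|sh|sed|nq,

the default scheduler is wlc. # scheduling algorithm: round robin, weighted round robin, least connection, weighted least connection, locality-based least connection, etc.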

--pe engine alternate persistence engine may be sip,

not set by default.

--persistent -p [timeout] persistent service

--netmask -M netmask persistent granularity mask

--real-server -r server-address server-address is host (and port)

--gatewaying -g gatewaying (direct routing) (default) # forwarding method; direct routing is the default

--ipip -i ipip encapsulation (tunneling)

--masquerading -m masquerading (NAT)

--weight -w weight capacity of real server

---

7. Verify in Chrome

# Add this entry to the Windows 10 hosts file: 192.168.19.150 www.lvs.com

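# Open www.lvs.com in Chrome; the request lands on the nginx server of node 172

# On refresh the request always lands on 172; the persistence settings confirm why

# -p, --persistent [timeout]: requests from the same client keep going to the RS that served its first request; the default timeout is 300s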

ipvsadm -E -t 192.168.19.150:80 -s rr -p 10 # edit the virtual service and set -p (persistence) to 10s

ipvsadm --set 1 1 1 # set the tcp, tcpfin, and udp connection timeouts to 1s each so that rotation shows up quickly during testing
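Browser refreshes are a coarse way to see how requests are distributed. As a rough alternative, responses can be counted from the command line. The sketch assumes each nginx node serves a page whose first line identifies the node, which the original setup does not state; `tally_responses` is a hypothetical helper name.

```shell
#!/bin/sh
# Count how often each distinct line appears on stdin; prints "<count> <line>".
tally_responses() {
    awk '{ n[$0]++ } END { for (k in n) print n[k], k }' | sort -k2
}

# Usage from a client that resolves www.lvs.com to the VIP
# (assumes the first line of each page names the serving node):
#   for i in $(seq 1 10); do curl -s http://www.lvs.com/ | head -n 1; done | tally_responses
```

With `rr` and no persistence the counts should come out roughly even; with `-p` set, one node is expected to receive all requests until the timeout expires.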

8. High availability with keepalived + LVS

First clear the existing IPVS rules: ipvsadm -C

Environment:

- 2 LVS servers -> master & backup

- 2 real servers -> 171 & 172

# configure keepalived.conf on the master node

---

! Configuration File for keepalived

global_defs {

# router_id: a globally unique identifier for this host

router_id keep_151

}

# vrrp conf, keepalived + nginx

vrrp_instance VI_1 {

# host -> master

state MASTER

# host interface name

interface ens33

# must be identical on master and backup

virtual_router_id 51

# priority: the node with the higher value becomes master when the current master goes down

priority 100

# advertisement interval between master and backup, default 1s

advert_int 1

# authentication type and password; must match on both nodes

authentication {

auth_type PASS

auth_pass 1111

}

# vip conf

virtual_ipaddress {

192.168.19.150

}

}

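virtual_server 192.168.19.150 80 { # LVS section, keyed by the VIP

delay_loop 6

lb_algo rr # scheduling algorithm: round robin

lb_kind DR # forwarding mode: direct routing (DR)

nat_mask 255.255.255.0

# persistence_timeout 50 # persistent connections; keep this commented out while testing, otherwise all requests within the timeout go to the same RS

protocol TCP

sorry_server 127.0.0.1 80 # address and port of the sorry server

# The sorry server provides emergency service when all backend servers are down.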

real_server 192.168.19.171 80 { # RS address and port

weight 1 # RS weight

TCP_CHECK {

# health check

connect_port 80 # port to check

connect_timeout 2 # time out after 2s

nb_get_retry 2 # retry 2 times

delay_before_retry 3 # wait 3s between retries

}

}

real_server 192.168.19.172 80 {

weight 1

TCP_CHECK {

# health check

connect_port 80 # port to check

connect_timeout 2 # time out after 2s

nb_get_retry 2 # retry 2 times

delay_before_retry 3 # wait 3s between retries

}

}

}

---

# backup node

# vi keepalived.conf

---

! Configuration File for keepalived

global_defs {

# router_id: a globally unique identifier for this host

router_id keep_152 # named differently from keep_151 on the master

}

# vrrp conf, keepalived + nginx

vrrp_instance VI_1 {

# host -> backup

state BACKUP # backup node

# host interface name

interface ens33

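# must be identical on master and backup

virtual_router_id 51 # same group as the master

# priority: the node with the higher value becomes master when the current master goes down

priority 50 # backup node, lower priority

# advertisement interval between master and backup, default 1s

advert_int 1

# authentication type and password; must match on both nodes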

authentication {

auth_type PASS

auth_pass 1111

}

# vip conf

virtual_ipaddress {

192.168.19.150

}

}

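virtual_server 192.168.19.150 80 { # LVS section, keyed by the VIP

delay_loop 6

lb_algo rr # scheduling algorithm: round robin

lb_kind DR # forwarding mode: direct routing (DR)

nat_mask 255.255.255.0

# persistence_timeout 50 # persistent connections; keep this commented out while testing, otherwise all requests within the timeout go to the same RS

protocol TCP

sorry_server 127.0.0.1 80 # address and port of the sorry server

# The sorry server provides emergency service when all backend servers are down.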

real_server 192.168.19.171 80 { # RS address and port

weight 1 # RS weight

TCP_CHECK {

# health check

connect_port 80 # port to check

connect_timeout 2 # time out after 2s

nb_get_retry 2 # retry 2 times

delay_before_retry 3 # wait 3s between retries

}

}

real_server 192.168.19.172 80 {

weight 1

TCP_CHECK {

# health check

connect_port 80 # port to check

connect_timeout 2 # time out after 2s

nb_get_retry 2 # retry 2 times

delay_before_retry 3 # wait 3s between retries

}

}

}

---
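The two files have to agree on virtual_router_id, and the master needs the higher priority. A small sketch for checking this before restarting keepalived follows; `conf_value` and `check_vrrp_pair` are hypothetical helper names, and the parsing assumes one directive per line as in the configs above.

```shell
#!/bin/sh
# Print the first value of a directive in a keepalived.conf-style file.
conf_value() {
    # $1 = directive name, $2 = config file; comment lines start with "#" and are skipped
    awk -v key="$1" '$1 == key { print $2; exit }' "$2"
}

# Compare the VRRP settings of a master and a backup config file.
check_vrrp_pair() {
    # $1 = master config, $2 = backup config
    m_id=$(conf_value virtual_router_id "$1")
    b_id=$(conf_value virtual_router_id "$2")
    m_pr=$(conf_value priority "$1")
    b_pr=$(conf_value priority "$2")
    if [ "$m_id" != "$b_id" ]; then
        echo "virtual_router_id differs: $m_id vs $b_id"
        return 1
    fi
    if ! [ "$m_pr" -gt "$b_pr" ] 2>/dev/null; then
        echo "master priority $m_pr is not above backup priority $b_pr"
        return 1
    fi
    echo "ok: virtual_router_id $m_id, priority $m_pr > $b_pr"
}
```

With copies of both files on one machine, `check_vrrp_pair master.conf backup.conf` (file names are placeholders) prints a single summary line and returns non-zero on a mismatch.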

# restart keepalived

systemctl restart keepalived

# check the IPVS rules

ipvsadm -Ln
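Besides the IPVS table, it is useful to see which LVS node currently holds the VIP, for example before and after stopping keepalived on the master. A minimal sketch follows; `has_vip` is a hypothetical helper name that only inspects text read from stdin.

```shell
#!/bin/sh
# Report whether the VIP appears in `ip -o -4 addr show` output read from stdin.
has_vip() {
    # $1 = VIP; match "inet <VIP>/" so that 192.168.19.15 does not match 192.168.19.150
    grep -qF -- "inet $1/"
}

# Usage on an LVS node (interface name as in the configs above):
#   ip -o -4 addr show dev ens33 | has_vip 192.168.19.150 && echo "this node holds the VIP"
```

Only one of the two LVS nodes is expected to report the VIP at a time; after a failover it should move to the backup.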

# make sure port 80 is open on the LVS servers; the nginx servers need the port opened as well

firewall-cmd --zone=public --add-port=80/tcp --permanent

firewall-cmd --reload

# P.S. If no effect is visible for a long time during testing, run ipvsadm --set 1 1 1 to shorten the connection timeouts
