Installing and Using the Pinyin and IK Analyzers in Elasticsearch

I. Downloading and Configuring the ES Plugins

Elasticsearch download: https://www.elastic.co/cn/downloads/past-releases/elasticsearch-5-6-9

Kibana (the visualization tool for ES) download: https://www.elastic.co/cn/downloads/past-releases/kibana-5-6-9

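1. Downloading and installing the IK analyzer

The IK analyzer needs little introduction. In short, it is currently a very widely used Chinese analyzer with comparatively good segmentation quality, and most ES projects that handle Chinese text use it.

Download: https://github.com/medcl/elasticsearch-analysis-ik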

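2. Downloading and installing the pinyin analyzer

On sites such as Taobao or JD.com you can type pinyin into the search box and still find the results you want. That is pinyin analysis at work: the pinyin analyzer converts Chinese text into pinyin tokens, so documents can then be found by searching with pinyin.

Download: https://github.com/medcl/elasticsearch-analysis-pinyin/releases?after=v5.6.11

Note: the plugin version must match your ES version exactly, and ES must be restarted after the plugins are installed before they take effect.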
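The version requirement in the note above can be checked before restarting. The following is a minimal sketch, assuming the unpacked plugin directory contains a `plugin-descriptor.properties` file with an `elasticsearch.version` entry; the sample descriptor text and the function names are made up for illustration.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def plugin_matches_es(descriptor_text, es_version):
    """Return True if the descriptor's elasticsearch.version equals es_version."""
    props = parse_properties(descriptor_text)
    return props.get("elasticsearch.version") == es_version


# Hypothetical descriptor content, for illustration only.
SAMPLE_DESCRIPTOR = """\
# sample plugin descriptor
name=analysis-ik
version=5.6.9
elasticsearch.version=5.6.9
"""

print(plugin_matches_es(SAMPLE_DESCRIPTOR, "5.6.9"))
print(plugin_matches_es(SAMPLE_DESCRIPTOR, "5.6.11"))
```

In practice the descriptor text would be read from the file inside each plugin folder rather than from a string constant.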

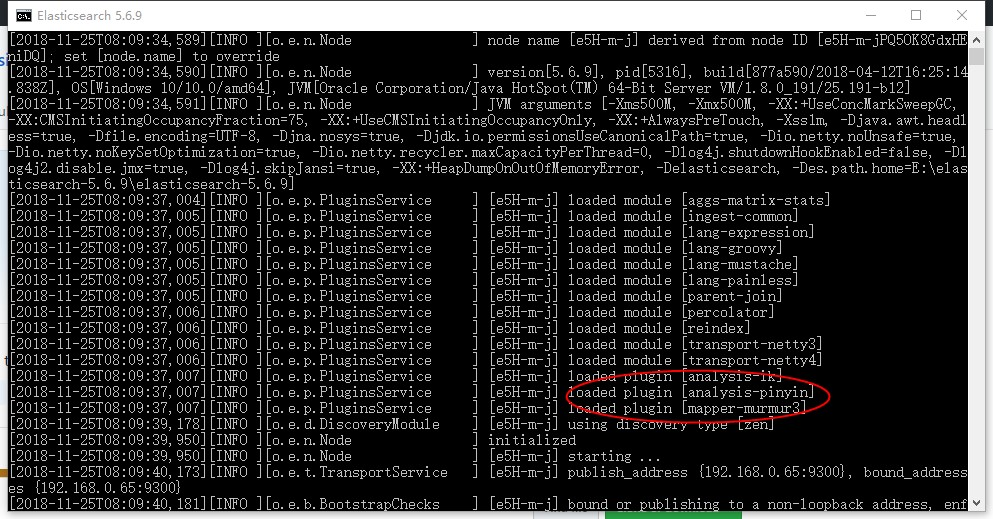

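Plugin installation location (I have three plugins installed; the murmur3 plugin is not covered here and can be ignored for now):

Once the plugins are in place, restart ES.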
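After the restart, one way to confirm that the plugins were loaded is to look at the output of `GET /_cat/plugins`. The sketch below only parses such output; it assumes the usual three whitespace-separated columns (node name, plugin name, version) and assumes the plugins register as `analysis-ik` and `analysis-pinyin`. The sample text is hypothetical, not captured from a real cluster.

```python
def installed_plugins(cat_output):
    """Map plugin name -> version from whitespace-separated _cat/plugins lines."""
    plugins = {}
    for line in cat_output.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        _node, component, version = parts
        plugins[component] = version
    return plugins


# Hypothetical output, for illustration only.
SAMPLE_CAT_OUTPUT = """\
node-1 analysis-ik     5.6.9
node-1 analysis-pinyin 5.6.9
node-1 mapper-murmur3  5.6.9
"""

found = installed_plugins(SAMPLE_CAT_OUTPUT)
missing = [name for name in ("analysis-ik", "analysis-pinyin") if name not in found]
print(found)
print(missing)
```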

II. Using the Pinyin Analyzer and the IK Analyzer

1. Using the IK Chinese analyzer

1.1 ik_smart: splits the text at the coarsest granularity

    GET /_analyze
    {
      "text": "中华人民共和国国徽",
      "analyzer": "ik_smart"
    }

Result:

    {
      "tokens": [
        { "token": "中华人民共和国", "start_offset": 0, "end_offset": 7, "type": "CN_WORD", "position": 0 },
        { "token": "国徽", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 1 }
      ]
    }

1.2 ik_max_word: splits the text at the finest granularity

    GET /_analyze
    {
      "text": "中华人民共和国国徽",
      "analyzer": "ik_max_word"
    }

Result:

    {
      "tokens": [
        { "token": "中华人民共和国", "start_offset": 0, "end_offset": 7, "type": "CN_WORD", "position": 0 },
        { "token": "中华人民", "start_offset": 0, "end_offset": 4, "type": "CN_WORD", "position": 1 },
        { "token": "中华", "start_offset": 0, "end_offset": 2, "type": "CN_WORD", "position": 2 },
        { "token": "华人", "start_offset": 1, "end_offset": 3, "type": "CN_WORD", "position": 3 },
        { "token": "人民共和国", "start_offset": 2, "end_offset": 7, "type": "CN_WORD", "position": 4 },
        { "token": "人民", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 5 },
        { "token": "共和国", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 6 },
        { "token": "共和", "start_offset": 4, "end_offset": 6, "type": "CN_WORD", "position": 7 },
        { "token": "国", "start_offset": 6, "end_offset": 7, "type": "CN_CHAR", "position": 8 },
        { "token": "国徽", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 9 }
      ]
    }
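The difference between the two modes shows up in the token offsets: in the responses above, the ik_smart tokens tile the text without overlapping, while the ik_max_word tokens cover overlapping character ranges. A small sketch that checks this, using only the offsets copied from those two responses (the helper name `has_overlap` is made up here):

```python
def has_overlap(tokens):
    """Return True if any two tokens cover overlapping character ranges."""
    spans = sorted((t["start_offset"], t["end_offset"]) for t in tokens)
    # After sorting by start offset, any overlap shows up between neighbours.
    return any(
        next_start < prev_end
        for (_, prev_end), (next_start, _) in zip(spans, spans[1:])
    )


def tok(token, start, end):
    """Build a token dict with just the fields needed for the check."""
    return {"token": token, "start_offset": start, "end_offset": end}


IK_SMART = [tok("中华人民共和国", 0, 7), tok("国徽", 7, 9)]

IK_MAX_WORD = [
    tok("中华人民共和国", 0, 7), tok("中华人民", 0, 4), tok("中华", 0, 2),
    tok("华人", 1, 3), tok("人民共和国", 2, 7), tok("人民", 2, 4),
    tok("共和国", 4, 7), tok("共和", 4, 6), tok("国", 6, 7), tok("国徽", 7, 9),
]

print(has_overlap(IK_SMART))
print(has_overlap(IK_MAX_WORD))
```

Overlapping tokens make more substrings searchable, at the cost of a larger index; the choice between the two modes depends on which matters more for the field.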

2. Using the pinyin analyzer

    GET /_analyze
    {
      "text": "刘德华",
      "analyzer": "pinyin"
    }

Result:

    {
      "tokens": [
        { "token": "liu", "start_offset": 0, "end_offset": 1, "type": "word", "position": 0 },
        { "token": "ldh", "start_offset": 0, "end_offset": 3, "type": "word", "position": 0 },
        { "token": "de", "start_offset": 1, "end_offset": 2, "type": "word", "position": 1 },
        { "token": "hua", "start_offset": 2, "end_offset": 3, "type": "word", "position": 2 }
      ]
    }
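The shape of this result (one full-pinyin token per character, plus a first-letter abbreviation at position 0) can be mimicked with a toy model. This is only an illustration of the output above, not the plugin's actual algorithm; the reading table is hard-coded for these three characters and `toy_pinyin_tokens` is a made-up name.

```python
# Hard-coded readings for illustration only; the real plugin uses its own dictionary.
READINGS = {"刘": "liu", "德": "de", "华": "hua"}


def toy_pinyin_tokens(text):
    """Return (token, position) pairs shaped like the response above."""
    syllables = [READINGS[ch] for ch in text]
    if not syllables:
        return []
    tokens = [(syllable, pos) for pos, syllable in enumerate(syllables)]
    # The joined first letters form one extra token that shares position 0.
    first_letters = "".join(s[0] for s in syllables)
    tokens.insert(1, (first_letters, 0))
    return tokens


print(toy_pinyin_tokens("刘德华"))
```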

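Note: with either the pinyin analyzer or the IK analyzer, a document can be found only through terms that the analyzer actually produced for it. A search term that is not among those tokens will not match.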
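A toy inverted index makes this rule concrete. The sketch below is a simplified model, not how Elasticsearch is implemented; the `analyze` stand-in simply returns the pinyin tokens shown in the response above, and all names are made up for illustration.

```python
from collections import defaultdict


class ToyIndex:
    """A minimal inverted index: term -> set of document ids."""

    def __init__(self, analyze):
        self._analyze = analyze
        self._postings = defaultdict(set)

    def add(self, doc_id, text):
        # Only the terms emitted by the analyzer are stored.
        for term in self._analyze(text):
            self._postings[term].add(doc_id)

    def search_term(self, term):
        return sorted(self._postings.get(term, set()))


def analyze(text):
    """Stand-in analyzer: returns the tokens from the pinyin response above."""
    known = {"刘德华": ["liu", "ldh", "de", "hua"]}
    return known.get(text, [])


index = ToyIndex(analyze)
index.add(1, "刘德华")

print(index.search_term("ldh"))     # a term the analyzer produced
print(index.search_term("dehua"))   # never produced, so no match
print(index.search_term("刘德华"))  # the original text was not kept as a token
```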

III. Combining the IK and Pinyin Analyzers

When creating an index we can define custom analyzers, and then reference them from the field mapping.

    PUT /my_index
    {
      "settings": {
        "analysis": {
          "analyzer": {
            "ik_smart_pinyin": {
              "type": "custom",
              "tokenizer": "ik_smart",
              "filter": ["my_pinyin", "word_delimiter"]
            },
            "ik_max_word_pinyin": {
              "type": "custom",
              "tokenizer": "ik_max_word",
              "filter": ["my_pinyin", "word_delimiter"]
            }
          },
          "filter": {
            "my_pinyin": {
              "type": "pinyin",
              "keep_separate_first_letter": true,
              "keep_full_pinyin": true,
              "keep_original": true,
              "limit_first_letter_length": 16,
              "lowercase": true,
              "remove_duplicated_term": true
            }
          }
        }
      }
    }
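Because the two custom analyzers differ only in their tokenizer, the same request body can also be generated programmatically. This is a minimal sketch that builds the body shown above and checks that every filter an analyzer references is either defined in the body or assumed to be built in (`word_delimiter` here); the helper names are made up for illustration.

```python
import json

# Same options as the my_pinyin filter in the request above.
PINYIN_FILTER = {
    "type": "pinyin",
    "keep_separate_first_letter": True,
    "keep_full_pinyin": True,
    "keep_original": True,
    "limit_first_letter_length": 16,
    "lowercase": True,
    "remove_duplicated_term": True,
}


def custom_analyzer(tokenizer):
    """An IK tokenizer followed by the pinyin and word_delimiter filters."""
    return {
        "type": "custom",
        "tokenizer": tokenizer,
        "filter": ["my_pinyin", "word_delimiter"],
    }


def build_index_settings():
    return {
        "settings": {
            "analysis": {
                "analyzer": {
                    "ik_smart_pinyin": custom_analyzer("ik_smart"),
                    "ik_max_word_pinyin": custom_analyzer("ik_max_word"),
                },
                "filter": {"my_pinyin": dict(PINYIN_FILTER)},
            }
        }
    }


def undefined_filters(body, builtin=("word_delimiter",)):
    """List filters referenced by analyzers but neither defined nor built in."""
    analysis = body["settings"]["analysis"]
    defined = set(analysis.get("filter", {})) | set(builtin)
    missing = set()
    for spec in analysis["analyzer"].values():
        missing.update(f for f in spec.get("filter", []) if f not in defined)
    return sorted(missing)


body = build_index_settings()
print(json.dumps(body, indent=2))
print(undefined_filters(body))
```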

When defining the mapping for the type, set the field's analyzer property to the name of the custom analyzer.

    PUT /my_index/my_type/_mapping
    {
      "my_type": {
        "properties": {
          "id": {
            "type": "integer"
          },
          "name": {
            "type": "text",
            "analyzer": "ik_smart_pinyin"
          }
        }
      }
    }

To test this, let's first add a few documents.

    POST /my_index/my_type/_bulk
    { "index": { "_id": 1 } }
    { "name": "张三" }
    { "index": { "_id": 2 } }
    { "name": "张四" }
    { "index": { "_id": 3 } }
    { "name": "李四" }
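Outside the Kibana console, the bulk body has to be sent as newline-delimited JSON: one action line and one source line per document, each on its own line, with a newline after the last line. A minimal sketch of building such a body for the three documents above (`build_bulk_body` is a made-up helper name):

```python
import json


def build_bulk_body(docs):
    """Build a newline-delimited bulk body from (doc_id, source) pairs."""
    lines = []
    for doc_id, source in docs:
        lines.append(json.dumps({"index": {"_id": doc_id}}))
        # ensure_ascii=False keeps the Chinese text readable in the payload.
        lines.append(json.dumps(source, ensure_ascii=False))
    # The bulk body must end with a newline.
    return "\n".join(lines) + "\n"


DOCS = [(1, {"name": "张三"}), (2, {"name": "张四"}), (3, {"name": "李四"})]

bulk_body = build_bulk_body(DOCS)
print(bulk_body, end="")
```

When sending this over HTTP, encode the string as UTF-8 first.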

Querying with IK tokens:

    GET /my_index/my_type/_search
    {
      "query": {
        "match": {
          "name": "李"
        }
      }
    }

Result:

    {
      "took": 3,
      "timed_out": false,
      "_shards": {
        "total": 5,
        "successful": 5,
        "skipped": 0,
        "failed": 0
      },
      "hits": {
        "total": 1,
        "max_score": 0.47160998,
        "hits": [
          {
            "_index": "my_index",
            "_type": "my_type",
            "_id": "3",
            "_score": 0.47160998,
            "_source": {
              "name": "李四"
            }
          }
        ]
      }
    }

Querying with pinyin:

    GET /my_index/my_type/_search
    {
      "query": {
        "match": {
          "name": "zhang"
        }
      }
    }

Result:

    {
      "took": 1,
      "timed_out": false,
      "_shards": {
        "total": 5,
        "successful": 5,
        "skipped": 0,
        "failed": 0
      },
      "hits": {
        "total": 2,
        "max_score": 0.3758317,
        "hits": [
          {
            "_index": "my_index",
            "_type": "my_type",
            "_id": "2",
            "_score": 0.3758317,
            "_source": {
              "name": "张四"
            }
          },
          {
            "_index": "my_index",
            "_type": "my_type",
            "_id": "1",
            "_score": 0.3758317,
            "_source": {
              "name": "张三"
            }
          }
        ]
      }
    }

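Note: before searching, check which tokens the indexed text is analyzed into. If the term you search for is not among those tokens, the query returns nothing.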
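One way to do that check from a script is to call the index's `_analyze` endpoint with the custom analyzer and look for the search term among the returned tokens. The sketch below only builds the request and inspects a token list; the base URL `http://localhost:9200` is an assumption about a local setup, and the helper names are made up for illustration.

```python
import json
import urllib.request


def build_analyze_request(base_url, index, analyzer, text):
    """Build a POST request for <base_url>/<index>/_analyze."""
    payload = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{index}/_analyze",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def is_searchable(tokens, term):
    """Return True if term is one of the tokens in an _analyze response."""
    return any(t["token"] == term for t in tokens)


# Assumed local address; adjust to your own cluster.
request = build_analyze_request(
    "http://localhost:9200", "my_index", "ik_smart_pinyin", "张三"
)
print(request.get_method(), request.full_url)

# Tokens copied from the pinyin _analyze response earlier in this article.
PINYIN_TOKENS = [{"token": "liu"}, {"token": "ldh"}, {"token": "de"}, {"token": "hua"}]
print(is_searchable(PINYIN_TOKENS, "ldh"))
print(is_searchable(PINYIN_TOKENS, "dehua"))
```

Against a running cluster, the request could be sent with `urllib.request.urlopen(request)` and the `tokens` array of the JSON response passed to `is_searchable`.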