数据采集第四次作业

作业一

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取当当网站图书数据

候选网站:http://www.dangdang.com/

关键词:学生自由选择

输出信息:MYSQL的输出信息如下

1)作业结果

代码:

myspider:

import scrapy

from ..items import DangdangItem

from bs4 import UnicodeDammit

class MySpider(scrapy.Spider):

name = "mySpider"

key = 'python'

source_url='http://search.dangdang.com/'

def start_requests(self):

url = MySpider.source_url+"?key="+MySpider.key

yield scrapy.Request(url=url,callback=self.parse)

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector = scrapy.Selector(text=data)

lis=selector.xpath("//li['@ddt-pit'][starts-with(@class,'line')]")

for li in lis:

title=li.xpath("./a[position()=1]/@title").extract_first()

price =li.xpath("./p[@class='price']/span[@class='search_now_price']/text()").extract_first()

author = li.xpath("./p[@class='search_book_author']/span[position()=1]/a/@title").extract_first()

date =li.xpath("./p[@class='search_book_author']/span[position()=last()- 1]/text()").extract_first()

publisher = li.xpath("./p[@class='search_book_author']/span[position()=last()]/a/@title ").extract_first()

detail = li.xpath("./p[@class='detail']/text()").extract_first()

#detail有时没有,结果None

item=DangdangItem()

item["title"]=title.strip() if title else ""

item["author"]=author.strip() if author else ""

item["date"] = date.strip()[1:] if date else ""

item["publisher"] = publisher.strip() if publisher else ""

item["price"] = price.strip() if price else ""

item["detail"] = detail.strip() if detail else ""

yield item

#最后一页时link为None

link=selector.xpath("//div[@class='paging']/ul[@name='Fy']/li[@class='next']/a/@href").extract_first()

if link:

url=response.urljoin(link)

yield scrapy.Request(url=url, callback=self.parse)

except Exception as err:

print(err)

pipelines:

from itemadapter import ItemAdapter

import pymysql

class DangdangPipeline(object):

def open_spider(self,spider):

print("opened")

try:

self.con = pymysql.connect(host="localhost",port=3306,user="root",passwd="gh02120425",db="first",charset="utf8")

self.cursor=self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from books")

self.opened=True

self.count=0

except Exception as err:

print(err)

self.opened=False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened=False

print("closed")

print("总共爬取", self.count, "本书籍")

def process_item(self, item, spider):

try:

print(item["title"])

print(item["author"])

print(item["publisher"])

print(item["date"])

print(item["price"])

print(item["detail"])

print()

if self.opened:

self.cursor.execute(

"insert into books (bTitle,bAuthor,bPublisher,bDate,bPrice,bDetail) values(%s,%s,%s,%s,%s,%s)",

(item["title"],item["author"],item["publisher"],item["date"],item["price"],item["detail"]))

self.count += 1

except Exception as err:

print(err)

return item

items:

import scrapy

class DangdangItem(scrapy.Item):

# define the fields for your item here like:

title = scrapy.Field()

author = scrapy.Field()

date = scrapy.Field()

publisher = scrapy.Field()

detail = scrapy.Field()

price = scrapy.Field()

settings:(修改)

ITEM_PIPELINES = {

'dangdang.pipelines.DangdangPipeline': 300,

}

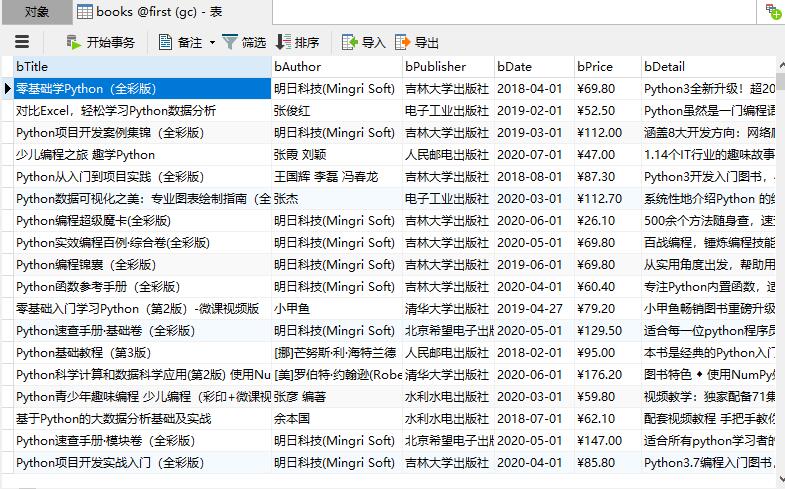

结果贴图

2)心得体会

这次复现书上的代码,更加了解用scrapy+xpath爬去数据,真的understand了!

果然还是一行一行扒比较好

作业二

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取股票相关信息

候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

输出信息:MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo…

1)作业结果

代码

mystock:

import scrapy

import json

from ..items import StockItem

import re

class MyStock(scrapy.Spider):

name = "myStock"

start_urls = ["http://70.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124016042972752979767_1604312088944&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f2,f3,f8,f9,f12,f14,f15,f16,f17,f23&_=1604312088945"]

def parse(self, response):

data = response.text

#print(data)

pat = "jQuery1124016042972752979767_1604312088944\((.*)\)"

data = re.findall(pat, data)

data = ''.join(data)

#print(data)

result = json.loads(data)

for f in result['data']['diff']:

item = StockItem()

item["num"] = f['f12']

item["name"] = f['f14']

item["price"] = f['f2']

item["applies"] = f['f3']

item["high"] = f['f15']

item["low"] = f['f16']

item["op"] = f['f17']

item["turn"] = f['f8']

item["pb"] = f['f9']

item["pe"] = f['f23']

yield item

piplines:

from itemadapter import ItemAdapter

import pymysql

class StockPipeline(object):

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd="gh02120425", db="first",

charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from stocks")

self.opened = True

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def process_item(self, item, spider):

try:

print(item["num"])

print(item["name"])

print(item["price"])

print(item["applies"])

print(item["high"])

print(item["low"])

print(item["op"])

print(item["turn"])

print(item["pb"])

print(item["pe"])

if self.opened:

self.cursor.execute(

"insert into stocks (num, name, price, applies, high, low, op, turn, pb, pe) values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(item['num'], item['name'], item['price'], item['applies'], item['high'],

item['low'], item['op'], item['turn'], item['pb'], item['pe']))

except Exception as err:

print(err)

return item

items:

import scrapy

class StockItem(scrapy.Item):

num = scrapy.Field() #股票代码

name = scrapy.Field() #名称

price = scrapy.Field() #最新报价

applies = scrapy.Field() #涨跌幅

high = scrapy.Field() #最高

low = scrapy.Field() #最低

op = scrapy.Field() #今开

turn = scrapy.Field() #换手

pb = scrapy.Field() #市盈

pe = scrapy.Field() #市净

settings:

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'stock.pipelines.StockPipeline': 300,

}

结果贴图

2)心得体会

财富网是动态网页,用xpath的话只能用selenium,就。。。不太会,用了笨笨方法

又又又一次对正则表达式加深了理解,findall出来的列表一定要化为字符串!才能load

除了用笨笨方法,最没想到的是最后的导入数据库语句出了问题,看了半天都没问题,但是总是提示语法错误

度娘也查不出来,这就离谱,最后发现是因为item定义时用了python里的关键字,就疯狂报错555(是我知道的太少了)

作业三

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:招商银行网:http://fx.cmbchina.com/hq/

输出信息:MYSQL数据库存储和输出格式

1)作业结果

代码

cmbchina:

import scrapy

from ..items import CmbItem

from bs4 import UnicodeDammit

class CmbchinaSpider(scrapy.Spider):

name = 'cmbchina'

start_url = 'http://fx.cmbchina.com/hq/'

def start_requests(self):

url = CmbchinaSpider.start_url

yield scrapy.Request(url=url,callback=self.parse)

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector = scrapy.Selector(text=data)

lis = selector.xpath("//div[@id='realRateInfo']/table/tr[position()>1]")

print(lis)

for tr in lis:

currency = tr.xpath("./td[1]/text()").extract_first()

units = tr.xpath("./td[2]/text()").extract_first()

coin = tr.xpath("./td[3]/text()").extract_first()

tsp = tr.xpath("./td[4]/text()").extract_first()

csp = tr.xpath("./td[5]/text()").extract_first()

tbp = tr.xpath("./td[6]/text()").extract_first()

cbp = tr.xpath("./td[7]/text()").extract_first()

date = tr.xpath("./td[8]/text()").extract_first()

item = CmbItem()

item["currency"] = currency.strip() if currency else ""

item["units"] = units.strip() if units else ""

item["coin"] = coin.strip()[1:] if coin else ""

item["tsp"] = tsp.strip() if tsp else ""

item["csp"] = csp.strip() if csp else ""

item["tbp"] = tbp.strip() if tbp else ""

item["cbp"] = cbp.strip() if cbp else ""

item["date"] = date.strip() if date else ""

yield item

except Exception as err:

print(err)

pipelines:

from itemadapter import ItemAdapter

import pymysql

class CmbPipeline(object):

def open_spider(self,spider):

print("opened")

try:

self.con = pymysql.connect(host="localhost",port=3306,user="root",passwd="gh02120425",db="first",charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from currency")

self.opened=True

self.id = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def process_item(self, item, spider):

try:

print(self.id)

print(item["currency"])

print(item["units"])

print(item["coin"])

print(item["tsp"])

print(item["csp"])

print(item["tbp"])

print(item["cbp"])

print(item["date"])

print("next")

if self.opened:

self.id += 1

self.cursor.execute(

"insert into currency (ID,Currency,Units,Coin,Tsp,Csp,Tbp,Cbp,Date) values(%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(self.id, item["currency"], item["units"], item["coin"], item["tsp"],

item["csp"], item["tbp"], item["cbp"], item["date"]))

except Exception as err:

print(err)

return item

items:

import scrapy

class CmbItem(scrapy.Item):

# define the fields for your item here like:

currency = scrapy.Field()

units = scrapy.Field()

coin = scrapy.Field()

tsp = scrapy.Field()

csp = scrapy.Field()

tbp = scrapy.Field()

cbp = scrapy.Field()

date = scrapy.Field()

settings:

ITEM_PIPELINES = {

'cmb.pipelines.CmbPipeline': 300,

}

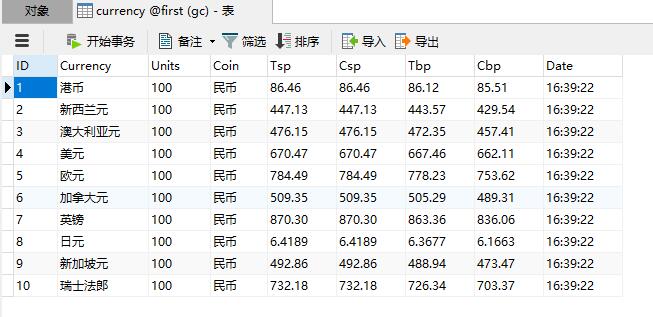

结果贴图

这就好笑了,说好的软妹币呢,怎么就变成民币了hhhh

2)心得体会

这个作业其实也是复现书上的代码,自己尝试打了一遍,还是会少东西,不过也更熟悉就是啦

浏览器会对html文本进行一定的规范化,所以tbody就很多余

还有那个self.count,感觉还是写在导入数据库语句前比较好,在后面写数据库有问题就无法输出,就很烦