Flannel in k8s: the image cannot be pulled

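While redeploying a K8s cluster, I needed to install a network add-on, since K8s requires a flat network. Running the following

    [root@master ~]# kubectl apply -f kube-flannel.yml

starts pulling the image and then launches the pod. After waiting several minutes, though, it still had not come up: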

    [root@k8s-master ~]# kubectl get pod -n kube-system
    NAME                                 READY   STATUS                  RESTARTS   AGE
    coredns-58cc8c89f4-9gn5g             0/1     Pending                 0          27m
    coredns-58cc8c89f4-xxzx7             0/1     Pending                 0          27m
    etcd-k8s-master                      1/1     Running                 1          26m
    kube-apiserver-k8s-master            1/1     Running                 1          26m
    kube-controller-manager-k8s-master   1/1     Running                 1          26m
    kube-flannel-ds-amd64-2dqlf          0/1     Init:ImagePullBackOff   0          11m
    kube-proxy-rn98b                     1/1     Running                 1          27m
    kube-scheduler-k8s-master            1/1     Running                 1          26m
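In a longer listing it can help to filter on the STATUS column so only the problem pods remain. A minimal sketch, assuming the default `kubectl get pod` column order (NAME, READY, STATUS, RESTARTS, AGE); the function name `not_running` is made up for illustration:

```shell
# Print "NAME STATUS" for every pod whose STATUS column is not "Running".
# Reads `kubectl get pod` table output on stdin and skips the header row.
# Assumes the default column order: NAME READY STATUS RESTARTS AGE.
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage (needs a working kubectl context):
#   kubectl get pod -n kube-system | not_running
```

Against the listing above, this would leave the two Pending coredns pods and the flannel pod stuck in Init:ImagePullBackOff.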

Inspect the pod:

    [root@k8s-master ~]# kubectl describe pod kube-flannel-ds-amd64-2dqlf -n kube-system
    Name:         kube-flannel-ds-amd64-2dqlf
    Namespace:    kube-system
    Priority:     0
    Node:         k8s-master/192.168.180.130
    Start Time:   Thu, 19 Dec 2019 22:36:13 +0800
    Labels:       app=flannel
                  controller-revision-hash=67f65bfbc7
                  pod-template-generation=1
                  tier=node
    Annotations:  <none>
    Status:       Pending
    IP:           192.168.180.130
    IPs:
      IP:           192.168.180.130
    Controlled By:  DaemonSet/kube-flannel-ds-amd64
    Init Containers:
      install-cni:
        Container ID:
        Image:         quay.io/coreos/flannel:v0.11.0-amd64
        Image ID:
        Port:          <none>
        Host Port:     <none>
        Command:
          cp
        Args:
          -f
          /etc/kube-flannel/cni-conf.json
          /etc/cni/net.d/10-flannel.conflist
        State:          Waiting
          Reason:       ImagePullBackOff
        Ready:          False
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /etc/cni/net.d from cni (rw)
          /etc/kube-flannel/ from flannel-cfg (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from flannel-token-r52cd (ro)
    Containers:
      kube-flannel:
        Container ID:
        Image:         quay.io/coreos/flannel:v0.11.0-amd64
        Image ID:
        Port:          <none>
        Host Port:     <none>
        Command:
          /opt/bin/flanneld
        Args:
          --ip-masq
          --kube-subnet-mgr
        State:          Waiting
          Reason:       PodInitializing
        Ready:          False
        Restart Count:  0
        Limits:
          cpu:     100m
          memory:  50Mi
        Requests:
          cpu:     100m
          memory:  50Mi
        Environment:
          POD_NAME:       kube-flannel-ds-amd64-2dqlf (v1:metadata.name)
          POD_NAMESPACE:  kube-system (v1:metadata.namespace)
        Mounts:
          /etc/kube-flannel/ from flannel-cfg (rw)
          /run/flannel from run (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from flannel-token-r52cd (ro)
    Conditions:
      Type              Status
      Initialized       False
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      run:
        Type:          HostPath (bare host directory volume)
        Path:          /run/flannel
        HostPathType:
      cni:
        Type:          HostPath (bare host directory volume)
        Path:          /etc/cni/net.d
        HostPathType:
      flannel-cfg:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      kube-flannel-cfg
        Optional:  false
      flannel-token-r52cd:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  flannel-token-r52cd
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     :NoSchedule
                     node.kubernetes.io/disk-pressure:NoSchedule
                     node.kubernetes.io/memory-pressure:NoSchedule
                     node.kubernetes.io/network-unavailable:NoSchedule
                     node.kubernetes.io/not-ready:NoExecute
                     node.kubernetes.io/pid-pressure:NoSchedule
                     node.kubernetes.io/unreachable:NoExecute
                     node.kubernetes.io/unschedulable:NoSchedule
    Events:
      Type     Reason     Age                    From                 Message
      ----     ------     ----                   ----                 -------
      Normal   Scheduled  <unknown>              default-scheduler    Successfully assigned kube-system/kube-flannel-ds-amd64-2dqlf to k8s-master
      Warning  Failed     5m29s                  kubelet, k8s-master  Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = context canceled
      Warning  Failed     4m21s (x2 over 5m2s)   kubelet, k8s-master  Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/: net/http: request canceled (Client.Timeout exceeded while awaiting headers)
      Normal   Pulling    3m31s (x4 over 7m5s)   kubelet, k8s-master  Pulling image "quay.io/coreos/flannel:v0.11.0-amd64"
      Warning  Failed     3m18s (x4 over 5m29s)  kubelet, k8s-master  Error: ErrImagePull
      Warning  Failed     3m18s                  kubelet, k8s-master  Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/coreos/flannel/manifests/v0.11.0-amd64: Get https://quay.io/v2/auth?scope=repository%3Acoreos%2Fflannel%3Apull&service=quay.io: net/http: TLS handshake timeout
      Normal   BackOff    3m5s (x6 over 5m29s)   kubelet, k8s-master  Back-off pulling image "quay.io/coreos/flannel:v0.11.0-amd64"
      Warning  Failed     2m2s (x11 over 5m29s)  kubelet, k8s-master  Error: ImagePullBackOff

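The events show that the image pull failed, most likely because of a network problem (the requests to quay.io time out). I had never run into this before: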

kube-flannel-ds-amd64-2dqlf 0/1 Init:ImagePullBackOff 0 11m

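The `Init:` prefix in this status means the failure happens in an init container (InitC) defined in the YAML, so I went to take a look. The init container `install-cni` uses the same flannel image as the main container, which is why the pull failure already blocks the pod at the init stage.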
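One quick way to see which images a manifest needs is to pull out its `image:` lines. A minimal sketch, assuming the manifest is the `kube-flannel.yml` file applied earlier; `manifest_images` is a made-up helper name, and it does a plain text match rather than real YAML parsing:

```shell
# List the distinct container images referenced in a manifest file.
# Matches lines of the form "image: <ref>" or "- image: <ref>";
# this is a simple text filter, not a YAML parser.
manifest_images() {
  grep -E '^[[:space:]]*(-[[:space:]]+)?image:' "$1" \
    | sed -E 's/^[[:space:]]*(-[[:space:]]+)?image:[[:space:]]*//' \
    | tr -d "\"'" \
    | sort -u
}

# Usage:
#   manifest_images kube-flannel.yml
```

For the DaemonSet already in the cluster, `kubectl get ds kube-flannel-ds-amd64 -n kube-system -o jsonpath='{.spec.template.spec.initContainers[*].image}'` should report the init container image directly.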

So the only option was to export the image from another cluster and import it here:
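The export and import steps can be sketched as follows. This is an illustration under assumptions: the archive name `flannel.tar` matches the one loaded in this post, while the `run`, `export_image` and `import_image` helpers and the `DRY_RUN` switch are made up for the example. How the archive gets from one machine to the other (for example `scp`) is left out.

```shell
# Copy one image from a machine that already has it to a node that cannot pull it.
# Set DRY_RUN=1 to print the docker commands instead of running them.
IMAGE="quay.io/coreos/flannel:v0.11.0-amd64"
ARCHIVE="flannel.tar"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On the machine that already has the image: write it to a tar archive.
export_image() {
  run docker save -o "$ARCHIVE" "$IMAGE"
}

# On the node that cannot reach quay.io, after copying the archive over:
import_image() {
  run docker load -i "$ARCHIVE"
}
```

Because flannel runs as a DaemonSet, the image likely has to be loaded on every node that cannot reach quay.io, not only on the master.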

    [root@k8s-master mnt]# docker load -i flannel.tar
    7bff100f35cb: Loading layer [==================================================>]  4.672MB/4.672MB
    5d3f68f6da8f: Loading layer [==================================================>]  9.526MB/9.526MB
    9b48060f404d: Loading layer [==================================================>]  5.912MB/5.912MB
    3f3a4ce2b719: Loading layer [==================================================>]  35.25MB/35.25MB
    9ce0bb155166: Loading layer [==================================================>]   5.12kB/5.12kB
    Loaded image: quay.io/coreos/flannel:v0.11.0-amd64
    [root@k8s-master mnt]# docker images
    REPOSITORY                                                        TAG             IMAGE ID       CREATED         SIZE
    registry.aliyuncs.com/google_containers/kube-apiserver            v1.16.0         b305571ca60a   3 months ago    217MB
    registry.aliyuncs.com/google_containers/kube-proxy                v1.16.0         c21b0c7400f9   3 months ago    86.1MB
    registry.aliyuncs.com/google_containers/kube-controller-manager   v1.16.0         06a629a7e51c   3 months ago    163MB
    registry.aliyuncs.com/google_containers/kube-scheduler            v1.16.0         301ddc62b80b   3 months ago    87.3MB
    registry.aliyuncs.com/google_containers/etcd                      3.3.15-0        b2756210eeab   3 months ago    247MB
    registry.aliyuncs.com/google_containers/coredns                   1.6.2           bf261d157914   4 months ago    44.1MB
    quay.io/coreos/flannel                                            v0.11.0-amd64   ff281650a721   10 months ago   52.6MB
    registry.aliyuncs.com/google_containers/pause                     3.1             da86e6ba6ca1   24 months ago   742kB
    [root@k8s-master mnt]# cd

    [root@k8s-master ~]# kubectl get node
    NAME         STATUS     ROLES    AGE   VERSION
    k8s-master   Ready      master   54m   v1.16.1
    k8s-node01   NotReady   <none>   15m   v1.16.1
    k8s-node02   Ready      <none>   17m   v1.16.1
    [root@k8s-master ~]# kubectl get pod -n kube-system
    NAME                                 READY   STATUS    RESTARTS   AGE
    coredns-58cc8c89f4-9gn5g             1/1     Running   0          54m
    coredns-58cc8c89f4-xxzx7             1/1     Running   0          54m
    etcd-k8s-master                      1/1     Running   1          53m
    kube-apiserver-k8s-master            1/1     Running   1          53m
    kube-controller-manager-k8s-master   1/1     Running   2          53m
    kube-flannel-ds-amd64-4bc88          1/1     Running   0          17m
    kube-flannel-ds-amd64-lzwd6          1/1     Running   0          19m
    kube-flannel-ds-amd64-vw4vn          1/1     Running   0          15m
    kube-proxy-bs8sd                     1/1     Running   1          15m
    kube-proxy-nfvtt                     1/1     Running   0          17m
    kube-proxy-rn98b                     1/1     Running   1          54m
    kube-scheduler-k8s-master            1/1     Running   1          53m
    [root@k8s-master ~]# kubectl get node
    NAME         STATUS   ROLES    AGE   VERSION
    k8s-master   Ready    master   54m   v1.16.1
    k8s-node01   Ready    <none>   15m   v1.16.1
    k8s-node02   Ready    <none>   17m   v1.16.1

After a short wait, everything comes up. Baidu Cloud share links expire often, so if you need the image, feel free to contact me.

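Previously I pulled images through an Alibaba Cloud server in Hong Kong, bought on a special offer for a little over 100 yuan a year.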

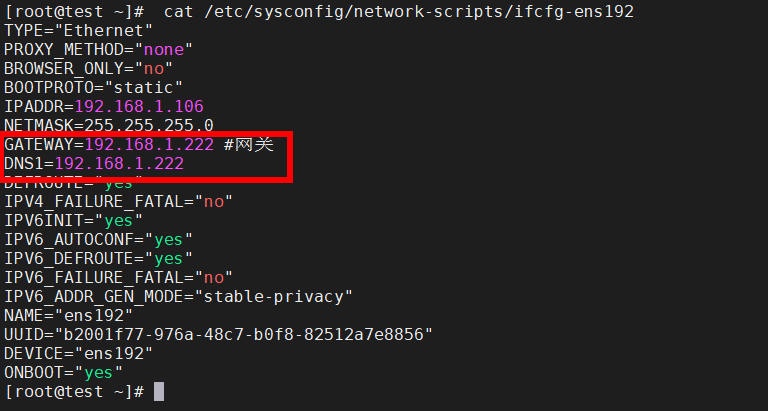

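Now that I have my own server, I have set up a dedicated soft router with a proxy plan bought by the month (100G). The other virtual machines use the soft router's IP as both gateway and DNS, so they can pull images this way as well.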

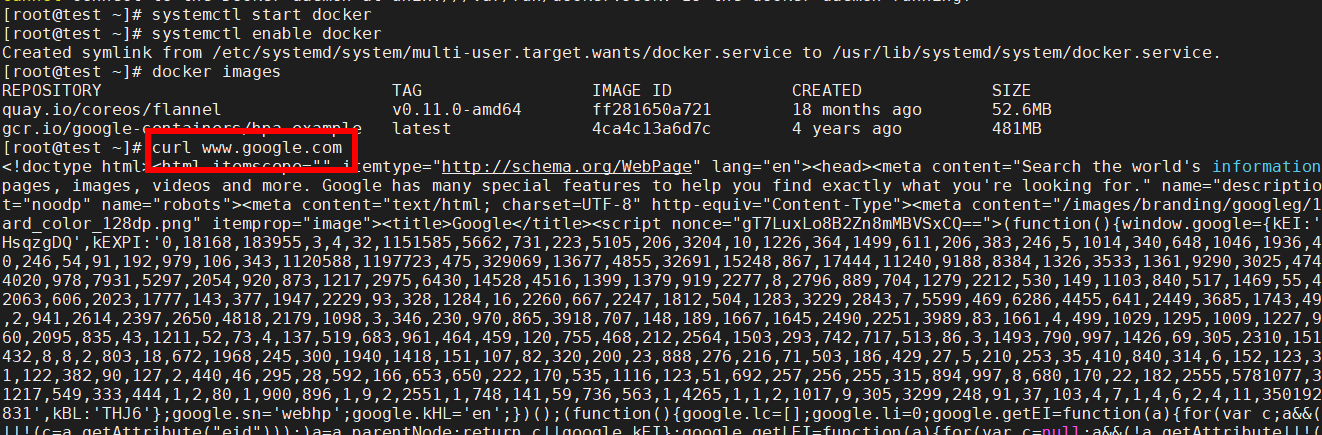

Google can be pinged:

Configuration settings:

Download demo:

