17-K8S Basic-StatefulSet and Operator

PS: StatefulSet official documentation: https://kubernetes.io/zh/docs/concepts/workloads/controllers/statefulset/

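1. StatefulSet overview

- Pod

- Service: the basic unit that gives Pods a fixed access point and underpins dynamic discovery as Pods come and go. In Kubernetes, service discovery and service registration both rely on Service resources and are ultimately provided by the CoreDNS Pods: Services are registered in CoreDNS, so a client that resolves a Service name has completed service discovery. CoreDNS acts as the bus for service registration and discovery, and is itself built on basic Kubernetes services.

- To provide self-healing, scaling and similar dynamic behavior, Pods rely on Pod controllers. "Pod controller" is the umbrella term for a group of controllers; there are several implementations, each suited to different scenarios and logic.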

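Applications can be divided into four types.

- Stateless applications

  - Deployment (long-running processes): built on top of ReplicaSets, it carries out the actual day-to-day management of stateless applications: deployment, changes (including ConfigMap/Secret updates), and keeping a variable number of Pods running.

  - DaemonSet (daemon processes): runs system-level applications, with exactly one Pod replica on every node or on a selected subset of nodes.

  - Job: controls non-daemon (run-to-completion) tasks.

  - CronJob: controls periodic non-daemon tasks, i.e. runs Jobs on a schedule.

- Stateful applications

  - StatefulSet: the controller only guarantees fixed Pod instance names and can assign each instance its own fixed storage; it offers no uniform way to scale the number of Pod replicas out or in.

  - CoreOS addressed this by letting users write code that packages all the operational steps an administrator would perform for a given application into a program; each such program targets a single application.

  - Operator: for example, a Redis service is packaged as a redis-controller; this packaged controller itself runs as a Pod on Kubernetes.

  - Gradually, many application vendors developed their own Operators for running their applications on Kubernetes.

  - An Operator can be understood as a targeted extension of the StatefulSet controller for stateful applications.

  - Since Operators appeared, deploying such applications well requires an Operator for each stateful application. How can users develop an Operator for their application faster?

  - For this, CoreOS introduced a development interface on top of Kubernetes: the Operator SDK.

  - Most common stateful applications already have their own Operator: https://github.com/operator-framework/awesome-operators

- From earlier lessons we know that Pods created by a Deployment are stateless. If such a Pod mounts a volume and then dies, the controller (a ReplicaSet) starts a new Pod to preserve availability; but because the Pods are stateless, the new Pod has no connection to the one it replaces and cannot locate that Pod's volume. Users do not notice that an underlying Pod died, yet after the failure the previously mounted volume can no longer be used.

- To solve this problem, StatefulSet was introduced to preserve Pods' state information.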

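StatefulSet is designed for stateful services (whereas Deployments and ReplicaSets are designed for stateless ones). Its use cases include:

- 1. Stable persistent storage: after a Pod is rescheduled it can still access the same persistent data; implemented with PVCs.

- 2. Stable network identity: after a Pod is rescheduled its PodName and HostName stay the same; implemented with a Headless Service (a Service without a Cluster IP).

- 3. Ordered deployment and ordered scale-out: Pods are ordered, and deployment or scale-out proceeds one Pod at a time in the defined order (from 0 to N-1); before the next Pod starts, all previous Pods must be Running and Ready. This ordering is enforced by the StatefulSet controller itself (the default OrderedReady policy).

- 4. Ordered scale-in and ordered deletion (from N-1 to 0).

- 5. Ordered rolling updates.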

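For a StatefulSet to be usable in practice, the following conditions must be met:

- The volumes used by each Pod must be provisioned dynamically by a StorageClass or come from PVs created in advance by an administrator.

- When a StatefulSet is deleted or scaled in and Pods are removed as a result, their volumes are not deleted automatically, to keep the data safe.

- The StatefulSet controller relies on a pre-existing Headless Service object to give each Pod a persistent, unique identity; this Headless Service must be created manually by the user.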

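2. Why a headless Service?

- In a Deployment, Pods have no meaningful names: their names end in random strings and they are unordered. A StatefulSet requires order, and each Pod's name must be fixed: when a Pod fails and is rebuilt, its identifier stays the same, and no member's name may change. The Pod name is the Pod's unique identifier, so it must be both stable and unique.

- To keep these identifiers stable, a headless Service is needed so that DNS names resolve directly to individual Pods, and each Pod must also be given a unique name.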

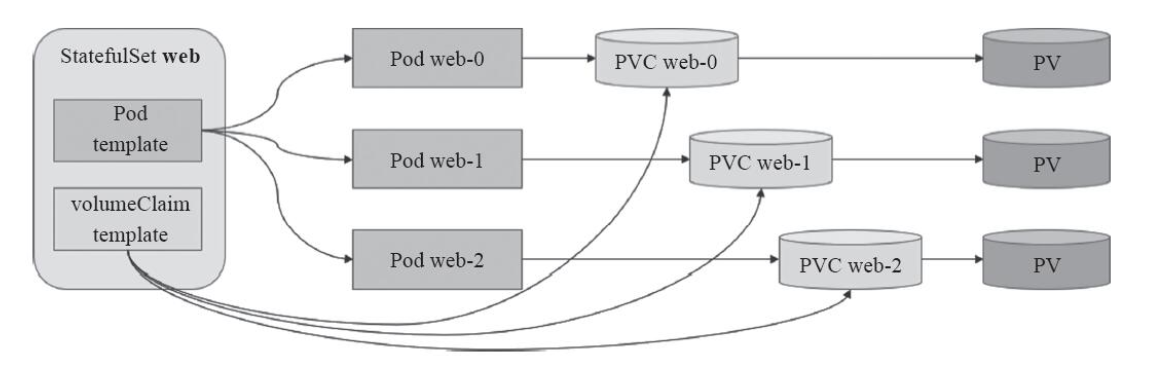

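3. Why volumeClaimTemplate?

- Most stateful replica sets need persistent storage. In a distributed system, for example, each member holds different data, so each member needs its own dedicated storage. In a Deployment, a volume defined in the Pod template is shared: all Pods use the same volume. Pods in a StatefulSet must not share one volume, so creating them from a plain Pod template does not work. This is why volumeClaimTemplate exists: when the StatefulSet creates a Pod, it automatically generates a PVC for it, which then binds to a PV, so every Pod gets its own dedicated volume. The relationship between Pod names, PVCs and PVs is shown in the figure below:

4. Using the StatefulSet controller

4.1 StatefulSet components

- A PodTemplate: the template from which each Pod replica is created

- A label selector: associates the StatefulSet with the right Pods

- A Headless Service: must be created manually

- A VolumeClaim Template: needed only if the Pods require storage (PVCs)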
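The four components can be seen together in the minimal sketch below. Every name in it (web, web-svc, data, the nginx:1.25 image, the 1Gi size) is an illustrative assumption, not part of the demo in section 6. Following the <claim template name>-<StatefulSet name>-<ordinal> naming that the demo output also shows, it would create the PVCs data-web-0, data-web-1 and data-web-2.

```yaml
# Component 3: the headless Service (hypothetical name web-svc)
apiVersion: v1
kind: Service
metadata:
  name: web-svc
spec:
  clusterIP: None          # headless: DNS resolves to the individual Pods
  selector:
    app: web
  ports:
  - port: 80
    name: http
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                # Pods will be named web-0, web-1, web-2
spec:
  serviceName: web-svc     # must name the headless Service above
  replicas: 3
  # Component 2: the label selector
  selector:
    matchLabels:
      app: web
  # Component 1: the Pod template
  template:
    metadata:
      labels:
        app: web           # must match the selector
    spec:
      containers:
      - name: nginx
        image: nginx:1.25
        ports:
        - containerPort: 80
          name: http
        volumeMounts:
        - name: data       # refers to the claim template below
          mountPath: /usr/share/nginx/html
  # Component 4: the volume claim template (one PVC per Pod)
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      # storageClassName omitted: the cluster's default StorageClass is used if one exists
      resources:
        requests:
          storage: 1Gi
```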

4.2 Pod identity

- Pod name identifiers in a StatefulSet must be fixed and unique.

- Ordinal Index: the ordered index in each Pod name

  - For a StatefulSet with N replicas, Pods are created sequentially with ordinals {0..N-1}.

- Stable Network ID: the stable network identity of each Pod

  - Pod name: StatefulSet name plus ordinal, $(statefulset name)-$(ordinal)

  - (Accessing the Service) the FQDN of the governing Service is $(service name).$(namespace).svc.cluster.local, where cluster.local is the default cluster domain

  - (Accessing a Pod) $(pod name).$(governing service domain), where the governing service domain is the Service FQDN above; the Pod's hostname is its Pod name

- Official docs: https://kubernetes.io/zh/docs/concepts/workloads/controllers/statefulset/#pod-标识
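One way to watch these names resolve is a throwaway Pod that runs nslookup against them. This sketch reuses the names from the demo in section 6 (namespace sts, Service myapp-sts-svc, StatefulSet statefulset-demo); the Pod name dns-check and the busybox:1.36 image are assumptions, and cluster.local is assumed to be the cluster domain.

```yaml
# Hypothetical one-shot Pod for checking the stable DNS names
apiVersion: v1
kind: Pod
metadata:
  name: dns-check
  namespace: sts
spec:
  restartPolicy: Never       # run once, then stay Completed so the logs can be read
  containers:
  - name: lookup
    image: busybox:1.36
    command:
    - sh
    - -c
    - |
      # Service FQDN: $(service name).$(namespace).svc.cluster.local
      nslookup myapp-sts-svc.sts.svc.cluster.local
      # Pod FQDN: $(pod name).$(governing service domain)
      nslookup statefulset-demo-0.myapp-sts-svc.sts.svc.cluster.local
```

After the Pod completes, `kubectl logs dns-check -n sts` shows the results: the Service name should return one address per ready Pod, while the Pod name should return only that Pod's IP.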

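4.3 Pod Management Policies

4.3.1 The two management policies

- In Kubernetes 1.7 and later, the .spec.podManagementPolicy field lets a StatefulSet relax its ordering guarantees while preserving its uniqueness and identity guarantees.

OrderedReady Pod Management:

- OrderedReady Pod Management is the default behavior of StatefulSets. It implements the "deployment/scaling" behavior described above.

- This is the default: Pods are created serially.

- Creation: three Pods (web-0, web-1, web-2) are deployed in order. web-1 is deployed only after web-0 is Running and Ready, and web-2 only after web-1 is Running and Ready. If web-0 fails after web-1 is Running and Ready but before web-2 is launched, web-2 is not launched until web-0 has been relaunched and is Running and Ready again.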

Parallel Pod Management:

- Parallel Pod Management tells the StatefulSet controller to launch or terminate all Pods in parallel.

- Creation: all Pods are created at the same time, but their names still follow the ordinal sequence (-0, -1, -2, ...).
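The policy is selected with a single field, as in the fragment below (web and web-svc are hypothetical names, and the omitted fields make it a sketch rather than an applyable manifest). Two caveats from the upstream documentation: podManagementPolicy can only be set when the StatefulSet is created, and Parallel only affects scaling; rolling updates still replace one Pod at a time.

```yaml
# Sketch: only the fields relevant to the policy are shown
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  # OrderedReady (default): one Pod at a time, in ordinal order
  # Parallel: launch/terminate all Pods at once when scaling
  podManagementPolicy: Parallel
  serviceName: web-svc
  replicas: 3
  # selector, template and volumeClaimTemplates omitted; they are unchanged
```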

4.3.2 Deployment and scaling guarantees

- For a StatefulSet with N replicas, Pods are created sequentially, in order {0..N-1}.

- When Pods are deleted, they are terminated in reverse order, {N-1..0}.

- Before a scaling operation is applied to a Pod, all of its predecessors must be Running and Ready.

- Before a Pod is terminated, all of its successors must be completely shut down.

- Do not set pod.Spec.TerminationGracePeriodSeconds to 0 for StatefulSet Pods; this is unsafe and strongly discouraged. For details, see force deleting StatefulSet Pods.

- If the user scales the deployed StatefulSet in, e.g. to replicas=1, web-2 is terminated first. web-1 is not terminated until web-2 has been fully shut down and deleted. If web-0 fails after web-2 has been terminated and fully shut down but before web-1 is terminated, web-1 is not terminated until web-0 is Running and Ready again.

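4.3.3 Update Strategies

- In Kubernetes 1.7 and later, the StatefulSet .spec.updateStrategy field lets you configure or disable automated rolling updates of the Pods' containers, labels, resource requests/limits, and annotations.

4.3.3.1 RollingUpdate: automated rolling updates of the Pods in a StatefulSet

- The default strategy. The update runs automatically: once it starts, the controller deletes each Pod and recreates it with the new configuration, one Pod at a time, in the same order as Pod termination (from the highest ordinal to the lowest, i.e. reverse order).

- The default value of .spec.updateStrategy.type is RollingUpdate, which implements automated rolling updates of the StatefulSet's Pods. When the user updates the StatefulSet's .spec.template, the StatefulSet controller automatically deletes and recreates each Pod in the StatefulSet, in this order:

  - Starting with the Pod with the highest ordinal, each Pod is deleted and updated one at a time, until the Pod with the lowest ordinal has been updated

  - The controller waits until the Pod being updated is Running and Ready before updating its predecessor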

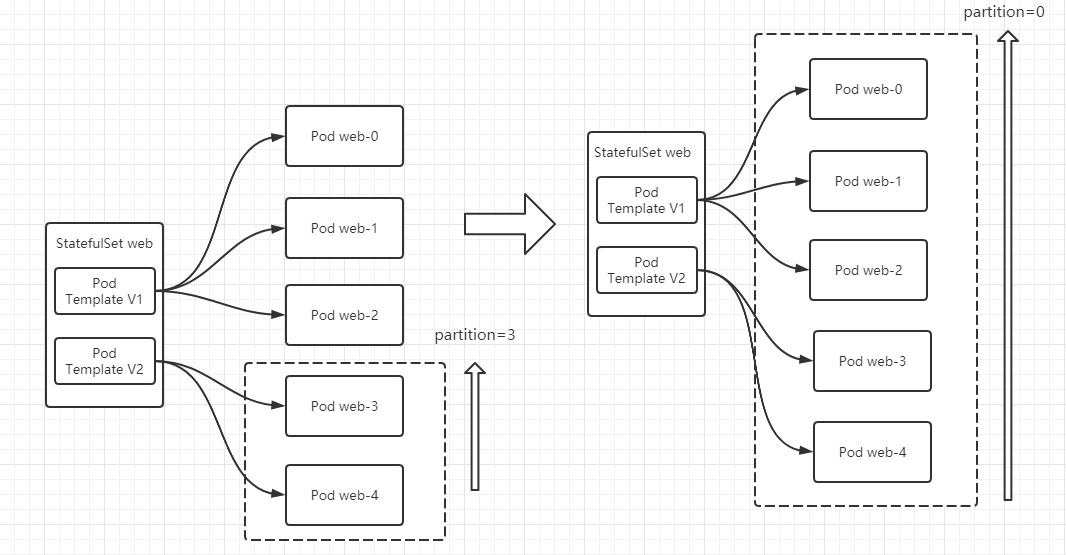

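- Partitions

  - By setting .spec.updateStrategy.rollingUpdate.partition, the RollingUpdate strategy can be applied in partitions. When the StatefulSet's .spec.template is updated:

    - Pods whose ordinal is greater than or equal to .spec.updateStrategy.rollingUpdate.partition are deleted and recreated

    - Pods whose ordinal is less than .spec.updateStrategy.rollingUpdate.partition are not updated; even if such a Pod is deleted manually, Kubernetes recreates it from the previous version of .spec.template

    - If .spec.updateStrategy.rollingUpdate.partition is greater than .spec.replicas, updating .spec.template affects no Pods

In most cases you do not need .spec.updateStrategy.rollingUpdate.partition, unless you face one of the following scenarios:

1. Staging an update

2. Rolling out a canary

3. Performing a phased rollout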

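4.3.3.2 OnDelete

- The user triggers the update by manually deleting existing Pods.

- The OnDelete strategy implements the legacy behavior of StatefulSet (Kubernetes 1.6 and earlier). When a StatefulSet's .spec.updateStrategy.type is set to OnDelete, the StatefulSet controller does not update its Pods automatically when you modify .spec.template. You must delete the Pods manually; the StatefulSet controller then recreates them from the modified .spec.template.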
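Switching to OnDelete is a one-field change in the manifest. The fragment below is a sketch that reuses the statefulset-demo names from section 6; the commented kubectl commands show the intended workflow. If the object currently carries an updateStrategy.rollingUpdate block (for example a partition), remove it as well, since those settings only apply to type RollingUpdate.

```yaml
# Fragment of the StatefulSet spec (names from the demo in section 6)
spec:
  updateStrategy:
    # The controller no longer replaces Pods when .spec.template changes;
    # a Pod picks up the new template only when it is recreated after deletion.
    type: OnDelete
# Intended workflow (shown as comments):
#   kubectl set image sts statefulset-demo myapp=ikubernetes/myapp:v2 -n sts
#   kubectl delete pod statefulset-demo-1 -n sts   # only this Pod is recreated with v2
```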

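5. Limitations of StatefulSet

- Although StatefulSet provides a way to manage stateful applications, different applications have different distribution models and storage management mechanisms, so their operational procedures inevitably differ. The StatefulSet controller therefore cannot directly encapsulate mechanisms such as automatic scaling;

- For example, operating a MySQL cluster is very different from operating a ZooKeeper cluster, so the StatefulSet controller cannot provide operational logic such as automatic scaling for every kind of stateful application. Users of StatefulSet therefore have to supply that logic in their own code.

- Yet stateful applications such as MySQL and ZooKeeper are widely used, and many users want to host them on Kubernetes clusters;

- To meet this need, Kubernetes exposes APIs that users can extend to implement their own requirements.

- Kubernetes' internal APIs are becoming increasingly open, making it more like an operating system running in the cloud.

- Users can treat it as a cloud SDK or framework and conveniently develop components that extend it to meet their business needs.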
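The extension point Operators build on is the CustomResourceDefinition (CRD): it teaches the API server a new resource type, and the Operator's controller then watches objects of that type and performs the application-specific steps. The sketch below defines a hypothetical RedisCluster type in the made-up group example.com, followed by one instance of it; the two documents are shown in one block but are best saved as separate files. On its own the CRD only registers the type and stores objects; nothing is deployed until an Operator that understands RedisCluster is running.

```yaml
# crd.yaml: registers a hypothetical RedisCluster type
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # Must be <plural>.<group>
  name: redisclusters.example.com
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: redisclusters
    singular: rediscluster
    kind: RedisCluster
  versions:
  - name: v1alpha1
    served: true        # exposed through the API
    storage: true       # the version persisted in etcd
    schema:
      openAPIV3Schema:
        type: object
        properties:
          spec:
            type: object
            properties:
              replicas:
                type: integer
                minimum: 1
---
# redis-demo.yaml: an instance of the new type that an Operator would act on
apiVersion: example.com/v1alpha1
kind: RedisCluster
metadata:
  name: demo
  namespace: default
spec:
  replicas: 3
```

Apply the CRD first and wait until it is established, for example with `kubectl wait --for condition=established crd/redisclusters.example.com`, before creating the RedisCluster object; otherwise kubectl may report that it does not recognize the kind.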

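6. StatefulSet example

The following must be prepared before creating a StatefulSet. Note that the creation order matters; create them in this order:

1. Volume

2. Persistent Volume # if PVs are not provisioned dynamically, they must be created manually in advance

3. Persistent Volume Claim

4. Headless Service

5. StatefulSet

   - Pod Templates

   - PVC Templates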

6.1 Create the PVs for the stateful application (static NFS persistent volumes)

NFS --> PV

1. Configure the NFS service

```shell
~]# mkdir /volumes/{v0,v1,v2,v3,v4,v5}
~]# cat /etc/exports
/volumes/v0 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v1 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v2 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v3 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v4 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v5 192.168.20.0/24(rw,async,no_root_squash)
~]# exportfs -avf
exporting 192.168.20.0/24:/volumes/v5
exporting 192.168.20.0/24:/volumes/v4
exporting 192.168.20.0/24:/volumes/v3
exporting 192.168.20.0/24:/volumes/v2
exporting 192.168.20.0/24:/volumes/v1
exporting 192.168.20.0/24:/volumes/v0
```

2. Create the PVs that provide storage for the stateful application (static provisioning)

```shell
volumes]# cat pv-nfs-demo.yaml
```

```yaml
# API group/version of the PV: v1, i.e. the core group
apiVersion: v1
# Resource type: PersistentVolume
kind: PersistentVolume
# Metadata (a PV is cluster-scoped, so it has no namespace field)
metadata:
  # PV name
  name: pv-nfs-v0
  # Labels for this PV
  labels:
    storsys: nfs
# PV spec
spec:
  # Access modes offered by this PV; they must be supported by the backing storage system
  # NFS supports ReadWriteOnce, ReadOnlyMany and ReadWriteMany
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  # Capacity advertised by this PV, used when matching PVCs (NFS itself does not enforce this limit)
  capacity:
    # Advertise 5Gi of NFS storage
    storage: 5Gi
  # Storage access interface: NFS provides a filesystem
  volumeMode: Filesystem
  # Reclaim policy
  # Delete: the PV (and, where the volume plugin supports it, the backing storage) is deleted with its PVC
  # Recycle: deprecated
  # Retain: the PV and its data are kept after the PVC is deleted
  persistentVolumeReclaimPolicy: Retain
  # Use an NFS export as the persistent volume
  nfs:
    # Directory actually exported by the NFS server
    path: /volumes/v0
    # NFS server address (default port)
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v1
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v1
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v2
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v2
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v3
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v3
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v4
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v4
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v5
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v5
    server: 192.168.20.172
```

```shell
volumes]# kubectl apply -f pv-nfs-demo.yaml
persistentvolume/pv-nfs-v0 created
persistentvolume/pv-nfs-v1 created
persistentvolume/pv-nfs-v2 created
persistentvolume/pv-nfs-v3 created
persistentvolume/pv-nfs-v4 created
persistentvolume/pv-nfs-v5 created
volumes]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv-nfs-v0 5Gi RWO,ROX,RWX Retain Available 22s
pv-nfs-v1 5Gi RWO,ROX,RWX Retain Available 22s
pv-nfs-v2 5Gi RWO,ROX,RWX Retain Available 22s
pv-nfs-v3 5Gi RWO,ROX,RWX Retain Available 22s
pv-nfs-v4 5Gi RWO,ROX,RWX Retain Available 22s
pv-nfs-v5 5Gi RWO,ROX,RWX Retain Available 22s
```

6.2 Create the namespace, Headless Service, StatefulSet, PVCs and Pods

```shell
~]# cd mainfests/Kubernetes_Advanced_Practical/chapter9/
```

1. Create the resource manifest

```shell
# A dedicated namespace (sts) for the demo
# A headless Service
# The StatefulSet controller, its Pod template, and the PVC template mounted by the Pods
chapter9]# cat statefulset-demo.yaml
```

```yaml
# Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: sts
---
# Headless Service
apiVersion: v1
kind: Service
metadata:
  # Service name
  name: myapp-sts-svc
  namespace: sts
  labels:
    app: myapp
spec:
  ports:
  - port: 80
    name: web
  # clusterIP: None makes this a headless Service
  clusterIP: None
  # Label selector; must match the Pods created by the StatefulSet below
  selector:
    app: myapp-pod
    controller: sts
---
# API group/version
apiVersion: apps/v1
# Resource type
kind: StatefulSet
# Metadata
metadata:
  name: statefulset-demo
  namespace: sts
# Spec
spec:
  # Label selector matching the Pods created from the template below
  selector:
    matchLabels:
      app: myapp-pod
      controller: sts
  # Name of the governing headless Service, i.e. the one created above
  serviceName: "myapp-sts-svc"
  # Number of Pod replicas
  replicas: 2
  # Pod template
  template:
    # Pod template metadata
    metadata:
      namespace: sts
      # Labels added to every Pod; must match the Service and StatefulSet selectors above
      labels:
        app: myapp-pod
        controller: sts
    # Pod spec
    spec:
      # Termination grace period (default 30s)
      terminationGracePeriodSeconds: 10
      # Containers in the Pod
      containers:
      # Container name
      - name: myapp
        # Container image
        image: ikubernetes/myapp:v1
        # Container ports
        ports:
        # Expose port 80
        - containerPort: 80
          # Port name
          name: web
        # Volume mounts
        volumeMounts:
        # Name of the volume to mount (the PVC template below)
        - name: myapp-pvc
          # Mount point in the container
          mountPath: /usr/share/nginx/html
  # PVC template
  volumeClaimTemplates:
  # PVC template metadata
  - metadata:
      # PVC name
      name: myapp-pvc
      namespace: sts
    # Spec and access mode of each PVC
    spec:
      # Single-node read-write
      accessModes: [ "ReadWriteOnce" ]
      # StorageClass name (commented out in this demo; the claims bound to the static NFS PVs above)
      #storageClassName: "gluster-dynamic"
      # Storage requested from a PV (the resource requirement)
      resources:
        requests:
          storage: 2Gi
```

2. Create the resources declaratively

```shell
chapter9]# kubectl apply -f statefulset-demo.yaml
namespace/sts created
service/myapp-sts-svc created
statefulset.apps/statefulset-demo created
```

3. View all resources in the namespace

```shell
chapter9]# kubectl get all -n sts -o wide --show-labels
# Pods
# Pod name: StatefulSet controller name + ordinal index
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS
pod/statefulset-demo-0 1/1 Running 0 3m13s 10.244.2.56 k8s.node2 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-0
pod/statefulset-demo-1 1/1 Running 0 3m10s 10.244.1.66 k8s.node1 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-1
# Headless Service
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR LABELS
service/myapp-sts-svc ClusterIP None <none> 80/TCP 3m13s app=myapp-pod,controller=sts app=myapp
# StatefulSet
NAME READY AGE CONTAINERS IMAGES LABELS
statefulset.apps/statefulset-demo 2/2 3m13s myapp ikubernetes/myapp:v1 <none>
```


```shell
# PVCs
chapter9]# kubectl get pvc -n sts
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
myapp-pvc-statefulset-demo-0 Bound pv-nfs-v0 5Gi RWO,ROX,RWX 4m34s
myapp-pvc-statefulset-demo-1 Bound pv-nfs-v4 5Gi RWO,ROX,RWX 4m31s
# PVs
chapter9]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv-nfs-v0 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-0 15m
pv-nfs-v1 5Gi RWO,ROX,RWX Retain Available 15m
pv-nfs-v2 5Gi RWO,ROX,RWX Retain Available 15m
pv-nfs-v3 5Gi RWO,ROX,RWX Retain Available 15m
pv-nfs-v4 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-1 15m
pv-nfs-v5 5Gi RWO,ROX,RWX Retain Available 15m
```

6.3 Scale out

1. Scale out the Pods: use scale to change the controller's number of Pod replicas on the fly

```shell
~]# kubectl get sts -n sts
NAME READY AGE
statefulset-demo 2/2 10m
~]# kubectl scale --replicas=4 sts statefulset-demo -n sts
statefulset.apps/statefulset-demo scaled
```

```shell
# Watch the scale-out
chapter9]# kubectl get pods -n sts -w
NAME READY STATUS RESTARTS AGE
statefulset-demo-0 1/1 Running 0 8m32s
statefulset-demo-1 1/1 Running 0 8m29s
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 Pending 0 2s
statefulset-demo-2 0/1 ContainerCreating 0 2s
statefulset-demo-2 1/1 Running 0 4s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 Pending 0 2s
statefulset-demo-3 0/1 ContainerCreating 0 2s
statefulset-demo-3 1/1 Running 0 5s
```

2. View the resources after scaling out

```shell
chapter9]# kubectl get all -n sts -o wide --show-labels
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS
pod/statefulset-demo-0 1/1 Running 0 14m 10.244.2.56 k8s.node2 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-0
pod/statefulset-demo-1 1/1 Running 0 14m 10.244.1.66 k8s.node1 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-1
pod/statefulset-demo-2 1/1 Running 0 2m24s 10.244.2.57 k8s.node2 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-2
pod/statefulset-demo-3 1/1 Running 0 2m20s 10.244.1.67 k8s.node1 <none> <none> app=myapp-pod,controller-revision-hash=statefulset-demo-5f8bd8748,controller=sts,statefulset.kubernetes.io/pod-name=statefulset-demo-3
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR LABELS
service/myapp-sts-svc ClusterIP None <none> 80/TCP 14m app=myapp-pod,controller=sts app=myapp
NAME READY AGE CONTAINERS IMAGES LABELS
statefulset.apps/statefulset-demo 4/4 14m myapp ikubernetes/myapp:v1 <none>
# PVCs
chapter9]# kubectl get pvc -n sts
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
myapp-pvc-statefulset-demo-0 Bound pv-nfs-v0 5Gi RWO,ROX,RWX 15m
myapp-pvc-statefulset-demo-1 Bound pv-nfs-v4 5Gi RWO,ROX,RWX 15m
myapp-pvc-statefulset-demo-2 Bound pv-nfs-v3 5Gi RWO,ROX,RWX 2m59s
myapp-pvc-statefulset-demo-3 Bound pv-nfs-v1 5Gi RWO,ROX,RWX 2m55s
# PVs
chapter9]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv-nfs-v0 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-0 25m
pv-nfs-v1 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-3 25m
pv-nfs-v2 5Gi RWO,ROX,RWX Retain Available 25m
pv-nfs-v3 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-2 25m
pv-nfs-v4 5Gi RWO,ROX,RWX Retain Bound sts/myapp-pvc-statefulset-demo-1 25m
pv-nfs-v5 5Gi RWO,ROX,RWX Retain Available 25m
```

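6.4 Rolling update → default RollingUpdate

- The RollingUpdate strategy implements automated rolling updates of the Pods in a StatefulSet, and RollingUpdate is also the default value of .spec.updateStrategy.type. With it, the StatefulSet controller deletes and recreates each Pod in the StatefulSet in the same order as Pod termination (from the highest ordinal to the lowest), updating one Pod at a time, and waits for the Pod being updated to be Running and Ready before updating its predecessor. With 4 replicas, the update below therefore proceeds from statefulset-demo-3 down to statefulset-demo-0.

- One Pod at a time, in reverse order.

1. Update the Pod image using the default update strategy

```shell
# kubectl set image <type: sts> <sts name> <container name>=<new image>
~]# kubectl set image sts statefulset-demo myapp=ikubernetes/myapp:v2 -n sts
statefulset.apps/statefulset-demo image updated
```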

2. Watch the Pods being updated

```shell
chapter9]# kubectl get pods -n sts -w
NAME READY STATUS RESTARTS AGE
statefulset-demo-0 1/1 Running 0 18m
statefulset-demo-1 1/1 Running 0 18m
statefulset-demo-2 1/1 Running 0 6m24s
statefulset-demo-3 1/1 Running 0 6m20s
statefulset-demo-3 1/1 Terminating 0 9m3s
statefulset-demo-3 0/1 Terminating 0 9m5s
statefulset-demo-3 0/1 Terminating 0 9m6s
statefulset-demo-3 0/1 Terminating 0 9m6s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 ContainerCreating 0 0s
statefulset-demo-3 1/1 Running 0 2s
statefulset-demo-2 1/1 Terminating 0 9m12s
statefulset-demo-2 0/1 Terminating 0 9m13s
statefulset-demo-2 0/1 Terminating 0 9m23s
statefulset-demo-2 0/1 Terminating 0 9m23s
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 ContainerCreating 0 0s
statefulset-demo-2 1/1 Running 0 1s
statefulset-demo-1 1/1 Terminating 0 21m
statefulset-demo-1 0/1 Terminating 0 21m
statefulset-demo-1 0/1 Terminating 0 21m
statefulset-demo-1 0/1 Terminating 0 21m
statefulset-demo-1 0/1 Pending 0 0s
statefulset-demo-1 0/1 Pending 0 0s
statefulset-demo-1 0/1 ContainerCreating 0 0s
statefulset-demo-1 1/1 Running 0 3s
statefulset-demo-0 1/1 Terminating 0 21m
statefulset-demo-0 0/1 Terminating 0 21m
statefulset-demo-0 0/1 Terminating 0 21m
statefulset-demo-0 0/1 Terminating 0 21m
statefulset-demo-0 0/1 Pending 0 0s
statefulset-demo-0 0/1 Pending 0 0s
statefulset-demo-0 0/1 ContainerCreating 0 1s
statefulset-demo-0 1/1 Running 0 3s
```

3. Inspect the Pod and the StatefulSet controller in detail to verify the update

```shell
chapter9]# kubectl describe pods statefulset-demo-0 -n sts
Name:         statefulset-demo-0
Namespace:    sts
Priority:     0
Node:         k8s.node2/192.168.20.214
Start Time:   Sun, 24 May 2020 14:09:57 +0800
Labels:       app=myapp-pod
              controller=sts
              controller-revision-hash=statefulset-demo-777c58fc6c
              statefulset.kubernetes.io/pod-name=statefulset-demo-0
Annotations:  <none>
Status:       Running
IP:           10.244.2.59
IPs:
  IP:           10.244.2.59
Controlled By:  StatefulSet/statefulset-demo
Containers:
  myapp:
    Container ID:   docker://9d1caf2a43703cfb2b3d08956d184fb96fc2f07b1f3177ff8a43147da8c9c403
    Image:          ikubernetes/myapp:v2
    Image ID:       docker-pullable://ikubernetes/myapp@sha256:85a2b81a62f09a414ea33b74fb8aa686ed9b168294b26b4c819df0be0712d358
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Sun, 24 May 2020 14:09:58 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /usr/share/nginx/html from myapp-pvc (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-4glpk (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  myapp-pvc:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  myapp-pvc-statefulset-demo-0
    ReadOnly:   false
  default-token-4glpk:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-4glpk
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age        From                Message
  ----    ------     ----       ----                -------
  Normal  Scheduled  <unknown>  default-scheduler   Successfully assigned sts/statefulset-demo-0 to k8s.node2
  Normal  Pulled     110s       kubelet, k8s.node2  Container image "ikubernetes/myapp:v2" already present on machine
  Normal  Created    110s       kubelet, k8s.node2  Created container myapp
  Normal  Started    109s       kubelet, k8s.node2  Started container myapp
[root@k8s chapter9]# kubectl describe sts statefulset-demo -n sts
Name:               statefulset-demo
Namespace:          sts
CreationTimestamp:  Sun, 24 May 2020 13:48:14 +0800
Selector:           app=myapp-pod,controller=sts
Labels:             <none>
Annotations:
Replicas:           4 desired | 4 total
Update Strategy:    RollingUpdate   # default update strategy
  Partition:        0
Pods Status:        4 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=myapp-pod
           controller=sts
  Containers:
   myapp:
    Image:        ikubernetes/myapp:v2
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:
      /usr/share/nginx/html from myapp-pvc (rw)
  Volumes:  <none>
Volume Claims:
  Name:          myapp-pvc
  StorageClass:
  Labels:        <none>
  Annotations:   <none>
  Capacity:      2Gi
  Access Modes:  [ReadWriteOnce]
Events:   # detailed Pod update history
  Type    Reason            Age                 From                    Message
  ----    ------            ----                ----                    -------
  Normal  SuccessfulCreate  25m                 statefulset-controller  create Claim myapp-pvc-statefulset-demo-0 Pod statefulset-demo-0 in StatefulSet statefulset-demo success
  Normal  SuccessfulCreate  25m                 statefulset-controller  create Claim myapp-pvc-statefulset-demo-1 Pod statefulset-demo-1 in StatefulSet statefulset-demo success
  Normal  SuccessfulCreate  13m                 statefulset-controller  create Claim myapp-pvc-statefulset-demo-2 Pod statefulset-demo-2 in StatefulSet statefulset-demo success
  Normal  SuccessfulCreate  13m                 statefulset-controller  create Claim myapp-pvc-statefulset-demo-3 Pod statefulset-demo-3 in StatefulSet statefulset-demo success
  Normal  SuccessfulDelete  4m36s               statefulset-controller  delete Pod statefulset-demo-3 in StatefulSet statefulset-demo successful
  Normal  SuccessfulCreate  4m33s (x2 over 13m)  statefulset-controller  create Pod statefulset-demo-3 in StatefulSet statefulset-demo successful
  Normal  SuccessfulDelete  4m31s               statefulset-controller  delete Pod statefulset-demo-2 in StatefulSet statefulset-demo successful
  Normal  SuccessfulCreate  4m20s (x2 over 13m)  statefulset-controller  create Pod statefulset-demo-2 in StatefulSet statefulset-demo successful
  Normal  SuccessfulDelete  4m19s               statefulset-controller  delete Pod statefulset-demo-1 in StatefulSet statefulset-demo successful
  Normal  SuccessfulCreate  4m14s (x2 over 25m)  statefulset-controller  create Pod statefulset-demo-1 in StatefulSet statefulset-demo successful
  Normal  SuccessfulDelete  4m11s               statefulset-controller  delete Pod statefulset-demo-0 in StatefulSet statefulset-demo successful
  Normal  SuccessfulCreate  4m10s (x2 over 25m)  statefulset-controller  create Pod statefulset-demo-0 in StatefulSet statefulset-demo successful
```

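6.5 Update with RollingUpdate partition (canary update strategy)

- Change the update strategy to a partitioned rolling update. With a partition value of 2, for example, only myapp Pods whose ordinal is greater than or equal to 2 are updated, similar to a canary deployment.

- kubectl explain sts.spec

  - updateStrategy

    - rollingUpdate

      - partition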

6.5.1 Method 1

1. Edit the manifest to change the update strategy and set the partition

```shell
chapter9]# cat statefulset-demo.yaml
```

```yaml
# Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: sts
---
# Headless Service
apiVersion: v1
kind: Service
metadata:
  # Service name
  name: myapp-sts-svc
  namespace: sts
  labels:
    app: myapp
spec:
  ports:
  - port: 80
    name: web
  # clusterIP: None makes this a headless Service
  clusterIP: None
  # Label selector; must match the Pods created by the StatefulSet below
  selector:
    app: myapp-pod
    controller: sts
---
# API group/version
apiVersion: apps/v1
# Resource type
kind: StatefulSet
# Metadata
metadata:
  name: statefulset-demo
  namespace: sts
# Spec
spec:
  # Label selector matching the Pods created from the template below
  selector:
    matchLabels:
      app: myapp-pod
      controller: sts
  # Name of the governing headless Service, i.e. the one created above
  serviceName: "myapp-sts-svc"
  # Number of Pod replicas
  # Note: 6.3 scaled this StatefulSet to 4; applying this file as-is sets it back to 2,
  # so adjust replicas (e.g. to 4) first if you follow on from 6.3
  replicas: 2
  # Pod update strategy
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 3
  # Pod template
  template:
    # Pod template metadata
    metadata:
      namespace: sts
      # Labels added to every Pod; must match the Service and StatefulSet selectors above
      labels:
        app: myapp-pod
        controller: sts
    # Pod spec
    spec:
      # Termination grace period (default 30s)
      terminationGracePeriodSeconds: 10
      # Containers in the Pod
      containers:
      # Container name
      - name: myapp
        # Container image
        image: ikubernetes/myapp:v1
        # Container ports
        ports:
        # Expose port 80
        - containerPort: 80
          # Port name
          name: web
        # Volume mounts
        volumeMounts:
        # Name of the volume to mount (the PVC template below)
        - name: myapp-pvc
          # Mount point in the container
          mountPath: /usr/share/nginx/html
  # PVC template
  volumeClaimTemplates:
  # PVC template metadata
  - metadata:
      # PVC name
      name: myapp-pvc
      namespace: sts
    # Spec and access mode of each PVC
    spec:
      # Single-node read-write
      accessModes: [ "ReadWriteOnce" ]
      # StorageClass name
      #storageClassName: "gluster-dynamic"
      # Storage requested from a PV (the resource requirement)
      resources:
        requests:
          storage: 2Gi
```

```shell
# kubectl apply -f xxx.yaml
```

6.5.2 Method 2

1. Modify it with kubectl edit

```shell
~]# kubectl edit sts statefulset-demo -n sts
```

```yaml
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      ...
  creationTimestamp: "2020-05-24T05:48:14Z"
  generation: 3
  managedFields:
  # ... (server-side field-management entries omitted) ...
spec:
  replicas: 4
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: myapp-pod
      controller: sts
  serviceName: myapp-sts-svc
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: myapp-pod
        controller: sts
      namespace: sts
    spec:
      containers:
      - image: ikubernetes/myapp:v2
        imagePullPolicy: IfNotPresent
        name: myapp
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: myapp-pvc
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 10
  updateStrategy:
    rollingUpdate:
      partition: 3 # change this
    type: RollingUpdate
  volumeClaimTemplates:
  - apiVersion: v1
  # ... (rest of the object omitted) ...
```

2. Run another update as a test

```shell
~]# kubectl set image sts statefulset-demo myapp=ikubernetes/myapp:v3 -n sts
statefulset.apps/statefulset-demo image updated
```

3. Watch the Pods (with partition: 3, only the Pod with ordinal 3 is updated)

```shell
chapter9]# kubectl get pods -n sts -w
NAME READY STATUS RESTARTS AGE
statefulset-demo-0 1/1 Running 0 22m
statefulset-demo-1 1/1 Running 0 22m
statefulset-demo-2 1/1 Running 0 22m
statefulset-demo-3 1/1 Running 0 22m
statefulset-demo-3 1/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 ContainerCreating 0 0s
statefulset-demo-3 1/1 Running 0 2s
```

4. To update the remaining Pods as well, simply set the partition of the update strategy to 0:

```shell
~]# kubectl patch sts statefulset-demo -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":0}}}}' -n sts
statefulset.apps/statefulset-demo patched
chapter9]# kubectl get pods -n sts -w
NAME READY STATUS RESTARTS AGE
statefulset-demo-0 1/1 Running 0 22m
statefulset-demo-1 1/1 Running 0 22m
statefulset-demo-2 1/1 Running 0 22m
statefulset-demo-3 1/1 Running 0 22m
statefulset-demo-3 1/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Terminating 0 22m
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 Pending 0 0s
statefulset-demo-3 0/1 ContainerCreating 0 0s
statefulset-demo-3 1/1 Running 0 2s
statefulset-demo-2 1/1 Terminating 0 26m
statefulset-demo-2 0/1 Terminating 0 26m
statefulset-demo-2 0/1 Terminating 0 26m
statefulset-demo-2 0/1 Terminating 0 26m
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 Pending 0 0s
statefulset-demo-2 0/1 ContainerCreating 0 0s
statefulset-demo-2 1/1 Running 0 2s
statefulset-demo-1 1/1 Terminating 0 26m
statefulset-demo-1 0/1 Terminating 0 26m
statefulset-demo-1 0/1 Terminating 0 26m
statefulset-demo-1 0/1 Terminating 0 26m
statefulset-demo-1 0/1 Pending 0 0s
statefulset-demo-1 0/1 Pending 0 0s
statefulset-demo-1 0/1 ContainerCreating 0 0s
statefulset-demo-1 1/1 Running 0 2s
statefulset-demo-0 1/1 Terminating 0 26m
statefulset-demo-0 0/1 Terminating 0 26m
statefulset-demo-0 0/1 Terminating 0 26m
statefulset-demo-0 0/1 Terminating 0 26m
statefulset-demo-0 0/1 Pending 0 0s
statefulset-demo-0 0/1 Pending 0 0s
statefulset-demo-0 0/1 ContainerCreating 0 0s
statefulset-demo-0 1/1 Running 0 2s
```

6.5.3、方式三

#修改更新策略,以partition方式进行更新,更新值为2,只有myapp编号大于等于2的才会进行更新。类似于金丝雀部署方式。

[root@k8s-master mainfests]# kubectl patch sts myapp -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":2}}}}'
statefulset.apps/myapp patched
[root@k8s-master ~]# kubectl get sts myapp
NAME      DESIRED   CURRENT   AGE
myapp     4         4         1h
[root@k8s-master ~]# kubectl describe sts myapp
Name:               myapp
Namespace:          default
CreationTimestamp:  Wed, 10 Oct 2018 21:58:24 -0400
Selector:           app=myapp-pod
Labels:             <none>
Annotations:        kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"apps/v1","kind":"StatefulSet","metadata":{"annotations":{},"name":"myapp","namespace":"default"},"spec":{"replicas":3,"selector":{"match...
Replicas:           4 desired | 4 total
Update Strategy:    RollingUpdate
Partition:          2

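# Upgrade the image to v3. Afterwards, compare the images of myapp-2 and myapp-1: they differ (myapp-2 runs v3 while myapp-1 still runs v2), which is exactly the canary-release effect.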

[root@k8s-master mainfests]# kubectl set image sts/myapp myapp=ikubernetes/myapp:v3
statefulset.apps/myapp image updated
[root@k8s-master ~]# kubectl get sts -o wide
NAME      DESIRED   CURRENT   AGE   CONTAINERS   IMAGES
myapp     4         4         1h    myapp        ikubernetes/myapp:v3
[root@k8s-master ~]# kubectl get pods myapp-2 -o yaml |grep image
- image: ikubernetes/myapp:v3
imagePullPolicy: IfNotPresent
image: ikubernetes/myapp:v3
imageID: docker-pullable://ikubernetes/myapp@sha256:b8d74db2515d3c1391c78c5768272b9344428035ef6d72158fd9f6c4239b2c69
[root@k8s-master ~]# kubectl get pods myapp-1 -o yaml |grep image
- image: ikubernetes/myapp:v2
imagePullPolicy: IfNotPresent
image: ikubernetes/myapp:v2
imageID: docker-pullable://ikubernetes/myapp@sha256:85a2b81a62f09a414ea33b74fb8aa686ed9b168294b26b4c819df0be0712d358
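The canary result above follows one rule: after the Pod template changes, only Pods whose ordinal is greater than or equal to `partition` are moved to the new template, while Pods below it keep the old one (and are recreated from the old template even if they are deleted). A minimal sketch of that rule, assuming the 4 `myapp` replicas, partition 2 and the v2/v3 images used here; `image_for_ordinal` is a hypothetical helper, not a Kubernetes API.

```shell
#!/bin/sh
# Hypothetical helper: which image a StatefulSet Pod ends up with after a
# template update under a RollingUpdate partition.
#   $1 ordinal, $2 partition, $3 new image, $4 old image
image_for_ordinal() {
  if [ "$1" -ge "$2" ]; then
    echo "$3"    # ordinal >= partition: updated to the new template
  else
    echo "$4"    # ordinal < partition: stays on the old template
  fi
}

# Example matching this section: replicas=4, partition=2, v2 -> v3
i=0
while [ "$i" -lt 4 ]; do
  echo "myapp-$i $(image_for_ordinal "$i" 2 ikubernetes/myapp:v3 ikubernetes/myapp:v2)"
  i=$((i + 1))
done
# myapp-0 ikubernetes/myapp:v2
# myapp-1 ikubernetes/myapp:v2
# myapp-2 ikubernetes/myapp:v3
# myapp-3 ikubernetes/myapp:v3
```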

# To update the remaining Pods as well, simply set the partition of the update strategy back to 0:

[root@k8s-master mainfests]# kubectl patch sts myapp -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":0}}}}'
statefulset.apps/myapp patched
[root@k8s-master ~]# kubectl get pods -w
NAME      READY   STATUS              RESTARTS   AGE
myapp-0   1/1     Running             0          58m
myapp-1   1/1     Running             0          58m
myapp-2   1/1     Running             0          13m
myapp-3   1/1     Running             0          13m
myapp-1   1/1     Terminating         0          58m
myapp-1   0/1     Terminating         0          58m
myapp-1   0/1     Terminating         0          58m
myapp-1   0/1     Terminating         0          58m
myapp-1   0/1     Pending             0          0s
myapp-1   0/1     Pending             0          0s
myapp-1   0/1     ContainerCreating   0          0s
myapp-1   1/1     Running             0          2s
myapp-0   1/1     Terminating         0          58m
myapp-0   0/1     Terminating         0          58m
myapp-0   0/1     Terminating         0          58m
myapp-0   0/1     Terminating         0          58m
myapp-0   0/1     Pending             0          0s
myapp-0   0/1     Pending             0          0s
myapp-0   0/1     ContainerCreating   0          0s
myapp-0   1/1     Running             0          2s
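Setting the partition back to 0 only affects the Pods that were still below the old partition, and they are again replaced from the highest ordinal downwards, which is why the watch output shows `myapp-1` being replaced before `myapp-0`. A sketch of that calculation, assuming every Pod at or above the old partition already runs the new template; `pods_to_update` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: Pods replaced when
# .spec.updateStrategy.rollingUpdate.partition is lowered from $2 to $3.
# Assumes Pods with ordinal >= old partition already run the new template.
#   $1 StatefulSet name, $2 old partition, $3 new partition
pods_to_update() {
  name=$1
  i=$(($2 - 1))
  order=""
  while [ "$i" -ge "$3" ]; do
    order="${order}${order:+ }${name}-${i}"
    i=$((i - 1))
  done
  echo "$order"
}

pods_to_update myapp 2 0
# prints: myapp-1 myapp-0
```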

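6.6、Scaling down

- Scaling down removes Pods starting from the highest ordinal, but by default it does not delete the PVCs those Pods used, and their PVs stay bound as well. When the StatefulSet is scaled up again, the recreated Pod has the same identifier (name), so it gets the same PVC, and therefore the same PV, back.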

1、Check the current number of Pods

chapter9]# kubectl get pods -n sts
NAME                 READY   STATUS    RESTARTS   AGE
statefulset-demo-0   1/1     Running   0          78s
statefulset-demo-1   1/1     Running   0          93s
statefulset-demo-2   1/1     Running   0          98s
statefulset-demo-3   1/1     Running   0          5m33s

2、Deliberately connect to one of the Pods and generate some data

~]# kubectl exec -it statefulset-demo-3 -n sts -- /bin/sh
/ # touch /usr/share/nginx/html/daizhe.txt    # put the data on the mount point (the PVC)
/ # sync && exit

3、Scale down

~]# kubectl scale sts statefulset-demo --replicas=3 -n sts
statefulset.apps/statefulset-demo scaled

4、Check the number of Pods

chapter9]# kubectl get pods -n sts
NAME                 READY   STATUS    RESTARTS   AGE
statefulset-demo-0   1/1     Running   0          7m4s
statefulset-demo-1   1/1     Running   0          7m19s
statefulset-demo-2   1/1     Running   0          7m24s
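Scaling from 4 to 3 replicas removed `statefulset-demo-3`, the Pod with the highest ordinal. With the default `OrderedReady` Pod management policy, a StatefulSet scales down from the highest ordinal, one Pod at a time, and scales up by creating the missing ordinals in ascending order. The sketch below only lists the Pods affected by a change of `replicas`; `scale_plan` is hypothetical and mimics the ordering rule, not the controller itself.

```shell
#!/bin/sh
# Hypothetical helper: print the Pods a StatefulSet removes (scale down) or
# creates (scale up) when .spec.replicas changes from $2 to $3.
#   scale down: highest ordinal first; scale up: lowest new ordinal first.
#   $1 StatefulSet name, $2 current replicas, $3 target replicas
scale_plan() {
  name=$1 from=$2 to=$3
  plan=""
  if [ "$to" -lt "$from" ]; then
    i=$((from - 1))
    while [ "$i" -ge "$to" ]; do
      plan="${plan}${plan:+ }delete:${name}-${i}"
      i=$((i - 1))
    done
  else
    i=$from
    while [ "$i" -lt "$to" ]; do
      plan="${plan}${plan:+ }create:${name}-${i}"
      i=$((i + 1))
    done
  fi
  echo "$plan"
}

scale_plan statefulset-demo 4 3   # prints: delete:statefulset-demo-3
scale_plan statefulset-demo 3 4   # prints: create:statefulset-demo-3
```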

5、Check the PVC/PV status

# The PVCs are not deleted

chapter9]# kubectl get pvc -n sts
NAME                           STATUS   VOLUME      CAPACITY   ACCESS MODES   STORAGECLASS   AGE
myapp-pvc-statefulset-demo-0   Bound    pv-nfs-v0   5Gi        RWO,ROX,RWX                   56m
myapp-pvc-statefulset-demo-1   Bound    pv-nfs-v4   5Gi        RWO,ROX,RWX                   56m
myapp-pvc-statefulset-demo-2   Bound    pv-nfs-v3   5Gi        RWO,ROX,RWX                   44m
myapp-pvc-statefulset-demo-3   Bound    pv-nfs-v1   5Gi        RWO,ROX,RWX                   44m

# The PVs are not deleted either

chapter9]# kubectl get pv
NAME        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                              STORAGECLASS   REASON   AGE
pv-nfs-v0   5Gi        RWO,ROX,RWX    Retain           Bound       sts/myapp-pvc-statefulset-demo-0                           67m
pv-nfs-v1   5Gi        RWO,ROX,RWX    Retain           Bound       sts/myapp-pvc-statefulset-demo-3                           67m
pv-nfs-v2   5Gi        RWO,ROX,RWX    Retain           Available                                                              67m
pv-nfs-v3   5Gi        RWO,ROX,RWX    Retain           Bound       sts/myapp-pvc-statefulset-demo-2                           67m
pv-nfs-v4   5Gi        RWO,ROX,RWX    Retain           Bound       sts/myapp-pvc-statefulset-demo-1                           67m
pv-nfs-v5   5Gi        RWO,ROX,RWX    Retain           Available                                                              67m

6、Scale up

~]# kubectl scale sts statefulset-demo --replicas=4 -n sts
statefulset.apps/statefulset-demo scaled
chapter9]# kubectl get pods -n sts
NAME                 READY   STATUS    RESTARTS   AGE
statefulset-demo-0   1/1     Running   0          8m59s
statefulset-demo-1   1/1     Running   0          9m14s
statefulset-demo-2   1/1     Running   0          9m19s
statefulset-demo-3   1/1     Running   0          12s

# The file created earlier is still there

~]# kubectl exec -it statefulset-demo-3 -n sts -- /bin/sh
/ # ls /usr/share/
GeoIP/  man/    misc/   nginx/
/ # ls /usr/share/nginx/html/
daizhe.txt  dump.rdb
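The re-created `statefulset-demo-3` found its old file because a PVC created from a `volumeClaimTemplate` is named `<template name>-<pod name>`, and the Pod name is `<StatefulSet name>-<ordinal>`. A Pod that comes back with the same ordinal therefore claims the same PVC, which is still bound to the same PV. In the sketch, the template name `myapp-pvc` is inferred from the PVC names listed above, and `pvc_name` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: name of the PVC a StatefulSet Pod uses for a given
# volumeClaimTemplate, i.e. <template>-<statefulset>-<ordinal>.
#   $1 volumeClaimTemplate name, $2 StatefulSet name, $3 ordinal
pvc_name() {
  echo "$1-$2-$3"
}

# After scaling back to 4 replicas, each ordinal maps to a PVC that already
# exists, so no new claim is needed (prints one name per line, ordinals 0..3).
for i in 0 1 2 3; do
  pvc_name myapp-pvc statefulset-demo "$i"
done
```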

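七、StatefulSet summary

- This chapter introduced StatefulSet, the Pod controller for stateful applications. Compared with stateless applications, stateful applications bring their own management complexity, and different stateful applications may even need to be managed in different ways, so their deployment must be organized carefully:

- A StatefulSet relies on a Headless Service to provide DNS resource records for its Pods

- Every Pod has a fixed, unique name that must be resolvable through DNS

- The application in each Pod relies on PVCs and PVs to persist its state data

- Scaling up and down is supported, but the concrete mechanism depends on the application itself

- Automatic updates are supported; the default update strategy is rolling update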
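The DNS records mentioned in the summary follow a fixed pattern: a Pod of a StatefulSet whose `serviceName` points at a Headless Service is reachable as `<pod name>.<service>.<namespace>.svc.<cluster domain>`. The sketch below only assembles such names. It assumes the common default cluster domain `cluster.local`, and `pod_fqdn` is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/sh
# Hypothetical helper: stable DNS name of StatefulSet Pod <set>-<ordinal>
# behind its Headless Service. The cluster domain defaults to cluster.local
# (an assumption; a cluster can be configured with a different domain).
#   $1 StatefulSet name, $2 ordinal, $3 headless Service name,
#   $4 namespace, $5 cluster domain (optional)
pod_fqdn() {
  domain=${5:-cluster.local}
  echo "$1-$2.$3.$4.svc.${domain}"
}

pod_fqdn etcd 0 etcd default   # prints: etcd-0.etcd.default.svc.cluster.local
```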

八、StatefulSet deployment examples

8.1、Stateful application: etcd with a StatefulSet

1、Services

chapter9]# cat etcd-services.yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd
  labels:
    app: etcd
  annotations:
    # Create endpoints also if the related pod isn't ready
    service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
spec:
  ports:
  - port: 2379
    name: client
  - port: 2380
    name: peer
  clusterIP: None
  selector:
    app: etcd-member
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-client
  labels:
    app: etcd
spec:
  ports:
  - name: etcd-client
    port: 2379
    protocol: TCP
    targetPort: 2379
  selector:
    app: etcd-member
  type: NodePort
chapter9]# kubectl apply -f etcd-services.yaml
service/etcd created
service/etcd-client created
chapter9]# kubectl get svc
NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)             AGE
etcd          ClusterIP   None           <none>        2379/TCP,2380/TCP   22s
etcd-client   NodePort    10.98.249.13   <none>        2379:31458/TCP      22s

2、Create the PVs

~]# mkdir /volumes/{v6,v7,v8}
~]# cat /etc/exports
/volumes/v0 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v1 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v2 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v3 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v4 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v5 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v6 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v7 192.168.20.0/24(rw,async,no_root_squash)
/volumes/v8 192.168.20.0/24(rw,async,no_root_squash)
/data/nfs_share 192.168.20.0/24(rw,async)
~]# exportfs -avf
exporting 192.168.20.0/24:/volumes/v8
exporting 192.168.20.0/24:/volumes/v7
exporting 192.168.20.0/24:/volumes/v6
exporting 192.168.20.0/24:/volumes/v5
exporting 192.168.20.0/24:/volumes/v4
exporting 192.168.20.0/24:/volumes/v3
exporting 192.168.20.0/24:/volumes/v2
exporting 192.168.20.0/24:/volumes/v1
exporting 192.168.20.0/24:/volumes/v0
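The new export entries follow exactly the pattern of the existing ones, so they can be generated instead of typed. A sketch assuming the same client network `192.168.20.0/24` and options as above; `export_lines` is a hypothetical helper that only prints the entries, leaving it to you to append them to /etc/exports and re-run `exportfs -avf`.

```shell
#!/bin/sh
# Hypothetical helper: print /etc/exports entries for /volumes/v$1 .. /volumes/v$2.
#   $1 first index, $2 last index, $3 client network (CIDR)
export_lines() {
  i=$1
  while [ "$i" -le "$2" ]; do
    echo "/volumes/v$i $3(rw,async,no_root_squash)"
    i=$((i + 1))
  done
}

export_lines 6 8 192.168.20.0/24
# /volumes/v6 192.168.20.0/24(rw,async,no_root_squash)
# /volumes/v7 192.168.20.0/24(rw,async,no_root_squash)
# /volumes/v8 192.168.20.0/24(rw,async,no_root_squash)
```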

volumes]# cat pv-nfs678-demo.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v6
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v6
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v7
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v7
    server: 192.168.20.172
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v8
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v8
    server: 192.168.20.172
volumes]# kubectl apply -f pv-nfs678-demo.yaml
persistentvolume/pv-nfs-v6 created
persistentvolume/pv-nfs-v7 created
persistentvolume/pv-nfs-v8 created
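The three PV definitions differ only in the index used in `name` and `path`, so the file could also be produced by a loop. A sketch assuming the same NFS server `192.168.20.172`, 5Gi capacity and `Retain` policy as above; `pv_manifest` is a hypothetical helper whose output could be piped to `kubectl apply -f -`.

```shell
#!/bin/sh
# Hypothetical helper: print an NFS-backed PersistentVolume manifest with the
# same shape as pv-nfs678-demo.yaml, for volume index $1.
pv_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-v$1
  labels:
    storsys: nfs
spec:
  accessModes: ["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volumes/v$1
    server: 192.168.20.172
EOF
}

# Emit v6..v8 as one multi-document YAML stream (documents separated by ---).
for i in 6 7 8; do
  if [ "$i" -gt 6 ]; then
    printf '%s\n' '---'
  fi
  pv_manifest "$i"
done
```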

3、StatefulSet

[root@k8s chapter9]# cat etcd-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: etcd
  labels:
    app: etcd
spec:
  serviceName: etcd
  # changing replicas value will require a manual etcdctl member remove/add
  # command (remove before decreasing and add after increasing)
  replicas: 3
  selector:
    matchLabels:
      app: etcd-member
  template:
    metadata:
      name: etcd
      labels:
        app: etcd-member
    spec:
      containers:
      - name: etcd
        image: "quay.io/coreos/etcd:v3.2.16"
        ports:
        - containerPort: 2379
          name: client
        - containerPort: 2380
          name: peer
        env:
        - name: CLUSTER_SIZE
          value: "3"
        - name: SET_NAME
          value: "etcd"
        volumeMounts:
        - name: data
          mountPath: /var/run/etcd
        command:
          - "/bin/sh"
          - "-ecx"
          - |
            IP=$(hostname -i)
            PEERS=""
            for i in $(seq 0 $((${CLUSTER_SIZE} - 1))); do
                PEERS="${PEERS}${PEERS:+,}${SET_NAME}-${i}=http://${SET_NAME}-${i}.${SET_NAME}:2380"
            done
            # start etcd. If cluster is already initialized the `--initial-*` options will be ignored.
            exec etcd --name ${HOSTNAME} \
              --listen-peer-urls http://${IP}:2380 \
              --listen-client-urls http://${IP}:2379,http://127.0.0.1:2379 \
              --advertise-client-urls http://${HOSTNAME}.${SET_NAME}:2379 \
              --initial-advertise-peer-urls http://${HOSTNAME}.${SET_NAME}:2380 \
              --initial-cluster-token etcd-cluster-1 \
              --initial-cluster ${PEERS} \
              --initial-cluster-state new \
              --data-dir /var/run/etcd/default.etcd
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      # storageClassName: gluster-dynamic
      accessModes:
        - "ReadWriteOnce"
      resources:
        requests:
          storage: 1Gi
chapter9]# kubectl apply -f etcd-statefulset.yaml
statefulset.apps/etcd created
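The start-up script builds etcd's `--initial-cluster` value from the stable Pod DNS names (`etcd-0.etcd`, `etcd-1.etcd`, ...) published through the Headless Service `etcd`. Below, the same loop is pulled out so it can be run on its own. `CLUSTER_SIZE` and `SET_NAME` take the values from the manifest, `build_peers` is a hypothetical wrapper, and a `while` loop replaces `seq` only to avoid depending on that utility.

```shell
#!/bin/sh
# Rebuild the --initial-cluster string the etcd container computes at start-up.
# Each member is <SET_NAME>-<i>=http://<SET_NAME>-<i>.<SET_NAME>:2380, i.e. the
# per-Pod DNS name provided by the headless Service.
#   $1 cluster size, $2 StatefulSet/Service name
build_peers() {
  CLUSTER_SIZE=$1
  SET_NAME=$2
  PEERS=""
  i=0
  while [ "$i" -lt "$CLUSTER_SIZE" ]; do
    # ${PEERS:+,} inserts a comma only when PEERS is already non-empty
    PEERS="${PEERS}${PEERS:+,}${SET_NAME}-${i}=http://${SET_NAME}-${i}.${SET_NAME}:2380"
    i=$((i + 1))
  done
  echo "$PEERS"
}

build_peers 3 etcd
# etcd-0=http://etcd-0.etcd:2380,etcd-1=http://etcd-1.etcd:2380,etcd-2=http://etcd-2.etcd:2380
```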

8.2、Stateful application: etcd with an Operator

etcd: coreos/etcd-operator, which manages etcd k/v database clusters on Kubernetes.

GitHub repository: https://github.com/coreos/etcd-operator

8.3、Stateful application: ZooKeeper with a StatefulSet

chapter9]# cat zk-sts.yaml
apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  ports:
  - port: 2181
    name: client
  selector:
    app: zk
---
# policy/v1beta1 was removed in Kubernetes 1.25; use policy/v1 on 1.21 and later
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                    - zk    # must match the Pod template label app: zk
              topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        image: gcr.io/google-containers/kubernetes-zookeeper:1.0-3.4.10
        resources:
          requests:
            memory: "1Gi"
            cpu: "0.5"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: data
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: gluster-dynamic
      resources:
        requests:
          storage: 5Gi
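`maxUnavailable: 1` in `zk-pdb` matches ZooKeeper's majority quorum: an ensemble of N servers needs floor(N/2)+1 of them to keep serving, so it tolerates N - (floor(N/2)+1) unavailable servers, which is 1 for the 3 servers configured here (`--servers=3`). A small sketch of that arithmetic; `quorum_size` and `tolerated_failures` are hypothetical helper names.

```shell
#!/bin/sh
# Majority quorum of a ZooKeeper ensemble with N servers ($1), and how many
# servers can be unavailable while a quorum still remains.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
  echo "servers=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
# servers=3 quorum=2 tolerated=1
# servers=4 quorum=3 tolerated=1
# servers=5 quorum=3 tolerated=2
```

Note that a fourth server adds no fault tolerance over three, which is why ensembles are usually sized with an odd number of servers.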
