13 - K8S Basics: Volume Fundamentals (hostPath, local, emptyDir, NFS)

PS: official volume documentation: https://kubernetes.io/zh/docs/concepts/storage/volumes/

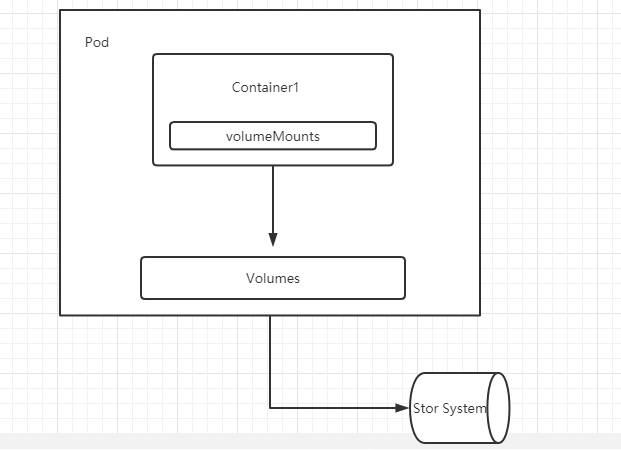

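I. Volume Concepts and Types

1.1 Volume concepts

- To keep data persistent, it must be stored outside the container. With plain Docker, mapping a host directory into the container lets the data survive after the container's lifecycle ends. In k8s, however, pods are spread across different nodes, so a host-local mapping cannot share persistent data between nodes, and a node failure may cause permanent data loss. For this reason k8s introduces volumes backed by external storage.

- k8s volume types:

# List the volume types built into k8s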

~]# kubectl explain pod.spec.volumes

KIND: Pod

VERSION: v1

RESOURCE: volumes <[]Object>

DESCRIPTION:

List of volumes that can be mounted by containers belonging to the pod.

More info: https://kubernetes.io/docs/concepts/storage/volumes

Volume represents a named volume in a pod that may be accessed by any

container in the pod.

FIELDS:

awsElasticBlockStore <Object>

AWSElasticBlockStore represents an AWS Disk resource that is attached to a

kubelet's host machine and then exposed to the pod. More info:

https://kubernetes.io/docs/concepts/storage/volumes#awselasticblockstore

azureDisk <Object>

AzureDisk represents an Azure Data Disk mount on the host and bind mount to

the pod.

azureFile <Object>

AzureFile represents an Azure File Service mount on the host and bind mount

to the pod.

cephfs <Object>

CephFS represents a Ceph FS mount on the host that shares a pod's lifetime

cinder <Object>

Cinder represents a cinder volume attached and mounted on kubelets host

machine. More info: https://examples.k8s.io/mysql-cinder-pd/README.md

configMap <Object>

ConfigMap represents a configMap that should populate this volume

csi <Object>

CSI (Container Storage Interface) represents storage that is handled by an

external CSI driver (Alpha feature).

downwardAPI <Object>

DownwardAPI represents downward API about the pod that should populate this

volume

emptyDir <Object> # empty (temporary) volume

EmptyDir represents a temporary directory that shares a pod's lifetime.

More info: https://kubernetes.io/docs/concepts/storage/volumes#emptydir

fc <Object>

FC represents a Fibre Channel resource that is attached to a kubelet's host

machine and then exposed to the pod.

flexVolume <Object>

FlexVolume represents a generic volume resource that is

provisioned/attached using an exec based plugin.

flocker <Object>

Flocker represents a Flocker volume attached to a kubelet's host machine.

This depends on the Flocker control service being running

gcePersistentDisk <Object>

GCEPersistentDisk represents a GCE Disk resource that is attached to a

kubelet's host machine and then exposed to the pod. More info:

https://kubernetes.io/docs/concepts/storage/volumes#gcepersistentdisk

gitRepo <Object>

GitRepo represents a git repository at a particular revision. DEPRECATED:

GitRepo is deprecated. To provision a container with a git repo, mount an

EmptyDir into an InitContainer that clones the repo using git, then mount

the EmptyDir into the Pod's container.

glusterfs <Object>

Glusterfs represents a Glusterfs mount on the host that shares a pod's

lifetime. More info: https://examples.k8s.io/volumes/glusterfs/README.md

hostPath <Object> # node-level storage

HostPath represents a pre-existing file or directory on the host machine

that is directly exposed to the container. This is generally used for

system agents or other privileged things that are allowed to see the host

machine. Most containers will NOT need this. More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

iscsi <Object>

ISCSI represents an ISCSI Disk resource that is attached to a kubelet's

host machine and then exposed to the pod. More info:

https://examples.k8s.io/volumes/iscsi/README.md

name <string> -required-

Volume's name. Must be a DNS_LABEL and unique within the pod. More info:

https://kubernetes.io/docs/concepts/overview/working-with-objects/names/#names

nfs <Object>

NFS represents an NFS mount on the host that shares a pod's lifetime More

info: https://kubernetes.io/docs/concepts/storage/volumes#nfs

persistentVolumeClaim <Object> # PVC

PersistentVolumeClaimVolumeSource represents a reference to a

PersistentVolumeClaim in the same namespace. More info:

https://kubernetes.io/docs/concepts/storage/persistent-volumes#persistentvolumeclaims

photonPersistentDisk <Object>

PhotonPersistentDisk represents a PhotonController persistent disk attached

and mounted on kubelets host machine

portworxVolume <Object>

PortworxVolume represents a portworx volume attached and mounted on

kubelets host machine

projected <Object>

Items for all in one resources secrets, configmaps, and downward API

quobyte <Object>

Quobyte represents a Quobyte mount on the host that shares a pod's lifetime

rbd <Object>

RBD represents a Rados Block Device mount on the host that shares a pod's

lifetime. More info: https://examples.k8s.io/volumes/rbd/README.md

scaleIO <Object>

ScaleIO represents a ScaleIO persistent volume attached and mounted on

Kubernetes nodes.

secret <Object>

Secret represents a secret that should populate this volume. More info:

https://kubernetes.io/docs/concepts/storage/volumes#secret

storageos <Object>

StorageOS represents a StorageOS volume attached and mounted on Kubernetes

nodes.

vsphereVolume <Object>

VsphereVolume represents a vSphere volume attached and mounted on kubelets

host machine

1.2 k8s volume types by category

- Cloud storage
  - awsElasticBlockStore
  - azureDisk
  - azureFile
  - gcePersistentDisk
  - vsphereVolume

- Distributed storage
  - cephfs
  - glusterfs
  - rbd

- Network storage
  - nfs
  - iscsi
  - fc

- Temporary storage
  - emptyDir
  - gitRepo (deprecated)

- Local storage
  - hostPath
  - local

- Special-purpose storage
  - configMap
  - secret
  - downwardAPI

- Custom storage
  - csi

- Persistent volume claim
  - persistentVolumeClaim

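II. Volume Usage Examples

2.1 hostPath volumes (associate a node-local directory with a pod)

- hostPath is a path on the host: it associates a directory in the file system of the node where the pod runs, outside the namespaces of the pod's containers, with the pod. When the pod is deleted, the stored data is not lost.

- hostPath official manual: https://kubernetes.io/zh/docs/concepts/storage/volumes/#hostpath

2.1.1 hostPath overview

- A hostPath volume mounts a file or directory from the host node's file system into your Pod. Most Pods do not need this, but it offers a powerful escape hatch for some applications.

- For example, some uses of hostPath are:

  - Running a container that needs access to Docker internals: mount /var/lib/docker with hostPath.
  - Running cAdvisor in a container: mount /sys with hostPath.
  - Letting a Pod specify whether a given hostPath must exist before the Pod runs, whether it should be created, and what it should exist as.

- In addition to the required path property, you can optionally specify a type for a hostPath volume.

- Supported type values:

  - "" (empty string, default): no check is performed before the hostPath volume is mounted.
  - DirectoryOrCreate: if nothing exists at the given path, an empty directory is created there with permission 0755 and the same group and ownership as the kubelet.
  - Directory: a directory must exist at the given path.
  - FileOrCreate: if nothing exists at the given path, an empty file is created there with permission 0644 and the same group and ownership as the kubelet.
  - File: a file must exist at the given path.
  - Socket: a UNIX socket must exist at the given path.
  - CharDevice: a character device must exist at the given path.
  - BlockDevice: a block device must exist at the given path.

- Be careful when using this type of volume, because:

  - Pods with identical configuration (for example, created from a podTemplate) may behave differently on different nodes because the files on the nodes differ.
  - When Kubernetes adds resource-aware scheduling as planned, that scheduling will not be able to account for resources used by hostPath.
  - Files or directories created on the underlying host are writable only by root. You either need to run your process as root in a privileged container, or change the file permissions on the host so that the container can write to the hostPath volume.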
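To make the optional type field more concrete, the following is a minimal sketch modeled on the FileOrCreate example in the official documentation. The pod name, the busybox image, and the paths under /var/local/aaa are illustrative assumptions, not part of this walkthrough's environment. FileOrCreate creates the file but not its parent directory, so the sketch mounts the directory with DirectoryOrCreate first.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-file-demo        # hypothetical name
spec:
  containers:
  - name: demo
    image: busybox                # assumed image, only used to keep the pod alive
    command: ["sleep", "3600"]
    volumeMounts:
    - name: mydir
      mountPath: /var/local/aaa
    - name: myfile
      mountPath: /var/local/aaa/1.txt
  volumes:
  - name: mydir
    hostPath:
      # Make sure the parent directory exists on the node.
      path: /var/local/aaa
      type: DirectoryOrCreate
  - name: myfile
    hostPath:
      # FileOrCreate creates the file, but not its parent directory.
      path: /var/local/aaa/1.txt
      type: FileOrCreate
```

With a stricter type such as Directory or File, the pod would instead fail to start when the path is missing, which can be preferable when the data is expected to be provisioned in advance.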

Configurable fields and a Pod example

# Inspect the available fields with explain

~]# kubectl explain pod.spec.volumes.hostPath

KIND: Pod

VERSION: v1

RESOURCE: hostPath <Object>

DESCRIPTION:

HostPath represents a pre-existing file or directory on the host machine

that is directly exposed to the container. This is generally used for

system agents or other privileged things that are allowed to see the host

machine. Most containers will NOT need this. More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

Represents a host path mapped into a pod. Host path volumes do not support

ownership management or SELinux relabeling.

FIELDS:

path <string> -required- # path on the host

Path of the directory on the host. If the path is a symlink, it will follow

the link to the real path. More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

type <string> # type of the hostPath volume

Type for HostPath Volume Defaults to "" More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

----------------------------------------------------------------------------------------------

# Example Pod

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: k8s.gcr.io/test-webserver
    name: test-container
    volumeMounts:
    - mountPath: /test-pd
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      # directory location on host
      path: /data
      # this field is optional
      type: Directory

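2.1.2 hostPath in practice (persistence at node level only)

2.1.2.1 Demonstration with a standalone pod

- A standalone (bare) pod is used for the demonstration.

- The volume type is hostPath with type DirectoryOrCreate: the directory is used directly if it exists, and is created and then used if it does not.

- When the pod is created, the host path is created on the node the pod is scheduled to and mounted into the pod's container.

- After the pod is deleted, the directory on the node it ran on is not removed.

- When the pod is created again, it may well be scheduled to another node. The directory is then created on that node, and because the data on the previous node is out of reach, the earlier page is no longer served.

# A standalone pod is used to keep the demonstration simple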

volumes]# pwd

/root/mainfests/volumes

1. Create a namespace for the demonstration

volumes]# kubectl create ns vol

namespace/vol created

2. Create the standalone pod manifest with a mounted volume

volumes]# cat vol-daemon-yaml

# API group/version of the resource
apiVersion: v1
# resource kind
kind: Pod
# pod metadata
metadata:
  # pod name
  name: myapp
  # namespace the pod belongs to
  namespace: vol
  # labels attached to the pod
  labels:
    app: myapp
# pod spec
spec:
  # containers that run in the pod
  containers:
  # container name
  - name: myapp
    # image the container runs
    image: ikubernetes/myapp:v1
    # Volumes are declared at the pod level (spec.volumes below); a container only
    # sees a volume if it mounts it through volumeMounts. For field help, run:
    # kubectl explain pods.spec.containers.volumeMounts
    volumeMounts:
    # name: which volume to mount (must match a name under spec.volumes)
    - name: webstor
      # mountPath: path inside the container where the volume is mounted
      mountPath: /usr/share/nginx/html
      # readOnly: whether to mount read-only (default: read-write)
      # readOnly: true/false
  # pod volumes
  volumes:
  # volume name
  - name: webstor
    # volume type
    hostPath:
      # path on the host
      path: /volumes/myapp
      # hostPath type (DirectoryOrCreate: use the directory if it exists, otherwise create it)
      type: DirectoryOrCreate

3. Create the pod with the declarative interface

volumes]# kubectl apply -f vol-daemon-yaml

pod/myapp created

4. Inspect the pod

volumes]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp 1/1 Running 0 52s 10.244.2.26 k8s.node2 <none> <none>

# Pod details

volumes]# kubectl describe pods myapp -n vol

Name: myapp

Namespace: vol

Priority: 0

Node: k8s.node2/192.168.20.214

Start Time: Sat, 16 May 2020 15:23:11 +0800

Labels: app=myapp

Annotations: Status: Running

IP: 10.244.2.26

IPs:

IP: 10.244.2.26

Containers:

myapp:

Container ID: docker://63bc0f08674a249559b8d047224d4cd2b596636e402d22429f2557f63c8c8414

Image: ikubernetes/myapp:v1

Image ID: docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 16 May 2020 15:23:12 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts: # mount points inside the container

/usr/share/nginx/html from webstor (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cmgrj (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

webstor:

Type: HostPath (bare host directory volume) # the volume type is HostPath

Path: /volumes/myapp # location on the host

HostPathType: DirectoryOrCreate # use the directory if it exists, otherwise create it

default-token-cmgrj:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-cmgrj

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 108s default-scheduler Successfully assigned vol/myapp to k8s.node2

Normal Pulled 107s kubelet, k8s.node2 Container image "ikubernetes/myapp:v1" already present on machine

Normal Created 107s kubelet, k8s.node2 Created container myapp

Normal Started 106s kubelet, k8s.node2 Started container myapp

5. Verify that the host directory was created automatically (it is created on whichever node the pod is scheduled to)

volumes]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES # the pod was scheduled to k8s.node2

myapp 1/1 Running 0 6m44s 10.244.2.26 k8s.node2 <none> <none>

# On k8s.node2, check that the host path was created

# hostPath type DirectoryOrCreate: use the directory if it exists, otherwise create it

[root@k8s ~]# hostname

k8s.node2

[root@k8s ~]# ls /volumes/myapp/

~]# cd /volumes/myapp/

[root@k8s myapp]# echo "<h1> page volumes </h1>" > index.html

6. Access the pod by its IP address

volumes]# curl 10.244.2.26

<h1> page volumes </h1>

7. Verify that the directory on the node is kept after the pod is deleted

volumes]# kubectl delete -f vol-daemon-yaml

pod "myapp" deleted

# On node k8s.node2, check that the data still exists

~]# ls /volumes/myapp/index.html

/volumes/myapp/index.html

8. When the pod is created again, it may well be scheduled to another node. The directory is then created on that node, and because the data on the previous node is out of reach, the earlier page is no longer served.

volumes]# kubectl apply -f vol-daemon-yaml

# To make the pod land on the same node every time, it can be bound to that node, but this is not ideal because it works against efficient resource utilization.

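2.1.2.2 Binding the pod to a node at creation time with nodeName

- nodeName assigns the pod directly to the named node, bypassing the scheduler, so the pod can only run on that node.

1. Create a pod whose nodeName field pins it to the node with that name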

volumes]# cat vol-nodename-daemon-yaml

# API group/version of the resource
apiVersion: v1
# resource kind
kind: Pod
# pod metadata
metadata:
  # pod name
  name: myapp
  # namespace the pod belongs to
  namespace: vol
  # labels attached to the pod
  labels:
    app: myapp
# pod spec
spec:
  # run this pod on the node whose name matches nodeName
  nodeName: k8s.node2
  # containers that run in the pod
  containers:
  # container name
  - name: myapp
    # image the container runs
    image: ikubernetes/myapp:v1
    # Volumes are declared at the pod level (spec.volumes below); a container only
    # sees a volume if it mounts it through volumeMounts. For field help, run:
    # kubectl explain pods.spec.containers.volumeMounts
    volumeMounts:
    # name: which volume to mount (must match a name under spec.volumes)
    - name: webstor
      # mountPath: path inside the container where the volume is mounted
      mountPath: /usr/share/nginx/html
      # readOnly: whether to mount read-only (default: read-write)
      # readOnly: true/false
  # pod volumes
  volumes:
  # volume name
  - name: webstor
    # volume type
    hostPath:
      # path on the host
      path: /volumes/myapp
      # hostPath type (DirectoryOrCreate: use the directory if it exists, otherwise create it)
      type: DirectoryOrCreate

2. Create the pod with the declarative interface

volumes]# kubectl apply -f vol-nodename-daemon-yaml

pod/myapp created

3. Verify that the pod runs on the node given by nodeName (the pod lands on this node every time it is created)

volumes]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp 1/1 Running 0 81s 10.244.2.27 k8s.node2 <none> <none>

volumes]# curl 10.244.2.27

<h1> page volumes </h1>
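As a possible alternative to nodeName, a nodeSelector keeps the pod on the node that holds the hostPath data while still going through the scheduler. The sketch below assumes that the node carries the well-known label kubernetes.io/hostname with the value k8s.node2 (this usually matches the node name, but it is worth checking with kubectl get nodes --show-labels); the pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-selector            # hypothetical name
  namespace: vol
  labels:
    app: myapp
spec:
  # The scheduler only considers nodes that carry this label.
  # Assumption: the label value equals the node name used above.
  nodeSelector:
    kubernetes.io/hostname: k8s.node2
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: webstor
      mountPath: /usr/share/nginx/html
  volumes:
  - name: webstor
    hostPath:
      path: /volumes/myapp
      type: DirectoryOrCreate
```

Unlike nodeName, this variant lets the scheduler still apply its usual checks (resources, taints) before placing the pod; the pod stays Pending if the selected node cannot take it.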

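2.2 local volumes (node-level devices: a local volume represents a mounted local storage device such as a disk, partition, or directory)

2.2.1 local overview

- Note: the alpha PersistentVolume NodeAffinity annotation has been deprecated and will be removed in a future release. Users must update existing PersistentVolumes that use this annotation to use the new PersistentVolume NodeAffinity field.

- A local volume represents a mounted local storage device such as a disk, partition, or directory.

- local volumes can only be used as statically created PersistentVolumes. Dynamic provisioning is not supported yet.

- Compared with hostPath volumes, local volumes can be used in a durable and portable manner without manually scheduling Pods to nodes, because the system learns the volume's node constraints from the node affinity on the PersistentVolume.

- However, local volumes still depend on the availability of the underlying node and are not suitable for all applications. If a node becomes unhealthy, the local volume becomes inaccessible as well, and a Pod using it cannot run. Applications using local volumes must be able to tolerate this reduced availability, as well as potential data loss, depending on the durability characteristics of the underlying disk.

The following example shows a PersistentVolume that uses a local volume and nodeAffinity: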

apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-pv
spec:
  capacity:
    storage: 100Gi
  # volumeMode field requires BlockVolume Alpha feature gate to be enabled.
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /mnt/disks/ssd1 # local disk (mount point) made available to the container
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - example-node

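- When using local volumes, the PersistentVolume nodeAffinity field is required. It enables the Kubernetes scheduler to correctly schedule Pods that use local volumes onto the right node.

- The PersistentVolume volumeMode field can now be set to "Block" (instead of the default "Filesystem") to expose the local volume as a raw block device. The volumeMode field requires the BlockVolume alpha feature to be enabled.

- When using local volumes, it is recommended to create a StorageClass with volumeBindingMode set to WaitForFirstConsumer; the official documentation provides an example. Delaying volume binding ensures that the PersistentVolumeClaim binding decision also takes into account any other node constraints the Pod may have, such as node resource requirements, node selectors, Pod affinity, and Pod anti-affinity.

- An external static provisioner can be run separately from Kubernetes to improve lifecycle management of local volumes. Note that this provisioner does not support dynamic provisioning. For an example of how to run it, see the local volume provisioner user guide.

Note: if no external static provisioner manages the volume lifecycle, the user must clean up and delete local PersistentVolumes manually.

2.2.2 local in practice

- Use nodeAffinity or nodeName to bind the pod to the node it should run on.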
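The pieces that a local PersistentVolume is normally paired with can be sketched as follows: a StorageClass with delayed binding, a claim, and a pod that consumes the claim. This is a minimal sketch, not a tested setup from this walkthrough: the claim name, the pod name, and the use of the vol namespace are assumptions, and it presumes a PersistentVolume such as example-pv (storageClassName local-storage, 100Gi) already exists.

```yaml
# StorageClass for statically provisioned local volumes. Binding is delayed
# until a pod that uses the claim is scheduled.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
# Claim that can bind to a matching local PersistentVolume.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-claim               # hypothetical name
  namespace: vol
spec:
  storageClassName: local-storage
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
# Pod that mounts the claim. The scheduler places it on the node named in
# the PersistentVolume's nodeAffinity, so no nodeName is needed here.
apiVersion: v1
kind: Pod
metadata:
  name: vol-local-pod             # hypothetical name
  namespace: vol
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: local-claim
```

Until the pod is created, the claim is expected to remain Pending; that is the visible effect of WaitForFirstConsumer.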

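2.3 emptyDir temporary volumes (the containers in a pod share the temporary volume; it is deleted together with the pod)

- Use cases

  - 1. Provide cache space for the containers in a pod (optionally backed by node memory that is presented as a disk).
  - 2. The pod has no persistence requirement but runs two containers that need to share data. Containers do not share mounts by default, so both containers mount the same emptyDir volume read-write to exchange data.

- An emptyDir is first created when the pod is assigned to a node and exists for the whole lifetime of the pod on that node. As the name says, it starts out as an empty directory. All containers in the pod can read and write it, and each container may mount it at the same or a different path. When the pod is removed from the node for any reason, the data is deleted permanently. Note: a crashing container does not lose the data, because a container crash does not remove the pod.

- emptyDir official manual: https://kubernetes.io/zh/docs/concepts/storage/volumes/#emptydir

2.3.1 emptyDir overview

- An emptyDir volume is first created when a Pod is assigned to a node, and it exists as long as that Pod is running on that node. As the name says, the volume is initially empty. The containers in the Pod may mount the emptyDir volume at the same or different paths, but they all read and write the same files in it. When a Pod is removed from a node for any reason, the data in the emptyDir is deleted permanently.

- Note: a container crash does not remove a Pod from a node, so the data in an emptyDir volume is safe across container crashes.

- Some uses for an emptyDir:

  - Scratch space, for example for a disk-based merge sort.
  - Checkpointing a long-running computation so that it can resume from the state before a crash.
  - Holding files that a content-manager container fetches while a web server container serves the data.

- By default, emptyDir volumes are stored on whatever medium backs the node; this may be disk, SSD, or network storage, depending on your environment. However, you can set the emptyDir.medium field to "Memory" to tell Kubernetes to mount a tmpfs (RAM-backed file system) for you instead. tmpfs is very fast, but unlike a disk it is cleared when the node reboots, and every file you write counts against the container's memory limit.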
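A memory-backed emptyDir can be sketched as below. The pod name, the 256Mi memory limit, and the 64Mi sizeLimit are illustrative assumptions; the point is only to show where medium and sizeLimit go and that the tmpfs usage is charged to the container's memory limit.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vol-emptydir-mem-pod      # hypothetical name
  namespace: vol
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    resources:
      limits:
        memory: 256Mi             # files written to the tmpfs count against this limit
    volumeMounts:
    - name: cache
      mountPath: /cache
  volumes:
  - name: cache
    emptyDir:
      medium: Memory              # back the volume with tmpfs (RAM)
      sizeLimit: 64Mi             # cap the space the volume may use
```

Setting sizeLimit together with medium: Memory is a cautious default, since an unbounded tmpfs can otherwise grow until the container hits its memory limit.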

Configurable fields and a Pod example

# Inspect the available fields with explain

volumes]# kubectl explain pods.spec.volumes.emptyDir

KIND: Pod

VERSION: v1

RESOURCE: emptyDir <Object>

DESCRIPTION:

EmptyDir represents a temporary directory that shares a pod's lifetime.

More info: https://kubernetes.io/docs/concepts/storage/volumes#emptydir

Represents an empty directory for a pod. Empty directory volumes support

ownership management and SELinux relabeling.

FIELDS:

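medium <string> # storage medium; valid values are "" or Memory

# Memory: use the node's memory (tmpfs) as the backing store

# "": the node's default medium, normally disk; space on the node's disk is used for the volume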

What type of storage medium should back this directory. The default is ""

which means to use the node's default medium. Must be an empty string

(default) or Memory. More info:

https://kubernetes.io/docs/concepts/storage/volumes#emptydir

sizeLimit <string> # set sizeLimit when using Memory: it caps how much memory the volume may consume

Total amount of local storage required for this EmptyDir volume. The size

limit is also applicable for memory medium. The maximum usage on memory

medium EmptyDir would be the minimum value between the SizeLimit specified

here and the sum of memory limits of all containers in a pod. The default

is nil which means that the limit is undefined. More info:

http://kubernetes.io/docs/user-guide/volumes#emptydir

----------------------------------------------------------------------------------------

# Example Pod

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: k8s.gcr.io/test-webserver
    name: test-container
    volumeMounts:
    - mountPath: /cache
      name: cache-volume
  volumes:
  - name: cache-volume
    emptyDir: {}

2.3.2 emptyDir in practice

2.3.2.1 Walkthrough from a reference blog

# Reference blog

[root@k8s-master ~]# kubectl explain pods.spec.volumes.emptyDir # show the emptyDir volume definition

[root@k8s-master ~]# kubectl explain pods.spec.containers.volumeMounts # show how containers mount volumes

[root@k8s-master ~]# cd mainfests && mkdir volumes && cd volumes

[root@k8s-master volumes]# cp ../pod-demo.yaml ./

[root@k8s-master volumes]# mv pod-demo.yaml pod-vol-demo.yaml

[root@k8s-master volumes]# vim pod-vol-demo.yaml # create the emptyDir manifest

apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-demo
  namespace: default
  labels:
    app: myapp
    tier: frontend
  annotations:
    magedu.com/create-by: "cluster admin"
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    volumeMounts: # volume name and mount path inside the container (both containers share the same volume)
    - name: html
      mountPath: /usr/share/nginx/html/
  - name: busybox
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: html
      mountPath: /data/ # volume name and mount path inside the container (both containers share the same volume)
    command: ['/bin/sh','-c','while true;do echo $(date) >> /data/index.html;sleep 2;done']
  volumes: # define the volumes
  - name: html # volume name
    emptyDir: {} # volume type (disk-backed)

[root@k8s-master volumes]# kubectl apply -f pod-vol-demo.yaml

pod/pod-vol-demo created

[root@k8s-master volumes]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pod-vol-demo 2/2 Running 0 27s

[root@k8s-master volumes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

......

pod-vol-demo 2/2 Running 0 16s 10.244.2.34 k8s-node02

......

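The manifest above defines two containers. One of them appends the current date to index.html; requesting the page from nginx then shows whether the dates are visible, which verifies that both containers share the mounted emptyDir. Access test:

[root@k8s-master volumes]# curl 10.244.2.34 # access test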

Tue Oct 9 03:56:53 UTC 2018

Tue Oct 9 03:56:55 UTC 2018

Tue Oct 9 03:56:57 UTC 2018

Tue Oct 9 03:56:59 UTC 2018

Tue Oct 9 03:57:01 UTC 2018

Tue Oct 9 03:57:03 UTC 2018

Tue Oct 9 03:57:05 UTC 2018

Tue Oct 9 03:57:07 UTC 2018

Tue Oct 9 03:57:09 UTC 2018

Tue Oct 9 03:57:11 UTC 2018

Tue Oct 9 03:57:13 UTC 2018

Tue Oct 9 03:57:15 UTC 2018

2.3.2.2 Technical summary

chapter7]# cat vol-emptydir.yaml

apiVersion: v1
kind: Pod
metadata:
  name: vol-emptydir-pod
  namespace: vol
spec:
  volumes:
  - name: html
    # emptyDir volume backed by the node's default medium (disk)
    emptyDir: {}
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  - name: pagegen
    image: alpine
    volumeMounts:
    - name: html
      mountPath: /html
    # command run inside the container
    command: ["/bin/sh", "-c"]
    # arguments passed to the command
    args:
    - while true; do
        echo $(hostname) $(date) >> /html/index.html;
        sleep 10;
      done

chapter7]# kubectl apply -f vol-emptydir.yaml

pod/vol-emptydir-pod created

chapter7]# kubectl get pods -n vol

NAME READY STATUS RESTARTS AGE

myapp 1/1 Running 0 45m

vol-emptydir-pod 2/2 Running 0 40s

chapter7]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp 1/1 Running 0 46m 10.244.2.27 k8s.node2 <none> <none>

vol-emptydir-pod 2/2 Running 0 96s 10.244.1.41 k8s.node1 <none> <none>

chapter7]# kubectl describe pods vol-emptydir-pod -n vol

Name: vol-emptydir-pod

Namespace: vol

Priority: 0

Node: k8s.node1/192.168.20.212

Start Time: Sat, 16 May 2020 16:41:32 +0800

Labels: <none>

Annotations: Status: Running

IP: 10.244.1.41

IPs:

IP: 10.244.1.41

Containers:

myapp:

Container ID: docker://a19cb1266f4db46f4940f74a155dbc608ef64485a7863e7b0bd62063dcda2423

Image: ikubernetes/myapp:v1

Image ID: docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 16 May 2020 16:41:33 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts: # container mounts

/usr/share/nginx/html from html (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cmgrj (ro)

pagegen:

Container ID: docker://627b5cb43a4cacc6b57386b8335eb53fca126b4438c87ffa24d2ff408a4d1ea5

Image: alpine

Image ID: docker-pullable://alpine@sha256:9a839e63dad54c3a6d1834e29692c8492d93f90c59c978c1ed79109ea4fb9a54

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

Args:

while true; do echo $(hostname) $(date) >> /html/index.html; sleep 10; done

State: Running

Started: Sat, 16 May 2020 16:42:08 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts: # container mounts

/html from html (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cmgrj (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes: # volumes

html: # volume name

Type: EmptyDir (a temporary directory that shares a pod's lifetime) # volume type

Medium:

SizeLimit: <unset>

default-token-cmgrj:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-cmgrj

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m25s default-scheduler Successfully assigned vol/vol-emptydir-pod to k8s.node1

Normal Pulled 2m24s kubelet, k8s.node1 Container image "ikubernetes/myapp:v1" already present on machine

Normal Created 2m24s kubelet, k8s.node1 Created container myapp

Normal Started 2m24s kubelet, k8s.node1 Started container myapp

Normal Pulling 2m24s kubelet, k8s.node1 Pulling image "alpine"

Normal Pulled 109s kubelet, k8s.node1 Successfully pulled image "alpine"

Normal Created 109s kubelet, k8s.node1 Created container pagegen

Normal Started 109s kubelet, k8s.node1 Started container pagegen

chapter7]# curl 10.244.1.41

vol-emptydir-pod Sat May 16 08:42:08 UTC 2020

vol-emptydir-pod Sat May 16 08:42:18 UTC 2020

vol-emptydir-pod Sat May 16 08:42:28 UTC 2020

vol-emptydir-pod Sat May 16 08:42:38 UTC 2020

vol-emptydir-pod Sat May 16 08:42:48 UTC 2020

vol-emptydir-pod Sat May 16 08:42:58 UTC 2020

vol-emptydir-pod Sat May 16 08:43:08 UTC 2020

vol-emptydir-pod Sat May 16 08:43:18 UTC 2020

vol-emptydir-pod Sat May 16 08:43:28 UTC 2020

vol-emptydir-pod Sat May 16 08:43:38 UTC 2020

vol-emptydir-pod Sat May 16 08:43:48 UTC 2020

vol-emptydir-pod Sat May 16 08:43:58 UTC 2020

vol-emptydir-pod Sat May 16 08:44:08 UTC 2020

vol-emptydir-pod Sat May 16 08:44:18 UTC 2020

vol-emptydir-pod Sat May 16 08:44:28 UTC 2020

vol-emptydir-pod Sat May 16 08:44:38 UTC 2020

vol-emptydir-pod Sat May 16 08:44:48 UTC 2020

vol-emptydir-pod Sat May 16 08:44:58 UTC 2020

vol-emptydir-pod Sat May 16 08:45:08 UTC 2020

vol-emptydir-pod Sat May 16 08:45:18 UTC 2020

vol-emptydir-pod Sat May 16 08:45:28 UTC 2020

vol-emptydir-pod Sat May 16 08:45:38 UTC 2020

vol-emptydir-pod Sat May 16 08:45:48 UTC 2020

vol-emptydir-pod Sat May 16 08:45:58 UTC 2020

vol-emptydir-pod Sat May 16 08:46:08 UTC 2020

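2.4 NFS volumes

- nfs lets us mount an existing share into a Pod. Unlike emptyDir, which is deleted together with the Pod, the contents of an nfs volume are kept when the Pod is deleted; the volume is only unmounted. This means an NFS volume can be pre-populated with data, and that data can be handed over between Pods. An NFS share can also be mounted by multiple pods for reading and writing at the same time.

- NFS volume official manual: https://kubernetes.io/zh/docs/concepts/storage/volumes/#nfs

2.4.1 NFS persistence overview

- An nfs volume mounts an existing NFS (Network File System) share into your Pod. Unlike emptyDir, which is erased when a Pod is removed, the contents of an nfs volume are preserved and the volume is merely unmounted. This means that an nfs volume can be pre-populated with data, and that data can be "handed off" between Pods.

Configurable fields and a Pod example

- kubectl explain pods.spec.volumes.nfs

  - path: the directory exported by the NFS server (the directory actually shared on the server)
  - server: hostname or IP address of the NFS server (the default port is used)
  - readOnly: whether the NFS volume is mounted read-only
    - true
    - false

# Inspect the available fields with explain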

chapter7]# kubectl explain pods.spec.volumes.nfs

KIND: Pod

VERSION: v1

RESOURCE: nfs <Object>

DESCRIPTION:

NFS represents an NFS mount on the host that shares a pod's lifetime More

info: https://kubernetes.io/docs/concepts/storage/volumes#nfs

Represents an NFS mount that lasts the lifetime of a pod. NFS volumes do

not support ownership management or SELinux relabeling.

FIELDS:

path <string> -required-

Path that is exported by the NFS server. More info:

https://kubernetes.io/docs/concepts/storage/volumes#nfs

readOnly <boolean>

ReadOnly here will force the NFS export to be mounted with read-only

permissions. Defaults to false. More info:

https://kubernetes.io/docs/concepts/storage/volumes#nfs

server <string> -required-

Server is the hostname or IP address of the NFS server. More info:

https://kubernetes.io/docs/concepts/storage/volumes#nfs
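The three fields can be combined as in the following sketch, which mounts an export read-only into a web server pod. The pod name is hypothetical, and the server address and export path are example values that must match your own NFS server.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vol-nfs-ro-pod            # hypothetical name
  namespace: vol
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    nfs:
      server: 192.168.20.172      # example NFS server address (assumption)
      path: /volumes/v1           # example export on that server (assumption)
      readOnly: true              # mount the export read-only
```

A read-only mount fits pods that only serve pre-populated content; pods that must write, such as a database, leave readOnly at its default of false.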

2.4.2 NFS persistence in practice

2.4.2.1 Deploying the NFS server and testing a client mount on each k8s node

1. Install the NFS service and create the shared directory

~]# yum install nfs-utils -y

~]# mkdir -p /volumes/v1

~]# chown -R nfsnobody.nfsnobody /volumes/v1

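2. Configure the NFS exports file

~]# cat /etc/exports

# /volumes/v1 192.168.20.0/24(rw,async)

# Option reference, using "/home/nfs/ 192.168.248.0/24(rw,sync,fsid=0)" as an example: hosts in the 192.168.248.0/24 network may mount /home/nfs/ from the NFS server into their own file system; rw = read-write; sync = synchronous writes; fsid=0 = export this directory as the root of the export tree; no_root_squash = do not map the client's root user to an unprivileged user

/volumes/v1 192.168.20.0/24(rw,async,no_root_squash)

3. Start the NFS services and enable them at boot (rpcbind must be started first)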

~]# systemctl start rpcbind.service nfs-server.service

~]# systemctl enable rpcbind.service nfs-server.service

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

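4. Check that the NFS services are registered with rpcbind

~]# rpcinfo -p

5. Apply the export configuration

~]# exportfs -r

~]# exportfs

/volumes/v1

192.168.20.0/24

6. Install the client on every k8s node and test the mount (nfs volumes in k8s do not need to be mounted by hand; if the master node runs no pods, it does not need the NFS client)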

~]# yum -y install nfs-utils

~]# showmount -e 192.168.20.172

Export list for 192.168.20.172:

/volumes/v1 192.168.20.0/24

# Optional manual mount test: mount -t nfs 192.168.20.172:/volumes/v1 /home/nfs

2.4.2.2 Creating a pod that uses an nfs volume

1. Create the pod manifest

chapter7]# cat vol-nfs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: vol-nfs-pod
  namespace: vol
  labels:
    app: redis
spec:
  containers:
  - name: redis
    image: redis:alpine
    ports:
    - containerPort: 6379
      name: redisport
    volumeMounts:
    - mountPath: /data
      name: data
  volumes:
  - name: data
    nfs:
      # address of the NFS server
      server: 192.168.20.172
      # directory exported by the NFS server
      path: /volumes/v1

2. Create the pod with the declarative interface

chapter7]# kubectl apply -f vol-nfs.yaml

pod/vol-nfs-pod created

3. Get pod information

chapter7]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp 1/1 Running 0 108m 10.244.2.27 k8s.node2 <none> <none>

vol-emptydir-pod 2/2 Running 0 63m 10.244.1.41 k8s.node1 <none> <none>

vol-nfs-pod 1/1 Running 0 25s 10.244.2.30 k8s.node2 <none> <none>

chapter7]# kubectl describe pods vol-nfs-pod -n vol

Name: vol-nfs-pod

Namespace: vol

Priority: 0

Node: k8s.node2/192.168.20.214 # node the pod runs on

Start Time: Sat, 16 May 2020 17:44:19 +0800

Labels: app=redis

Annotations: Status: Running

IP: 10.244.2.30

IPs:

IP: 10.244.2.30

Containers:

redis:

Container ID: docker://367d0d2b71a7c5b19be1769e106967ef5638861884db3cd3a989cf05db6ec8d2

Image: redis:alpine

Image ID: docker-pullable://redis@sha256:5f7869cb705fc567053e9ff2fac25d6bca2a680c2528dc8f224873758f17c248

Port: 6379/TCP

Host Port: 0/TCP

State: Running

Started: Sat, 16 May 2020 17:44:20 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts: # container mount paths

/data from data (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-cmgrj (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

data: # volume of type NFS

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: 192.168.20.172

Path: /volumes/v1 # path exported by the NFS server

ReadOnly: false # not read-only, i.e. writable

default-token-cmgrj:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-cmgrj

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 114s default-scheduler Successfully assigned vol/vol-nfs-pod to k8s.node2

Normal Pulled 113s kubelet, k8s.node2 Container image "redis:alpine" already present on machine

Normal Created 113s kubelet, k8s.node2 Created container redis

Normal Started 113s kubelet, k8s.node2 Started container redis


4. The pod details show that it runs on k8s.node2; from the master node, enter the pod and generate redis data

chapter7]# kubectl exec -it vol-nfs-pod -n vol -- /bin/sh

/data # redis-cli

127.0.0.1:6379> SET kay1 "daizhe.net.cn"

OK

127.0.0.1:6379> BGSAVE # trigger a save manually

Background saving started

127.0.0.1:6379> exit

/data # ls

dump.rdb

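5. Verify on the NFS server that the redis persistence file exists

~]# ls /volumes/v1/

dump.rdb

6. Simulate pod deletion and verify that the redis persistence file is kept

chapter7]# kubectl delete pods vol-nfs-pod -n vol

pod "vol-nfs-pod" deleted

# On the NFS server, check that the redis persistence file still exists

~]# ls /volumes/v1/

dump.rdb

7. Recreate the pod pinned to a different node to test whether the persistence file can be read from there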

chapter7]# cat vol-nfs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: vol-nfs-pod
  namespace: vol
  labels:
    app: redis
spec:
  # pin the pod to a specific node for the demonstration
  nodeName: k8s.node1
  containers:
  - name: redis
    image: redis:alpine
    ports:
    - containerPort: 6379
      name: redisport
    volumeMounts:
    - mountPath: /data
      name: data
  volumes:
  - name: data
    nfs:
      # address of the NFS server
      server: 192.168.20.172
      # directory exported by the NFS server
      path: /volumes/v1

# Create the pod

chapter7]# kubectl apply -f vol-nfs.yaml

pod/vol-nfs-pod created

# Get pod details

chapter7]# kubectl get pods -n vol -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myapp 1/1 Running 0 122m 10.244.2.27 k8s.node2 <none> <none>

vol-emptydir-pod 2/2 Running 0 77m 10.244.1.41 k8s.node1 <none> <none>

vol-nfs-pod 0/1 Running 0 33s <none> k8s.node1 <none> <none> # now running on node k8s.node1

8. Enter the pod again and verify that the persistence file exists and was loaded by redis

chapter7]# kubectl exec -it vol-nfs-pod -n vol -- /bin/sh

/data # ls

dump.rdb

/data # redis-cli

127.0.0.1:6379> get key1

(nil)

127.0.0.1:6379> get kay1

"daizhe.net.cn" # the key created in the previous pod is still there

2.4.3 NFS persistence: walkthrough from a reference blog

(1) Install nfs on the stor01 node and configure the NFS service

[root@stor01 ~]# yum install -y nfs-utils # stor01 is 192.168.56.14

[root@stor01 ~]# mkdir /data/volumes -pv

[root@stor01 ~]# vim /etc/exports

/data/volumes 192.168.56.0/24(rw,no_root_squash)

[root@stor01 ~]# systemctl start nfs

[root@stor01 ~]# showmount -e

Export list for stor01:

/data/volumes 192.168.56.0/24

(2) Install nfs-utils on node01 and node02 and test the mount

[root@k8s-node01 ~]# yum install -y nfs-utils

[root@k8s-node02 ~]# yum install -y nfs-utils

[root@k8s-node02 ~]# mount -t nfs stor01:/data/volumes /mnt

[root@k8s-node02 ~]# mount

......

stor01:/data/volumes on /mnt type nfs4 (rw,relatime,vers=4.1,rsize=131072,wsize=131072,namlen=255,hard,proto=tcp,port=0,timeo=600,retrans=2,sec=sys,clientaddr=192.168.56.13,local_lock=none,addr=192.168.56.14)

[root@k8s-node02 ~]# umount /mnt/

(3) Create a manifest that uses the nfs volume

[root@k8s-master volumes]# cp pod-hostpath-vol.yaml pod-nfs-vol.yaml

[root@k8s-master volumes]# vim pod-nfs-vol.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-nfs
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    nfs:
      path: /data/volumes
      server: stor01

[root@k8s-master volumes]# kubectl apply -f pod-nfs-vol.yaml

pod/pod-vol-nfs created

[root@k8s-master volumes]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

pod-vol-nfs 1/1 Running 0 21s 10.244.2.38 k8s-node02

(4) Create index.html on the NFS server

[root@stor01 ~]# cd /data/volumes

[root@stor01 volumes ~]# vim index.html

<h1> nfs stor01</h1>

[root@k8s-master volumes]# curl 10.244.2.38

<h1> nfs stor01</h1>

[root@k8s-master volumes]# kubectl delete -f pod-nfs-vol.yaml # delete the nfs pod and recreate it; the data persists

pod "pod-vol-nfs" deleted

[root@k8s-master volumes]# kubectl apply -f pod-nfs-vol.yaml

2.5 Using volumes in k8s

- Using a volume in k8s generally takes two steps:

  - First, define the volume under spec.volumes
  - Then mount it in the container under spec.containers[].volumeMounts
