11-K8S Basic - Ingress and Ingress Controller (routing rules for layer-7 traffic --> http/https)

1. What Is Ingress

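- Layer-7 (HTTP/HTTPS) scheduling on Kubernetes relies on two components:

- Ingress Controller: must be deployed beforehand and runs on Kubernetes itself.

- Ingress: for Pod-to-Pod access, or when exposing services externally, the Ingress Controller acts as the layer-7 proxy. Its proxy configuration, however, is defined through Ingress resources.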

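- From earlier lessons we know that Kubernetes currently exposes services mainly through LoadBalancer Services, NodePort Services and Ingress. An ExternalName Service, by contrast, only maps a Service name to an external DNS name. Exposing in-cluster services to the outside world raises the following problems: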

1.1 The Pod Drift Problem

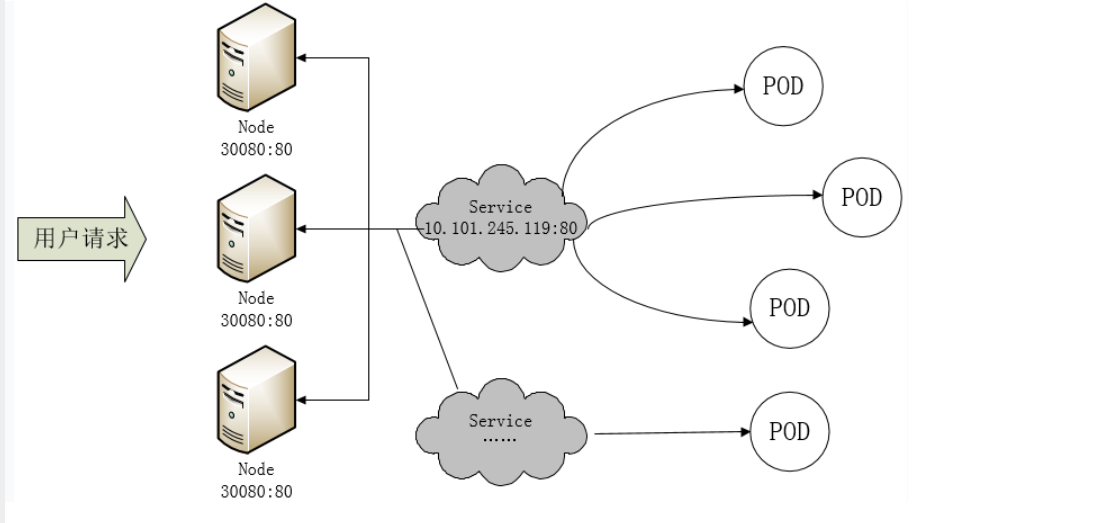

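- Kubernetes has strong replica control. When any replica (Pod) dies, a new one is automatically started on another machine, and replicas can also be scaled dynamically. Put simply, a Pod may appear on any node at any time, and may also die on any node at any time. As Pods are created and destroyed, their Pod IPs inevitably change.

  How can such dynamic Pod IPs be exposed? Kubernetes solves this with the Service mechanism. A Service selects a group of Pods by label, tracks their Pod IPs and load-balances across them, so only the Service needs to be exposed. This is the NodePort mode: a port is opened on every node, and traffic arriving on it is forwarded to the internal Pod IPs, as shown in the figure above.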

- Access then looks like: http://nodeip:nodeport/

1.2 The Port Management Problem

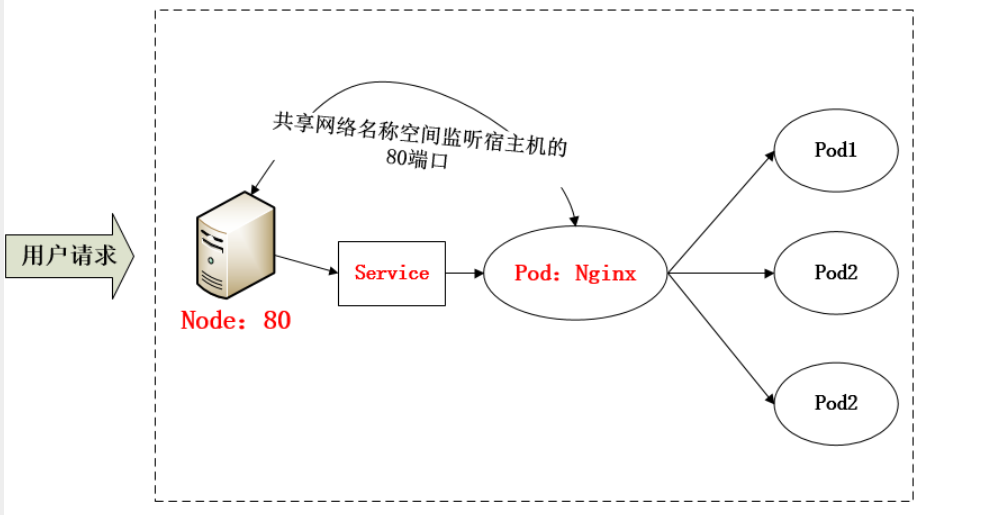

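- Exposing services via NodePort has a drawback: once there are many services, the number of ports opened on every node becomes enormous and hard to maintain. Could a single Nginx forward traffic inward instead?

  Pods can communicate with each other, and a Pod can share the host's network namespace. When it does, the ports the Pod listens on are the Node's own ports. A simple implementation is therefore a DaemonSet that runs Nginx on every Node, listening on port 80, with the routing rules written into its configuration. Nginx is bound to the host's port 80 (much like a NodePort) while itself living inside the cluster, so it can forward requests straight to the corresponding Service IPs, as shown in the figure above.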

1.3 Domain Allocation and Dynamic Updates

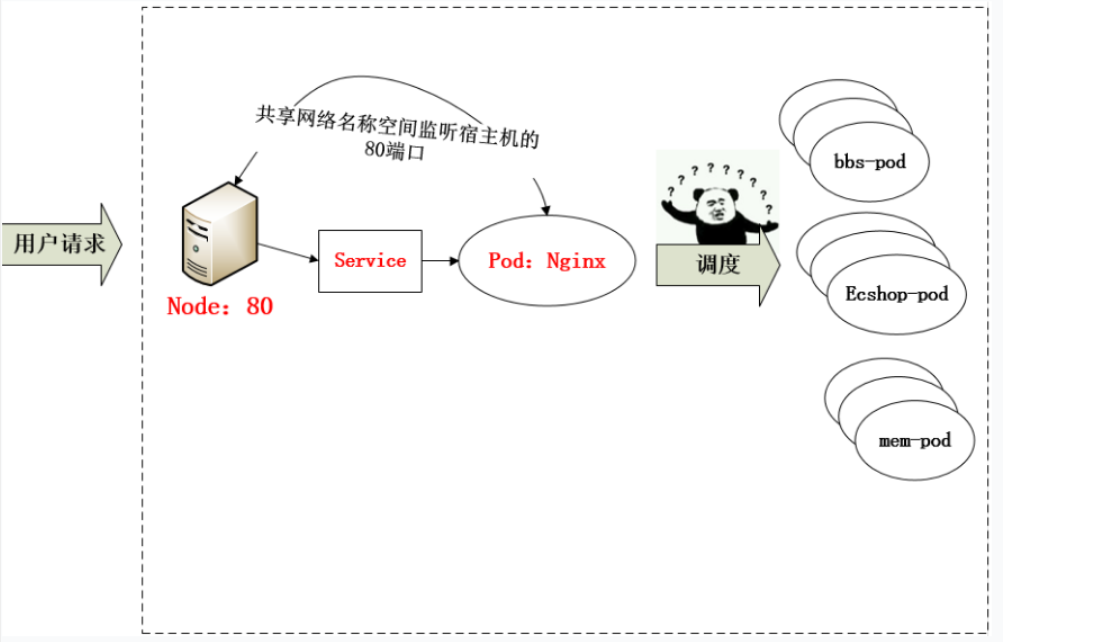

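- The Nginx Pod approach seems to solve the problem, but it has a major flaw: how is the Nginx configuration updated each time a new service is added?

  With Nginx, we usually distinguish services by virtual host (domain name), define a load-balancing pool per service with upstream, and proxy to that pool with location. Day to day, editing nginx.conf is all it takes. How can the same kind of scheduling be achieved in K8S?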

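- Suppose the backend initially has only the ecshop service, and later the bbs and member services are added. How do these two services get added to the Nginx Pod's scheduling? Manually editing the front-end Nginx Pods, or rolling-updating them, every time is not acceptable.

  This is where Ingress comes in. Leaving the Nginx itself aside, Ingress consists of two major components: the Ingress Controller and the Ingress.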

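- Put simply, an Ingress replaces the step where you used to edit the Nginx configuration to map each domain to a Service. That action is abstracted into an Ingress object, which you create from YAML. Instead of editing Nginx, you edit the YAML and create or update the object. That leaves one question: "what takes care of Nginx?"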

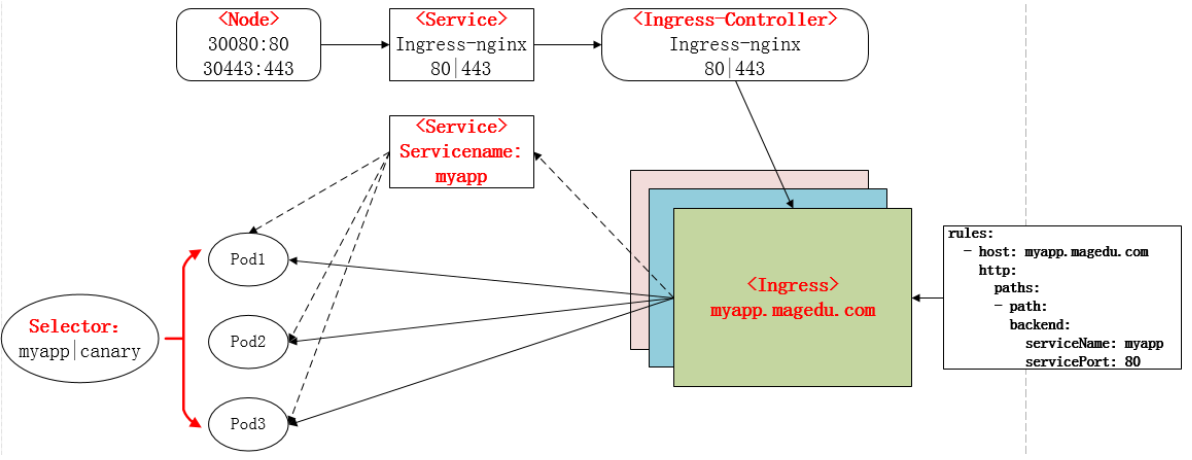

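- The Ingress Controller is what answers "how Nginx gets handled". It works as follows:
  - It talks to the Kubernetes API and dynamically detects changes to the Ingress rules in the cluster.
  - It reads those rules and renders an Nginx configuration from its own template.
  - It writes that configuration into the Nginx Pod and reloads Nginx.

  The workflow is shown in the figure above.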

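- Ingress is in fact one of the standard resource types of the Kubernetes API. It is a set of rules that forward requests to specified Service resources based on DNS name (host) or URL path, and it is used to publish in-cluster services to external clients.

  Note that an Ingress resource cannot carry traffic by itself; it is only a collection of rules. Some other component must listen on a socket and route traffic according to those rules. The component that listens on sockets on behalf of Ingress resources and forwards the traffic is the Ingress Controller.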

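- PS: Unlike the Deployment controller, the Ingress controller does not run as part of kube-controller-manager. It is merely an add-on to the Kubernetes cluster, similar to CoreDNS, and must be deployed on the cluster separately.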
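For the ecshop/bbs/member scenario above, the "edit YAML instead of nginx.conf" idea can be sketched as a single Ingress with one path rule per service. This is a minimal sketch under the following assumptions:
- It uses the current networking.k8s.io/v1 Ingress API.
- An ingress-nginx controller is registered under an IngressClass named nginx.
- The Services ecshop, bbs and member are hypothetical, each listening on port 80.
- The host www.ilinux.io is borrowed from the Nginx examples in 1.4.

```yaml
# Sketch: one Ingress routing three hypothetical services by URL path.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop-ingress            # hypothetical name
spec:
  ingressClassName: nginx       # assumes an IngressClass named "nginx" exists
  rules:
  - host: www.ilinux.io
    http:
      paths:
      - path: /ecshop           # roughly the Ingress form of "location /ecshop"
        pathType: Prefix
        backend:
          service:
            name: ecshop        # hypothetical Service
            port:
              number: 80
      - path: /bbs              # added later: append a path entry, no nginx.conf edit
        pathType: Prefix
        backend:
          service:
            name: bbs           # hypothetical Service
            port:
              number: 80
      - path: /member
        pathType: Prefix
        backend:
          service:
            name: member        # hypothetical Service
            port:
              number: 80
```

Adding a service then means appending one path entry and running `kubectl apply -f` again. The controller notices the change and regenerates its Nginx configuration. Without a rewrite annotation, the backends receive the original path prefix (for example /ecshop/...).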

1.4 Understanding Nginx Proxying

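The first configuration serves one virtual host and routes by URL path. This is the model behind Ingress path rules:

```nginx
# Path-based routing: one virtual host, one location per service.
# (upstream/server blocks belong inside the http {} context of nginx.conf)
upstream eshop {
    server 10.0.0.11:80;    # hypothetical backend address
}

upstream user {
    server 10.0.0.12:80;    # hypothetical backend address
}

server {
    listen 80;
    server_name www.ilinux.io;

    location /eshop/ {
        proxy_pass http://eshop/;   # the trailing "/" replaces the matched /eshop/ prefix with /
    }

    location /user/ {
        proxy_pass http://user/;
    }
}
```

The second configuration routes by virtual host (domain name), with one server block per domain. This is the model behind Ingress host rules:

```nginx
# Host-based routing: one server block (virtual host) per domain.
upstream eshop {
    server 10.0.0.11:80;    # hypothetical backend address
}

upstream user {
    server 10.0.0.12:80;    # hypothetical backend address
}

server {
    listen 80;
    server_name www.ilinux.io;

    location / {
        proxy_pass http://eshop/;
    }
}

server {
    listen 80;
    server_name www.magedu.com;

    location / {
        proxy_pass http://user/;
    }
}
```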
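The host-based Nginx configuration above maps almost one-to-one onto Ingress host rules. This is a minimal sketch, assuming:
- the networking.k8s.io/v1 API;
- an IngressClass named nginx;
- hypothetical Services eshop and user on port 80, standing in for the two upstreams.

```yaml
# Sketch: Ingress equivalent of the two host-based server blocks.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: vhost-ingress          # hypothetical name
spec:
  ingressClassName: nginx      # assumes an IngressClass named "nginx"
  rules:
  - host: www.ilinux.io        # ~ server { server_name www.ilinux.io; }
    http:
      paths:
      - path: /                # ~ location / { proxy_pass http://eshop/; }
        pathType: Prefix
        backend:
          service:
            name: eshop        # hypothetical Service replacing "upstream eshop"
            port:
              number: 80
  - host: www.magedu.com       # ~ server { server_name www.magedu.com; }
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: user         # hypothetical Service replacing "upstream user"
            port:
              number: 80
```

The main difference is who writes the upstream blocks. Here the controller generates them and fills them with the Pod IPs behind each Service.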

1.5 A Simple Ingress Example

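- An Ingress resource is a set of forwarding rules based on HTTP virtual hosts or URLs. It is worth stressing that it is only a forwarding rule. In the manifest, it is defined by nesting fields such as rules, backend and tls under spec.

  The example below defines an Ingress resource with a single forwarding rule: requests sent to myapp.magedu.com under the path /testpath are proxied to the Service resource named test.

- Note: the Ingress layer-7 proxy itself cannot use a label selector to pick the Pods that actually provide the service, so it needs a Service object to do this.

- (So you still first define a Service for the group of backend Pods. The Ingress then indirectly uses that Service's label selector to filter backend Pods. The Service only plays a supporting role here, providing label-based discovery and selection of Pods.)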

```yaml
apiVersion: extensions/v1beta1      # legacy API; removed in Kubernetes 1.22
kind: Ingress
metadata:
  name: test-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:                            # rules is a list; each entry is one virtual host
  - host: myapp.magedu.com          # virtual host name; if omitted, the rule matches all hosts reaching the Ingress Controller
    http:
      paths:
      - path: /testpath             # URL path clients request, like an nginx location; mapped to the backend below
        backend:                    # the upstream that actually serves the request (service & service port)
          # The referenced Service: Pods matched by its label selector become the proxy's "backends"
          serviceName: test
          servicePort: 80
```
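Clusters running Kubernetes 1.22 or later no longer serve the extensions/v1beta1 API used above. Below is a sketch of the same rule in the networking.k8s.io/v1 API. It assumes the controller's IngressClass is named nginx; older controllers may instead rely on the kubernetes.io/ingress.class annotation.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx          # assumption: IngressClass name of the controller
  rules:
  - host: myapp.magedu.com
    http:
      paths:
      - path: /testpath
        pathType: Prefix           # required in v1: Exact, Prefix or ImplementationSpecific
        backend:
          service:                 # replaces serviceName/servicePort
            name: test
            port:
              number: 80
```

The field changes are mechanical:
- serviceName becomes service.name.
- servicePort becomes service.port.number (or service.port.name).
- Every path needs a pathType.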

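- In Ingress layer-7 proxying, the Service acts only as a helper:

- Role one of the Service: label filter

- Ingress does not support label selectors for choosing which backend Pods to proxy, so this has to be done through a Service. The Service here only provides the Ingress with a label selector for choosing backend Pods; it is not the backend being proxied.

- That is why the backend is specified by serviceName, and why a Service must be created for the group of Pods beforehand. Remember that the Service is not what users access here; it only supplies the label selector for the layer-7 proxy.

- Once the layer-7 proxy is running, every request reaches the Ingress Controller and is proxied straight to the Pods without passing through the Service. So the Service acts purely as a label selector here.

- Role two of the Service: surfacing Pod changes to the Ingress Controller

- The Ingress Controller cannot, on its own, tell which Pods (or how many) match the label selection. The Service's second role is therefore to track the current backend Pods through its label selector. The controller watches the Service's Endpoints and updates its upstream list whenever Pods come and go.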
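To make the helper role concrete, here is a minimal sketch of the Service that the example Ingress's backend (serviceName: test, servicePort: 80) points at, together with Pods for it to select. Only the Service name and port come from the example. The Deployment, the label app: test and the nginx:alpine image are assumptions for illustration. All of these objects must live in the same namespace as the Ingress.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test                  # hypothetical workload behind the Service
spec:
  replicas: 2
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test             # the label the Service selects on
    spec:
      containers:
      - name: web
        image: nginx:alpine   # assumption: any HTTP server listening on 80 would do
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: test                  # must match the Ingress backend's service name
spec:
  selector:
    app: test                 # role one: the label filter the Ingress lacks
  ports:
  - name: http
    port: 80                  # must match servicePort in the Ingress
    targetPort: 80
```

After applying this, `kubectl get endpoints test` lists the Pod IPs. By default it is these Pod IPs, not the Service's ClusterIP, that ingress-nginx writes into its upstream (role two).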

2. Introduction to Ingress Controllers (which run as standard Pod objects on K8S)

2.1 What Ingress Controllers Are

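- An Ingress is only generic-format configuration describing how traffic is forwarded and scheduled. It must be translated into the configuration file of a specific application that can forward and schedule HTTP traffic (for example nginx, haproxy or traefik). That application performs the forwarding once the configuration takes effect.

- An application that understands the configuration defined by an Ingress, and can translate it into its own configuration, is called an ingress controller. It too must be deployed as a Pod resource object.

- The Kubernetes administrator must deploy such controllers separately, as add-ons running as Pod resource objects. They obtain the Ingress definitions through the API Server.

- From the ingress controller's perspective, neither nginx nor haproxy is cloud native. Out of the box, neither can run in a Pod, watch Ingress resources and reconfigure its forwarding and scheduling accordingly. The Kubernetes project therefore adapted nginx so that it can serve as an ingress controller.

- Project repository: https://github.com/Kubernetes/ingress-nginx

- Reference blog post on choosing a k8s ingress controller: https://www.jianshu.com/p/0a054ba18a93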

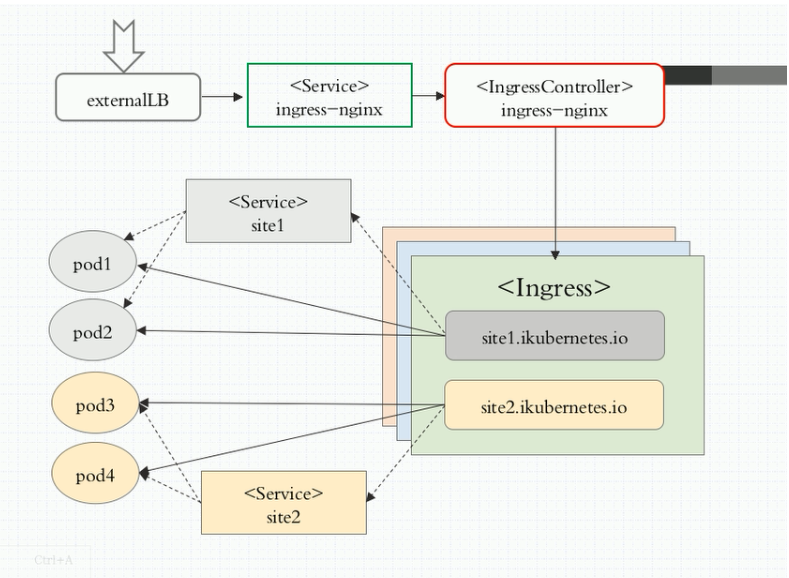
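On newer clusters (Kubernetes 1.19+), an IngressClass object declares which controller handles a given Ingress. This is a minimal sketch assuming an ingress-nginx controller. The value k8s.io/ingress-nginx is the controller identifier that project uses; the class name nginx is simply a conventional choice.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                        # referenced by spec.ingressClassName in Ingresses
  annotations:
    # Optional: treat Ingresses without ingressClassName as belonging to this class
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx   # identifies the controller implementation that owns this class
```

Older controllers, including many pre-1.0 ingress-nginx releases, select their Ingresses through the kubernetes.io/ingress.class annotation instead.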

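2.2 Ingress Controllers, Services and Ingress

- An Ingress cannot itself use a label selector to pick the Pods that actually provide the service, so it needs a Service object to do this. When the number of backend Pods grows or shrinks, the Ingress cannot see the change directly. The Service's label selector is used for the matching instead, so the Service assists the Ingress and compensates for this limitation.

- When the ingress controller schedules traffic according to the Ingress configuration, it sends packets straight to the Pod objects rather than through the Service.

- The ingress controller is itself a Pod object, so it can communicate with backend Pods directly.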

3. Creating the Ingress Controller Resources

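3.1 Method One: An Ingress Controller Using ingress-nginx as the Scheduler (online nginx-ingress-controller → manually created NodePort Service → ingress)

- Official reference manifest: https://github.com/kubernetes/ingress-nginx/blob/master/deploy/static/mandatory.yaml (click Raw). Newer releases no longer ship mandatory.yaml on master, so pin a tagged release such as the nginx-0.27.1 one used in the commented command below.

- Choose the version that matches your k8s version. For example, the rbac.authorization.k8s.io/v1beta1 API used by this manifest was removed in Kubernetes 1.22, where rbac.authorization.k8s.io/v1 must be used instead.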

3.1.1 The Ingress Controller Manifest Provided by the Project

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
--- # standard YAML document separator between resources
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
    - min:
        memory: 90Mi
        cpu: 100m
      type: Container
```

3.1.2 Creating the Resources from the Online Ingress Controller Manifest (online nginx-ingress-controller → manually created Service → ingress)

1. Create the ingress controller resources from the official default manifest

```shell
# on the master node
~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml
# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.27.1/deploy/static/mandatory.yaml
namespace/ingress-nginx created
configmap/nginx-configuration created
configmap/tcp-services created
configmap/udp-services created
serviceaccount/nginx-ingress-serviceaccount created
clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
role.rbac.authorization.k8s.io/nginx-ingress-role created
rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
deployment.apps/nginx-ingress-controller created
limitrange/ingress-nginx created

# to remove everything again:
# kubectl delete -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml
```

2. All resources are created in the ingress-nginx namespace

```shell
# list namespaces
~]# kubectl get ns
NAME            STATUS   AGE
ingress-nginx   Active   3m46s

# nginx-ingress-controller is a Deployment-type controller
mainfests]# kubectl get deploy -n ingress-nginx
NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
nginx-ingress-controller   1/1     1            1           38m

# list the pods, services, deployments and replicasets created from the manifest
# (this output was captured after the Service from step 3 had already been created)
mainfests]# kubectl get all -n ingress-nginx
NAME                                            READY   STATUS    RESTARTS   AGE
pod/nginx-ingress-controller-7fcb6cffc5-lg8h2   1/1     Running   0          56m

NAME              TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/ingress   NodePort   10.101.146.55   <none>        80:32072/TCP,443:31396/TCP   50m

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-ingress-controller   1/1     1            1           56m

NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-ingress-controller-7fcb6cffc5   1         1         1       56m

# the Pod in this namespace is the nginx ingress controller itself
# (to follow its startup steps: basic]# kubectl describe pods nginx-ingress-controller-5bb8fb4bb6-q5lpg -n ingress-nginx)
~]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS    RESTARTS   AGE
nginx-ingress-controller-948ffd8cc-gdxrz   1/1     Running   0          44m   # cannot receive traffic from outside the cluster yet

# show the labels; they serve as the label selector of the Service created below
~]# kubectl get pods -n ingress-nginx --show-labels
NAME                                       READY   STATUS    RESTARTS   AGE   LABELS
nginx-ingress-controller-948ffd8cc-gdxrz   1/1     Running   0          50m   app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx,pod-template-hash=948ffd8cc
```

3. Manually write a NodePort-type Service and associate it with nginx-ingress-controller

```shell
# define a NodePort-type Service resource
~]# mkdir mainfests/ingress
ingress]# cat ingress-nginx-svc.yaml
```

```yaml
# API version (core group) of the Service resource
apiVersion: v1
# resource type: Service
kind: Service
# metadata
metadata:
  # Service name
  name: ingress
  # must be in the same namespace as the nginx-ingress-controller Pod
  namespace: ingress-nginx
# Service spec
spec:
  # label selector; only used to match the labels of the nginx-ingress-controller Pod
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  # ports
  ports:
    # name of this port
    - name: http
      # Service port mapped to the backend Pods' port (targetPort defaults to the same value)
      port: 80
      #nodePort: 30080
    - name: https
      port: 443
      #nodePort: 30443
  # Service type: NodePort
  type: NodePort
```

4. Create the Service declaratively

```shell
ingress]# kubectl apply -f ingress-nginx-svc.yaml
service/ingress created
```

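5. Inspect the Service

```shell
ingress]# kubectl get svc -n ingress-nginx
NAME      TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress   NodePort   10.2.100.124   <none>        80:48420/TCP,443:49572/TCP   51s   # 30080/30443 when nodePort is set
```

- Ports such as 48420 lie outside the default NodePort range (30000-32767). This cluster's kube-apiserver was evidently started with a wider --service-node-port-range; on a default cluster, randomly assigned node ports fall within 30000-32767.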

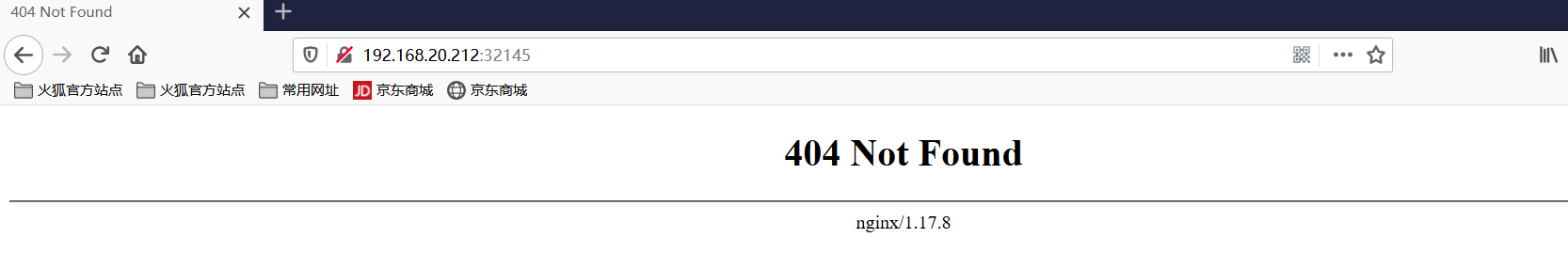

6. Test access through the address of any node in the cluster plus the mapped user-facing port

```shell
ingress]# curl 192.168.20.177:48420   # any node IP + NodePort reaches nginx-ingress-controller
nginx
```

- For convenience, you can pin the node ports that the Service exposes for the Ingress controller:

```shell
mainfests]# cat ingress-nginx-scv.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress
  namespace: ingress-nginx
spec:
  # label selector; only used to match the labels of the nginx-ingress-controller Pod
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  # ports
  ports:
    # name of this port
    - name: http
      # Service port mapped to the backend Pods' port
      port: 80
      nodePort: 30080   # make sure this port is not already in use
    - name: https
      port: 443
      nodePort: 30443
  # Service type: NodePort
  type: NodePort
```
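With port 443 exposed (nodePort 30443 above), an Ingress can also terminate HTTPS through the tls field mentioned in 1.5. This is a minimal sketch, assuming:
- a TLS Secret named myapp-tls was created beforehand, for example with `kubectl create secret tls myapp-tls --cert=tls.crt --key=tls.key` (the certificate and key file names are placeholders);
- the test Service from the 1.5 example is reused as the backend.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-tls-ingress        # hypothetical name
spec:
  ingressClassName: nginx        # assumes an IngressClass named "nginx"
  tls:
  - hosts:
    - myapp.magedu.com           # must match the host in the rules below
    secretName: myapp-tls        # hypothetical kubernetes.io/tls Secret in the same namespace
  rules:
  - host: myapp.magedu.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: test
            port:
              number: 80
```

Clients would then use https://myapp.magedu.com:30443/, with the name resolving to a node IP. TLS terminates at the controller, which talks plain HTTP to the Pods on port 80.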

3.2 Method Two: An Ingress Controller Using ingress-nginx as the Scheduler, Deployed as a DaemonSet with a nodeSelector (so it runs only on specific nodes) and hostNetwork (so the Pod uses the host's network) (online nginx-ingress-controller → hostNetwork → ingress)

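Modify the ingress controller deployment file:

```shell
$ vim mandatory.yaml
```

```yaml
kind: DaemonSet          # change the controller type to DaemonSet
spec:
  # replicas: 1          # remove replicas (a DaemonSet has no replicas field)
  template:
    spec:
      hostNetwork: true  # use the host's network namespace
      nodeSelector:      # change the node selection
        type: "ingress"
```

Label the nodes that should run the ingress controller:

```shell
$ kubectl label nodes server6 type=ingress
```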

----------------------------------------------------------

1. Download the manifest locally and edit it:

```shell
[root@k8s ingress]# cat mandatory-hostNetwork.yaml
```

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  # replicas: 1        # removed: a DaemonSet runs one Pod per matching node
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # share the node's network namespace: nginx listens on the node's ports 80/443 directly
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
        type: "ingress"    # only nodes labelled type=ingress run the controller
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:master
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
    - min:
        memory: 90Mi
        cpu: 100m
      type: Container
```
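Once the DaemonSet Pod runs on the node labelled type=ingress, nginx listens on that node's ports 80 and 443 directly, so no NodePort Service is needed. Below is a minimal test Ingress sketch. It assumes the test Service from the 1.5 example exists in the same namespace. To match the era of this manifest, it uses the legacy networking.k8s.io/v1beta1 API (served up to Kubernetes 1.21) and the kubernetes.io/ingress.class annotation. It also omits host so that no DNS setup is required.

```yaml
apiVersion: networking.k8s.io/v1beta1   # era-matched with this manifest; removed in Kubernetes 1.22
kind: Ingress
metadata:
  name: hostnet-test                    # hypothetical name
  annotations:
    kubernetes.io/ingress.class: "nginx"   # legacy way of selecting the nginx controller
spec:
  rules:
  - http:                               # no host: matches requests for any host name or a bare node IP
      paths:
      - path: /
        backend:
          serviceName: test             # Service from the 1.5 example (assumed to exist)
          servicePort: 80
```

With this applied, `curl http://<ingress-node-ip>/` against the labelled node should be proxied to the test Pods on port 80, with no NodePort involved.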
