Prometheus (6): Monitoring Common Services

Monitoring common services

- 1.tomcat

- 2.redis

- 3.mysql

- 4.nginx

- 5.mongodb

Monitoring Tomcat with Prometheus

tomcat_exporter address

Run the following steps on the k8s-master node.

(1) Build the Tomcat image as follows:

    mkdir /root/tomcat_image

Upload the war and jar packages mentioned above into this directory, then create the Dockerfile:

    cat Dockerfile
    FROM tomcat
    ADD metrics.war /usr/local/tomcat/webapps/
    ADD simpleclient-0.8.0.jar /usr/local/tomcat/lib/
    ADD simpleclient_common-0.8.0.jar /usr/local/tomcat/lib/
    ADD simpleclient_hotspot-0.8.0.jar /usr/local/tomcat/lib/
    ADD simpleclient_servlet-0.8.0.jar /usr/local/tomcat/lib/
    ADD tomcat_exporter_client-0.0.12.jar /usr/local/tomcat/lib/

Build the image, push it, and pull it on the node:

    docker build -t='xianchao/tomcat_prometheus:v1' .
    docker login
    # username: xianchao
    # password: 1989317**
    docker push xianchao/tomcat_prometheus:v1    # push the image to the hub registry
    docker pull xianchao/tomcat_prometheus:v1    # pull the image on the k8s node

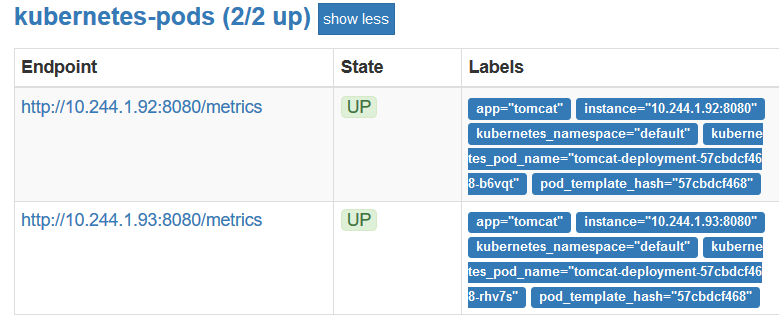

(2) Create a Tomcat instance from the image built above:

    cat deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: tomcat-deployment
      namespace: default
    spec:
      selector:
        matchLabels:
          app: tomcat
      replicas: 2 # tells deployment to run 2 pods matching the template
      template: # create pods using pod definition in this template
        metadata:
          labels:
            app: tomcat
          annotations:
            prometheus.io/scrape: 'true'
        spec:
          containers:
          - name: tomcat
            image: xianchao/tomcat_prometheus:v1
            ports:
            - containerPort: 8080
            securityContext:
              privileged: true

    kubectl apply -f deploy.yaml

Create a Service. This step is optional:

    cat tomcat-service.yaml
    kind: Service # Service resource
    apiVersion: v1
    metadata:
      # annotations:
      #   prometheus.io/scrape: 'true'
      name: tomcat-service
    spec:
      selector:
        app: tomcat
      ports:
      - nodePort: 31360
        port: 80
        protocol: TCP
        targetPort: 8080
      type: NodePort

    kubectl apply -f tomcat-service.yaml

The Tomcat pods now show up as monitored targets in Prometheus.
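Before looking in Prometheus, it can help to fetch the metrics endpoint by hand and confirm that samples come back. The sketch below is only an illustration. It assumes that metrics.war serves Prometheus text format under /metrics/ and that NODE_IP (a placeholder) is a node reachable on the NodePort 31360. count_samples is a hypothetical helper, not part of tomcat_exporter.

```shell
# Count the sample lines (everything that is not a comment or a blank line)
# in Prometheus text-format output read from stdin.
count_samples() {
  grep -v -e '^#' -e '^[[:space:]]*$' | wc -l | tr -d ' '
}

# Hypothetical usage against the NodePort Service (NODE_IP is a placeholder,
# and the /metrics/ path is an assumption about how metrics.war is mounted):
#   curl -s "http://${NODE_IP}:31360/metrics/" | count_samples
```

A result of 0 would suggest that the war or the simpleclient jars were not picked up by Tomcat.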

Monitoring Redis

Deploy a Redis exporter, which exposes the Redis metrics for Prometheus to scrape.

Add the prometheus.io/scrape=true annotation to the Redis we deployed earlier:

    cat redis.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis
      template:
        metadata:
          labels:
            app: redis
        spec:
          containers:
          - name: redis
            image: redis:4
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            ports:
            - containerPort: 6379
          - name: redis-exporter
            image: oliver006/redis_exporter:latest
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
            ports:
            - containerPort: 9121
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: redis
      namespace: kube-system
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9121"
    spec:
      selector:
        app: redis
      ports:
      - name: redis
        port: 6379
        targetPort: 6379
      - name: prom
        port: 9121
        targetPort: 9121

The redis Pod contains two containers: the main Redis application and redis_exporter. The metrics endpoint is served by redis-exporter on port 9121, not by Redis itself, so we add the prometheus.io/port=9121 annotation. With it in place, Prometheus discovers Redis automatically.

Next, apply the updated Redis Service configuration:

    [root@abcdocker prometheus]# kubectl apply -f redis.yaml
    deployment.extensions/redis unchanged
    service/redis unchanged

Import the Redis JSON file, Redis Cluster-1571393212519.json, into Grafana to view the Redis monitoring dashboard.
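The port rewrite behind the prometheus.io/port annotation can be illustrated with sed. This assumes the Prometheus configuration from earlier in this series uses the relabel rule commonly found in Kubernetes service-discovery examples: it joins the discovered address and the annotation value with ';' and rewrites them with the regex ([^:]+)(?::\d+)?;(\d+) and the replacement $1:$2. That rule is not shown in this post, and rewrite_address is a hypothetical helper that mimics it with an equivalent POSIX extended regex.

```shell
# Mimic the assumed relabel rule:
#   $1: discovered address (host or host:port)
#   $2: value of the prometheus.io/port annotation
# The discovered port, if any, is dropped and replaced by the annotated port.
rewrite_address() {
  printf '%s;%s\n' "$1" "$2" \
    | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

# The address is discovered on the Redis port but scraped on the exporter port.
rewrite_address 10.244.1.7:6379 9121   # prints 10.244.1.7:9121
```

The IP 10.244.1.7 is made up for the example. Only the port part changes, which is why the scrape reaches the exporter on 9121 rather than Redis on 6379.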

Monitoring MySQL with Prometheus

    [root@xianchaomaster1 prometheus]# yum install mysql -y
    [root@xianchaomaster1 prometheus]# yum install mariadb -y
    tar -xvf mysqld_exporter-0.10.0.linux-amd64.tar.gz
    cd mysqld_exporter-0.10.0.linux-amd64
    cp -ar mysqld_exporter /usr/local/bin/
    chmod +x /usr/local/bin/mysqld_exporter

Log in to MySQL, create an account for mysql_exporter, and grant it privileges

    # Create the database user.
    mysql> CREATE USER 'mysql_exporter'@'localhost' IDENTIFIED BY 'Abcdef123!.';
    # Grant privileges to the mysql_exporter user.
    mysql> GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'mysql_exporter'@'localhost';
    mysql> exit

Create a MySQL client configuration file so the exporter can connect to the database at runtime without a password prompt:

    cd mysqld_exporter-0.10.0.linux-amd64
    cat my.cnf
    [client]
    user=mysql_exporter
    password=Abcdef123!.

Start mysqld_exporter

nohup ./mysqld_exporter --config.my-cnf=./my.cnf &

mysqld_exporter listens on port 9104.

Edit prometheus-alertmanager-cfg.yaml and add the following job:

    - job_name: 'mysql'
      static_configs:
      - targets: ['192.168.40.180:9104']
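Before wiring the exporter into Prometheus, you can check that it can actually reach the database. mysqld_exporter publishes a mysql_up gauge that is 1 when the connection works. The helper below, mysql_up_value, is a hypothetical name for a small awk filter, and the sketch assumes the exporter is queried on the same host at the default /metrics path.

```shell
# Read exporter output from stdin and print the value of the mysql_up gauge.
# HELP/TYPE comment lines are skipped because their first field is "#".
mysql_up_value() {
  awk '$1 == "mysql_up" { print $2; exit }'
}

# Hypothetical usage on the host running the exporter:
#   curl -s http://localhost:9104/metrics | mysql_up_value
```

A value of 0 usually points at the credentials in my.cnf or at the privileges granted to the mysql_exporter user.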

kubectl apply -f prometheus-alertmanager-cfg.yaml

kubectl delete -f prometheus-alertmanager-deploy.yaml

kubectl apply -f prometheus-alertmanager-deploy.yaml

Import the MySQL monitoring dashboard into Grafana

mysql-overview_rev5.json

Monitoring Nginx with Prometheus

Download the nginx-module-vts module

unzip nginx-module-vts-master.zip

mv nginx-module-vts-master /usr/local/

Install Nginx

    tar zxvf nginx-1.15.7.tar.gz
    cd nginx-1.15.7
    ./configure --prefix=/usr/local/nginx --with-http_gzip_static_module --with-http_stub_status_module --with-http_ssl_module --with-pcre --with-file-aio --with-http_realip_module --add-module=/usr/local/nginx-module-vts-master
    make && make install

Edit the Nginx configuration file

    vim /usr/local/nginx/conf/nginx.conf

Add the following inside the server block:

    location /status {
        vhost_traffic_status_display;
        vhost_traffic_status_display_format html;
    }

Add the following inside the http block:

    vhost_traffic_status_zone;

Check that the configuration file is valid:

    /usr/local/nginx/sbin/nginx -t

If the check passes, start Nginx:

    /usr/local/nginx/sbin/nginx

Open 192.168.124.16/status to see the Nginx monitoring data.

Install nginx-vts-exporter

    unzip nginx-vts-exporter-0.5.zip
    mv nginx-vts-exporter-0.5 /usr/local/
    chmod +x /usr/local/nginx-vts-exporter-0.5/bin/nginx-vts-exporter
    cd /usr/local/nginx-vts-exporter-0.5/bin
    nohup ./nginx-vts-exporter -nginx.scrape_uri http://192.168.124.16/status/format/json &

Note: the IP address in http://192.168.124.16/status/format/json is the IP address of Nginx.

nginx-vts-exporter listens on port 9913.

Edit prometheus-cfg.yaml

Add the following job:

    - job_name: 'nginx'
      scrape_interval: 5s
      static_configs:
      - targets: ['192.168.124.16:9913']

    kubectl apply -f prometheus-cfg.yaml
    kubectl delete -f prometheus-deploy.yaml
    kubectl apply -f prometheus-deploy.yaml

Note: the IP address in - targets: ['192.168.124.16:9913'] is the IP address of the machine where nginx-vts-exporter runs.

Import the Nginx dashboard in the Grafana UI

Monitoring MongoDB with Prometheus

Pull the mongodb and mongodb_exporter images

docker pull mongo

docker pull eses/mongodb_exporter

Start MongoDB

    mkdir -p /data/db
    docker run -d --name mongodb -p 27017:27017 -v /data/db:/data/db mongo

Create a MongoDB account and password for mongodb_exporter to connect with.

(1) Log in to the container:

    docker exec -it 24f910190790ade396844cef61cc66412b7af2108494742922c6157c5b236aac mongo admin

(2) Set the password:

    use admin
    db.createUser({ user: 'admin', pwd: 'admin111111', roles: [ { role: "userAdminAnyDatabase", db: "admin" } ] })
    exit

Start mongodb_exporter

    docker run -d --name mongodb_exporter -p 30056:9104 elarasu/mongodb_exporter --mongodb.uri mongodb://admin:admin111111@192.168.124.16:27017

Note: admin:admin111111 is the username and password set after starting MongoDB above. The part after @ is the IP address and port of MongoDB.

Edit prometheus-cfg.yaml

Add a job:

    - job_name: 'mongodb'
      scrape_interval: 5s
      static_configs:
      - targets: ['192.168.124.16:30056']

    kubectl apply -f prometheus-cfg.yaml
    kubectl delete -f prometheus-deploy.yaml
    kubectl apply -f prometheus-deploy.yaml
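The nginx and mongodb jobs share the same shape: a job name, a 5s scrape interval, and one static target pointing at the exporter. As a minimal sketch, a small shell function can print that fragment for any exporter address. static_job is a hypothetical helper, not a Prometheus tool, and the output still has to be pasted under scrape_configs in prometheus-cfg.yaml with matching indentation.

```shell
# Print a static scrape job in the same shape as the jobs in this post.
#   $1: job name
#   $2: exporter address as host:port
static_job() {
  printf '%s\n' \
    "- job_name: '$1'" \
    "  scrape_interval: 5s" \
    "  static_configs:" \
    "  - targets: ['$2']"
}

# Example: regenerate the mongodb job.
static_job mongodb 192.168.124.16:30056
```

The target is always the address where the exporter listens (here the published host port 30056), not the address of the monitored service itself.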