[Paddle Study Notes][05][Generative Adversarial Networks]

Overview:

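A Generative Adversarial Network [1] (GAN) is an unsupervised learning method in which two neural networks learn by competing against each other: a generator produces fake samples, and a discriminator tries to tell them apart from real ones. This example uses a DCGAN network trained on the MNIST dataset to generate handwritten digits.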
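For reference, the standard GAN formulation treats training as a two-player minimax game. Below, $D(\cdot)$ is the discriminator's probability that an image is real (the sigmoid of its logit), $p_{\text{data}}$ is the data distribution and $p_z$ is the noise prior (uniform on $[-1, 1]$ in the code below). The first line is the minimax objective. The second is the discriminator loss the code minimizes (binary cross-entropy with label 1 for real and 0 for fake images, summed over the two batches). The third is the non-saturating generator loss, which is what feeding generated images to D with label 1 (`g_label`) corresponds to, rather than minimizing $\log(1 - D(G(z)))$ directly:

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

\mathcal{L}_D = -\,\mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  - \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

\mathcal{L}_G = -\,\mathbb{E}_{z \sim p_z}\left[\log D(G(z))\right]
```

Minimizing $\mathcal{L}_D$ is the same as maximizing $V$ over $D$; $\mathcal{L}_G$ keeps useful gradients early in training, when $D$ easily rejects generated images.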

Code:

```python
import paddle
import paddle.fluid as fluid
import numpy as np
import math
import matplotlib.pyplot as plt
%matplotlib inline

# Global settings
use_cuda = 1       # whether to use the GPU
batch_size = 128   # number of samples per batch
epoch_num = 20     # number of training epochs
noise_size = 100   # dimension of the noise vector


# Discriminator network
def D(image):
    # Input image: N*C*H*W = N*1*28*28; output size H/W = (H/W - F + 2*P)/S + 1; 4 layers
    image = fluid.layers.reshape(x=image, shape=[-1, 1, 28, 28])
    conv_pool_1 = fluid.nets.simple_img_conv_pool(  # output: N*64*12*12
        input=image, num_filters=64, filter_size=5, act='leaky_relu',
        pool_size=2, pool_stride=2,
        param_attr='conv_pool_1_w', bias_attr='conv_pool_1_b')
    conv_pool_2 = fluid.nets.simple_img_conv_pool(  # output: N*128*4*4
        input=conv_pool_1, num_filters=128, filter_size=5, act='leaky_relu',
        pool_size=2, pool_stride=2,
        param_attr='conv_pool_2_w', bias_attr='conv_pool_2_b')
    bn1 = fluid.layers.batch_norm(
        input=conv_pool_2, act='leaky_relu', name='bn1',
        param_attr='bn1_w', bias_attr='bn1_b',
        moving_mean_name='bn1_m', moving_variance_name='bn1_v')
    fc1 = fluid.layers.fc(  # output: N*1024
        input=bn1, size=1024, act=None,
        param_attr='fc1_w', bias_attr='fc1_b')
    bn2 = fluid.layers.batch_norm(
        input=fc1, act='leaky_relu', name='bn2',
        param_attr='bn2_w', bias_attr='bn2_b',
        moving_mean_name='bn2_m', moving_variance_name='bn2_v')
    # Output logit: label 1 = real image, 0 = fake image.
    # No activation here: sigmoid_cross_entropy_with_logits applies the sigmoid itself.
    logit = fluid.layers.fc(  # output: N*1
        input=bn2, size=1, act=None,
        param_attr='fc2_w', bias_attr='fc2_b')
    return logit


# Generator network
def G(noise):
    # Input noise: N*100; 4 layers
    fc3 = fluid.layers.fc(  # output: N*2048
        input=noise, size=2048, act=None,
        param_attr='fc3_w', bias_attr='fc3_b')
    bn3 = fluid.layers.batch_norm(
        input=fc3, act='relu', name='bn3',
        param_attr='bn3_w', bias_attr='bn3_b',
        moving_mean_name='bn3_m', moving_variance_name='bn3_v')
    fc4 = fluid.layers.fc(  # output: N*6272 (= 128*7*7)
        input=bn3, size=6272, act=None,
        param_attr='fc4_w', bias_attr='fc4_b')
    bn5 = fluid.layers.batch_norm(
        input=fc4, act='relu', name='bn5',
        param_attr='bn5_w', bias_attr='bn5_b',
        moving_mean_name='bn5_m', moving_variance_name='bn5_v')
    reshape = fluid.layers.reshape(x=bn5, shape=[-1, 128, 7, 7])
    deconv1 = fluid.layers.conv2d_transpose(  # output: N*128*14*14
        input=reshape, output_size=[14, 14], num_filters=128,
        filter_size=5, stride=2, padding=2, dilation=1, act='relu',
        param_attr='deconv1_w', bias_attr='deconv1_b')
    deconv2 = fluid.layers.conv2d_transpose(  # output: N*1*28*28
        input=deconv1, output_size=[28, 28], num_filters=1,
        filter_size=5, stride=2, padding=2, dilation=1, act='tanh',
        param_attr='deconv2_w', bias_attr='deconv2_b')
    # Output image, flattened to N*784 with values in [-1, 1]
    image = fluid.layers.reshape(x=deconv2, shape=[-1, 784])
    return image


# Display a batch of images as a square grid
def show_image(total_image):
    n = int(math.ceil(math.sqrt(total_image.shape[0])))
    total_image = total_image.reshape((n, n, 28, 28)).transpose((0, 2, 1, 3)).reshape((n*28, n*28))
    plt.figure(figsize=(8, 8))
    plt.axis('off')
    plt.imshow(total_image, cmap='Greys_r', vmin=-1, vmax=1)
    plt.show()


# Train the model
def train():
    # Read data: shuffled MNIST training set, in batches
    train_reader = paddle.batch(
        paddle.reader.shuffle(paddle.dataset.mnist.train(), buf_size=60000),
        batch_size=batch_size)

    # Program that trains the discriminator
    d_program = fluid.Program()
    with fluid.program_guard(d_program):
        d_image = fluid.data(name='image', shape=[None, 784], dtype='float32')  # input images: N*784, reshaped to N*1*28*28 in D
        d_label = fluid.data(name='label', shape=[None, 1], dtype='float32')    # image labels: N*1
        d_logit = D(d_image)  # classify the images
        d_loss = fluid.layers.sigmoid_cross_entropy_with_logits(x=d_logit, label=d_label)
        d_avg_loss = fluid.layers.mean(d_loss)

    # Program that trains the generator (G followed by D)
    dg_program = fluid.Program()
    with fluid.program_guard(dg_program):
        g_noise = fluid.data(name='noise', shape=[None, noise_size], dtype='float32')  # input noise: N*100
        noise_shape = fluid.layers.shape(g_noise)
        g_label = fluid.layers.fill_constant(
            value=1.0, shape=[noise_shape[0], 1], dtype='float32')  # labels for generated images: N*1, all 1
        g_image = G(g_noise)  # generate images
        # Clone the generator-only part before D is appended
        g_program = dg_program.clone()
        g_program_test = dg_program.clone(for_test=True)
        dg_logit = D(g_image)  # classify the generated images
        dg_loss = fluid.layers.sigmoid_cross_entropy_with_logits(x=dg_logit, label=g_label)
        dg_avg_loss = fluid.layers.mean(dg_loss)

    # Optimizer: Adam
    optimizer = fluid.optimizer.Adam(learning_rate=0.0002)
    # Minimize the discriminator loss, updating only D's parameters
    d_parameters = [p.name for p in d_program.global_block().all_parameters()]
    optimizer.minimize(loss=d_avg_loss, parameter_list=d_parameters)
    # Minimize the generator loss, updating only G's parameters
    g_parameters = [p.name for p in g_program.global_block().all_parameters()]
    optimizer.minimize(loss=dg_avg_loss, parameter_list=g_parameters)

    # Set up the device and executor, then initialize parameters
    place = fluid.CUDAPlace(0) if use_cuda else fluid.CPUPlace()
    exe = fluid.Executor(place)
    exe.run(fluid.default_startup_program())

    # Training loop
    for epoch in range(epoch_num):
        for batch, train_data in enumerate(train_reader()):
            # Prepare a batch; skip the last incomplete one
            if len(train_data) != batch_size:
                continue
            real_images = np.array(list(map(lambda x: x[0], train_data))).reshape(-1, 784).astype('float32')
            real_labels = np.ones(shape=[real_images.shape[0], 1], dtype='float32')
            fake_noises = np.random.uniform(low=-1.0, high=1.0, size=[batch_size, noise_size]).astype('float32')
            fake_labels = np.zeros(shape=[real_images.shape[0], 1], dtype='float32')

            # Generate fake images
            fake_images = exe.run(
                program=g_program, feed={'noise': fake_noises}, fetch_list=[g_image])[0]

            # Train D: loss for classifying fake images as fake
            d_avg_loss_fake = exe.run(
                program=d_program, feed={'image': fake_images, 'label': fake_labels},
                fetch_list=[d_avg_loss])[0][0]
            # Train D: loss for classifying real images as real
            d_avg_loss_real = exe.run(
                program=d_program, feed={'image': real_images, 'label': real_labels},
                fetch_list=[d_avg_loss])[0][0]
            # Total discriminator loss
            d_avg_loss_n = d_avg_loss_fake + d_avg_loss_real

            # Train G: two generator steps per discriminator step
            for i in range(2):
                noise = np.random.uniform(low=-1.0, high=1.0, size=[batch_size, noise_size]).astype('float32')
                dg_avg_loss_n = exe.run(
                    program=dg_program, feed={'noise': noise},
                    fetch_list=[dg_avg_loss])[0][0]

            # Every 10 batches, show real and generated images side by side
            if batch % 10 == 0:
                noise = np.random.uniform(low=-1.0, high=1.0, size=[batch_size, noise_size]).astype('float32')
                generate_images = exe.run(
                    program=g_program_test, feed={'noise': noise},
                    fetch_list=[g_image])[0]
                total_image = np.concatenate([real_images, generate_images])
                print("Epoch: {0}, Batch: {1}, D AVG Loss: {2}, DG AVG Loss: {3}".format(
                    epoch, batch, d_avg_loss_n, dg_avg_loss_n))
                show_image(total_image)


# Entry point
if __name__ == "__main__":
    train()
```
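The shape comments in `D` and `G` follow the formula H/W = (H/W - F + 2*P)/S + 1. The pure-Python sketch below checks them; the helpers `conv_out`, `conv_transpose_min_out`, `discriminator_sizes` and `generator_sizes` are hypothetical and not part of Paddle. It assumes `simple_img_conv_pool` uses its default convolution stride 1 and padding 0, and, for the transposed convolutions, it assumes the rule from the `fluid.layers.conv2d_transpose` documentation as I understand it: an explicit `output_size` must lie in `[H', H' + stride)`, where H' = (H - 1)*S - 2*P + dilation*(F - 1) + 1.

```python
def conv_out(size, filter_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer: floor((H - F + 2P) / S) + 1."""
    return (size - filter_size + 2 * padding) // stride + 1


def conv_transpose_min_out(size, filter_size, stride=1, padding=0, dilation=1):
    """Smallest spatial output size a transposed convolution can produce."""
    return (size - 1) * stride - 2 * padding + dilation * (filter_size - 1) + 1


def discriminator_sizes(h=28):
    """Trace H/W through D's two conv(5x5) + max-pool(2x2, stride 2) blocks."""
    sizes = []
    for _ in range(2):
        h = conv_out(h, filter_size=5)            # conv: stride 1, no padding (assumed defaults)
        h = conv_out(h, filter_size=2, stride=2)  # pool: 2x2, stride 2
        sizes.append(h)
    return sizes


def generator_sizes(h=7, targets=(14, 28), filter_size=5, stride=2, padding=2):
    """Check that each requested output_size lies in [min_out, min_out + stride)."""
    sizes = []
    for target in targets:
        lo = conv_transpose_min_out(h, filter_size, stride, padding)
        if not lo <= target < lo + stride:
            raise ValueError(f"output_size {target} not reachable from {h}")
        sizes.append((lo, target))
        h = target
    return sizes


print(discriminator_sizes())  # [12, 4]
print(generator_sizes())      # [(13, 14), (27, 28)]
print(128 * 7 * 7)            # 6272, the size of fc4 before the reshape to N*128*7*7
```

With stride 2 and padding 2, a 5x5 transposed convolution reaches only 13 from 7 by default, which is why the code passes `output_size=[14, 14]` (and `[28, 28]`) explicitly.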

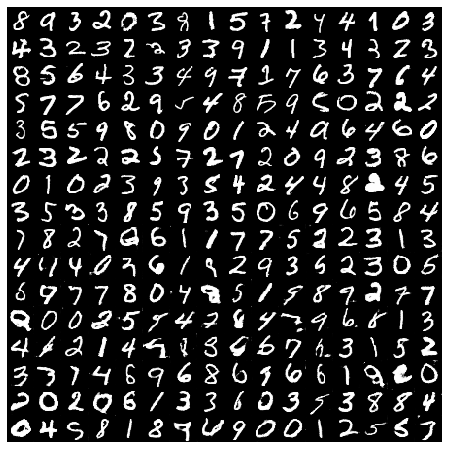

Results:

In the figure, the first 8 rows are digits from the training set and the last 8 rows are generated digits.
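The 8/8 split follows from the reshape/transpose in `show_image`: 128 real images followed by 128 generated ones give 256 images, tiled row by row into a 16x16 grid, so each group of 128 fills exactly 8 rows. A minimal NumPy sketch of that tiling, using a hypothetical `tile_images` helper that repeats the same reshape/transpose (and, like `show_image`, assumes the image count is a perfect square):

```python
import math

import numpy as np


def tile_images(images, size=28):
    """Tile N flattened size*size images into an n-by-n grid, filling it row by row."""
    n = int(math.ceil(math.sqrt(images.shape[0])))
    # (n, n, size, size) -> (n, size, n, size) -> (n*size, n*size), as in show_image
    return (images.reshape((n, n, size, size))
                  .transpose((0, 2, 1, 3))
                  .reshape((n * size, n * size)))


real = np.ones((128, 784), dtype='float32')    # stand-in for 128 real images
fake = -np.ones((128, 784), dtype='float32')   # stand-in for 128 generated images
grid = tile_images(np.concatenate([real, fake]))
print(grid.shape)                   # (448, 448)
print(np.all(grid[:8 * 28] == 1))   # True: the first 8 rows of tiles are the real images
print(np.all(grid[8 * 28:] == -1))  # True: the last 8 rows are the generated images
```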

Epoch: 19, Batch: 460, D AVG Loss: 1.1578519344329834, DG AVG Loss: 0.6636089086532593
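Both losses in this log come from `fluid.layers.sigmoid_cross_entropy_with_logits`. To help read the numbers, the NumPy sketch below reimplements the numerically stable form of that loss, max(x, 0) - x*z + log(1 + exp(-|x|)), as an illustration rather than Paddle's own implementation. At logit 0 (probability 0.5) each term equals ln 2, so a discriminator that cannot tell real from fake at all would report about 2 ln 2 for D AVG Loss (the code sums the fake and real terms) and about ln 2 for DG AVG Loss.

```python
import numpy as np


def sigmoid_cross_entropy_with_logits(x, label):
    """Elementwise -z*log(sigmoid(x)) - (1-z)*log(1-sigmoid(x)), in a numerically stable form."""
    x = np.asarray(x, dtype=np.float64)
    label = np.asarray(label, dtype=np.float64)
    return np.maximum(x, 0.0) - x * label + np.log1p(np.exp(-np.abs(x)))


# A discriminator that outputs logit 0 (probability 0.5) for every image
fake_term = sigmoid_cross_entropy_with_logits(0.0, 0.0)  # fake images, label 0
real_term = sigmoid_cross_entropy_with_logits(0.0, 1.0)  # real images, label 1
print(fake_term + real_term)  # ~1.386 (2 ln 2), reference value for D AVG Loss
print(real_term)              # ~0.693 (ln 2), reference value for DG AVG Loss (label 1)
```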

References:

https://www.paddlepaddle.org.cn/documentation/docs/zh/user_guides/cv_case/gan/README.cn.html

Category:

Paddle
