Sending requests with urllib

    from urllib import request

    url = "http://www.baidu.com"
    res = request.urlopen(url)  # get the response

    print(res.info())     # response headers
    print(res.getcode())  # status code
    print(res.geturl())   # URL the response was actually served from

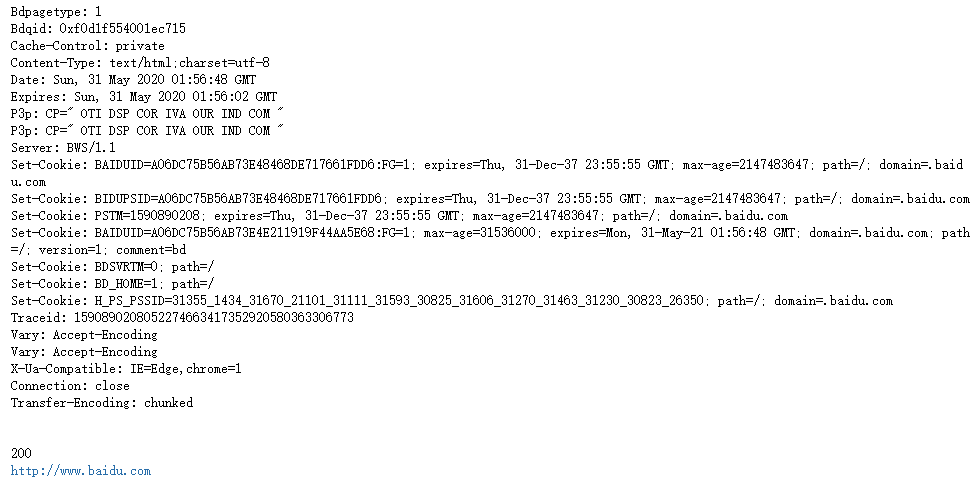

The output is:

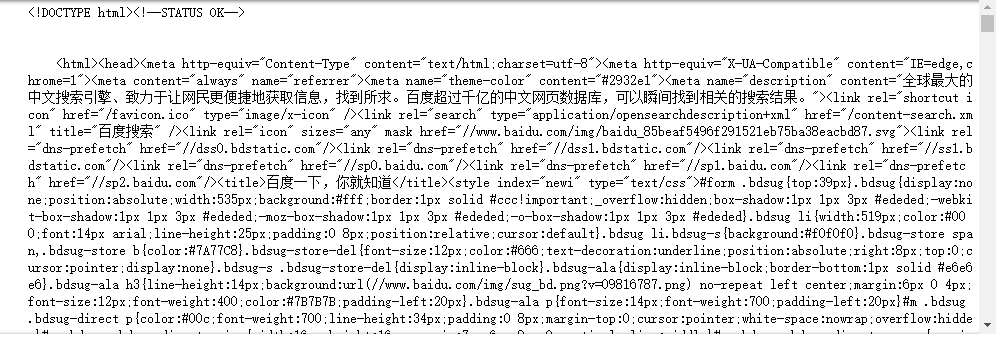

    from urllib import request

    url = "http://www.baidu.com"
    res = request.urlopen(url)  # get the response

    html = res.read()            # raw response body as bytes
    html = html.decode("utf-8")  # decode the bytes into text
    print(html)

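The approach above is the most basic one. It makes no allowance for anti-scraping mechanisms, so it stops working as soon as you point it at a different site.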
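One likely reason such a bare request gets rejected is the User-Agent that urllib sends when none is supplied. The sketch below only inspects the default opener and makes no network call. Whether a particular site actually filters on this header is an assumption, not something this snippet can confirm.

```python
from urllib import request

# build_opener() creates the same kind of opener that urlopen() falls back to
# when no custom opener has been installed.
opener = request.build_opener()

# Headers the opener attaches to every request unless the Request overrides them.
default_headers = dict(opener.addheaders)

# The User-agent value starts with "Python-urllib/", which a server can easily
# recognise as a script rather than a browser.
print(default_headers)
```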

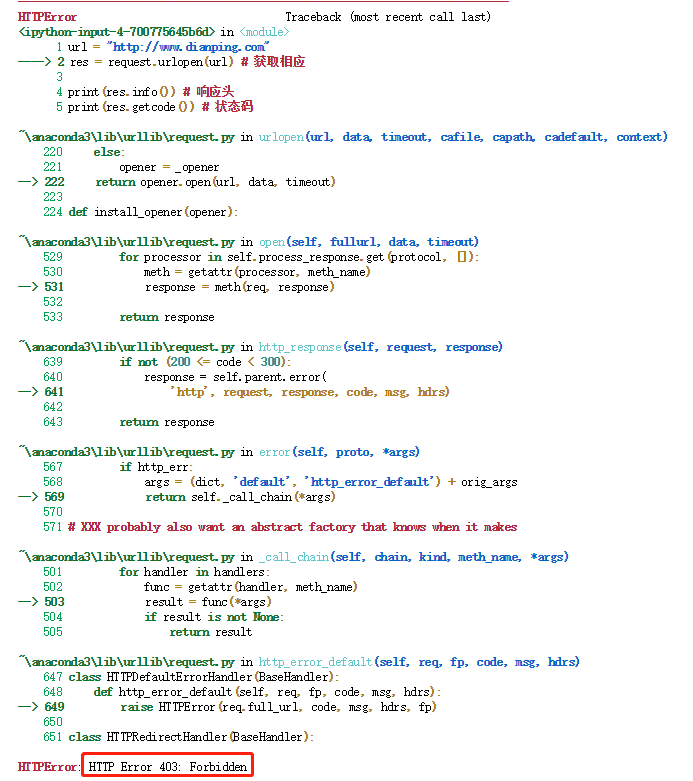

    from urllib import request

    url = "http://www.dianping.com"
    res = request.urlopen(url)  # get the response

    print(res.info())     # response headers
    print(res.getcode())  # status code
    print(res.geturl())   # URL the response was actually served from
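When a site refuses the request, urlopen raises an exception instead of returning a response. The following is a minimal sketch of catching it, assuming the refusal arrives as an HTTP error status. The helper name fetch_status and its opener parameter are hypothetical, introduced here only so the function can be exercised without network access.

```python
from urllib import error, request


def fetch_status(url, opener=request.urlopen):
    """Return (status_code, reason) for url instead of raising on failure.

    `opener` is a hypothetical hook: any callable with urlopen's signature.
    """
    try:
        with opener(url) as res:
            return res.getcode(), getattr(res, "reason", "")
    except error.HTTPError as e:
        # The server answered, but with an error status (for example 403).
        return e.code, e.reason
    except error.URLError as e:
        # No HTTP answer at all: DNS failure, refused connection, and so on.
        return None, str(e.reason)


# Example call (needs network access, so it is left commented out):
# print(fetch_status("http://www.dianping.com"))
```

HTTPError is a subclass of URLError, so it has to be caught first, otherwise the status code would be lost in the more general handler.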

The most basic countermeasure is to add a request header. Press F12 in the browser, open the Network tab, and look up the value under Request Headers.

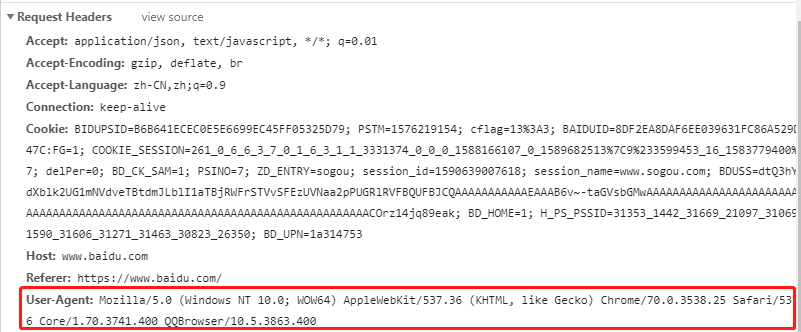

Once you have found it, put quotes around User-Agent and around the part after the colon, then store the pair in the header variable.
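Quoting each header by hand becomes tedious once more than one header is copied. As an optional convenience, the sketch below converts header text pasted from the browser into a dictionary. The function name parse_raw_headers is hypothetical, and the Accept-Language line is an illustrative value that does not come from the original post.

```python
def parse_raw_headers(raw):
    """Turn 'Name: value' lines copied from the browser into a dict."""
    headers = {}
    for line in raw.splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ":authority: ...".
        if not line or line.startswith(":"):
            continue
        # Split on the first colon only, since values may contain colons too.
        name, sep, value = line.partition(":")
        if sep:  # ignore lines that contain no colon at all
            headers[name.strip()] = value.strip()
    return headers


raw = """
User-Agent: Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400
Accept-Language: zh-CN,zh;q=0.9
"""

header = parse_raw_headers(raw)
print(header["User-Agent"])
```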

Then build the request with request.Request(url, headers=header) and send it with request.urlopen.

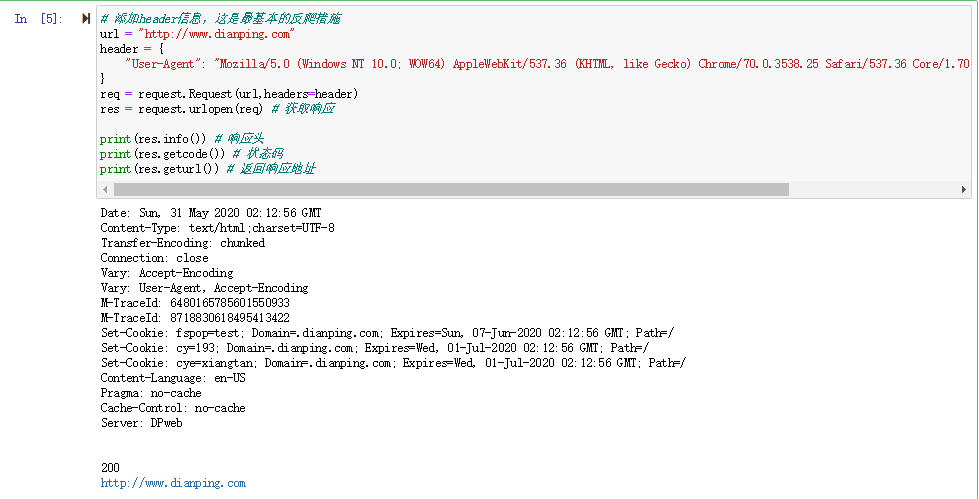

    from urllib import request

    # Add header information: the most basic way to get past anti-scraping checks
    url = "http://www.dianping.com"
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400"
    }
    req = request.Request(url, headers=header)
    res = request.urlopen(req)  # get the response

    print(res.info())     # response headers
    print(res.getcode())  # status code
    print(res.geturl())   # URL the response was actually served from
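Before sending anything, it can help to confirm that the header really ended up on the request object. This sketch only builds and inspects the Request and makes no network call. It reuses the URL and User-Agent string from the example above.

```python
from urllib import request

url = "http://www.dianping.com"
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400"
}
req = request.Request(url, headers=header)

# Request stores header names via str.capitalize(), so the lookup key
# is "User-agent" rather than "User-Agent".
print(req.get_header("User-agent"))
print(req.header_items())  # all headers currently set on the request
print(req.get_method())    # "GET", because no data was supplied
print(req.full_url)
```

Note the capitalisation detail: looking up "User-Agent" on the Request object returns nothing, even though that is the spelling used in the dictionary.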
