2023数据采集与融合技术实践作业2

作业①:

- 要求:

在中国气象网(http://www.weather.com.cn)给定城市集的 7日天气预报,并保存在数据库。 - Gitee链接:

https://gitee.com/cyosyo/crawl_project/blob/master/作业2/10.7爬取天气.py

实验代码:

from bs4 import UnicodeDammit # type:ignore

import urllib.request

import sqlite3

class WeatherDB:

def openDB(self):

self.con = sqlite3.connect("weathers.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute("""create table weathers (wCity \

varchar(16),wDate rchar(16),wWeather varchar(64),\

wTemp varchar(32),constraint pk_weather \

primary key (wCity,wDate))""")

except Exception:

self.cursor.execute("delete from weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

try:

self.cursor.execute("""insert into weathers \

(wCity,wDate,wWeather,wTemp) values

(?,?,?,?)""", (city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

try:

self.cursor.execute("select * from weathers")

rows = self.cursor.fetchall()

print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))

for row in rows:

print(

"%-16s%-16s%-32s%-16s" %

(row[0], row[1], row[2], row[3])

)

except Exception as err:

print(err)

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": """Mozilla/5.0 (X11; Linux x86_64) \

AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36 \

Edg/112.0.1722.54"""

}

self.cityCode = {

"北京": "101010100",

"上海": "101020100",

"广州": "101280101",

"深圳": "101280601",

"福州": "101230101",

"厦门": "101230201",

"莆田": "101230401",

"三明": "101230801",

"泉州": "101230501",

}

def forecastCity(self, city):

if city not in self.cityCode.keys():

print(city + " code cannot be found")

return

url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + \

".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

print("序号\t地区\t日期\t天气信息\t温度")

num = 0

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

temp = li.select('p[class="tem"] span')[0].text + \

"/" + li.select('p[class="tem"] i')[0].text

num += 1

print(num, city, date, weather, temp)

self.db.insert(city, date, weather, temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.closeDB()

ws = WeatherForecast()

ws.process(["福州", "厦门", "莆田", "三明", "泉州"])

print("完成")

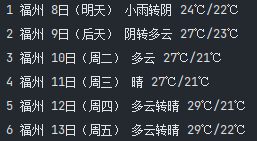

输出信息:

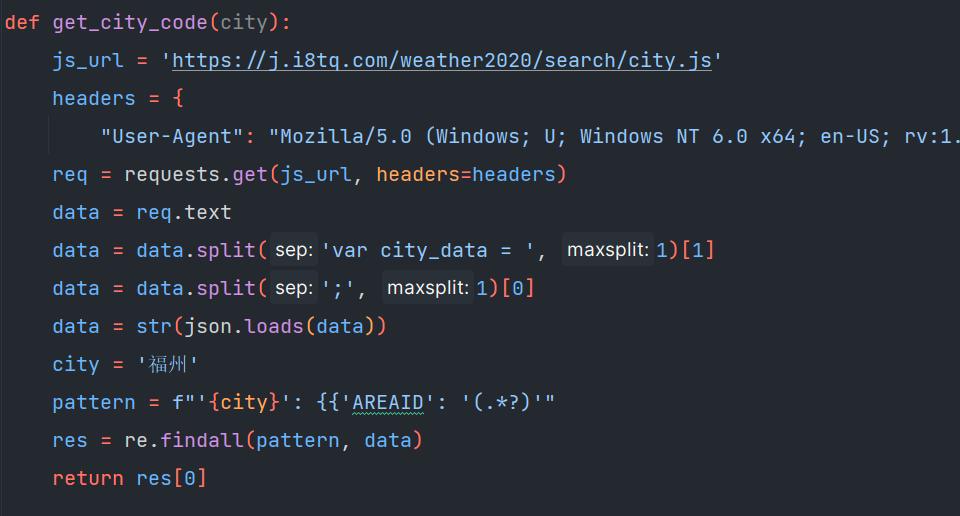

其实代码还有改进的空间,先通过js文件获得城市代号和名字的映射关系,然后再传入函数中进行爬取。如图所示是从主界面抓取的city.js,里面包含了数字和城市的映射关系。

所以改进后可以加入一段函数获取城市名字与id的对应关系:

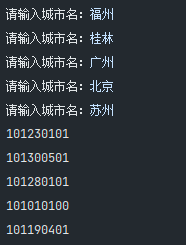

函数效果如下:

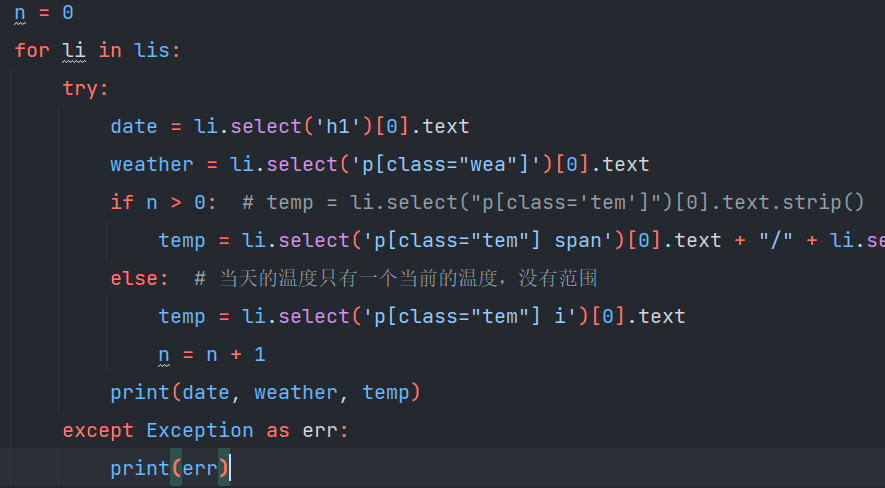

并且原来代码中,爬取今日天气会提示出错,所以还可以继续更改爬取逻辑为:

最终代码如下:

from bs4 import UnicodeDammit # type:ignore

import urllib.request

import sqlite3

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import re

import requests

import json

def get_city_code(city):

js_url = 'https://j.i8tq.com/weather2020/search/city.js'

headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

req = requests.get(js_url, headers=headers)

data = req.text

data = data.split('var city_data = ', 1)[1]

data = data.split(';', 1)[0]

data = str(json.loads(data))

pattern = f"'{city}': {{'AREAID': '(.*?)'"

res = re.findall(pattern, data)

return res[0]

class WeatherDB:

def openDB(self):

self.con = sqlite3.connect("weathers.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute("""create table weathers (wCity \

varchar(16),wDate rchar(16),wWeather varchar(64),\

wTemp varchar(32),constraint pk_weather \

primary key (wCity,wDate))""")

except Exception:

self.cursor.execute("delete from weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

try:

self.cursor.execute("""insert into weathers \

(wCity,wDate,wWeather,wTemp) values

(?,?,?,?)""", (city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

try:

self.cursor.execute("select * from weathers")

rows = self.cursor.fetchall()

print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))

for row in rows:

print(

"%-16s%-16s%-32s%-16s" %

(row[0], row[1], row[2], row[3])

)

except Exception as err:

print(err)

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": """Mozilla/5.0 (X11; Linux x86_64) \

AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36 \

Edg/112.0.1722.54"""

}

def forecastCity(self, city):

citycode=get_city_code(city)

url = "http://www.weather.com.cn/weather/" + citycode + \

".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

print("序号\t地区\t日期\t天气信息\t温度")

n = 0

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

if n > 0: # temp = li.select("p[class='tem']")[0].text.strip()

temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text

else: # 当天的温度只有一个当前的温度,没有范围

temp = li.select('p[class="tem"] i')[0].text

n = n + 1

print(date, weather, temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.closeDB()

ws = WeatherForecast()

n=input("请输入城市个数:")

li=[]

for i in range(int(n)):

city=input("请输入城市名:")

li.append(city)

ws.process(li)

print("完成")

心得体会:

通过这次学习,学到了sqlite的用法,以及如何通过改变url中的一些数字来映射不同的城市。

作业②:

- 要求:

用 requests 和 BeautifulSoup 库方法定向爬取股票相关信息,并存储在数据库中。 - 候选网站:

东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock - 技巧:

在谷歌浏览器中进入 F12 调试模式进行抓包,查找股票列表加载使用的 url,并分析 api 返回的值,并根据所要求的参数可适当更改api 的请求参数。根据 URL 可观察请求的参数 f1、f2 可获取不同的数值,根据情况可删减请求的参数。 - Gitee链接:

https://gitee.com/cyosyo/crawl_project/blob/master/作业2/10.7股票.py

实验代码:

import re

import json

import sqlite3

iid=1

for j in range(1,11):

url=f'http://41.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406279131482570521_1696658826817&pn={j}&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696658826818'

headers={"User-Agent":"Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

req=requests.get(url,headers=headers)

data=req.text

json_data = data.split("(", 1)[1].rsplit(")", 1)[0]

data_json=json.loads(json_data)

columns = {1:"代码",2:"名称",3:"最新价格",4:"涨跌额",5:"涨跌幅",6:"成交量",7:"成交额",8:"振幅",9:"最高",10:"最低",11:"今开",12:"昨收"}

con=sqlite3.connect('stock.db')

cur=con.cursor()

print("id 代码 名称 最新价格 涨跌额 涨跌幅 成交量 成交额 振幅 最高 最低 今开 昨收")

cur.execute('create table if not exists stock(id varchar(10),code varchar(10),name varchar(10),price varchar(10),change varchar(10),change_rate varchar(10),volume varchar(10),turnover varchar(10),amplitude varchar(10),high varchar(10),low varchar(10),open varchar(10),close varchar(10),primary key(id))')

for i in data_json['data']['diff']:

try:

cur.execute('insert into stock values(?,?,?,?,?,?,?,?,?,?,?,?,?)',(iid,i['f12'],i['f14'],i['f2'],i['f4'],i['f3'],i['f5'],i['f6'],i['f18'],i['f15'],i['f16'],i['f17'],i['f17']))

iid=iid+1

print(iid,i['f12'],i['f14'],i['f2'],i['f4'],i['f3'],i['f5'],i['f6'],i['f18'],i['f15'],i['f16'],i['f17'],i['f17'])

except Exception as err:

print(err)

con.commit()

con.close()

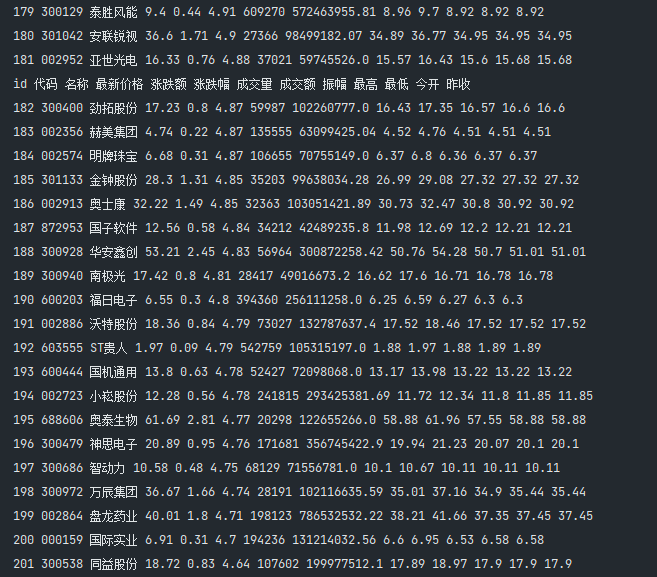

爬取10页后输出如下:

结果保存在db中

心得体会

这次学到了抓取js文件,提取传回的数据,然后再进行数据挖掘,对get和post的流程理解更加深刻。

作业③:

- 要求:

爬取中国大学2021主榜 https://www.shanghairanking.cn/rankings/bcur/2021 所有院校信息,并存储在数据库中,同时将浏览器 F12 调试分析的程录制 Gif 加入至博客中。 - Gitee链接:

https://gitee.com/cyosyo/crawl_project/blob/master/作业2/10.7大学爬取.py - 技巧:

分析该网站的发包情况,分析获取数据的 api

实验代码

import json

import sqlite3

import re

import sqlite3

url='https://www.shanghairanking.cn/_nuxt/static/1695811954/rankings/bcur/2021/payload.js'

html=requests.get(url).text.split('return',1)[1].split('}("",',1)[0]

name_pattern = r'univNameCn:"(.*?)"'

score_pattern = r'score:([\d.]+)'

iid=1

name_pattern=re.findall(name_pattern,html)

score_pattern=re.findall(score_pattern,html)

con=sqlite3.connect('university.db')

cur=con.cursor()

cur.execute('create table if not exists university(id varchar(10),name varchar(10),score varchar(10),primary key(id))')

try:

for i in range(len(name_pattern)):

print(iid,name_pattern[i],score_pattern[i])

cur.execute('insert into university values(?,?,?)',(iid,name_pattern[i],score_pattern[i]))

iid+=1

except Exception as err:

print(err)

con.commit()

con.close()

gif图片抓取过程:

输出信息:

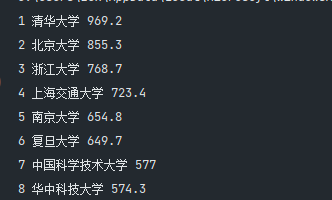

输出:

结果保存在university.db中:

心得体会

这次更加深刻的理解了要如何抓取返回的js文件并进行进一步的提取,相较于之前的作业,这次的理解更加深刻。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 【杭电多校比赛记录】2025“钉耙编程”中国大学生算法设计春季联赛(1)