爬虫大作业

1.选一个自己感兴趣的主题或网站。(所有同学不能雷同)

2.用python 编写爬虫程序,从网络上爬取相关主题的数据。

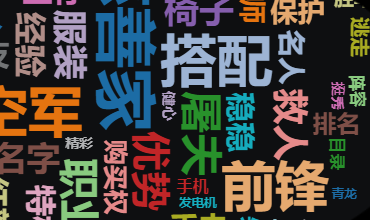

3.对爬了的数据进行文本分析,生成词云。

4.对文本分析结果进行解释说明。

5.写一篇完整的博客,描述上述实现过程、遇到的问题及解决办法、数据分析思想及结论。

6.最后提交爬取的全部数据、爬虫及数据分析源代码。

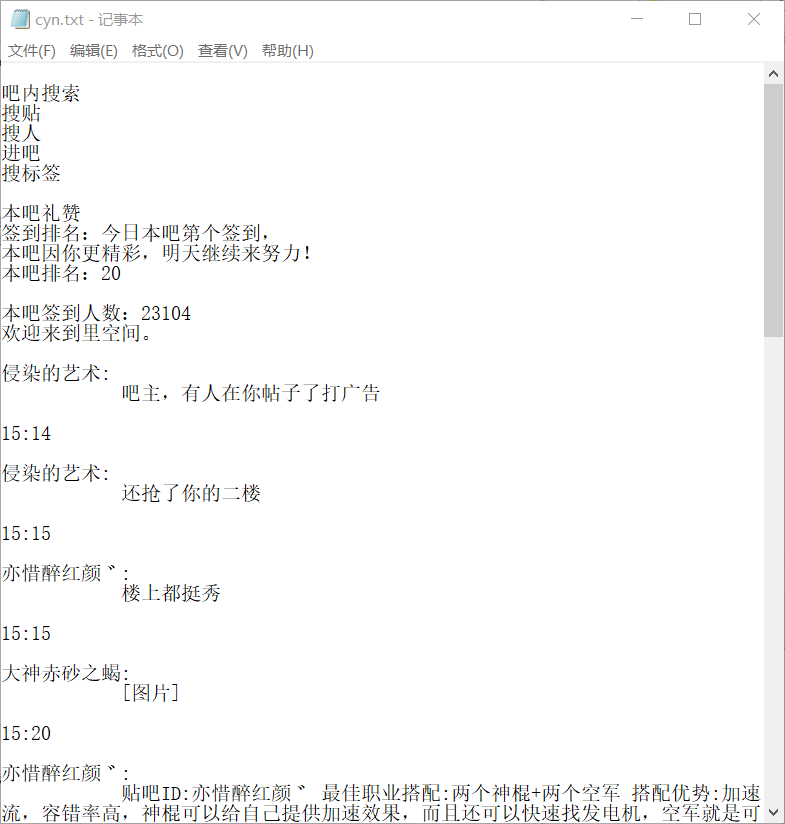

一、爬取第五人格百度贴吧内容

import requests from bs4 import BeautifulSoup import re import jieba

from wordcloud import WordCloud,ImageColorGenerator,STOPWORDS url = "https://tieba.baidu.com/f?kw=%B5%DA%CE%E5%C8%CB%B8%F1&fr=ala0&tpl=5" res = requests.get(url) res.encoding = "utf-8" soup = BeautifulSoup(res.text, "html.parser") output = open("cyn.txt", "a+", encoding="utf-8") for p in soup.find_all("p"): output.write(p.get_text() + "\n") output.close() txt = open("cyn.txt", "r", encoding="utf-8").read()

二、

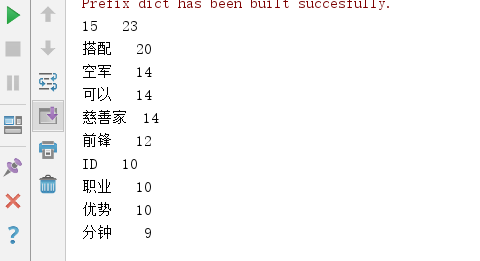

words = jieba.lcut(txt) ls = [] counts = {} for word in words: ls.append(word) if len(word) == 1: continue else: counts[word] = counts.get(word,0)+1 items = list(counts.items()) items.sort(key = lambda x:x[1], reverse = True) for i in range(10): word , count = items[i] print ("{:<5}{:>2}".format(word,count))

三、生成词云

abel_mask = np.array(Image.open("filepath")) text_from_file_with_apath = open('filepath').read() wordlist_after_jieba = jieba.cut(text_from_file_with_apath, cut_all = True) wl_space_split = " ".join(wordlist_after_jieba) my_wordcloud = WordCloud( background_color='white',

mask = abel_mask, max_words = 200, stopwords = STOPWORDS, font_path = C:/Users/Windows/fonts/simkai.ttf', max_font_size = 50, random_state = 30, scale=.5 ).generate(wl_space_split) image_colors = ImageColorGenerator(abel_mask) plt.imshow(my_wordcloud) plt.axis("off") plt.show()