EFK (Elasticsearch + Filebeat + Kibana): Collecting Kubernetes Container Logs

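I. Challenges of Log Collection in Kubernetes

Log collection in Kubernetes is considerably more complex than on traditional virtual or physical machines. The root cause is that Kubernetes hides failures in the underlying infrastructure, schedules resources at a finer granularity, and gives the layers above a stable but highly dynamic environment. Log collection therefore faces a richer, more dynamic environment and has more to take into account:

1. Short-lived Job workloads may run for only a few seconds from start to finish. How can log collection keep up in real time without losing data?

2. Kubernetes clusters are usually built from large nodes, each running 10 to 100+ containers. How can logs from 100+ containers be collected while consuming as few resources as possible?

3. In Kubernetes, applications are deployed declaratively as YAML, while log collection is still mostly configured through hand-written configuration files. How can log collection be deployed the Kubernetes way?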

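II. Log Collection Approaches in Kubernetes

1. Direct write from the application: integrate a log-collection SDK into the application and send logs straight to the server. This skips writing logs to disk and collecting them from files, needs no extra agent, and has the lowest system resource cost. However, because the business code is tightly bound to the log SDK, overall flexibility is very low, so it is generally used only when log volume is extremely high;

2. DaemonSet: run exactly one log agent on each node to collect all logs on that node. Its resource footprint is much smaller, but scalability and tenant isolation are limited, so it suits clusters with a single purpose or only a few workloads;

3. Sidecar: deploy a dedicated log agent in each Pod that collects logs for that one application only. It uses more resources but offers more flexibility and stronger multi-tenant isolation; it is recommended for large Kubernetes clusters or for clusters that serve multiple business teams as a PaaS platform.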
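The resource difference between the three approaches comes mostly from how many agent processes each one runs. The sketch below only counts agents for a hypothetical cluster; `agent_count` is an illustrative helper, it assumes one sidecar agent per Pod, and it ignores that a DaemonSet agent's own memory grows with the log volume of its node, so it is not a sizing tool.

```python
from typing import Sequence


def agent_count(pods_per_node: Sequence[int], mode: str) -> int:
    """Count the log-agent processes each collection mode runs in a cluster."""
    if mode == "sdk":
        return 0                   # direct write: the app ships logs itself, no agent
    if mode == "daemonset":
        return len(pods_per_node)  # one agent per node
    if mode == "sidecar":
        return sum(pods_per_node)  # one agent per Pod
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical cluster: 3 nodes running 30, 50 and 100 Pods.
cluster = [30, 50, 100]
for mode in ("sdk", "daemonset", "sidecar"):
    print(mode, agent_count(cluster, mode))
```

With 100+ Pods per node, the sidecar count grows with the number of Pods while the DaemonSet count stays at one per node, which is the trade-off the list above describes.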

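Summary:

1. Direct write is recommended when log volume is extremely high.

2. DaemonSet is generally used in small and medium-sized clusters.

3. Sidecar is recommended for very large clusters.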

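In practice, clusters usually use DaemonSet alone or a mix of DaemonSet and Sidecar. DaemonSet's advantage is high resource utilization, but every agent in the DaemonSet (Logtail, in Alibaba Cloud's setup) shares one global configuration, and a single agent can only support a limited number of configurations, so DaemonSet alone cannot cope with clusters that run many applications. Some practical guidelines:

- Make each configuration collect as much data of the same kind as possible, to reduce the number of configurations and the pressure on the DaemonSet;

- Give collection for core applications sufficient resources, for example by using the Sidecar approach;

- Manage collection configuration through CRDs wherever possible;

- Because each Sidecar agent has its own separate configuration, there is no limit on the number of configurations, which makes Sidecar well suited to very large clusters.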

III. A YAML File for a Single-Node Elasticsearch Deployed as a StatefulSet

```yaml
# cat elasticsearch.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: kube-system
  labels:
    k8s-app: elasticsearch
spec:
  serviceName: elasticsearch
  selector:
    matchLabels:
      k8s-app: elasticsearch
  template:
    metadata:
      labels:
        k8s-app: elasticsearch
    spec:
      initContainers:
      - name: busybox
        imagePullPolicy: IfNotPresent
        image: busybox:latest
        securityContext:
          privileged: true
        command: ["sh", "-c", "mkdir -p /usr/share/elasticsearch/data/logs;chown -R 1000:1000 /usr/share/elasticsearch/data"]
        volumeMounts:
        - name: elasticsearch-data
          mountPath: "/usr/share/elasticsearch/data"
      containers:
      - image: elasticsearch:7.10.1
        name: elasticsearch
        resources:
          limits:
            cpu: 1
            memory: 2Gi
          requests:
            cpu: 0.5
            memory: 500Mi
        env:
        - name: "discovery.type"
          value: "single-node"
        - name: ES_JAVA_OPTS
          # Keep -Xms equal to -Xmx and well below the 2Gi container limit so the JVM's
          # off-heap memory still fits; a 2g max heap inside a 2Gi limit risks OOM kills.
          value: "-Xms1g -Xmx1g"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-data
          mountPath: /usr/share/elasticsearch/data
  volumeClaimTemplates:
  - metadata:
      name: elasticsearch-data
    spec:
      storageClassName: "disk-sc"  # StorageClass of the original environment; adjust for your cluster
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1000Gi
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: kube-system
spec:
  clusterIP: None
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch
```
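When sizing `ES_JAVA_OPTS` against the container's memory limit, Elastic's guidance is to set `-Xms` and `-Xmx` to the same value and to no more than half of the available memory, leaving the rest for off-heap use and the filesystem cache. A small sketch of that arithmetic follows; the helper names are hypothetical, only the `Mi` and `Gi` suffixes are handled, and the 31 GiB cap is an assumed conservative bound below the roughly 32 GB compressed-oops threshold.

```python
# Hypothetical helpers: only the Mi and Gi Kubernetes suffixes are handled.
UNITS_MIB = {"Mi": 1, "Gi": 1024}


def to_mib(quantity: str) -> int:
    """Convert a Kubernetes memory quantity such as '2Gi' or '500Mi' to MiB."""
    for suffix, factor in UNITS_MIB.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported quantity: {quantity}")


def es_java_opts(limit: str, ratio: float = 0.5, cap_mib: int = 31 * 1024) -> str:
    """Build ES_JAVA_OPTS with -Xms == -Xmx, at most `ratio` of the container limit."""
    heap_mib = min(int(to_mib(limit) * ratio), cap_mib)
    return f"-Xms{heap_mib}m -Xmx{heap_mib}m"


print(es_java_opts("2Gi"))  # -Xms1024m -Xmx1024m
```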

IV. A YAML File for Kibana Deployed as a Deployment

```yaml
# cat kibana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-system
  labels:
    k8s-app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.10.1
        resources:
          limits:
            cpu: 1
            memory: 500Mi
          requests:
            cpu: 0.5
            memory: 200Mi
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch-0.elasticsearch.kube-system:9200
        - name: I18N_LOCALE
          value: zh-CN
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
--- # Expose Kibana through the cloud provider's load balancer
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-system
  annotations:
    # Tencent Cloud (qcloud) annotation: create an internal load balancer in this subnet; use your own subnet ID
    service.kubernetes.io/qcloud-loadbalancer-internal-subnetid: subnet-cadefsb
spec:
  ports:
  - name: kibana-pvc
    protocol: TCP
    port: 5601
    targetPort: 5601
  selector:
    k8s-app: kibana
  type: LoadBalancer
```
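Kibana's `ELASTICSEARCH_HOSTS` and Filebeat's `ELASTICSEARCH_HOST` both use `elasticsearch-0.elasticsearch.kube-system`. That name works because a StatefulSet Pod is named `<statefulset>-<ordinal>`, and its headless governing Service (`clusterIP: None`, referenced by `serviceName`) gives it the DNS record `<pod>.<service>.<namespace>.svc.<cluster-domain>`; the shorter form resolves through the Pod's DNS search path. A sketch of how the name is assembled; the function is hypothetical and `cluster.local` is only the common default cluster domain.

```python
def statefulset_pod_host(statefulset: str, ordinal: int, service: str,
                         namespace: str, cluster_domain: str = "cluster.local",
                         fqdn: bool = False) -> str:
    """Stable DNS name of one StatefulSet Pod behind its headless Service."""
    host = f"{statefulset}-{ordinal}.{service}.{namespace}"
    return f"{host}.svc.{cluster_domain}" if fqdn else host


host = statefulset_pod_host("elasticsearch", 0, "elasticsearch", "kube-system")
print(f"http://{host}:9200")  # http://elasticsearch-0.elasticsearch.kube-system:9200
```

Because the Pod name is stable, the URL survives Pod restarts; a second replica would simply be `elasticsearch-1` under the same Service.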

V. A YAML File for Filebeat Deployed as a DaemonSet

```yaml
# cat filebeat-kubernetes.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false

    filebeat.autodiscover:  # use Filebeat autodiscover
      providers:
        - type: kubernetes
          templates:
            - condition:
                equals:
                  kubernetes.namespace: prod  # collect logs from the prod namespace
              config:
                # Input type is `log`, not `docker` or `container`, because these application
                # log files are plain text rather than JSON.
                - type: log
                  containers.ids:
                    - "${data.kubernetes.container.id}"
                  paths:
                    # A broad glob such as "/var/lib/kubelet/pods/**/*info.log" would also pick up unrelated logs.
                    # The app writes into the emptyDir volume data-vol, which the kubelet keeps under
                    # /var/lib/kubelet/pods/<pod-uid>/volumes/, so /var/lib/docker/containers/ is not used here.
                    - "/var/lib/kubelet/pods/${data.kubernetes.pod.uid}/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/*info.log"
                  encoding: utf-8
                  scan_frequency: 1s  # how often to check for new files; the default is 10s
                  # When true, Filebeat starts reading each new file at its end instead of its beginning,
                  # so old logs are skipped the first time Filebeat runs; turn it off for later restarts.
                  tail_files: true
                  fields_under_root: true  # when true, the custom `fields` are stored at the top level of the event
                  fields:
                    type: "prod-info"
        - type: kubernetes
          templates:
            - condition:
                equals:
                  kubernetes.namespace: prod
              config:
                - type: log
                  containers.ids:
                    - "${data.kubernetes.container.id}"
                  paths:
                    - "/var/lib/kubelet/pods/${data.kubernetes.pod.uid}/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/*error.log"
                  encoding: utf-8
                  scan_frequency: 1s
                  tail_files: true
                  fields_under_root: true
                  fields:
                    type: "prod-error"
                  multiline.type: pattern
                  multiline.pattern: '^[[:space:]]+(at|\.{3})[[:space:]]+\b|^Caused by:'
                  multiline.negate: false
                  multiline.match: after
        - type: kubernetes
          templates:
            - condition:
                equals:
                  kubernetes.namespace: test
              config:
                - type: log
                  containers.ids:
                    - "${data.kubernetes.container.id}"
                  paths:
                    - "/var/lib/kubelet/pods/${data.kubernetes.pod.uid}/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/*info.log"
                  encoding: utf-8
                  scan_frequency: 1s
                  tail_files: true
                  fields_under_root: true
                  fields:
                    type: "test-info"
        - type: kubernetes
          templates:
            - condition:
                equals:
                  kubernetes.namespace: test
              config:
                - type: log
                  containers.ids:
                    - "${data.kubernetes.container.id}"
                  paths:
                    - "/var/lib/kubelet/pods/${data.kubernetes.pod.uid}/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/*error.log"
                  encoding: utf-8
                  scan_frequency: 1s
                  tail_files: true
                  fields_under_root: true
                  fields:
                    type: "test-error"
                  multiline.type: pattern
                  multiline.pattern: '^[[:space:]]+(at|\.{3})[[:space:]]+\b|^Caused by:'  # standard Java multiline pattern
                  multiline.negate: false
                  multiline.match: after

    setup.ilm.enabled: false

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      indices:
        - index: "k8s-test-info-%{+yyyy.MM.dd}"
          when.contains:
            type: "test-info"
        - index: "k8s-test-error-%{+yyyy.MM.dd}"
          when.contains:
            type: "test-error"
        - index: "k8s-prod-info-%{+yyyy.MM.dd}"
          when.contains:
            type: "prod-info"
        - index: "k8s-prod-error-%{+yyyy.MM.dd}"
          when.contains:
            type: "prod-error"

    # output.redis:  # send logs to Redis; Logstash then reads them and forwards them to Elasticsearch
    #   hosts: ["192.168.5.99:6379"]
    #   db: 0
    #   password: "password"
    #   key: "default_list"
    #   keys:
    #     - key: "k8s-prod-error-%{+yyyy.MM.dd}"
    #       when.contains:
    #         type: "prod-error"
    #     - key: "k8s-prod-info-%{+yyyy.MM.dd}"
    #       when.contains:
    #         type: "prod-info"
```
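With `multiline.negate: false` and `multiline.match: after`, every line that matches `multiline.pattern` is appended to the preceding line that does not match, so a Java stack trace ends up in a single event. The sketch below mimics only that grouping rule; Python's `re` has no POSIX classes, so `[[:space:]]` is written as `\s`, `group_multiline` is a hypothetical name, and Filebeat's `multiline.max_lines` and `multiline.timeout` limits are not modelled.

```python
import re

# Same pattern as the ConfigMap, with [[:space:]] rewritten as \s for Python.
JAVA_CONTINUATION = re.compile(r"^\s+(at|\.{3})\s+\b|^Caused by:")


def group_multiline(lines):
    """Group lines like negate=false, match=after: matching lines join the previous event."""
    events = []
    for line in lines:
        if JAVA_CONTINUATION.search(line) and events:
            events[-1] += "\n" + line  # continuation line: append to the open event
        else:
            events.append(line)        # non-matching line: starts a new event
    return events


sample = [
    'Exception in thread "main" java.lang.IllegalStateException: boom',
    "    at com.example.Author.getBookIds(Author.java:38)",
    "Caused by: java.lang.NullPointerException",
    "    at com.example.Book.getId(Book.java:22)",
    "    ... 1 more",
    "2021-02-16 12:00:01 INFO next event",
]
for event in group_multiline(sample):
    print(repr(event))
```

Note that a header line such as `java.lang.NullPointerException: boom` on its own line does not match the pattern, so it starts a new event rather than joining the log line printed before it.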

```yaml
--- # This ConfigMap is not used: you can paste the configuration above into it, or delete it.
# If you delete it, also remove the `inputs` volume and volumeMount from the DaemonSet, otherwise its Pods cannot start.
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    #- type: log
    #  paths:
    #    - "/var/lib/kubelet/pods/**/*info.log"
    #processors:
    #  - add_kubernetes_metadata:
    #      default_indexers.enabled: false
    #      default_matchers.enabled: false
    #      in_cluster: true
    #      indexers:
    #        - ip_port:
    #      matchers:
    #        - field_format:
    #            format: '%{[destination.ip]}:%{[destination.port]}'
    #      matchers:
    #        - logs_path:
    #            logs_path: '/var/log/pods'
    #            resource_type: 'pod'
```

```yaml
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: elastic/filebeat:7.10.1
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch-0.elasticsearch.kube-system
        - name: ELASTICSEARCH_PORT
          value: "9200"
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 6144Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlibkubeletpods
          mountPath: /var/lib/kubelet/pods
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibkubeletpods
        hostPath:
          path: /var/lib/kubelet/pods
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # The data folder stores a registry of the read state of every file,
      # so Filebeat does not resend all files after its Pod restarts.
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
```

```yaml
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 has been available since 1.8.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---
```
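The four autodiscover templates and the `indices` rules work together: for each Pod the kubernetes provider reports, every template whose namespace condition matches becomes a `log` input whose `${data.kubernetes.pod.uid}` placeholder is filled from the Pod's metadata, and each event's top-level `type` field then selects the index (the first matching `indices` rule wins). The sketch below models that flow in plain Python under those assumptions; it is a simplification rather than Filebeat's code, all names are hypothetical, and the date part comes from the event's `@timestamp`, which Filebeat keeps in UTC.

```python
import re
from datetime import datetime, timezone

# (namespace condition, file-name glob, value of the top-level `type` field)
TEMPLATES = [
    ("prod", "*info.log", "prod-info"),
    ("prod", "*error.log", "prod-error"),
    ("test", "*info.log", "test-info"),
    ("test", "*error.log", "test-error"),
]
PATH_TEMPLATE = ("/var/lib/kubelet/pods/${data.kubernetes.pod.uid}"
                 "/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/")
PLACEHOLDER = re.compile(r"\$\{data\.([^}]+)\}")


def resolve(template: str, data: dict) -> str:
    """Replace ${data.a.b} with data["a"]["b"], taken from the Pod's metadata."""
    def lookup(match):
        node = data
        for key in match.group(1).split("."):
            node = node[key]
        return str(node)
    return PLACEHOLDER.sub(lookup, template)


def inputs_for_pod(data: dict) -> list:
    """One input (path + type) per template whose namespace condition matches."""
    namespace = data["kubernetes"]["namespace"]
    return [
        {"path": resolve(PATH_TEMPLATE, data) + glob, "type": log_type}
        for ns, glob, log_type in TEMPLATES
        if ns == namespace
    ]


def index_for(event: dict):
    """First `when.contains` rule that matches wins, as with the `indices` list."""
    for log_type in ("test-info", "test-error", "prod-info", "prod-error"):
        if log_type in event.get("type", ""):  # `contains` is a substring check
            return f"k8s-{log_type}-{event['@timestamp']:%Y.%m.%d}"
    return None  # Filebeat would fall back to its `index` setting here


pod = {"kubernetes": {"namespace": "prod", "pod": {"uid": "694538b6-4629-4903-804e-8e9fc36ced4a"}}}
for spec in inputs_for_pod(pod):
    print(spec["type"], spec["path"])
ts = datetime(2021, 2, 16, 12, 23, 33, tzinfo=timezone.utc)
print(index_for({"type": "prod-error", "@timestamp": ts}))  # k8s-prod-error-2021.02.16
```

Because `contains` is a substring match, the type values must not overlap (for example `info` and `prod-info` would both match the latter); `equals` is the stricter condition if that ever becomes a risk.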

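VI. References and Errors

Reference: https://www.elastic.co/guide/en/beats/filebeat/current/configuration-autodiscover.html

The following error occurs because the log files are not in JSON format; the `container` input only understands container-runtime log lines (Docker JSON or CRI format):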

```text
2021-02-16T12:23:33.920Z ERROR [reader_docker_json] readjson/docker_json.go:204 Parse line error: invalid CRI log format
2021-02-16T12:23:33.920Z INFO log/harvester.go:335 Skipping unparsable line in file: /var/lib/kubelet/pods/694538b6-4629-4903-804e-8e9fc36ced4a/volumes/kubernetes.io~empty-dir/data-vol/log/java/daemon/daemon-info.log
```

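The configuration that produced it:

```yaml
filebeat.yml: |-
  filebeat.inputs:
  # `container` only parses JSON-format (container-runtime) log lines; logs that containers
  # write to stdout/stderr are collected normally, plain application log files are not.
  - type: container
    paths:
      - "/var/lib/kubelet/pods/*/volumes/kubernetes.io~empty-dir/data-vol/log/java/*/*.log"
```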
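The `container` input expects files written by the container runtime: Docker's json-file driver writes one JSON object per line (`{"log": ..., "stream": ..., "time": ...}`), and CRI runtimes such as containerd write `<timestamp> <stream> <F|P> <message>`. A plain Java log line is neither, which is why Filebeat reports `invalid CRI log format` and skips it. The parser below is a simplified, hypothetical illustration of that distinction; it does not reassemble partial (`P`) lines.

```python
import json
import re

# CRI line: <RFC3339 timestamp> <stdout|stderr> <tag: F = full, P = partial> <message>
CRI_LINE = re.compile(r"^(\S+) (stdout|stderr) ([FP]) (.*)$")


def parse_runtime_line(line: str):
    """Return (stream, message) for a Docker JSON or CRI line; raise ValueError otherwise."""
    if line.startswith("{"):
        try:
            record = json.loads(line)  # Docker json-file format
            return record["stream"], record["log"]
        except (ValueError, KeyError) as err:
            raise ValueError(f"not a Docker JSON line: {line!r}") from err
    match = CRI_LINE.match(line)
    if match:
        return match.group(2), match.group(4)
    raise ValueError(f"unparsable line: {line!r}")


print(parse_runtime_line("2021-02-16T12:23:33.920000000Z stdout F started"))
```

Feeding it a line like `2021-02-16 12:23:33.920 INFO started` raises `ValueError`, the same situation as the skipped lines in the log above; this is why the `log` input is used for these files.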

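VII. Excluding Unneeded Fields and Logs from Specific Containers in Filebeat

```yaml
# In filebeat.yml; at the top level, processors run in order on every event
processors:
  - drop_fields:
      fields: ["agent.ephemeral_id","agent.id","agent.name","agent.type","container.runtime","ecs.version","host.hostname","host.name","kubernetes.labels.pod-template-hash","host.os.version","agent.version","container.image.name","container.id","kubernetes.pod.uid","kubernetes.replicaset.name"]
      ignore_missing: false  # false: a listed field that is missing is reported as an error; true silences that
  - add_kubernetes_metadata:
      in_cluster: true
  - drop_event.when:  # drop every event from Pods labeled app=tapd-page
      or:
        - equals:
            kubernetes.labels.app: "tapd-page"
```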
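The processors above run in order on each event: `drop_fields` removes dotted-path fields from the nested event, and `drop_event` discards the whole event when its condition matches. A plain-Python sketch of those two steps, under the assumption that the sample events already carry `kubernetes.*` metadata (so the `add_kubernetes_metadata` step is omitted); the function names are hypothetical.

```python
def drop_fields(event: dict, fields: list) -> dict:
    """Remove dotted-path fields such as 'agent.id' from a nested event, in place."""
    for dotted in fields:
        *parents, leaf = dotted.split(".")
        node = event
        for key in parents:
            node = node.get(key)
            if not isinstance(node, dict):
                break                  # parent missing: nothing to drop
        else:
            node.pop(leaf, None)       # missing leaf fields are skipped silently here
    return event


def should_drop(event: dict, excluded_apps: set) -> bool:
    """True if the Pod label `app` equals one of the excluded values."""
    app = event.get("kubernetes", {}).get("labels", {}).get("app")
    return app in excluded_apps


def run_processors(events: list, fields: list, excluded_apps: set) -> list:
    kept = []
    for event in events:
        drop_fields(event, fields)                # step 1: drop_fields
        if should_drop(event, excluded_apps):     # step 3: drop_event
            continue
        kept.append(event)
    return kept


events = [
    {"message": "a", "agent": {"id": "1"}, "kubernetes": {"labels": {"app": "tapd-page"}}},
    {"message": "b", "agent": {"id": "2", "version": "7.10.1"},
     "kubernetes": {"labels": {"app": "api"}, "pod": {"uid": "u", "name": "api-0"}}},
]
print(run_processors(events, ["agent.id", "agent.version", "kubernetes.pod.uid", "host.name"], {"tapd-page"}))
```

Because `drop_event` reads `kubernetes.labels.app`, that field must not appear in the `drop_fields` list that runs before it.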

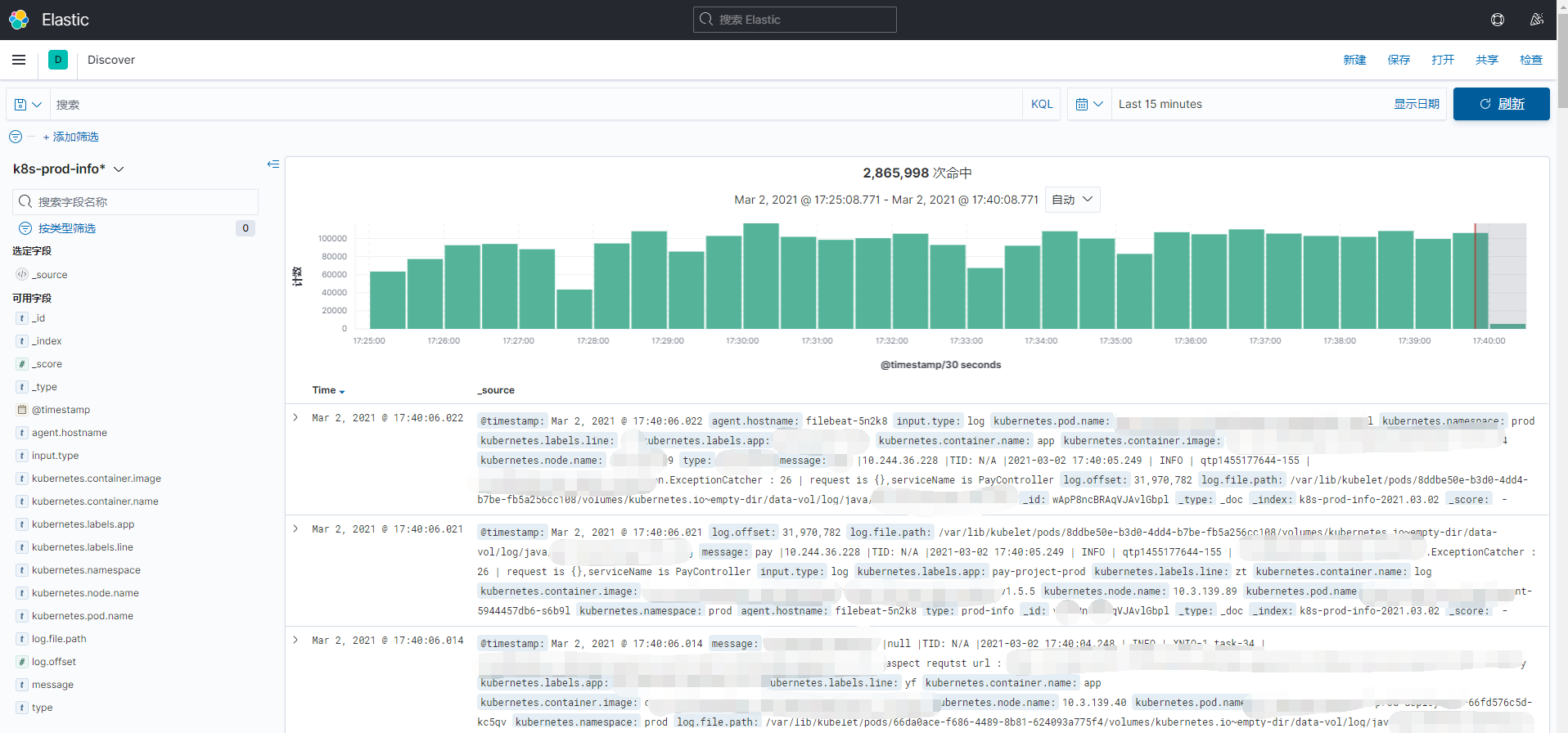

Result:

浙公网安备 33010602011771号
