mall-swarm Microservice E-commerce Mall

Project repository: https://github.com/macrozheng/mall-swarm
Official site: https://www.macrozheng.com/

References:

- https://github.com/iKubernetes/learning-k8s/tree/master/Mall-MicroService
- https://gitee.com/mageedu/mall-microservice

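Module layout:

```
mall
├── mall-common  -- utility classes and shared code
├── mall-mbg     -- database access code generated by MyBatis Generator
├── mall-auth    -- unified authentication center based on Spring Security OAuth2
├── mall-gateway -- microservice API gateway based on Spring Cloud Gateway
├── mall-monitor -- microservice monitoring center based on Spring Boot Admin
├── mall-admin   -- back-office management service
├── mall-search  -- product search service based on Elasticsearch
├── mall-portal  -- mobile storefront service
├── mall-demo    -- test service for remote calls between microservices
└── config       -- configuration stored in the configuration center
```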

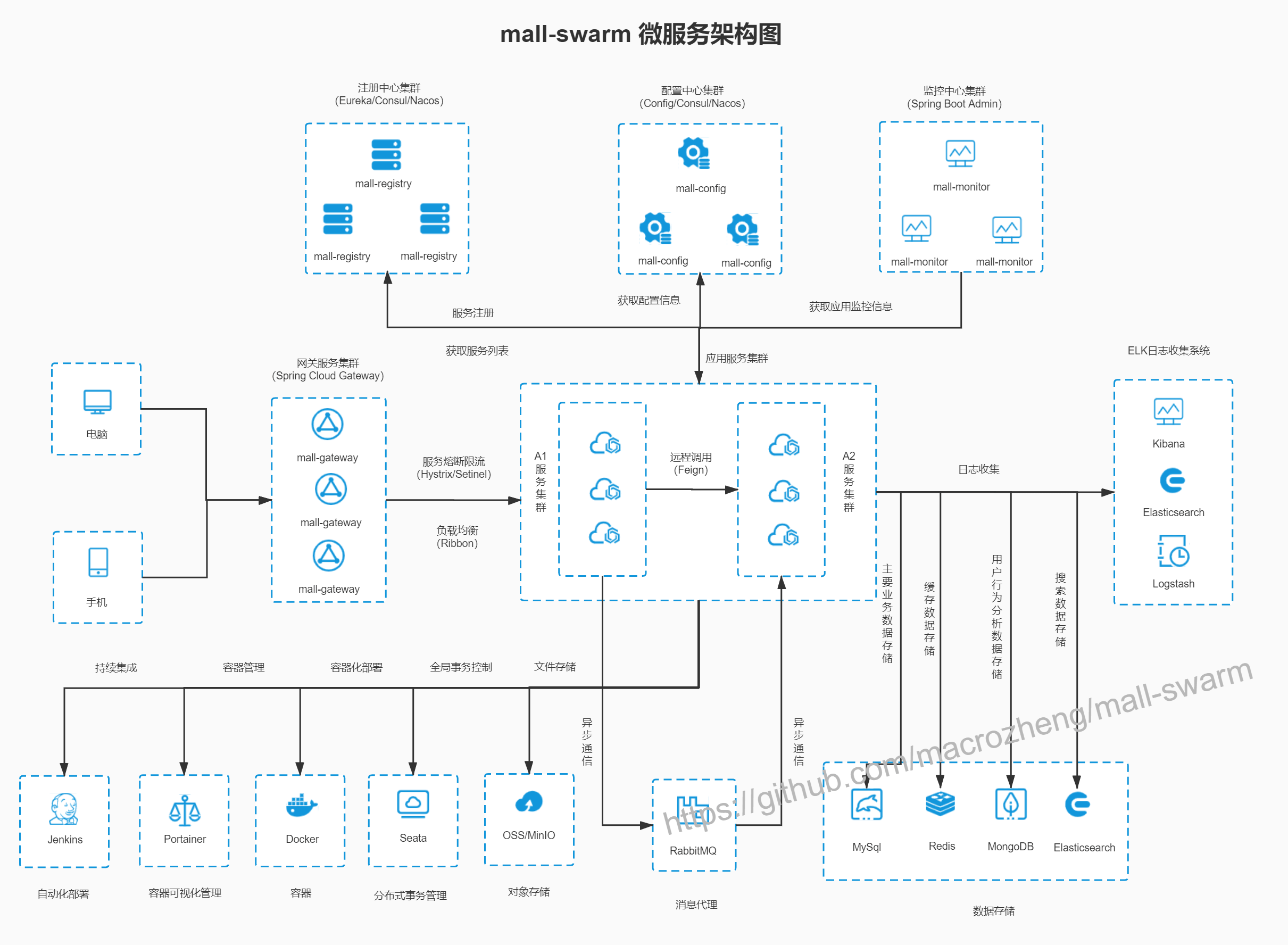

Architecture Analysis

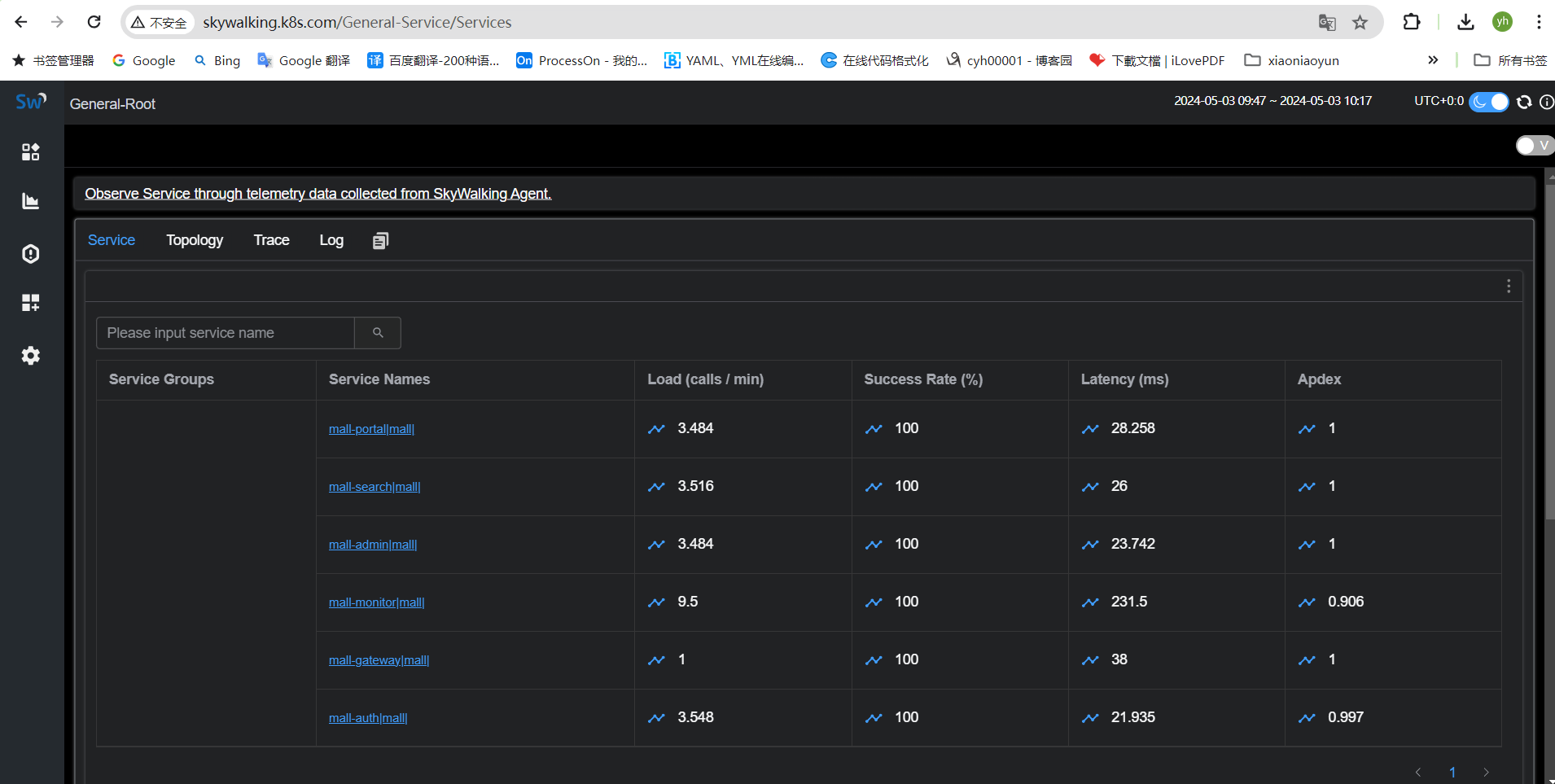

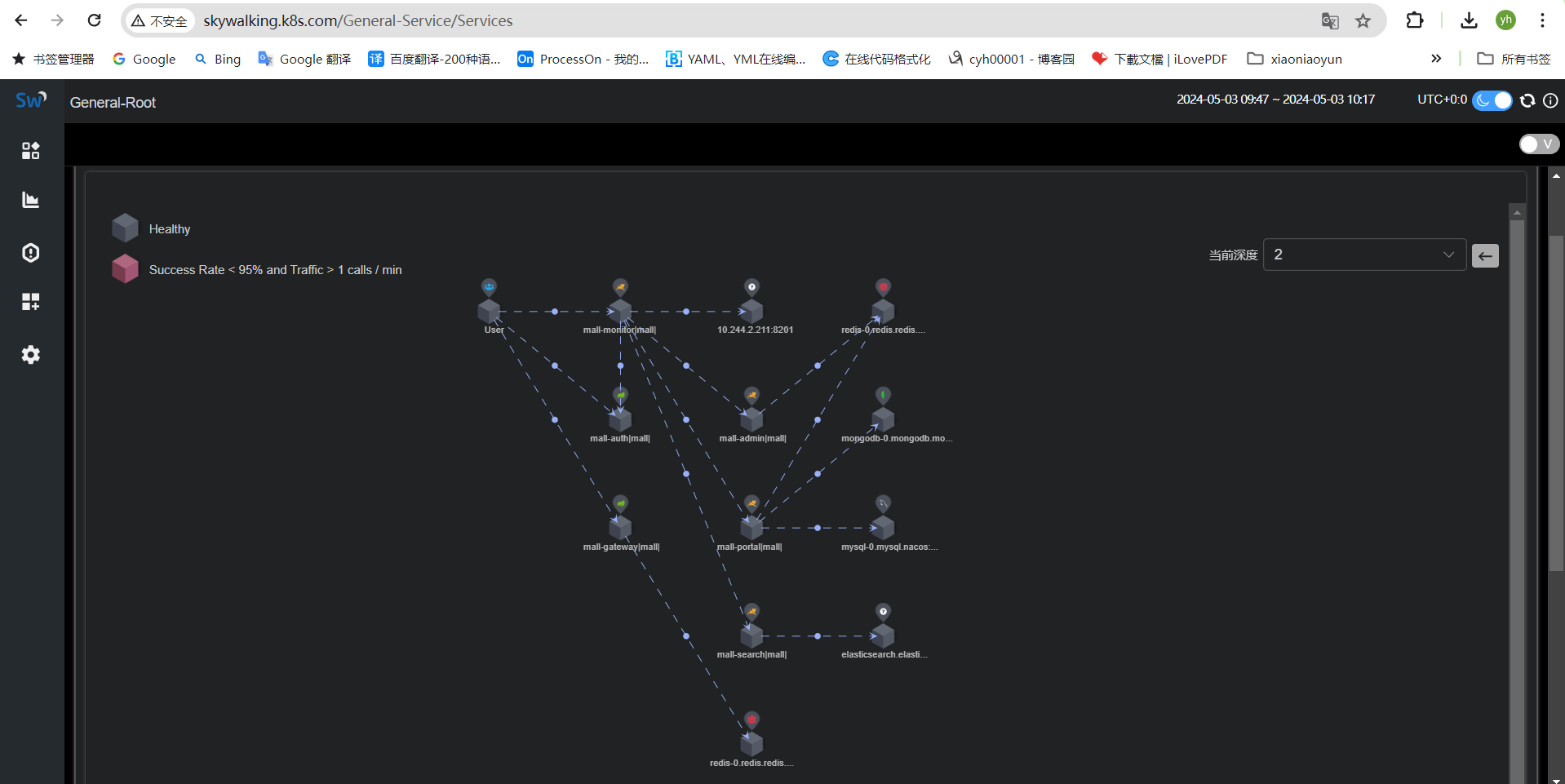

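mall-microservice hands-on project: a microservice-based e-commerce mall (Spring Cloud)

- Mall backend: mall-microservice
- Storefront: mobile client
- Merchant front end: Vue web UI, mall-admin-web

Deploying mall-microservice:

- Backend services
  - Prometheus Server (scrapes over HTTP/HTTPS and only understands metrics in the Prometheus exposition format)
    - MySQL --> mysql exporter, deployed as multiple containers in the same Pod:
      - mysql-server: main container
      - mysql-exporter: adapter container
    - Nacos: exposes Prometheus-format metrics directly through a dedicated jar
    - ...
  - MySQL
    - Nacos --> MySQL
    - Business data --> MySQL
  - Nacos
    - Service registry
    - Configuration center
  - Elasticsearch
    - Product search service: requires the Chinese word-segmentation (IK) analyzer
    - Image: ikubernetes/elasticsearch:7.17.7-ik
  - Logging center: Fluent Bit, Kibana
    - fluent-bit: DaemonSet
    - Kibana: web UI
  - Redis: master/slave
  - MongoDB: ReplicaSet cluster
  - RabbitMQ
  - MinIO
  - SkyWalking
- Mall backend services: mall-gateway, mall-portal, mall-search, ...
- Mall admin console: mall-admin-web --> API --> mall-gateway
- Mobile client: customer-facing storefront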
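The MySQL entry above uses the adapter pattern. The exporter runs beside MySQL in the same Pod and reaches it over localhost. Below is a minimal sketch of such a Pod. A shell function prints it as YAML, so it can be reviewed before piping it to `kubectl apply -f -`.

Everything here is an assumption, not the referenced project's manifest:

- the Pod name;
- the `mysql:8.0` and `prom/mysqld-exporter:v0.14.0` tags;
- the placeholder passwords;
- the `exporter` MySQL user, which would still have to be created with suitable grants.

`DATA_SOURCE_NAME` is how mysqld-exporter v0.14 takes its DSN; later releases changed this.

```bash
#!/usr/bin/env bash
# Print a Pod manifest in which mysqld-exporter runs as an adapter container
# next to MySQL. Names, tags and credentials are hypothetical placeholders.
render_mysql_with_exporter() {
  local name="${1:-mysql-demo}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  containers:
  - name: mysql                      # main container
    image: mysql:8.0
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "change-me"
    ports:
    - containerPort: 3306
  - name: mysqld-exporter            # adapter: exposes MySQL stats in Prometheus format
    image: prom/mysqld-exporter:v0.14.0
    env:
    - name: DATA_SOURCE_NAME         # containers in one Pod share localhost
      value: "exporter:exporter-pass@(127.0.0.1:3306)/"
    ports:
    - containerPort: 9104            # mysqld-exporter's default metrics port
EOF
}

# Usage: render_mysql_with_exporter mysql-demo | kubectl apply -f -
```

Keeping the exporter in the same Pod ties its lifecycle and scheduling to the database it monitors.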

1. Cluster Information

- OS: Ubuntu 22.04
- Kernel: 5.15.0-105-generic
- containerd: 1.6.31
- Kubernetes: 1.28.9
- Network plugin: Cilium 1.15.3
- LoadBalancer Service implementation: MetalLB 0.14.5

1.1 Node Preparation

| Hostname | IP | vCPU | Memory | Disk |
|---|---|---|---|---|
| k8s-cilium-master-01 | 172.16.88.61 | 4 | 8G | 50G |
| k8s-cilium-master-02 | 172.16.88.62 | 4 | 8G | 50G |
| k8s-cilium-master-03 | 172.16.88.63 | 4 | 8G | 50G |
| k8s-cilium-node-01 | 172.16.88.71 | 8 | 16G | 50G |
| k8s-cilium-node-02 | 172.16.88.72 | 8 | 16G | 50G |
| k8s-cilium-node-03 | 172.16.88.73 | 8 | 16G | 50G |
| k8s-cilium-node-04 | 172.16.88.74 | 8 | 16G | 50G |
| k8s-cilium-node-05 | 172.16.88.75 | 8 | 16G | 50G |

1.2 Node Initialization

Set the hostnames of all nodes in bulk with Ansible, run from the control machine cyh-dell-rocky9-02.

The inventory:

```
[root@cyh-dell-rocky9-02 ~]# cat /etc/ansible/hosts
[vm1]
172.16.88.61 hostname=k8s-cilium-master-01 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.62 hostname=k8s-cilium-master-02 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.63 hostname=k8s-cilium-master-03 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.71 hostname=k8s-cilium-node-01 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.72 hostname=k8s-cilium-node-02 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.73 hostname=k8s-cilium-node-03 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.74 hostname=k8s-cilium-node-04 ansible_ssh_port=22 ansible_ssh_pass=redhat
172.16.88.75 hostname=k8s-cilium-node-05 ansible_ssh_port=22 ansible_ssh_pass=redhat
[vm]
172.16.88.61
172.16.88.62
172.16.88.63
172.16.88.71
172.16.88.72
172.16.88.73
172.16.88.74
172.16.88.75
```

The playbook name.yml:

```yaml
---
- hosts: vm1
  remote_user: root
  tasks:
    - name: change name
      raw: "echo {{hostname|quote}} > /etc/hostname"
    - name: apply hostname immediately
      shell: hostname {{hostname|quote}}
```

Rename all hosts:

```bash
ansible-playbook ./name.yml
```
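With eight nodes, the inventory is easy to get wrong by hand. The sketch below generates the `[vm1]` and `[vm]` groups above from a single node list. `NODES`, `render_inventory` and the `SSH_PASS` override are hypothetical names. The password default mirrors the lab value used above.

```bash
#!/usr/bin/env bash
# Hypothetical single source of truth for the nodes in section 1.1: "IP hostname".
NODES=(
  "172.16.88.61 k8s-cilium-master-01"
  "172.16.88.62 k8s-cilium-master-02"
  "172.16.88.63 k8s-cilium-master-03"
  "172.16.88.71 k8s-cilium-node-01"
  "172.16.88.72 k8s-cilium-node-02"
  "172.16.88.73 k8s-cilium-node-03"
  "172.16.88.74 k8s-cilium-node-04"
  "172.16.88.75 k8s-cilium-node-05"
)

# Print an inventory with the same [vm1] and [vm] groups as /etc/ansible/hosts above.
# SSH_PASS is a hypothetical override; it defaults to the lab password.
render_inventory() {
  local entry ip name
  echo "[vm1]"
  for entry in "${NODES[@]}"; do
    read -r ip name <<< "$entry"
    echo "${ip} hostname=${name} ansible_ssh_port=22 ansible_ssh_pass=${SSH_PASS:-redhat}"
  done
  echo "[vm]"
  for entry in "${NODES[@]}"; do
    read -r ip name <<< "$entry"
    echo "${ip}"
  done
}

# Usage: render_inventory > /etc/ansible/hosts
```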

Generate an SSH key on master-01 and push it to the other nodes:

```bash
ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa
# sshpass must be installed first (apt -y install sshpass)
for i in {62,63,71,72,73,74,75}; do
  sshpass -p 'redhat' ssh-copy-id -o StrictHostKeyChecking=no -i /root/.ssh/id_rsa -p 22 root@172.16.88.$i
done
```

Initialize the nodes. Save the following script as base.sh on the Ansible control machine:

```bash
#!/usr/bin/env bash
# base.sh: node initialization, executed on every node

# Name resolution for all cluster nodes
cat >> /etc/hosts <<EOF
172.16.88.61 k8s-cilium-master-01
172.16.88.62 k8s-cilium-master-02
172.16.88.63 k8s-cilium-master-03
172.16.88.71 k8s-cilium-node-01
172.16.88.72 k8s-cilium-node-02
172.16.88.73 k8s-cilium-node-03
172.16.88.74 k8s-cilium-node-04
172.16.88.75 k8s-cilium-node-05
EOF

# Disable swap permanently (by default the kubelet refuses to run with swap on)
swapoff -a
sed -i 's@/swap.img@#/swap.img@g' /etc/fstab
rm -fr /swap.img
systemctl mask swap.img.swap

# Disable the host firewall
ufw disable

# Install containerd from the Docker CE repository (Aliyun mirror)
apt -y install apt-transport-https ca-certificates curl software-properties-common
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add -
add-apt-repository -y "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
apt update && apt-get install -y containerd.io

# Kernel modules required by containerd and Kubernetes networking
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
modprobe overlay && modprobe br_netfilter

# sysctl params required by setup, params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf
```

Run base.sh on all nodes through Ansible:

```bash
ansible 'vm' -m script -a "./base.sh"
```
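base.sh appends to /etc/hosts unconditionally, so running it a second time duplicates every entry. Below is a sketch of an idempotent alternative. `add_hosts_entries` is a hypothetical helper, not part of the original script, and it only skips lines that already exist verbatim.

```bash
#!/usr/bin/env bash
# Append each "IP hostname" argument to the hosts file only if that exact line
# is not already present, so re-running the script adds nothing new.
add_hosts_entries() {
  local hosts_file="$1"; shift
  local line
  for line in "$@"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$line" "$hosts_file" 2>/dev/null || echo "$line" >> "$hosts_file"
  done
}

# Usage inside base.sh, instead of the plain "cat >> /etc/hosts":
#   add_hosts_entries /etc/hosts "172.16.88.61 k8s-cilium-master-01" "172.16.88.62 k8s-cilium-master-02"
```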

1.3 Install and Configure containerd

First, dump containerd's default configuration to a file. `mkdir -p` is used because the containerd.io package may already have created the directory.

```bash
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
```

Then edit the generated file as follows.

Make containerd use the systemd cgroup driver:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true
```

Pull the pause image, pinned to version 3.9, from a mirror reachable in mainland China:

```toml
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
```

Use registry mirrors in China to speed up image pulls:

```toml
[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://docker.mirrors.ustc.edu.cn", "https://registry.docker-cn.com"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry.k8s.io"]
      endpoint = ["https://registry.aliyuncs.com/google_containers"]
```
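The two single-line edits can be scripted with sed. This sketch assumes the file came from `containerd config default` on containerd 1.6. Such a file contains `SystemdCgroup = false` and exactly one `sandbox_image = "..."` line. `patch_containerd_config` is a hypothetical helper. The multi-line registry mirror tables are still easier to edit by hand.

```bash
#!/usr/bin/env bash
# Switch runc to the systemd cgroup driver and replace the pause image in a
# config generated by "containerd config default" (GNU sed -i assumed).
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause_image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  # Only the runc option "SystemdCgroup" matches; the lowercase
  # "systemd_cgroup" key is a different setting and is left untouched.
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$cfg"
  sed -i "s#sandbox_image = \".*\"#sandbox_image = \"${pause_image}\"#" "$cfg"
}

# Usage: patch_containerd_config /etc/containerd/config.toml
```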

The complete config.toml:

```
root@k8s-cilium-master-01:~# cat /etc/containerd/config.toml
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
  path = ""

[debug]
  address = ""
  format = ""
  gid = 0
  level = ""
  uid = 0

[grpc]
  address = "/run/containerd/containerd.sock"
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216
  tcp_address = ""
  tcp_tls_ca = ""
  tcp_tls_cert = ""
  tcp_tls_key = ""
  uid = 0

[metrics]
  address = ""
  grpc_histogram = false

[plugins]

  [plugins."io.containerd.gc.v1.scheduler"]
    deletion_threshold = 0
    mutation_threshold = 100
    pause_threshold = 0.02
    schedule_delay = "0s"
    startup_delay = "100ms"

  [plugins."io.containerd.grpc.v1.cri"]
    device_ownership_from_security_context = false
    disable_apparmor = false
    disable_cgroup = false
    disable_hugetlb_controller = true
    disable_proc_mount = false
    disable_tcp_service = true
    drain_exec_sync_io_timeout = "0s"
    enable_selinux = false
    enable_tls_streaming = false
    enable_unprivileged_icmp = false
    enable_unprivileged_ports = false
    ignore_deprecation_warnings = []
    ignore_image_defined_volumes = false
    max_concurrent_downloads = 3
    max_container_log_line_size = 16384
    netns_mounts_under_state_dir = false
    restrict_oom_score_adj = false
    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
    selinux_category_range = 1024
    stats_collect_period = 10
    stream_idle_timeout = "4h0m0s"
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    systemd_cgroup = false
    tolerate_missing_hugetlb_controller = true
    unset_seccomp_profile = ""

    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = ""
      ip_pref = ""
      max_conf_num = 1

    [plugins."io.containerd.grpc.v1.cri".containerd]
      default_runtime_name = "runc"
      disable_snapshot_annotations = true
      discard_unpacked_layers = false
      ignore_rdt_not_enabled_errors = false
      no_pivot = false
      snapshotter = "overlayfs"

      [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
          base_runtime_spec = ""
          cni_conf_dir = ""
          cni_max_conf_num = 0
          container_annotations = []
          pod_annotations = []
          privileged_without_host_devices = false
          runtime_engine = ""
          runtime_path = ""
          runtime_root = ""
          runtime_type = "io.containerd.runc.v2"

          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            BinaryName = ""
            CriuImagePath = ""
            CriuPath = ""
            CriuWorkPath = ""
            IoGid = 0
            IoUid = 0
            NoNewKeyring = false
            NoPivotRoot = false
            Root = ""
            ShimCgroup = ""
            SystemdCgroup = true

      [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

    [plugins."io.containerd.grpc.v1.cri".image_decryption]
      key_model = "node"

    [plugins."io.containerd.grpc.v1.cri".registry]
      config_path = ""

      [plugins."io.containerd.grpc.v1.cri".registry.auths]

      [plugins."io.containerd.grpc.v1.cri".registry.configs]

      [plugins."io.containerd.grpc.v1.cri".registry.headers]

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://docker.mirrors.ustc.edu.cn", "https://registry.docker-cn.com"]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry.k8s.io"]
          endpoint = ["https://registry.aliyuncs.com/google_containers"]

    [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""

  [plugins."io.containerd.internal.v1.opt"]
    path = "/opt/containerd"

  [plugins."io.containerd.internal.v1.restart"]
    interval = "10s"

  [plugins."io.containerd.internal.v1.tracing"]
    sampling_ratio = 1.0
    service_name = "containerd"

  [plugins."io.containerd.metadata.v1.bolt"]
    content_sharing_policy = "shared"

  [plugins."io.containerd.monitor.v1.cgroups"]
    no_prometheus = false

  [plugins."io.containerd.runtime.v1.linux"]
    no_shim = false
    runtime = "runc"
    runtime_root = ""
    shim = "containerd-shim"
    shim_debug = false

  [plugins."io.containerd.runtime.v2.task"]
    platforms = ["linux/amd64"]
    sched_core = false

  [plugins."io.containerd.service.v1.diff-service"]
    default = ["walking"]

  [plugins."io.containerd.service.v1.tasks-service"]
    rdt_config_file = ""

  [plugins."io.containerd.snapshotter.v1.aufs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.btrfs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.devmapper"]
    async_remove = false
    base_image_size = ""
    discard_blocks = false
    fs_options = ""
    fs_type = ""
    pool_name = ""
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.native"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.overlayfs"]
    mount_options = []
    root_path = ""
    sync_remove = false
    upperdir_label = false

  [plugins."io.containerd.snapshotter.v1.zfs"]
    root_path = ""

  [plugins."io.containerd.tracing.processor.v1.otlp"]
    endpoint = ""
    insecure = false
    protocol = ""

[proxy_plugins]

[stream_processors]

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar"

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
  "io.containerd.timeout.bolt.open" = "0s"
  "io.containerd.timeout.shim.cleanup" = "5s"
  "io.containerd.timeout.shim.load" = "5s"
  "io.containerd.timeout.shim.shutdown" = "3s"
  "io.containerd.timeout.task.state" = "2s"

[ttrpc]
  address = ""
  gid = 0
  uid = 0
root@k8s-cilium-master-01:~#
```

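Finally, copy the file to the other nodes and restart containerd on every node, including master-01:

```bash
for i in {62,63,71,72,73,74,75}; do
  scp /etc/containerd/config.toml root@172.16.88.$i:/etc/containerd/
  ssh root@172.16.88.$i 'systemctl daemon-reload && systemctl restart containerd'
done
# and on master-01 itself
systemctl daemon-reload && systemctl restart containerd
```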

Configure the crictl client:

```bash
cat > /etc/crictl.yaml <<EOF
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: true
EOF
```

1.4 Install and Configure Kubernetes

Install kubelet, kubeadm and kubectl on every node from the Aliyun mirror:

```bash
curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/deb/ /" | tee /etc/apt/sources.list.d/kubernetes.list
apt-get update && apt-get install -y kubelet kubeadm kubectl
# Pin the packages so they are not upgraded automatically
apt-mark hold kubelet kubeadm kubectl
```

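Map the control-plane endpoint name kubeapi.k8s.com to master-01 in /etc/hosts. The cluster is given a name instead of an IP, so the endpoint can later be moved to a load balancer or VIP for high availability. Then distribute the file to all nodes (only the relevant line is shown):

```
root@k8s-cilium-master-01:~# cat /etc/hosts
172.16.88.61 k8s-cilium-master-01 kubeapi.k8s.com
root@k8s-cilium-master-01:~# for i in {62,63,71,72,73,74,75};do scp /etc/hosts root@172.16.88.$i:/etc;done
```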
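The alias can also be added with sed instead of editing by hand. In the sketch below, `add_api_alias` is a hypothetical helper. It appends `kubeapi.k8s.com` to the line ending in the master-01 hostname and does nothing if the alias is already present.

```bash
#!/usr/bin/env bash
# Append the control-plane alias to the hosts line of the given node, once.
add_api_alias() {
  local hosts_file="$1"
  local node="${2:-k8s-cilium-master-01}"
  local alias_name="${3:-kubeapi.k8s.com}"
  # Already mapped somewhere: nothing to do (-F: dots are literal, -w: whole word)
  grep -qwF "$alias_name" "$hosts_file" && return 0
  # Only touch the line that ends with the node name
  sed -i "/[[:space:]]${node}\$/ s/\$/ ${alias_name}/" "$hosts_file"
}

# Usage: add_api_alias /etc/hosts
```

For real HA, only this mapping (or a DNS record) would need to point at the load balancer instead of master-01.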

1.5 Initialize the Kubernetes Cluster

Run on master-01:

```bash
kubeadm init \
  --control-plane-endpoint="kubeapi.k8s.com" \
  --kubernetes-version=v1.28.9 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --image-repository=registry.aliyuncs.com/google_containers \
  --cri-socket unix:///var/run/containerd/containerd.sock \
  --upload-certs
```
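The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long flag list. This sketch assumes the `kubeadm.k8s.io/v1beta3` API that kubeadm 1.28 uses. The function name and the file name `kubeadm-config.yaml` are illustrative. `--upload-certs` stays on the command line.

```bash
#!/usr/bin/env bash
# Print a kubeadm config equivalent to the kubeadm init flags above.
render_kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.9
controlPlaneEndpoint: "kubeapi.k8s.com:6443"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}

# Usage:
#   render_kubeadm_config > kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml --upload-certs
```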

Initialization output:

```
root@k8s-cilium-master-01:~# kubeadm init \
  --control-plane-endpoint="kubeapi.k8s.com" \
  --kubernetes-version=v1.28.9 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --image-repository=registry.aliyuncs.com/google_containers \
  --cri-socket unix:///var/run/containerd/containerd.sock \
  --upload-certs
[init] Using Kubernetes version: v1.28.9
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-cilium-master-01 kubeapi.k8s.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.16.88.61]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-cilium-master-01 localhost] and IPs [172.16.88.61 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-cilium-master-01 localhost] and IPs [172.16.88.61 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 9.005007 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: ba8510df5514ef3178b1d08293f7d4d7a725fdabacc1602a03587c1a8ee244ec
[mark-control-plane] Marking the node k8s-cilium-master-01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node k8s-cilium-master-01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: 6i1bqc.gk7sf1alyiunuzry
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join kubeapi.k8s.com:6443 --token 6i1bqc.gk7sf1alyiunuzry \
    --discovery-token-ca-cert-hash sha256:77df5c95946f6467da3c9fe2c5360887290f6322d04c40d525970eebea814655 \
    --control-plane --certificate-key ba8510df5514ef3178b1d08293f7d4d7a725fdabacc1602a03587c1a8ee244ec

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.k8s.com:6443 --token 6i1bqc.gk7sf1alyiunuzry \
    --discovery-token-ca-cert-hash sha256:77df5c95946f6467da3c9fe2c5360887290f6322d04c40d525970eebea814655
root@k8s-cilium-master-01:~#
```

Copy the shared certificates to master-02 and master-03, then join the remaining nodes

`kubeadm init` ran with `--upload-certs`, and the control-plane join command passes `--certificate-key`. As a result, master-02 and master-03 download the shared certificates from the `kubeadm-certs` Secret by themselves. The manual copy below is therefore only needed when joining without `--certificate-key`.

On master-02 and master-03, create the etcd certificate directory:

```bash
mkdir -p /etc/kubernetes/pki/etcd/
```

From master-01, copy the keys:

```bash
scp /etc/kubernetes/pki/ca.* 172.16.88.62:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/ca.* 172.16.88.63:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.* 172.16.88.62:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.* 172.16.88.63:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.* 172.16.88.62:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.* 172.16.88.63:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/etcd/ca.* 172.16.88.62:/etc/kubernetes/pki/etcd/
scp /etc/kubernetes/pki/etcd/ca.* 172.16.88.63:/etc/kubernetes/pki/etcd/
```

Join master-02 and master-03 to the cluster as control-plane nodes:

```bash
kubeadm join kubeapi.k8s.com:6443 --token 6i1bqc.gk7sf1alyiunuzry \
  --discovery-token-ca-cert-hash sha256:77df5c95946f6467da3c9fe2c5360887290f6322d04c40d525970eebea814655 \
  --control-plane --certificate-key ba8510df5514ef3178b1d08293f7d4d7a725fdabacc1602a03587c1a8ee244ec
```

Join the worker nodes:

```bash
kubeadm join kubeapi.k8s.com:6443 --token 6i1bqc.gk7sf1alyiunuzry \
  --discovery-token-ca-cert-hash sha256:77df5c95946f6467da3c9fe2c5360887290f6322d04c40d525970eebea814655
```
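The bootstrap token in these join commands expires after 24 hours by default. As the init output notes, the uploaded certificates are deleted after two hours. Below is a sketch for building a fresh control-plane join command later. `make_cp_join` is a hypothetical helper. Reading the key from the last line of the `upload-certs` output is an assumption about its format.

```bash
#!/usr/bin/env bash
# Turn a worker join command into a control-plane join command by appending
# the certificate key produced by a fresh certificate upload.
make_cp_join() {
  local worker_join="$1" cert_key="$2"
  printf '%s --control-plane --certificate-key %s\n' "$worker_join" "$cert_key"
}

# Usage on master-01:
#   JOIN="$(kubeadm token create --print-join-command)"
#   KEY="$(kubeadm init phase upload-certs --upload-certs | tail -n 1)"
#   make_cp_join "$JOIN" "$KEY"
```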

Configure kubectl on master-01:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

All nodes have joined. They stay NotReady, and CoreDNS stays Pending, because no CNI plugin is installed yet:

```
root@k8s-cilium-master-01:~# kubectl get node -owide
NAME                   STATUS     ROLES           AGE    VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
k8s-cilium-master-01   NotReady   control-plane   10m    v1.28.9   172.16.88.61   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-master-02   NotReady   control-plane   2m8s   v1.28.9   172.16.88.62   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-master-03   NotReady   control-plane   73s    v1.28.9   172.16.88.63   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-node-01     NotReady   <none>          74s    v1.28.9   172.16.88.71   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-node-02     NotReady   <none>          72s    v1.28.9   172.16.88.72   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-node-03     NotReady   <none>          67s    v1.28.9   172.16.88.73   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-node-04     NotReady   <none>          65s    v1.28.9   172.16.88.74   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
k8s-cilium-node-05     NotReady   <none>          62s    v1.28.9   172.16.88.75   <none>        Ubuntu 22.04.4 LTS   5.15.0-105-generic   containerd://1.6.31
root@k8s-cilium-master-01:~# kubectl get pod -A
NAMESPACE     NAME                                           READY   STATUS    RESTARTS        AGE
kube-system   coredns-66f779496c-j68dq                       0/1     Pending   0               10m
kube-system   coredns-66f779496c-khhw5                       0/1     Pending   0               10m
kube-system   etcd-k8s-cilium-master-01                      1/1     Running   7               10m
kube-system   etcd-k8s-cilium-master-02                      1/1     Running   0               2m25s
kube-system   etcd-k8s-cilium-master-03                      1/1     Running   0               70s
kube-system   kube-apiserver-k8s-cilium-master-01            1/1     Running   7               10m
kube-system   kube-apiserver-k8s-cilium-master-02            1/1     Running   0               2m24s
kube-system   kube-controller-manager-k8s-cilium-master-01   1/1     Running   8 (2m14s ago)   10m
kube-system   kube-controller-manager-k8s-cilium-master-02   1/1     Running   0               2m24s
kube-system   kube-controller-manager-k8s-cilium-master-03   1/1     Running   0               18s
kube-system   kube-proxy-4l9s5                               1/1     Running   0               91s
kube-system   kube-proxy-5fg8w                               1/1     Running   0               84s
kube-system   kube-proxy-67z5q                               1/1     Running   0               79s
kube-system   kube-proxy-8668p                               1/1     Running   0               89s
kube-system   kube-proxy-d28rk                               1/1     Running   0               10m
kube-system   kube-proxy-lztwh                               1/1     Running   0               2m25s
kube-system   kube-proxy-qcl2x                               1/1     Running   0               82s
kube-system   kube-proxy-xwp2p                               1/1     Running   0               90s
kube-system   kube-scheduler-k8s-cilium-master-01            1/1     Running   8 (2m9s ago)    10m
kube-system   kube-scheduler-k8s-cilium-master-02            1/1     Running   0               2m24s
kube-system   kube-scheduler-k8s-cilium-master-03            1/1     Running   0               29s
root@k8s-cilium-master-01:~#
```
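To tell when the nodes leave NotReady after the CNI is installed, a script can check the STATUS column. In the sketch below, `count_not_ready` is a hypothetical helper. It reads `kubectl get nodes --no-headers` output, where STATUS is the second column. It counts every row whose status is not exactly `Ready`, so `Ready,SchedulingDisabled` also counts.

```bash
#!/usr/bin/env bash
# Count nodes whose STATUS (column 2 of "kubectl get nodes --no-headers")
# is not exactly "Ready"; prints 0 for empty input.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage: kubectl get nodes --no-headers | count_not_ready
```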

1.6 Install the Cilium Network Plugin

Install cilium-cli. Before Cilium itself is installed, `cilium status` reports that the cilium DaemonSet is missing, which is expected:

```
root@k8s-cilium-master-01:~# wget https://github.com/cilium/cilium-cli/releases/download/v0.16.4/cilium-linux-amd64.tar.gz
root@k8s-cilium-master-01:~# tar -xf cilium-linux-amd64.tar.gz && mv cilium /usr/local/bin/
root@k8s-cilium-master-01:~# cilium version
cilium-cli: v0.16.4 compiled with go1.22.1 on linux/amd64
cilium image (default): v1.15.3
cilium image (stable): unknown
cilium image (running): unknown. Unable to obtain cilium version. Reason: release: not found
root@k8s-cilium-master-01:~# cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             1 errors
 \__/¯¯\__/    Operator:           disabled
 /¯¯\__/¯¯\    Envoy DaemonSet:    disabled (using embedded mode)
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        disabled

Containers:            cilium
                       cilium-operator
Cluster Pods:          0/2 managed by Cilium
Helm chart version:
Errors:                cilium    cilium    daemonsets.apps "cilium" not found
status check failed: [daemonsets.apps "cilium" not found]
root@k8s-cilium-master-01:~#
```

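Install Cilium in VXLAN tunnel mode with kube-proxy replacement, the Ingress controller (one dedicated LoadBalancer per Ingress) and Hubble enabled: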

```bash
cilium install \
  --set kubeProxyReplacement=true \
  --set ingressController.enabled=true \
  --set ingressController.loadbalancerMode=dedicated \
  --set ipam.mode=kubernetes \
  --set routingMode=tunnel \
  --set tunnelProtocol=vxlan \
  --set ipam.operator.clusterPoolIPv4PodCIDRList=10.244.0.0/16 \
  --set ipam.operator.clusterPoolIPv4MaskSize=24 \
  --set hubble.enabled="true" \
  --set hubble.listenAddress=":4244" \
  --set hubble.relay.enabled="true" \
  --set hubble.ui.enabled="true" \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set hubble.metrics.port=9665 \
  --set hubble.metrics.enableOpenMetrics=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"
```

Helm value keys are case-sensitive: the mask-size key is `ipam.operator.clusterPoolIPv4MaskSize`, not `ipam.Operator.ClusterPoolIPv4MaskSize`. With `ipam.mode=kubernetes`, Cilium takes each node's pod CIDR from its Node object, which is allocated from `--pod-network-cidr`. In this mode the two `ipam.operator.clusterPool*` values are not used.
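Three ranges must not overlap:

- the Pod CIDR (10.244.0.0/16, passed to both kubeadm and Cilium);
- the Service CIDR (10.96.0.0/12);
- the node network (172.16.88.0/24).

Below is a pure-bash IPv4 check sketch. The three function names are hypothetical.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Print the first and last address of an IPv4 CIDR block as integers.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Exit status 0 if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Usage:
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo "pod and service CIDRs overlap"
```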

Installation output:

```
root@k8s-cilium-master-01:~# cilium install \
  --set kubeProxyReplacement=true \
  --set ingressController.enabled=true \
  --set ingressController.loadbalancerMode=dedicated \
  --set ipam.mode=kubernetes \
  --set routingMode=tunnel \
  --set tunnelProtocol=vxlan \
  --set ipam.operator.clusterPoolIPv4PodCIDRList=10.244.0.0/16 \
  --set ipam.Operator.ClusterPoolIPv4MaskSize=24 \
  --set hubble.enabled="true" \
  --set hubble.listenAddress=":4244" \
  --set hubble.relay.enabled="true" \
  --set hubble.ui.enabled="true" \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set hubble.metrics.port=9665 \
  --set hubble.metrics.enableOpenMetrics=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"
ℹ️  Using Cilium version 1.15.3
🔮 Auto-detected cluster name: kubernetes
🔮 Auto-detected kube-proxy has been installed
root@k8s-cilium-master-01:~# cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    disabled (using embedded mode)
 \__/¯¯\__/    Hubble Relay:       OK
    \__/       ClusterMesh:        disabled

Deployment             hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment             cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
DaemonSet              cilium             Desired: 8, Ready: 8/8, Available: 8/8
Deployment             hubble-ui          Desired: 1, Ready: 1/1, Available: 1/1
Containers:            cilium             Running: 8
                       hubble-relay       Running: 1
                       cilium-operator    Running: 1
                       hubble-ui          Running: 1
Cluster Pods:          4/4 managed by Cilium
Helm chart version:
Image versions         cilium             quay.io/cilium/cilium:v1.15.3@sha256:da74ab61d1bc665c1c088dff41d5be388d252ca5800f30c7d88844e6b5e440b0: 8
                       hubble-relay       quay.io/cilium/hubble-relay:v1.15.3@sha256:b9c6431aa4f22242a5d0d750c621d9d04bdc25549e4fb1116bfec98dd87958a2: 1
                       cilium-operator    quay.io/cilium/operator-generic:v1.15.3@sha256:c97f23161906b82f5c81a2d825b0646a5aa1dfb4adf1d49cbb87815079e69d61: 1
                       hubble-ui          quay.io/cilium/hubble-ui:v0.13.0@sha256:7d663dc16538dd6e29061abd1047013a645e6e69c115e008bee9ea9fef9a6666: 1
                       hubble-ui          quay.io/cilium/hubble-ui-backend:v0.13.0@sha256:1e7657d997c5a48253bb8dc91ecee75b63018d16ff5e5797e5af367336bc8803: 1
root@k8s-cilium-master-01:~# kubectl get svc -A
NAMESPACE     NAME             TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
default       kubernetes       ClusterIP      10.96.0.1        <none>        443/TCP                      121m
kube-system   cilium-agent     ClusterIP      None             <none>        9964/TCP                     108s
kube-system   cilium-ingress   LoadBalancer   10.106.144.101   <pending>     80:31461/TCP,443:30840/TCP   108s
kube-system   hubble-metrics   ClusterIP      None             <none>        9665/TCP                     108s
kube-system   hubble-peer      ClusterIP      10.102.70.39     <none>        443/TCP                      108s
kube-system   hubble-relay     ClusterIP      10.106.193.178   <none>        80/TCP                       108s
kube-system   hubble-ui        ClusterIP      10.109.144.197   <none>        80/TCP                       108s
kube-system   kube-dns         ClusterIP      10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP       121m
root@k8s-cilium-master-01:~#
```

The cilium-ingress Service stays `<pending>` until a LoadBalancer implementation is installed in the cluster.

1.7 Install and Configure MetalLB

```
root@k8s-cilium-master-01:~# wget https://github.com/metallb/metallb/archive/refs/tags/v0.14.5.tar.gz
root@k8s-cilium-master-01:~# tar -xf v0.14.5.tar.gz && cd /root/metallb-0.14.5/config/manifests
root@k8s-cilium-master-01:~/metallb-0.14.5/config/manifests# kubectl apply -f metallb-native.yaml
namespace/metallb-system created
customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created
customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created
customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created
customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created
customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created
customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created
customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created
serviceaccount/controller created
serviceaccount/speaker created
role.rbac.authorization.k8s.io/controller created
role.rbac.authorization.k8s.io/pod-lister created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/controller created
rolebinding.rbac.authorization.k8s.io/pod-lister created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
configmap/metallb-excludel2 created
secret/metallb-webhook-cert created
service/metallb-webhook-service created
deployment.apps/controller created
daemonset.apps/speaker created
validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created
root@k8s-cilium-master-01:~/metallb-0.14.5/config/manifests# kubectl get pod -n metallb-system
NAME                          READY   STATUS    RESTARTS   AGE
controller-56bb48dcd4-ctfhp   1/1     Running   0          2m32s
speaker-2fht9                 1/1     Running   0          2m32s
speaker-bjvfw                 1/1     Running   0          2m32s
speaker-d7kx9                 1/1     Running   0          2m32s
speaker-dbxtf                 1/1     Running   0          2m32s
speaker-fdw9s                 1/1     Running   0          2m32s
speaker-kxmfk                 1/1     Running   0          2m32s
speaker-p8qwv                 1/1     Running   0          2m32s
speaker-t975r                 1/1     Running   0          2m32s
root@k8s-cilium-master-01:~/metallb-0.14.5/config/manifests#
```

Configure MetalLB with an address pool (172.16.88.90-172.16.88.100) advertised in Layer 2 mode:

```
root@k8s-cilium-master-01:~# git clone https://github.com/iKubernetes/learning-k8s.git
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# cat metallb-ipaddresspool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: localip-pool
  namespace: metallb-system
spec:
  addresses:
  - 172.16.88.90-172.16.88.100
  autoAssign: true
  avoidBuggyIPs: true
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl apply -f metallb-ipaddresspool.yaml
ipaddresspool.metallb.io/localip-pool created
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# cat metallb-l2advertisement.yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: localip-pool-l2a
  namespace: metallb-system
spec:
  ipAddressPools:
  - localip-pool
  interfaces:
  - enp1s0
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl apply -f metallb-l2advertisement.yaml
l2advertisement.metallb.io/localip-pool-l2a created
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl get ipaddresspool -n metallb-system
NAME           AUTO ASSIGN   AVOID BUGGY IPS   ADDRESSES
localip-pool   true          true              ["172.16.88.90-172.16.88.100"]
root@k8s-cilium-master-01:~/learning-k8s/MetalLB#
```
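The L2Advertisement limits announcements to the interfaces listed under `interfaces`. So `enp1s0` has to be the NIC that carries 172.16.88.0/24 on the nodes. Below is a small sketch to confirm the name on each node. `find_iface_for_prefix` is a hypothetical helper that parses `ip -o -4 addr show` output from stdin. The plain string-prefix match is a simplification, not a real subnet test.

```bash
#!/usr/bin/env bash
# Print the interface(s) that hold an IPv4 address starting with the prefix.
# In "ip -o -4 addr show" output, field 2 is the interface name, field 3 is
# "inet" and field 4 is the address in CIDR form.
find_iface_for_prefix() {
  local prefix="$1"
  awk -v p="$prefix" '$3 == "inet" && index($4, p) == 1 { print $2 }'
}

# Usage on each node: ip -o -4 addr show | find_iface_for_prefix 172.16.88.
```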

Check the cilium-ingress LoadBalancer Service. It now has the external IP 172.16.88.90 from the MetalLB pool:

```
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl get svc -A
NAMESPACE        NAME                      TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)                      AGE
default          kubernetes                ClusterIP      10.96.0.1        <none>         443/TCP                      144m
kube-system      cilium-agent              ClusterIP      None             <none>         9964/TCP                     24m
kube-system      cilium-ingress            LoadBalancer   10.106.144.101   172.16.88.90   80:31461/TCP,443:30840/TCP   24m
kube-system      hubble-metrics            ClusterIP      None             <none>         9665/TCP                     24m
kube-system      hubble-peer               ClusterIP      10.102.70.39     <none>         443/TCP                      24m
kube-system      hubble-relay              ClusterIP      10.106.193.178   <none>         80/TCP                       24m
kube-system      hubble-ui                 ClusterIP      10.109.144.197   <none>         80/TCP                       24m
kube-system      kube-dns                  ClusterIP      10.96.0.10       <none>         53/UDP,53/TCP,9153/TCP       144m
metallb-system   metallb-webhook-service   ClusterIP      10.98.152.230    <none>         443/TCP                      7m55s
root@k8s-cilium-master-01:~/learning-k8s/MetalLB#
```

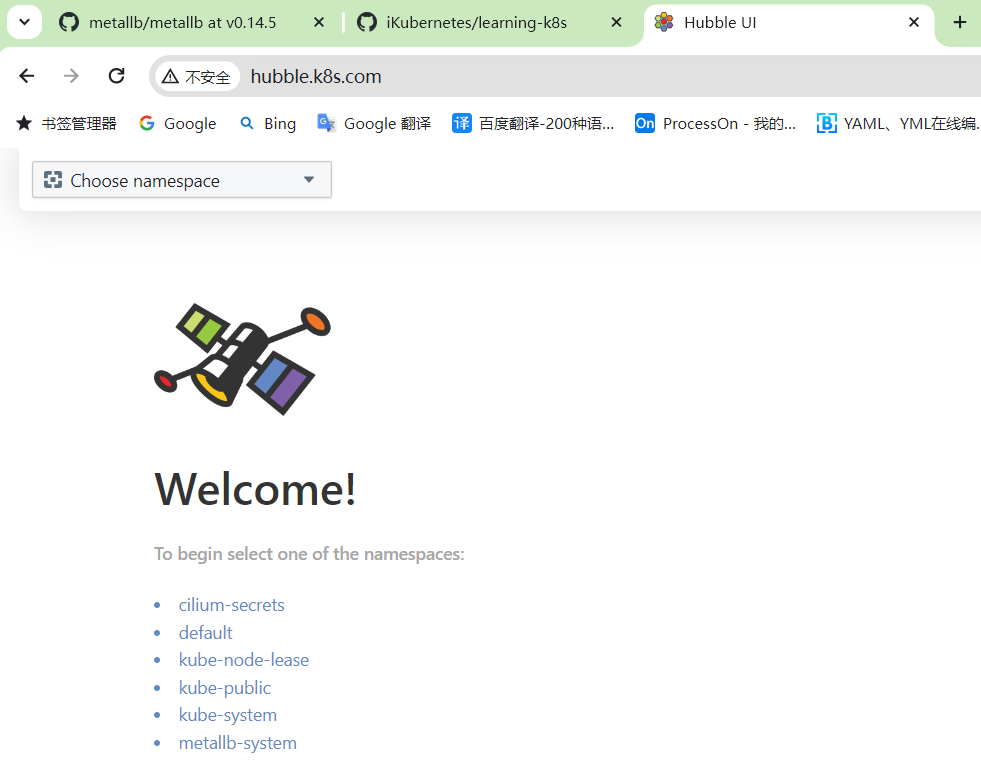

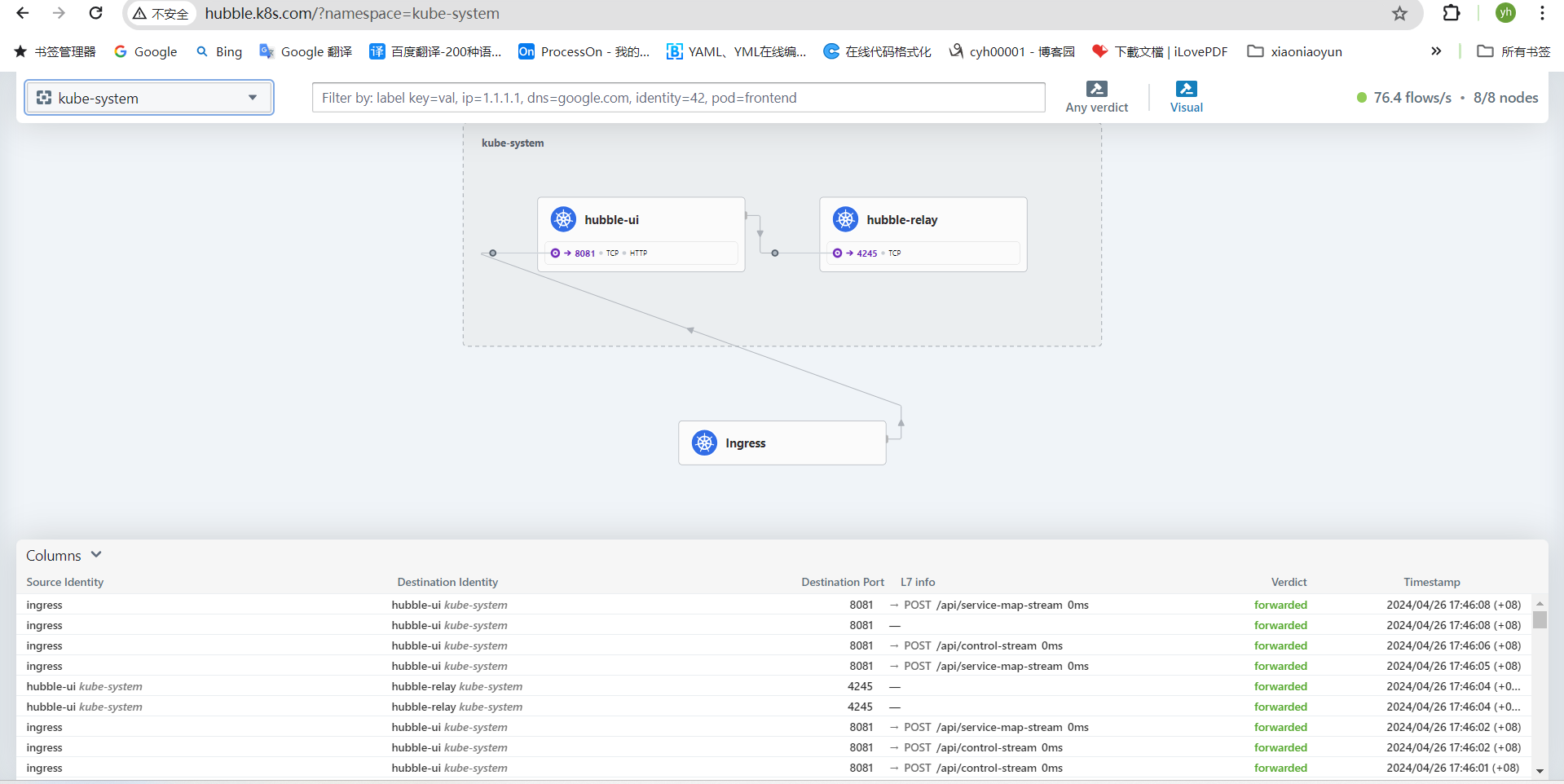

Enable Hubble UI

root@k8s-cilium-master-01:~/learning-k8s/MetalLB# cilium hubble enable --ui
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl get ingressclass
NAME CONTROLLER PARAMETERS AGE
cilium cilium.io/ingress-controller <none> 32m
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl create ingress hubble-ui --rule='hubble.k8s.com/*=hubble-ui:80' --class='cilium' -n kube-system --dry-run=client -o yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  creationTimestamp: null
  name: hubble-ui
  namespace: kube-system
spec:
  ingressClassName: cilium
  rules:
  - host: hubble.k8s.com
    http:
      paths:
      - backend:
          service:
            name: hubble-ui
            port:
              number: 80
        path: /
        pathType: Prefix
status:
  loadBalancer: {}
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl create ingress hubble-ui --rule='hubble.k8s.com/*=hubble-ui:80' --class='cilium' -n kube-system
ingress.networking.k8s.io/hubble-ui created
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl get ingress -n kube-system
NAME CLASS HOSTS ADDRESS PORTS AGE
hubble-ui cilium hubble.k8s.com 172.16.88.91 80 65s
root@k8s-cilium-master-01:~/learning-k8s/MetalLB# kubectl get svc -A
NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 157m
kube-system cilium-agent ClusterIP None <none> 9964/TCP 37m
kube-system cilium-ingress LoadBalancer 10.106.144.101 172.16.88.90 80:31461/TCP,443:30840/TCP 37m
kube-system cilium-ingress-hubble-ui LoadBalancer 10.108.201.184 172.16.88.91 80:31462/TCP,443:30975/TCP 95s
kube-system hubble-metrics ClusterIP None <none> 9665/TCP 37m
kube-system hubble-peer ClusterIP 10.102.70.39 <none> 443/TCP 37m
kube-system hubble-relay ClusterIP 10.106.193.178 <none> 80/TCP 37m
kube-system hubble-ui ClusterIP 10.109.144.197 <none> 80/TCP 37m
kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 157m
metallb-system metallb-webhook-service ClusterIP 10.98.152.230 <none> 443/TCP 21m

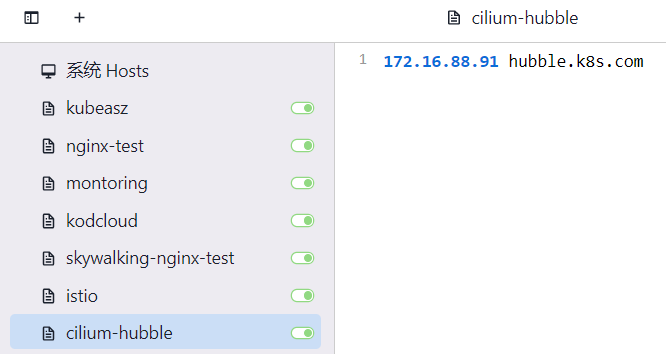

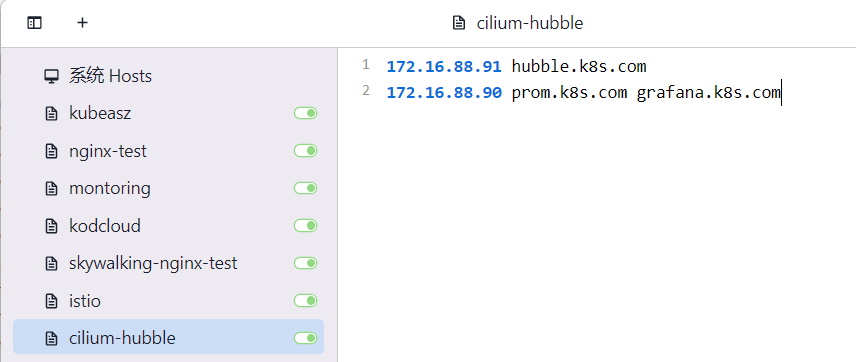

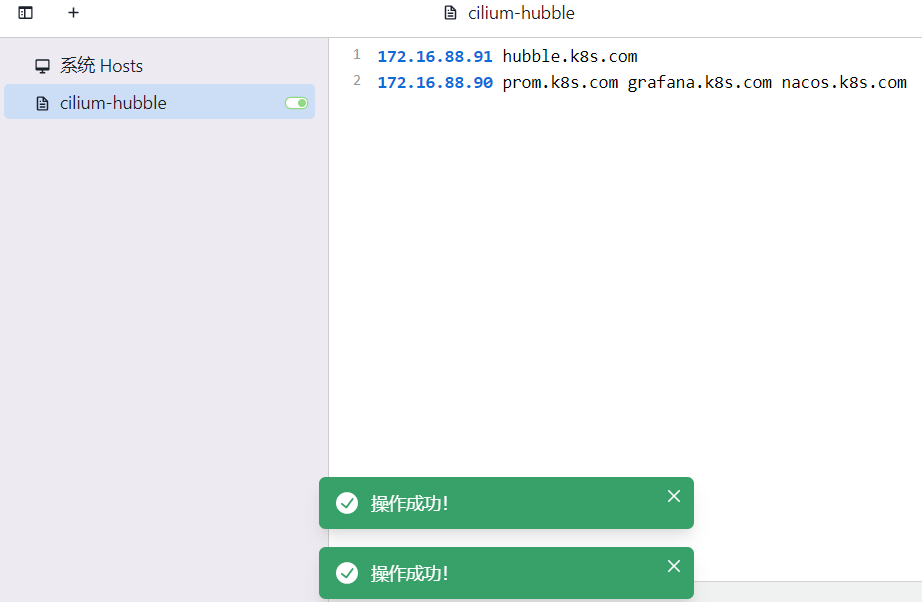

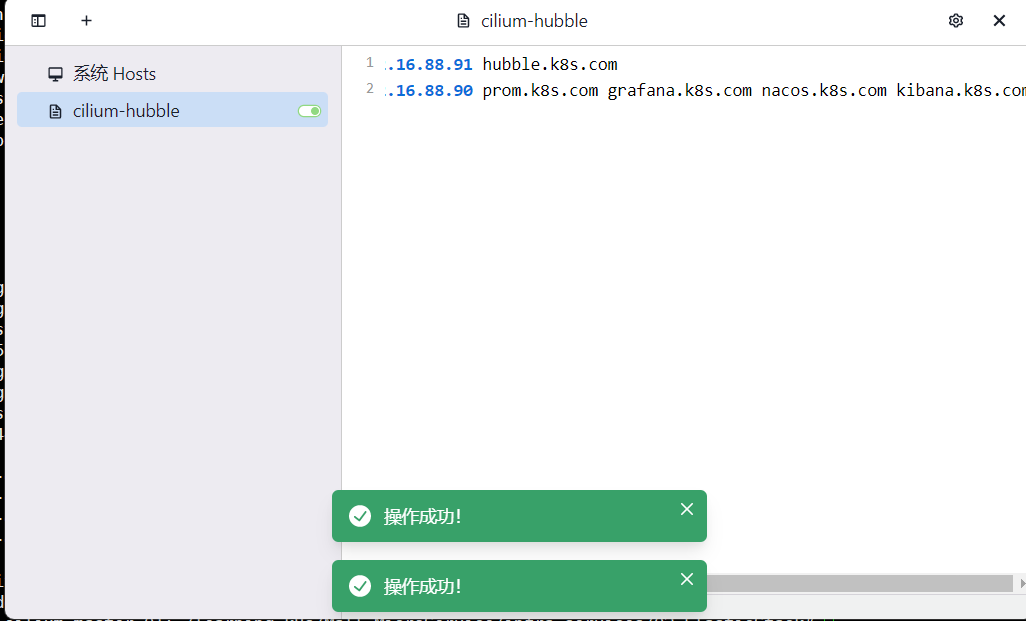

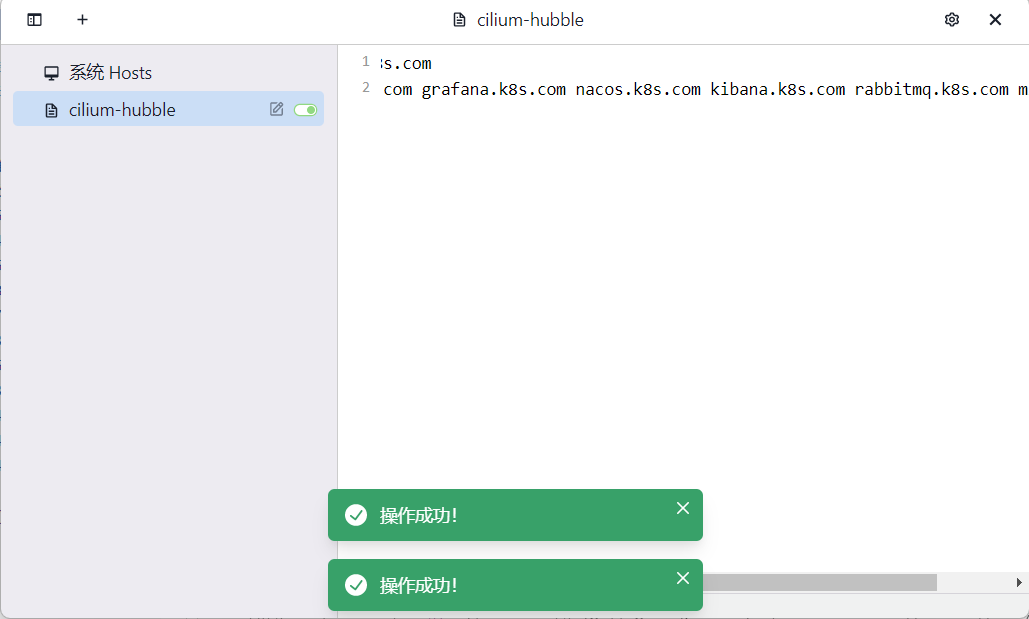

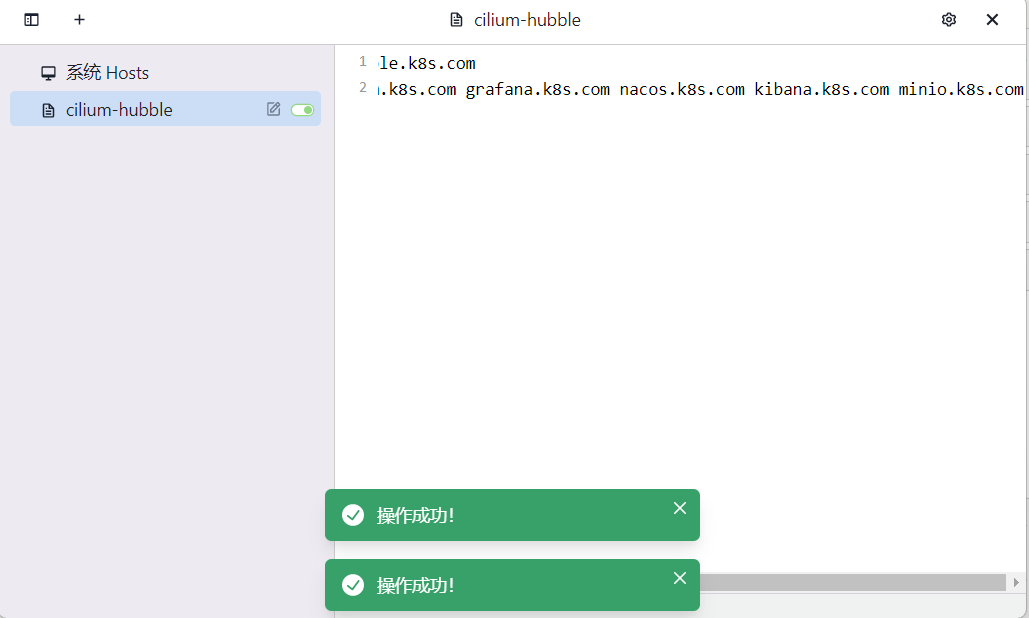

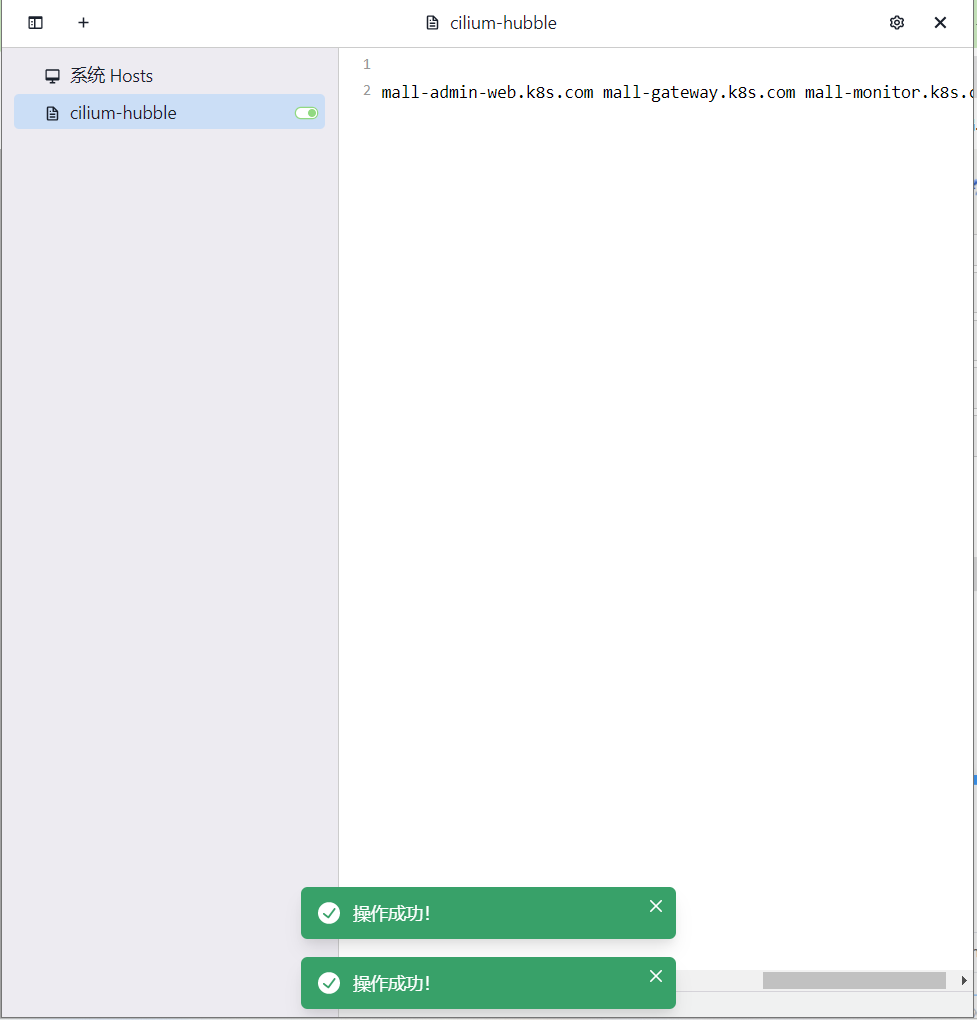

Add local name resolution

Edit the local hosts file:

172.16.88.91 hubble.k8s.com

Open in a browser: http://hubble.k8s.com
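Every Ingress exposed this way needs a hosts entry that points at its LoadBalancer address. Later in this document, that includes prom.k8s.com, prometheus.k8s.com and grafana.k8s.com.

The sketch below builds those lines from `kubectl get ingress -A -o json` output. It reads the standard Ingress fields `spec.rules[].host` and `status.loadBalancer.ingress[].ip`. The function name `hosts_entries` and the trimmed sample data are illustrative only.

```python
import json


def hosts_entries(ingress_list_json: str) -> list[str]:
    """Turn `kubectl get ingress -A -o json` output into /etc/hosts lines."""
    doc = json.loads(ingress_list_json)
    lines = []
    for item in doc.get("items", []):
        lb = item.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        ips = [entry["ip"] for entry in lb if "ip" in entry]
        if not ips:
            continue  # no address assigned yet (ADDRESS column still empty)
        rules = item.get("spec", {}).get("rules", [])
        hosts = [r["host"] for r in rules if r.get("host")]
        if hosts:
            lines.append(f"{ips[0]} {' '.join(hosts)}")
    return lines


# Trimmed sample modelled on the hubble-ui Ingress created above
sample = json.dumps({"items": [{
    "spec": {"rules": [{"host": "hubble.k8s.com"}]},
    "status": {"loadBalancer": {"ingress": [{"ip": "172.16.88.91"}]}},
}]})
print("\n".join(hosts_entries(sample)))  # 172.16.88.91 hubble.k8s.com
```

In practice, save the real output with `kubectl get ingress -A -o json > ing.json` and pass the file contents to the function.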

1.8 Install the OpenEBS storage environment


# apply this yaml
kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml

root@k8s-cilium-master-01:~# kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml
namespace/openebs created
serviceaccount/openebs-maya-operator created
clusterrole.rbac.authorization.k8s.io/openebs-maya-operator created
clusterrolebinding.rbac.authorization.k8s.io/openebs-maya-operator created
customresourcedefinition.apiextensions.k8s.io/blockdevices.openebs.io created
customresourcedefinition.apiextensions.k8s.io/blockdeviceclaims.openebs.io created
configmap/openebs-ndm-config created
daemonset.apps/openebs-ndm created
deployment.apps/openebs-ndm-operator created
deployment.apps/openebs-ndm-cluster-exporter created
service/openebs-ndm-cluster-exporter-service created
daemonset.apps/openebs-ndm-node-exporter created
service/openebs-ndm-node-exporter-service created
deployment.apps/openebs-localpv-provisioner created
storageclass.storage.k8s.io/openebs-hostpath created
storageclass.storage.k8s.io/openebs-device created
root@k8s-cilium-master-01:~# kubectl get pod -n openebs
NAME READY STATUS RESTARTS AGE
openebs-localpv-provisioner-6787b599b9-l9czz 1/1 Running 0 17m
openebs-ndm-2lgz5 1/1 Running 0 17m
openebs-ndm-6ptjr 1/1 Running 0 17m
openebs-ndm-77nwx 1/1 Running 0 17m
openebs-ndm-cluster-exporter-7bfd5746f4-52mnw 1/1 Running 0 17m
openebs-ndm-h9qj6 1/1 Running 0 17m
openebs-ndm-node-exporter-2dl7w 1/1 Running 0 17m
openebs-ndm-node-exporter-4b6kc 1/1 Running 0 17m
openebs-ndm-node-exporter-dbxgt 1/1 Running 0 17m
openebs-ndm-node-exporter-dk6w2 1/1 Running 0 17m
openebs-ndm-node-exporter-xc44x 1/1 Running 0 17m
openebs-ndm-operator-845b8858db-t2j25 1/1 Running 0 17m
openebs-ndm-t7cqx 1/1 Running 0 17m
root@k8s-cilium-master-01:~# kubectl get sc -A
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
openebs-device openebs.io/local Delete WaitForFirstConsumer false 18m
openebs-hostpath openebs.io/local Delete WaitForFirstConsumer false 18m

Set the default StorageClass

root@k8s-cilium-master-01:~# kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
storageclass.storage.k8s.io/openebs-hostpath patched
root@k8s-cilium-master-01:~# kubectl get sc -A
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
openebs-device openebs.io/local Delete WaitForFirstConsumer false 106m
openebs-hostpath (default) openebs.io/local Delete WaitForFirstConsumer false 106m
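Kubernetes treats a StorageClass as the default when its `storageclass.kubernetes.io/is-default-class` annotation is `"true"`. A PVC without a `storageClassName`, such as one that omits it from its manifest, then lands on openebs-hostpath. Keeping exactly one default is the safe setup.

The sketch below builds the same merge patch as the command above and lists the classes marked as default in `kubectl get sc -o json` output. The function names are hypothetical.

```python
import json

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_class_patch() -> str:
    """Merge-patch body equivalent to the `kubectl patch storageclass` above."""
    return json.dumps({"metadata": {"annotations": {DEFAULT_ANNOTATION: "true"}}})


def default_classes(sc_list_json: str) -> list[str]:
    """Names of StorageClasses marked as default in `kubectl get sc -o json`."""
    items = json.loads(sc_list_json).get("items", [])
    return [
        sc["metadata"]["name"]
        for sc in items
        if sc["metadata"].get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"
    ]


sample = json.dumps({"items": [
    {"metadata": {"name": "openebs-device"}},
    {"metadata": {"name": "openebs-hostpath",
                  "annotations": {DEFAULT_ANNOTATION: "true"}}},
]})
print(default_classes(sample))  # ['openebs-hostpath']
```

If `default_classes` returns more than one name, remove the annotation from the extra classes.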

Install an NFS StorageClass (optional)

Server side:
apt-get install -y nfs-kernel-server
mkdir -pv /data/nfs
echo "/data/nfs 172.16.88.0/24(rw,fsid=0,async,no_subtree_check,no_auth_nlm,insecure,no_root_squash)" >> /etc/exports
exportfs -arv

Client side (every Kubernetes node):
apt-get install -y nfs-common
[root@cyh-dell-rocky9-02 ~]# ansible 'vm' -m shell -a "apt-get install -y nfs-common"

Deploy csi-driver-nfs on Kubernetes:
root@k8s-cilium-master-01:~# git clone https://github.com/kubernetes-csi/csi-driver-nfs.git
cd /root/csi-driver-nfs/deploy
kubectl apply -f v4.6.0/

Create the StorageClass:
vi nfs-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  server: 172.16.88.81
  share: /data/nfs
reclaimPolicy: Delete
volumeBindingMode: Immediate

kubectl apply -f nfs-sc.yaml

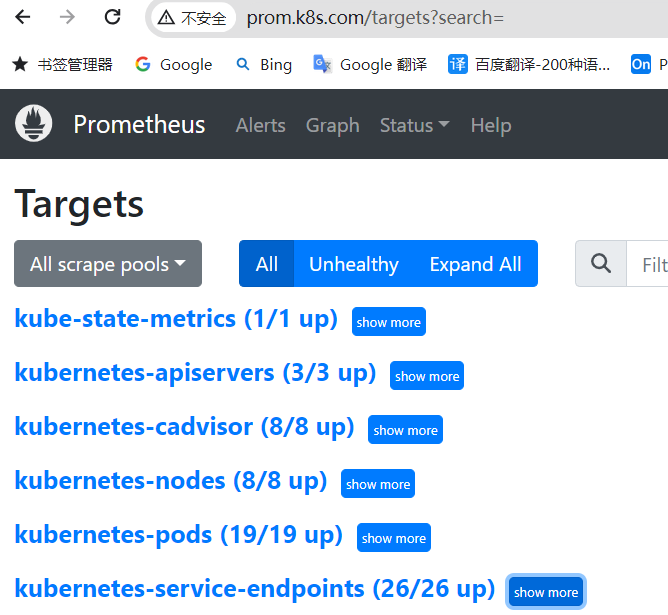

1.9 Install Prometheus monitoring

Deploy the Prometheus monitoring system

cd /root/learning-k8s/Mall-MicroService/infra-services-with-prometheus

kubectl apply -f namespace.yaml

kubectl apply -f prometheus-server/ -n prom

# ll -h prometheus-server/
total 40K
drwxr-xr-x 2 root root 4.0K Apr 29 06:59 ./
drwxr-xr-x 8 root root 4.0K Apr 28 08:00 ../
-rw-r--r-- 1 root root 6.0K Apr 28 08:00 prometheus-cfg.yaml
-rw-r--r-- 1 root root 1.9K Apr 28 08:00 prometheus-deploy.yaml
-rw-r--r-- 1 root root 685 Apr 29 06:59 prometheus-ingress.yaml
-rw-r--r-- 1 root root 728 Apr 28 08:00 prometheus-rbac.yaml
-rw-r--r-- 1 root root 7.3K Apr 28 08:00 prometheus-rules.yaml
-rw-r--r-- 1 root root 357 Apr 28 08:00 prometheus-svc.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: prom

---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: prom
data:
  prometheus.yml: |-
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 15s
    rule_files:
    - /etc/prometheus/prometheus.rules
    alerting:
      alertmanagers:
      - scheme: http
        static_configs:
        - targets:
          - "alertmanager.prom.svc:9093"
    scrape_configs:
    # Scrape config for prometheus node exporters.
    #
    - job_name: 'node-exporter'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      # Relabel regexes are fully anchored, so this must equal the Service name.
      - source_labels: [__meta_kubernetes_endpoints_name]
        regex: 'prometheus-node-exporter'
        action: keep

    # Scrape config for kube-state-metrics.
    #
    - job_name: 'kube-state-metrics'
      static_configs:
      - targets: ['kube-state-metrics.prom.svc:8080']

    # Scrape config for kubernetes apiservers.
    #
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    # Scrape config for nodes (kubelet).
    #
    - job_name: 'kubernetes-nodes'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    # Example scrape config for pods.
    #
    # The relabeling allows the actual pod scrape endpoint to be configured via the
    # following annotations:
    #
    # * `prometheus.io/scrape`: Only scrape pods that have a value of `true`
    # * `prometheus.io/path`: If the metrics path is not `/metrics` override this.
    # * `prometheus.io/port`: Scrape the pod on the indicated port instead of the
    #
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

    # Scrape config for Kubelet cAdvisor.
    #
    - job_name: 'kubernetes-cadvisor'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    # Scrape config for service endpoints.
    #
    # The relabeling allows the actual service scrape endpoint to be configured
    # via the following annotations:
    #
    # * `prometheus.io/scrape`: Only scrape services that have a value of `true`
    # * `prometheus.io/scheme`: If the metrics endpoint is secured then you will need
    # to set this to `https` & most likely set the `tls_config` of the scrape config.
    # * `prometheus.io/path`: If the metrics path is not `/metrics` override this.
    # * `prometheus.io/port`: If the metrics are exposed on a different port to the
    # service then set this appropriately.
    #
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
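Prometheus anchors every relabel `regex` at both ends. When a rule lists several `source_labels`, their values are joined with `;` before matching.

The sketch below reproduces two behaviours from the config above:

- The `__address__` rewrite shared by the kubernetes-pods and kubernetes-service-endpoints jobs.
- The exact-match behaviour of `action: keep`. Because of it, the node-exporter job's keep rule has to name the Service exactly (`prometheus-node-exporter`). A bare `node-exporter` regex would drop every endpoint.

`re.fullmatch` stands in for the anchoring, and Python's `\1` plays the role of Prometheus's `$1`. Prometheus itself uses RE2, which behaves the same for these patterns. The helper names are hypothetical.

```python
import re

# Same pattern as the kubernetes-pods / kubernetes-service-endpoints jobs.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_address(address: str, port_annotation: str) -> str:
    """Mimic action=replace on [__address__, ..._prometheus_io_port]."""
    joined = f"{address};{port_annotation}"  # default separator is ';'
    m = ADDRESS_RE.fullmatch(joined)         # relabel regexes are anchored
    if m is None:
        return address                       # no match: label left unchanged
    return m.expand(r"\1:\2")                # Prometheus writes this as $1:$2


def keep(value: str, regex: str) -> bool:
    """Mimic action=keep: the target survives only on a full match."""
    return re.fullmatch(regex, value) is not None


print(relabel_address("10.0.1.23:8080", "9090"))  # 10.0.1.23:9090
print(relabel_address("10.0.1.23", "9090"))       # 10.0.1.23:9090
print(relabel_address("10.0.1.23:8080", ""))      # 10.0.1.23:8080 (no annotation)
print(keep("prometheus-node-exporter", "node-exporter"))             # False
print(keep("prometheus-node-exporter", "prometheus-node-exporter"))  # True
```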

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: prom
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9090'
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.50.1
        imagePullPolicy: Always
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus
        - --storage.tsdb.retention=720h
        ports:
        - containerPort: 9090
          protocol: TCP
        resources:
          limits:
            memory: 2Gi
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /etc/prometheus/prometheus.rules
          name: prometheus-rules
          subPath: prometheus.rules
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
          items:
          - key: prometheus.yml
            path: prometheus.yml
            mode: 0644
      - name: prometheus-rules
        configMap:
          name: prometheus-rules
          items:
          - key: prometheus.rules
            path: prometheus.rules
            mode: 0644
      - name: prometheus-storage-volume
        emptyDir: {}
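The retention flag uses a Prometheus-style duration: `720h` keeps 30 days of TSDB blocks. Prometheus 2.x documents `--storage.tsdb.retention` as the deprecated spelling of `--storage.tsdb.retention.time`. The data directory is an `emptyDir`, so the history disappears whenever the Pod is deleted, whatever the retention setting.

The sketch below parses such durations to check values like `720h`, `15s` or `1h30m`. It is a simplified re-implementation, not Prometheus's own parser: it does not accept a bare `0`. The function name is hypothetical.

```python
import re
from datetime import timedelta

# Prometheus-style duration units, largest first, in milliseconds (1y = 365d).
_UNITS_MS = [
    ("y", 365 * 86_400_000), ("w", 7 * 86_400_000), ("d", 86_400_000),
    ("h", 3_600_000), ("m", 60_000), ("s", 1_000), ("ms", 1),
]
# Each unit may appear at most once and only in this order, e.g. "1h30m".
_DURATION_RE = re.compile(
    "".join(rf"(?:(?P<u_{unit}>\d+){unit})?" for unit, _ in _UNITS_MS)
)


def parse_duration(text: str) -> timedelta:
    """Parse strings such as '720h', '15s' or '1h30m'."""
    match = _DURATION_RE.fullmatch(text)
    if not text or match is None:
        raise ValueError(f"not a Prometheus-style duration: {text!r}")
    total_ms = sum(
        int(match.group(f"u_{unit}")) * factor
        for unit, factor in _UNITS_MS
        if match.group(f"u_{unit}") is not None
    )
    return timedelta(milliseconds=total_ms)


print(parse_duration("720h").days)            # 30
print(parse_duration("15s").total_seconds())  # 15.0
print(parse_duration("1h30m"))                # 1:30:00
```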

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus
  namespace: prom
  labels:
    app: prometheus
  annotations:
    ingress.cilium.io/loadbalancer-mode: 'shared'
    ingress.cilium.io/service-type: 'LoadBalancer'
spec:
  ingressClassName: 'cilium'
  rules:
  - host: prom.k8s.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus
            port:
              number: 9090
  - host: prometheus.k8s.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus
            port:
              number: 9090

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  - networking.k8s.io
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: prom
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: prom

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: prom
data:
  prometheus.rules: |
    groups:
    - name: kube-state-metrics
      rules:
      - alert: KubernetesNodeReady
        expr: kube_node_status_condition{condition="Ready",status="true"} == 0
        for: 10m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes Node ready (instance {{ $labels.instance }})
          description: "Node {{ $labels.node }} has been unready for a long time\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesMemoryPressure
        expr: kube_node_status_condition{condition="MemoryPressure",status="true"} == 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes memory pressure (instance {{ $labels.instance }})
          description: "{{ $labels.node }} has MemoryPressure condition\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesDiskPressure
        expr: kube_node_status_condition{condition="DiskPressure",status="true"} == 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes disk pressure (instance {{ $labels.instance }})
          description: "{{ $labels.node }} has DiskPressure condition\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesNetworkUnavailable
        expr: kube_node_status_condition{condition="NetworkUnavailable",status="true"} == 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes network unavailable (instance {{ $labels.instance }})
          description: "{{ $labels.node }} has NetworkUnavailable condition\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesOutOfCapacity
        expr: sum by (node) ((kube_pod_status_phase{phase="Running"} == 1) + on(uid) group_left(node) (0 * kube_pod_info{pod_template_hash=""})) / sum by (node) (kube_node_status_allocatable{resource="pods"}) * 100 > 90
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes out of capacity (instance {{ $labels.instance }})
          description: "{{ $labels.node }} is out of capacity\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesContainerOomKiller
        expr: (kube_pod_container_status_restarts_total - kube_pod_container_status_restarts_total offset 10m >= 1) and ignoring (reason) min_over_time(kube_pod_container_status_last_terminated_reason{reason="OOMKilled"}[10m]) == 1
        for: 0m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes container oom killer (instance {{ $labels.instance }})
          description: "Container {{ $labels.container }} in pod {{ $labels.namespace }}/{{ $labels.pod }} has been OOMKilled {{ $value }} times in the last 10 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesPersistentvolumeclaimPending
        expr: kube_persistentvolumeclaim_status_phase{phase="Pending"} == 1
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes PersistentVolumeClaim pending (instance {{ $labels.instance }})
          description: "PersistentVolumeClaim {{ $labels.namespace }}/{{ $labels.persistentvolumeclaim }} is pending\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesVolumeOutOfDiskSpace
        expr: kubelet_volume_stats_available_bytes / kubelet_volume_stats_capacity_bytes * 100 < 10
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes Volume out of disk space (instance {{ $labels.instance }})
          description: "Volume is almost full (< 10% left)\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesPersistentvolumeError
        expr: kube_persistentvolume_status_phase{phase=~"Failed|Pending", job="kube-state-metrics"} > 0
        for: 0m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes PersistentVolume error (instance {{ $labels.instance }})
          description: "Persistent volume is in bad state\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesPodNotHealthy
        expr: min_over_time(sum by (namespace, pod) (kube_pod_status_phase{phase=~"Pending|Unknown|Failed"})[15m:1m]) > 0
        for: 0m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes Pod not healthy (instance {{ $labels.instance }})
          description: "Pod has been in a non-ready state for longer than 15 minutes.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesPodCrashLooping
        expr: increase(kube_pod_container_status_restarts_total[1m]) > 3
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes pod crash looping (instance {{ $labels.instance }})
          description: "Pod {{ $labels.pod }} is crash looping\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesApiServerErrors
        expr: sum(rate(apiserver_request_total{job="apiserver",code=~"^(?:5..)$"}[1m])) / sum(rate(apiserver_request_total{job="apiserver"}[1m])) * 100 > 3
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes API server errors (instance {{ $labels.instance }})
          description: "Kubernetes API server is experiencing high error rate\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesApiClientErrors
        expr: (sum(rate(rest_client_requests_total{code=~"(4|5).."}[1m])) by (instance, job) / sum(rate(rest_client_requests_total[1m])) by (instance, job)) * 100 > 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes API client errors (instance {{ $labels.instance }})
          description: "Kubernetes API client is experiencing high error rate\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesClientCertificateExpiresNextWeek
        expr: apiserver_client_certificate_expiration_seconds_count{job="apiserver"} > 0 and histogram_quantile(0.01, sum by (job, le) (rate(apiserver_client_certificate_expiration_seconds_bucket{job="apiserver"}[5m]))) < 7*24*60*60
        for: 0m
        labels:
          severity: warning
        annotations:
          summary: Kubernetes client certificate expires next week (instance {{ $labels.instance }})
          description: "A client certificate used to authenticate to the apiserver is expiring next week.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
      - alert: KubernetesClientCertificateExpiresSoon
        expr: apiserver_client_certificate_expiration_seconds_count{job="apiserver"} > 0 and histogram_quantile(0.01, sum by (job, le) (rate(apiserver_client_certificate_expiration_seconds_bucket{job="apiserver"}[5m]))) < 24*60*60
        for: 0m
        labels:
          severity: critical
        annotations:
          summary: Kubernetes client certificate expires soon (instance {{ $labels.instance }})
          description: "A client certificate used to authenticate to the apiserver is expiring in less than 24.0 hours.\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
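Several of the rules above reduce to plain threshold arithmetic:

- KubernetesOutOfCapacity fires when Running Pods exceed 90% of a node's allocatable Pod slots.
- KubernetesVolumeOutOfDiskSpace fires when free space drops below 10%.
- The certificate rules compare the remaining lifetime against 7*24*60*60 = 604800 s and 24*60*60 = 86400 s.

The two certificate rules have different `alertname`s. The AlertManager inhibit rule configured below requires equal `alertname` and `job`, so it will not mute the warning once the critical alert also fires.

The sketch below models those thresholds. It ignores the `for:` hold times and the PromQL joins and quantiles, and the function names are hypothetical.

```python
WEEK_SECONDS = 7 * 24 * 60 * 60  # 604800, KubernetesClientCertificateExpiresNextWeek
DAY_SECONDS = 24 * 60 * 60       # 86400, KubernetesClientCertificateExpiresSoon


def out_of_capacity(running_pods: int, allocatable_pods: int) -> bool:
    """KubernetesOutOfCapacity: running / allocatable * 100 > 90 (per node)."""
    return running_pods / allocatable_pods * 100 > 90


def volume_almost_full(available_bytes: int, capacity_bytes: int) -> bool:
    """KubernetesVolumeOutOfDiskSpace: available / capacity * 100 < 10."""
    return available_bytes / capacity_bytes * 100 < 10


def certificate_alerts(seconds_left: float) -> list[str]:
    """Both certificate rules fire once less than a day is left."""
    fired = []
    if seconds_left < WEEK_SECONDS:
        fired.append("KubernetesClientCertificateExpiresNextWeek")
    if seconds_left < DAY_SECONDS:
        fired.append("KubernetesClientCertificateExpiresSoon")
    return fired


# 110 is the kubelet's default maxPods value
print(out_of_capacity(100, 110), out_of_capacity(90, 110))  # True False
GiB, MiB = 1024 ** 3, 1024 ** 2
print(volume_almost_full(400 * MiB, 5 * GiB))  # True (about 7.8% free)
print(certificate_alerts(3 * DAY_SECONDS))     # ['KubernetesClientCertificateExpiresNextWeek']
```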

---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: prom
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '9090'
  labels:
    app: prometheus
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30090
    protocol: TCP
  selector:
    app: prometheus
    component: server

Deploy node-exporter

kubectl apply -f node_exporter/

# ll -h node_exporter/
total 16K
drwxr-xr-x 2 root root 4.0K Apr 28 08:00 ./
drwxr-xr-x 8 root root 4.0K Apr 28 08:00 ../
-rw-r--r-- 1 root root 782 Apr 28 08:00 node-exporter-ds.yaml
-rw-r--r-- 1 root root 383 Apr 28 08:00 node-exporter-svc.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: prometheus-node-exporter
  namespace: prom
  labels:
    app: prometheus
    component: node-exporter
spec:
  selector:
    matchLabels:
      app: prometheus
      component: node-exporter
  template:
    metadata:
      name: prometheus-node-exporter
      labels:
        app: prometheus
        component: node-exporter
    spec:
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
      # kubeadm (v1.25+) taints control-plane nodes only with this key
      - effect: NoSchedule
        key: node-role.kubernetes.io/control-plane
      containers:
      - image: prom/node-exporter:v1.7.0
        #image: registry.magedu.com/prom/node-exporter:v1.5.0
        name: prometheus-node-exporter
        ports:
        - name: prom-node-exp
          containerPort: 9100
          hostPort: 9100
      hostNetwork: true
      hostPID: true
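A toleration for the legacy `node-role.kubernetes.io/master` taint alone does not cover the control-plane nodes of this 1.28 cluster. This assumes the masters carry the kubeadm default taint `node-role.kubernetes.io/control-plane:NoSchedule`, which is the only control-plane taint kubeadm applies from v1.25. Under that assumption, node-exporter reaches the three masters only when the `control-plane` key is tolerated as well.

The sketch below is a simplified model of the documented taint/toleration matching rules. It ignores `tolerationSeconds` and the tolerations the DaemonSet controller adds on its own. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Taint:
    key: str
    effect: str           # NoSchedule, PreferNoSchedule or NoExecute
    value: str = ""


@dataclass
class Toleration:
    key: str = ""         # empty key + operator Exists tolerates every taint
    operator: str = "Equal"
    value: str = ""
    effect: str = ""      # empty effect matches all effects


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key in ("", taint.key)
    return tol.key == taint.key and tol.value == taint.value


def can_schedule(tolerations: list[Toleration], taints: list[Taint]) -> bool:
    """Every hard (NoSchedule/NoExecute) taint must be tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


control_plane_node = [Taint("node-role.kubernetes.io/control-plane", "NoSchedule")]
legacy_only = [Toleration(key="node-role.kubernetes.io/master", effect="NoSchedule")]
with_cp = legacy_only + [
    Toleration(key="node-role.kubernetes.io/control-plane", effect="NoSchedule")
]

print(can_schedule(legacy_only, control_plane_node))  # False
print(can_schedule(with_cp, control_plane_node))      # True
print(can_schedule(legacy_only, []))                  # True (untainted worker)
```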

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  name: prometheus-node-exporter
  namespace: prom
  labels:
    app: prometheus
    component: node-exporter
spec:
  clusterIP: None
  ports:
  - name: prometheus-node-exporter
    port: 9100
    protocol: TCP
  selector:
    app: prometheus
    component: node-exporter
  type: ClusterIP

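Deploy Kube-State-Metrics

Deploy kube-state-metrics, which exposes metrics about the state of Kubernetes cluster objects (Nodes, Pods, Deployments, PVCs and so on).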

kubectl apply -f kube-state-metrics/

# ll -h kube-state-metrics/
total 20K
drwxr-xr-x 2 root root 4.0K Apr 28 08:00 ./
drwxr-xr-x 8 root root 4.0K Apr 28 08:00 ../
-rw-r--r-- 1 root root 523 Apr 28 08:00 kube-state-metrics-deploy.yaml
-rw-r--r-- 1 root root 1007 Apr 28 08:00 kube-state-metrics-rbac.yaml
-rw-r--r-- 1 root root 318 Apr 28 08:00 kube-state-metrics-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: gcmirrors/kube-state-metrics:v1.9.5
        #image: registry.magedu.com/gcmirrors/kube-state-metrics-amd64:v1.7.1
        ports:
        - containerPort: 8080

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: prom
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
rules:
- apiGroups: [""]
  resources: ["nodes", "pods", "services", "resourcequotas", "replicationcontrollers", "limitranges", "persistentvolumeclaims", "persistentvolumes", "namespaces", "endpoints"]
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources: ["daemonsets", "deployments", "replicasets", "statefulsets"]
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources: ["cronjobs", "jobs"]
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources: ["horizontalpodautoscalers"]
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: prom

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '8080'
  name: kube-state-metrics
  namespace: prom
  labels:
    app: kube-state-metrics
spec:
  ports:
  - name: kube-state-metrics
    port: 8080
    protocol: TCP
  selector:
    app: kube-state-metrics

Deploy AlertManager

Deploy AlertManager to deliver the alerts raised by Prometheus Server.

kubectl apply -f alertmanager/

# ll -h alertmanager/
total 28K
drwxr-xr-x 2 root root 4.0K Apr 28 08:00 ./
drwxr-xr-x 8 root root 4.0K Apr 28 08:00 ../
-rw-r--r-- 1 root root 1.3K Apr 28 08:00 alertmanager-cfg.yaml
-rw-r--r-- 1 root root 1.2K Apr 28 08:00 alertmanager-deployment.yaml
-rw-r--r-- 1 root root 274 Apr 28 08:00 alertmanager-service.yaml
-rw-r--r-- 1 root root 4.2K Apr 28 08:00 alertmanager-templates-cfg.yaml

# Maintainer: MageEdu <mage@magedu.com>
#
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: alertmanager-config
  namespace: prom
data:
  config.yml: |-
    global:
    templates:
    - '/etc/alertmanager-templates/*.tmpl'
    route:
      receiver: ops-team
      group_by: ['alertname', 'priority']
      group_wait: 10s
      repeat_interval: 30m
      routes:
      - receiver: ops-manager
        match:
          severity: critical
        group_wait: 10s
        repeat_interval: 30m
    receivers:
    - name: ops-team
      email_configs:
      - to: ops@magedu.com
        send_resolved: false
        from: monitoring@magedu.com
        smarthost: smtp.magedu.com:25
        require_tls: false
        headers:
          subject: "{{ .Status | toUpper }} {{ .CommonLabels.env }}:{{ .CommonLabels.cluster }} {{ .CommonLabels.alertname }}"
        html: '{{ template "email.default.html" . }}'
    - name: ops-manager
      webhook_configs:
      - url: http://prometheus-webhook-dingtalk:8060/dingtalk/webhook1/send
        send_resolved: true
    inhibit_rules:
    - source_match:
        severity: 'critical'
      target_match:
        severity: 'warning'
      equal: ['alertname', 'job']
---
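With this configuration, every alert goes to `ops-team` (email) unless it carries `severity=critical`. Critical alerts match the child route and go to `ops-manager` (the DingTalk webhook). The child route does not set `continue`, so matching stops there. Separately, the inhibit rule mutes a `warning` alert while a `critical` alert with the same `alertname` and `job` is firing.

The sketch below is a simplified model of those two decisions. It ignores grouping, timing and regex matchers, and the names are hypothetical.

```python
ROUTES = [({"severity": "critical"}, "ops-manager")]  # child routes, in order
DEFAULT_RECEIVER = "ops-team"                         # root route receiver
EQUAL_LABELS = ("alertname", "job")                   # inhibit_rules.equal


def receiver_for(labels: dict[str, str]) -> str:
    for match, receiver in ROUTES:
        if all(labels.get(k) == v for k, v in match.items()):
            return receiver  # continue is false: stop at the first match
    return DEFAULT_RECEIVER


def inhibited(target: dict[str, str], firing: list[dict[str, str]]) -> bool:
    """True if a firing critical alert mutes this warning alert."""
    if target.get("severity") != "warning":
        return False
    return any(
        src.get("severity") == "critical"
        and all(src.get(l, "") == target.get(l, "") for l in EQUAL_LABELS)
        for src in firing
    )


crash = {"alertname": "KubernetesPodCrashLooping", "severity": "warning",
         "job": "kube-state-metrics"}
node = {"alertname": "KubernetesNodeReady", "severity": "critical",
        "job": "kube-state-metrics"}
print(receiver_for(crash), receiver_for(node))  # ops-team ops-manager
print(inhibited(crash, [node]))                 # False: alertname differs
```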

apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      name: alertmanager
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: prom/alertmanager:v0.27.0
        #image: registry.magedu.com/prom/alertmanager:v0.25.0
        args:
        - "--config.file=/etc/alertmanager/config.yml"
        - "--storage.path=/alertmanager"
        ports:
        - name: alertmanager
          containerPort: 9093
        resources:
          requests:
            cpu: 500m
            memory: 500M
          limits:
            cpu: 1
            memory: 1Gi
        volumeMounts:
        - name: config-volume
          mountPath: /etc/alertmanager
        - name: templates-volume
          mountPath: /etc/alertmanager-templates
        - name: alertmanager
          mountPath: /alertmanager
      volumes:
      - name: config-volume
        configMap:
          name: alertmanager-config
      - name: templates-volume
        configMap:
          name: alertmanager-templates
      - name: alertmanager
        emptyDir: {}

apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: prom
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '9093'
spec:
  selector:
    app: alertmanager
  type: LoadBalancer
  ports:
  - port: 9093
    targetPort: 9093

# Maintainer: MageEdu <mage@magedu.com>
#
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-templates
  namespace: prom
data:
  dingtalk_template.tmpl: "{{ define \"dingtalk.default.message\" }}\n{{- if gt (len .Alerts.Firing) 0 -}}\n{{- range $index, $alert := .Alerts -}}\n{{- if eq $index 0 }}\n========= 监控报警 ========= \n告警状态:{{ .Status }} \n告警级别:{{ .Labels.severity }} \n告警类型:{{ $alert.Labels.alertname }} \n故障主机: {{ $alert.Labels.instance }} \ \n告警主题: {{ $alert.Annotations.summary }} \n告警详情: {{ $alert.Annotations.message }}{{ $alert.Annotations.description}} \n触发阈值:{{ .Annotations.value }} \n故障时间: {{ ($alert.StartsAt.Add 28800e9).Format \"2006-01-02 15:04:05\" }} \n========= = end = ========= \n{{- end }}\n{{- end }}\n{{- end }}\n\n{{- if gt (len .Alerts.Resolved) 0 -}}\n{{- range $index, $alert := .Alerts -}}\n{{- if eq $index 0 }}\n========= 异常恢复 ========= \n告警类型:{{ .Labels.alertname }} \n告警状态:{{ .Status }} \n告警主题: {{ $alert.Annotations.summary }} \n告警详情: {{ $alert.Annotations.message }}{{ $alert.Annotations.description}} \ \n故障时间: {{ ($alert.StartsAt.Add 28800e9).Format \"2006-01-02 15:04:05\" }} \n恢复时间: {{ ($alert.EndsAt.Add 28800e9).Format \"2006-01-02 15:04:05\" }} \n{{- if gt (len $alert.Labels.instance) 0 }} \n实例信息: {{ $alert.Labels.instance }} \n{{- end }} \n========= = end = ========= \n{{- end }}\n{{- end }}\n{{- end }}\n{{- end }}\n"
  email_template.tmpl: "{{ define \"email.default.html\" }}\n{{- if gt (len .Alerts.Firing) 0 -}}\n{{ range .Alerts }}\n=========start==========<br>\n告警程序: prometheus_alert <br>\n告警级别: {{ .Labels.severity }} <br>\n告警类型: {{ .Labels.alertname }} <br>\n告警主机: {{ .Labels.instance }} <br>\n告警主题: {{ .Annotations.summary }} <br>\n告警详情: {{ .Annotations.description }} <br>\n触发时间: {{ .StartsAt.Format \"2006-01-02 15:04:05\" }} <br>\n=========end==========<br>\n{{ end }}{{ end -}}\n \n{{- if gt (len .Alerts.Resolved) 0 -}}\n{{ range .Alerts }}\n=========start==========<br>\n告警程序: prometheus_alert <br>\n告警级别: {{ .Labels.severity }} <br>\n告警类型: {{ .Labels.alertname }} <br>\n告警主机: {{ .Labels.instance }} <br>\n告警主题: {{ .Annotations.summary }} <br>\n告警详情: {{ .Annotations.description }} <br>\n触发时间: {{ .StartsAt.Format \"2006-01-02 15:04:05\" }} <br>\n恢复时间: {{ .EndsAt.Format \"2006-01-02 15:04:05\" }} <br>\n=========end==========<br>\n{{ end }}{{ end -}}\n{{- end }}\n"
  wechat_template.tmpl: |
    {{ define "wechat.default.message" }}
    {{- if gt (len .Alerts.Firing) 0 -}}
    {{- range $index, $alert := .Alerts -}}
    {{- if eq $index 0 }}
    ========= 监控报警 =========
    告警状态:{{ .Status }}
    告警级别:{{ .Labels.severity }}
    告警类型:{{ $alert.Labels.alertname }}
    故障主机: {{ $alert.Labels.instance }}
    告警主题: {{ $alert.Annotations.summary }}
    告警详情: {{ $alert.Annotations.message }}{{ $alert.Annotations.description}};
    触发阈值:{{ .Annotations.value }}
    故障时间: {{ ($alert.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}
    ========= = end = =========
    {{- end }}
    {{- end }}
    {{- end }}
    {{- if gt (len .Alerts.Resolved) 0 -}}
    {{- range $index, $alert := .Alerts -}}
    {{- if eq $index 0 }}
    ========= 异常恢复 =========
    告警类型:{{ .Labels.alertname }}
    告警状态:{{ .Status }}
    告警主题: {{ $alert.Annotations.summary }}
    告警详情: {{ $alert.Annotations.message }}{{ $alert.Annotations.description}};
    故障时间: {{ ($alert.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}
    恢复时间: {{ ($alert.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}
    {{- if gt (len $alert.Labels.instance) 0 }}
    实例信息: {{ $alert.Labels.instance }}
    {{- end }}
    ========= = end = =========
    {{- end }}
    {{- end }}
    {{- end }}
    {{- end }}
---
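The DingTalk and WeChat templates shift timestamps with `.Add 28800e9`. Go's `time.Duration` counts nanoseconds, so 28800e9 ns is 28800 s, or 8 hours. Assuming `StartsAt`/`EndsAt` arrive in UTC, as they typically do, the shift turns them into UTC+8 wall-clock time. `"2006-01-02 15:04:05"` is Go's reference layout for year-month-day hour:minute:second. The email template applies no offset, so it prints the timestamp as received.

The Python equivalent below shows what a given alert time will look like in those messages. The helper name `template_time` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

GO_OFFSET_NS = 28800e9                          # the literal used in the templates
OFFSET = timedelta(seconds=GO_OFFSET_NS / 1e9)  # 8 hours


def template_time(starts_at_utc: datetime) -> str:
    """What `(.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05"` renders."""
    # Go layout 2006-01-02 15:04:05 corresponds to strftime %Y-%m-%d %H:%M:%S
    return (starts_at_utc + OFFSET).strftime("%Y-%m-%d %H:%M:%S")


t = datetime(2024, 4, 29, 6, 59, 0, tzinfo=timezone.utc)
print(OFFSET)            # 8:00:00
print(template_time(t))  # 2024-04-29 14:59:00
```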

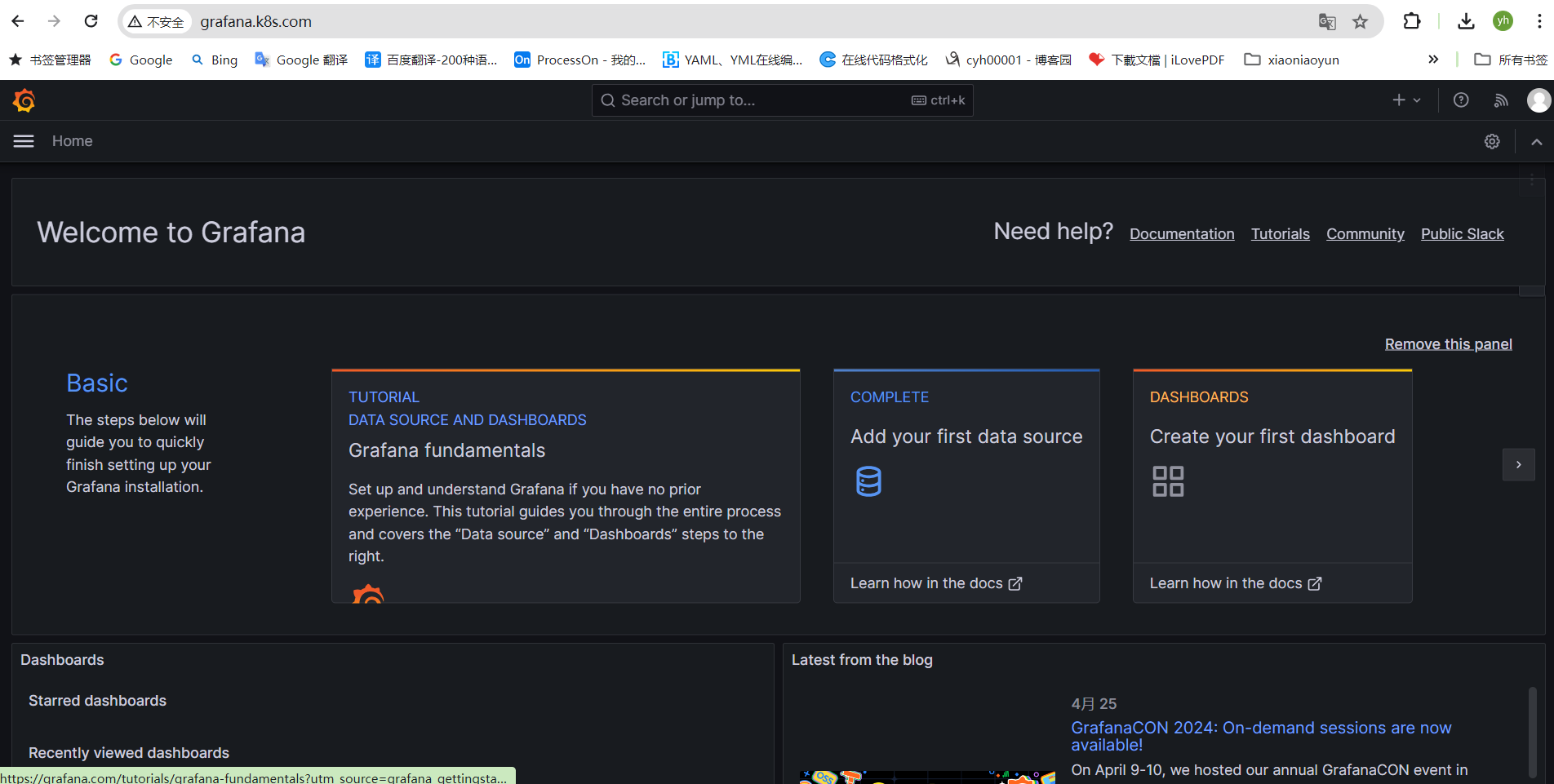

Install Grafana

# kubectl apply -f grafana/

# ll -h grafana/
total 28K
drwxr-xr-x 2 root root 4.0K Apr 28 08:00 ./
drwxr-xr-x 8 root root 4.0K Apr 28 08:00 ../
-rw-r--r-- 1 root root 532 Apr 28 08:00 01-grafana-cfg.yaml
-rw-r--r-- 1 root root 270 Apr 28 08:00 02-grafana-service.yaml
-rw-r--r-- 1 root root 210 Apr 28 08:00 03-grafana-pvc.yaml
-rw-r--r-- 1 root root 1.6K Apr 28 08:00 04-grafana-deployment.yaml
-rw-r--r-- 1 root root 478 Apr 28 08:00 05-grafana-ingress.yaml

# Maintainer: MageEdu <mage@magedu.com>
apiVersion: v1
kind: ConfigMap
metadata:
  name: grafana-datasources
  namespace: prom
data:
  prometheus.yaml: |-
    {
        "apiVersion": 1,
        "datasources": [
            {
                "access": "proxy",
                "editable": true,
                "name": "prometheus",
                "orgId": 1,
                "type": "prometheus",
                "url": "http://prometheus.prom.svc.cluster.local.:9090",
                "version": 1
            }
        ]
    }
---

---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: prom
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '3000'
spec:
  selector:
    app: grafana
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
---

# Maintainer: MageEdu <mage@magedu.com>
#
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      name: grafana
      labels:
        app: grafana
    spec:
      initContainers:
      - name: fix-permissions
        image: alpine
        command: ["sh", "-c", "chown -R 472:472 /var/lib/grafana/"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: grafana-storage
          mountPath: /var/lib/grafana
      containers:
      - name: grafana
        image: grafana/grafana:10.2.5
        #image: registry.magedu.com/grafana/grafana:9.5.13
        imagePullPolicy: IfNotPresent
        ports:
        - name: grafana
          containerPort: 3000
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - mountPath: /etc/grafana/provisioning/datasources
          name: grafana-datasources
          readOnly: false
        #- mountPath: /etc/grafana/provisioning/dashboards
        #  name: grafana-dashboards
        - mountPath: /var/lib/grafana
          name: grafana-storage
      volumes:
      - name: grafana-datasources
        configMap:
          defaultMode: 420
          name: grafana-datasources
      #- configMap:
      #    defaultMode: 420
      #    name: grafana-dashboards
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-pvc

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: prom
  labels:
    app: grafana
  annotations:
    ingress.cilium.io/loadbalancer-mode: 'shared'
    ingress.cilium.io/service-type: 'LoadBalancer'
spec:
  ingressClassName: 'cilium'
  rules:
  - host: grafana.k8s.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
```

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: prom
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: openebs-hostpath
```
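The Grafana manifests can be applied as a directory, then you can wait for the Deployment to finish rolling out. The storage classes shown below use `WaitForFirstConsumer`, so `grafana-pvc` stays Pending until the Grafana pod is scheduled. That is expected, not an error. Below is a minimal sketch. It assumes the files sit in `./grafana` and the current kubeconfig points at this cluster. The function name `deploy_grafana` and the 180s timeout are hypothetical choices.

```shell
#!/bin/sh
# Hypothetical helper: apply every manifest in a directory, then block until
# the Grafana Deployment has finished rolling out (or the timeout expires).
deploy_grafana() {
  manifest_dir=${1:-grafana/}
  kubectl apply -f "$manifest_dir" || return 1
  kubectl -n prom rollout status deployment/grafana --timeout=180s
}
```

Usage: `deploy_grafana grafana/`. A non-zero exit means either the apply failed or the rollout did not finish within the timeout.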

Status after installation:

```
root@k8s-cilium-master-01:~# kubectl get sc -A
NAME                         PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
openebs-device               openebs.io/local   Delete          WaitForFirstConsumer   false                  145m
openebs-hostpath (default)   openebs.io/local   Delete          WaitForFirstConsumer   false                  145m
root@k8s-cilium-master-01:~# kubectl get pvc -A
NAMESPACE   NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
prom        grafana-pvc   Bound    pvc-9529a417-586b-4178-8c1b-36d53f8805d3   5Gi        RWO            openebs-hostpath   19m
root@k8s-cilium-master-01:~# kubectl get svc -A
NAMESPACE        NAME                                   TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)                      AGE
default          kubernetes                             ClusterIP      10.96.0.1        <none>         443/TCP                      25h
kube-system      cilium-agent                           ClusterIP      None             <none>         9964/TCP                     25h
kube-system      cilium-ingress                         LoadBalancer   10.103.136.93    172.16.88.90   80:30857/TCP,443:31638/TCP   25h
kube-system      cilium-ingress-hubble-ui               LoadBalancer   10.99.117.51     172.16.88.91   80:31222/TCP,443:30301/TCP   25h
kube-system      hubble-metrics                         ClusterIP      None             <none>         9665/TCP                     25h
kube-system      hubble-peer                            ClusterIP      10.100.154.82    <none>         443/TCP                      25h
kube-system      hubble-relay                           ClusterIP      10.110.242.159   <none>         80/TCP                       25h
kube-system      hubble-ui                              ClusterIP      10.111.100.166   <none>         80/TCP                       25h
kube-system      kube-dns                               ClusterIP      10.96.0.10       <none>         53/UDP,53/TCP,9153/TCP       25h
metallb-system   metallb-webhook-service                ClusterIP      10.109.66.191    <none>         443/TCP                      25h
openebs          openebs-ndm-cluster-exporter-service   ClusterIP      None             <none>         9100/TCP                     145m
openebs          openebs-ndm-node-exporter-service      ClusterIP      None             <none>         9101/TCP                     145m
prom             grafana                                NodePort       10.96.83.159     <none>         3000:31211/TCP               19m
prom             kube-state-metrics                     ClusterIP      10.108.85.55     <none>         8080/TCP                     100m
prom             prometheus                             NodePort       10.97.173.12     <none>         9090:30090/TCP               132m
prom             prometheus-node-exporter               ClusterIP      None             <none>         9100/TCP                     103m
root@k8s-cilium-master-01:~# kubectl get pod -n prom
NAME                                  READY   STATUS    RESTARTS   AGE
grafana-69465f69d6-tttqn              1/1     Running   0          19m
kube-state-metrics-7bfbbcdbb7-xk9s5   1/1     Running   0          100m
prometheus-node-exporter-52bdq        1/1     Running   0          103m
prometheus-node-exporter-8cqg7        1/1     Running   0          103m
prometheus-node-exporter-gnvd5        1/1     Running   0          103m
prometheus-node-exporter-sjg8d        1/1     Running   0          103m
prometheus-node-exporter-xp776        1/1     Running   0          103m
prometheus-server-5974664c5d-bz7jk    1/1     Running   0          91m
root@k8s-cilium-master-01:~# kubectl get ingress -n prom
NAME         CLASS    HOSTS                             ADDRESS        PORTS   AGE
grafana      cilium   grafana.k8s.com                   172.16.88.90   80      20m
prometheus   cilium   prom.k8s.com,prometheus.k8s.com   172.16.88.90   80      133m
```
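Both Ingress objects share the Cilium ingress address 172.16.88.90 and are routed by Host header. For a browser, `grafana.k8s.com`, `prom.k8s.com` and `prometheus.k8s.com` must resolve to that address, for example through the client's hosts file or DNS.

A quick check that needs no DNS is to send the Host header explicitly. `/api/health` (Grafana) and `/-/healthy` (Prometheus) are the built-in health endpoints. Grafana is also reachable on its NodePort (31211 in the output above) on any node.

This is a sketch only. The function names are hypothetical, and the default address is taken from the output above.

```shell
#!/bin/sh
# Address of the shared Cilium Ingress (EXTERNAL-IP of cilium-ingress above).
INGRESS_IP=${INGRESS_IP:-172.16.88.90}

# Hypothetical helper: request a health endpoint through the Ingress by
# sending the virtual host name the Ingress rule matches on.
probe_ingress() {
  host=$1   # e.g. grafana.k8s.com
  path=$2   # e.g. /api/health
  curl -fsS -H "Host: ${host}" "http://${INGRESS_IP}${path}"
}

# Hypothetical helper: print the NodePort assigned to the grafana Service.
grafana_nodeport() {
  kubectl -n prom get svc grafana -o jsonpath='{.spec.ports[0].nodePort}'
}
```

Examples:

- `probe_ingress grafana.k8s.com /api/health` should print Grafana's small JSON health document.
- `probe_ingress prom.k8s.com /-/healthy` should print a short plain-text message from Prometheus.

`curl -f` turns HTTP errors such as a 404 from a wrong Host header into a non-zero exit status.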

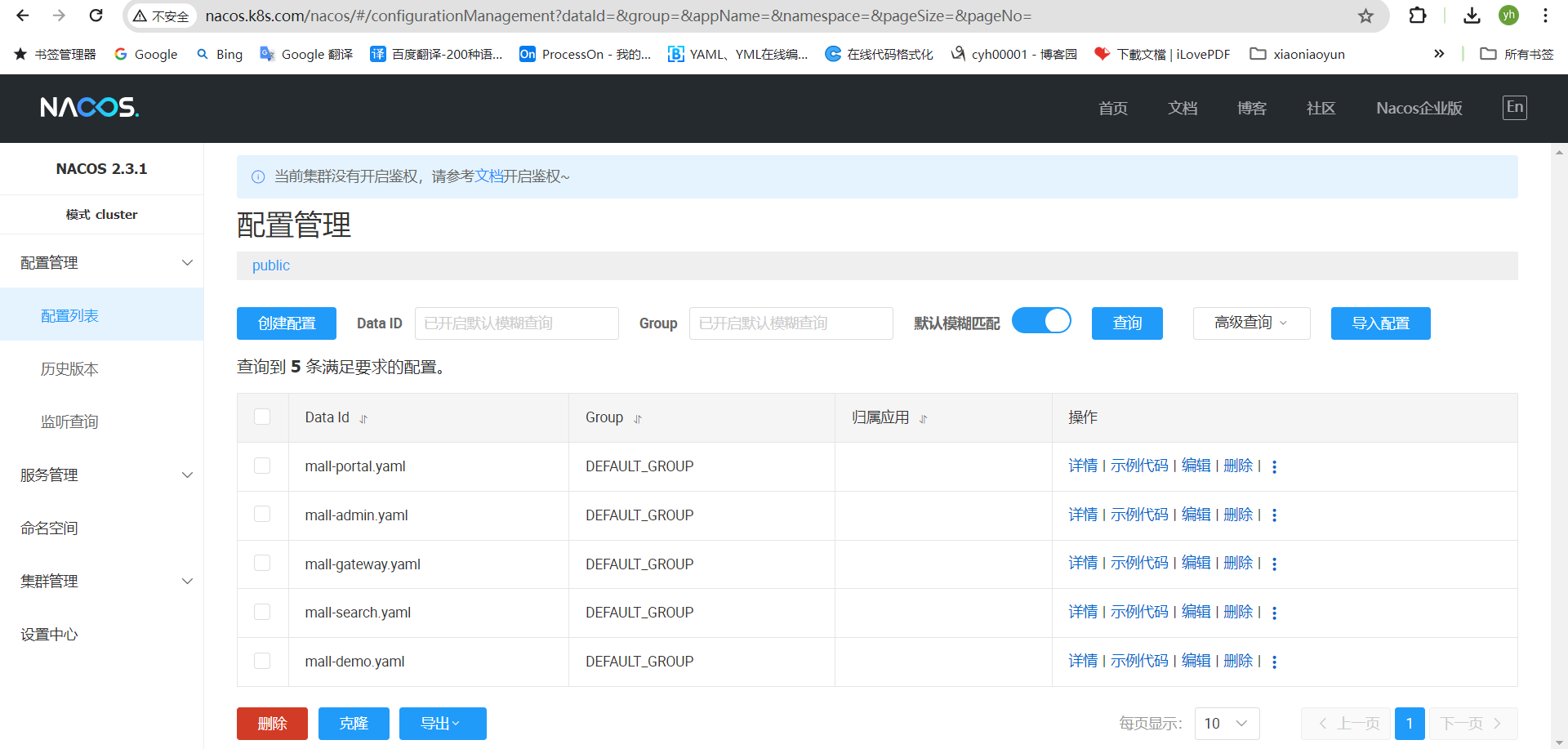

II. Deploying the Business Environment

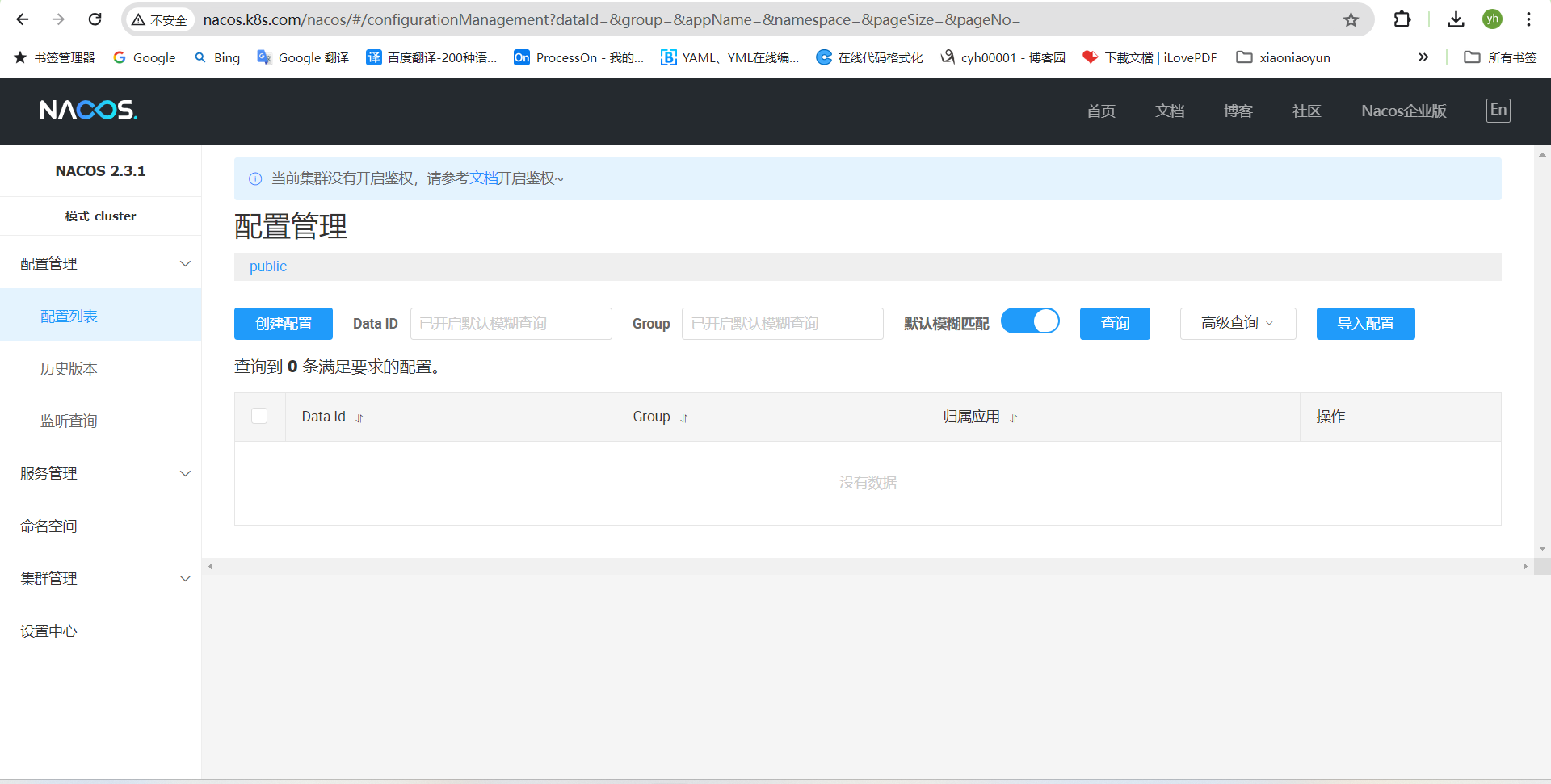

2.1 Installing and Deploying Nacos

Create the namespace:

```shell
kubectl create namespace nacos
```

Create the MySQL Secret and the MySQL replication cluster that backs Nacos:

```shell
kubectl apply -f 01-secrets-mysql.yaml -f 02-mysql-persistent.yaml -n nacos
```

```
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# pwd
/root/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# ll -h
total 52K
drwxr-xr-x  3 root root 4.0K May  1 07:56 ./
drwxr-xr-x 10 root root 4.0K May  1 07:56 ../
-rw-r--r--  1 root root  265 May  1 07:56 01-secrets-mysql.yaml
-rw-r--r--  1 root root 8.2K May  1 07:56 02-mysql-persistent.yaml
-rw-r--r--  1 root root 3.2K May  1 07:56 03-nacos-cfg.yaml
-rw-r--r--  1 root root 4.2K May  1 07:56 04-nacos-persistent.yaml
-rw-r--r--  1 root root  342 May  1 07:56 05-nacos-service.yaml
-rw-r--r--  1 root root  430 May  1 07:56 06-nacos-ingress.yaml
drwxr-xr-x  2 root root 4.0K May  1 07:56 examples/
-rw-r--r--  1 root root 2.7K May  1 07:56 README.md
```

Contents of `01-secrets-mysql.yaml`:

```yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
data:
  database.name: bmFjb3NkYg==       # DB name: nacosdb
  root.password: ""                 # root password: null
  user.name: bmFjb3M=               # username: nacos
  user.password: bWFnZWR1LmNvbQo=   # password: magedu.com
```
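Secret `data` values are base64 strings, and how they are produced matters. `echo value | base64` also encodes the trailing newline, while `printf '%s'` (or `echo -n`) does not.

Decoding the Secret above shows that `bWFnZWR1LmNvbQo=` is `magedu.com` followed by a newline. `nacosdb` and `nacos` are encoded without one. This may be one reason the explicit `GRANT ... IDENTIFIED BY 'magedu.com'` step further down is needed.

A minimal sketch for producing clean values with the standard `base64` tool (the helper name `b64` is hypothetical):

```shell
#!/bin/sh
# Encode one value for a Secret's data field WITHOUT a trailing newline.
b64() {
  printf '%s' "$1" | base64
}

b64 nacosdb      # bmFjb3NkYg==
b64 nacos        # bmFjb3M=
b64 magedu.com   # bWFnZWR1LmNvbQ==  (no "o" before the padding: no newline)

# Byte count of the value stored in the Secret above: 11 = 10 chars + "\n".
printf '%s' 'bWFnZWR1LmNvbQo=' | base64 -d | wc -c
```

Alternatively, `kubectl create secret generic mysql-secret --from-literal=user.password=magedu.com --dry-run=client -o yaml` lets kubectl do the encoding, which avoids the newline problem altogether.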

Contents of `02-mysql-persistent.yaml`:

```yaml
# Maintainer: MageEdu <mage@magedu.com>
# Site: http://www.magedu.com
# MySQL Replication Cluster for Nacos
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
data:
  primary.cnf: |
    # Apply this config only on the primary.
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    log-bin
    character-set-server=utf8mb4
    #innodb-file-per-table=on
    [client]
    default-character-set=utf8mb4
  replica.cnf: |
    # Apply this config only on replicas.
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    super-read-only
    character-set-server=utf8mb4
    [client]
    default-character-set=utf8mb4
---
# Headless service for stable DNS entries of StatefulSet members.
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Client service for connecting to any MySQL instance for reads.
# For writes, you must instead connect to the primary: mysql-0.mysql.
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql
---
# StatefulSet for mysql replication cluster
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  serviceName: mysql
  replicas: 2
  template:
    metadata:
      labels:
        app: mysql
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9104"
        prometheus.io/path: "/metrics"
    spec:
      initContainers:
      - name: init-mysql
        #image: registry.magedu.com/nacos/nacos-mysql:5.7
        image: nacos/nacos-mysql:5.7
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Generate mysql server-id from pod ordinal index.
          [[ $(cat /proc/sys/kernel/hostname) =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          echo [mysqld] > /mnt/conf.d/server-id.cnf
          # Add an offset to avoid reserved server-id=0 value.
          echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
          # Copy appropriate conf.d files from config-map to emptyDir.
          if [[ $ordinal -eq 0 ]]; then
            cp /mnt/config-map/primary.cnf /mnt/conf.d/
          else
            cp /mnt/config-map/replica.cnf /mnt/conf.d/
          fi
        volumeMounts:
        - name: conf
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      - name: clone-mysql
        image: ikubernetes/xtrabackup:1.0
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Skip the clone if data already exists.
          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on primary (ordinal index 0).
          [[ $(cat /proc/sys/kernel/hostname) =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0
          # Clone data from previous peer.
          ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      containers:
      - name: mysql
        #image: registry.magedu.com/nacos/nacos-mysql:5.7
        image: nacos/nacos-mysql:5.7
        env:
        - name: LANG
          value: "C.UTF-8"
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: root.password
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        - name: MYSQL_USER
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: user.name
        - name: MYSQL_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: user.password
        - name: MYSQL_DATABASE
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: database.name
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        livenessProbe:
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe:
          exec:
            # Check we can execute queries over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1
      - name: xtrabackup
        image: ikubernetes/xtrabackup:1.0
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql
          # Determine binlog position of cloned data, if any.
          if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
            # XtraBackup already generated a partial "CHANGE MASTER TO" query
            # because we're cloning from an existing replica. (Need to remove the trailing semicolon!)
            cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it's useless).
            rm -f xtrabackup_slave_info xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We're cloning directly from primary. Parse binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm -f xtrabackup_binlog_info xtrabackup_slave_info
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
          fi
          # Check if we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
            echo "Initializing replication from clone position"
            mysql -h 127.0.0.1 \
                  -e "$(<change_master_to.sql.in), \
                          MASTER_HOST='mysql-0.mysql', \
                          MASTER_USER='root', \
                          MASTER_PASSWORD='', \
                          MASTER_CONNECT_RETRY=10; \
                        START SLAVE;" || exit 1
            # In case of container restart, attempt this at-most-once.
            mv change_master_to.sql.in change_master_to.sql.orig
          fi
          # Start a server to send backups when requested by peers.
          exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
            "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      - name: mysqld-exporter
        image: prom/mysqld-exporter:v0.14.0
        #image: registry.magedu.com/prom/mysqld-exporter:v0.14.0
        env:
        - name: DATA_SOURCE_NAME
          value: 'root:""@(localhost:3306)/'
        args:
        - --collect.info_schema.innodb_metrics
        - --collect.info_schema.innodb_tablespaces
        - --collect.perf_schema.eventsstatementssum
        - --collect.perf_schema.memory_events
        - --collect.global_status
        - --collect.engine_innodb_status
        - --collect.binlog_size
        ports:
        - name: mysqld-exporter
          containerPort: 9104
      volumes:
      - name: conf
        emptyDir: {}
      - name: config-map
        configMap:
          name: mysql
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      # Modify StorageClass before deployment
      storageClassName: "openebs-hostpath"
      resources:
        requests:
          storage: 10Gi
```
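The two init containers derive each pod's role from the ordinal at the end of its hostname:

- `init-mysql` writes `server-id = 100 + ordinal` and copies `primary.cnf` only for ordinal 0; every other pod gets `replica.cnf`.
- `clone-mysql` on pod N streams a backup from `mysql-(N-1).mysql` on port 3307, unless a data directory already exists.

The sketch below reproduces that mapping so it can be checked without a cluster. It replaces the manifest's bash `BASH_REMATCH` with POSIX parameter expansion, and the function name `mysql_pod_plan` is hypothetical.

```shell
#!/bin/sh
# Mirror the StatefulSet's init logic: map a pod hostname such as "mysql-1"
# to its server-id, the .cnf file it receives, and the peer it clones from.
# (clone-mysql additionally skips cloning when /var/lib/mysql/mysql exists.)
mysql_pod_plan() {
  host=$1
  ordinal=${host##*-}               # text after the last "-"
  case $ordinal in
    ''|*[!0-9]*) return 1 ;;        # same failure as the "|| exit 1" guard
  esac
  if [ "$ordinal" -eq 0 ]; then
    echo "server-id=$((100 + $ordinal)) conf=primary.cnf clone=none"
  else
    echo "server-id=$((100 + $ordinal)) conf=replica.cnf clone=mysql-$(($ordinal - 1)).mysql:3307"
  fi
}

mysql_pod_plan mysql-0   # server-id=100 conf=primary.cnf clone=none
mysql_pod_plan mysql-1   # server-id=101 conf=replica.cnf clone=mysql-0.mysql:3307
```

Because each replica clones from the pod just before it, scaling `replicas` up adds pods one at a time, each seeded from an existing member.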

Installation details:

```
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# kubectl create namespace nacos
namespace/nacos created
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# kubectl apply -f 01-secrets-mysql.yaml -f 02-mysql-persistent.yaml -n nacos
secret/mysql-secret created
configmap/mysql created
service/mysql created
service/mysql-read created
statefulset.apps/mysql created
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# kubectl get pod -n nacos
NAME      READY   STATUS    RESTARTS   AGE
mysql-0   3/3     Running   0          60m
mysql-1   3/3     Running   0          34m
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# kubectl get pvc -A
NAMESPACE   NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
nacos       data-mysql-0   Bound    pvc-84cfc00e-a254-4e23-b718-cf9a38c65ac0   10Gi       RWO            openebs-hostpath   61m
nacos       data-mysql-1   Bound    pvc-89fa2b48-51f6-406e-b2e7-cef565c2777d   10Gi       RWO            openebs-hostpath   34m
prom        grafana-pvc    Bound    pvc-9529a417-586b-4178-8c1b-36d53f8805d3   5Gi        RWO            openebs-hostpath   2d
```
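Both pods report 3/3 containers ready. Readiness only proves that mysqld answers `SELECT 1`, though; it does not prove that `mysql-1` is actually replicating from `mysql-0`.

The minimal check below assumes the pod and container names shown above. It reads `SHOW SLAVE STATUS` (the MySQL 5.7 spelling) on a replica and succeeds only if both replication threads report `Yes`. The function name `check_replica` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given replica pod has both the
# IO and SQL replication threads running.
check_replica() {
  pod=${1:-mysql-1}
  status=$(kubectl -n nacos exec "$pod" -c mysql -- \
    mysql -h 127.0.0.1 -e 'SHOW SLAVE STATUS\G') || return 1
  printf '%s\n' "$status" | grep -q 'Slave_IO_Running: Yes' &&
    printf '%s\n' "$status" | grep -q 'Slave_SQL_Running: Yes'
}
```

Usage: `check_replica mysql-1 && echo replicating`. If it fails, the `Last_IO_Error` and `Last_SQL_Error` fields in the same output usually explain why.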

Access endpoints

Read requests: `mysql-read.nacos.svc.cluster.local` (any instance)

Write requests: `mysql-0.mysql.nacos.svc.cluster.local` (the primary only)
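One way to see the difference between the two entry points is to run a throwaway client pod and ask each endpoint which server answered.

This sketch assumes root can log in over the network with an empty password; the `xtrabackup` sidecar above relies on the same thing when it points replicas at `mysql-0.mysql`. The pod name `mysql-client` and the function name are hypothetical.

```shell
#!/bin/sh
# The two endpoints described above.
READ_ENDPOINT=mysql-read.nacos.svc.cluster.local
WRITE_ENDPOINT=mysql-0.mysql.nacos.svc.cluster.local

# Hypothetical helper: query one endpoint from a short-lived client pod and
# print which server answered and whether it refuses writes.
query_endpoint() {
  endpoint=$1
  kubectl -n nacos run mysql-client --rm -i --restart=Never \
    --image=nacos/nacos-mysql:5.7 -- \
    mysql -h "$endpoint" -uroot -e 'SELECT @@server_id, @@super_read_only'
}
```

Expected results:

- `query_endpoint "$WRITE_ENDPOINT"` should always return server-id 100 with `super_read_only` 0 (`primary.cnf`).
- Repeated calls with `"$READ_ENDPOINT"` may return 100 or 101, depending on which pod the Service picks. Pod 101 reports `super_read_only` 1 (`replica.cnf`).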

Create the user account

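Create a dedicated account for Nacos in MySQL. In this example, Nacos by default uses the username nacos and the password "magedu.com" to access the nacosdb database on the MySQL service.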

```shell
kubectl exec -it mysql-0 -n nacos -- mysql -uroot -hlocalhost
```

At the mysql prompt, run the following SQL statement, then exit:

```
mysql> GRANT ALL ON nacosdb.* TO nacos@'%' IDENTIFIED BY 'magedu.com';
```
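The same grant can be issued non-interactively, which is handy in scripts. Passing `-c mysql` selects the container explicitly and avoids the "Defaulted container" notice seen in the session below.

`GRANT ... IDENTIFIED BY` is deprecated in MySQL 5.7 and removed in 8.0, which is the likely source of the "1 warning" in that output. The sketch therefore splits the statement into `CREATE USER IF NOT EXISTS`, `ALTER USER` and `GRANT`, all of which MySQL 5.7 accepts. The function name is hypothetical, and the password defaults to the document's `magedu.com`.

```shell
#!/bin/sh
# Hypothetical helper: create (or reset) the nacos account on the primary
# without an interactive session. Run it against mysql-0 only; the replica
# is super-read-only and receives the account change through replication.
grant_nacos_user() {
  password=${1:-magedu.com}
  kubectl -n nacos exec mysql-0 -c mysql -- mysql -uroot -e "
    CREATE USER IF NOT EXISTS 'nacos'@'%' IDENTIFIED BY '${password}';
    ALTER USER 'nacos'@'%' IDENTIFIED BY '${password}';
    GRANT ALL ON nacosdb.* TO 'nacos'@'%';"
}
```

The `ALTER USER` line matters if the account already exists, for example one created at startup from `MYSQL_USER`/`MYSQL_PASSWORD`. It makes sure the stored password is exactly the one Nacos will use.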

```
root@k8s-cilium-master-01:~/learning-k8s/Mall-MicroService/infra-services-with-prometheus/01-Nacos# kubectl exec -it mysql-0 -n nacos -- mysql -uroot -hlocalhost
Defaulted container "mysql" out of: mysql, xtrabackup, mysqld-exporter, init-mysql (init), clone-mysql (init)
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 1583
Server version: 5.7.39-log MySQL Community Server (GPL)

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> GRANT ALL ON nacosdb.* TO nacos@'%' IDENTIFIED BY 'magedu.com';
Query OK, 0 rows affected, 1 warning (0.01 sec)

mysql> exit
Bye
```

Deploy Nacos

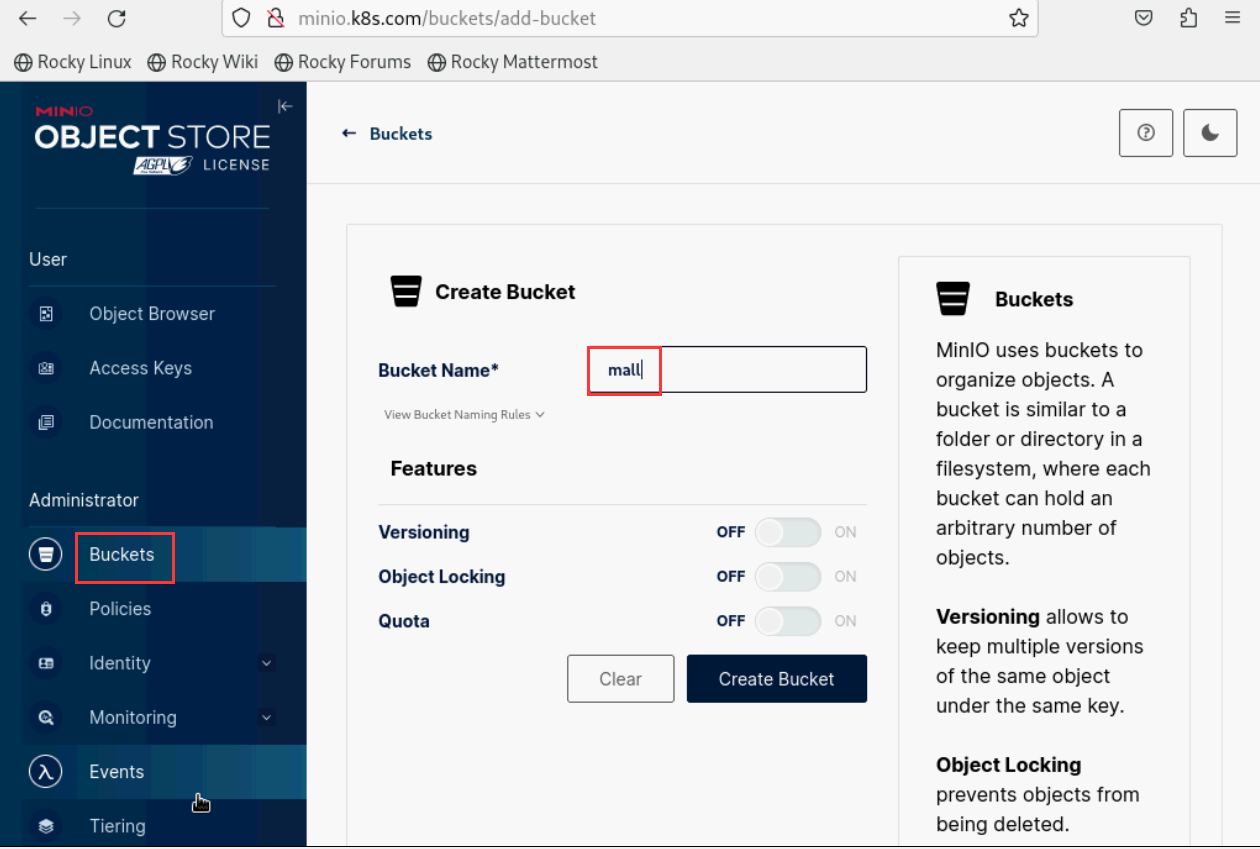

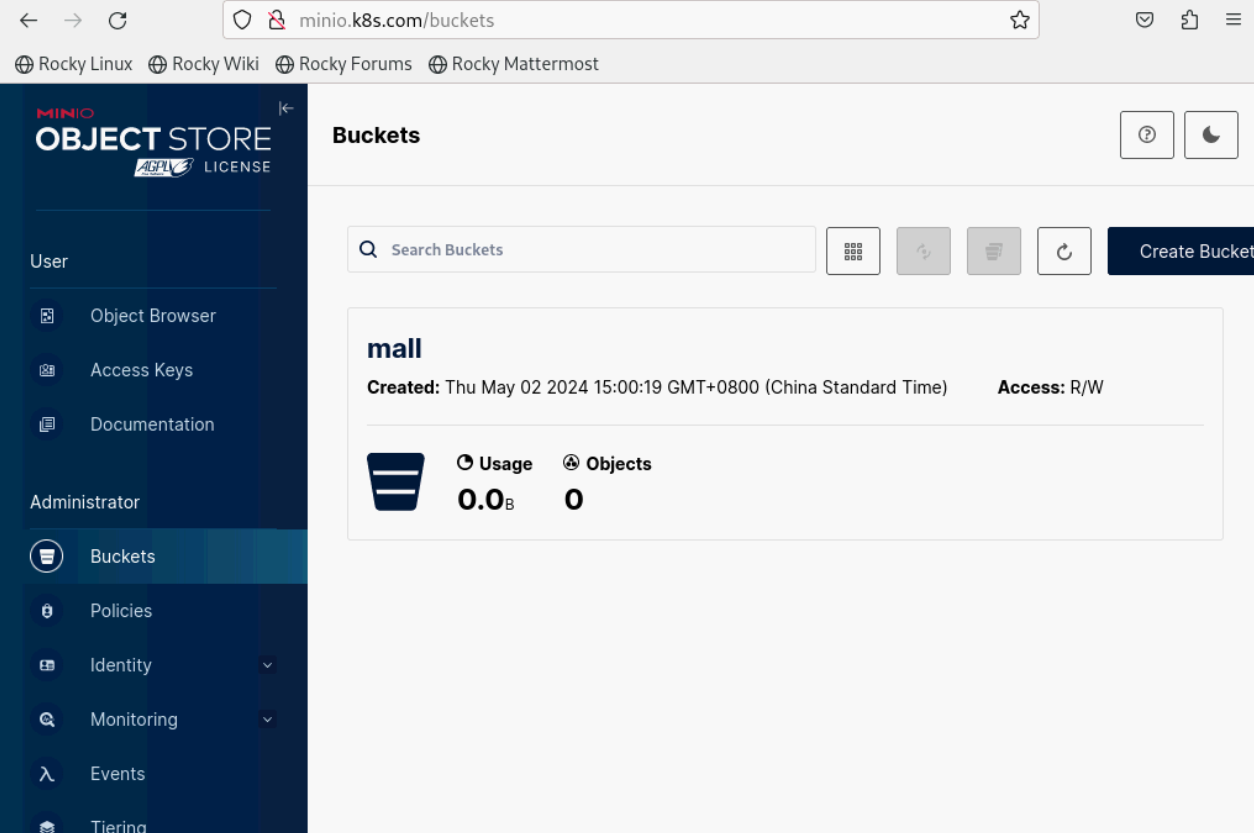

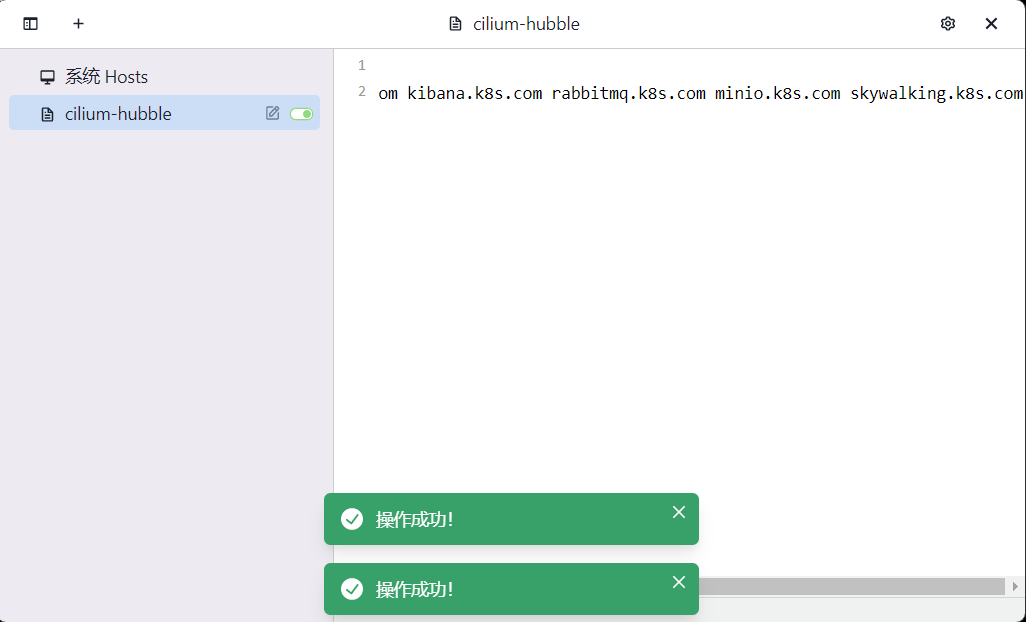

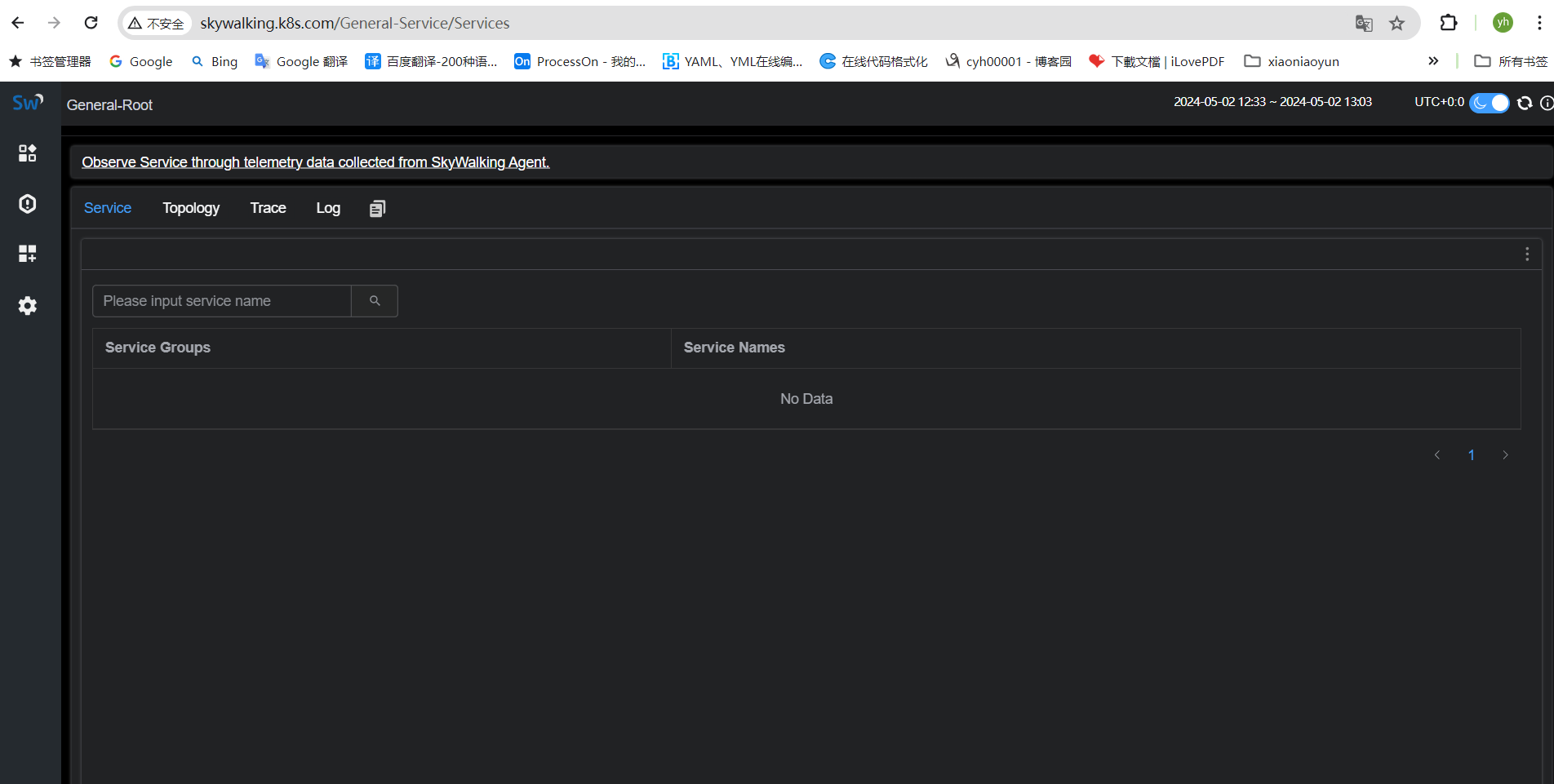

```shell
kubectl apply -f 03-nacos-cfg.yaml -f 04-nacos-persistent.yaml -f 05-nacos-service.yaml -n nacos
```