Flannel Network Component Summary

1. Flannel Overview

1.1 Flannel Principles and Architecture

GitHub repository: https://github.com/flannel-io/flannel

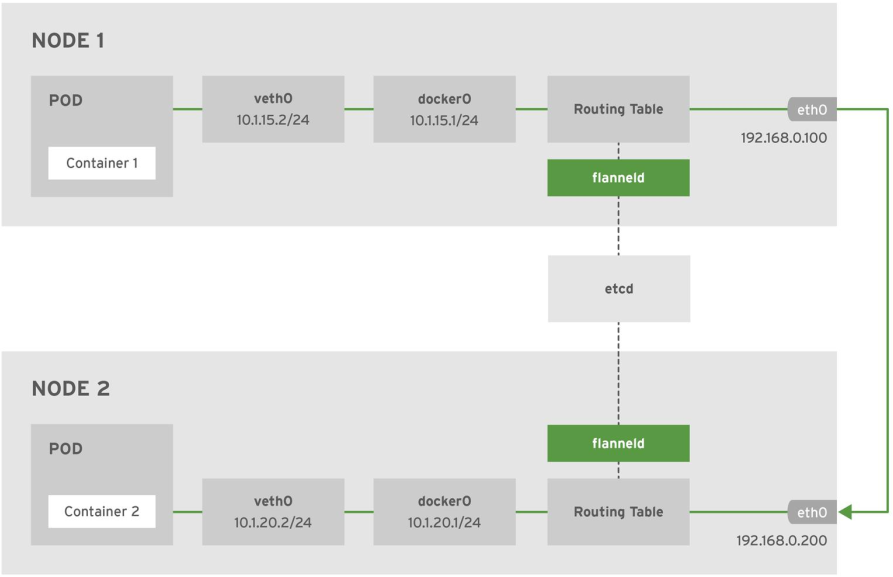

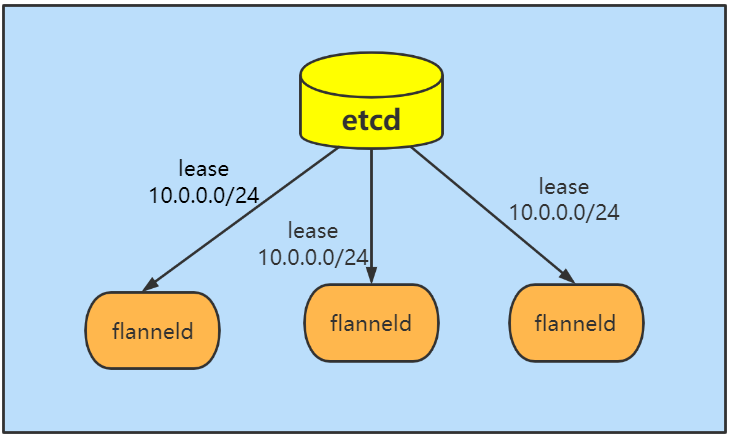

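Flannel was originally developed by CoreOS and is one of the most mature network plugins for container orchestration systems. With the rise of CNI, flannel was also among the earliest plugins to implement the CNI specification (which CoreOS itself proposed). Its job is simple and well defined: let containers communicate across nodes. To do so, flannel re-plans how IP addresses are used on every node in the cluster, so that containers created on different hosts each get an address that is unique across the cluster and routable, and containers on different nodes can reach each other directly over these internal IPs. How does a node know which addresses are free, that is, which subnets other nodes have already claimed? Flannel relies on etcd's distributed coordination for this.

Cross-host pod forwarding in a flannel network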

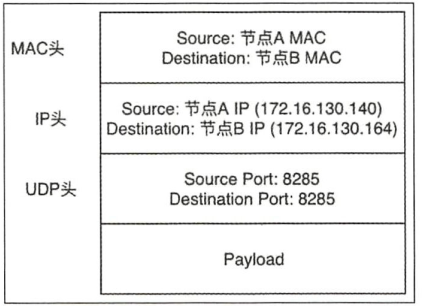

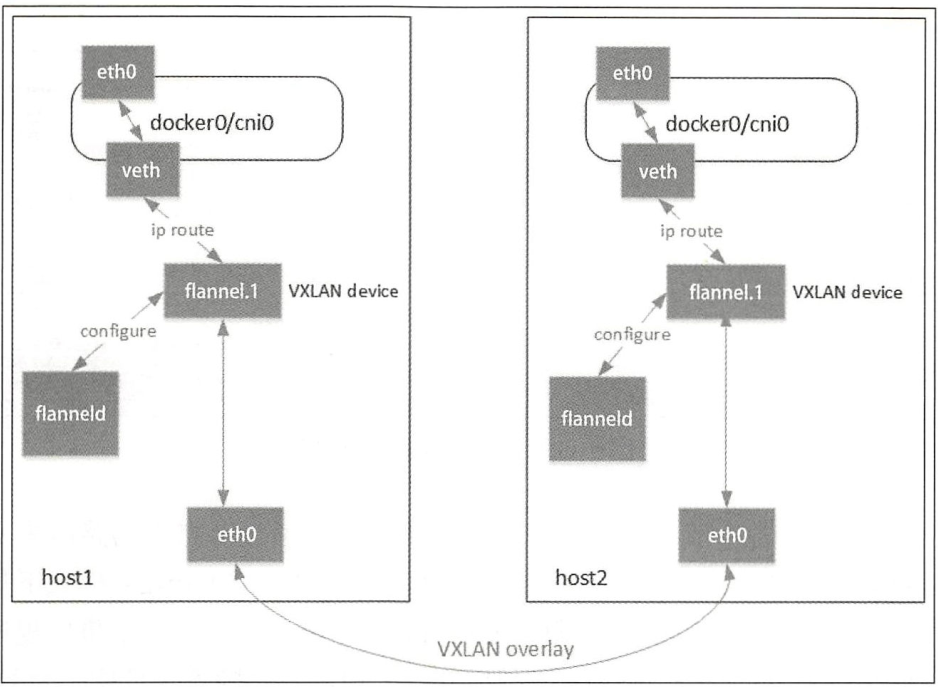

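Architecturally, flannel consists of a management plane and a data plane. The management plane is essentially an etcd store that coordinates which subnet each node's containers use; the data plane is a flanneld process running on every node. Unlike other network solutions, flannel uses a "no server" architecture: there is no dedicated control node, which simplifies deployment and operations.

All flannel nodes in a cluster share one large container address range (10.0.0.0/16 in our example). As soon as flanneld starts, it watches etcd to learn which subnets the containers on other nodes already occupy, then requests from etcd a subnet for its own node (carved out of the large range, for example 10.0.2.0/24) and records that subnet together with the host IP in etcd. Once a node has its subnet, flannel modifies Docker's startup parameters, for example --bip=172.17.18.1/24, to restrict the addresses that containers on that node can obtain, so that Docker on each node uses a different range. Note that this range is assigned automatically by flannel, which uses the records stored in etcd to guarantee that ranges never overlap; no manual intervention is needed.

Under the hood, flannel is an overlay network (except in host-gw mode): packets of one protocol are encapsulated inside another protocol for routing and forwarding. flanneld watches the data in etcd, so it notices when other nodes update their subnet and host IP records. When a packet must be forwarded to a container on another host, flanneld encapsulates it with the IP of the host running the destination container and sends it to flanneld on that host, which delivers it to the destination container.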
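The subnet leasing described above can be sketched as follows. This is a minimal illustration, not flanneld's actual code: the `leases` dictionary is a hypothetical stand-in for the records kept in etcd, the host IPs are made up, and picking the first free block is an assumption (the real selection strategy may differ).

```python
import ipaddress

def allocate_subnet(cluster_cidr, leased, new_prefix=24):
    """Return the first /new_prefix block inside cluster_cidr that is not leased yet."""
    network = ipaddress.ip_network(cluster_cidr)
    taken = {ipaddress.ip_network(s) for s in leased}
    for candidate in network.subnets(new_prefix=new_prefix):
        if candidate not in taken:
            return candidate
    raise RuntimeError("no free subnet left in " + cluster_cidr)

# Hypothetical registry: subnet -> host IP, standing in for the etcd records.
leases = {"10.0.0.0/24": "192.168.0.10", "10.0.1.0/24": "192.168.0.11"}

subnet = allocate_subnet("10.0.0.0/16", leases)
leases[str(subnet)] = "192.168.0.12"  # record the new lease with this host's IP
print(subnet)
```

Because every node consults the same registry before claiming a block, two nodes cannot end up with overlapping container ranges.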

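Flannel architecture diagram

Flannel network modes

Flannel network models (backends): flannel currently offers three backends, UDP, VXLAN, and host-gw.

- UDP: early versions of flannel used UDP encapsulation to forward packets across hosts; both its security and its performance are somewhat lacking.

- VXLAN: the Linux kernel gained VXLAN support in v3.7.0 at the end of 2012, so newer flannel versions moved from UDP to VXLAN. VXLAN is essentially a tunneling protocol that builds a virtual layer-2 network on top of a layer-3 network. Flannel's network model today is a VXLAN-based overlay, and vxlan is the recommended backend.

- Host-gw: short for Host Gateway. Each node gets routing table entries that lead to the container subnets of the other nodes, and packets are forwarded by plain routing. This requires all nodes to sit in the same LAN (layer-2 network), so it is not suitable for networks that change frequently or are large, but its performance is good.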
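As a rough illustration of how the backend is selected, the sketch below builds the `net-conf.json` document in the same shape that appears in the `kube-flannel-cfg` ConfigMap later in this article. The helper name `net_conf` is made up for this example.

```python
import json

BACKENDS = ("udp", "vxlan", "host-gw")

def net_conf(network, backend_type):
    """Build a net-conf.json string for the given pod network and backend."""
    if backend_type not in BACKENDS:
        raise ValueError("unknown backend: " + backend_type)
    return json.dumps({"Network": network, "Backend": {"Type": backend_type}}, indent=2)

print(net_conf("10.244.0.0/16", "vxlan"))
```

Switching modes then comes down to changing the `Type` value and restarting the flannel pods so they pick up the new configuration.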

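Flannel components:

- Cni0: a bridge device. A veth pair is created for every pod: one end is eth0 inside the pod, the other end is a port on the cni0 bridge. All traffic leaving the pod's eth0 arrives at that port. cni0 takes the first address of the subnet assigned to the node.

- Flannel.1: the overlay device, which handles VXLAN packets (encapsulation and decapsulation). Pod traffic between nodes is sent through this device to the peer as a tunnel.

1.2 Cross-Host Communication in flannel UDP Mode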

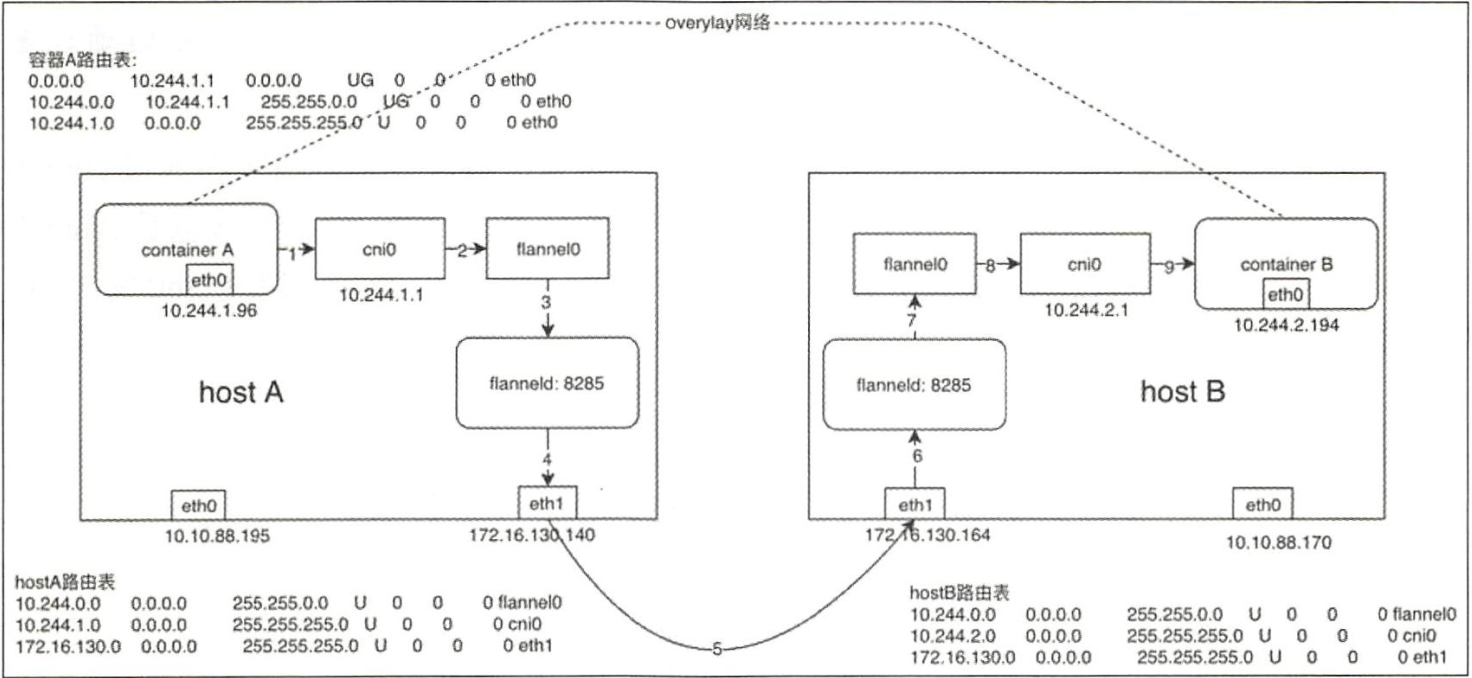

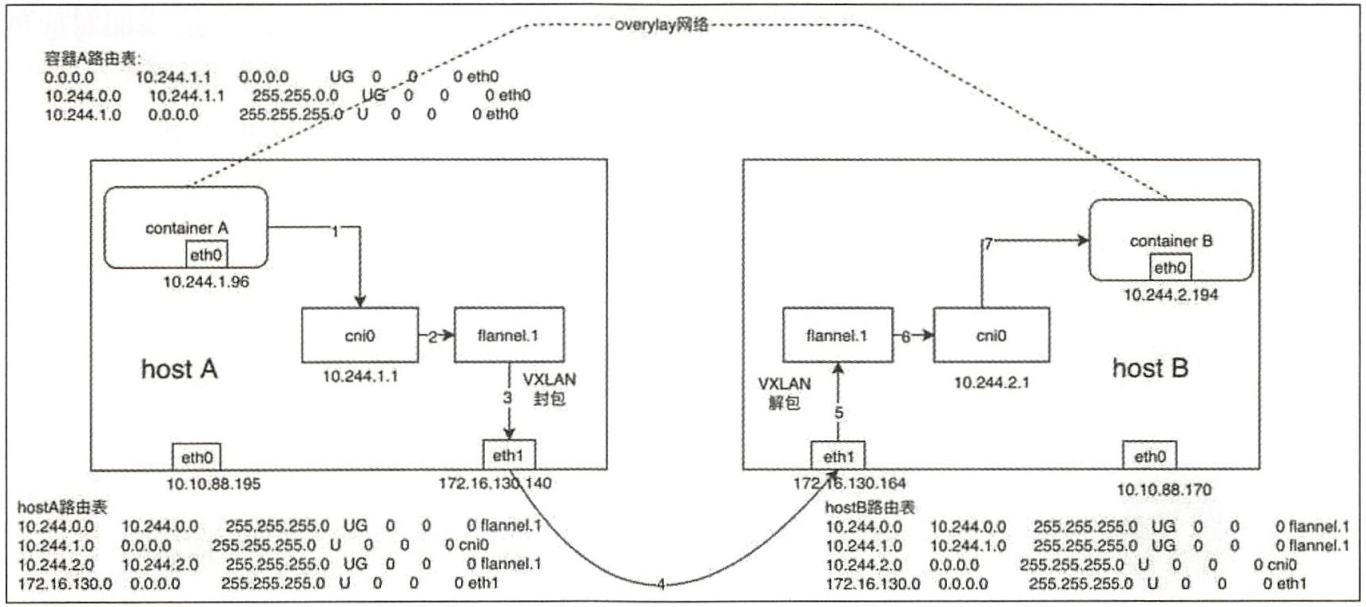

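How cross-node container communication works: assume container A (10.244.1.96) runs on node A and container B (10.244.2.194) runs on node B, and container A sends an ICMP echo request (ping) to container B.

(1) Container A emits the ICMP request. After IP encapsulation it has the form 10.244.1.96 (source) ---> 10.244.2.194 (destination). Container A's routing table directs the packet to the gateway 10.244.1.1 (the cni0 bridge).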

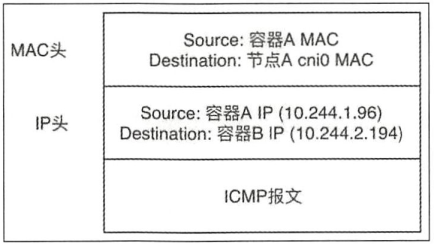

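Ethernet frame format of the ICMP packet at this point

(2) The packet arriving at cni0 has destination IP 10.244.2.194, which matches the first routing rule on node A (10.244.0.0). From the local routing table, the kernel knows it should send the raw IP packet to the flannel0 interface.

(3) flannel0 is a TUN device. Raw IP packets (without MAC information) sent to flannel0 are received by the flanneld process, which wraps them in UDP. The UDP packet has the form 172.16.130.140:{random port chosen by the system} ---> 172.16.130.164:8285.

Note: 172.16.130.164 is the IP address of the host running the destination container 10.244.2.194; flanneld obtains it by querying etcd. 8285 is the port flanneld listens on.

(4) flanneld sends the encapsulated UDP packet out through eth0. Before leaving eth0, the packet gains a UDP header (8 bytes) and then an IP header (20 bytes). This is why the MTU of flannel0 must be 28 bytes smaller than that of eth0: otherwise the encapsulated frame could exceed eth0's MTU and be dropped there.

Ethernet frame format of the fully encapsulated ICMP packet at this point

(5) The packet travels over the host network from node A to node B.

(6) When host B receives the UDP packet, the Linux kernel hands it to the listening flanneld based on UDP port 8285.

(7) flanneld on host B decapsulates the UDP packet and recovers the raw IP packet: 10.244.1.96 ---> 10.244.2.194.

(8) The decapsulated raw IP packet matches the routing rule on host B (10.244.2.0); from the local routing table the kernel knows it should send the packet to the cni0 bridge.

Ethernet frame format after decapsulation

(9) The cni0 bridge forwards the IP packet to container B, which is attached to that bridge (in Docker-only deployments without CNI, docker0 plays this role). This completes the flow; the reply follows the same path in reverse.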
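The 28-byte figure from step (4) is simple header arithmetic. A minimal sketch, assuming a physical MTU of 1500 bytes on eth0 (the article does not state the value) and an outer IPv4 header without options:

```python
IP_HEADER = 20   # outer IPv4 header, no options
UDP_HEADER = 8   # outer UDP header

def tunnel_mtu(physical_mtu, overhead):
    """Largest inner packet that still fits in one outer packet."""
    return physical_mtu - overhead

udp_overhead = IP_HEADER + UDP_HEADER
flannel0_mtu = tunnel_mtu(1500, udp_overhead)  # 1500 is an assumed eth0 MTU
print(udp_overhead, flannel0_mtu)
```

Any inner packet larger than this value would, once wrapped, no longer fit into a single frame on eth0.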

flannel UDP 模式数据流简图

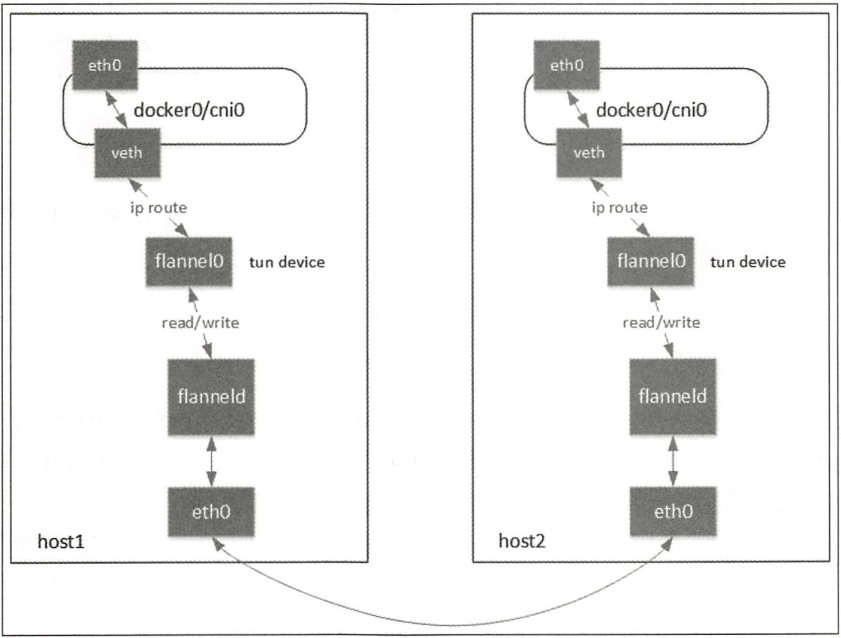

1.3、flannel VXLAN模式数据通信

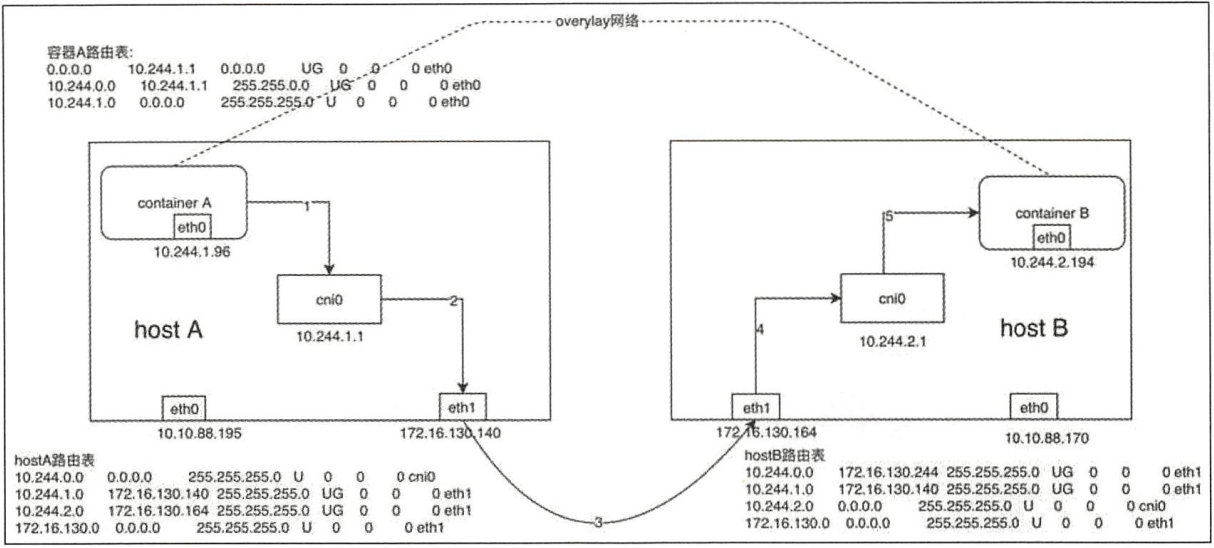

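(1) As with the UDP backend, the IP packet from container A is sent to cni0 according to container A's routing table.

(2) At cni0, the packet is matched against host A's routing table, which shows that packets destined for 10.244.2.194 should be handed to the flannel.1 interface.

(3) flannel.1 is a VTEP device; on receiving the packet it encapsulates it according to the VTEP configuration. First, it learns from etcd that 10.244.2.194 belongs to node B and obtains node B's IP. Then it looks up the MAC of node B's VTEP in node A's forwarding table and performs VXLAN encapsulation using the parameters set when flannel.1 was created (VNI, local IP, port).

(4) Over the network connection between host A and host B, the VXLAN packet reaches the eth1 interface of host B.

(5) Based on port 8472, the VXLAN packet is handed to the VTEP device flannel.1 for decapsulation.

(6) The decapsulated IP packet matches host B's routing table (10.244.2.0), and the kernel forwards it to cni0.

(7) cni0 forwards the IP packet to container B, which is attached to cni0.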
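To make the VXLAN encapsulation in step (3) more concrete, the sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348: one flags byte with the "VNI valid" bit, three reserved bytes, a 24-bit VNI, and one more reserved byte. It covers only this header, not the outer Ethernet/IP/UDP layers, and the function names are made up for illustration.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" bit in the flags byte

def pack_vxlan_header(vni):
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def unpack_vni(header):
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8

header = pack_vxlan_header(1)  # VNI 1, matching the flannel.1 device name
print(header.hex(), unpack_vni(header))
```

The receiving VTEP uses the VNI to decide which virtual network the inner Ethernet frame belongs to before passing it on.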

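How flannel VXLAN mode works

1.4 Data Communication in flannel Host-gw Mode

(1) As in UDP and VXLAN mode, the IP packet reaches cni0 through container A's routing table.

(2) At cni0, the packet matches the routing rule on host A for 10.244.2.0, whose gateway is 172.16.130.164, that is, host B. The kernel therefore sends the packet to host B (172.16.130.164).

(3) The packet travels over the physical network to eth1 on host B.

(4) On arrival at host B's eth1, the packet matches host B's routing table (10.244.2.0) and is forwarded to cni0.

(5) cni0 forwards the IP packet to container B, which is attached to cni0.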
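The routes that host-gw mode relies on can be derived mechanically from the subnet leases. The sketch below is illustrative only: the `leases` mapping reuses the addresses from the walkthrough above, the /24 host prefix is an assumption, and comparing IP prefixes is only an approximation of "same layer-2 segment".

```python
import ipaddress

def host_gw_routes(local_ip, leases, host_prefix=24):
    """Return `ip route add` commands for every remote pod subnet."""
    local_net = ipaddress.ip_interface(f"{local_ip}/{host_prefix}").network
    routes = []
    for subnet, host_ip in sorted(leases.items()):
        if host_ip == local_ip:
            continue  # the local subnet is reached through cni0, not a gateway
        if ipaddress.ip_address(host_ip) not in local_net:
            raise ValueError(f"{host_ip} is not directly reachable from {local_ip}")
        routes.append(f"ip route add {subnet} via {host_ip}")
    return routes

# Pod subnet -> host IP, taken from the example in this section.
leases = {"10.244.1.0/24": "172.16.130.140", "10.244.2.0/24": "172.16.130.164"}
print(host_gw_routes("172.16.130.140", leases))
```

The reachability check mirrors the constraint stated earlier: a next hop can only be used as a gateway if it is on the same LAN as the sending host.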

2. Deploying the flannel Environment

#Environment preparation
192.168.247.81 k8s-flannel-master-01 kubeapi.flannel.com 2vcpu 4G 50G ubuntu20.04
192.168.247.84 k8s-flannel-node-01 2vcpu 4G 50G ubuntu20.04
192.168.247.85 k8s-flannel-node-02 2vcpu 4G 50G ubuntu20.04

#Disable swap
swapoff -a && sed -i 's@/swap.img@#/swap.img@g' /etc/fstab

#Disable the firewall
ufw disable && ufw status

#Configure time synchronization
apt install chrony -y
cat > /etc/chrony/chrony.conf <<EOF
server ntp.aliyun.com iburst
stratumweight 0
driftfile /var/lib/chrony/drift
rtcsync
makestep 10 3
bindcmdaddress 127.0.0.1
bindcmdaddress ::1
keyfile /etc/chrony.keys
commandkey 1
generatecommandkey
logchange 0.5
logdir /var/log/chrony
EOF
systemctl restart chrony

#Install and enable docker
apt -y install apt-transport-https ca-certificates curl software-properties-common
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add -
add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
apt update && apt install docker-ce -y

#Configure docker registry mirrors
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://docker.mirrors.ustc.edu.cn",
    "https://hub-mirror.c.163.com",
    "https://reg-mirror.qiniu.com",
    "https://registry.docker-cn.com",
    "https://a7h8080e.mirror.aliyuncs.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "200m"
  },
  "storage-driver": "overlay2"
}
EOF
systemctl restart docker
docker info #check the docker version and whether the mirrors took effect

#Install cri-dockerd
curl -LO https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd_0.2.6.3-0.ubuntu-focal_amd64.deb
apt install ./cri-dockerd_0.2.6.3-0.ubuntu-focal_amd64.deb

#Install kubelet, kubeadm, and kubectl
apt update && apt install -y apt-transport-https curl
curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
apt-get update && apt install -y kubelet kubeadm kubectl
systemctl enable kubelet

#Integrate kubelet with cri-dockerd

If you use the Aliyun mirror in China, set ExecStart as follows:

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

# vim /usr/lib/systemd/system/cri-docker.service
# cat /usr/lib/systemd/system/cri-docker.service
[Unit]
Description=CRI Interface for Docker Application Container Engine
Documentation=https://docs.mirantis.com
After=network-online.target firewalld.service docker.service
Wants=network-online.target
Requires=cri-docker.socket

[Service]
Type=notify
#ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://

#ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8 --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --cni-bin-dir=/opt/cni/bin --cni-cache-dir=/var/lib/cni/cache --cni-conf-dir=/etc/cni/net.d

ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target

systemctl daemon-reload
systemctl restart cri-docker

#Configure kubelet
mkdir /etc/sysconfig/
cat > /etc/sysconfig/kubelet <<EOF

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=/run/cri-dockerd.sock"

EOF

#Initialize the master

# kubeadm config images list #list the required container images

registry.k8s.io/kube-apiserver:v1.25.3

registry.k8s.io/kube-controller-manager:v1.25.3

registry.k8s.io/kube-scheduler:v1.25.3

registry.k8s.io/kube-proxy:v1.25.3

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.4-0

registry.k8s.io/coredns/coredns:v1.9.3

#Pull the images
# kubeadm config images pull --cri-socket unix:///run/cri-dockerd.sock

#Initialize the cluster
kubeadm init --control-plane-endpoint="kubeapi.flannel.com" \
  --kubernetes-version=v1.25.3 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --token-ttl=0 \
  --cri-socket unix:///run/cri-dockerd.sock \
  --upload-certs

#Join additional master (control-plane) nodes

kubeadm join kubeapi.flannel.com:6443 --token 9qajrn.br48bhnpqyhq593p \

--discovery-token-ca-cert-hash sha256:4b72b489daf2bb7052c67065339796f966a666de6068735babf510259c0717ca \

--control-plane --certificate-key 19db0bbf09af6973df6cd0ad93b974be028bbabcde41c714b5ecc3616487a776 \

--cri-socket unix:///run/cri-dockerd.sock

#Join worker nodes
kubeadm join kubeapi.flannel.com:6443 --token 9qajrn.br48bhnpqyhq593p \
  --discovery-token-ca-cert-hash sha256:4b72b489daf2bb7052c67065339796f966a666de6068735babf510259c0717ca \
  --cri-socket unix:///run/cri-dockerd.sock

[root@k8s-flannel-master-01 ~]# mkdir -p $HOME/.kube
[root@k8s-flannel-master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-flannel-master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get node -owide
NAME                    STATUS     ROLES           AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k8s-flannel-master-01   NotReady   control-plane   13m   v1.25.3   192.168.247.81   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
k8s-flannel-node-01     NotReady   <none>          83s   v1.25.3   192.168.247.84   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
k8s-flannel-node-02     NotReady   <none>          79s   v1.25.3   192.168.247.85   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get pod -A
NAMESPACE     NAME                                            READY   STATUS    RESTARTS   AGE
kube-system   coredns-565d847f94-khn5v                        0/1     Pending   0          128m
kube-system   coredns-565d847f94-xsvrp                        0/1     Pending   0          128m
kube-system   etcd-k8s-flannel-master-01                      1/1     Running   0          128m
kube-system   kube-apiserver-k8s-flannel-master-01            1/1     Running   0          128m
kube-system   kube-controller-manager-k8s-flannel-master-01   1/1     Running   0          128m
kube-system   kube-proxy-ccsp9                                1/1     Running   0          115m
kube-system   kube-proxy-jlhdb                                1/1     Running   0          128m
kube-system   kube-proxy-wdnjh                                1/1     Running   0          115m
kube-system   kube-scheduler-k8s-flannel-master-01            1/1     Running   0          128m
[root@k8s-flannel-master-01 ~]#

#Install flannel
mkdir /opt/bin/
curl -L https://github.com/flannel-io/flannel/releases/download/v0.20.1/flanneld-amd64 -o /opt/bin/flanneld
chmod +x /opt/bin/flanneld

#Download the flannel deployment manifests
wget https://github.com/flannel-io/flannel/archive/refs/tags/v0.20.1.zip
unzip v0.20.1.zip
cd flannel-0.20.1/Documentation

[root@k8s-flannel-master-01 ~]# kubectl apply -f kube-flannel.yaml #install the flannel network plugin
namespace/kube-flannel created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get node -owide
NAME                    STATUS   ROLES           AGE    VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k8s-flannel-master-01   Ready    control-plane   158m   v1.25.3   192.168.247.81   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
k8s-flannel-node-01     Ready    <none>          146m   v1.25.3   192.168.247.84   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
k8s-flannel-node-02     Ready    <none>          146m   v1.25.3   192.168.247.85   <none>        Ubuntu 20.04.3 LTS   5.4.0-122-generic   docker://20.10.21
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get pod -A
NAMESPACE      NAME                                            READY   STATUS    RESTARTS   AGE
kube-flannel   kube-flannel-ds-5n4qv                           1/1     Running   0          5m8s
kube-flannel   kube-flannel-ds-8b97k                           1/1     Running   0          5m8s
kube-flannel   kube-flannel-ds-bbksr                           1/1     Running   0          5m8s
kube-system    coredns-565d847f94-khn5v                        1/1     Running   0          158m
kube-system    coredns-565d847f94-xsvrp                        1/1     Running   0          158m
kube-system    etcd-k8s-flannel-master-01                      1/1     Running   0          158m
kube-system    kube-apiserver-k8s-flannel-master-01            1/1     Running   0          158m
kube-system    kube-controller-manager-k8s-flannel-master-01   1/1     Running   0          158m
kube-system    kube-proxy-ccsp9                                1/1     Running   0          146m
kube-system    kube-proxy-jlhdb                                1/1     Running   0          158m
kube-system    kube-proxy-wdnjh                                1/1     Running   0          146m
kube-system    kube-scheduler-k8s-flannel-master-01            1/1     Running   0          158m
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl create deployment nginx --image=nginx:1.23.2-alpine --replicas=3
deployment.apps/nginx created
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get pod -owide
NAME                    READY   STATUS    RESTARTS   AGE    IP           NODE                  NOMINATED NODE   READINESS GATES
nginx-5cd78c5d7-2nll4   1/1     Running   0          3m8s   10.244.1.2   k8s-flannel-node-01   <none>           <none>
nginx-5cd78c5d7-gb7f6   1/1     Running   0          3m8s   10.244.2.4   k8s-flannel-node-02   <none>           <none>
nginx-5cd78c5d7-hf6nr   1/1     Running   0          3m8s   10.244.1.3   k8s-flannel-node-01   <none>           <none>
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl create service nodeport nginx --tcp=80:80
service/nginx created
[root@k8s-flannel-master-01 ~]# kubectl get svc -l app=nginx
NAME    TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
nginx   NodePort   10.101.156.194   <none>        80:31818/TCP   13s
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# curl 192.168.247.81:31818
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
[root@k8s-flannel-master-01 ~]#

3. Flannel Pod Communication

3.1 CNI Information and Routing Tables

[root@k8s-flannel-master-01 /]# cat /run/flannel/subnet.env #subnet information

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true

[root@k8s-flannel-master-01 /]#
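The values in `subnet.env` relate to each other in a way that is easy to check programmatically. The sketch below parses the file content shown above (pasted in as a string, so it runs without a cluster); the function name and the keys of the returned dictionary are made up for this example.

```python
import ipaddress

SUBNET_ENV = """\
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
"""

def parse_subnet_env(text):
    """Turn KEY=VALUE lines into typed values."""
    env = dict(line.split("=", 1) for line in text.splitlines() if "=" in line)
    gateway = ipaddress.ip_interface(env["FLANNEL_SUBNET"])
    return {
        "cluster": ipaddress.ip_network(env["FLANNEL_NETWORK"]),
        "node_subnet": gateway.network,  # 10.244.0.0/24 for this node
        "bridge_ip": gateway.ip,         # first address, used by cni0
        "mtu": int(env["FLANNEL_MTU"]),
        "ipmasq": env["FLANNEL_IPMASQ"] == "true",
    }

info = parse_subnet_env(SUBNET_ENV)
print(info["node_subnet"], info["bridge_ip"], info["mtu"])
```

Note that `FLANNEL_SUBNET` is written as an interface address (`10.244.0.1/24`), which is why it yields both the node's subnet and the bridge address.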

[root@k8s-flannel-node-01 ~]# ll -h /var/lib/cni/flannel/
total 20K
drwx------ 2 root root 4.0K Nov  5 22:26 ./
drwx------ 5 root root 4.0K Nov  5 14:26 ../
-rw------- 1 root root  242 Nov  5 14:26 0f19c73c3ace6ecabab499e3780b374c39f2eb59141dd6620b6c8d9f4565a2a6
-rw------- 1 root root  242 Nov  5 14:26 20562f6e4041bd1b3d2e28666955f7e8d9c20e69d9f40d2a21743cb1f95bb270
-rw------- 1 root root  242 Nov  5 22:25 55572972a6b6768b4874a99beb41887400de8d963a23ff46fa8c78481d868ed7
[root@k8s-flannel-node-01 ~]# cd /var/lib/cni/flannel/
[root@k8s-flannel-node-01 flannel]# cat 0f19c73c3ace6ecabab499e3780b374c39f2eb59141dd6620b6c8d9f4565a2a6 |jq
{
  "cniVersion": "0.3.1",
  "hairpinMode": true,
  "ipMasq": false,
  "ipam": {
    "ranges": [
      [
        {
          "subnet": "10.244.1.0/24"
        }
      ]
    ],
    "routes": [
      {
        "dst": "10.244.0.0/16"
      }
    ],
    "type": "host-local"
  },
  "isDefaultGateway": true,
  "isGateway": true,
  "mtu": 1450,
  "name": "cbr0",
  "type": "bridge"
}
[root@k8s-flannel-node-01 flannel]# cat 20562f6e4041bd1b3d2e28666955f7e8d9c20e69d9f40d2a21743cb1f95bb270 |jq
{
  "cniVersion": "0.3.1",
  "hairpinMode": true,
  "ipMasq": false,
  "ipam": {
    "ranges": [
      [
        {
          "subnet": "10.244.1.0/24"
        }
      ]
    ],
    "routes": [
      {
        "dst": "10.244.0.0/16"
      }
    ],
    "type": "host-local"
  },
  "isDefaultGateway": true,
  "isGateway": true,
  "mtu": 1450,
  "name": "cbr0",
  "type": "bridge"
}
[root@k8s-flannel-node-01 flannel]# cat 55572972a6b6768b4874a99beb41887400de8d963a23ff46fa8c78481d868ed7 |jq
{
  "cniVersion": "0.3.1",
  "hairpinMode": true,
  "ipMasq": false,
  "ipam": {
    "ranges": [
      [
        {
          "subnet": "10.244.1.0/24"
        }
      ]
    ],
    "routes": [
      {
        "dst": "10.244.0.0/16"
      }
    ],
    "type": "host-local"
  },
  "isDefaultGateway": true,
  "isGateway": true,
  "mtu": 1450,
  "name": "cbr0",
  "type": "bridge"
}
[root@k8s-flannel-node-01 flannel]#
[root@k8s-flannel-node-01 flannel]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.247.2   0.0.0.0         UG    100    0        0 eth0
10.244.0.0      10.244.0.0      255.255.255.0   UG    0      0        0 flannel.1
10.244.1.0      0.0.0.0         255.255.255.0   U     0      0        0 cni0
10.244.2.0      10.244.2.0      255.255.255.0   UG    0      0        0 flannel.1
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.247.0   0.0.0.0         255.255.255.0   U     100    0        0 eth0
[root@k8s-flannel-node-01 flannel]#
[root@k8s-flannel-node-01 flannel]# brctl show
bridge name     bridge id               STP enabled     interfaces
cni0            8000.8683b3645fd1       no              veth16a9e5bd
                                                        veth42d65ecf
                                                        vethc6b64752
docker0         8000.0242913af571       no
[root@k8s-flannel-node-01 flannel]#
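The routing table on node-01 decides whether a packet stays on the local bridge or enters the tunnel. The sketch below replays that decision with a longest-prefix match over the routes printed by `route -n` above; it is a simplified model of the kernel lookup (metrics and flags are ignored), and the `lookup` helper is made up for illustration.

```python
import ipaddress

# (destination, gateway, interface) copied from the `route -n` output on node-01.
ROUTES = [
    ("0.0.0.0/0", "192.168.247.2", "eth0"),
    ("10.244.0.0/24", "10.244.0.0", "flannel.1"),
    ("10.244.1.0/24", None, "cni0"),
    ("10.244.2.0/24", "10.244.2.0", "flannel.1"),
    ("172.17.0.0/16", None, "docker0"),
    ("192.168.247.0/24", None, "eth0"),
]

def lookup(dst):
    """Return (interface, gateway) of the most specific matching route."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(net), gw, dev)
        for net, gw, dev in ROUTES
        if addr in ipaddress.ip_network(net)
    ]
    _, gw, dev = max(matches, key=lambda m: m[0].prefixlen)
    return dev, gw

print(lookup("10.244.1.3"))  # pod on the same node
print(lookup("10.244.2.5"))  # pod on node-02
```

Pods in 10.244.1.0/24 are reached directly through cni0, while the subnets of the other nodes are routed to flannel.1 for VXLAN encapsulation.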

#Verify the current backend type
[root@k8s-flannel-master-01 ~]# kubectl get configmap -n kube-flannel kube-flannel-cfg -oyaml
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"cni-conf.json":"{\n \"name\": \"cbr0\",\n \"cniVersion\": \"0.3.1\",\n \"plugins\": [\n {\n \"type\": \"flannel\",\n \"delegate\": {\n \"hairpinMode\": true,\n \"isDefaultGateway\": true\n }\n },\n {\n \"type\": \"portmap\",\n \"capabilities\": {\n \"portMappings\": true\n }\n }\n ]\n}\n","net-conf.json":"{\n \"Network\": \"10.244.0.0/16\",\n \"Backend\": {\n \"Type\": \"vxlan\"\n }\n}\n"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app":"flannel","tier":"node"},"name":"kube-flannel-cfg","namespace":"kube-flannel"}}
  creationTimestamp: "2022-11-05T06:16:06Z"
  labels:
    app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
  resourceVersion: "13236"
  uid: be066181-a4ce-4604-b221-8a7d4c3629f7
[root@k8s-flannel-master-01 ~]#

#flannel vxlan uses UDP port 8472 to carry the encapsulated packets
[root@k8s-flannel-node-01 flannel]# netstat -tnulp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 127.0.0.1:10248         0.0.0.0:*               LISTEN      25287/kubelet
tcp        0      0 127.0.0.1:10249         0.0.0.0:*               LISTEN      25670/kube-proxy
tcp        0      0 127.0.0.53:53           0.0.0.0:*               LISTEN      4769/systemd-resolv
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      993/sshd: /usr/sbin
tcp        0      0 127.0.0.1:6010          0.0.0.0:*               LISTEN      91467/sshd: root@pt
tcp6       0      0 :::36769                :::*                    LISTEN      13867/cri-dockerd
tcp6       0      0 :::10250                :::*                    LISTEN      25287/kubelet
tcp6       0      0 :::10256                :::*                    LISTEN      25670/kube-proxy
tcp6       0      0 :::22                   :::*                    LISTEN      993/sshd: /usr/sbin
tcp6       0      0 ::1:6010                :::*                    LISTEN      91467/sshd: root@pt
udp        0      0 127.0.0.53:53           0.0.0.0:*                           4769/systemd-resolv
udp        0      0 0.0.0.0:8472            0.0.0.0:*                           -
udp        0      0 127.0.0.1:323           0.0.0.0:*                           5740/chronyd
udp6       0      0 ::1:323                 :::*                                5740/chronyd
[root@k8s-flannel-node-01 flannel]#

3.2 Flannel Cross-Host Pod Communication Flow

Flannel VXLAN architecture diagram

Prepare the test pods

net-test1, net-test2

[root@k8s-flannel-master-01 ~]# kubectl run net-test1 --image=centos:7.9.2009 sleep 360000
pod/net-test1 created
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl run net-test2 --image=centos:7.9.2009 sleep 360000
pod/net-test2 created
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl get pod -owide #the two test pods must be scheduled on different hosts
NAME                    READY   STATUS    RESTARTS   AGE     IP           NODE                  NOMINATED NODE   READINESS GATES
net-test1               1/1     Running   0          8m43s   10.244.1.4   k8s-flannel-node-01   <none>           <none>
net-test2               1/1     Running   0          8m18s   10.244.2.5   k8s-flannel-node-02   <none>           <none>
nginx-5cd78c5d7-2nll4   1/1     Running   0          8h      10.244.1.2   k8s-flannel-node-01   <none>           <none>
nginx-5cd78c5d7-gb7f6   1/1     Running   0          8h      10.244.2.4   k8s-flannel-node-02   <none>           <none>
nginx-5cd78c5d7-hf6nr   1/1     Running   0          8h      10.244.1.3   k8s-flannel-node-01   <none>           <none>
[root@k8s-flannel-master-01 ~]#
[root@k8s-flannel-master-01 ~]# kubectl exec -it net-test1 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
[root@net-test1 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
3: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether b6:f2:23:66:4b:fa brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.244.1.4/24 brd 10.244.1.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@net-test1 /]#
[root@k8s-flannel-master-01 ~]# kubectl exec -it net-test2 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
[root@net-test2 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
3: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether 46:70:6e:a3:c7:e5 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.244.2.5/24 brd 10.244.2.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@net-test2 /]#

#Ping net-test2 from net-test1
[root@net-test1 /]# ping 10.244.2.5
PING 10.244.2.5 (10.244.2.5) 56(84) bytes of data.
64 bytes from 10.244.2.5: icmp_seq=1 ttl=62 time=0.847 ms
64 bytes from 10.244.2.5: icmp_seq=2 ttl=62 time=1.31 ms
64 bytes from 10.244.2.5: icmp_seq=3 ttl=62 time=1.16 ms
^C
--- 10.244.2.5 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2004ms
rtt min/avg/max/mdev = 0.847/1.109/1.312/0.194 ms
[root@net-test1 /]#
[root@net-test1 /]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         10.244.1.1      0.0.0.0         UG    0      0        0 eth0
10.244.0.0      10.244.1.1      255.255.0.0     UG    0      0        0 eth0
10.244.1.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
[root@net-test1 /]# traceroute 10.244.2.5
traceroute to 10.244.2.5 (10.244.2.5), 30 hops max, 60 byte packets
 1  bogon (10.244.1.1)  3.905 ms  3.474 ms  3.415 ms
 2  bogon (10.244.2.0)  3.374 ms  3.338 ms  3.172 ms
 3  bogon (10.244.2.5)  3.041 ms  2.920 ms  2.786 ms
[root@net-test1 /]#

#Ping net-test1 from net-test2
[root@net-test2 /]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         10.244.2.1      0.0.0.0         UG    0      0        0 eth0
10.244.0.0      10.244.2.1      255.255.0.0     UG    0      0        0 eth0
10.244.2.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
[root@net-test2 /]# ping 10.244.1.4
PING 10.244.1.4 (10.244.1.4) 56(84) bytes of data.
64 bytes from 10.244.1.4: icmp_seq=1 ttl=62 time=1.13 ms
64 bytes from 10.244.1.4: icmp_seq=2 ttl=62 time=1.27 ms
^C
--- 10.244.1.4 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.139/1.204/1.270/0.074 ms
[root@net-test2 /]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         10.244.2.1      0.0.0.0         UG    0      0        0 eth0
10.244.0.0      10.244.2.1      255.255.0.0     UG    0      0        0 eth0
10.244.2.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
[root@net-test2 /]# traceroute 10.244.1.4
traceroute to 10.244.1.4 (10.244.1.4), 30 hops max, 60 byte packets
 1  bogon (10.244.2.1)  1.442 ms  1.325 ms  1.296 ms
 2  bogon (10.244.1.0)  1.280 ms  1.210 ms  1.173 ms
 3  bogon (10.244.1.4)  1.139 ms  1.092 ms  1.087 ms
[root@net-test2 /]#

Map each pod's NIC to the corresponding veth interface on its node

[root@net-test1 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
3: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether b6:f2:23:66:4b:fa brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.244.1.4/24 brd 10.244.1.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@net-test1 /]#
[root@k8s-flannel-node-01 ~]# ip link |grep ^8
8: vethc6b64752@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default
[root@k8s-flannel-node-01 ~]#
[root@net-test2 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
3: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether 46:70:6e:a3:c7:e5 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.244.2.5/24 brd 10.244.2.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@net-test2 /]#
[root@k8s-flannel-node-02 ~]# ip link |grep ^9
9: veth86e37253@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default
[root@k8s-flannel-node-02 ~]#

Second method

[root@net-test1 /]# ethtool -S eth0
NIC statistics:
     peer_ifindex: 8
     rx_queue_0_xdp_packets: 0
     rx_queue_0_xdp_bytes: 0
     rx_queue_0_xdp_drops: 0
[root@net-test1 /]#
[root@k8s-flannel-node-01 ~]# ip link |grep ^8
8: vethc6b64752@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default
[root@k8s-flannel-node-01 ~]#
[root@net-test2 /]# ethtool -S eth0
NIC statistics:
     peer_ifindex: 9
     rx_queue_0_xdp_packets: 0
     rx_queue_0_xdp_bytes: 0
     rx_queue_0_xdp_drops: 0
[root@net-test2 /]#
[root@k8s-flannel-node-02 ~]# ip link |grep ^9
9: veth86e37253@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default
[root@k8s-flannel-node-02 ~]#
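Both methods boil down to matching an interface index: the pod reports the index of its peer, and the node's `ip link` output lists the interface with that index. A small sketch of that matching, using text copied from the transcripts above as input (the helper names are made up for this example):

```python
import re

ETHTOOL_OUTPUT = """NIC statistics:
     peer_ifindex: 8
     rx_queue_0_xdp_packets: 0
"""

IP_LINK_OUTPUT = (
    "8: vethc6b64752@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 "
    "qdisc noqueue master cni0 state UP mode DEFAULT group default\n"
)

def peer_ifindex(ethtool_output):
    """Read peer_ifindex from `ethtool -S eth0` output taken inside the pod."""
    match = re.search(r"peer_ifindex:\s*(\d+)", ethtool_output)
    return int(match.group(1)) if match else None

def host_veth(ip_link_output, ifindex):
    """Find the interface name with the given index in `ip link` output from the node."""
    for line in ip_link_output.splitlines():
        match = re.match(r"(\d+):\s+([^@:\s]+)", line)
        if match and int(match.group(1)) == ifindex:
            return match.group(2)
    return None

print(host_veth(IP_LINK_OUTPUT, peer_ifindex(ETHTOOL_OUTPUT)))
```

The same index also appears in the pod's `ip a` output as the `@if8` suffix of `eth0`, which is what the first method reads.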

flannel communication flow

Capture packets with tcpdump and analyze them with Wireshark

#Look up the MAC addresses in the environment

[root@k8s-flannel-node-01 ~]# bridge fdb
01:00:5e:00:00:01 dev eth0 self permanent
33:33:00:00:00:01 dev docker0 self permanent
01:00:5e:00:00:6a dev docker0 self permanent
33:33:00:00:00:6a dev docker0 self permanent
01:00:5e:00:00:01 dev docker0 self permanent
02:42:91:3a:f5:71 dev docker0 vlan 1 master docker0 permanent
02:42:91:3a:f5:71 dev docker0 master docker0 permanent
f6:f6:fe:32:18:7f dev flannel.1 dst 192.168.247.85 self permanent
c6:46:e5:05:86:db dev flannel.1 dst 192.168.247.81 self permanent
33:33:00:00:00:01 dev cni0 self permanent
01:00:5e:00:00:6a dev cni0 self permanent
33:33:00:00:00:6a dev cni0 self permanent
01:00:5e:00:00:01 dev cni0 self permanent
33:33:ff:64:5f:d1 dev cni0 self permanent
86:83:b3:64:5f:d1 dev cni0 vlan 1 master cni0 permanent
86:83:b3:64:5f:d1 dev cni0 master cni0 permanent
4a:22:34:35:69:f7 dev vethc6b64752 vlan 1 master cni0 permanent
4a:22:34:35:69:f7 dev vethc6b64752 master cni0 permanent
33:33:00:00:00:01 dev vethc6b64752 self permanent
01:00:5e:00:00:01 dev vethc6b64752 self permanent
33:33:ff:35:69:f7 dev vethc6b64752 self permanent
42:32:48:43:7e:80 dev veth18446f29 master cni0
f2:f4:a9:d8:31:8c dev veth18446f29 vlan 1 master cni0 permanent
f2:f4:a9:d8:31:8c dev veth18446f29 master cni0 permanent
33:33:00:00:00:01 dev veth18446f29 self permanent
01:00:5e:00:00:01 dev veth18446f29 self permanent
33:33:ff:d8:31:8c dev veth18446f29 self permanent
3a:1e:83:fe:13:f2 dev vethfe896faa vlan 1 master cni0 permanent
3a:1e:83:fe:13:f2 dev vethfe896faa master cni0 permanent
33:33:00:00:00:01 dev vethfe896faa self permanent
01:00:5e:00:00:01 dev vethfe896faa self permanent
33:33:ff:fe:13:f2 dev vethfe896faa self permanent
[root@k8s-flannel-node-01 ~]#

[root@k8s-flannel-node-02 ~]# bridge fdb

01:00:5e:00:00:01 dev eth0 self permanent

33:33:00:00:00:01 dev docker0 self permanent

01:00:5e:00:00:6a dev docker0 self permanent

33:33:00:00:00:6a dev docker0 self permanent

01:00:5e:00:00:01 dev docker0 self permanent

02:42:1e:1c:5f:6f dev docker0 vlan 1 master docker0 permanent

02:42:1e:1c:5f:6f dev docker0 master docker0 permanent

2a:6a:0a:34:73:e0 dev flannel.1 dst 192.168.247.84 self permanent

c6:46:e5:05:86:db dev flannel.1 dst 192.168.247.81 self permanent

33:33:00:00:00:01 dev cni0 self permanent

01:00:5e:00:00:6a dev cni0 self permanent

33:33:00:00:00:6a dev cni0 self permanent

01:00:5e:00:00:01 dev cni0 self permanent

33:33:ff:4c:7e:2a dev cni0 self permanent

1e:9b:66:4c:7e:2a dev cni0 vlan 1 master cni0 permanent

1e:9b:66:4c:7e:2a dev cni0 master cni0 permanent

de:7c:83:d8:5c:e5 dev vethc85a035a master cni0

ce:d7:1d:26:f6:e2 dev vethc85a035a vlan 1 master cni0 permanent

ce:d7:1d:26:f6:e2 dev vethc85a035a master cni0 permanent

33:33:00:00:00:01 dev vethc85a035a self permanent

01:00:5e:00:00:01 dev vethc85a035a self permanent

33:33:ff:26:f6:e2 dev vethc85a035a self permanent

e6:9a:5a:a5:37:01 dev vethe1af2650 master cni0

ce:40:0a:82:c2:f7 dev vethe1af2650 vlan 1 master cni0 permanent

ce:40:0a:82:c2:f7 dev vethe1af2650 master cni0 permanent

33:33:00:00:00:01 dev vethe1af2650 self permanent

01:00:5e:00:00:01 dev vethe1af2650 self permanent

33:33:ff:82:c2:f7 dev vethe1af2650 self permanent

96:ae:9c:ba:2e:3a dev veth86e37253 vlan 1 master cni0 permanent

96:ae:9c:ba:2e:3a dev veth86e37253 master cni0 permanent

33:33:00:00:00:01 dev veth86e37253 self permanent

01:00:5e:00:00:01 dev veth86e37253 self permanent

33:33:ff:ba:2e:3a dev veth86e37253 self permanent

0a:a5:b8:15:83:18 dev veth14f1215f master cni0

fa:8d:63:80:59:6c dev veth14f1215f vlan 1 master cni0 permanent

fa:8d:63:80:59:6c dev veth14f1215f master cni0 permanent

33:33:00:00:00:01 dev veth14f1215f self permanent

01:00:5e:00:00:01 dev veth14f1215f self permanent

33:33:ff:80:59:6c dev veth14f1215f self permanent

[root@k8s-flannel-node-02 ~]#
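In the `bridge fdb` listings, the entries on `flannel.1` that carry a `dst` field are the ones that matter for VXLAN: they map the MAC of a remote VTEP to the IP of the host it lives on. The sketch below extracts that mapping from a few lines copied from node-01's output; the function name is made up for illustration.

```python
FDB_OUTPUT = """\
02:42:91:3a:f5:71 dev docker0 master docker0 permanent
f6:f6:fe:32:18:7f dev flannel.1 dst 192.168.247.85 self permanent
c6:46:e5:05:86:db dev flannel.1 dst 192.168.247.81 self permanent
33:33:00:00:00:01 dev cni0 self permanent
"""

def vtep_table(fdb_output, device="flannel.1"):
    """Map remote VTEP MAC -> remote host IP for the given VXLAN device."""
    table = {}
    for line in fdb_output.splitlines():
        fields = line.split()
        if "dst" in fields and device in fields:
            table[fields[0]] = fields[fields.index("dst") + 1]
    return table

for mac, host in vtep_table(FDB_OUTPUT).items():
    print(mac, "->", host)
```

For a packet whose inner destination MAC is f6:f6:fe:32:18:7f, node-01 therefore sends the outer UDP packet to 192.168.247.85, which is node-02.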

#MAC addresses of the test pods
net-test1 eth0 b6:f2:23:66:4b:fa
net-test2 eth0 46:70:6e:a3:c7:e5

#node-01
eth0 00:0c:29:0f:da:1d
vethc6b64752 4a:22:34:35:69:f7
cni0 86:83:b3:64:5f:d1
flannel.1 2a:6a:0a:34:73:e0

#node-02
eth0 00:0c:29:44:70:8c
veth86e37253 96:ae:9c:ba:2e:3a
cni0 1e:9b:66:4c:7e:2a
flannel.1 f6:f6:fe:32:18:7f

[root@k8s-flannel-master-01 ~]# kubectl exec -it net-test1 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
[root@net-test1 /]# ping 10.244.2.5
PING 10.244.2.5 (10.244.2.5) 56(84) bytes of data.
64 bytes from 10.244.2.5: icmp_seq=1 ttl=62 time=1.28 ms
64 bytes from 10.244.2.5: icmp_seq=2 ttl=62 time=1.33 ms
64 bytes from 10.244.2.5: icmp_seq=3 ttl=62 time=1.19 ms
64 bytes from 10.244.2.5: icmp_seq=4 ttl=62 time=1.04 ms
64 bytes from 10.244.2.5: icmp_seq=5 ttl=62 time=1.10 ms
64 bytes from 10.244.2.5: icmp_seq=6 ttl=62 time=0.975 ms
64 bytes from 10.244.2.5: icmp_seq=7 ttl=62 time=1.55 ms
64 bytes from 10.244.2.5: icmp_seq=8 ttl=62 time=3.10 ms
64 bytes from 10.244.2.5: icmp_seq=9 ttl=62 time=0.979 ms
64 bytes from 10.244.2.5: icmp_seq=10 ttl=62 time=1.70 ms
64 bytes from 10.244.2.5: icmp_seq=11 ttl=62 time=1.24 ms
64 bytes from 10.244.2.5: icmp_seq=12 ttl=62 time=1.13 ms
64 bytes from 10.244.2.5: icmp_seq=13 ttl=62 time=1.51 ms
64 bytes from 10.244.2.5: icmp_seq=14 ttl=62 time=1.31 ms
64 bytes from 10.244.2.5: icmp_seq=15 ttl=62 time=1.02 ms
64 bytes from 10.244.2.5: icmp_seq=16 ttl=62 time=0.931 ms
64 bytes from 10.244.2.5: icmp_seq=17 ttl=62 time=1.38 ms
64 bytes from 10.244.2.5: icmp_seq=18 ttl=62 time=2.56 ms
^C
--- 10.244.2.5 ping statistics ---
18 packets transmitted, 18 received, 0% packet loss, time 34146ms
rtt min/avg/max/mdev = 0.843/1.373/4.367/0.556 ms
[root@net-test1 /]#

tcpdump capture commands

#Capture on node-01

tcpdump -nn -vvv -i vethc6b64752 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 01.flannel-vxlan-vethc6b64752-out.pcap

tcpdump -nn -vvv -i cni0 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 02.flannel-vxlan-cni-out.pcap

tcpdump -nn -vvv -i flannel.1 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 03.flannel-vxlan-flannel-1-out.pcap

tcpdump -nn -vvv -i eth0 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 04.flannel-vxlan-eth0-out.pcap

tcpdump -i eth0 udp port 8472 -w 05.flannel-vxlan-eth0-8472-out.pcap

#Capture on node-02

tcpdump -nn -vvv -i eth0 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 06.flannel-vxlan-eth0-in.pcap

tcpdump -nn -vvv -i flannel.1 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 07.flannel-vxlan-flannel-1-in.pcap

tcpdump -nn -vvv -i cni0 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 08.flannel-vxlan-cni-in.pcap

tcpdump -nn -vvv -i veth86e37253 -vvv -nn ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w 09.flannel-vxlan-vethc6b64752-in.pcap

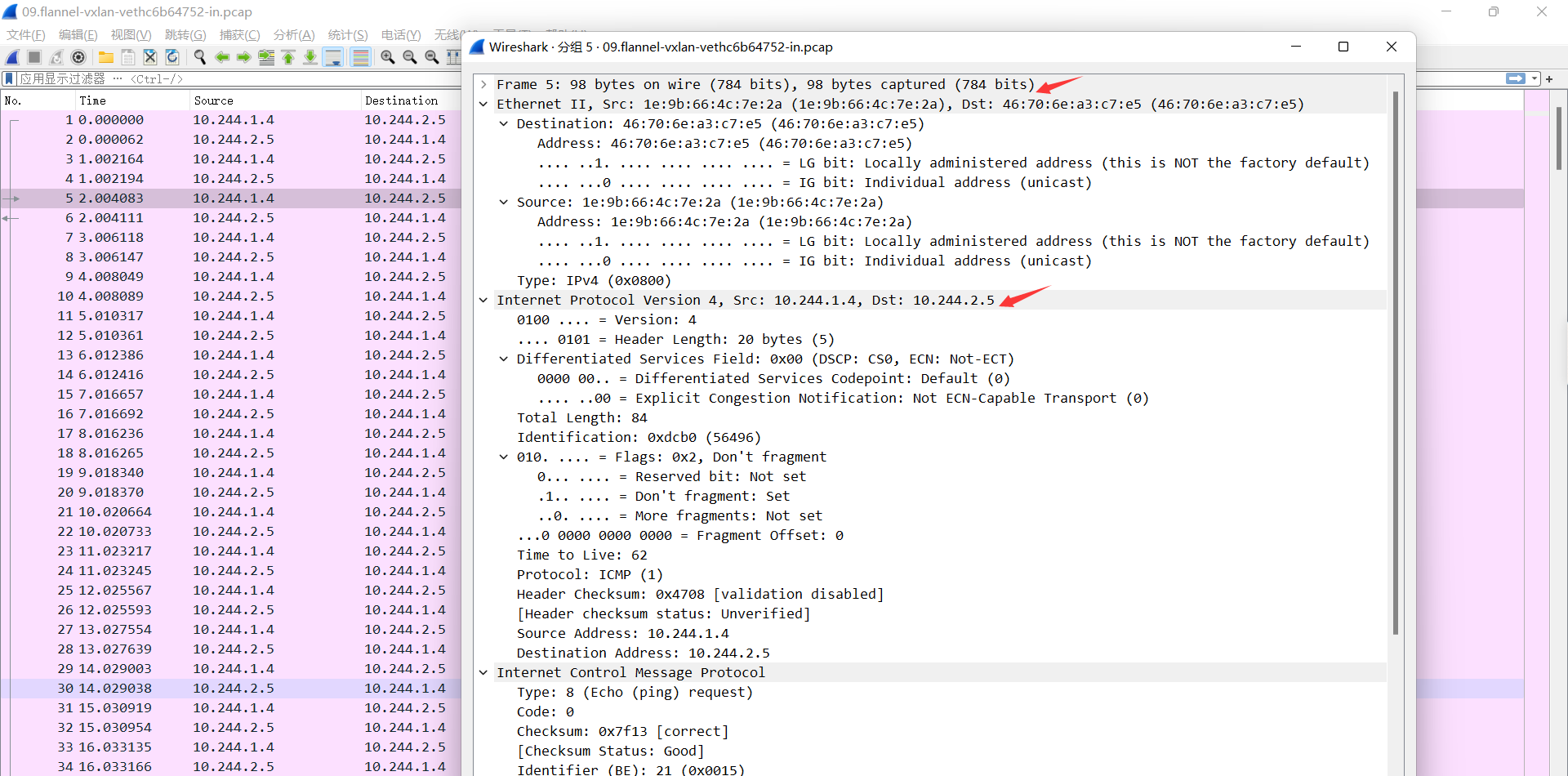

3.3 Analysis with Wireshark

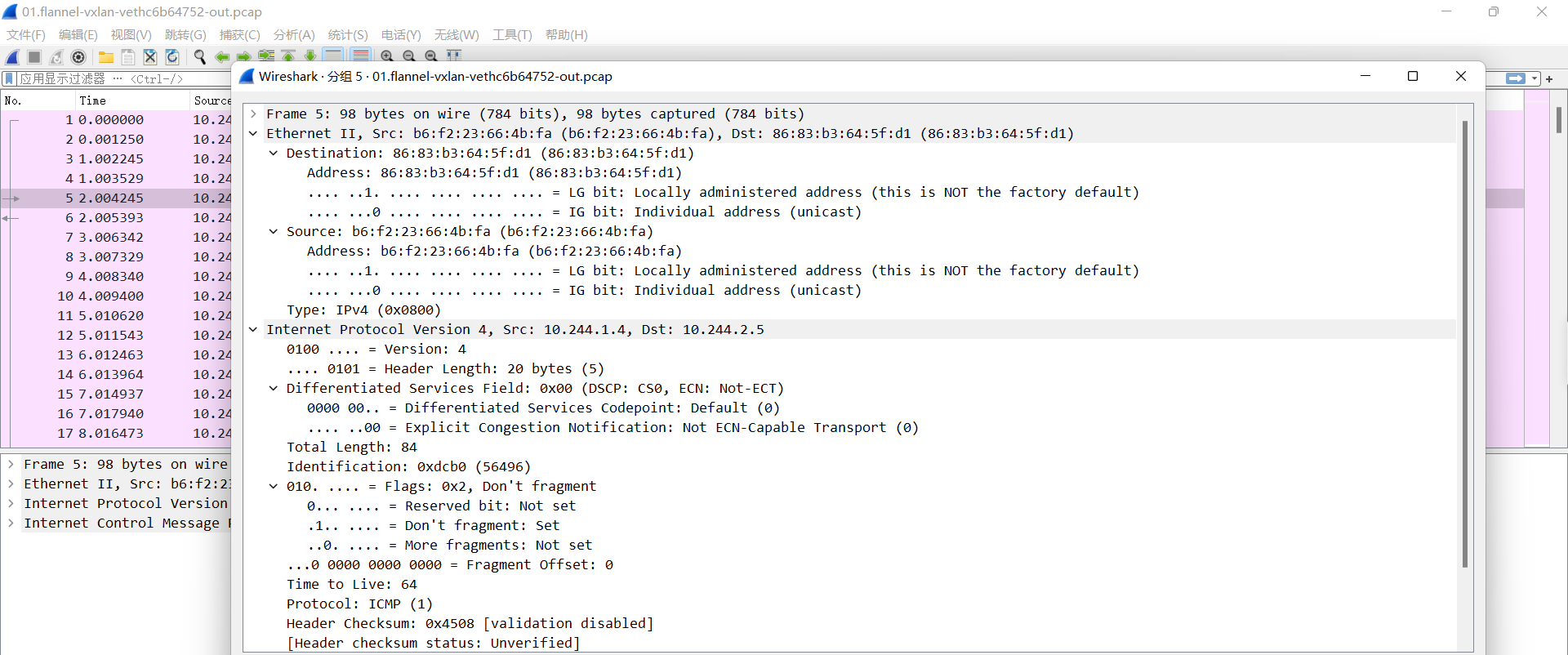

01.flannel-vxlan-vethc6b64752-out.pcap

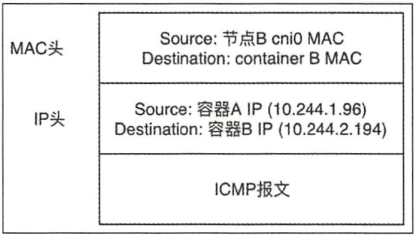

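The source pod sends the request. At this point the source IP is the IP of the pod's eth0, the source MAC is the MAC of the pod's eth0, the destination IP is the destination pod's IP, and the destination MAC is the MAC of the gateway (cni0).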

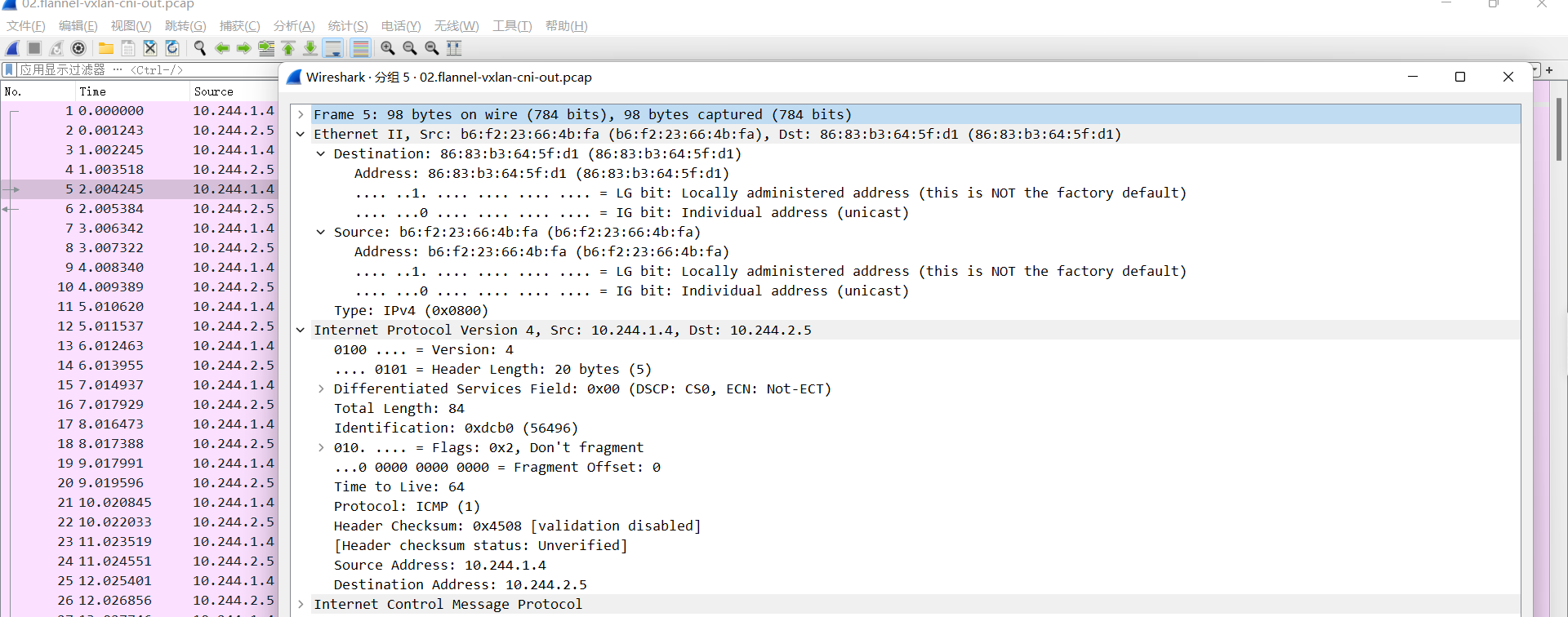

02.flannel-vxlan-cni-out.pcap

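The packet reaches the gateway cni0 through the veth pair. cni0 sees that the destination MAC is its own and then checks the destination IP: if it belongs to the same bridge, the packet is forwarded directly; otherwise it is sent to flannel.1. The packet at this point looks as follows:

Source IP: pod IP, 10.244.1.4

Destination IP: pod IP, 10.244.2.5

Source MAC: b6:f2:23:66:4b:fa

Destination MAC: 86:83:b3:64:5f:d1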

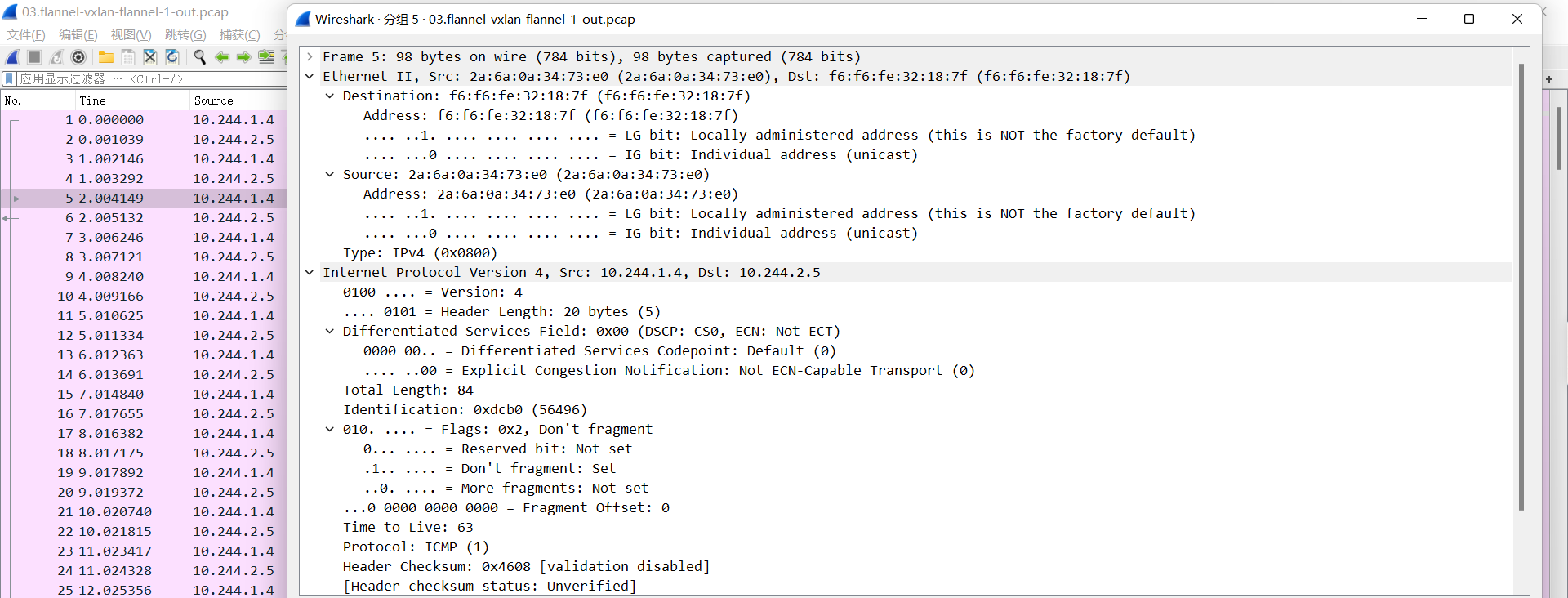

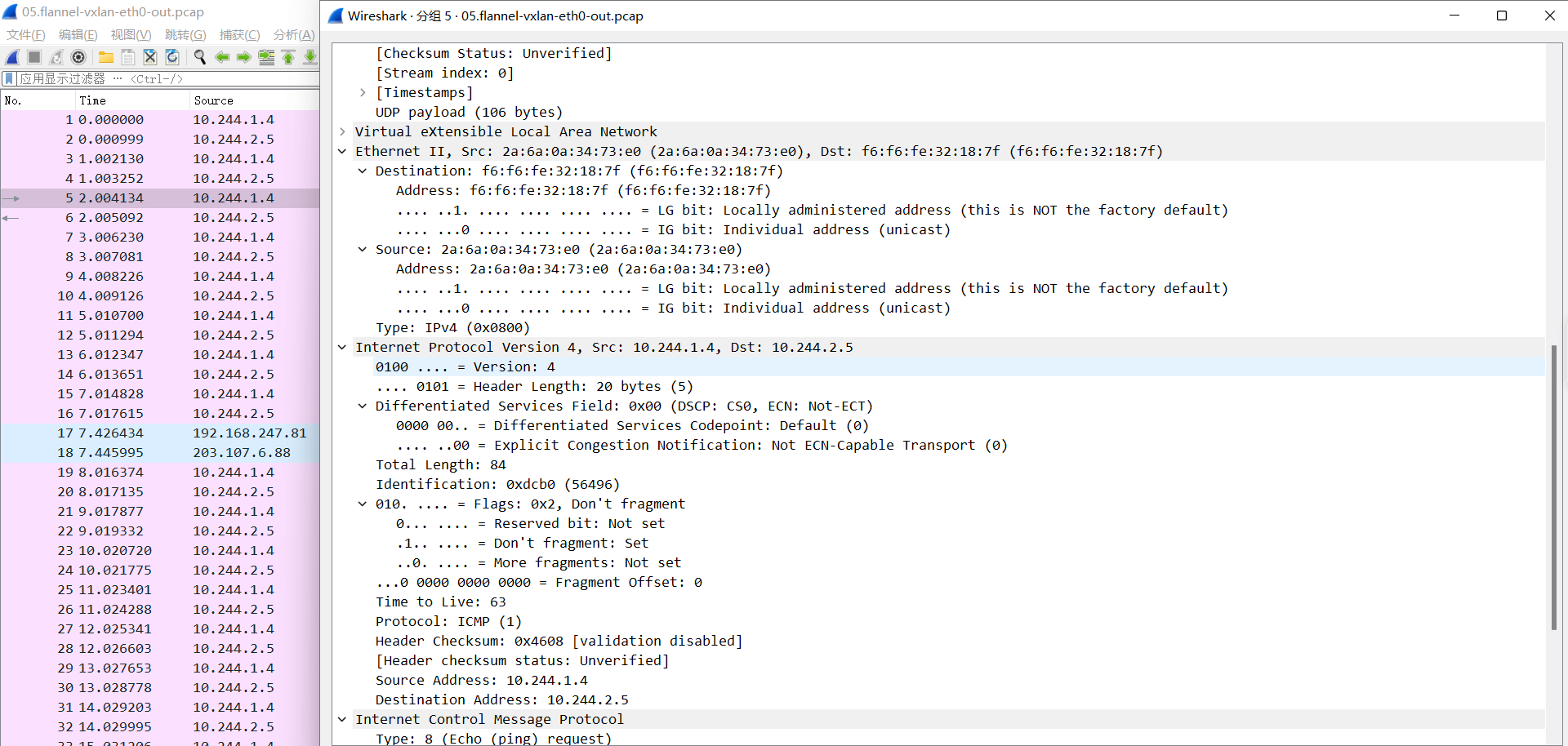

03.flannel-vxlan-flannel-1-out.pcap

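The packet reaches flannel.1, which sees that the destination MAC is its own and matches the routing table. It first builds the inner frame of the overlay packet, mainly rewriting the destination MAC to the MAC of flannel.1 on the destination pod's host and the source MAC to the MAC of flannel.1 on the current host.

Source IP: pod IP, 10.244.1.4

Destination IP: pod IP, 10.244.2.5

Source MAC: 2a:6a:0a:34:73:e0, the MAC of flannel.1 on the source pod's host

Destination MAC: f6:f6:fe:32:18:7f, the MAC of flannel.1 on the destination pod's host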

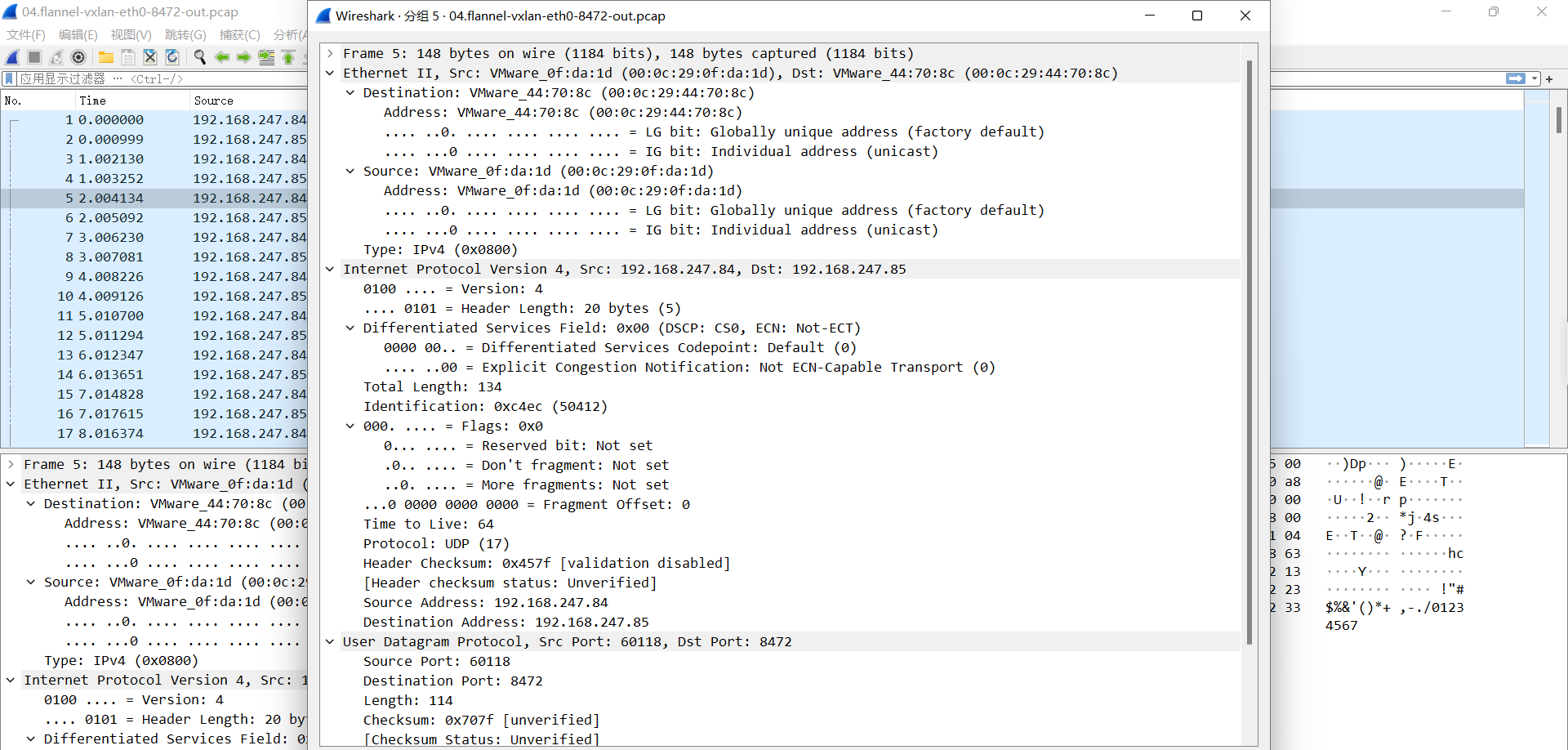

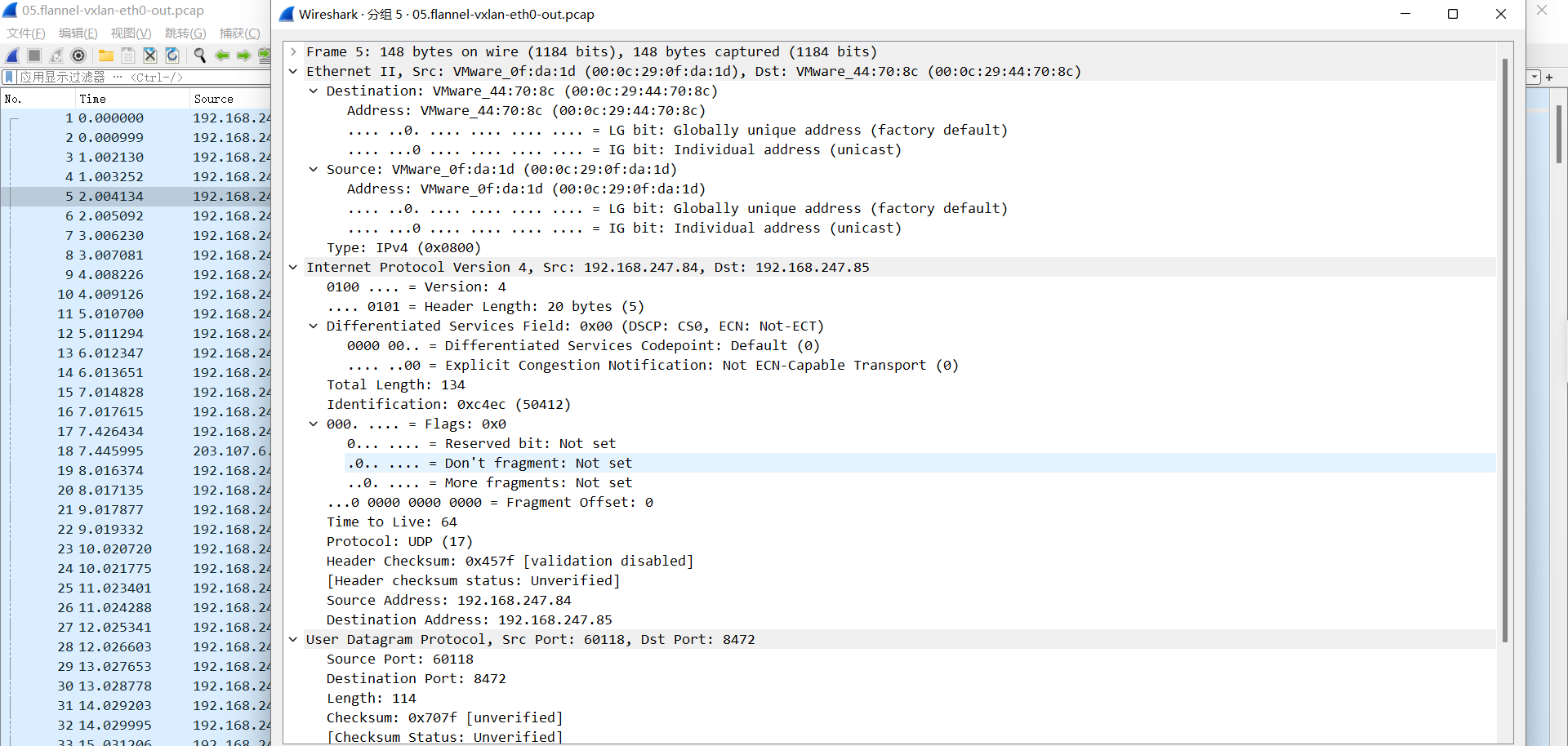

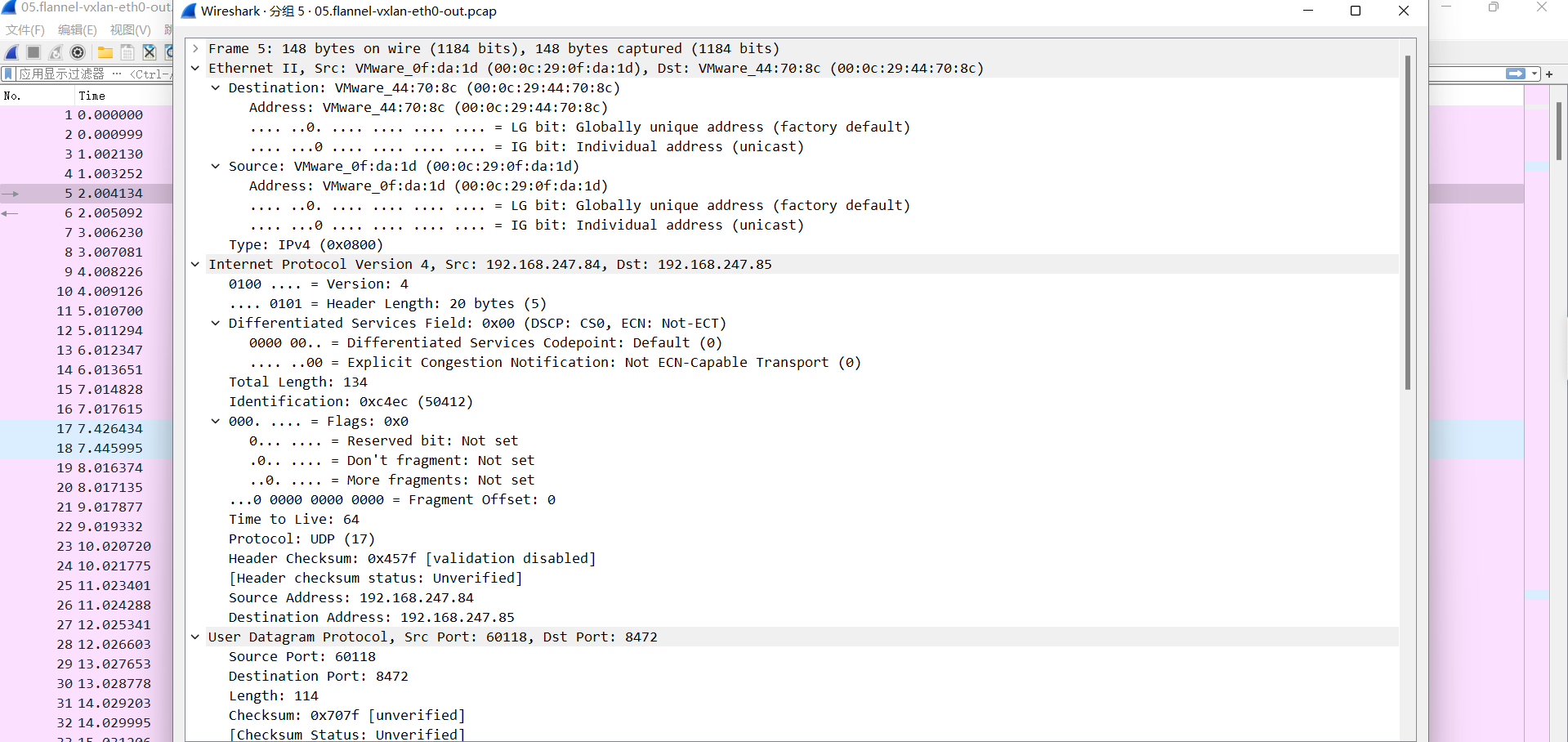

04.flannel-vxlan-eth0-out.pcap

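VXLAN ID: 1

UDP source port: random

UDP destination port: 8472

Source IP: IP of the physical NIC on the source pod's host (192.168.247.84)

Destination IP: IP of the physical NIC on the destination pod's host (192.168.247.85)

Source MAC: 00:0c:29:0f:da:1d, the physical NIC of the source pod's host

Destination MAC: 00:0c:29:44:70:8c, the physical NIC of the destination pod's host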
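The outer headers listed above are also the reason the pod interfaces report an MTU of 1450. A short sketch of the arithmetic, assuming a physical MTU of 1500 bytes on eth0 (not stated explicitly in the captures) and an outer IPv4 header without options:

```python
# Bytes added inside the outer IP packet by VXLAN encapsulation.
VXLAN_OVERHEAD = {
    "outer IPv4 header": 20,
    "outer UDP header": 8,
    "VXLAN header": 8,
    "inner Ethernet header": 14,
}

def vxlan_mtu(physical_mtu=1500):
    """MTU left for the inner IP packet after VXLAN encapsulation."""
    return physical_mtu - sum(VXLAN_OVERHEAD.values())

print(sum(VXLAN_OVERHEAD.values()), vxlan_mtu())
```

The inner Ethernet header counts toward the overhead because the whole inner frame, not just the inner IP packet, travels as UDP payload.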

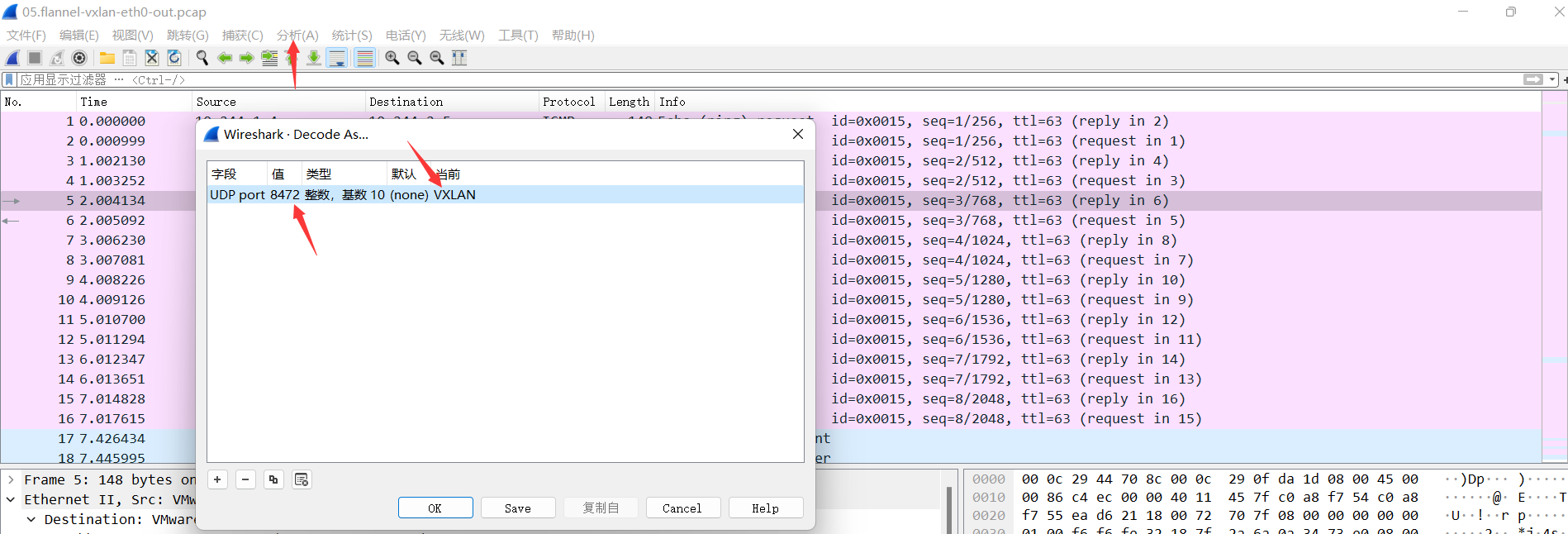

05.flannel-vxlan-eth0-8472-out.pcap

After adjusting how Wireshark decodes the packets, the VXLAN tunnel encapsulation becomes visible.

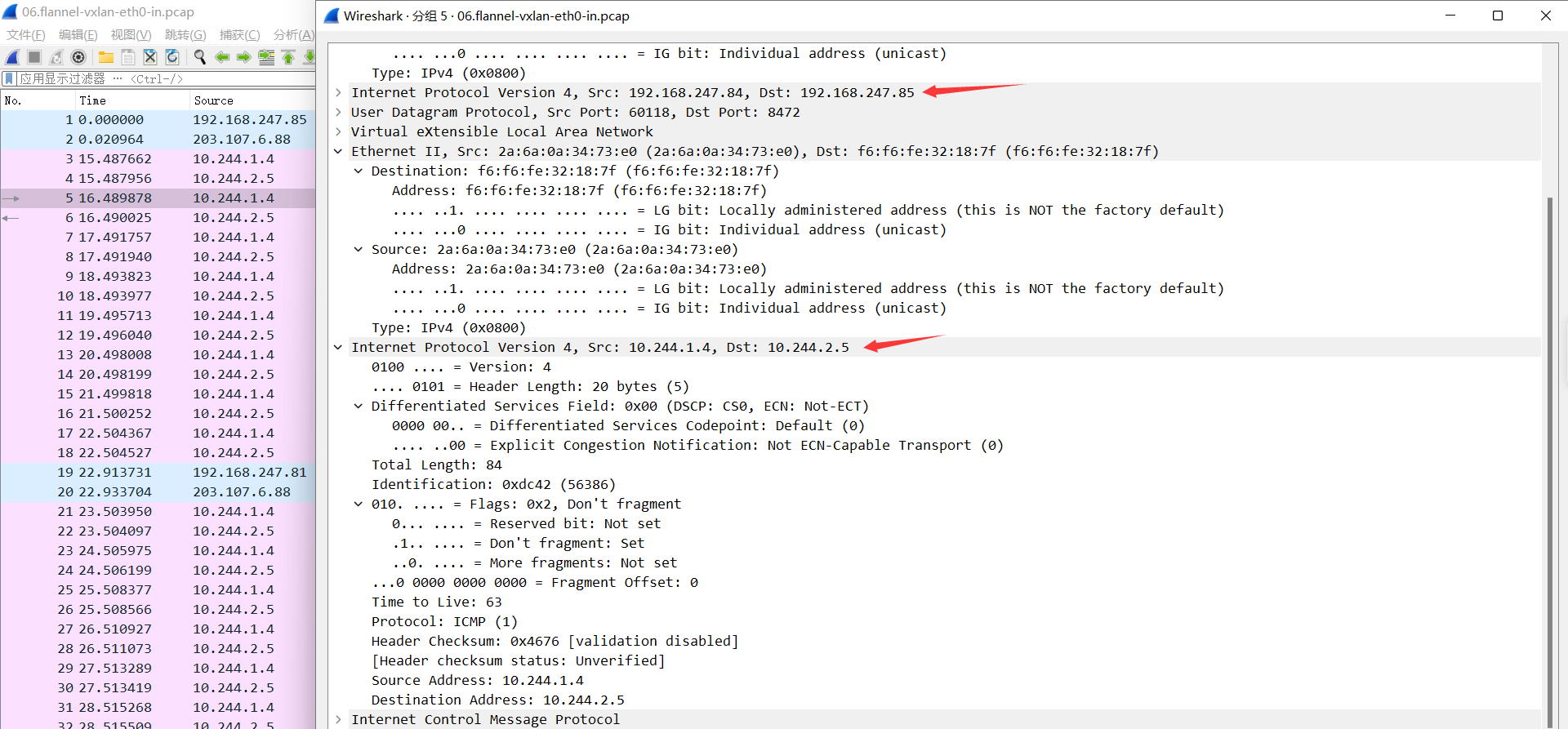

06.flannel-vxlan-eth0-in.pcap

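The outer destination IP is this host's physical NIC. After decapsulation, an inner destination IP and destination MAC appear: the destination IP is 10.244.2.5 and the destination MAC is f6:f6:fe:32:18:7f (the MAC of the destination flannel.1), so the packet is handed to flannel.1.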

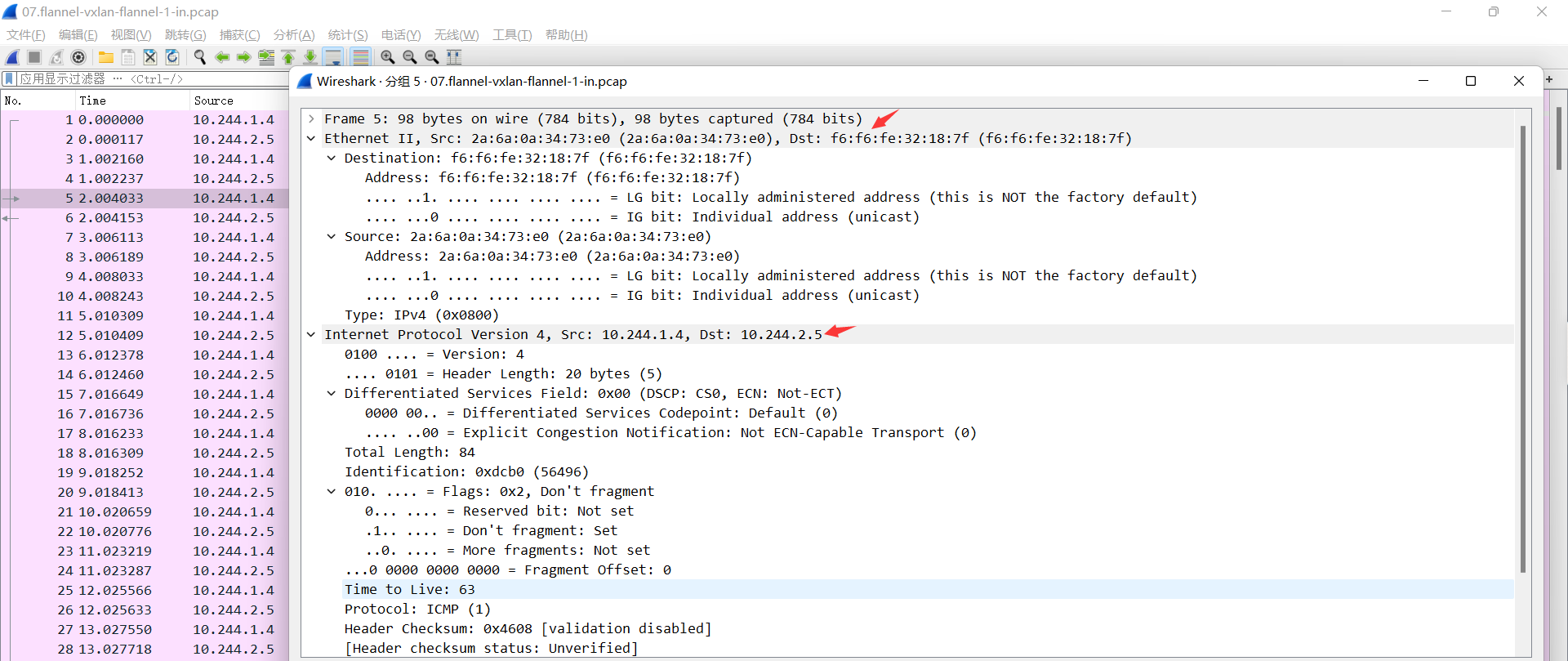

07.flannel-vxlan-flannel-1-in.pcap

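flannel.1 checks the destination IP, finds that it belongs to the subnet behind the local cni0, and forwards the request to cni0.

Destination IP: 10.244.2.5, the destination pod

Source IP: 10.244.1.4, the source pod

Destination MAC: f6:f6:fe:32:18:7f, flannel.1 on the destination pod's host

Source MAC: 2a:6a:0a:34:73:e0, flannel.1 on the source pod's host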

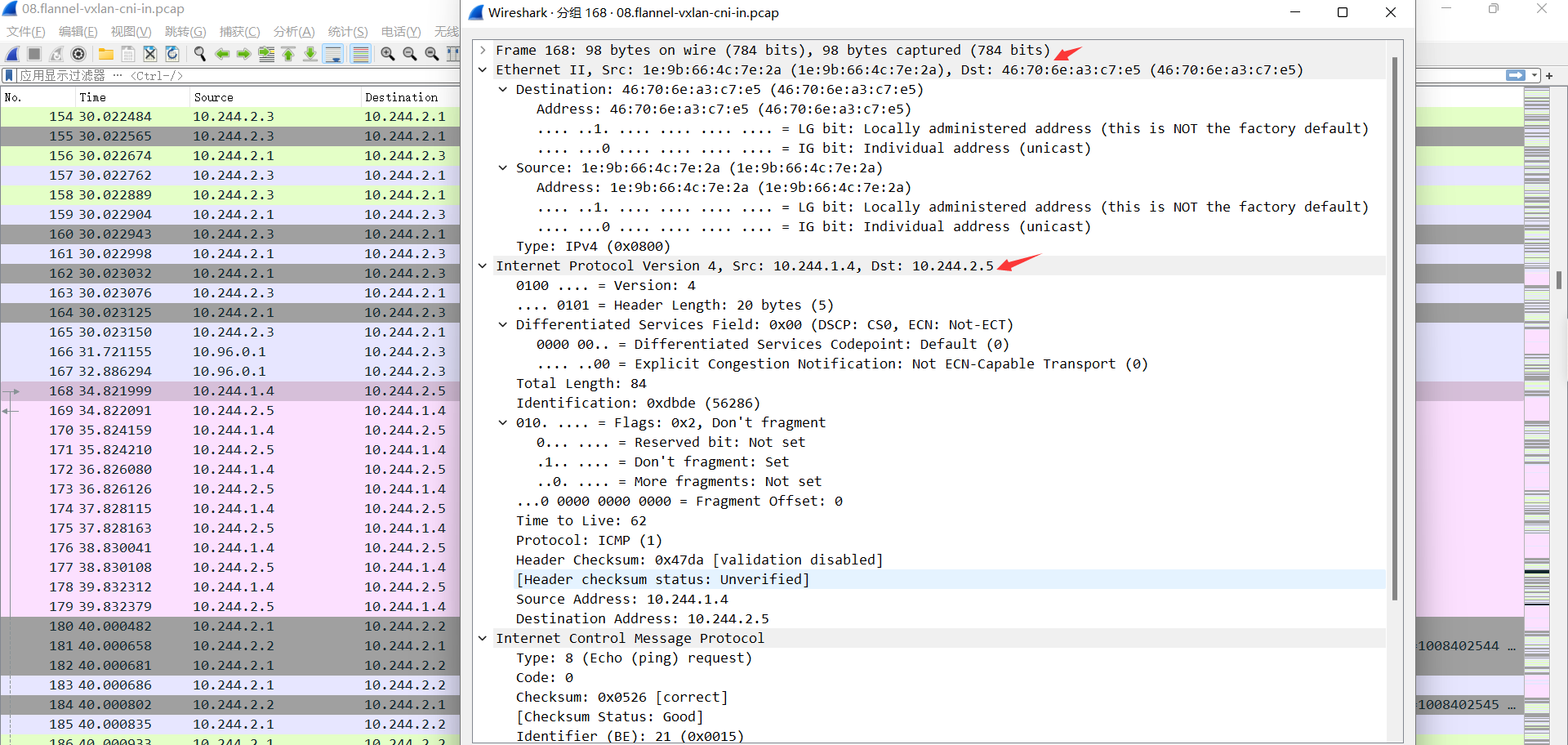

08.flannel-vxlan-cni-in.pcap

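cni0 looks up its MAC address table based on the destination IP, rewrites the destination MAC to that of the destination pod, and forwards the request to the pod:

Source IP: 10.244.1.4, the source pod

Destination IP: 10.244.2.5, the destination pod

Source MAC: MAC of cni0, 1e:9b:66:4c:7e:2a

Destination MAC: MAC of the destination pod, 46:70:6e:a3:c7:e5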

09.flannel-vxlan-vethc6b64752-in.pcap

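cni0 sees that the packet is destined for 10.244.2.5, finds in its MAC address table that this is a local port, and sends the packet to the pod through that bridge port.

Destination IP: destination pod IP, 10.244.2.5

Source IP: source pod IP, 10.244.1.4

Destination MAC: destination pod MAC, 46:70:6e:a3:c7:e5

Source MAC: MAC of cni0, 1e:9b:66:4c:7e:2a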