Installing a highly available k8s (1.24.2) cluster from binaries with easzlab: cluster maintenance and management

1. Scaling out the cluster

1.1 Adding a master node

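root@easzlab-deploy:/etc/kubeasz# ./ezctl add-master k8s-01 172.16.88.xxx #IP of the node to add

After the master is added, image pulls may fail. Configuring the private Harbor registry in containerd's config.toml lets the cluster pull images from Harbor instead of the public internet, which avoids these failures.

Edit /etc/containerd/config.toml: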

[root@easzlab-k8s-master-01 ~]# cat -n /etc/containerd/config.toml |grep -C 10 harbor

51  disable_tcp_service = true
52  enable_selinux = false
53  enable_tls_streaming = false
54  enable_unprivileged_icmp = false
55  enable_unprivileged_ports = false
56  ignore_image_defined_volumes = false
57  max_concurrent_downloads = 3
58  max_container_log_line_size = 16384
59  netns_mounts_under_state_dir = false
60  restrict_oom_score_adj = false
61  sandbox_image = "harbor.magedu.net/baseimages/pause:3.7"
62  selinux_category_range = 1024
63  stats_collect_period = 10
64  stream_idle_timeout = "4h0m0s"
65  stream_server_address = "127.0.0.1"
66  stream_server_port = "0"
67  systemd_cgroup = false
68  tolerate_missing_hugetlb_controller = true
69  unset_seccomp_profile = ""
70
71  [plugins."io.containerd.grpc.v1.cri".cni]
--
145  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."easzlab.io.local:5000"]
146  endpoint = ["http://easzlab.io.local:5000"]
147  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
148  endpoint = ["https://docker.mirrors.ustc.edu.cn", "http://hub-mirror.c.163.com"]
149  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."gcr.io"]
150  endpoint = ["https://gcr.mirrors.ustc.edu.cn"]
151  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]
152  endpoint = ["https://gcr.mirrors.ustc.edu.cn/google-containers/"]
153  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]
154  endpoint = ["https://quay.mirrors.ustc.edu.cn"]
155  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.magedu.net"]
156  endpoint = ["https://harbor.magedu.net"]
157  [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.magedu.net".tls]
158  insecure_skip_verify = true
159  [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.magedu.net".auth]
160  username = "admin"
161  password = "Harbor12345"
162
163  [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
164  tls_cert_file = ""
165  tls_key_file = ""
166
167  [plugins."io.containerd.internal.v1.opt"]
168  path = "/opt/containerd"
169

[root@easzlab-k8s-master-01 ~]#

Restart containerd:

systemctl daemon-reload && systemctl restart containerd.service
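Before restarting containerd on every node, it can help to confirm that the file really contains a mirror section for the private registry. The following is a minimal sketch; the function name has_registry_mirror is hypothetical, and it only checks for the section header shown in the listing above, not for valid TOML.

```shell
#!/usr/bin/env bash
# has_registry_mirror FILE HOST
# Succeeds if FILE contains a CRI registry mirror section for HOST, e.g.
#   [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.magedu.net"]
# (hypothetical helper, not part of kubeasz or containerd)
has_registry_mirror() {
  local file="$1" host="$2"
  grep -qF "registry.mirrors.\"${host}\"" "$file"
}

# Example, commented out so the block has no side effects when sourced:
# has_registry_mirror /etc/containerd/config.toml harbor.magedu.net \
#   && echo "mirror section present"
```

After the restart, pulling the sandbox image by hand (for example with crictl pull harbor.magedu.net/baseimages/pause:3.7, assuming crictl is installed on the node) is a quick way to confirm that the registry is reachable.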

1.2 Adding a worker node

root@easzlab-deploy:/etc/kubeasz# ./ezctl add-node k8s-01 172.16.88.yyy

2. Upgrading the k8s cluster

State before the upgrade:

root@easzlab-deploy:/etc/kubeasz# kubectl get node -owide
NAME            STATUS                     ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
172.16.88.154   Ready,SchedulingDisabled   master   11h   v1.24.2   172.16.88.154   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.155   Ready,SchedulingDisabled   master   11h   v1.24.2   172.16.88.155   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.156   Ready,SchedulingDisabled   master   11h   v1.24.2   172.16.88.156   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.157   Ready                      node     11h   v1.24.2   172.16.88.157   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.158   Ready                      node     11h   v1.24.2   172.16.88.158   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.159   Ready                      node     10h   v1.24.2   172.16.88.159   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
root@easzlab-deploy:/etc/kubeasz#

Preparation: download the Kubernetes components.

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.24.md#downloads-for-v1243 #official download page for the k8s component binaries

wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kubectl
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kubelet
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kubeadm
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kube-scheduler
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kube-proxy
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kube-controller-manager
wget https://dl.k8s.io/v1.24.3/bin/linux/amd64/kube-apiserver

The upgrade also replaces containerd. Note: if pods are running on the node being upgraded, first disable scheduling on that node and then evict its pods. The relevant commands are:

kubectl cordon <node name> #mark the node unschedulable
kubectl drain <node name> #evict the pods on the node
kubectl uncordon <node name> #make the node schedulable again

Upgrade steps:

Upgrading a master

Stop the Kubernetes component services:

systemctl stop kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet containerd

Replace the containerd binaries:

cd /root/docker/
cp containerd /opt/kube/bin/
cp containerd-shim /opt/kube/bin/
cp containerd-shim-runc-v2 /opt/kube/bin/ #if the copy fails with "cp: cannot create regular file '/opt/kube/bin/containerd-shim-runc-v2': Text file busy", reboot the VM and copy again
cp ctr /opt/kube/bin/
cp runc /opt/kube/bin/

Copy the new component binaries into place:

cd /root/zujian/
cp ./kube-apiserver /opt/kube/bin/kube-apiserver
cp ./kube-controller-manager /opt/kube/bin/kube-controller-manager
cp ./kubectl /opt/kube/bin/kubectl
cp ./kubelet /opt/kube/bin/kubelet
cp ./kube-proxy /opt/kube/bin/kube-proxy
cp ./kube-scheduler /opt/kube/bin/kube-scheduler

Start the services again:

systemctl start kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet containerd
systemctl enable kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet containerd

Upgrading a worker node

kubectl is a client binary, not a systemd service, so only kube-proxy, kubelet, and containerd are stopped and started here.

systemctl stop kube-proxy kubelet containerd
reboot
cd ./docker/
cp containerd /opt/kube/bin/
cp containerd-shim /opt/kube/bin/
cp containerd-shim-runc-v2 /opt/kube/bin/ #if the copy fails with "cp: cannot create regular file '/opt/kube/bin/containerd-shim-runc-v2': Text file busy", reboot the VM and copy again
cp ctr /opt/kube/bin/
cp runc /opt/kube/bin/
cd ./zujian/
cp ./kubectl /opt/kube/bin/kubectl
cp ./kubelet /opt/kube/bin/kubelet
cp ./kube-proxy /opt/kube/bin/kube-proxy
systemctl start kube-proxy kubelet containerd
systemctl enable kube-proxy kubelet containerd

After upgrading the first master (172.16.88.154):

root@easzlab-deploy:/etc/kubeasz# kubectl get node -owide
NAME            STATUS                     ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
172.16.88.154   Ready,SchedulingDisabled   master   12h   v1.24.3   172.16.88.154   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.155   Ready,SchedulingDisabled   master   12h   v1.24.2   172.16.88.155   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.156   Ready,SchedulingDisabled   master   11h   v1.24.2   172.16.88.156   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.157   Ready                      node     12h   v1.24.2   172.16.88.157   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.158   Ready                      node     12h   v1.24.2   172.16.88.158   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.159   Ready                      node     10h   v1.24.2   172.16.88.159   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4

Upgrade the remaining nodes one at a time. After all three masters have been upgraded:

root@easzlab-deploy:/etc/kubeasz# kubectl get node -owide
NAME            STATUS                     ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
172.16.88.154   Ready,SchedulingDisabled   master   12h   v1.24.3   172.16.88.154   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.155   Ready,SchedulingDisabled   master   12h   v1.24.3   172.16.88.155   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.156   Ready,SchedulingDisabled   master   12h   v1.24.3   172.16.88.156   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.157   Ready                      node     12h   v1.24.2   172.16.88.157   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.158   Ready                      node     12h   v1.24.2   172.16.88.158   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
172.16.88.159   Ready                      node     10h   v1.24.2   172.16.88.159   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.4
root@easzlab-deploy:/etc/kubeasz#

After the upgrade:

root@easzlab-deploy:~# kubectl get node -owide
NAME            STATUS                     ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
172.16.88.154   Ready,SchedulingDisabled   master   14h   v1.24.3   172.16.88.154   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.155   Ready,SchedulingDisabled   master   14h   v1.24.3   172.16.88.155   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.156   Ready,SchedulingDisabled   master   14h   v1.24.3   172.16.88.156   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.157   Ready                      node     14h   v1.24.3   172.16.88.157   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.158   Ready                      node     14h   v1.24.3   172.16.88.158   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
172.16.88.159   Ready                      node     12h   v1.24.3   172.16.88.159   <none>        Ubuntu 20.04.4 LTS   5.4.0-122-generic   containerd://1.6.6
root@easzlab-deploy:~# kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS        AGE
kube-system   calico-kube-controllers-5c8bb696bb-tjw6d   1/1     Running   1 (3m33s ago)   14h
kube-system   calico-node-9qxxt                          1/1     Running   1 (130m ago)    14h
kube-system   calico-node-bnp54                          1/1     Running   1 (105s ago)    12h
kube-system   calico-node-d5wn5                          1/1     Running   1 (122m ago)    14h
kube-system   calico-node-lgmg8                          1/1     Running   1 (121m ago)    14h
kube-system   calico-node-wknkt                          1/1     Running   1 (2m27s ago)   14h
kube-system   calico-node-zpdtf                          1/1     Running   1 (3m33s ago)   14h
root@easzlab-deploy:~#
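The cordon, drain, and uncordon commands and the repeated kubectl get node checks above can be wrapped in two small helpers. This is a sketch under stated assumptions: the function names are hypothetical, maintenance_plan only prints commands rather than running them, and the extra drain flags --ignore-daemonsets and --delete-emptydir-data are additions that are usually needed when DaemonSet pods (such as the calico-node pods in the listing) or emptyDir volumes exist on the node.

```shell
#!/usr/bin/env bash
# maintenance_plan NODE
# Dry run: print the command sequence for taking one node out of service
# and returning it. Nothing is executed. (hypothetical helper)
maintenance_plan() {
  local node="$1"
  echo "kubectl cordon ${node}"
  echo "kubectl drain ${node} --ignore-daemonsets --delete-emptydir-data"
  echo "# ... replace binaries and restart services on ${node} ..."
  echo "kubectl uncordon ${node}"
}

# nodes_not_at VERSION
# Read `kubectl get node` output on stdin and print the names of nodes
# whose VERSION column (5th field) differs from the target version.
nodes_not_at() {
  awk -v want="$1" 'NR > 1 && $5 != want { print $1 }'
}

# Examples, commented out because they need a live cluster:
# maintenance_plan 172.16.88.157
# kubectl get node | nodes_not_at v1.24.3
```

An empty result from nodes_not_at means every node reports the target version, which corresponds to the final listing above.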

3. Backing up and restoring etcd data

ETCDCTL_API=3 etcdctl snapshot save /etcd-backup/snapshot-`date +%F-%s`.db #back up the data

ETCDCTL_API=3 etcdctl snapshot restore snapshot-2022-07-28-1659004944.db --data-dir=/test-etcd-restore #restore the data into the given directory

Deploy nginx and tomcat as test workloads.

#Prepare the nginx deployment YAML file

root@easzlab-deploy:~# mkdir pod-test
root@easzlab-deploy:~# cd pod-test/
root@easzlab-deploy:~/pod-test# vi nginx.yaml
root@easzlab-deploy:~/pod-test# cat nginx.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: linux70-nginx-deployment-label
  name: linux70-nginx-deployment
  namespace: myserver
spec:
  replicas: 4
  selector:
    matchLabels:
      app: linux70-nginx-selector
  template:
    metadata:
      labels:
        app: linux70-nginx-selector
    spec:
      containers:
      - name: linux70-nginx-container
        image: nginx:1.20
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
#        resources:
#          limits:
#            cpu: 2
#            memory: 2Gi
#          requests:
#            cpu: 500m
#            memory: 1Gi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: linux70-nginx-service-label
  name: linux70-nginx-service
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30004
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30443
  selector:
    app: linux70-nginx-selector

root@easzlab-deploy:~/pod-test#

#Prepare the tomcat deployment YAML file

root@easzlab-deploy:~/pod-test# vi tomcat.yaml
root@easzlab-deploy:~/pod-test# cat tomcat.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: linux70-tomcat-app1-deployment-label
  name: linux70-tomcat-app1-deployment
  namespace: linux70
spec:
  replicas: 1
  selector:
    matchLabels:
      app: linux70-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: linux70-tomcat-app1-selector
    spec:
      containers:
      - name: linux70-tomcat-app1-container
        image: tomcat:7.0.109-jdk8-openjdk
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
#        resources:
#          limits:
#            cpu: 2
#            memory: 2Gi
#          requests:
#            cpu: 500m
#            memory: 1Gi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: linux70-tomcat-app1-service-label
  name: linux70-tomcat-app1-service
  namespace: linux70
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30005
  selector:
    app: linux70-tomcat-app1-selector

root@easzlab-deploy:~/pod-test#

#Create the namespaces

root@easzlab-deploy:~/pod-test# kubectl create ns myserver #create the myserver namespace
namespace/myserver created
root@easzlab-deploy:~/pod-test# kubectl create ns linux70 #create the linux70 namespace
namespace/linux70 created
root@easzlab-deploy:~/pod-test#

#Deploy the nginx and tomcat applications to the k8s cluster

root@easzlab-deploy:~/pod-test# kubectl apply -f nginx.yaml -f tomcat.yaml
deployment.apps/linux70-nginx-deployment created
service/linux70-nginx-service created
deployment.apps/linux70-tomcat-app1-deployment created
service/linux70-tomcat-app1-service created
root@easzlab-deploy:~/pod-test# kubectl get pod -A #check the status of the nginx and tomcat pods
NAMESPACE              NAME                                              READY   STATUS    RESTARTS   AGE
kube-system            calico-kube-controllers-5c8bb696bb-69kws          1/1     Running   0          77m
kube-system            calico-node-cjx4k                                 1/1     Running   0          77m
kube-system            calico-node-mr977                                 1/1     Running   0          77m
kube-system            calico-node-q7jbn                                 1/1     Running   0          77m
kube-system            calico-node-rf9fv                                 1/1     Running   0          77m
kube-system            calico-node-th8dt                                 1/1     Running   0          77m
kube-system            calico-node-xlhbr                                 1/1     Running   0          77m
kube-system            coredns-69548bdd5f-k7qwt                          1/1     Running   0          52m
kube-system            coredns-69548bdd5f-xvkbc                          1/1     Running   0          52m
kubernetes-dashboard   dashboard-metrics-scraper-8c47d4b5d-hp47z         1/1     Running   0          13m
kubernetes-dashboard   kubernetes-dashboard-5676d8b865-t9j6r             1/1     Running   0          13m
linux70                linux70-tomcat-app1-deployment-5d666575cc-t5jqm   1/1     Running   0          2m36s
myserver               linux70-nginx-deployment-55dc5fdcf9-ghgpq         1/1     Running   0          2m36s
myserver               linux70-nginx-deployment-55dc5fdcf9-hqv2m         1/1     Running   0          2m36s
myserver               linux70-nginx-deployment-55dc5fdcf9-nxwgq         1/1     Running   0          2m36s
myserver               linux70-nginx-deployment-55dc5fdcf9-zq7q6         1/1     Running   0          2m36s
root@easzlab-deploy:~/pod-test#

Back up the applications through etcd

root@easzlab-k8s-etcd-01:~# apt install etcd-client #install the etcd client

root@easzlab-k8s-etcd-01:~# mkdir /etcd-backup/ #create the etcd backup directory
root@easzlab-k8s-etcd-01:~# ETCDCTL_API=3 etcdctl snapshot save /etcd-backup/snapshot-`date +%F-%s`.db #back up the etcd data manually
Snapshot saved at /etcd-backup/snapshot-2022-07-28-1659004944.db
root@easzlab-k8s-etcd-01:~# ll -h /etcd-backup/
total 3.5M
drwxr-xr-x  2 root root 4.0K Jul 28 18:42 ./
drwxr-xr-x 21 root root 4.0K Jul 28 18:41 ../
-rw-r--r--  1 root root 3.5M Jul 28 18:42 snapshot-2022-07-28-1659004944.db
root@easzlab-k8s-etcd-01:~#
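If the snapshot command above is run on a schedule, old files accumulate in /etcd-backup/. The sketch below keeps only the newest few snapshots. The function name prune_snapshots is hypothetical, and the approach assumes the snapshot-<date>-<epoch>.db naming used above, which sorts chronologically by file name.

```shell
#!/usr/bin/env bash
# prune_snapshots DIR KEEP
# Delete all but the KEEP newest snapshot-*.db files in DIR.
# Relies on file names sorting chronologically (snapshot-<date>-<epoch>.db).
# (hypothetical helper)
prune_snapshots() {
  local dir="$1" keep="$2"
  find "$dir" -maxdepth 1 -type f -name 'snapshot-*.db' \
    | sort -r \
    | tail -n +"$((keep + 1))" \
    | while IFS= read -r f; do
        rm -f -- "$f"
      done
}

# Example, commented out so that sourcing this block deletes nothing:
# ETCDCTL_API=3 etcdctl snapshot save "/etcd-backup/snapshot-$(date +%F-%s).db"
# prune_snapshots /etcd-backup 7
```

The retention count of 7 in the example is arbitrary; choose it to match how far back a restore might need to reach.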

Simulate deleting pods, then restore them from the backup

root@easzlab-deploy:~/pod-test# kubectl get pod -A
NAMESPACE              NAME                                              READY   STATUS    RESTARTS   AGE
kube-system            calico-kube-controllers-5c8bb696bb-69kws          1/1     Running   0          143m
kube-system            calico-node-cjx4k                                 1/1     Running   0          143m
kube-system            calico-node-mr977                                 1/1     Running   0          143m
kube-system            calico-node-q7jbn                                 1/1     Running   0          143m
kube-system            calico-node-rf9fv                                 1/1     Running   0          143m
kube-system            calico-node-th8dt                                 1/1     Running   0          143m
kube-system            calico-node-xlhbr                                 1/1     Running   0          143m
kube-system            coredns-69548bdd5f-k7qwt                          1/1     Running   0          118m
kube-system            coredns-69548bdd5f-xvkbc                          1/1     Running   0          118m
kubernetes-dashboard   dashboard-metrics-scraper-8c47d4b5d-hp47z         1/1     Running   0          78m
kubernetes-dashboard   kubernetes-dashboard-5676d8b865-t9j6r             1/1     Running   0          78m
linux70                linux70-tomcat-app1-deployment-5d666575cc-t5jqm   1/1     Running   0          68m
myserver               linux70-nginx-deployment-55dc5fdcf9-ghgpq         1/1     Running   0          68m
myserver               linux70-nginx-deployment-55dc5fdcf9-hqv2m         1/1     Running   0          68m
myserver               linux70-nginx-deployment-55dc5fdcf9-nxwgq         1/1     Running   0          68m
myserver               linux70-nginx-deployment-55dc5fdcf9-zq7q6         1/1     Running   0          68m
root@easzlab-deploy:~/pod-test# kubectl delete -f nginx.yaml
deployment.apps "linux70-nginx-deployment" deleted
service "linux70-nginx-service" deleted
root@easzlab-deploy:~/pod-test# kubectl get pod -A #the nginx service no longer exists
NAMESPACE              NAME                                              READY   STATUS    RESTARTS   AGE
kube-system            calico-kube-controllers-5c8bb696bb-69kws          1/1     Running   0          143m
kube-system            calico-node-cjx4k                                 1/1     Running   0          143m
kube-system            calico-node-mr977                                 1/1     Running   0          143m
kube-system            calico-node-q7jbn                                 1/1     Running   0          143m
kube-system            calico-node-rf9fv                                 1/1     Running   0          143m
kube-system            calico-node-th8dt                                 1/1     Running   0          143m
kube-system            calico-node-xlhbr                                 1/1     Running   0          143m
kube-system            coredns-69548bdd5f-k7qwt                          1/1     Running   0          118m
kube-system            coredns-69548bdd5f-xvkbc                          1/1     Running   0          118m
kubernetes-dashboard   dashboard-metrics-scraper-8c47d4b5d-hp47z         1/1     Running   0          79m
kubernetes-dashboard   kubernetes-dashboard-5676d8b865-t9j6r             1/1     Running   0          79m
linux70                linux70-tomcat-app1-deployment-5d666575cc-t5jqm   1/1     Running   0          68m
root@easzlab-deploy:~/pod-test#

Stop all k8s-related services. Shutting down the whole cluster is recommended:

root@easzlab-deploy:~# ansible 'vm' -m shell -a "poweroff"

Stop the etcd service and clear the old etcd data

#Delete the data on the etcd-01 node

[root@easzlab-k8s-etcd-01 ~]# systemctl stop etcd
[root@easzlab-k8s-etcd-01 ~]# ll -h /var/lib/etcd/
total 12K
drwx------  3 root root 4.0K Oct 13 20:29 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
drwx------  4 root root 4.0K Oct 13 20:29 member/
[root@easzlab-k8s-etcd-01 ~]# rm -fr /var/lib/etcd/*
[root@easzlab-k8s-etcd-01 ~]# ll -h /var/lib/etcd/
total 8.0K
drwx------  2 root root 4.0K Oct 18 15:09 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
[root@easzlab-k8s-etcd-01 ~]#

#Delete the data on the etcd-02 node

[root@easzlab-k8s-etcd-02 ~]# systemctl stop etcd
[root@easzlab-k8s-etcd-02 ~]# rm -fr /var/lib/etcd/*
[root@easzlab-k8s-etcd-02 ~]# ll -h /var/lib/etcd/
total 8.0K
drwx------  2 root root 4.0K Oct 18 15:10 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
[root@easzlab-k8s-etcd-02 ~]#

#Delete the data on the etcd-03 node

[root@easzlab-k8s-etcd-03 ~]# systemctl stop etcd
[root@easzlab-k8s-etcd-03 ~]# rm -fr /var/lib/etcd/*
[root@easzlab-k8s-etcd-03 ~]# ll -h /var/lib/etcd/
total 8.0K
drwx------  2 root root 4.0K Oct 18 15:10 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
[root@easzlab-k8s-etcd-03 ~]#
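Deleting the data directory with rm -fr leaves no way back if the snapshot turns out to be unusable. A more cautious variant is to rename the directory and recreate it empty. This is only a sketch: the function name set_aside_dir is hypothetical, and mode 700 is chosen to match the drwx------ permissions shown in the listings above.

```shell
#!/usr/bin/env bash
# set_aside_dir DIR
# Rename DIR to DIR.bak-<date>-<epoch>, recreate DIR empty with mode 700,
# and print the path of the renamed copy. Run only after etcd is stopped.
# (hypothetical helper)
set_aside_dir() {
  local dir="${1%/}" backup
  backup="${dir}.bak-$(date +%F-%s)"
  mv -- "$dir" "$backup" && mkdir -m 700 -- "$dir" && echo "$backup"
}

# Example, commented out so that sourcing this block changes nothing:
# systemctl stop etcd && set_aside_dir /var/lib/etcd
```

Once the restored cluster has been verified, the renamed copy can be removed to reclaim disk space.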

Restore etcd from the backup

[root@easzlab-k8s-etcd-01 ~]# mkdir /test-etcd-restore

[root@easzlab-k8s-etcd-01 ~]# cd /etcd-backup/

root@easzlab-k8s-etcd-01:/etcd-backup# ETCDCTL_API=3 etcdctl snapshot restore snapshot-2022-07-28-1659004944.db --data-dir=/test-etcd-restore
2022-07-28 19:07:10.228436 I | mvcc: restore compact to 137736
2022-07-28 19:07:10.245163 I | etcdserver/membership: added member 8e9e05c52164694d [http://localhost:2380] to cluster cdf818194e3a8c32
root@easzlab-k8s-etcd-01:/etcd-backup# cd /test-etcd-restore/
root@easzlab-k8s-etcd-01:/test-etcd-restore# ls
member
root@easzlab-k8s-etcd-01:/test-etcd-restore#

Copy the restored data into /var/lib/etcd/ on every etcd node

[root@easzlab-k8s-etcd-01 ~]# cd /test-etcd-restore/
[root@easzlab-k8s-etcd-01 test-etcd-restore]# ls
member
[root@easzlab-k8s-etcd-01 test-etcd-restore]# cp -r member/ /var/lib/etcd/
[root@easzlab-k8s-etcd-01 test-etcd-restore]# ll -h /var/lib/etcd/
total 12K
drwx------  3 root root 4.0K Oct 18 15:12 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
drwx------  4 root root 4.0K Oct 18 15:12 member/
[root@easzlab-k8s-etcd-01 test-etcd-restore]# scp -r member/ root@172.16.88.155:/var/lib/etcd/
[root@easzlab-k8s-etcd-01 test-etcd-restore]# scp -r member/ root@172.16.88.156:/var/lib/etcd/

[root@easzlab-k8s-etcd-02 ~]# ll -h /var/lib/etcd/
total 12K
drwx------  3 root root 4.0K Oct 18 15:13 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
drwx------  4 root root 4.0K Oct 18 15:13 member/
[root@easzlab-k8s-etcd-02 ~]#

[root@easzlab-k8s-etcd-03 ~]# ll -h /var/lib/etcd/
total 12K
drwx------  3 root root 4.0K Oct 18 15:14 ./
drwxr-xr-x 45 root root 4.0K Oct 13 20:29 ../
drwx------  4 root root 4.0K Oct 18 15:14 member/
[root@easzlab-k8s-etcd-03 ~]#
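The restore above was run without --name or --initial-cluster, which is why its log shows a single member at http://localhost:2380. The etcd documentation describes restoring a multi-member cluster by running snapshot restore once per member, each with its own name and peer URL. The sketch below only prints such commands. The member names (etcd-<ip>), the https peer URLs on port 2380, and the cluster token are all assumptions for illustration; the real values should be read from the --name, --initial-cluster, and --initial-cluster-token settings in each node's etcd unit file.

```shell
#!/usr/bin/env bash
# restore_plan SNAPSHOT DATA_DIR IP...
# Dry run: print one `etcdctl snapshot restore` command per etcd member.
# ASSUMPTIONS (not from the original article): member names "etcd-<ip>",
# peer URLs "https://<ip>:2380", token "etcd-cluster-0".
restore_plan() {
  local snapshot="$1" data_dir="$2"
  shift 2
  local ip cluster=""
  # Build the --initial-cluster value: name1=url1,name2=url2,...
  for ip in "$@"; do
    cluster="${cluster:+${cluster},}etcd-${ip}=https://${ip}:2380"
  done
  # Each member gets the same cluster definition but its own name and peer URL.
  for ip in "$@"; do
    echo "ETCDCTL_API=3 etcdctl snapshot restore ${snapshot}" \
         "--name etcd-${ip}" \
         "--initial-cluster ${cluster}" \
         "--initial-cluster-token etcd-cluster-0" \
         "--initial-advertise-peer-urls https://${ip}:2380" \
         "--data-dir ${data_dir}"
  done
}

restore_plan snapshot-2022-07-28-1659004944.db /test-etcd-restore \
  172.16.88.154 172.16.88.155 172.16.88.156
```

Each printed command is meant to be run on the member it names, after the snapshot file has been copied to that node. The restored member directory then replaces that node's own /var/lib/etcd/member.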

Start the etcd service

[root@easzlab-k8s-etcd-01 ~]# systemctl restart etcd
[root@easzlab-k8s-etcd-02 ~]# systemctl restart etcd
[root@easzlab-k8s-etcd-03 ~]# systemctl restart etcd

Start the k8s cluster node VMs

[root@cyh-dell-rocky-8-6 ~]# for i in `virsh list --all |grep easzlab-k8s |awk '{print $2}'`;do virsh start $i;done

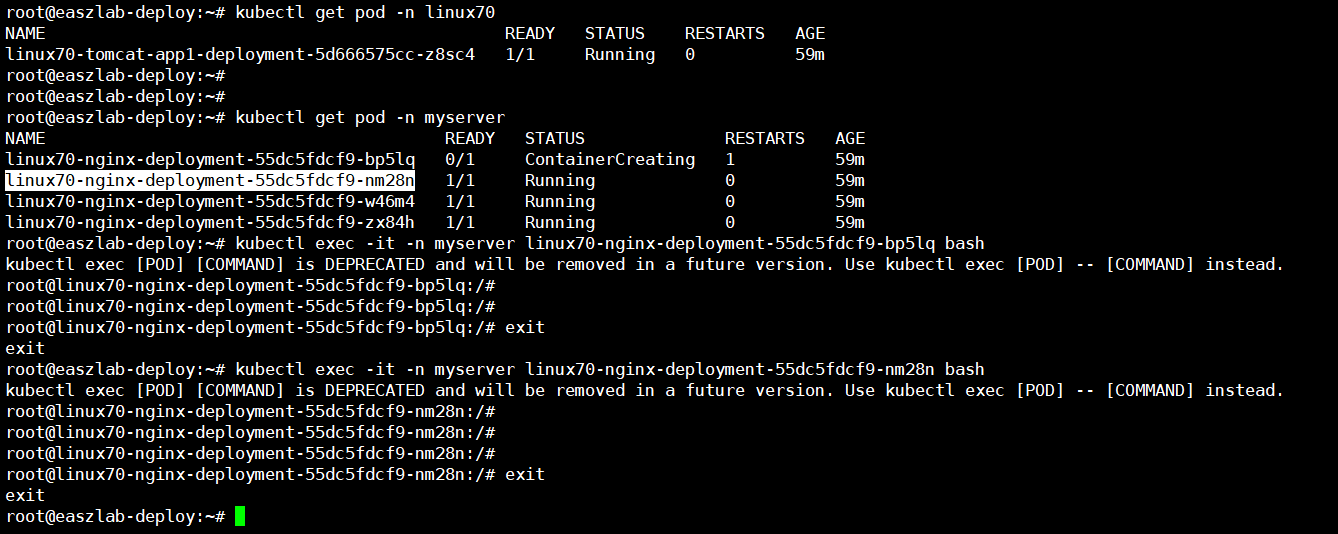

Verify the pods

4. Backup and restore with easzlab

Backup procedure
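One way to make this verification repeatable is to count the running pods of the deleted deployment in the kubectl get pod -A output. The helper below is a sketch with a hypothetical name; it assumes the default column order NAMESPACE, NAME, READY, STATUS.

```shell
#!/usr/bin/env bash
# running_pods NAMESPACE PREFIX
# Read `kubectl get pod -A` output on stdin and print how many pods in
# NAMESPACE whose name starts with PREFIX are in the Running state.
# (hypothetical helper)
running_pods() {
  awk -v ns="$1" -v prefix="$2" '
    NR > 1 && $1 == ns && index($2, prefix) == 1 && $4 == "Running" { n++ }
    END { print n + 0 }'
}

# Example, commented out because it needs a live cluster:
# kubectl get pod -A | running_pods myserver linux70-nginx-deployment
```

Once the restore has taken effect, the count for the nginx deployment is expected to match the replicas: 4 setting in nginx.yaml.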

root@easzlab-deploy:/etc/kubeasz# ./ezctl backup k8s-01

ansible-playbook -i clusters/k8s-01/hosts -e @clusters/k8s-01/config.yml playbooks/94.backup.yml
2022-10-18 19:52:05 INFO cluster:k8s-01 backup begins in 5s, press any key to abort:

PLAY [localhost] ****************************************

TASK [Gathering Facts] ****************************************
ok: [localhost]

TASK [set NODE_IPS of the etcd cluster] ****************************************
ok: [localhost]

TASK [get etcd cluster status] ****************************************
changed: [localhost]

TASK [debug] ****************************************
ok: [localhost] => {
    "ETCD_CLUSTER_STATUS": {
        "changed": true,
        "cmd": "for ip in 172.16.88.154 172.16.88.155 172.16.88.156 ;do ETCDCTL_API=3 /etc/kubeasz/bin/etcdctl --endpoints=https://\"$ip\":2379 --cacert=/etc/kubeasz/clusters/k8s-01/ssl/ca.pem --cert=/etc/kubeasz/clusters/k8s-01/ssl/etcd.pem --key=/etc/kubeasz/clusters/k8s-01/ssl/etcd-key.pem endpoint health; done",
        "delta": "0:00:00.343173",
        "end": "2022-10-18 19:52:15.523143",
        "failed": false,
        "rc": 0,
        "start": "2022-10-18 19:52:15.179970",
        "stderr": "",
        "stderr_lines": [],
        "stdout": "https://172.16.88.154:2379 is healthy: successfully committed proposal: took = 41.052703ms\nhttps://172.16.88.155:2379 is healthy: successfully committed proposal: took = 39.800171ms\nhttps://172.16.88.156:2379 is healthy: successfully committed proposal: took = 33.177414ms",
        "stdout_lines": [
            "https://172.16.88.154:2379 is healthy: successfully committed proposal: took = 41.052703ms",
            "https://172.16.88.155:2379 is healthy: successfully committed proposal: took = 39.800171ms",
            "https://172.16.88.156:2379 is healthy: successfully committed proposal: took = 33.177414ms"
        ]
    }
}

TASK [get a running ectd node] ****************************************
changed: [localhost]

TASK [debug] ****************************************
ok: [localhost] => {
    "RUNNING_NODE.stdout": "172.16.88.154"
}

TASK [get current time] ****************************************
changed: [localhost]

TASK [make a backup on the etcd node] ****************************************
changed: [localhost -> 172.16.88.154]

TASK [fetch the backup data] ****************************************
changed: [localhost -> 172.16.88.154]

TASK [update the latest backup] ****************************************
changed: [localhost]

PLAY RECAP ****************************************
localhost : ok=10 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

root@easzlab-deploy:/etc/kubeasz#

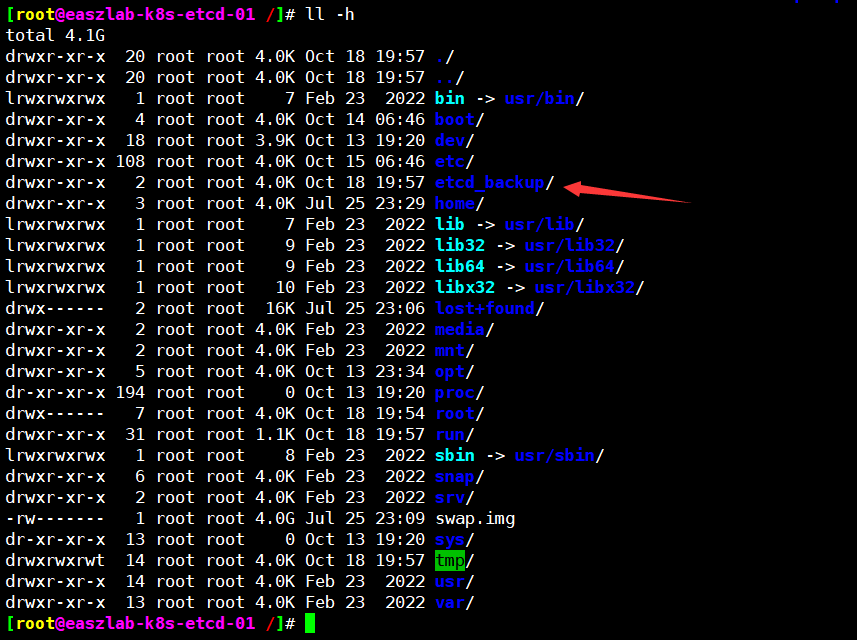

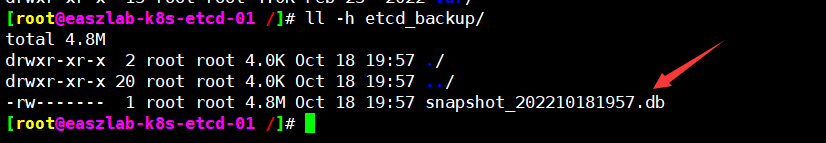

View the backup data on the etcd-01 node

Simulate deleting pods, then restore them from the etcd backup

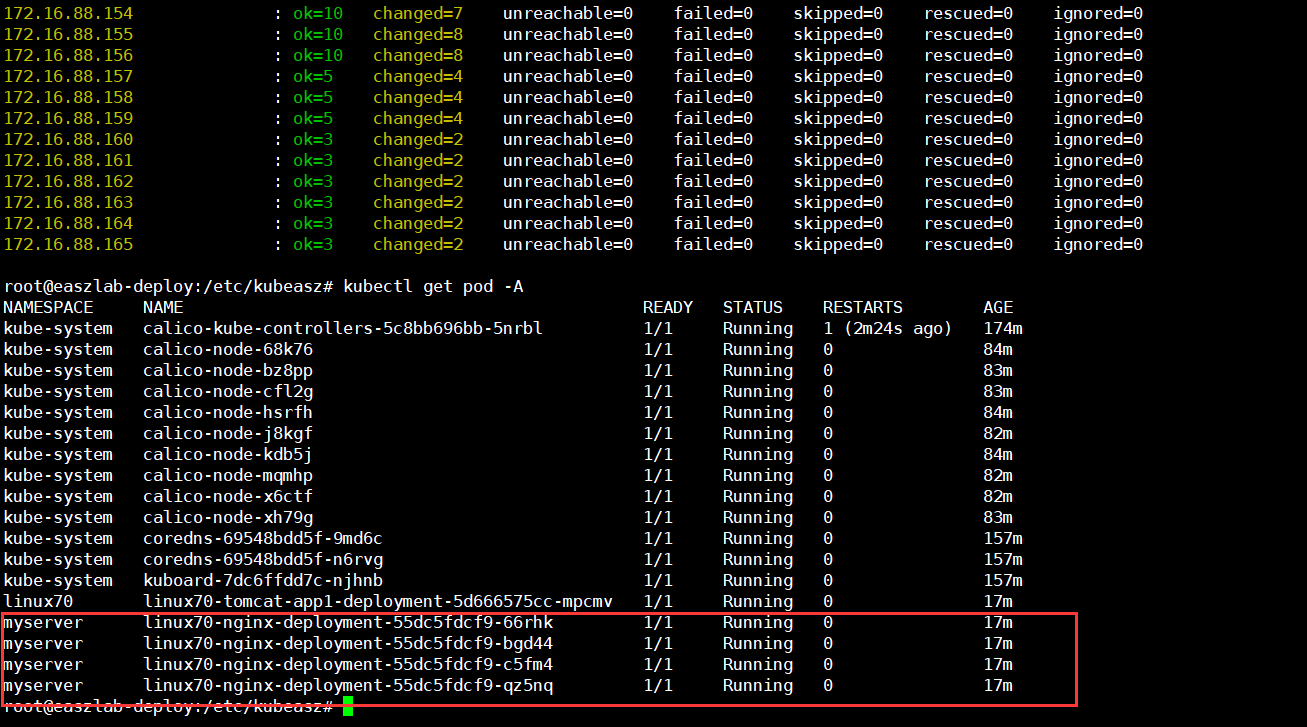

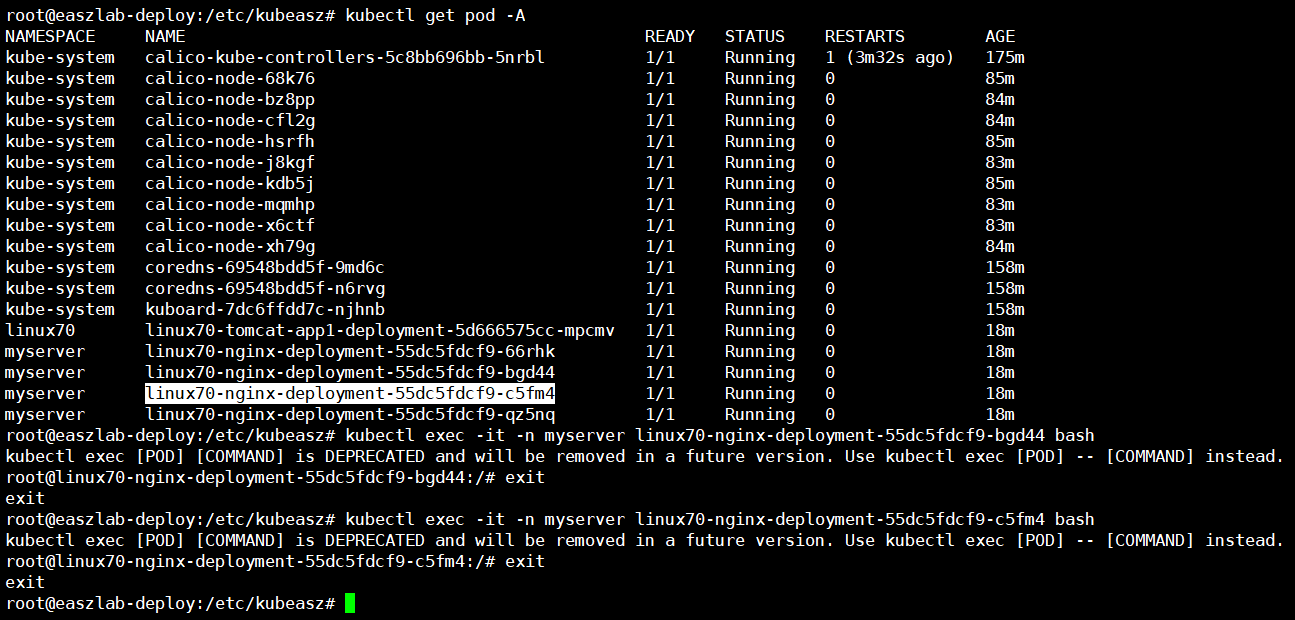

root@easzlab-deploy:/etc/kubeasz# kubectl get pod -A
NAMESPACE     NAME                                              READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-5c8bb696bb-5nrbl          1/1     Running   0          168m
kube-system   calico-node-68k76                                 1/1     Running   0          78m
kube-system   calico-node-bz8pp                                 1/1     Running   0          77m
kube-system   calico-node-cfl2g                                 1/1     Running   0          77m
kube-system   calico-node-hsrfh                                 1/1     Running   0          78m
kube-system   calico-node-j8kgf                                 1/1     Running   0          76m
kube-system   calico-node-kdb5j                                 1/1     Running   0          78m
kube-system   calico-node-mqmhp                                 1/1     Running   0          76m
kube-system   calico-node-x6ctf                                 1/1     Running   0          76m
kube-system   calico-node-xh79g                                 1/1     Running   0          77m
kube-system   coredns-69548bdd5f-9md6c                          1/1     Running   0          151m
kube-system   coredns-69548bdd5f-n6rvg                          1/1     Running   0          151m
kube-system   kuboard-7dc6ffdd7c-njhnb                          1/1     Running   0          151m
linux70       linux70-tomcat-app1-deployment-5d666575cc-mpcmv   1/1     Running   0          11m
myserver      linux70-nginx-deployment-55dc5fdcf9-66rhk         1/1     Running   0          11m
myserver      linux70-nginx-deployment-55dc5fdcf9-bgd44         1/1     Running   0          11m
myserver      linux70-nginx-deployment-55dc5fdcf9-c5fm4         1/1     Running   0          11m
myserver      linux70-nginx-deployment-55dc5fdcf9-qz5nq         1/1     Running   0          11m
root@easzlab-deploy:/etc/kubeasz# kubectl delete -f /root/jiege-k8s/pod-test/nginx.yaml #delete the nginx application
deployment.apps "linux70-nginx-deployment" deleted
service "linux70-nginx-service" deleted
root@easzlab-deploy:/etc/kubeasz# kubectl get pod -A
NAMESPACE     NAME                                              READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-5c8bb696bb-5nrbl          1/1     Running   0          169m
kube-system   calico-node-68k76                                 1/1     Running   0          79m
kube-system   calico-node-bz8pp                                 1/1     Running   0          78m
kube-system   calico-node-cfl2g                                 1/1     Running   0          77m
kube-system   calico-node-hsrfh                                 1/1     Running   0          78m
kube-system   calico-node-j8kgf                                 1/1     Running   0          76m
kube-system   calico-node-kdb5j                                 1/1     Running   0          78m
kube-system   calico-node-mqmhp                                 1/1     Running   0          76m
kube-system   calico-node-x6ctf                                 1/1     Running   0          77m
kube-system   calico-node-xh79g                                 1/1     Running   0          77m
kube-system   coredns-69548bdd5f-9md6c                          1/1     Running   0          151m
kube-system   coredns-69548bdd5f-n6rvg                          1/1     Running   0          151m
kube-system   kuboard-7dc6ffdd7c-njhnb                          1/1     Running   0          151m
linux70       linux70-tomcat-app1-deployment-5d666575cc-mpcmv   1/1     Running   0          11m
root@easzlab-deploy:/etc/kubeasz#

Restore the application with the built-in restore mechanism

root@easzlab-deploy:/etc/kubeasz# ./ezctl restore k8s-01

ansible-playbook -i clusters/k8s-01/hosts -e @clusters/k8s-01/config.yml playbooks/95.restore.yml
2022-10-18 20:01:13 INFO cluster:k8s-01 restore begins in 5s, press any key to abort:

PLAY [kube_master] ****************************************

TASK [Gathering Facts] ****************************************
ok: [172.16.88.157]
ok: [172.16.88.159]
ok: [172.16.88.158]

TASK [stopping kube_master services] ****************************************
changed: [172.16.88.158] => (item=kube-apiserver)
changed: [172.16.88.159] => (item=kube-apiserver)
changed: [172.16.88.157] => (item=kube-apiserver)
changed: [172.16.88.158] => (item=kube-controller-manager)
changed: [172.16.88.159] => (item=kube-controller-manager)
changed: [172.16.88.157] => (item=kube-controller-manager)
changed: [172.16.88.158] => (item=kube-scheduler)
changed: [172.16.88.159] => (item=kube-scheduler)
changed: [172.16.88.157] => (item=kube-scheduler)

PLAY [kube_master,kube_node] ****************************************

TASK [Gathering Facts] ****************************************
ok: [172.16.88.161]
ok: [172.16.88.160]
ok: [172.16.88.164]
ok: [172.16.88.163]
ok: [172.16.88.162]
ok: [172.16.88.165]

TASK [stopping kube_node services] ****************************************
changed: [172.16.88.157] => (item=kubelet)
changed: [172.16.88.161] => (item=kubelet)
changed: [172.16.88.160] => (item=kubelet)
changed: [172.16.88.158] => (item=kubelet)
changed: [172.16.88.157] => (item=kube-proxy)
changed: [172.16.88.159] => (item=kubelet)
changed: [172.16.88.161] => (item=kube-proxy)
changed: [172.16.88.160] => (item=kube-proxy)
changed: [172.16.88.158] => (item=kube-proxy)
changed: [172.16.88.159] => (item=kube-proxy)
changed: [172.16.88.162] => (item=kubelet)
changed: [172.16.88.163] => (item=kubelet)
changed: [172.16.88.164] => (item=kubelet)
changed: [172.16.88.165] => (item=kubelet)
changed: [172.16.88.162] => (item=kube-proxy)
changed: [172.16.88.163] => (item=kube-proxy)
changed: [172.16.88.164] => (item=kube-proxy)
changed: [172.16.88.165] => (item=kube-proxy)

PLAY [etcd] ****************************************

TASK [Gathering Facts] ****************************************
ok: [172.16.88.154]
ok: [172.16.88.155]
ok: [172.16.88.156]

TASK [cluster-restore : 停止ectd 服务] ****************************************
changed: [172.16.88.154]
changed: [172.16.88.156]
changed: [172.16.88.155]

TASK [cluster-restore : 清除etcd 数据目录] ****************************************
changed: [172.16.88.154]
changed: [172.16.88.155]
changed: [172.16.88.156]

TASK [cluster-restore : 生成备份目录] ****************************************
ok: [172.16.88.154]
changed: [172.16.88.155]
changed: [172.16.88.156]

TASK [cluster-restore : 准备指定的备份etcd 数据] ****************************************
changed: [172.16.88.154]
changed: [172.16.88.156]
changed: [172.16.88.155]

TASK [cluster-restore : 清理上次备份恢复数据] ****************************************
ok: [172.16.88.154]
ok: [172.16.88.155]
ok: [172.16.88.156]

TASK [cluster-restore : etcd 数据恢复] ****************************************
changed: [172.16.88.154]
changed: [172.16.88.155]
changed: [172.16.88.156]

TASK [cluster-restore : 恢复数据至etcd 数据目录] ****************************************
changed: [172.16.88.155]
changed: [172.16.88.154]
changed: [172.16.88.156]

TASK [cluster-restore : 重启etcd 服务] ****************************************
changed: [172.16.88.156]
changed: [172.16.88.155]
changed: [172.16.88.154]

TASK [cluster-restore : 以轮询的方式等待服务同步完成] ****************************************
changed: [172.16.88.154]
changed: [172.16.88.155]
changed: [172.16.88.156]

PLAY [kube_master] ****************************************

TASK [starting kube_master services] ****************************************
changed: [172.16.88.159] => (item=kube-apiserver)
changed: [172.16.88.158] => (item=kube-apiserver)
changed: [172.16.88.159] => (item=kube-controller-manager)
changed: [172.16.88.157] => (item=kube-apiserver)
changed: [172.16.88.158] => (item=kube-controller-manager)
changed: [172.16.88.159] => (item=kube-scheduler)
changed: [172.16.88.157] => (item=kube-controller-manager)
changed: [172.16.88.158] => (item=kube-scheduler)
changed: [172.16.88.157] => (item=kube-scheduler)

PLAY [kube_master,kube_node] ****************************************

TASK [starting kube_node services] ****************************************
changed: [172.16.88.157] => (item=kubelet)
changed: [172.16.88.159] => (item=kubelet)
changed: [172.16.88.158] => (item=kubelet)
changed: [172.16.88.161] => (item=kubelet)
changed: [172.16.88.160] => (item=kubelet)
changed: [172.16.88.157] => (item=kube-proxy)
changed: [172.16.88.161] => (item=kube-proxy)
changed: [172.16.88.159] => (item=kube-proxy)
changed: [172.16.88.158] => (item=kube-proxy)
changed: [172.16.88.160] => (item=kube-proxy)
changed: [172.16.88.162] => (item=kubelet)
changed: [172.16.88.163] => (item=kubelet)
changed: [172.16.88.164] => (item=kubelet)
changed: [172.16.88.165] => (item=kubelet)
changed: [172.16.88.162] => (item=kube-proxy)
changed: [172.16.88.163] => (item=kube-proxy)
changed: [172.16.88.165] => (item=kube-proxy)
changed: [172.16.88.164] => (item=kube-proxy)

PLAY RECAP ****************************************
172.16.88.154 : ok=10 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.155 : ok=10 changed=8 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.156 : ok=10 changed=8 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.157 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.158 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.159 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.160 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.161 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.162 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.163 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.164 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
172.16.88.165 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

root@easzlab-deploy:/etc/kubeasz#
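With this many hosts, the PLAY RECAP is easier to check mechanically than by eye. The sketch below scans saved playbook output for hosts with a non-zero failed or unreachable count. The function name recap_failures is hypothetical, and the log file in the example is an assumed path, not something ezctl writes by itself.

```shell
#!/usr/bin/env bash
# recap_failures
# Read ansible-playbook output on stdin, print every host whose PLAY RECAP
# line has failed>0 or unreachable>0, and return non-zero if any was found.
# (hypothetical helper)
recap_failures() {
  awk '
    /ok=[0-9]+/ && /failed=[0-9]+/ {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^(failed|unreachable)=[0-9]+$/) {
          split($i, kv, "=")
          if (kv[2] + 0 > 0) bad[$1] = 1
        }
      }
    }
    END {
      n = 0
      for (h in bad) { print h; n++ }
      exit (n > 0)
    }'
}

# Example, commented out; restore.log is an assumed file name:
# ./ezctl restore k8s-01 2>&1 | tee restore.log
# recap_failures < restore.log && echo "all hosts ok"
```

For the run shown above, every recap line reports failed=0 and unreachable=0, so the helper would print nothing and return success.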