Building a Proxmox 5.4 + OVS + Ceph (Luminous) Cluster

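1. Introduction to Proxmox

Proxmox overview:

Proxmox VE (Proxmox Virtual Environment) is a virtualization platform that can run both virtual machines and containers (KVM virtual machines and LXC containers). Proxmox VE is based on Debian Linux and is fully open source.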

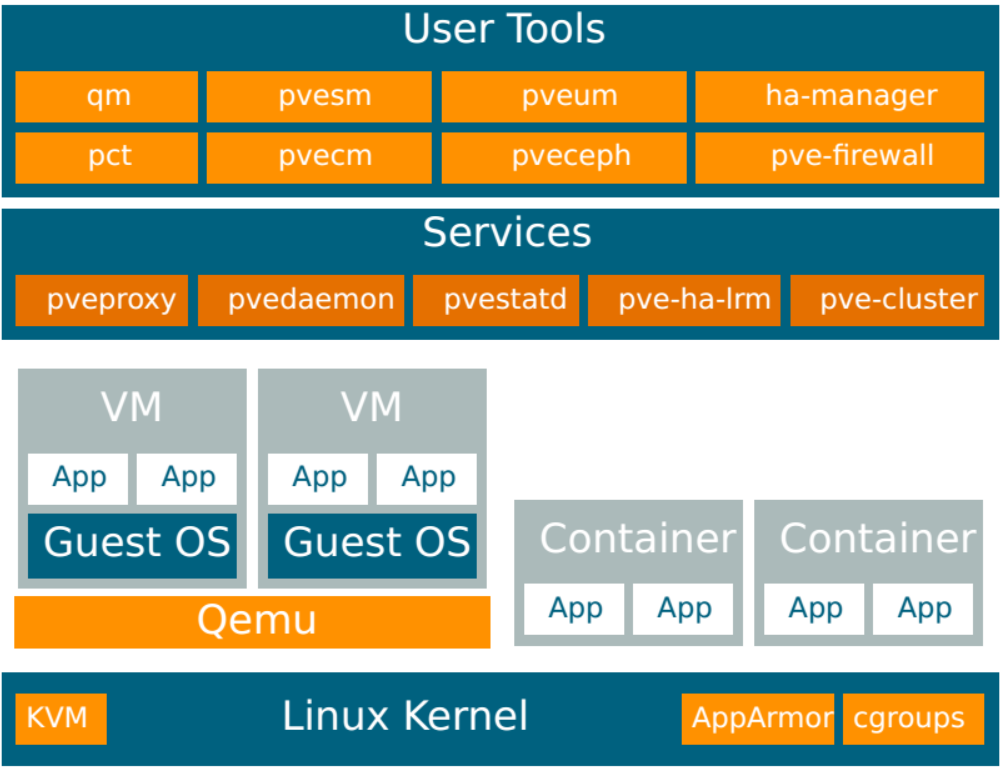

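Proxmox logical component architecture:

qm: QEMU virtual machine management tool
pct: LXC container management tool
pvesm: storage pool management tool
pvecm: cluster management tool
pveum: user management tool
pveceph: Ceph management tool
ha-manager: HA management tool
pve-firewall: firewall management tool
pveproxy: Proxmox VE API proxy daemon; serves the API to external clients over HTTPS on TCP port 8006
pvedaemon: Proxmox VE API daemon; listens only on a local address, so it cannot be reached from outside the host, and serves the API calls that reach it locally (for example through pveproxy)
pvestatd: Proxmox VE status daemon; periodically collects status data for virtual machines, storage, and containers and automatically sends the results to every node in the cluster
pve-ha-lrm: local resource manager; controls the running state of resources on the local node
pve-cluster: cluster management service; keeps the Proxmox cluster running, including adding and removing cluster members

Management methods:

1) Web-based management
Proxmox VE is installed and operated through its built-in web GUI, which is built on the ExtJS JavaScript framework. This centralized web management lets you control every function from the GUI and also browse each node's task history and syslog, for example VM backup and restore logs, live migration logs, and HA activity logs.

2) Command-line management
Proxmox VE provides a command-line interface that can manage every component of the virtualization environment. The command-line tools support Tab completion and come with complete Unix man-page documentation. Proxmox VE exposes a RESTful API; JSON is the primary data format, and all API definitions are written in JSON.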
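As a small illustration of the API entry point described above, the sketch below only builds the URL under which pveproxy serves the JSON API (port 8006 comes from the text; the `/api2/json` prefix is the standard Proxmox VE API root). The function name `pve_api_url` and the `$TICKET` variable in the comment are hypothetical, and the `curl` call is shown as a comment rather than executed.

```shell
#!/bin/sh
# pve_api_url <host> <path>: print the HTTPS URL of a Proxmox VE API path.
# pveproxy listens on TCP 8006; /api2/json selects JSON-formatted responses.
pve_api_url() {
    # "${2#/}" drops one leading slash so "/version" and "version" both work
    printf 'https://%s:8006/api2/json/%s\n' "$1" "${2#/}"
}

# Illustrative use (not executed here); $TICKET is a hypothetical variable
# holding an authentication ticket obtained beforehand:
#   curl -k -b "PVEAuthCookie=$TICKET" "$(pve_api_url 10.101.1.130 version)"
pve_api_url 10.101.1.130 /version
```

The host address is the first cluster node used later in this article; substitute any node, since every node serves the same API.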

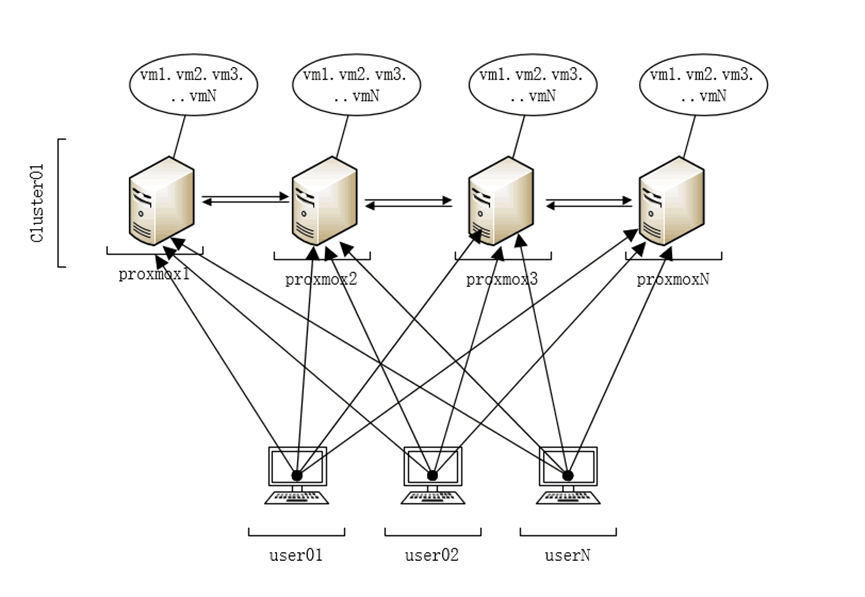

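Proxmox cluster model: decentralized. Every node in the cluster can manage the resources of all nodes in the cluster, and cluster node information is synchronized in real time.

Official site: https://proxmox.com/en/

Official forum: https://forum.proxmox.com/

Official documentation: https://pve.proxmox.com/wiki/Main_Page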

2. Installation and Deployment

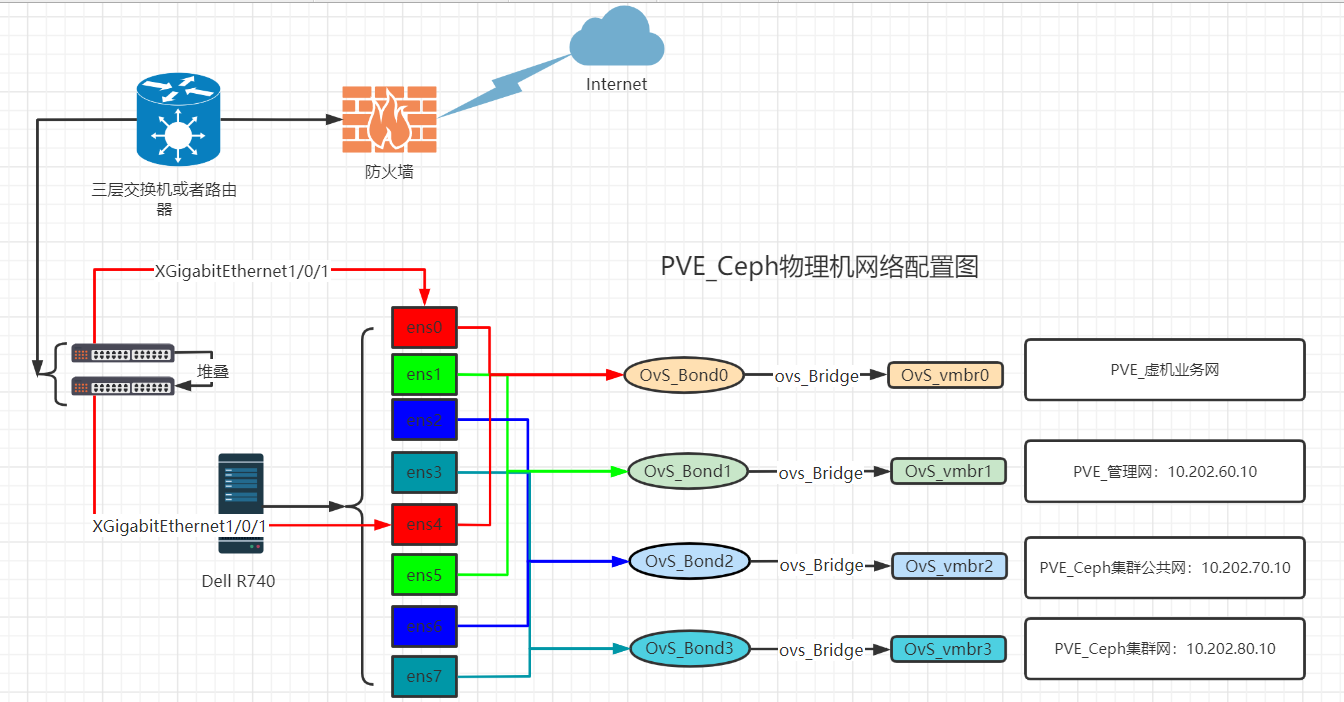

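2.1 Physical host configuration diagram (compute and storage co-located)

2.2 Hardware setup before installation

Server models used in this environment: Dell R710, R720xd, and R740xd.

1) Configure RAID 1

Use the two 300 GB 15K SAS 2.5-inch disks in the rear bays of the server as the system disks and set their RAID level to 1.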

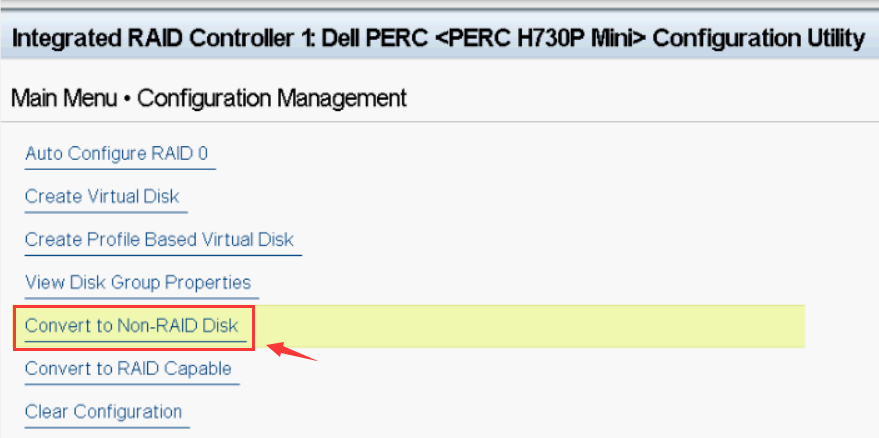

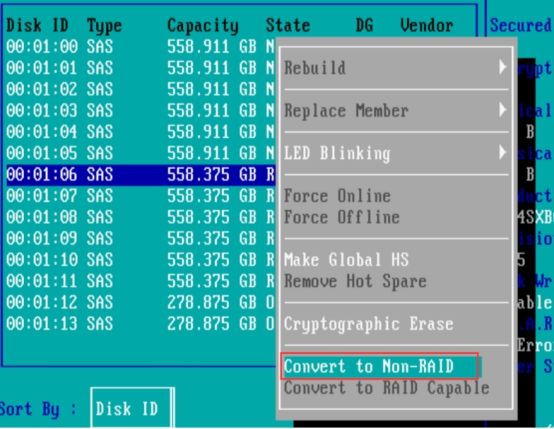

2) Set the additional (non-system) disks to Non-RAID mode

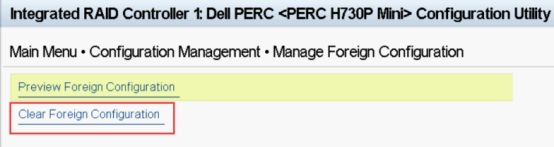

Clear the Foreign state on all disks.

Set the disks to Non-RAID mode.

This can be changed directly in the BIOS,

or as follows:

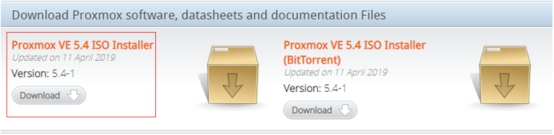

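2.3 Downloading the Proxmox ISO

Download the ISO image from the official site (the latest release at the time of writing was 7.2; using a newer release is recommended):

https://www.proxmox.com/en/downloads

Baidu Netdisk:

Link: https://pan.baidu.com/s/1AI0zIFtVoUt9Fd2Y5KdAwg

Extraction code: plqd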

2.4 Installing the Proxmox operating system

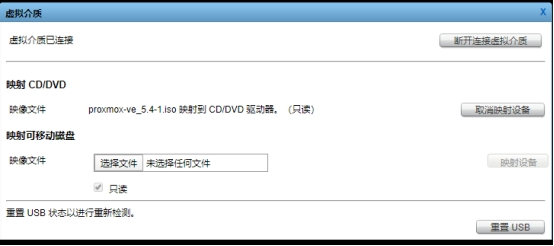

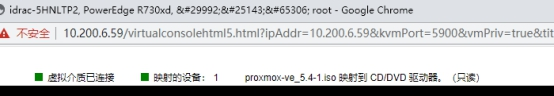

Installing through iDRAC:

Map the ISO as virtual media.

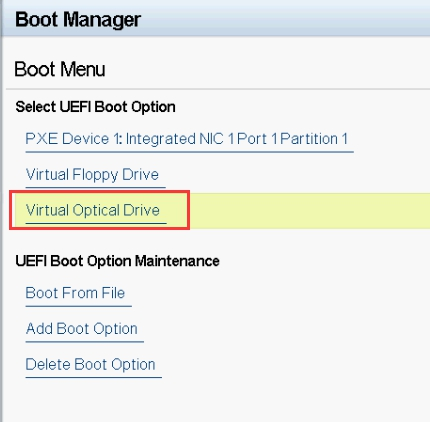

Reboot the physical host and hold F11 until this screen appears, then select "One-shot UEFI Boot Menu".

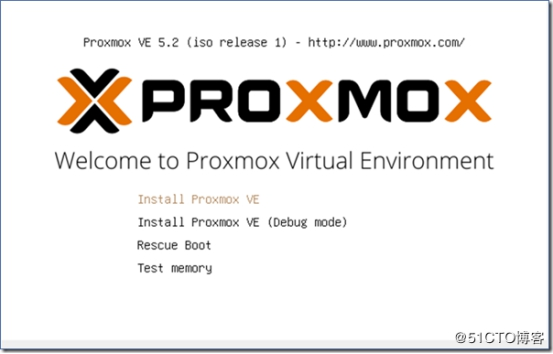

After about two minutes the installer screen appears.

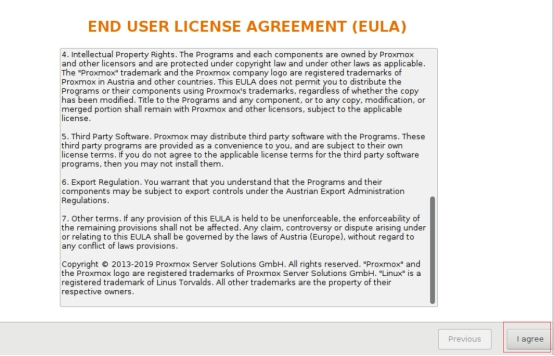

Select "I agree".

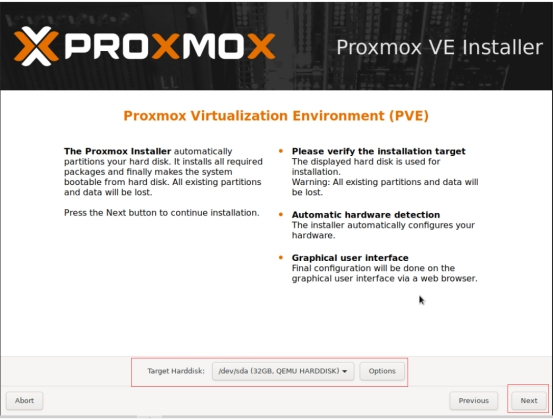

Select the system disk (the RAID 1 volume) here, then click "Next".

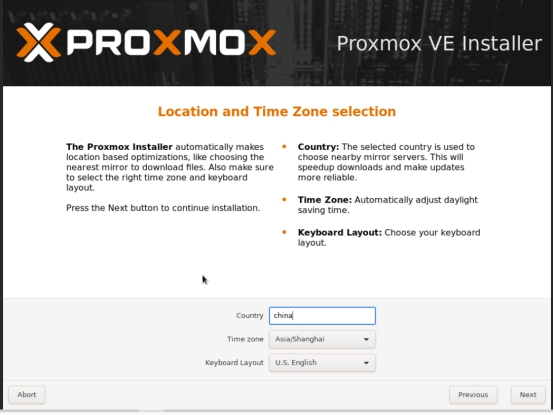

For country and time zone, choose "China" and "Asia/Shanghai", then click "Next".

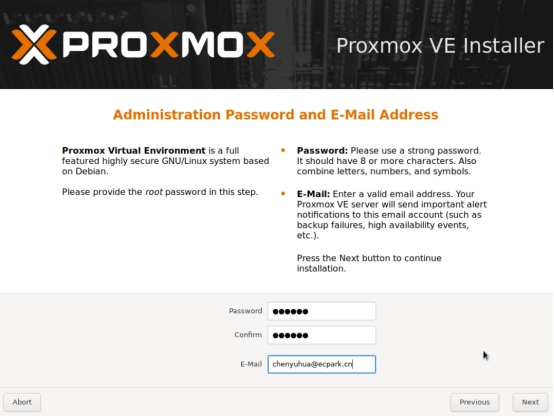

Set the initial system password to "redhat" to simplify installation and deployment; it can be changed in bulk later with Ansible. Click "Next".

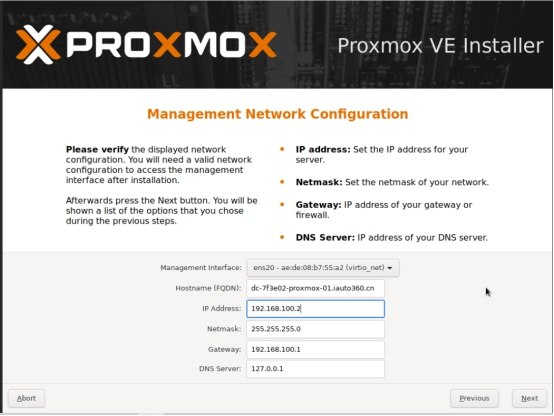

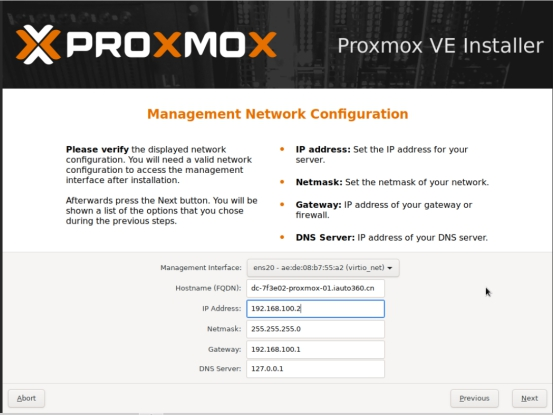

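Network settings (plan and configure these according to the real environment):

Management Network Configuration: the host's third NIC is selected by default.

The FQDN is the hostname of the physical node, in the format:

<datacenter>-<floor and rack area>-proxmox-<sequence number>.iauto360.cn

IP address: 10.101.1.0/24 (host addresses 10 to 250)

Gateway: 10.101.1.1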
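The naming and addressing plan above is easy to check mechanically before a batch install. The following is only a sketch: the function names `node_fqdn` and `valid_mgmt_ip` are made up for illustration, and the sample arguments (`dt`, `1ap213`, `01`) are inferred from hostnames that appear later in this article.

```shell
#!/bin/sh
# node_fqdn <datacenter> <floor-and-rack> <sequence>:
# assemble a node FQDN following the pattern described above.
node_fqdn() {
    printf '%s-%s-proxmox-%s.iauto360.cn\n' "$1" "$2" "$3"
}

# valid_mgmt_ip <ip>: succeed only for 10.101.1.x with x between 10 and 250.
valid_mgmt_ip() {
    case "$1" in
        10.101.1.*) last=${1#10.101.1.} ;;
        *) return 1 ;;
    esac
    # the remainder must be purely numeric
    case "$last" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$last" -ge 10 ] && [ "$last" -le 250 ]
}

node_fqdn dt 1ap213 01
if valid_mgmt_ip 10.101.1.130; then echo "10.101.1.130 is inside the planned range"; fi
```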

Click "Next" to start the installation.

When the installation finishes, click "Reboot" to restart the server.

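3. Proxmox Server Cluster Configuration

Setting up the private network package repository (Debian, Proxmox, and Ceph) that Proxmox needs

References: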

https://www.cnblogs.com/surplus/p/11441206.html

https://linux.cn/article-3384-1.0.html

https://lanseyujie.com/post/build-ppa-with-reprepro.html

# Pulling the mirror

root@dc-ubuntu16-netmirrors-source:/data2# cat /etc/apt/mirror.list

############# config ##################

#

# set base_path /data/spool/apt-mirror/Ubuntu16-04

set base_path /data2/spool/apt-mirror/proxmox5u4

#

# set mirror_path $base_path/mirror

# set skel_path $base_path/skel

# set var_path $base_path/var

# set cleanscript $var_path/clean.sh

# set defaultarch <running host architecture>

# set postmirror_script $var_path/postmirror.sh

# set run_postmirror 0

set nthreads 3

set _tilde 0

#

############# end config ##############

deb https://mirrors.tuna.tsinghua.edu.cn/debian/ stretch main contrib non-free

deb https://mirrors.tuna.tsinghua.edu.cn/debian/ stretch-updates main contrib non-free

deb https://mirrors.tuna.tsinghua.edu.cn/debian/ stretch-backports main contrib non-free

deb https://mirrors.tuna.tsinghua.edu.cn/debian-security/ stretch/updates main contrib non-free

deb http://download.proxmox.com/debian/ceph-luminous stretch main

deb http://download.proxmox.com/debian/pve stretch pve-no-subscription

clean http://archive.ubuntu.com/ubuntu

root@dc-ubuntu16-netmirrors-source:/data2#

root@dc-ubuntu16-netmirrors-source:/data2# crontab -l

0 1 * * 5 /usr/bin/apt-mirror >> /var/spool/apt-mirror/var/cron.log

# Nginx configuration that makes the mirror reachable by clients

server {

listen 80;

listen 443 ssl http2;

server_name ppa.ccccxxx.com;

ssl_certificate /etc/nginx/ssl/ccccxxx.com.cer;

ssl_certificate_key /etc/nginx/ssl/ccccxxx.com.key;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers TLS13-AES-256-GCM-SHA384:TLS13-CHACHA20-POLY1305-SHA256:TLS13-AES-128-GCM-SHA256:TLS13-AES-128-CCM-8-SHA256:TLS13-AES-128-CCM-SHA256:EECDH+CHACHA20:EECDH+AES128:RSA+AES128:EECDH+AES256:RSA+AES256:EECDH+3DES:RSA+3DES:!MD5;

ssl_prefer_server_ciphers on;

ssl_session_timeout 10m;

ssl_session_cache builtin:1000 shared:SSL:10m;

ssl_buffer_size 1400;

ssl_stapling on;

ssl_stapling_verify on;

charset utf-8;

access_log /var/log/nginx/access.log combined;

root /data/wwwroot/ppa/public;

index index.html index.htm;

if ($ssl_protocol = "") {

return 301 https://$host$request_uri;

}

# error_page 404 /404.html;

location / {

autoindex on;

autoindex_exact_size on;

autoindex_localtime on;

}

location ~ /\. {

deny all;

}

}

Basic server environment configuration

root@netmis03:/data/dc_init# cat pve_item/files/pve5.4_sysinit.sh

#!/bin/bash

# Configure the package repositories
echo "#deb https://enterprise.proxmox.com/debian/pve stretch pve-enterprise" >/etc/apt/sources.list.d/pve-enterprise.list
wget -q -O- 'https://mirrors.ustc.edu.cn/proxmox/debian/pve/dists/stretch/proxmox-ve-release-5.x.gpg' | apt-key add -
echo "deb https://mirrors.ustc.edu.cn/proxmox/debian/pve/ stretch pve-no-subscription" > /etc/apt/sources.list.d/pve-no-subscription.list

# Remove the subscription notice shown at login
sed -i.bak "s/data.status !== 'Active'/false/g" /usr/share/javascript/proxmox-widget-toolkit/proxmoxlib.js && systemctl restart pveproxy.service

# Disable the pve-daily-update timer
systemctl stop pve-daily-update.timer && systemctl disable pve-daily-update.timer

# Disable the mail service
systemctl stop postfix && systemctl disable postfix

# Add the private Ceph repository; it is configured in advance to save bandwidth and speed up installation
cat <<EOT > /etc/apt/sources.list
deb http://mirrors.ustc.edu.cn/debian/ stretch main contrib non-free
#deb http://mirrors.ustc.edu.cn/debian/ stretch-backports main contrib non-free
deb http://mirrors.ustc.edu.cn/debian/ stretch-proposed-updates main contrib non-free
deb http://mirrors.ustc.edu.cn/debian/ stretch-updates main contrib non-free
deb http://mirrors.ustc.edu.cn/debian-security stretch/updates main contrib non-free
deb http://10.202.15.252/pve/ceph-luminous stretch main
deb http://10.202.15.252/pve/pve stretch pve-no-subscription
EOT

# Install the required OVS and Ceph components
apt update && apt-get install openvswitch-switch ceph -y

root@netmis03:/data/dc_init/pve_item/files# cat rc.local

#!/bin/sh -e
#
# rc.local
#
# This script is executed at the end of each multiuser runlevel.
# Make sure that the script will "exit 0" on success or any other
# value on error.
#
# In order to enable or disable this script just change the execution
# bits.
#
# By default this script does nothing.

/bin/bash /data/disk_change.sh
echo 4194304 > /proc/sys/kernel/pid_max
exit 0

Kernel tuning

root@netmis03:/data/dc_init/pve_item/files# cat sysctl.conf

fs.file-max = 20000000
fs.aio-max-nr = 262144
kernel.pid_max = 4194303
kernel.threads-max = 6558899
vm.zone_reclaim_mode = 0
vm.dirty_ratio = 15
vm.dirty_background_ratio = 5
vm.swappiness = 0
vm.vfs_cache_pressure = 300
net.core.somaxconn = 262144
net.core.netdev_max_backlog = 50000
net.ipv4.ip_forward = 0
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.default.accept_source_route = 0
net.ipv4.icmp_echo_ignore_broadcasts = 1
net.ipv4.icmp_ignore_bogus_error_responses = 1
net.ipv4.tcp_fin_timeout = 30
net.ipv4.tcp_keepalive_intvl = 30
net.ipv4.tcp_keepalive_time = 120
net.ipv4.ip_local_port_range = 2000 65000
net.ipv4.tcp_max_syn_backlog = 65535
net.ipv4.tcp_tw_reuse = 1
net.core.wmem_default = 87380
net.core.wmem_max = 16777216
net.core.rmem_default = 87380
net.core.rmem_max = 16777216
kernel.sysrq = 0
kernel.core_uses_pid = 1
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_tw_buckets = 55000
net.ipv4.tcp_sack = 1
net.ipv4.tcp_window_scaling = 1
net.ipv4.tcp_rmem = 4096 87380 134217728
net.ipv4.tcp_wmem = 4096 65536 134217728
net.ipv4.tcp_max_orphans = 3276800
net.ipv4.tcp_timestamps = 1
net.ipv4.tcp_synack_retries = 1
net.ipv4.tcp_syn_retries = 1
net.ipv4.tcp_mem = 94500000 915000000 927000000
net.ipv4.icmp_echo_ignore_broadcasts=1
net.core.default_qdisc=fq
net.ipv4.tcp_congestion_control=bbr
kernel.sched_min_granularity_ns = 10000000
kernel.sched_wakeup_granularity_ns = 15000000
net.ipv4.tcp_slow_start_after_idle = 0
net.ipv4.tcp_mtu_probing = 1

root@netmis03:/data/dc_init/pve_item/files# cat limits.conf

* soft nproc 655350
* hard nproc 655350
* soft nofile 655350
* hard nofile 655350
root soft nproc 655350
root hard nproc 655350
root soft nofile 655350
root hard nofile 655350
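After distributing sysctl.conf it can be useful to confirm that the running kernel actually holds the intended values. The sketch below is one possible way to do that, not part of the original tooling: `sysctl_path` and `check_sysctl` are hypothetical helper names, and the optional second argument (an alternative root directory instead of /proc/sys) exists only so the check can be tried against test files.

```shell
#!/bin/sh
# sysctl_path <key> [root]: map a sysctl key such as net.ipv4.tcp_sack
# to its file below /proc/sys (or below an alternative root).
sysctl_path() {
    printf '%s/%s\n' "${2:-/proc/sys}" "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl <conf-file> [root]: print every key whose live value is
# missing or differs from the value requested in the file.
check_sysctl() {
    while IFS='=' read -r key want; do
        key=$(printf '%s' "$key" | tr -d ' \t')
        case "$key" in ''|'#'*) continue ;; esac
        # normalise whitespace so "4096<TAB>87380" equals "4096 87380"
        want=$(printf '%s' "$want" | tr '\t' ' ' | tr -s ' ' | sed 's/^ //; s/ $//')
        path=$(sysctl_path "$key" "$2")
        if [ ! -r "$path" ]; then
            echo "MISSING $key"
            continue
        fi
        have=$(tr '\t' ' ' < "$path" | tr -s ' ' | sed 's/^ //; s/ $//')
        [ "$have" = "$want" ] || echo "DIFF $key: want '$want', have '$have'"
    done < "$1"
}

# Typical call on a node (prints nothing when everything matches):
#   check_sysctl /etc/sysctl.conf
```

A key reported as MISSING usually means the corresponding kernel module or feature is not loaded on that node.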

Disk tuning parameters

root@netmis03:/data/dc_init/pve_item/files# cat disk_change.sh

#!/bin/bash
# Collect the suffixes of all sdX disks: ROTA=0 is an SSD, ROTA=1 is a rotational HDD.
ssd=($(lsblk -d -n -o NAME,ROTA | awk '$1 ~ /^sd/ && $2 == 0 { print substr($1, 3) }'))
hdd=($(lsblk -d -n -o NAME,ROTA | awk '$1 ~ /^sd/ && $2 == 1 { print substr($1, 3) }'))

# SSDs: large read-ahead and the noop scheduler.
for i in "${ssd[@]}"; do
    echo "8192" > /sys/block/sd$i/queue/read_ahead_kb
    echo "noop" > /sys/block/sd$i/queue/scheduler
done

# HDDs: large read-ahead and a tuned deadline scheduler.
for i in "${hdd[@]}"; do
    echo "8192" > /sys/block/sd$i/queue/read_ahead_kb
    echo "deadline" > /sys/block/sd$i/queue/scheduler
    echo "8" > /sys/block/sd$i/queue/iosched/fifo_batch
    echo "100" > /sys/block/sd$i/queue/iosched/read_expire
    echo "4" > /sys/block/sd$i/queue/iosched/writes_starved
done
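Because the script above writes straight into /sys, it can help to preview which scheduler each disk would get before running it. The following dry-run sketch only reads `NAME ROTA` pairs from standard input and prints a plan; the function name `plan_disk_tuning` is hypothetical.

```shell
#!/bin/sh
# plan_disk_tuning: read "NAME ROTA" lines (the format printed by
# `lsblk -d -n -o NAME,ROTA`) and print "<device> <type> <scheduler>"
# for every sdX disk. Nothing is written to /sys.
plan_disk_tuning() {
    while read -r name rota; do
        case "$name" in
            sd*) ;;            # only SCSI/SATA/SAS disks, as in the script above
            *) continue ;;
        esac
        case "$rota" in
            0) echo "$name ssd noop" ;;
            1) echo "$name hdd deadline" ;;
        esac
    done
}

# On a node:  lsblk -d -n -o NAME,ROTA | plan_disk_tuning
printf 'sda 0\nsde 1\nsr0 1\n' | plan_disk_tuning
```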

4. Network Configuration

root@dt-1ap213-proxmox-01:~# cat /etc/network/interfaces

allow-vmbr0 bond0
iface bond0 inet manual
    ovs_bonds eno1np0 enp95s0f0np0
    ovs_type OVSBond
    ovs_bridge vmbr0
    ovs_options bond_mode=balance-tcp vlan_mode=trunk other_config:lacp-time=fast lacp=active
    pre-up (ifconfig eno1np0 mtu 9000 && ifconfig enp95s0f0np0 mtu 9000)
    mtu 9000
#PVE business network

allow-vmbr1 bond1
iface bond1 inet manual
    ovs_bonds eno2np1 enp95s0f1np1
    ovs_type OVSBond
    ovs_bridge vmbr1
    ovs_options bond_mode=balance-tcp other_config:lacp-time=fast lacp=active
#PVE management network

allow-vmbr2 bond2
iface bond2 inet manual
    ovs_bonds enp59s0f0np0 enp94s0f0np0
    ovs_type OVSBond
    ovs_bridge vmbr2
    ovs_options lacp=active bond_mode=balance-tcp other_config:lacp-time=fast
    pre-up (ifconfig enp59s0f0np0 mtu 9000 && ifconfig enp94s0f0np0 mtu 9000)
    mtu 9000
#PVE Ceph public network

allow-vmbr3 bond3
iface bond3 inet manual
    ovs_bonds enp59s0f1np1 enp94s0f1np1
    ovs_type OVSBond
    ovs_bridge vmbr3
    ovs_options other_config:lacp-time=fast bond_mode=balance-tcp lacp=active
    pre-up (ifconfig enp59s0f1np1 mtu 9000 && ifconfig enp94s0f1np1 mtu 9000)
    mtu 9000
#PVE Ceph cluster network

auto lo
iface lo inet loopback

iface eno3 inet manual
iface eno4 inet manual
iface eno1np0 inet manual
iface eno2np1 inet manual
iface enp59s0f0np0 inet manual
iface enp59s0f1np1 inet manual
iface enp94s0f0np0 inet manual
iface enp94s0f1np1 inet manual
iface enp95s0f0np0 inet manual
iface enp95s0f1np1 inet manual

auto vmbr0
iface vmbr0 inet manual
    ovs_type OVSBridge
    ovs_ports bond0
    mtu 9000
#PVE business network

auto vmbr1
iface vmbr1 inet static
    address 10.101.1.130
    netmask 255.255.255.0
    gateway 10.101.1.1
    ovs_type OVSBridge
    ovs_ports bond1
#PVE management network

auto vmbr2
iface vmbr2 inet static
    address 10.101.30.130
    netmask 255.255.255.0
    ovs_type OVSBridge
    ovs_ports bond2
    mtu 9000
#PVE Ceph public network

auto vmbr3
iface vmbr3 inet static
    address 10.101.50.130
    netmask 255.255.255.0
    ovs_type OVSBridge
    ovs_ports bond3
    mtu 9000
#PVE Ceph cluster network

This can be turned into a script and applied to all nodes in bulk with Ansible.

cat interfaces-config.sh

#!/bin/bash
# Take the last octet of the address currently on vmbr0 and reuse it on every network.
IP=$(ifconfig vmbr0 | grep -Eo 'inet (addr:)?([0-9]*\.){3}[0-9]*' | grep -Eo '([0-9]*\.){3}[0-9]*' | awk -F '.' '{print $4}')
# Back up the current file before overwriting it.
cp /etc/network/interfaces /etc/network/$(date +%F)_interfaces.bak
cat <<EOF > /etc/network/interfaces
allow-vmbr0 bond0
iface bond0 inet manual
    ovs_bonds eno1np0 enp95s0f0np0
    ovs_type OVSBond
    ovs_bridge vmbr0
    ovs_options bond_mode=balance-tcp vlan_mode=trunk other_config:lacp-time=fast lacp=active
    pre-up (ifconfig eno1np0 mtu 9000 && ifconfig enp95s0f0np0 mtu 9000)
    mtu 9000
#PVE business network

allow-vmbr1 bond1
iface bond1 inet manual
    ovs_bonds eno2np1 enp95s0f1np1
    ovs_type OVSBond
    ovs_bridge vmbr1
    ovs_options bond_mode=balance-tcp other_config:lacp-time=fast lacp=active
#PVE management network

allow-vmbr2 bond2
iface bond2 inet manual
    ovs_bonds enp59s0f0np0 enp94s0f0np0
    ovs_type OVSBond
    ovs_bridge vmbr2
    ovs_options lacp=active bond_mode=balance-tcp other_config:lacp-time=fast
    pre-up (ifconfig enp59s0f0np0 mtu 9000 && ifconfig enp94s0f0np0 mtu 9000)
    mtu 9000
#PVE Ceph public network

allow-vmbr3 bond3
iface bond3 inet manual
    ovs_bonds enp59s0f1np1 enp94s0f1np1
    ovs_type OVSBond
    ovs_bridge vmbr3
    ovs_options other_config:lacp-time=fast bond_mode=balance-tcp lacp=active
    pre-up (ifconfig enp59s0f1np1 mtu 9000 && ifconfig enp94s0f1np1 mtu 9000)
    mtu 9000
#PVE Ceph cluster network

auto lo
iface lo inet loopback

iface eno3 inet manual
iface eno4 inet manual
iface eno1np0 inet manual
iface eno2np1 inet manual
iface enp59s0f0np0 inet manual
iface enp59s0f1np1 inet manual
iface enp94s0f0np0 inet manual
iface enp94s0f1np1 inet manual
iface enp95s0f0np0 inet manual
iface enp95s0f1np1 inet manual

auto vmbr0
iface vmbr0 inet manual
    ovs_type OVSBridge
    ovs_ports bond0
    mtu 9000
#PVE business network

auto vmbr1
iface vmbr1 inet static
    address 10.101.1.$IP
    netmask 255.255.255.0
    gateway 10.101.1.1
    ovs_type OVSBridge
    ovs_ports bond1
#PVE management network

auto vmbr2
iface vmbr2 inet static
    address 10.101.30.$IP
    netmask 255.255.255.0
    ovs_type OVSBridge
    ovs_ports bond2
    mtu 9000
#PVE Ceph public network

auto vmbr3
iface vmbr3 inet static
    address 10.101.50.$IP
    netmask 255.255.255.0
    ovs_type OVSBridge
    ovs_ports bond3
    mtu 9000
#PVE Ceph cluster network
EOF

ansible -i ./hosts 'vm' -m script -a "./interfaces-config.sh" -u root -k
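The script relies on one convention: a node keeps the same last octet on the management, Ceph public, and Ceph cluster networks. The sketch below isolates that derivation so it can be checked without touching /etc/network/interfaces; `last_octet` and `node_addresses` are hypothetical helper names.

```shell
#!/bin/sh
# last_octet <ipv4>: print the part after the final dot.
last_octet() {
    printf '%s\n' "${1##*.}"
}

# node_addresses <mgmt-ip>: print the address each static bridge receives
# when the last octet is reused on all three networks.
node_addresses() {
    n=$(last_octet "$1")
    printf 'vmbr1 10.101.1.%s\n'  "$n"   # management network
    printf 'vmbr2 10.101.30.%s\n' "$n"   # Ceph public network
    printf 'vmbr3 10.101.50.%s\n' "$n"   # Ceph cluster network
}

node_addresses 10.101.1.130
```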

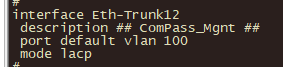

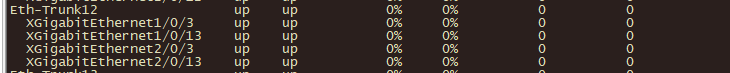

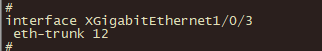

The switches in this environment are Huawei S5720.

Once the machines are initialized and the system is configured as planned, reboot the physical hosts to bring up OVS. The LACP bonds also have to be configured on the switch side.

Cisco switch configuration (from the Proxmox Support Forum thread "Creating LACP bundle with VLAN's between Proxmox and Cisco switch"):

interface GigabitEthernet1/0/x
 switchport trunk allowed vlan 1000,1001
 switchport mode trunk
 channel-group 1 mode active
interface GigabitEthernet1/0/y
 switchport trunk allowed vlan 1000,1001
 switchport mode trunk
 channel-group 1 mode active
interface Port-channel1
 switchport trunk allowed vlan 1000,1001
 switchport mode trunk

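Creating the cluster

Create the cluster (run once on the first node; the cluster name is DT-Ceph-01): pvecm create DT-Ceph-01

Add a cluster member (run on each node that joins, pointing at an existing member): pvecm add 10.101.1.130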

root@dt-1ap213-proxmox-01:~# pvecm status

Quorum information
------------------
Date:             Thu Apr 23 21:28:04 2020
Quorum provider:  corosync_votequorum
Nodes:            26
Node ID:          0x00000001
Ring ID:          1/1188
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   27
Highest expected: 27
Total votes:      26
Quorum:           14
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000001          1 10.101.1.130 (local)
0x00000002          1 10.101.1.131
0x0000001e          1 10.101.1.132
0x00000003          1 10.101.1.133
0x00000004          1 10.101.1.134
0x00000005          1 10.101.1.135
0x00000006          1 10.101.1.136
0x00000007          1 10.101.1.137
0x00000008          1 10.101.1.140
0x00000009          1 10.101.1.141
0x0000000a          1 10.101.1.142
0x0000000b          1 10.101.1.143
0x0000000c          1 10.101.1.144
0x0000000d          1 10.101.1.145
0x0000000e          1 10.101.1.146
0x0000000f          1 10.101.1.147
0x00000010          1 10.101.1.150
0x00000011          1 10.101.1.151
0x00000012          1 10.101.1.152
0x00000013          1 10.101.1.153
0x00000014          1 10.101.1.154
0x00000015          1 10.101.1.155
0x0000001d          1 10.101.1.156
0x00000016          1 10.101.1.157
0x0000001b          1 10.101.1.164
0x0000001c          1 10.101.1.165

root@dt-1ap213-proxmox-01:~#
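The "Quorum: 14" figure in the output follows from the expected vote count: a majority is half of the expected votes, rounded down, plus one. A minimal sketch of that arithmetic, with hypothetical function names, reproduces the value shown for 27 expected votes.

```shell
#!/bin/sh
# quorum_for <expected_votes>: votes needed for a majority.
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}

# tolerable_failures <expected_votes>: how many single-vote nodes can be
# lost before the remaining votes drop below the majority.
tolerable_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

echo "expected=27 quorum=$(quorum_for 27) can_lose=$(tolerable_failures 27)"
```

With 26 of the 27 expected votes present, as in the output above, the cluster is comfortably above the threshold of 14.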

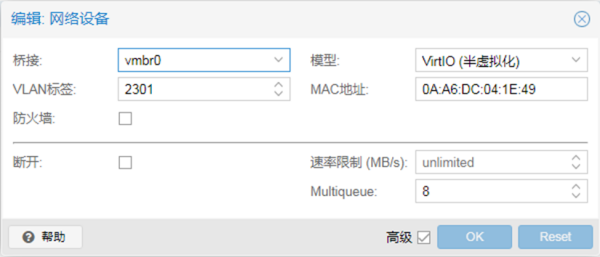

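The network segments for business VMs must be configured in advance on the switches or routers by the network operations team.

When creating a VM, specify the VLAN tag and configure the corresponding IP address.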

5. Configuring the Ceph Cluster

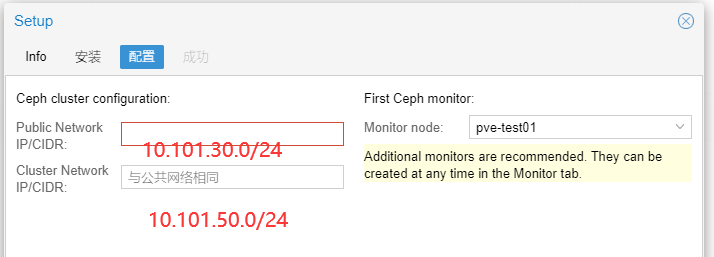

Configure the Ceph cluster network and public network.

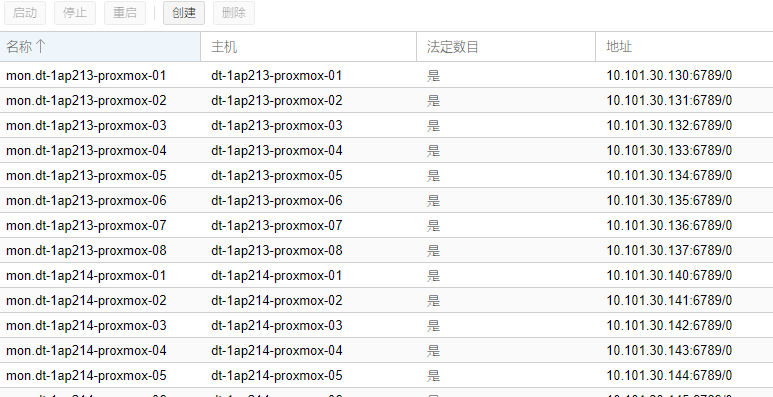

Add the Ceph monitors.

Tune the ceph.conf file.

root@dt-1ap213-proxmox-01:~# cat /etc/ceph/ceph.conf

[global]
auth client required = cephx
auth cluster required = cephx
auth service required = cephx
bluestore cache autotune = false
bluestore cache kv ratio = 0.2
bluestore cache meta ratio = 0.8
bluestore csum type = none
bluestore extent map shard target size = 100
bluestore rocksdb options = compression=kNoCompression,max_write_buffer_number=32,min_write_buffer_number_to_merge=2,recycle_log_file_num=32,compaction_style=kCompactionStyleLevel,write_buffer_size=67108864,target_file_size_base=67108864,max_background_compactions=31,level0_file_num_compaction_trigger=8,level0_slowdown_writes_trigger=32,level0_stop_writes_trigger=64,max_bytes_for_level_base=536870912,compaction_threads=32,max_bytes_for_level_multiplier=8,flusher_threads=8,compaction_readahead_size=2097152
bluestore shard finishers = true
bluestore_block_db_size = 107374182400
bluestore_block_wal_size = 42949672960
bluestore_cache_size_hdd = 2147483648
bluestore_cache_size_ssd = 6442450944
cluster network = 10.101.50.0/24
debug asok = 0/0
debug auth = 0/0
debug buffer = 0/0
debug client = 0/0
debug context = 0/0
debug crush = 0/0
debug filer = 0/0
debug filestore = 0/0
debug finisher = 0/0
debug heartbeatmap = 0/0
debug journal = 0/0
debug journaler = 0/0
debug lockdep = 0/0
debug mon = 0/0
debug monc = 0/0
debug ms = 0/0
debug objclass = 0/0
debug objectcacher = 0/0
debug objecter = 0/0
debug optracker = 0/0
debug osd = 0/0
debug paxos = 0/0
debug perfcounter = 0/0
debug rados = 0/0
debug rbd = 0/0
debug rgw = 0/0
debug throttle = 0/0
debug timer = 0/0
debug tp = 0/0
debug_bdev = 0/0
debug_bluefs = 0/0
debug_bluestore = 0/0
debug_civetweb = 0/0
debug_compressor = 0/0
debug_crypto = 0/0
debug_dpdk = 0/0
debug_eventtrace = 0/0
debug_fuse = 0/0
debug_javaclient = 0/0
debug_kinetic = 0/0
debug_kstore = 0/0
debug_leveldb = 0/0
debug_mds = 0/0
debug_mds_balancer = 0/0
debug_mds_locker = 0/0
debug_mds_log = 0/0
debug_mds_log_expire = 0/0
debug_mds_migrator = 0/0
debug_memdb = 0/0
debug_mgr = 0/0
debug_mgrc = 0/0
debug_none = 0/0
debug_rbd_mirror = 0/0
debug_rbd_replay = 0/0
debug_refs = 0/0
debug_reserver = 0/0
debug_rocksdb = 0/0
debug_striper = 0/0
debug_xio = 0/0
err_to_stderr = true
fsid = c1ce328c-892e-4ef9-a262-58cae79dfc25
keyring = /etc/pve/priv/$cluster.$name.keyring
log_max_recent = 10000
log_to_stderr = false
max open files = 131071
mon allow pool delete = true
mon_clock_drift_allowed = 2.000000
mon_clock_drift_warn_backoff = 30.000000
mon_osd_min_down_reporters = 13
ms_bind_before_connect = true
ms_dispatch_throttle_bytes = 2097152000
objecter_inflight_op_bytes = 3048576000
objecter_inflight_ops = 819200
osd pool default min size = 2
osd pool default size = 3
osd_client_message_cap = 5000
osd_client_message_size_cap = 2147483648
osd_client_op_priority = 63
osd_deep_scrub_stride = 131072
osd_map_cache_size = 1024
osd_max_backfills = 1
osd_max_write_size = 512
osd_objectstore = bluestore
osd_pg_object_context_cache_count = 2048
osd_recovery_max_active = 1
osd_recovery_max_single_start = 1
osd_recovery_op_priority = 3
osd_recovery_sleep = 0.5
public network = 10.101.30.0/24
rbd readahead disable after bytes = 0
rbd readahead max bytes = 8194304
rbd_cache_max_dirty = 251658240
rbd_cache_max_dirty_age = 5.000000
rbd_cache_size = 335544320
rbd_cache_target_dirty = 167772160
rbd_cache_writethrough_until_flush = false
rbd_op_threads = 6
rocksdb_separate_wal_dir = true

[client]
rbd cache = true

[osd]
bluestore extent map shard max size = 200
bluestore extent map shard min size = 50
keyring = /var/lib/ceph/osd/ceph-$id/keyring
ms crc data = false
osd deep scrub interval = 2419200
osd map share max epochs = 100
osd max pg log entries = 10
osd memory target = 4294967296
osd min pg log entries = 10
osd op num shards = 8
osd op num threads per shard = 2
osd op threads = 4
osd pg log dups tracked = 10
osd pg log trim min = 10
osd scrub begin hour = 0
osd scrub chunk max = 1
osd scrub chunk min = 1
osd scrub end hour = 6
osd scrub sleep = 3
osd_mon_heartbeat_interval = 40
throttler_perf_counter = false

[mon.dt-1ap214-proxmox-07]
host = dt-1ap214-proxmox-07
mon addr = 10.101.30.146:6789
................
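Several of the sizes in this file are raw byte counts, which are hard to sanity-check by eye. The sketch below converts the ones quoted above into GiB and, as a rough planning aid, multiplies the DB and WAL sizes by the number of OSDs sharing one SSD. The helper name `to_gib` is hypothetical, and the figure of five OSDs per SSD is an assumption taken from the OSD layout used later in this article; whether the full configured size is actually allocated depends on the tool that creates the OSD.

```shell
#!/bin/sh
# to_gib <bytes>: convert a byte count to whole GiB (1 GiB = 1073741824 bytes).
to_gib() {
    echo $(( $1 / 1073741824 ))
}

echo "bluestore_block_db_size:   $(to_gib 107374182400) GiB"
echo "bluestore_block_wal_size:  $(to_gib 42949672960) GiB"
echo "bluestore_cache_size_hdd:  $(to_gib 2147483648) GiB"
echo "bluestore_cache_size_ssd:  $(to_gib 6442450944) GiB"
echo "osd memory target:         $(to_gib 4294967296) GiB"

# Assumption: each SSD carries the DB and WAL partitions of five OSDs.
osds_per_ssd=5
per_osd=$(( $(to_gib 107374182400) + $(to_gib 42949672960) ))
echo "SSD space for DB+WAL:      $(( osds_per_ssd * per_osd )) GiB"
```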

Adding Ceph OSDs

Before adding OSDs, make sure every OSD disk is clean; wiping each disk once is recommended.

root@netmis03:/home/chenyuhua/ansible/pve-ceph# cat disk-gpt-hdd.sh

#!/bin/bash
# Wipe the partition tables on /dev/sda through /dev/sdx and create a fresh GPT on each.
for dev in /dev/sd{a..x}; do
    sgdisk --zap-all --clear --mbrtogpt -g -- "$dev"
done

Add the OSDs (this environment uses SSDs to accelerate the OSD WAL and DB).

root@netmis03:/home/chenyuhua/ansible/pve-ceph# cat osd-hdd-add.sh

#!/bin/bash
ceph-disk prepare --bluestore /dev/sde --block.db /dev/sda --block.wal /dev/sda
ceph-disk prepare --bluestore /dev/sdf --block.db /dev/sda --block.wal /dev/sda
ceph-disk prepare --bluestore /dev/sdg --block.db /dev/sda --block.wal /dev/sda
ceph-disk prepare --bluestore /dev/sdh --block.db /dev/sda --block.wal /dev/sda
ceph-disk prepare --bluestore /dev/sdi --block.db /dev/sda --block.wal /dev/sda
ceph-disk prepare --bluestore /dev/sdj --block.db /dev/sdb --block.wal /dev/sdb
ceph-disk prepare --bluestore /dev/sdk --block.db /dev/sdb --block.wal /dev/sdb
ceph-disk prepare --bluestore /dev/sdl --block.db /dev/sdb --block.wal /dev/sdb
ceph-disk prepare --bluestore /dev/sdm --block.db /dev/sdb --block.wal /dev/sdb
ceph-disk prepare --bluestore /dev/sdn --block.db /dev/sdb --block.wal /dev/sdb
ceph-disk prepare --bluestore /dev/sdo --block.db /dev/sdc --block.wal /dev/sdc
ceph-disk prepare --bluestore /dev/sdp --block.db /dev/sdc --block.wal /dev/sdc
ceph-disk prepare --bluestore /dev/sdq --block.db /dev/sdc --block.wal /dev/sdc
ceph-disk prepare --bluestore /dev/sdr --block.db /dev/sdc --block.wal /dev/sdc
ceph-disk prepare --bluestore /dev/sds --block.db /dev/sdc --block.wal /dev/sdc
ceph-disk prepare --bluestore /dev/sdt --block.db /dev/sdd --block.wal /dev/sdd
ceph-disk prepare --bluestore /dev/sdu --block.db /dev/sdd --block.wal /dev/sdd
ceph-disk prepare --bluestore /dev/sdv --block.db /dev/sdd --block.wal /dev/sdd
ceph-disk prepare --bluestore /dev/sdw --block.db /dev/sdd --block.wal /dev/sdd
ceph-disk prepare --bluestore /dev/sdx --block.db /dev/sdd --block.wal /dev/sdd
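The layout in the script above follows a simple rule: the twenty data disks sde through sdx are taken in groups of five, and each group shares one SSD (sda through sdd) for DB and WAL. The sketch below regenerates the same command list from that rule as a dry run, which makes it easier to adapt to a different disk count; `osd_plan` is a hypothetical name and the function only prints commands, it does not run `ceph-disk`.

```shell
#!/bin/sh
# osd_plan: print one "ceph-disk prepare" command per data disk.
# Data disks sde..sdx are assigned to SSDs sda..sdd, five disks per SSD.
osd_plan() {
    i=0
    for d in e f g h i j k l m n o p q r s t u v w x; do
        case $(( i / 5 )) in
            0) ssd=a ;;
            1) ssd=b ;;
            2) ssd=c ;;
            3) ssd=d ;;
        esac
        echo "ceph-disk prepare --bluestore /dev/sd$d --block.db /dev/sd$ssd --block.wal /dev/sd$ssd"
        i=$(( i + 1 ))
    done
}

# Review the plan first; pipe it to a shell only once it looks right.
osd_plan
```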

Configure the Ceph CRUSH bucket rules so that SSDs and HDDs are managed separately.

root@zhc-a01-proxmox-03:~# ceph osd getcrushmap -o crushmap # export the Ceph CRUSH map to a file

55

root@zhc-a01-proxmox-03:~#

root@zhc-a01-proxmox-03:~# crushtool -d crushmap -o cc.txt # decompile it into a text file

root@zhc-a01-proxmox-03:~# vim.tiny cc.txt

# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54

# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd

...............................

device 438 osd.438 class ssd
device 439 osd.439 class ssd

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 region
type 10 root

# buckets
host dt-1ap213-proxmox-01 {
    id -3    # do not change unnecessarily
    id -4 class hdd    # do not change unnecessarily
    id -35 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.0 weight 2.183
    item osd.1 weight 2.183
    item osd.2 weight 2.183
    item osd.3 weight 2.183
    item osd.4 weight 2.183
    item osd.5 weight 2.183
    item osd.6 weight 2.183
    item osd.7 weight 2.183
    item osd.8 weight 2.183
    item osd.9 weight 2.183
    item osd.10 weight 2.183
    item osd.11 weight 2.183
    item osd.12 weight 2.183
    item osd.13 weight 2.183
    item osd.14 weight 2.183
}
host dt-1ap213-proxmox-02 {
    id -5    # do not change unnecessarily
    id -6 class hdd    # do not change unnecessarily
    id -36 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.20 weight 2.183
    item osd.21 weight 2.183
    item osd.22 weight 2.183
    item osd.23 weight 2.183
    item osd.24 weight 2.183
    item osd.25 weight 2.183
    item osd.26 weight 2.183
    item osd.27 weight 2.183
    item osd.28 weight 2.183
    item osd.29 weight 2.183
    item osd.30 weight 2.183
    item osd.31 weight 2.183
    item osd.32 weight 2.183
    item osd.33 weight 2.183
    item osd.34 weight 2.183
}
host dt-1ap213-proxmox-03 {
    id -7    # do not change unnecessarily
    id -8 class hdd    # do not change unnecessarily
    id -37 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.40 weight 2.183
    item osd.41 weight 2.183
    item osd.42 weight 2.183
    item osd.43 weight 2.183
    item osd.44 weight 2.183
    item osd.45 weight 2.183
    item osd.46 weight 2.183
    item osd.47 weight 2.183
    item osd.48 weight 2.183
    item osd.49 weight 2.183
    item osd.50 weight 2.183
    item osd.51 weight 2.183
    item osd.52 weight 2.183
    item osd.53 weight 2.183
    item osd.54 weight 2.183
}

...............................

host dt-1ap214-proxmox-08 {
    id -33    # do not change unnecessarily
    id -34 class hdd    # do not change unnecessarily
    id -50 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.300 weight 2.183
    item osd.301 weight 2.183
    item osd.302 weight 2.183
    item osd.303 weight 2.183
    item osd.304 weight 2.183
    item osd.305 weight 2.183
    item osd.306 weight 2.183
    item osd.307 weight 2.183
    item osd.308 weight 2.183
    item osd.309 weight 2.183
    item osd.310 weight 2.183
    item osd.311 weight 2.183
    item osd.312 weight 2.183
    item osd.313 weight 2.183
    item osd.314 weight 2.183
}
root default {
    id -1    # do not change unnecessarily
    id -2 class hdd    # do not change unnecessarily
    id -51 class ssd    # do not change unnecessarily
    # weight 523.920
    alg straw2
    hash 0    # rjenkins1
    item dt-1ap213-proxmox-01 weight 32.745
    item dt-1ap213-proxmox-02 weight 32.745
    item dt-1ap213-proxmox-03 weight 32.745
    item dt-1ap213-proxmox-04 weight 32.745
    item dt-1ap213-proxmox-05 weight 32.745
    item dt-1ap213-proxmox-06 weight 32.745
    item dt-1ap213-proxmox-07 weight 32.745
    item dt-1ap213-proxmox-08 weight 32.745
    item dt-1ap214-proxmox-01 weight 32.745
    item dt-1ap214-proxmox-02 weight 32.745
    item dt-1ap214-proxmox-03 weight 32.745
    item dt-1ap214-proxmox-04 weight 32.745
    item dt-1ap214-proxmox-05 weight 32.745
    item dt-1ap214-proxmox-06 weight 32.745
    item dt-1ap214-proxmox-07 weight 32.745
    item dt-1ap214-proxmox-08 weight 32.745
}
host dt-1ap215-proxmox-01 {
    id -52    # do not change unnecessarily
    id -53 class hdd    # do not change unnecessarily
    id -54 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.320 weight 0.873
    item osd.321 weight 0.873
    item osd.322 weight 0.873
    item osd.323 weight 0.873
    item osd.324 weight 0.873
    item osd.325 weight 0.873
    item osd.326 weight 0.873
    item osd.327 weight 0.873
    item osd.328 weight 0.873
    item osd.329 weight 0.873
    item osd.330 weight 0.873
    item osd.331 weight 0.873
}

.................................

host dt-1ap216-proxmox-06 {
    id -79    # do not change unnecessarily
    id -80 class hdd    # do not change unnecessarily
    id -81 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.428 weight 0.873
    item osd.429 weight 0.873
    item osd.430 weight 0.873
    item osd.431 weight 0.873
    item osd.432 weight 0.873
    item osd.433 weight 0.873
    item osd.434 weight 0.873
    item osd.435 weight 0.873
    item osd.436 weight 0.873
    item osd.437 weight 0.873
    item osd.438 weight 0.873
    item osd.439 weight 0.873
}
root ssd {
    id -82    # do not change unnecessarily
    id -83 class hdd    # do not change unnecessarily
    id -84 class ssd    # do not change unnecessarily
    # weight 104.780
    alg straw2
    hash 0    # rjenkins1
    item dt-1ap215-proxmox-01 weight 10.478
    item dt-1ap215-proxmox-02 weight 10.478
    item dt-1ap215-proxmox-03 weight 10.478
    item dt-1ap215-proxmox-04 weight 10.478
    item dt-1ap215-proxmox-05 weight 10.478
    item dt-1ap215-proxmox-06 weight 10.478
    item dt-1ap215-proxmox-07 weight 10.478
    item dt-1ap215-proxmox-08 weight 10.478
    item dt-1ap216-proxmox-05 weight 10.478
    item dt-1ap216-proxmox-06 weight 10.478
}

# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule ssd_replicated_rule {
    id 1
    type replicated
    min_size 1
    max_size 10
    step take ssd
    step chooseleaf firstn 0 type host
    step emit
}
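When editing a decompiled CRUSH map by hand, the bucket weights are worth re-checking, because each bucket's weight should equal the sum of its items. The sketch below redoes that arithmetic for the HDD hosts, the default root, and an SSD host, using the item counts and per-item weights listed in the map; `sum_weight` is a hypothetical helper and assumes all items in a bucket share one weight.

```shell
#!/bin/sh
# sum_weight <item_count> <item_weight>: total bucket weight, three decimals.
sum_weight() {
    awk -v n="$1" -v w="$2" 'BEGIN { printf "%.3f\n", n * w }'
}

echo "HDD host (15 OSDs x 2.183):    $(sum_weight 15 2.183)"
echo "root default (16 hosts):       $(sum_weight 16 32.745)"
echo "SSD host (12 OSDs x 0.873):    $(sum_weight 12 0.873)"
```

The per-OSD values themselves are normally derived from the device capacity, so disks of the same model end up with the same weight.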

Compile the edited text file into a new CRUSH map file:

root@zhc-a01-proxmox-03:~# crushtool -c cc.txt -o crushmap2

root@zhc-a01-proxmox-03:~#

root@zhc-a01-proxmox-03:~# ceph osd setcrushmap -i crushmap2 # apply the new CRUSH map

57

root@zhc-a01-proxmox-03:~#

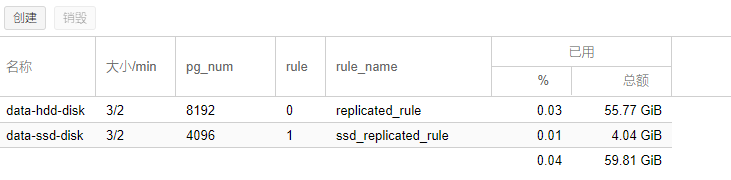

Create a new pool.

6. Changing the root Password on All Servers in Bulk

cat change_passwd.yml

---
- hosts: vm
  gather_facts: false
  tasks:
    - name: change user passwd
      user: name={{ item.name }} password={{ item.chpass | password_hash('sha512') }} update_password=always
      with_items:
        - { name: 'root', chpass: 'zrwRaoYUfYKSh5g3' }

cat /etc/ansible/hosts

[vm]
10.101.1.[130:150]

[vm:vars]
ansible_become=yes
ansible_become_method=sudo
ansible_become_user=root

Run the playbook: ansible-playbook -i ./hosts ./change_passwd.yml -u root -k
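The inventory pattern `10.101.1.[130:150]` is an inclusive range, so the playbook targets 21 addresses, some of which are not cluster members according to the earlier `pvecm status` output. A quick way to see exactly which hosts a range covers is to expand it by hand; the sketch below does this with a hypothetical helper `expand_range` and does not call Ansible.

```shell
#!/bin/sh
# expand_range <prefix> <first> <last>: print prefix+N for every N in the
# inclusive range, mirroring how an inventory range like [130:150] expands.
expand_range() {
    i=$2
    while [ "$i" -le "$3" ]; do
        echo "$1$i"
        i=$(( i + 1 ))
    done
}

expand_range 10.101.1. 130 150
```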
