Using Ceph: Integrating with a K8s Cluster (Examples)

I. Environment Preparation

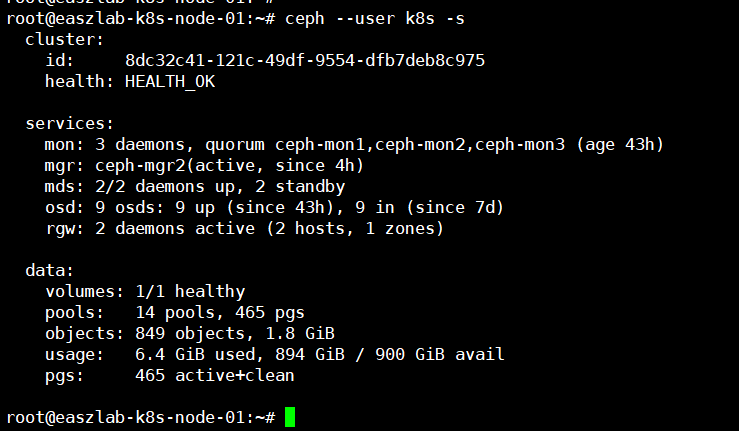

For pods in k8s to use images provided by Ceph RBD as storage devices, two things are needed: an RBD pool and image in Ceph, and k8s nodes that can authenticate with Ceph.

When k8s uses Ceph for dynamically provisioned volumes, the kube-controller-manager component must be able to access Ceph. The authentication files must therefore be synced to every node, masters included.

Create and initialize the RBD pool:

    [root@ceph-deploy ~]# ceph osd pool create k8s-rbd-pool 32 32
    pool 'k8s-rbd-pool' created
    [root@ceph-deploy ~]# ceph osd pool ls
    device_health_metrics
    myrbd1
    .rgw.root
    default.rgw.log
    default.rgw.control
    default.rgw.meta
    cephfs-metadata
    cephfs-data
    mypool
    rbd-data1
    default.rgw.buckets.index
    default.rgw.buckets.data
    ssd-pool
    k8s-rbd-pool
    [root@ceph-deploy ~]# ceph osd pool application enable k8s-rbd-pool rbd
    enabled application 'rbd' on pool 'k8s-rbd-pool'
    [root@ceph-deploy ~]# rbd pool init -p k8s-rbd-pool

Create an image:

    [root@ceph-deploy ~]# rbd create k8s-img-img1 --size 3G --pool k8s-rbd-pool --image-format 2 --image-feature layering
    [root@ceph-deploy ~]# rbd ls --pool k8s-rbd-pool
    k8s-img-img1
    [root@ceph-deploy ~]# rbd --image k8s-img-img1 --pool k8s-rbd-pool info   # show image details
    rbd image 'k8s-img-img1':
        size 3 GiB in 768 objects
        order 22 (4 MiB objects)
        snapshot_count: 0
        id: 288243b6f7a1b
        block_name_prefix: rbd_data.288243b6f7a1b
        format: 2
        features: layering
        op_features:
        flags:
        create_timestamp: Wed Oct 12 19:44:41 2022
        access_timestamp: Wed Oct 12 19:44:41 2022
        modify_timestamp: Wed Oct 12 19:44:41 2022
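The `info` output can be cross-checked with simple arithmetic. With `order 22`, each RADOS object is 2^22 bytes (4 MiB), so a 3 GiB image spans 3 GiB / 4 MiB = 768 objects. The bash sketch below performs that calculation. The function name `rbd_object_count` is hypothetical and not part of the rbd CLI.

```bash
#!/usr/bin/env bash
# Hypothetical helper: number of RADOS objects backing an RBD image.
# $1 = image size in GiB, $2 = object order (object size = 2^order bytes)
rbd_object_count() {
  local size_gib=$1 order=$2
  local size_bytes=$(( size_gib * 1024 * 1024 * 1024 ))
  local object_bytes=$(( 1 << order ))
  # Round up in case the size is not an exact multiple of the object size
  echo $(( (size_bytes + object_bytes - 1) / object_bytes ))
}

rbd_object_count 3 22   # → 768, matching "size 3 GiB in 768 objects"
```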

Install ceph-common on the clients

Install the ceph-common package on the k8s masters and on every node.

Example:

    root@easzlab-deploy:~# cat /etc/ansible/hosts
    [vm]
    172.16.88.[154:165]
    root@easzlab-deploy:~# ansible 'vm' -m shell -a "wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -"
    root@easzlab-deploy:~# ansible 'vm' -m shell -a "echo 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main' >> /etc/apt/sources.list"
    root@easzlab-deploy:~# ansible 'vm' -m shell -a "apt update"
    root@easzlab-deploy:~# ansible 'vm' -m shell -a "apt-cache madison ceph-common"
    root@easzlab-deploy:~# ansible 'vm' -m shell -a "apt install ceph-common=16.2.10-1focal -y"

Create a Ceph user and grant it permissions:

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth get-or-create client.k8s mon 'allow r' osd 'allow * pool=k8s-rbd-pool'
    [client.k8s]
        key = AQDjq0ZjlVD5LxAAM+b/GZv+RBdmxNO7Zf77/g==
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth get client.k8s
    [client.k8s]
        key = AQDjq0ZjlVD5LxAAM+b/GZv+RBdmxNO7Zf77/g==
        caps mon = "allow r"
        caps osd = "allow * pool=k8s-rbd-pool"
    exported keyring for client.k8s
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth get client.k8s -o ceph.client.k8s.keyring
    exported keyring for client.k8s
    cephadmin@ceph-deploy:~/ceph-cluster$ for i in {154..165};do scp ceph.conf ceph.client.k8s.keyring root@172.16.88.$i:/etc/ceph/;done   # sync the user keyring and the Ceph cluster config
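The exported keyring is a small INI-style file. Its `key = ...` line holds the value that later sections turn into a Kubernetes secret. The bash sketch below extracts that key from such a file. It assumes the layout shown above: a `[client.NAME]` section with a `key =` line. The function name `keyring_key` is hypothetical. On the admin node, `ceph auth print-key client.k8s` returns the same value directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the key stored in a Ceph keyring file.
# Assumes the layout produced by `ceph auth get client.NAME -o FILE`:
#   [client.k8s]
#       key = AQD...==
keyring_key() {
  local file=$1
  # Strip "key =" (plus surrounding whitespace) from the key line, print the rest
  sed -n 's/^[[:space:]]*key[[:space:]]*=[[:space:]]*//p' "$file" | head -n 1
}
```

Usage: `keyring_key /etc/ceph/ceph.client.k8s.keyring`.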

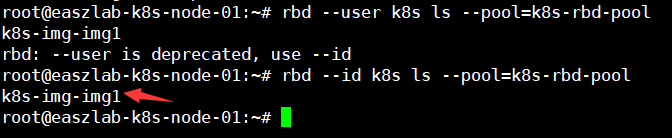

Verify the user's permissions on a k8s node

Verify access to the image:

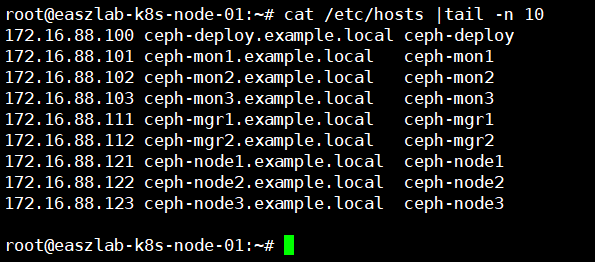

Configure hostname resolution on the k8s nodes:

The ceph.conf file refers to the Ceph hosts by hostname, so hostname resolution must be configured on every k8s master and node:

    root@easzlab-deploy:~# vi name-change.sh
    root@easzlab-deploy:~# cat name-change.sh
    cat >> /etc/hosts <<EOF
    172.16.88.100 ceph-deploy.example.local ceph-deploy
    172.16.88.101 ceph-mon1.example.local ceph-mon1
    172.16.88.102 ceph-mon2.example.local ceph-mon2
    172.16.88.103 ceph-mon3.example.local ceph-mon3
    172.16.88.111 ceph-mgr1.example.local ceph-mgr1
    172.16.88.112 ceph-mgr2.example.local ceph-mgr2
    172.16.88.121 ceph-node1.example.local ceph-node1
    172.16.88.122 ceph-node2.example.local ceph-node2
    172.16.88.123 ceph-node3.example.local ceph-node3
    EOF
    root@easzlab-deploy:~# ansible 'vm' -m script -a "./name-change.sh"
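`cat >> /etc/hosts` appends unconditionally, so running name-change.sh a second time duplicates every entry. The sketch below is an idempotent variant that uses the same IP/hostname pairs. The function name `add_ceph_hosts` is hypothetical. So is its optional target-file argument, which exists only so the function can be tried on a scratch file first.

```bash
#!/usr/bin/env bash
# Hypothetical idempotent replacement for the body of name-change.sh.
# $1 (optional) = hosts file to edit, defaults to /etc/hosts
add_ceph_hosts() {
  local hosts_file=${1:-/etc/hosts}
  local line
  while IFS= read -r line; do
    # Append the entry only if this exact line is not already present
    grep -qxF -- "$line" "$hosts_file" 2>/dev/null || printf '%s\n' "$line" >> "$hosts_file"
  done <<'EOF'
172.16.88.100 ceph-deploy.example.local ceph-deploy
172.16.88.101 ceph-mon1.example.local ceph-mon1
172.16.88.102 ceph-mon2.example.local ceph-mon2
172.16.88.103 ceph-mon3.example.local ceph-mon3
172.16.88.111 ceph-mgr1.example.local ceph-mgr1
172.16.88.112 ceph-mgr2.example.local ceph-mgr2
172.16.88.121 ceph-node1.example.local ceph-node1
172.16.88.122 ceph-node2.example.local ceph-node2
172.16.88.123 ceph-node3.example.local ceph-node3
EOF
}
```

To use it with `ansible 'vm' -m script`, add a call to `add_ceph_hosts` at the end of the script file.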

II. Mounting RBD with a keyring file

There are two ways to provide volumes backed by Ceph RBD:

- The pod mounts the RBD using the keyring file on the host.
- The key from the keyring is defined as a k8s secret, and the pod mounts the RBD through that secret.
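The keyring approach works only on nodes that actually have both files in /etc/ceph. Below is a minimal pre-flight check. It assumes the file names used in this article, `ceph.conf` and `ceph.client.<user>.keyring`. The function `check_ceph_client_files` is hypothetical. It could be pushed to the nodes with the same `ansible 'vm' -m script` pattern used for name-change.sh, by adding a call such as `check_ceph_client_files k8s` at the end of the script.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for keyring-based RBD mounts.
# $1 = Ceph user name (e.g. k8s), $2 (optional) = config dir, default /etc/ceph
# Returns 0 if both files are readable, 1 otherwise.
check_ceph_client_files() {
  local user=$1 dir=${2:-/etc/ceph}
  local f missing=0
  for f in "$dir/ceph.conf" "$dir/ceph.client.$user.keyring"; do
    if [ ! -r "$f" ]; then
      echo "missing or unreadable: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```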

Mounting directly with a keyring file: busybox

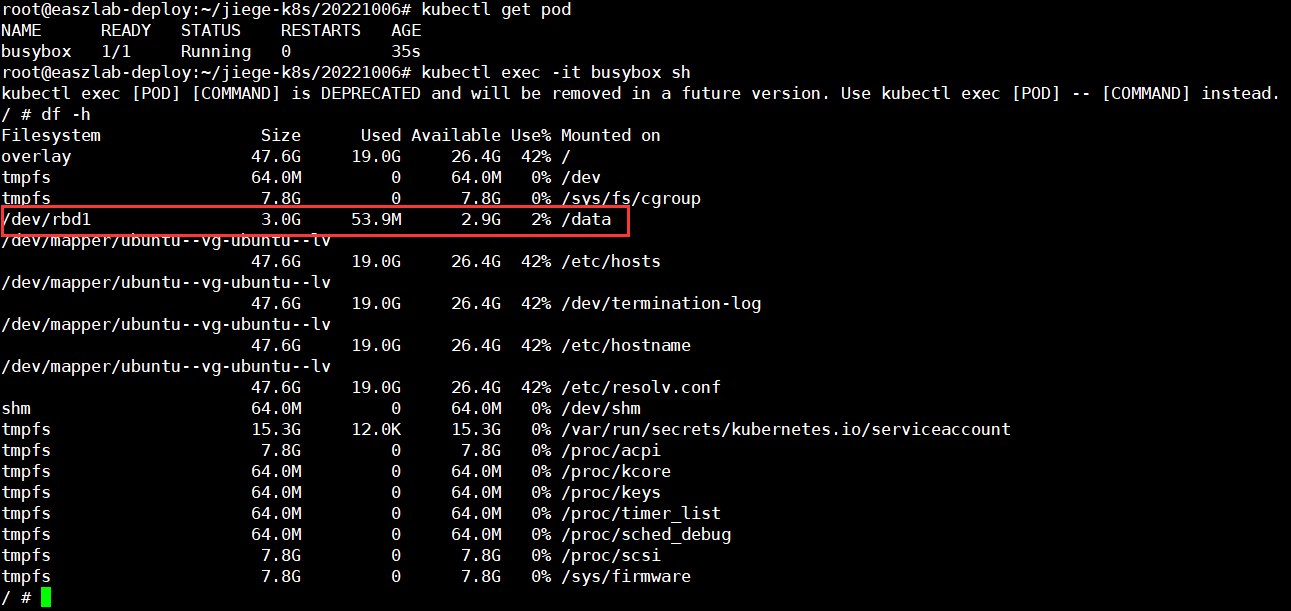

    root@easzlab-deploy:~/jiege-k8s/20221006# vi case1-busybox-keyring.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case1-busybox-keyring.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      namespace: default
    spec:
      containers:
      - image: busybox
        command:
          - sleep
          - "3600"
        imagePullPolicy: Always
        name: busybox
        #restartPolicy: Always
        volumeMounts:
        - name: rbd-k8s-data1
          mountPath: /data
      volumes:
        - name: rbd-k8s-data1
          rbd:
            monitors:
            - '172.16.88.101:6789'
            - '172.16.88.102:6789'
            - '172.16.88.103:6789'
            pool: k8s-rbd-pool
            image: k8s-img-img1
            fsType: xfs
            readOnly: false
            user: k8s
            keyring: /etc/ceph/ceph.client.k8s.keyring
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case1-busybox-keyring.yaml
    pod/busybox created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pod
    NAME      READY   STATUS    RESTARTS   AGE
    busybox   1/1     Running   0          35s

Verify inside the pod that the RBD is mounted

Mounting directly with a keyring file: nginx

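If the image is already in use (here it is still mounted by the busybox pod), create a new image. Otherwise, a second pod that tries to mount the same image fails with "rbd image k8s-rbd-pool/k8s-img-img1 is still being used":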

    Events:
      Type     Reason                  Age                 From                     Message
      ----     ------                  ----                ----                     -------
      Normal   Scheduled               3m2s                default-scheduler        Successfully assigned default/nginx-deployment-678c759f7c-hdx9w to 172.16.88.158
      Normal   SuccessfulAttachVolume  3m2s                attachdetach-controller  AttachVolume.Attach succeeded for volume "rbd-k8s-data1"
      Warning  FailedMount             59s                 kubelet                  Unable to attach or mount volumes: unmounted volumes=[rbd-k8s-data1], unattached volumes=[rbd-k8s-data1 kube-api-access-8f5cr]: timed out waiting for the condition
      Warning  FailedMount             17s (x3 over 2m1s)  kubelet                  MountVolume.WaitForAttach failed for volume "rbd-k8s-data1" : rbd image k8s-rbd-pool/k8s-img-img1 is still being used

    cephadmin@ceph-deploy:~/ceph-cluster$ rbd create k8s-img-img2 --size 3G --pool k8s-rbd-pool --image-format 2 --image-feature layering

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case2-nginx-keyring.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case2-nginx-keyring.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels: #rs or deployment
          app: ng-deploy-80
      template:
        metadata:
          labels:
            app: ng-deploy-80
        spec:
          containers:
          - name: ng-deploy-80
            #image: nginx
            image: mysql:5.6.46
            env:
              # Use secret in real usage
            - name: MYSQL_ROOT_PASSWORD
              value: magedu123456
            ports:
            - containerPort: 80
            volumeMounts:
            - name: rbd-k8s-data2
              #mountPath: /data
              mountPath: /var/lib/mysql
          volumes:
            - name: rbd-k8s-data2
              rbd:
                monitors:
                - '172.16.88.101:6789'
                - '172.16.88.102:6789'
                - '172.16.88.103:6789'
                pool: k8s-rbd-pool
                image: k8s-img-img2
                fsType: xfs
                readOnly: false
                user: k8s
                keyring: /etc/ceph/ceph.client.k8s.keyring
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case2-nginx-keyring.yaml
    deployment.apps/nginx-deployment created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pod
    NAME                                READY   STATUS    RESTARTS   AGE
    busybox                             1/1     Running   0          32m
    nginx-deployment-795c7756cc-9z87b   1/1     Running   0          8m11s

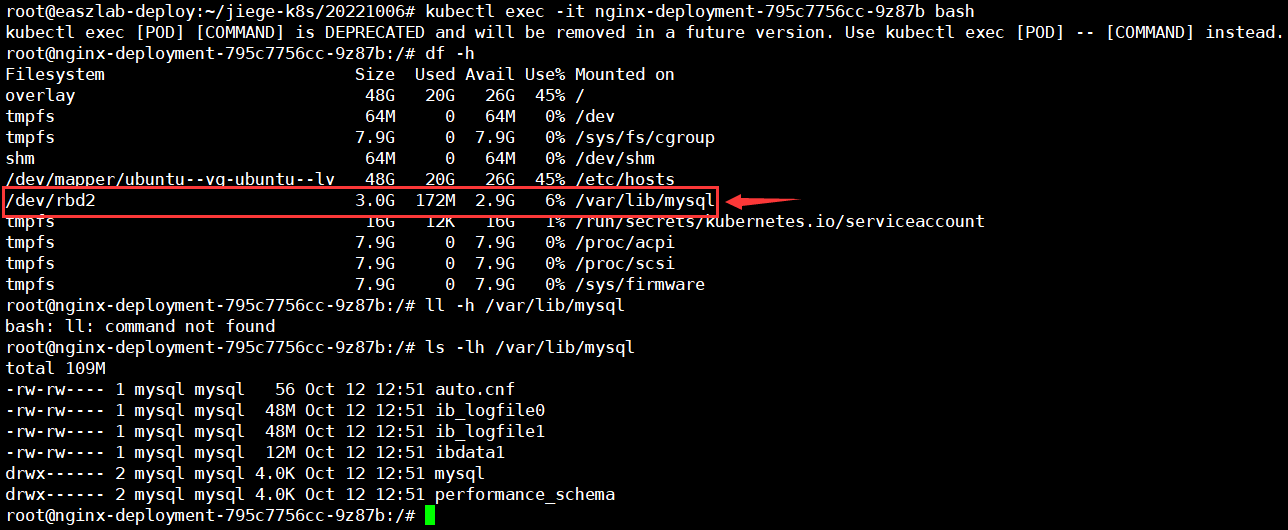

Verify inside the pod that the RBD is mounted:

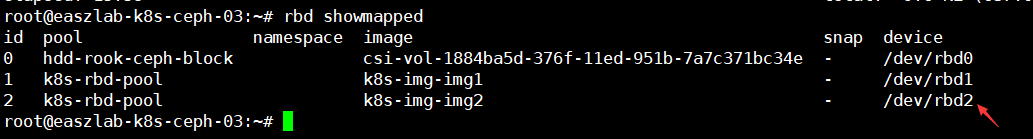

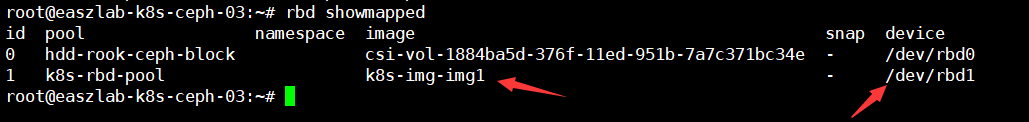

Verify the RBD on the host:

Inside the pod, the RBD appears to be mounted in the pod. However, a pod shares the host's kernel, so the image is actually mapped and mounted on the host.

On the node that runs the pod, verify the RBD mount:
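The mapping happens in the node's kernel, so the image shows up on the node as a `/dev/rbdN` block device. The sketch below lists such mounts from a mounts table. It assumes kernel-mapped RBD devices follow the usual `/dev/rbd<N>` naming. The function `list_rbd_mounts` is hypothetical, and its mounts-file argument exists only so it can be tried against a sample file. `rbd showmapped` and `lsblk` on the node are the usual direct checks.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show mounted kernel-mapped RBD devices.
# $1 (optional) = mounts table to read, defaults to /proc/mounts
list_rbd_mounts() {
  local mounts=${1:-/proc/mounts}
  # /proc/mounts fields: device mountpoint fstype options dump pass
  awk '$1 ~ /^\/dev\/rbd[0-9]+$/ { print $1, $2, $3 }' "$mounts"
}
```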

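III. Mounting RBD with a secret

The key is first defined as a secret and then mounted into the pod, so the k8s nodes no longer need to store the keyring file.

Start by creating the secret. It mainly holds the key from the keyring of the authorized Ceph user. Base64-encode the key, then create the secret with the encoded value.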

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth print-key client.k8s
    AQDjq0ZjlVD5LxAAM+b/GZv+RBdmxNO7Zf77/g==cephadmin@ceph-deploy:~/ceph-cluster$
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth print-key client.k8s |base64
    QVFEanEwWmpsVkQ1THhBQU0rYi9HWnYrUkJkbXhOTzdaZjc3L2c9PQ==

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case3-secret-client-k8s.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case3-secret-client-k8s.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-k8s
    type: "kubernetes.io/rbd"
    data:
      key: QVFEanEwWmpsVkQ1THhBQU0rYi9HWnYrUkJkbXhOTzdaZjc3L2c9PQ==
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case3-secret-client-k8s.yaml
    secret/ceph-secret-k8s created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get secret
    NAME                          TYPE                DATA   AGE
    argocd-initial-admin-secret   Opaque              1      59d
    ceph-secret-k8s               kubernetes.io/rbd   1      76s
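`ceph auth print-key` prints the key without a trailing newline (note the prompt directly after the key above). That is why piping it straight into `base64` produces exactly the value stored in the secret. If you copy the key and encode it by hand, use `printf '%s'` rather than `echo`. `echo` appends a newline, and that newline ends up inside the encoded key. A minimal sketch follows. The function name `encode_ceph_key` is hypothetical, and `tr -d '\n'` removes any line wrapping `base64` may add.

```bash
#!/usr/bin/env bash
# Hypothetical helper: base64-encode a Ceph key for a Secret's data.key field.
# printf '%s' writes the key without a trailing newline (unlike echo);
# tr strips any line wrapping that base64 may insert.
encode_ceph_key() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# The client.k8s key from above; prints the same value as in case3-secret-client-k8s.yaml
encode_ceph_key 'AQDjq0ZjlVD5LxAAM+b/GZv+RBdmxNO7Zf77/g=='
```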

Create the pod

    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl delete -f case1-busybox-keyring.yaml
    pod "busybox" deleted
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl delete -f case2-nginx-keyring.yaml
    deployment.apps "nginx-deployment" deleted
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pod
    No resources found in default namespace.
    root@easzlab-deploy:~/jiege-k8s/20221006# vim case4-nginx-secret.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case4-nginx-secret.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels: #rs or deployment
          app: ng-deploy-80
      template:
        metadata:
          labels:
            app: ng-deploy-80
        spec:
          containers:
          - name: ng-deploy-80
            image: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: rbd-k8s-data1
              mountPath: /usr/share/nginx/html/rbd
          volumes:
            - name: rbd-k8s-data1
              rbd:
                monitors:
                - '172.16.88.101:6789'
                - '172.16.88.102:6789'
                - '172.16.88.103:6789'
                pool: k8s-rbd-pool
                image: k8s-img-img1
                fsType: xfs
                readOnly: false
                user: k8s
                secretRef:
                  name: ceph-secret-k8s
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case4-nginx-secret.yaml
    deployment.apps/nginx-deployment created

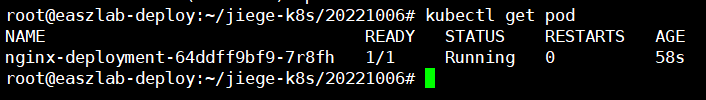

Verify the pod

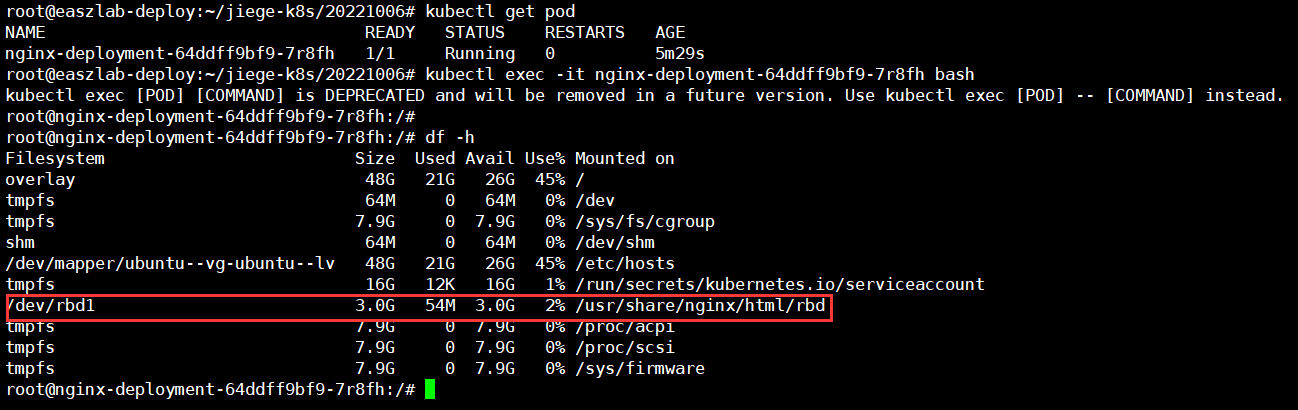

Verify the mount inside the pod

Verify the mount on the host:

IV. Dynamic Volume Provisioning

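This requires a binary installation of k8s. With the in-tree kubernetes.io/rbd provisioner, kube-controller-manager runs the rbd command itself. That command must therefore be available where kube-controller-manager runs, which is not the case with the stock containerized control plane (see the issue below).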

https://github.com/kubernetes/kubernetes/issues/38923

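Volumes can be created dynamically by the kube-controller-manager component. This suits stateful services that need multiple volumes.

The key of the Ceph admin user is defined as a k8s secret, which lets k8s use Ceph admin privileges to create volumes on demand. Images no longer have to be created in advance: k8s asks Ceph to create them when they are needed.

Create the admin user secret: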

    [root@ceph-deploy ~]# ceph auth print-key client.admin | base64
    QVFBZFFqeGpLOXhMRUJBQTNWV2hYSWpxMGVzUkszclFQYVUrWmc9PQ==

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case5-secret-admin.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case5-secret-admin.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-admin
    type: "kubernetes.io/rbd"
    data:
      key: QVFBZFFqeGpLOXhMRUJBQTNWV2hYSWpxMGVzUkszclFQYVUrWmc9PQ==
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case5-secret-admin.yaml
    secret/ceph-secret-admin created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get secret
    NAME                          TYPE                DATA   AGE
    argocd-initial-admin-secret   Opaque              1      59d
    ceph-secret-admin             kubernetes.io/rbd   1      22s
    ceph-secret-k8s               kubernetes.io/rbd   1      57m
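The admin and k8s Secret manifests follow the same template and differ only in name and key. The sketch below renders such a manifest from a plain Ceph key, assuming the `type: "kubernetes.io/rbd"` layout used here. The function name `render_rbd_secret` is hypothetical. Kubernetes also accepts an unencoded value under `stringData`, which avoids the manual base64 step altogether.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render a kubernetes.io/rbd Secret manifest.
# $1 = secret name, $2 = plain Ceph key (as printed by `ceph auth print-key`)
render_rbd_secret() {
  local name=$1 key=$2
  local b64
  # Encode without a trailing newline and without line wrapping
  b64=$(printf '%s' "$key" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: "kubernetes.io/rbd"
data:
  key: ${b64}
EOF
}
```

Usage: on a host with both Ceph admin access and kubectl, run `render_rbd_secret ceph-secret-admin "$(ceph auth print-key client.admin)" | kubectl apply -f -`. Otherwise, save the output to a file and apply it from the k8s deploy host.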

Create a StorageClass that provisions PVCs for pods dynamically

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case6-ceph-storage-class.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case6-ceph-storage-class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph-storage-class-k8s
      annotations:
        storageclass.kubernetes.io/is-default-class: "false" # set to "true" to make this the default storage class
    provisioner: kubernetes.io/rbd
    parameters:
      monitors: 172.16.88.101:6789,172.16.88.102:6789,172.16.88.103:6789
      adminId: admin
      adminSecretName: ceph-secret-admin
      adminSecretNamespace: default
      pool: k8s-rbd-pool
      userId: k8s
      userSecretName: ceph-secret-k8s
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case6-ceph-storage-class.yaml
    storageclass.storage.k8s.io/ceph-storage-class-k8s created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get sc -A
    NAME                     PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    ceph-storage-class-k8s   kubernetes.io/rbd            Delete          Immediate           false                  12s
    hdd-rook-ceph-block      rook-ceph.rbd.csi.ceph.com   Delete          Immediate           false                  50d
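In the pod-level `rbd` volume, `monitors` is a YAML list. The StorageClass `monitors` parameter is instead a single comma-separated string. The bash sketch below builds that string from a list of monitor addresses. The names `join_monitors` and `mons` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: join monitor addresses into the comma-separated
# form used by the StorageClass "monitors" parameter.
join_monitors() {
  local IFS=,
  # "$*" joins all arguments with the first character of IFS
  printf '%s\n' "$*"
}

mons=(172.16.88.101:6789 172.16.88.102:6789 172.16.88.103:6789)
join_monitors "${mons[@]}"   # → 172.16.88.101:6789,172.16.88.102:6789,172.16.88.103:6789
```

Per the Kubernetes StorageClass documentation for the in-tree RBD provisioner, the secret named by `userSecretName` must exist in the same namespace as the PVCs that use the class (here `default`).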

Create a PVC based on the StorageClass:

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case7-mysql-pvc.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case7-mysql-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-data-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: ceph-storage-class-k8s
      resources:
        requests:
          storage: '5Gi'
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case7-mysql-pvc.yaml
    persistentvolumeclaim/mysql-data-pvc created

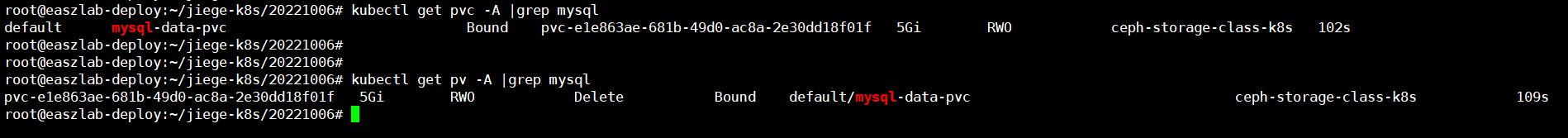

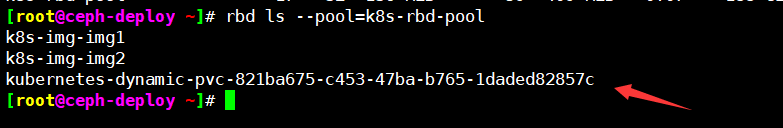

Verify in Ceph that the image was created automatically:

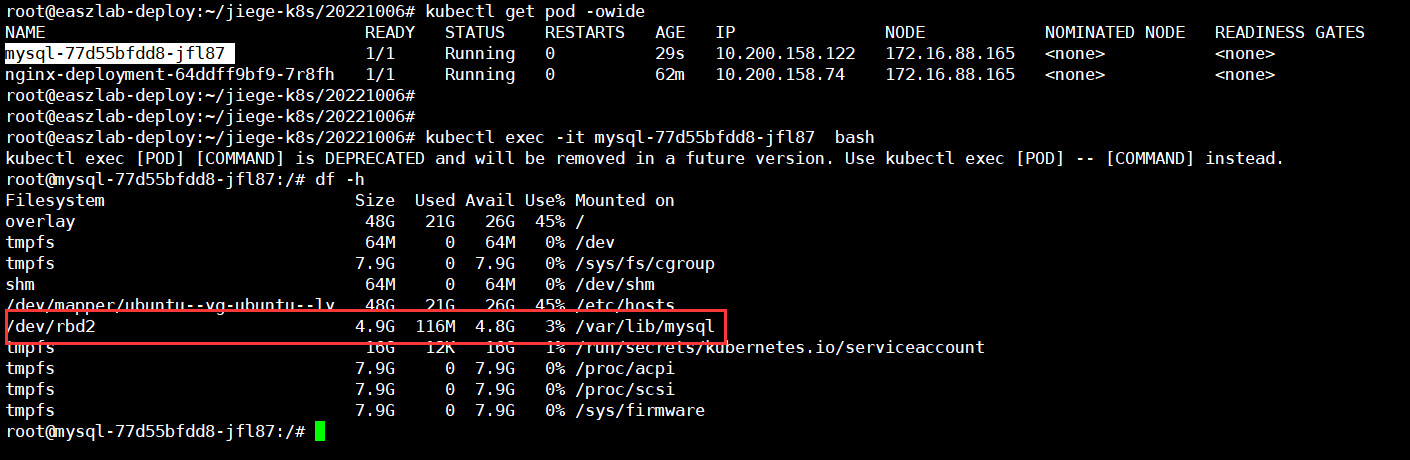

Run a standalone MySQL instance and verify it:

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case8-mysql-single.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case8-mysql-single.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mysql
    spec:
      selector:
        matchLabels:
          app: mysql
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: mysql
        spec:
          containers:
          - image: mysql:5.6.46
            name: mysql
            env:
              # Use secret in real usage
            - name: MYSQL_ROOT_PASSWORD
              value: magedu123456
            ports:
            - containerPort: 3306
              name: mysql
            volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
          volumes:
          - name: mysql-persistent-storage
            persistentVolumeClaim:
              claimName: mysql-data-pvc

    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: mysql-service-label
      name: mysql-service
    spec:
      type: NodePort
      ports:
      - name: http
        port: 3306
        protocol: TCP
        targetPort: 3306
        nodePort: 33306
      selector:
        app: mysql
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case8-mysql-single.yaml
    deployment.apps/mysql created
    service/mysql-service created
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pod -owide
    NAME                                READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
    mysql-77d55bfdd8-jfl87              1/1     Running   0          29s   10.200.158.122   172.16.88.165   <none>           <none>
    nginx-deployment-64ddff9bf9-7r8fh   1/1     Running   0          62m   10.200.158.74    172.16.88.165   <none>           <none>
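`nodePort: 33306` (and `33380` in the CephFS example later) lies outside Kubernetes' default NodePort range of 30000-32767. The apply succeeded here, so this cluster's kube-apiserver is presumably started with a wider `--service-node-port-range`. The sketch below checks a port against a range before applying a manifest. The function name `nodeport_in_range` is hypothetical. The range you pass it should be taken from your own apiserver's flag.

```bash
#!/usr/bin/env bash
# Hypothetical helper: is a nodePort inside an apiserver --service-node-port-range?
# $1 = port, $2 (optional) = range "MIN-MAX", default is the Kubernetes default 30000-32767
nodeport_in_range() {
  local port=$1 range=${2:-30000-32767}
  local min=${range%-*} max=${range#*-}
  (( port >= min && port <= max ))
}

nodeport_in_range 33306 || echo "33306 is outside the default range 30000-32767"
```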

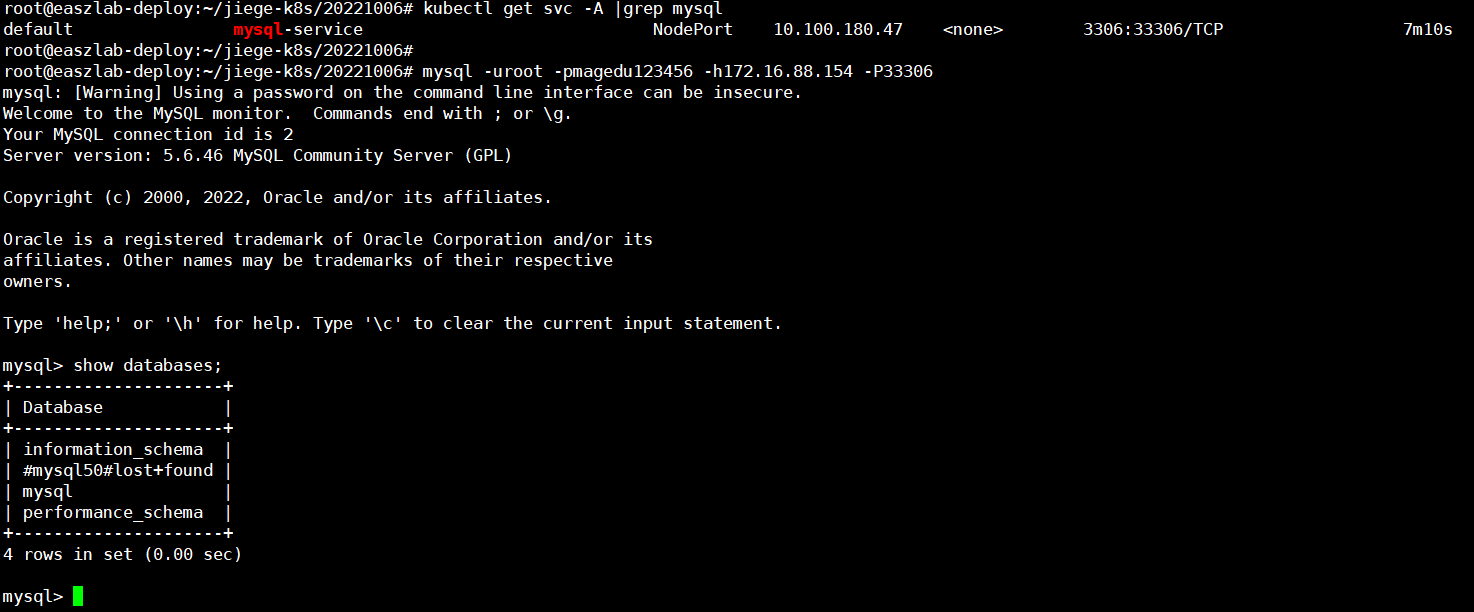

Verify the MySQL mount

Verify MySQL access:

    root@easzlab-deploy:~/jiege-k8s/20221006# apt install mysql-client mysql-common   # install the MySQL client

V. Using CephFS

Pods in k8s mount Ceph's CephFS shared storage, which gives the workload shared, persistent, high-performance, and highly available data.

Create the pod

    root@easzlab-deploy:~/jiege-k8s/20221006# vim case9-nginx-cephfs.yaml
    root@easzlab-deploy:~/jiege-k8s/20221006# cat case9-nginx-cephfs.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels: #rs or deployment
          app: ng-deploy-80
      template:
        metadata:
          labels:
            app: ng-deploy-80
        spec:
          containers:
          - name: ng-deploy-80
            image: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: magedu-staticdata-cephfs
              mountPath: /usr/share/nginx/html/cephfs
          volumes:
            - name: magedu-staticdata-cephfs
              cephfs:
                monitors:
                - '172.16.88.101:6789'
                - '172.16.88.102:6789'
                - '172.16.88.103:6789'
                path: /
                user: admin
                secretRef:
                  name: ceph-secret-admin

    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: ng-deploy-80-service-label
      name: ng-deploy-80-service
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
        nodePort: 33380
      selector:
        app: ng-deploy-80
    root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case9-nginx-cephfs.yaml
    deployment.apps/nginx-deployment configured
    service/ng-deploy-80-service created

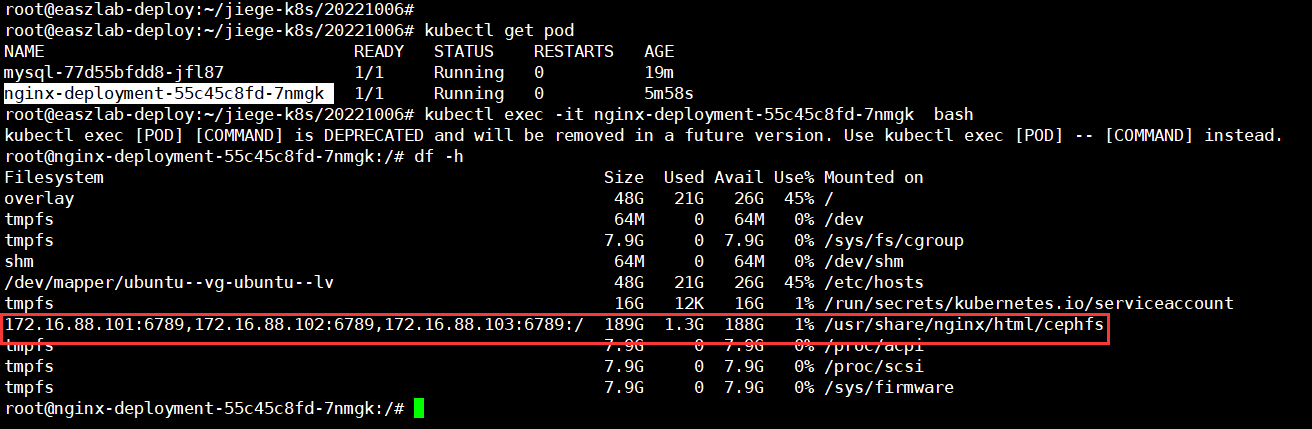

Verify with multiple pod replicas:

Change "replicas: 1" to "replicas: 3" in case9-nginx-cephfs.yaml and apply the file again.
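The replica change can also be scripted rather than made by hand. The sketch below uses GNU `sed -i`; BSD/macOS sed needs `-i ''` instead. The function name `set_replicas` is hypothetical. It assumes the manifest has a single `replicas:` line, as case9-nginx-cephfs.yaml does. `kubectl scale deployment nginx-deployment --replicas=3` changes the running Deployment directly, but leaves the file at 1.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "replicas: N" in a manifest, preserving indentation.
# $1 = manifest file, $2 = desired replica count (GNU sed assumed for -i)
set_replicas() {
  local file=$1 count=$2
  sed -i -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+/\1 ${count}/" "$file"
}
```

Usage: `set_replicas case9-nginx-cephfs.yaml 3 && kubectl apply -f case9-nginx-cephfs.yaml`.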

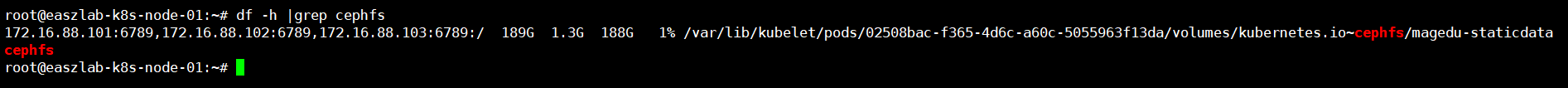

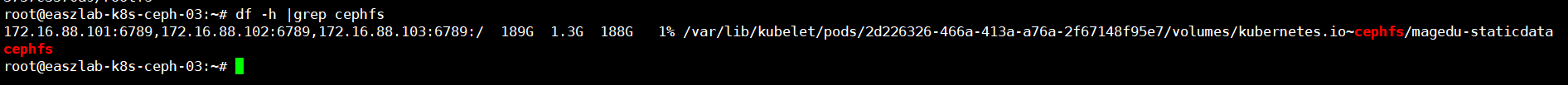

Verify on the host:
