Using Ceph: Advanced CRUSH Map

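1. Introduction to the Ceph CRUSH Map

The mon servers in a Ceph cluster maintain five cluster maps:

Monitor map # the monitor map

OSD map # the OSD map

PG map # the PG map

Crush map # CRUSH (Controlled Replication Under Scalable Hashing): a controllable, replicable, scalable consistent-hashing algorithm

MDS map # the CephFS metadata map

The CRUSH map: when a new storage pool is created, a new list of PG combinations is generated from the OSD map and used to store data.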

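How the CRUSH algorithm selects target nodes:

Five bucket algorithms are currently available for node selection: Uniform, List, Tree, Straw, and Straw2. Early versions used straw, the algorithm invented by the founder of the Ceph project; current versions use straw2, a refinement optimized by the community.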

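straw (the draw-straws algorithm):

Drawing straws means picking the longest straw, and the length of each straw is determined by the OSD's weight. When a pool is created and OSDs are assigned to its PGs, the straw algorithm iterates over the currently available OSDs and prefers the OSD that wins the draw, so that OSDs with a higher weight are assigned more PGs and therefore store more data.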
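The draw described above can be sketched in a few lines of Python. This is a simplified illustration in the spirit of straw2, not Ceph's implementation: it assumes SHA-256 as the hash (Ceph uses rjenkins1), and the OSD names and weights are made up for the example. Each OSD draws ln(u) / weight for a pseudo-random u in (0, 1], and the largest draw wins.

```python
import hashlib
import math
from collections import Counter

def straw2_pick(pg_id, weights):
    """Pick one OSD for a PG: every OSD draws a straw, the longest straw wins."""
    best_osd, best_draw = None, -math.inf
    for osd, weight in weights.items():
        if weight <= 0:
            continue  # zero-weight items never win
        digest = hashlib.sha256(f"{pg_id}:{osd}".encode()).digest()
        # Map the hash to a uniform value u in (0, 1].
        u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
        # ln(u) <= 0, so a larger weight pulls the draw closer to 0 (longer straw).
        straw = math.log(u) / weight
        if straw > best_draw:
            best_osd, best_draw = osd, straw
    return best_osd

# Hypothetical weights: osd.1 is twice as large as the other two.
weights = {"osd.0": 1.0, "osd.1": 2.0, "osd.2": 1.0}
counts = Counter(straw2_pick(pg, weights) for pg in range(4000))
print(counts)
```

With these weights, osd.1 should win roughly half of the draws and the other two about a quarter each, which is the "higher weight, more PGs" behavior described above.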

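2. Adjusting the PG-to-OSD Mapping

By default, the CRUSH algorithm assigns OSDs to the PGs of a newly created pool on its own, but you can bias where CRUSH places data by setting weights manually. For example, a 1 TB disk has a weight of 1 and a 2 TB disk a weight of 2. Using devices of the same size is recommended.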

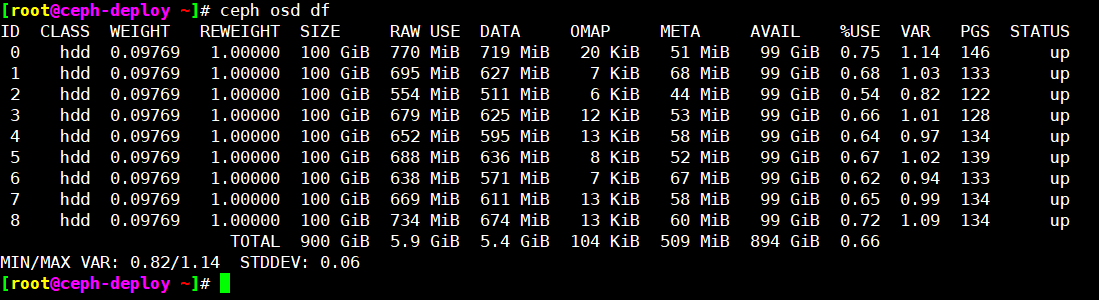

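Check the current state:

weight is the relative capacity of a device. If 1 TB corresponds to 1.00, a 500 GB OSD should have a weight of 0.5. weight determines how many PGs are assigned based on disk space: CRUSH assigns more PGs to OSDs with more disk space and fewer PGs to OSDs with less. The reweight parameter exists to rebalance the PGs that CRUSH has distributed pseudo-randomly. The default distribution is balanced only in a probabilistic sense, so PGs can end up unevenly distributed even when all OSDs have the same disk space. In that case, adjusting reweight makes the cluster immediately rebalance the PGs on the affected disk so that data is spread more evenly. In short, REWEIGHT applies after PGs have already been assigned, when you want the cluster to rebalance their distribution.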
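As a rough illustration of the weight convention described here, the sketch below treats the CRUSH weight as the device capacity expressed in TiB. The function name `crush_weight` is hypothetical, and the rounding to five places only mimics how the value is displayed.

```python
GIB = 2**30

def crush_weight(size_bytes):
    """CRUSH weight as capacity in TiB (1 TiB -> 1.0), rounded to 5 places."""
    return round(size_bytes / 2**40, 5)

for label, size in [("100 GiB", 100 * GIB), ("512 GiB", 512 * GIB),
                    ("1 TiB", 1024 * GIB), ("2 TiB", 2048 * GIB)]:
    print(f"{label:>8} -> weight {crush_weight(size):.5f}")
```

A disk of exactly 100 GiB gives 0.09766 under this assumption; the WEIGHT values in this article's `ceph osd df` output differ slightly in the last digits, presumably because the actual device size is not exactly 100 GiB.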

Modify WEIGHT and verify:

[root@ceph-deploy ~]# ceph osd crush reweight osd.1 1.5 # High-risk operation: it immediately triggers data rebalancing across the cluster. Use with caution!
reweighted item id 1 name 'osd.1' to 1.5 in crush map
[root@ceph-deploy ~]# ceph osd df
 ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL  %USE   VAR  PGS  STATUS
  0  hdd    0.09769   1.00000  100 GiB  770 MiB  719 MiB   20 KiB   51 MiB   99 GiB  0.75  1.14  133  up
  1  hdd    1.50000   1.00000  100 GiB  695 MiB  627 MiB    7 KiB   68 MiB   99 GiB  0.68  1.03  147  up
  2  hdd    0.09769   1.00000  100 GiB  555 MiB  511 MiB    6 KiB   44 MiB   99 GiB  0.54  0.82  118  up
  3  hdd    0.09769   1.00000  100 GiB  679 MiB  625 MiB   12 KiB   53 MiB   99 GiB  0.66  1.00  121  up
  4  hdd    0.09769   1.00000  100 GiB  652 MiB  595 MiB   13 KiB   58 MiB   99 GiB  0.64  0.97  132  up
  5  hdd    0.09769   1.00000  100 GiB  688 MiB  636 MiB    8 KiB   52 MiB   99 GiB  0.67  1.02  147  up
  6  hdd    0.09769   1.00000  100 GiB  638 MiB  571 MiB    7 KiB   67 MiB   99 GiB  0.62  0.94  125  up
  7  hdd    0.09769   1.00000  100 GiB  669 MiB  611 MiB   13 KiB   58 MiB   99 GiB  0.65  0.99  141  up
  8  hdd    0.09769   1.00000  100 GiB  734 MiB  674 MiB   13 KiB   60 MiB   99 GiB  0.72  1.09  135  up
TOTAL                          900 GiB  5.9 GiB  5.4 GiB  104 KiB  510 MiB  894 GiB  0.66
MIN/MAX VAR: 0.82/1.14 STDDEV: 0.06
[root@ceph-deploy ~]# ceph -s
  cluster:
    id:     8dc32c41-121c-49df-9554-dfb7deb8c975
    health: HEALTH_WARN
            Degraded data redundancy: 1484/2529 objects degraded (58.679%), 151 pgs degraded # High-risk operation: it immediately triggers data rebalancing across the cluster. Use with caution!
  services:
    mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 21h)
    mgr: ceph-mgr1(active, since 21h), standbys: ceph-mgr2
    mds: 2/2 daemons up, 2 standby
    osd: 9 osds: 9 up (since 21h), 9 in (since 6d); 58 remapped pgs
    rgw: 2 daemons active (2 hosts, 1 zones)
  data:
    volumes: 1/1 healthy
    pools:   12 pools, 401 pgs
    objects: 843 objects, 1.8 GiB
    usage:   6.0 GiB used, 894 GiB / 900 GiB avail
    pgs:     1484/2529 objects degraded (58.679%)
             571/2529 objects misplaced (22.578%)
             211 active+clean
             127 active+recovery_wait+degraded
             31  active+remapped+backfill_wait
             24  active+recovery_wait+undersized+degraded+remapped
             4   active+recovery_wait
             3   active+recovery_wait+remapped
             1   active+recovering
  io:
    client:   511 KiB/s wr, 0 op/s rd, 0 op/s wr
    recovery: 4.5 MiB/s, 1 keys/s, 3 objects/s # With a large data volume, recovery traffic at this point can congest the OSDs if its limits are not tuned, and the cluster may end up unable to serve clients normally
[root@ceph-deploy ~]#
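The VAR column of `ceph osd df` is each OSD's utilization divided by the cluster-wide average utilization. The short sketch below recomputes it from a few %USE values copied from the output above; the function name `var_column` is made up for this example, and because the inputs are already rounded to two decimals, some rows can differ from Ceph's own figure in the last digit.

```python
def var_column(use_percent, average):
    """VAR = an OSD's utilization divided by the cluster-wide average utilization."""
    return {osd: round(pct / average, 2) for osd, pct in use_percent.items()}

# %USE for osd.0 to osd.3 as printed above; 0.66 is the %USE of the TOTAL row.
use_percent = {0: 0.75, 1: 0.68, 2: 0.54, 3: 0.66}
var = var_column(use_percent, average=0.66)
print(var)
```

A VAR above 1 means the OSD is fuller than average, and below 1 means it is emptier, which makes the column a quick way to spot OSDs that are candidates for a reweight.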

[root@ceph-deploy ~]# ceph health detail

HEALTH_WARN Degraded data redundancy: 1143/2529 objects degraded (45.196%), 111 pgs degraded
[WRN] PG_DEGRADED: Degraded data redundancy: 1143/2529 objects degraded (45.196%), 111 pgs degraded
    pg 2.0 is active+recovery_wait+degraded, acting [3,1,8]
    pg 2.1 is active+recovery_wait+degraded, acting [1,3,8]
    pg 2.2 is active+recovery_wait+degraded, acting [1,5,6]
    pg 2.11 is active+recovery_wait+degraded, acting [1,3,8]
    pg 2.13 is active+recovery_wait+degraded, acting [1,4,6]
    pg 2.15 is active+recovery_wait+degraded, acting [1,8,5]
    pg 2.16 is active+recovery_wait+degraded, acting [1,7,4]
    pg 2.18 is active+recovery_wait+degraded, acting [1,6,3]
    pg 2.19 is active+recovery_wait+degraded, acting [1,7,3]
    pg 2.1a is active+recovery_wait+degraded, acting [1,4,7]
    pg 2.1b is active+recovery_wait+degraded, acting [6,1,5]
    pg 2.39 is active+recovery_wait+degraded, acting [7,5,1]
    pg 2.3d is active+recovery_wait+degraded, acting [1,7,4]
    pg 2.3e is active+recovery_wait+degraded, acting [7,1,4]
    pg 3.f is active+recovery_wait+degraded, acting [1,8,4]
    pg 6.5 is active+recovery_wait+degraded, acting [0,4,8]
    pg 6.6 is active+recovery_wait+degraded, acting [1,3,8]
    pg 6.7 is active+recovery_wait+degraded, acting [1,6,3]
    pg 8.4 is active+recovery_wait+degraded, acting [1,5,7]
    pg 8.5 is active+recovery_wait+degraded, acting [6,2,5]
    pg 8.6 is active+recovery_wait+degraded, acting [6,0,3]
    pg 8.7 is active+recovery_wait+degraded, acting [1,6,3]
    pg 8.8 is active+recovery_wait+degraded, acting [1,7,5]
    pg 8.9 is active+recovery_wait+degraded, acting [1,4,7]
    pg 8.a is active+recovery_wait+degraded, acting [1,7,3]
    pg 8.10 is active+recovery_wait+degraded, acting [3,7,1]
    pg 8.13 is active+recovery_wait+degraded, acting [1,7,5]
    pg 8.18 is active+recovery_wait+degraded, acting [1,5,6]
    pg 8.1a is active+recovery_wait+degraded, acting [1,3,7]
    pg 8.1b is active+recovery_wait+degraded, acting [1,5,8]
    pg 8.1c is active+recovery_wait+degraded, acting [1,3,7]
    pg 8.1d is active+recovery_wait+degraded, acting [1,4,8]
    pg 8.1f is active+recovery_wait+degraded, acting [7,1,3]
    pg 8.37 is active+recovery_wait+degraded, acting [1,3,6]
    pg 13.0 is active+recovery_wait+undersized+degraded+remapped, acting [7,4]
    pg 13.2 is active+recovery_wait+undersized+degraded+remapped, acting [5,6]
    pg 13.3 is active+recovery_wait+undersized+degraded+remapped, acting [0,4]
    pg 13.16 is active+recovery_wait+undersized+degraded+remapped, acting [8,3]
    pg 13.18 is active+recovery_wait+undersized+degraded+remapped, acting [5,8]
    pg 13.1c is active+recovery_wait+undersized+degraded+remapped, acting [5,1]
    pg 13.1d is active+recovery_wait+undersized+degraded+remapped, acting [3,7]
    pg 13.1e is active+recovery_wait+undersized+degraded+remapped, acting [1,5]
    pg 13.1f is active+recovery_wait+undersized+degraded+remapped, acting [0,5]
    pg 15.1 is active+recovery_wait+degraded, acting [1,3,7]
    pg 15.3 is active+recovery_wait+degraded, acting [1,6,4]
    pg 15.c is active+recovery_wait+degraded, acting [7,1,4]
    pg 15.e is active+recovery_wait+degraded, acting [1,6,4]
    pg 15.15 is active+recovery_wait+degraded, acting [5,7,1]
    pg 15.16 is active+recovery_wait+degraded, acting [1,4,8]
    pg 15.1d is active+recovery_wait+degraded, acting [1,7,3]
    pg 15.1f is active+recovery_wait+degraded, acting [1,4,8]

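Modify REWEIGHT and verify:

An OSD's REWEIGHT defaults to 1 and can be set to any value between 0 and 1; the lower the value, the fewer PGs the OSD holds. Changing the REWEIGHT of any OSD immediately triggers rebalancing of its PGs against the other OSDs, that is, data is redistributed. This is useful when one OSD holds comparatively many PGs and you want to reduce that number.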

[root@ceph-deploy ~]# ceph osd reweight 2 0.6
reweighted osd.2 to 0.6 (9999)
[root@ceph-deploy ~]#
[root@ceph-deploy ~]# ceph osd df
 ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL  %USE   VAR  PGS  STATUS
  0  hdd    0.09769   1.00000  100 GiB  828 MiB  771 MiB   20 KiB   57 MiB   99 GiB  0.81  1.22  163  up
  1  hdd    0.09769   1.00000  100 GiB  772 MiB  677 MiB    7 KiB   96 MiB   99 GiB  0.75  1.13  153  up
  2  hdd    0.09769   0.59999  100 GiB  454 MiB  400 MiB    6 KiB   53 MiB  100 GiB  0.44  0.67   85  up
  3  hdd    0.09769   1.00000  100 GiB  684 MiB  623 MiB   12 KiB   61 MiB   99 GiB  0.67  1.00  127  up
  4  hdd    0.09769   1.00000  100 GiB  661 MiB  592 MiB   13 KiB   69 MiB   99 GiB  0.65  0.97  135  up
  5  hdd    0.09769   1.00000  100 GiB  696 MiB  633 MiB    8 KiB   62 MiB   99 GiB  0.68  1.02  139  up
  6  hdd    0.09769   1.00000  100 GiB  662 MiB  580 MiB    7 KiB   82 MiB   99 GiB  0.65  0.97  134  up
  7  hdd    0.09769   1.00000  100 GiB  662 MiB  608 MiB   13 KiB   54 MiB   99 GiB  0.65  0.97  134  up
  8  hdd    0.09769   1.00000  100 GiB  713 MiB  659 MiB   13 KiB   54 MiB   99 GiB  0.70  1.05  133  up
TOTAL                          900 GiB  6.0 GiB  5.4 GiB  104 KiB  588 MiB  894 GiB  0.67
MIN/MAX VAR: 0.67/1.22 STDDEV: 0.08
[root@ceph-deploy ~]#
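The `(9999)` in the command output and the `0.59999` in the REWEIGHT column are consistent with the reweight being stored as a fixed-point integer in which 0x10000 represents 1.0. The sketch below reproduces both figures under that assumption; `encode_reweight` is a hypothetical helper name, not a Ceph API.

```python
def encode_reweight(value):
    """Convert a 0..1 reweight to a fixed-point integer where 0x10000 == 1.0."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("reweight must be between 0 and 1")
    return int(value * 0x10000)  # truncates, so 0.6 cannot be stored exactly

raw = encode_reweight(0.6)
hex_form = f"{raw:x}"                # hexadecimal form, as in "(9999)"
shown = f"{raw / 0x10000:.5f}"       # value as shown in the REWEIGHT column
print(hex_form, shown)
```

This is why requesting 0.6 yields a displayed value slightly below 0.6: the stored integer is truncated, then converted back to a decimal for display.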

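3. Managing the CRUSH Map

Use the tools to export the Ceph CRUSH map, edit it, and then import it again.

Export the CRUSH map:

Note: the exported CRUSH map is in binary format and cannot be opened directly in a text editor. Convert it to text with crushtool first; it can then be opened and edited with vim or another text editor.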

[root@ceph-deploy ~]# mkdir /data/ceph -p
[root@ceph-deploy ~]# ceph osd getcrushmap -o /data/ceph/crushmap-v1
32
[root@ceph-deploy ~]# crushtool -d /data/ceph/crushmap-v1 > /data/ceph/crushmap-v1.txt # convert the binary map to a text file
[root@ceph-deploy ~]# file /data/ceph/crushmap-v1.txt
/data/ceph/crushmap-v1.txt: ASCII text
[root@ceph-deploy ~]#

[root@ceph-deploy ~]# cat /data/ceph/crushmap-v1.txt

# begin crush map # tunable CRUSH map parameters
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54
# devices # the current device list
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd
device 4 osd.4 class hdd
device 5 osd.5 class hdd
device 6 osd.6 class hdd
device 7 osd.7 class hdd
device 8 osd.8 class hdd
# types # the bucket types currently supported
type 0 osd # an OSD daemon, which corresponds to one disk device
type 1 host # a host
type 2 chassis # the chassis of a blade server
type 3 rack # a rack (cabinet) holding several servers
type 4 row # a row of racks
type 5 pdu # the power distribution unit feeding a rack
type 6 pod # a group of small rooms within a machine room
type 7 room # a room containing several racks; a data center is made up of many such rooms
type 8 datacenter # a data center or IDC
type 9 zone # a zone or area
type 10 region # a region, for example the AWS data center in Zhongwei, Ningxia
type 11 root # the top of the bucket hierarchy, the root
# buckets
host ceph-node1 { # a bucket of type host named ceph-node1
    id -3 # do not change unnecessarily # ID generated by Ceph; do not change it unless necessary
    id -4 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2 # the CRUSH bucket algorithm used to select OSDs
    hash 0 # rjenkins1 # which hash algorithm to use; 0 selects rjenkins1
    item osd.0 weight 0.098 # weight of osd.0; CRUSH calculates it automatically from disk space, so disks of different sizes get different weights
    item osd.1 weight 0.098
    item osd.2 weight 0.098
}
host ceph-node2 {
    id -5 # do not change unnecessarily
    id -6 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.098
    item osd.4 weight 0.098
    item osd.5 weight 0.098
}
host ceph-node3 {
    id -7 # do not change unnecessarily
    id -8 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.6 weight 0.098
    item osd.7 weight 0.098
    item osd.8 weight 0.098
}
root default { # configuration of the root
    id -1 # do not change unnecessarily
    id -2 class hdd # do not change unnecessarily
    # weight 0.879
    alg straw2
    hash 0 # rjenkins1
    item ceph-node1 weight 0.293
    item ceph-node2 weight 0.293
    item ceph-node3 weight 0.293
}
# rules
rule replicated_rule { # default rule for replicated pools
    id 0
    type replicated
    min_size 1
    max_size 10 # the maximum number of replicas defaults to 10
    step take default # allocate OSDs from the hosts defined under default
    step chooseleaf firstn 0 type host # choose hosts; the failure domain is host
    step emit # emit the result, that is, return it to the client
}
rule erasure-code { # default rule for erasure-coded pools
    id 1
    type erasure
    min_size 3
    max_size 4
    step set_chooseleaf_tries 5
    step set_choose_tries 100
    step take default
    step chooseleaf indep 0 type host
    step emit
}
# end crush map
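Before editing a decompiled map by hand, it can help to list its buckets and check that the item weights add up as expected. The following is a minimal sketch that parses the bucket blocks with regular expressions; it is not a full CRUSH map parser, and the embedded `SAMPLE` is an abbreviated excerpt of the map above rather than a complete file.

```python
import re

SAMPLE = """
host ceph-node1 {
    id -3
    alg straw2
    hash 0
    item osd.0 weight 0.098
    item osd.1 weight 0.098
    item osd.2 weight 0.098
}
root default {
    id -1
    alg straw2
    hash 0
    item ceph-node1 weight 0.293
}
"""

# A block starts with "<type> <name> {" at the start of a line and ends with "}" on its own line.
BUCKET_RE = re.compile(r"^(\w+)\s+([\w.-]+)\s*\{(.*?)^\}", re.S | re.M)
ITEM_RE = re.compile(r"^\s*item\s+([\w.-]+)\s+weight\s+([\d.]+)", re.M)

def parse_buckets(text):
    """Return {bucket_name: {"type": ..., "items": {item_name: weight}}}."""
    buckets = {}
    for btype, name, body in BUCKET_RE.findall(text):
        if btype == "rule":
            continue  # rules share the brace syntax but are not buckets
        items = {item: float(weight) for item, weight in ITEM_RE.findall(body)}
        buckets[name] = {"type": btype, "items": items}
    return buckets

buckets = parse_buckets(SAMPLE)
for name, bucket in buckets.items():
    total = sum(bucket["items"].values())
    print(f"{bucket['type']} {name}: {len(bucket['items'])} items, total weight {total:.3f}")
```

Running the same function on a full decompiled file (read with `open(...).read()`) gives a quick overview of which OSDs sit under which host before and after an edit.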

Convert the text file back to the binary CRUSH format:

root@ceph-deploy:~# crushtool -c /data/ceph/crushmap-v1.txt -o /data/ceph/crushmap-v2

Import the new CRUSH map:

The imported map immediately overwrites the existing one and takes effect at once.

root@ceph-deploy:~# ceph osd setcrushmap -i /data/ceph/crushmap-v2
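The export, decompile, edit, compile, and import cycle can be written down as a small helper that only builds the command lines, so they can be reviewed before anything touches the cluster. This is a sketch: the function name and the file-naming scheme are assumptions modeled on the paths used in this article, and the `crushtool --test` step is an optional offline check added here for illustration (rule 0 and 3 replicas are assumed values).

```python
import shlex

def crush_edit_commands(workdir, old, new):
    """Build (but do not run) the export, decompile, compile, test, and import steps."""
    old_bin = f"{workdir}/crushmap-{old}"
    new_bin = f"{workdir}/crushmap-{new}"
    text = f"{old_bin}.txt"
    return [
        ["ceph", "osd", "getcrushmap", "-o", old_bin],
        ["crushtool", "-d", old_bin, "-o", text],
        # Edit the text file by hand between these two steps.
        ["crushtool", "-c", text, "-o", new_bin],
        # Optional offline check before importing (assumed: rule 0, 3 replicas).
        ["crushtool", "-i", new_bin, "--test", "--show-bad-mappings",
         "--rule", "0", "--num-rep", "3"],
        ["ceph", "osd", "setcrushmap", "-i", new_bin],
    ]

commands = crush_edit_commands("/data/ceph", "v1", "v2")
for argv in commands:
    print(shlex.join(argv))
```

Because importing takes effect immediately, printing and reviewing the commands first, and keeping the old binary map as a rollback copy, is a cautious way to work.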

Verify that the CRUSH map has taken effect:

[root@ceph-deploy ~]# ceph osd crush rule dump

[ { "rule_id": 0, "rule_name": "replicated_rule", "ruleset": 0, "type": 1, "min_size": 1, "max_size": 8, "steps": [ { "op": "take", "item": -1, "item_name": "default" }, { "op": "chooseleaf_firstn", "num": 0, "type": "host" }, { "op": "emit" } ] }, { "rule_id": 1, "rule_name": "erasure-code", "ruleset": 1, "type": 3, "min_size": 3, "max_size": 4, "steps": [ { "op": "set_chooseleaf_tries", "num": 5 }, { "op": "set_choose_tries", "num": 100 }, { "op": "take", "item": -1, "item_name": "default" }, { "op": "chooseleaf_indep", "num": 0, "type": "host" }, { "op": "emit" } ] } ]

4. Classifying Data with CRUSH

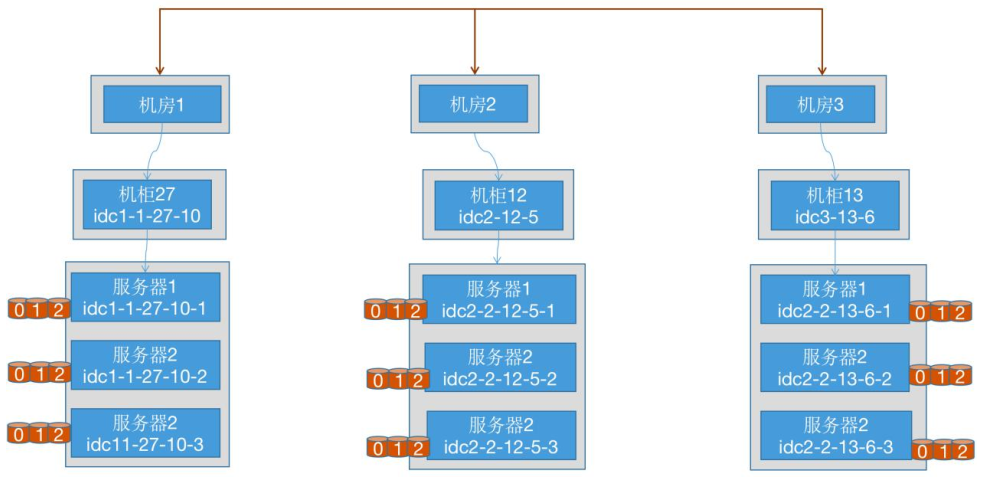

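When the CRUSH algorithm assigns PGs, it can place the replicas of each PG on OSDs of different hosts, which provides high availability at the host level; this is the default behavior. It does not, however, guarantee that the replicas land on hosts in different racks or machine rooms, which is what rack-level or higher (for example IDC-level) high availability requires. Nor can the default map keep project A's data on SSDs and project B's data on HDDs. To achieve either goal, export the CRUSH map, edit it by hand, and then import it to overwrite the existing CRUSH map.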
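The difference between a host failure domain and a rack failure domain can be illustrated with a toy placement function. Everything here is hypothetical: the rack and host layout, the SHA-256 based draw, and the equal weights are assumptions for the example, and the real `chooseleaf` step also handles retries and weights that this sketch ignores.

```python
import hashlib
import math

# Hypothetical layout for illustration: rack -> host -> OSD ids.
TOPOLOGY = {
    "rack1": {"node1": [0, 1, 2], "node2": [3, 4, 5]},
    "rack2": {"node3": [6, 7, 8], "node4": [9, 10, 11]},
    "rack3": {"node5": [12, 13, 14]},
}
OSD_RACK = {o: r for r, hosts in TOPOLOGY.items() for osds in hosts.values() for o in osds}

def draw(pg, name):
    """Deterministic pseudo-random straw for (pg, name); equal weights assumed."""
    digest = hashlib.sha256(f"{pg}:{name}".encode()).digest()
    return math.log((int.from_bytes(digest[:8], "big") + 1) / 2**64)

def place(pg, replicas, domain):
    """Pick `replicas` OSDs so that no two share the same failure domain."""
    if domain == "rack":
        groups = {r: [o for osds in hosts.values() for o in osds]
                  for r, hosts in TOPOLOGY.items()}
    elif domain == "host":
        groups = {h: osds for hosts in TOPOLOGY.values() for h, osds in hosts.items()}
    else:
        raise ValueError("domain must be 'host' or 'rack'")
    # First choose distinct failure domains, then one OSD (leaf) inside each.
    winners = sorted(groups, key=lambda g: draw(pg, g), reverse=True)[:replicas]
    return [max(groups[g], key=lambda o: draw(pg, f"osd.{o}")) for g in winners]

for pg in range(3):
    by_host, by_rack = place(pg, 3, "host"), place(pg, 3, "rack")
    print(pg, "host:", by_host, [OSD_RACK[o] for o in by_host],
          "| rack:", by_rack, [OSD_RACK[o] for o in by_rack])
```

With `domain="host"`, two replicas may share a rack; with `domain="rack"`, each replica always lands in a different rack. In a real map, the equivalent change is the type named in the rule's `step chooseleaf firstn 0 type ...` line.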

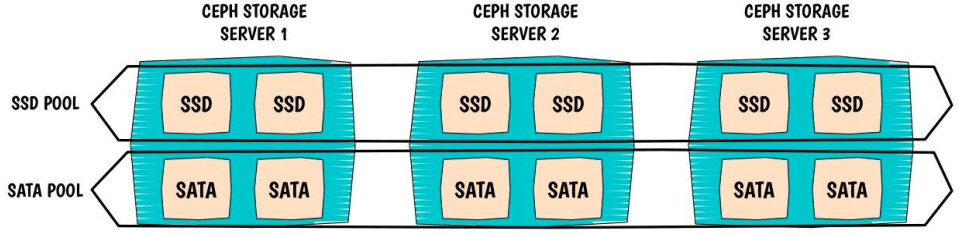

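5. Managing SSD and HDD Classes in the Cluster

This example simulates a cluster whose physical hosts have two kinds of disks, SSD and HDD, and manages them as separate classes.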

[root@ceph-deploy ~]# ceph osd getcrushmap -o /data/ceph/crushmap-v3

[root@ceph-deploy ~]# crushtool -d /data/ceph/crushmap-v3 > /data/ceph/crushmap-v3.txt

[root@ceph-deploy ~]# vi /data/ceph/crushmap-v3.txt

# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54
# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd
device 4 osd.4 class hdd
device 5 osd.5 class hdd
device 6 osd.6 class hdd
device 7 osd.7 class hdd
device 8 osd.8 class hdd
# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 zone
type 10 region
type 11 root
# buckets
host ceph-node1 {
    id -3 # do not change unnecessarily
    id -4 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 0.098
    item osd.1 weight 0.098
}
host ceph-node2 {
    id -5 # do not change unnecessarily
    id -6 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.098
    item osd.4 weight 0.098
}
host ceph-node3 {
    id -7 # do not change unnecessarily
    id -8 class hdd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.6 weight 0.098
    item osd.7 weight 0.098
}
root default {
    id -1 # do not change unnecessarily
    id -2 class hdd # do not change unnecessarily
    # weight 0.879
    alg straw2
    hash 0 # rjenkins1
    item ceph-node1 weight 0.293
    item ceph-node2 weight 0.293
    item ceph-node3 weight 0.293
}
#ssd node
host ceph-ssd-node1 {
    id -13 # do not change unnecessarily
    id -14 class ssd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 0.098
}
host ceph-ssd-node2 {
    id -15 # do not change unnecessarily
    id -16 class ssd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.5 weight 0.098
}
host ceph-ssd-node3 {
    id -17 # do not change unnecessarily
    id -18 class ssd # do not change unnecessarily
    # weight 0.293
    alg straw2
    hash 0 # rjenkins1
    item osd.8 weight 0.098
}
root ssd {
    id -11 # do not change unnecessarily
    id -12 class hdd # do not change unnecessarily # in a real environment, write the actual ssd class here
    # weight 0.879
    alg straw2
    hash 0 # rjenkins1
    item ceph-ssd-node1 weight 0.293
    item ceph-ssd-node2 weight 0.293
    item ceph-ssd-node3 weight 0.293
}
# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule ssd_rule {
    id 10
    type replicated
    min_size 1
    max_size 5
    step take ssd
    step chooseleaf firstn 0 type host
    step emit
}
rule erasure-code {
    id 1
    type erasure
    min_size 3
    max_size 4
    step set_chooseleaf_tries 5
    step set_choose_tries 100
    step take default
    step chooseleaf indep 0 type host
    step emit
}
# end crush map
[root@ceph-deploy ~]#

Compile the v3 text file into the v4 binary file and import it:

[root@ceph-deploy ~]# crushtool -c /data/ceph/crushmap-v3.txt -o /data/ceph/crushmap-v4
[root@ceph-deploy ~]# ceph osd setcrushmap -i /data/ceph/crushmap-v4
33
[root@ceph-deploy ~]#

[root@ceph-deploy ~]# ceph osd crush rule dump

[ { "rule_id": 0, "rule_name": "replicated_rule", "ruleset": 0, "type": 1, "min_size": 1, "max_size": 10, "steps": [ { "op": "take", "item": -1, "item_name": "default" }, { "op": "chooseleaf_firstn", "num": 0, "type": "host" }, { "op": "emit" } ] }, { "rule_id": 1, "rule_name": "erasure-code", "ruleset": 1, "type": 3, "min_size": 3, "max_size": 4, "steps": [ { "op": "set_chooseleaf_tries", "num": 5 }, { "op": "set_choose_tries", "num": 100 }, { "op": "take", "item": -1, "item_name": "default" }, { "op": "chooseleaf_indep", "num": 0, "type": "host" }, { "op": "emit" } ] }, { "rule_id": 10, "rule_name": "ssd_rule", "ruleset": 10, "type": 1, "min_size": 1, "max_size": 5, "steps": [ { "op": "take", "item": -11, "item_name": "ssd" }, { "op": "chooseleaf_firstn", "num": 0, "type": "host" }, { "op": "emit" } ] } ]

Check the cluster status:

[root@ceph-deploy ~]# ceph osd df
 ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL  %USE   VAR  PGS  STATUS
  2  hdd    0.09799   1.00000  100 GiB   63 MiB   10 MiB    6 KiB   53 MiB  100 GiB  0.06  0.09    0  up # after reclassification, PGs are removed from these OSDs automatically
  5  hdd    0.09799   1.00000  100 GiB   87 MiB   10 MiB    8 KiB   77 MiB  100 GiB  0.09  0.13    0  up
  8  hdd    0.09799   1.00000  100 GiB   78 MiB   10 MiB   13 KiB   68 MiB  100 GiB  0.08  0.11    0  up
  0  hdd    0.09799   1.00000  100 GiB  989 MiB  911 MiB   20 KiB   78 MiB   99 GiB  0.97  1.42  197  up
  1  hdd    0.09799   1.00000  100 GiB  1.0 GiB  932 MiB    7 KiB  111 MiB   99 GiB  1.02  1.50  204  up
  3  hdd    0.09799   1.00000  100 GiB  881 MiB  816 MiB   12 KiB   65 MiB   99 GiB  0.86  1.27  200  up
  4  hdd    0.09799   1.00000  100 GiB  1.1 GiB  1.0 GiB   13 KiB   78 MiB   99 GiB  1.08  1.59  201  up
  6  hdd    0.09799   1.00000  100 GiB  1.0 GiB  967 MiB    7 KiB   91 MiB   99 GiB  1.03  1.52  197  up
  7  hdd    0.09799   1.00000  100 GiB  949 MiB  876 MiB   13 KiB   73 MiB   99 GiB  0.93  1.37  204  up
TOTAL                          900 GiB  6.1 GiB  5.4 GiB  104 KiB  694 MiB  894 GiB  0.68
MIN/MAX VAR: 0.09/1.59 STDDEV: 0.43
[root@ceph-deploy ~]# ceph osd df tree
 ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL  %USE   VAR  PGS  STATUS  TYPE NAME
-11         0.87900         -  300 GiB  228 MiB   30 MiB   27 KiB  198 MiB  300 GiB  0.07  0.11    -          root ssd
-13         0.29300         -  100 GiB   63 MiB   10 MiB    6 KiB   53 MiB  100 GiB  0.06  0.09    -              host ceph-ssd-node1
  2  hdd    0.09799   1.00000  100 GiB   63 MiB   10 MiB    6 KiB   53 MiB  100 GiB  0.06  0.09    0  up                  osd.2
-15         0.29300         -  100 GiB   87 MiB   10 MiB    8 KiB   77 MiB  100 GiB  0.09  0.13    -              host ceph-ssd-node2
  5  hdd    0.09799   1.00000  100 GiB   87 MiB   10 MiB    8 KiB   77 MiB  100 GiB  0.09  0.13    0  up                  osd.5
-17         0.29300         -  100 GiB   78 MiB   10 MiB   13 KiB   68 MiB  100 GiB  0.08  0.11    -              host ceph-ssd-node3
  8  hdd    0.09799   1.00000  100 GiB   78 MiB   10 MiB   13 KiB   68 MiB  100 GiB  0.08  0.11    0  up                  osd.8
 -1         0.87900         -  600 GiB  5.9 GiB  5.4 GiB   72 KiB  500 MiB  594 GiB  0.98  1.45    -          root default
 -3         0.29300         -  200 GiB  2.0 GiB  1.8 GiB   27 KiB  189 MiB  198 GiB  0.99  1.46    -              host ceph-node1
  0  hdd    0.09799   1.00000  100 GiB  990 MiB  911 MiB   20 KiB   78 MiB   99 GiB  0.97  1.42  197  up                  osd.0
  1  hdd    0.09799   1.00000  100 GiB  1.0 GiB  932 MiB    7 KiB  111 MiB   99 GiB  1.02  1.50  204  up                  osd.1
 -5         0.29300         -  200 GiB  1.9 GiB  1.8 GiB   25 KiB  147 MiB  198 GiB  0.97  1.43    -              host ceph-node2
  3  hdd    0.09799   1.00000  100 GiB  885 MiB  816 MiB   12 KiB   69 MiB   99 GiB  0.86  1.27  200  up                  osd.3
  4  hdd    0.09799   1.00000  100 GiB  1.1 GiB  1.0 GiB   13 KiB   78 MiB   99 GiB  1.08  1.59  201  up                  osd.4
 -7         0.29300         -  200 GiB  2.0 GiB  1.8 GiB   20 KiB  163 MiB  198 GiB  0.98  1.44    -              host ceph-node3
  6  hdd    0.09799   1.00000  100 GiB  1.0 GiB  967 MiB    7 KiB   91 MiB   99 GiB  1.03  1.52  197  up                  osd.6
  7  hdd    0.09799   1.00000  100 GiB  949 MiB  876 MiB   13 KiB   73 MiB   99 GiB  0.93  1.36  204  up                  osd.7
TOTAL                          900 GiB  6.1 GiB  5.4 GiB  104 KiB  698 MiB  894 GiB  0.68
MIN/MAX VAR: 0.09/1.59 STDDEV: 0.43
[root@ceph-deploy ~]# ceph -s
  cluster:
    id:     8dc32c41-121c-49df-9554-dfb7deb8c975
    health: HEALTH_OK
  services:
    mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 23h)
    mgr: ceph-mgr1(active, since 23h), standbys: ceph-mgr2
    mds: 2/2 daemons up, 2 standby
    osd: 9 osds: 9 up (since 23h), 9 in (since 7d)
    rgw: 2 daemons active (2 hosts, 1 zones)
  data:
    volumes: 1/1 healthy
    pools:   12 pools, 401 pgs
    objects: 843 objects, 1.8 GiB
    usage:   6.1 GiB used, 894 GiB / 900 GiB avail
    pgs:     401 active+clean
[root@ceph-deploy ~]#

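Export the CRUSH map again to verify it (as long as the main body of the file is written correctly, Ceph fills in or corrects the secondary settings automatically):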

[root@ceph-deploy ~]# ceph osd getcrushmap -o /data/ceph/crushmap-v5
33
[root@ceph-deploy ~]# crushtool -d /data/ceph/crushmap-v5 > /data/ceph/crushmap-v5.txt
[root@ceph-deploy ~]#

[root@ceph-deploy ceph]# cat crushmap-v5.txt

# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54
# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd
device 4 osd.4 class hdd
device 5 osd.5 class hdd
device 6 osd.6 class hdd
device 7 osd.7 class hdd
device 8 osd.8 class hdd
# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 zone
type 10 region
type 11 root
# buckets
host ceph-node1 {
    id -3 # do not change unnecessarily
    id -4 class hdd # do not change unnecessarily
    id -21 class ssd # do not change unnecessarily
    # weight 0.196
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 0.098
    item osd.1 weight 0.098
}
host ceph-node2 {
    id -5 # do not change unnecessarily
    id -6 class hdd # do not change unnecessarily
    id -22 class ssd # do not change unnecessarily
    # weight 0.196
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.098
    item osd.4 weight 0.098
}
host ceph-node3 {
    id -7 # do not change unnecessarily
    id -8 class hdd # do not change unnecessarily
    id -23 class ssd # do not change unnecessarily
    # weight 0.196
    alg straw2
    hash 0 # rjenkins1
    item osd.6 weight 0.098
    item osd.7 weight 0.098
}
root default {
    id -1 # do not change unnecessarily
    id -2 class hdd # do not change unnecessarily
    id -24 class ssd # do not change unnecessarily
    # weight 0.879
    alg straw2
    hash 0 # rjenkins1
    item ceph-node1 weight 0.293
    item ceph-node2 weight 0.293
    item ceph-node3 weight 0.293
}
host ceph-ssd-node1 {
    id -13 # do not change unnecessarily
    id -9 class hdd # do not change unnecessarily
    id -14 class ssd # do not change unnecessarily
    # weight 0.098
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 0.098
}
host ceph-ssd-node2 {
    id -15 # do not change unnecessarily
    id -10 class hdd # do not change unnecessarily
    id -16 class ssd # do not change unnecessarily
    # weight 0.098
    alg straw2
    hash 0 # rjenkins1
    item osd.5 weight 0.098
}
host ceph-ssd-node3 {
    id -17 # do not change unnecessarily
    id -19 class hdd # do not change unnecessarily
    id -18 class ssd # do not change unnecessarily
    # weight 0.098
    alg straw2
    hash 0 # rjenkins1
    item osd.8 weight 0.098
}
root ssd {
    id -11 # do not change unnecessarily
    id -12 class hdd # do not change unnecessarily
    id -20 class ssd # do not change unnecessarily
    # weight 0.879
    alg straw2
    hash 0 # rjenkins1
    item ceph-ssd-node1 weight 0.293
    item ceph-ssd-node2 weight 0.293
    item ceph-ssd-node3 weight 0.293
}
# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule erasure-code {
    id 1
    type erasure
    min_size 3
    max_size 4
    step set_chooseleaf_tries 5
    step set_choose_tries 100
    step take default
    step chooseleaf indep 0 type host
    step emit
}
rule ssd_rule {
    id 10
    type replicated
    min_size 1
    max_size 5
    step take ssd
    step chooseleaf firstn 0 type host
    step emit
}
# end crush map

Test by creating a storage pool:

[root@ceph-deploy ~]# ceph osd pool create ssd-pool 32 32 ssd_rule
pool 'ssd-pool' created
[root@ceph-deploy ~]#
[root@ceph-deploy ~]# ceph osd pool ls
device_health_metrics
myrbd1
.rgw.root
default.rgw.log
default.rgw.control
default.rgw.meta
cephfs-metadata
cephfs-data
mypool
rbd-data1
default.rgw.buckets.index
default.rgw.buckets.data
ssd-pool
[root@ceph-deploy ~]#

Verify the PG placement of the pool:

[root@ceph-deploy ~]# ceph pg ls-by-pool ssd-pool | awk '{print $1,$2,$15}'
PG OBJECTS ACTING
16.0 0 [8,5,2]p8
16.1 0 [2,5,8]p2
16.2 0 [2,8,5]p2
16.3 0 [2,5,8]p2
16.4 0 [5,8,2]p5
16.5 0 [8,2,5]p8
16.6 0 [2,8,5]p2
16.7 0 [8,2,5]p8
16.8 0 [5,2,8]p5
16.9 0 [8,2,5]p8
16.a 0 [2,8,5]p2
16.b 0 [8,2,5]p8
16.c 0 [8,5,2]p8
16.d 0 [5,2,8]p5
16.e 0 [8,2,5]p8
16.f 0 [8,2,5]p8
16.10 0 [8,5,2]p8
16.11 0 [8,5,2]p8
16.12 0 [8,5,2]p8
16.13 0 [8,5,2]p8
16.14 0 [2,5,8]p2
16.15 0 [8,5,2]p8
16.16 0 [5,2,8]p5
16.17 0 [2,8,5]p2
16.18 0 [2,5,8]p2
16.19 0 [5,2,8]p5
16.1a 0 [2,8,5]p2
16.1b 0 [5,8,2]p5
16.1c 0 [2,8,5]p2
16.1d 0 [5,8,2]p5
16.1e 0 [2,5,8]p2
16.1f 0 [8,5,2]p8
* NOTE: afterwards
[root@ceph-deploy ~]#
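Checking by eye that every acting set contains only OSDs 2, 5, and 8 gets tedious for larger pools. The sketch below parses lines in the three-column format produced by the `awk` filter above and reports any PG that uses an OSD outside the expected set. The function name `acting_sets` and the shortened `sample` list are illustrative; in practice the lines would come from the command output.

```python
import re

LINE_RE = re.compile(r"^(\d+\.[0-9a-f]+)\s+\d+\s+\[([\d,]+)\]p\d+$")

def acting_sets(lines):
    """Parse 'PGID OBJECTS [a,b,c]pN' lines into {pgid: [osd ids]}."""
    result = {}
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match:  # header and NOTE lines do not match and are skipped
            result[match.group(1)] = [int(x) for x in match.group(2).split(",")]
    return result

# A few lines copied from the output above.
sample = ["PG OBJECTS ACTING", "16.0 0 [8,5,2]p8", "16.1 0 [2,5,8]p2",
          "16.1f 0 [8,5,2]p8", "* NOTE: afterwards"]
ssd_osds = {2, 5, 8}  # the OSDs placed under "root ssd" in this article
pgs = acting_sets(sample)
stray = {pg: osds for pg, osds in pgs.items() if not set(osds) <= ssd_osds}
print(len(pgs), "PGs parsed;", "all on the ssd root" if not stray else f"stray: {stray}")
```

An empty `stray` result confirms that `ssd_rule` confines the pool to the OSDs under the ssd root.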

SSD and HDD node classification in a real production environment:

# begin crush map tunable choose_local_tries 0 tunable choose_local_fallback_tries 0 tunable choose_total_tries 50 tunable chooseleaf_descend_once 1 tunable chooseleaf_vary_r 1 tunable chooseleaf_stable 1 tunable straw_calc_version 1 tunable allowed_bucket_algs 54 # devices device 0 osd.0 class hdd device 1 osd.1 class hdd device 2 osd.2 class hdd device 3 osd.3 class hdd device 4 osd.4 class hdd device 5 osd.5 class hdd device 6 osd.6 class hdd device 7 osd.7 class hdd device 8 osd.8 class hdd device 9 osd.9 class hdd device 10 osd.10 class hdd device 11 osd.11 class hdd device 12 osd.12 class hdd device 13 osd.13 class hdd device 14 osd.14 class hdd device 20 osd.20 class hdd device 21 osd.21 class hdd device 22 osd.22 class hdd device 23 osd.23 class hdd device 24 osd.24 class hdd device 25 osd.25 class hdd device 26 osd.26 class hdd device 27 osd.27 class hdd device 28 osd.28 class hdd device 29 osd.29 class hdd device 30 osd.30 class hdd device 31 osd.31 class hdd device 32 osd.32 class hdd device 33 osd.33 class hdd device 34 osd.34 class hdd device 40 osd.40 class hdd device 41 osd.41 class hdd device 42 osd.42 class hdd device 43 osd.43 class hdd device 44 osd.44 class hdd device 45 osd.45 class hdd device 46 osd.46 class hdd device 47 osd.47 class hdd device 48 osd.48 class hdd device 49 osd.49 class hdd device 50 osd.50 class hdd device 51 osd.51 class hdd device 52 osd.52 class hdd device 53 osd.53 class hdd device 54 osd.54 class hdd device 60 osd.60 class hdd device 61 osd.61 class hdd device 62 osd.62 class hdd device 63 osd.63 class hdd device 64 osd.64 class hdd device 65 osd.65 class hdd device 66 osd.66 class hdd device 67 osd.67 class hdd device 68 osd.68 class hdd device 69 osd.69 class hdd device 70 osd.70 class hdd device 71 osd.71 class hdd device 72 osd.72 class hdd device 73 osd.73 class hdd device 74 osd.74 class hdd device 80 osd.80 class hdd device 81 osd.81 class hdd device 82 osd.82 class hdd device 83 osd.83 class hdd device 84 osd.84 
class hdd device 85 osd.85 class hdd device 86 osd.86 class hdd device 87 osd.87 class hdd device 88 osd.88 class hdd device 89 osd.89 class hdd device 90 osd.90 class hdd device 91 osd.91 class hdd device 92 osd.92 class hdd device 93 osd.93 class hdd device 94 osd.94 class hdd device 100 osd.100 class hdd device 101 osd.101 class hdd device 102 osd.102 class hdd device 103 osd.103 class hdd device 104 osd.104 class hdd device 105 osd.105 class hdd device 106 osd.106 class hdd device 107 osd.107 class hdd device 108 osd.108 class hdd device 109 osd.109 class hdd device 110 osd.110 class hdd device 111 osd.111 class hdd device 112 osd.112 class hdd device 113 osd.113 class hdd device 114 osd.114 class hdd device 120 osd.120 class hdd device 121 osd.121 class hdd device 122 osd.122 class hdd device 123 osd.123 class hdd device 124 osd.124 class hdd device 125 osd.125 class hdd device 126 osd.126 class hdd device 127 osd.127 class hdd device 128 osd.128 class hdd device 129 osd.129 class hdd device 130 osd.130 class hdd device 131 osd.131 class hdd device 132 osd.132 class hdd device 133 osd.133 class hdd device 134 osd.134 class hdd device 140 osd.140 class hdd device 141 osd.141 class hdd device 142 osd.142 class hdd device 143 osd.143 class hdd device 144 osd.144 class hdd device 145 osd.145 class hdd device 146 osd.146 class hdd device 147 osd.147 class hdd device 148 osd.148 class hdd device 149 osd.149 class hdd device 150 osd.150 class hdd device 151 osd.151 class hdd device 152 osd.152 class hdd device 153 osd.153 class hdd device 154 osd.154 class hdd device 160 osd.160 class hdd device 161 osd.161 class hdd device 162 osd.162 class hdd device 163 osd.163 class hdd device 164 osd.164 class hdd device 165 osd.165 class hdd device 166 osd.166 class hdd device 167 osd.167 class hdd device 168 osd.168 class hdd device 169 osd.169 class hdd device 170 osd.170 class hdd device 171 osd.171 class hdd device 172 osd.172 class hdd device 173 osd.173 class hdd device 
174 osd.174 class hdd device 180 osd.180 class hdd device 181 osd.181 class hdd device 182 osd.182 class hdd device 183 osd.183 class hdd device 184 osd.184 class hdd device 185 osd.185 class hdd device 186 osd.186 class hdd device 187 osd.187 class hdd device 188 osd.188 class hdd device 189 osd.189 class hdd device 190 osd.190 class hdd device 191 osd.191 class hdd device 192 osd.192 class hdd device 193 osd.193 class hdd device 194 osd.194 class hdd device 200 osd.200 class hdd device 201 osd.201 class hdd device 202 osd.202 class hdd device 203 osd.203 class hdd device 204 osd.204 class hdd device 205 osd.205 class hdd device 206 osd.206 class hdd device 207 osd.207 class hdd device 208 osd.208 class hdd device 209 osd.209 class hdd device 210 osd.210 class hdd device 211 osd.211 class hdd device 212 osd.212 class hdd device 213 osd.213 class hdd device 214 osd.214 class hdd device 220 osd.220 class hdd device 221 osd.221 class hdd device 222 osd.222 class hdd device 223 osd.223 class hdd device 224 osd.224 class hdd device 225 osd.225 class hdd device 226 osd.226 class hdd device 227 osd.227 class hdd device 228 osd.228 class hdd device 229 osd.229 class hdd device 230 osd.230 class hdd device 231 osd.231 class hdd device 232 osd.232 class hdd device 233 osd.233 class hdd device 234 osd.234 class hdd device 240 osd.240 class hdd device 241 osd.241 class hdd device 242 osd.242 class hdd device 243 osd.243 class hdd device 244 osd.244 class hdd device 245 osd.245 class hdd device 246 osd.246 class hdd device 247 osd.247 class hdd device 248 osd.248 class hdd device 249 osd.249 class hdd device 250 osd.250 class hdd device 251 osd.251 class hdd device 252 osd.252 class hdd device 253 osd.253 class hdd device 254 osd.254 class hdd device 260 osd.260 class hdd device 261 osd.261 class hdd device 262 osd.262 class hdd device 263 osd.263 class hdd device 264 osd.264 class hdd device 265 osd.265 class hdd device 266 osd.266 class hdd device 267 osd.267 class hdd 
device 268 osd.268 class hdd
device 269 osd.269 class hdd
device 270 osd.270 class hdd
device 271 osd.271 class hdd
device 272 osd.272 class hdd
device 273 osd.273 class hdd
device 274 osd.274 class hdd
device 280 osd.280 class hdd
device 281 osd.281 class hdd
device 282 osd.282 class hdd
device 283 osd.283 class hdd
device 284 osd.284 class hdd
device 285 osd.285 class hdd
device 286 osd.286 class hdd
device 287 osd.287 class hdd
device 288 osd.288 class hdd
device 289 osd.289 class hdd
device 290 osd.290 class hdd
device 291 osd.291 class hdd
device 292 osd.292 class hdd
device 293 osd.293 class hdd
device 294 osd.294 class hdd
device 300 osd.300 class hdd
device 301 osd.301 class hdd
device 302 osd.302 class hdd
device 303 osd.303 class hdd
device 304 osd.304 class hdd
device 305 osd.305 class hdd
device 306 osd.306 class hdd
device 307 osd.307 class hdd
device 308 osd.308 class hdd
device 309 osd.309 class hdd
device 310 osd.310 class hdd
device 311 osd.311 class hdd
device 312 osd.312 class hdd
device 313 osd.313 class hdd
device 314 osd.314 class hdd
device 320 osd.320 class ssd
device 321 osd.321 class ssd
device 322 osd.322 class ssd
device 323 osd.323 class ssd
device 324 osd.324 class ssd
device 325 osd.325 class ssd
device 326 osd.326 class ssd
device 327 osd.327 class ssd
device 328 osd.328 class ssd
device 329 osd.329 class ssd
device 330 osd.330 class ssd
device 331 osd.331 class ssd
device 332 osd.332 class ssd
device 333 osd.333 class ssd
device 334 osd.334 class ssd
device 335 osd.335 class ssd
device 336 osd.336 class ssd
device 337 osd.337 class ssd
device 338 osd.338 class ssd
device 339 osd.339 class ssd
device 340 osd.340 class ssd
device 341 osd.341 class ssd
device 342 osd.342 class ssd
device 343 osd.343 class ssd
device 344 osd.344 class ssd
device 345 osd.345 class ssd
device 346 osd.346 class ssd
device 347 osd.347 class ssd
device 348 osd.348 class ssd
device 349 osd.349 class ssd
device 350 osd.350 class ssd
device 351 osd.351 class ssd
device 352 osd.352 class ssd
device 353 osd.353 class ssd
device 354 osd.354 class ssd
device 355 osd.355 class ssd
device 356 osd.356 class ssd
device 357 osd.357 class ssd
device 358 osd.358 class ssd
device 359 osd.359 class ssd
device 360 osd.360 class ssd
device 361 osd.361 class ssd
device 362 osd.362 class ssd
device 363 osd.363 class ssd
device 364 osd.364 class ssd
device 365 osd.365 class ssd
device 366 osd.366 class ssd
device 367 osd.367 class ssd
device 368 osd.368 class ssd
device 369 osd.369 class ssd
device 370 osd.370 class ssd
device 371 osd.371 class ssd
device 372 osd.372 class ssd
device 373 osd.373 class ssd
device 374 osd.374 class ssd
device 375 osd.375 class ssd
device 376 osd.376 class ssd
device 377 osd.377 class ssd
device 378 osd.378 class ssd
device 379 osd.379 class ssd
device 380 osd.380 class ssd
device 381 osd.381 class ssd
device 382 osd.382 class ssd
device 383 osd.383 class ssd
device 384 osd.384 class ssd
device 385 osd.385 class ssd
device 386 osd.386 class ssd
device 387 osd.387 class ssd
device 388 osd.388 class ssd
device 389 osd.389 class ssd
device 390 osd.390 class ssd
device 391 osd.391 class ssd
device 392 osd.392 class ssd
device 393 osd.393 class ssd
device 394 osd.394 class ssd
device 395 osd.395 class ssd
device 396 osd.396 class ssd
device 397 osd.397 class ssd
device 398 osd.398 class ssd
device 399 osd.399 class ssd
device 400 osd.400 class ssd
device 401 osd.401 class ssd
device 402 osd.402 class ssd
device 403 osd.403 class ssd
device 404 osd.404 class ssd
device 405 osd.405 class ssd
device 406 osd.406 class ssd
device 407 osd.407 class ssd
device 408 osd.408 class ssd
device 409 osd.409 class ssd
device 410 osd.410 class ssd
device 411 osd.411 class ssd
device 412 osd.412 class ssd
device 413 osd.413 class ssd
device 414 osd.414 class ssd
device 415 osd.415 class ssd
device 416 osd.416 class ssd
device 417 osd.417 class ssd
device 418 osd.418 class ssd
device 419 osd.419 class ssd
device 420 osd.420 class ssd
device 421 osd.421 class ssd
device 422 osd.422 class ssd
device 423 osd.423 class ssd
device 424 osd.424 class ssd
device 425 osd.425 class ssd
device 426 osd.426 class ssd
device 427 osd.427 class ssd
device 428 osd.428 class ssd
device 429 osd.429 class ssd
device 430 osd.430 class ssd
device 431 osd.431 class ssd
device 432 osd.432 class ssd
device 433 osd.433 class ssd
device 434 osd.434 class ssd
device 435 osd.435 class ssd
device 436 osd.436 class ssd
device 437 osd.437 class ssd
device 438 osd.438 class ssd
device 439 osd.439 class ssd

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 region
type 10 root

# buckets
host dt-1ap213-proxmox-01 {
    id -3    # do not change unnecessarily
    id -4 class hdd    # do not change unnecessarily
    id -35 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.0 weight 2.183
    item osd.1 weight 2.183
    item osd.2 weight 2.183
    item osd.3 weight 2.183
    item osd.4 weight 2.183
    item osd.5 weight 2.183
    item osd.6 weight 2.183
    item osd.7 weight 2.183
    item osd.8 weight 2.183
    item osd.9 weight 2.183
    item osd.10 weight 2.183
    item osd.11 weight 2.183
    item osd.12 weight 2.183
    item osd.13 weight 2.183
    item osd.14 weight 2.183
}
host dt-1ap213-proxmox-02 {
    id -5    # do not change unnecessarily
    id -6 class hdd    # do not change unnecessarily
    id -36 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.20 weight 2.183
    item osd.21 weight 2.183
    item osd.22 weight 2.183
    item osd.23 weight 2.183
    item osd.24 weight 2.183
    item osd.25 weight 2.183
    item osd.26 weight 2.183
    item osd.27 weight 2.183
    item osd.28 weight 2.183
    item osd.29 weight 2.183
    item osd.30 weight 2.183
    item osd.31 weight 2.183
    item osd.32 weight 2.183
    item osd.33 weight 2.183
    item osd.34 weight 2.183
}
host dt-1ap213-proxmox-03 {
    id -7    # do not change unnecessarily
    id -8 class hdd    # do not change unnecessarily
    id -37 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.40 weight 2.183
    item osd.41 weight 2.183
    item osd.42 weight 2.183
    item osd.43 weight 2.183
    item osd.44 weight 2.183
    item osd.45 weight 2.183
    item osd.46 weight 2.183
    item osd.47 weight 2.183
    item osd.48 weight 2.183
    item osd.49 weight 2.183
    item osd.50 weight 2.183
    item osd.51 weight 2.183
    item osd.52 weight 2.183
    item osd.53 weight 2.183
    item osd.54 weight 2.183
}
host dt-1ap213-proxmox-04 {
    id -9    # do not change unnecessarily
    id -10 class hdd    # do not change unnecessarily
    id -38 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.60 weight 2.183
    item osd.61 weight 2.183
    item osd.62 weight 2.183
    item osd.63 weight 2.183
    item osd.64 weight 2.183
    item osd.65 weight 2.183
    item osd.66 weight 2.183
    item osd.67 weight 2.183
    item osd.68 weight 2.183
    item osd.69 weight 2.183
    item osd.70 weight 2.183
    item osd.71 weight 2.183
    item osd.72 weight 2.183
    item osd.73 weight 2.183
    item osd.74 weight 2.183
}
host dt-1ap213-proxmox-05 {
    id -11    # do not change unnecessarily
    id -12 class hdd    # do not change unnecessarily
    id -39 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.80 weight 2.183
    item osd.81 weight 2.183
    item osd.82 weight 2.183
    item osd.83 weight 2.183
    item osd.84 weight 2.183
    item osd.85 weight 2.183
    item osd.86 weight 2.183
    item osd.87 weight 2.183
    item osd.88 weight 2.183
    item osd.89 weight 2.183
    item osd.90 weight 2.183
    item osd.91 weight 2.183
    item osd.92 weight 2.183
    item osd.93 weight 2.183
    item osd.94 weight 2.183
}
host dt-1ap213-proxmox-06 {
    id -13    # do not change unnecessarily
    id -14 class hdd    # do not change unnecessarily
    id -40 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.100 weight 2.183
    item osd.101 weight 2.183
    item osd.102 weight 2.183
    item osd.103 weight 2.183
    item osd.104 weight 2.183
    item osd.105 weight 2.183
    item osd.106 weight 2.183
    item osd.107 weight 2.183
    item osd.108 weight 2.183
    item osd.109 weight 2.183
    item osd.110 weight 2.183
    item osd.111 weight 2.183
    item osd.112 weight 2.183
    item osd.113 weight 2.183
    item osd.114 weight 2.183
}
host dt-1ap213-proxmox-07 {
    id -15    # do not change unnecessarily
    id -16 class hdd    # do not change unnecessarily
    id -41 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.120 weight 2.183
    item osd.121 weight 2.183
    item osd.122 weight 2.183
    item osd.123 weight 2.183
    item osd.124 weight 2.183
    item osd.125 weight 2.183
    item osd.126 weight 2.183
    item osd.127 weight 2.183
    item osd.128 weight 2.183
    item osd.129 weight 2.183
    item osd.130 weight 2.183
    item osd.131 weight 2.183
    item osd.132 weight 2.183
    item osd.133 weight 2.183
    item osd.134 weight 2.183
}
host dt-1ap213-proxmox-08 {
    id -17    # do not change unnecessarily
    id -18 class hdd    # do not change unnecessarily
    id -42 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.140 weight 2.183
    item osd.141 weight 2.183
    item osd.142 weight 2.183
    item osd.143 weight 2.183
    item osd.144 weight 2.183
    item osd.145 weight 2.183
    item osd.146 weight 2.183
    item osd.147 weight 2.183
    item osd.148 weight 2.183
    item osd.149 weight 2.183
    item osd.150 weight 2.183
    item osd.151 weight 2.183
    item osd.152 weight 2.183
    item osd.153 weight 2.183
    item osd.154 weight 2.183
}
host dt-1ap214-proxmox-01 {
    id -19    # do not change unnecessarily
    id -20 class hdd    # do not change unnecessarily
    id -43 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.160 weight 2.183
    item osd.161 weight 2.183
    item osd.162 weight 2.183
    item osd.163 weight 2.183
    item osd.164 weight 2.183
    item osd.165 weight 2.183
    item osd.166 weight 2.183
    item osd.167 weight 2.183
    item osd.168 weight 2.183
    item osd.169 weight 2.183
    item osd.170 weight 2.183
    item osd.171 weight 2.183
    item osd.172 weight 2.183
    item osd.173 weight 2.183
    item osd.174 weight 2.183
}
host dt-1ap214-proxmox-02 {
    id -21    # do not change unnecessarily
    id -22 class hdd    # do not change unnecessarily
    id -44 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.180 weight 2.183
    item osd.181 weight 2.183
    item osd.182 weight 2.183
    item osd.183 weight 2.183
    item osd.184 weight 2.183
    item osd.185 weight 2.183
    item osd.186 weight 2.183
    item osd.187 weight 2.183
    item osd.188 weight 2.183
    item osd.189 weight 2.183
    item osd.190 weight 2.183
    item osd.191 weight 2.183
    item osd.192 weight 2.183
    item osd.193 weight 2.183
    item osd.194 weight 2.183
}
host dt-1ap214-proxmox-03 {
    id -23    # do not change unnecessarily
    id -24 class hdd    # do not change unnecessarily
    id -45 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.200 weight 2.183
    item osd.201 weight 2.183
    item osd.202 weight 2.183
    item osd.203 weight 2.183
    item osd.204 weight 2.183
    item osd.205 weight 2.183
    item osd.206 weight 2.183
    item osd.207 weight 2.183
    item osd.208 weight 2.183
    item osd.209 weight 2.183
    item osd.210 weight 2.183
    item osd.211 weight 2.183
    item osd.212 weight 2.183
    item osd.213 weight 2.183
    item osd.214 weight 2.183
}
host dt-1ap214-proxmox-04 {
    id -25    # do not change unnecessarily
    id -26 class hdd    # do not change unnecessarily
    id -46 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.220 weight 2.183
    item osd.221 weight 2.183
    item osd.222 weight 2.183
    item osd.223 weight 2.183
    item osd.224 weight 2.183
    item osd.225 weight 2.183
    item osd.226 weight 2.183
    item osd.227 weight 2.183
    item osd.228 weight 2.183
    item osd.229 weight 2.183
    item osd.230 weight 2.183
    item osd.231 weight 2.183
    item osd.232 weight 2.183
    item osd.233 weight 2.183
    item osd.234 weight 2.183
}
host dt-1ap214-proxmox-05 {
    id -27    # do not change unnecessarily
    id -28 class hdd    # do not change unnecessarily
    id -47 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.240 weight 2.183
    item osd.241 weight 2.183
    item osd.242 weight 2.183
    item osd.243 weight 2.183
    item osd.244 weight 2.183
    item osd.245 weight 2.183
    item osd.246 weight 2.183
    item osd.247 weight 2.183
    item osd.248 weight 2.183
    item osd.249 weight 2.183
    item osd.250 weight 2.183
    item osd.251 weight 2.183
    item osd.252 weight 2.183
    item osd.253 weight 2.183
    item osd.254 weight 2.183
}
host dt-1ap214-proxmox-06 {
    id -29    # do not change unnecessarily
    id -30 class hdd    # do not change unnecessarily
    id -48 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.260 weight 2.183
    item osd.261 weight 2.183
    item osd.262 weight 2.183
    item osd.263 weight 2.183
    item osd.264 weight 2.183
    item osd.265 weight 2.183
    item osd.266 weight 2.183
    item osd.267 weight 2.183
    item osd.268 weight 2.183
    item osd.269 weight 2.183
    item osd.270 weight 2.183
    item osd.271 weight 2.183
    item osd.272 weight 2.183
    item osd.273 weight 2.183
    item osd.274 weight 2.183
}
host dt-1ap214-proxmox-07 {
    id -31    # do not change unnecessarily
    id -32 class hdd    # do not change unnecessarily
    id -49 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.280 weight 2.183
    item osd.281 weight 2.183
    item osd.282 weight 2.183
    item osd.283 weight 2.183
    item osd.284 weight 2.183
    item osd.285 weight 2.183
    item osd.286 weight 2.183
    item osd.287 weight 2.183
    item osd.288 weight 2.183
    item osd.289 weight 2.183
    item osd.290 weight 2.183
    item osd.291 weight 2.183
    item osd.292 weight 2.183
    item osd.293 weight 2.183
    item osd.294 weight 2.183
}
host dt-1ap214-proxmox-08 {
    id -33    # do not change unnecessarily
    id -34 class hdd    # do not change unnecessarily
    id -50 class ssd    # do not change unnecessarily
    # weight 32.745
    alg straw2
    hash 0    # rjenkins1
    item osd.300 weight 2.183
    item osd.301 weight 2.183
    item osd.302 weight 2.183
    item osd.303 weight 2.183
    item osd.304 weight 2.183
    item osd.305 weight 2.183
    item osd.306 weight 2.183
    item osd.307 weight 2.183
    item osd.308 weight 2.183
    item osd.309 weight 2.183
    item osd.310 weight 2.183
    item osd.311 weight 2.183
    item osd.312 weight 2.183
    item osd.313 weight 2.183
    item osd.314 weight 2.183
}
root default {
    id -1    # do not change unnecessarily
    id -2 class hdd    # do not change unnecessarily
    id -51 class ssd    # do not change unnecessarily
    # weight 523.920
    alg straw2
    hash 0    # rjenkins1
    item dt-1ap213-proxmox-01 weight 32.745
    item dt-1ap213-proxmox-02 weight 32.745
    item dt-1ap213-proxmox-03 weight 32.745
    item dt-1ap213-proxmox-04 weight 32.745
    item dt-1ap213-proxmox-05 weight 32.745
    item dt-1ap213-proxmox-06 weight 32.745
    item dt-1ap213-proxmox-07 weight 32.745
    item dt-1ap213-proxmox-08 weight 32.745
    item dt-1ap214-proxmox-01 weight 32.745
    item dt-1ap214-proxmox-02 weight 32.745
    item dt-1ap214-proxmox-03 weight 32.745
    item dt-1ap214-proxmox-04 weight 32.745
    item dt-1ap214-proxmox-05 weight 32.745
    item dt-1ap214-proxmox-06 weight 32.745
    item dt-1ap214-proxmox-07 weight 32.745
    item dt-1ap214-proxmox-08 weight 32.745
}
host dt-1ap215-proxmox-01 {
    id -52    # do not change unnecessarily
    id -53 class hdd    # do not change unnecessarily
    id -54 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.320 weight 0.873
    item osd.321 weight 0.873
    item osd.322 weight 0.873
    item osd.323 weight 0.873
    item osd.324 weight 0.873
    item osd.325 weight 0.873
    item osd.326 weight 0.873
    item osd.327 weight 0.873
    item osd.328 weight 0.873
    item osd.329 weight 0.873
    item osd.330 weight 0.873
    item osd.331 weight 0.873
}
host dt-1ap215-proxmox-02 {
    id -55    # do not change unnecessarily
    id -56 class hdd    # do not change unnecessarily
    id -57 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.332 weight 0.873
    item osd.333 weight 0.873
    item osd.334 weight 0.873
    item osd.335 weight 0.873
    item osd.336 weight 0.873
    item osd.337 weight 0.873
    item osd.338 weight 0.873
    item osd.339 weight 0.873
    item osd.340 weight 0.873
    item osd.341 weight 0.873
    item osd.342 weight 0.873
    item osd.343 weight 0.873
}
host dt-1ap215-proxmox-03 {
    id -58    # do not change unnecessarily
    id -59 class hdd    # do not change unnecessarily
    id -60 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.344 weight 0.873
    item osd.345 weight 0.873
    item osd.346 weight 0.873
    item osd.347 weight 0.873
    item osd.348 weight 0.873
    item osd.349 weight 0.873
    item osd.350 weight 0.873
    item osd.351 weight 0.873
    item osd.352 weight 0.873
    item osd.353 weight 0.873
    item osd.354 weight 0.873
    item osd.355 weight 0.873
}
host dt-1ap215-proxmox-04 {
    id -61    # do not change unnecessarily
    id -62 class hdd    # do not change unnecessarily
    id -63 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.356 weight 0.873
    item osd.357 weight 0.873
    item osd.358 weight 0.873
    item osd.359 weight 0.873
    item osd.360 weight 0.873
    item osd.361 weight 0.873
    item osd.362 weight 0.873
    item osd.363 weight 0.873
    item osd.364 weight 0.873
    item osd.365 weight 0.873
    item osd.366 weight 0.873
    item osd.367 weight 0.873
}
host dt-1ap215-proxmox-05 {
    id -64    # do not change unnecessarily
    id -65 class hdd    # do not change unnecessarily
    id -66 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.368 weight 0.873
    item osd.369 weight 0.873
    item osd.370 weight 0.873
    item osd.371 weight 0.873
    item osd.373 weight 0.873
    item osd.372 weight 0.873
    item osd.374 weight 0.873
    item osd.375 weight 0.873
    item osd.376 weight 0.873
    item osd.377 weight 0.873
    item osd.378 weight 0.873
    item osd.379 weight 0.873
}
host dt-1ap215-proxmox-06 {
    id -67    # do not change unnecessarily
    id -68 class hdd    # do not change unnecessarily
    id -69 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.380 weight 0.873
    item osd.381 weight 0.873
    item osd.382 weight 0.873
    item osd.383 weight 0.873
    item osd.384 weight 0.873
    item osd.385 weight 0.873
    item osd.386 weight 0.873
    item osd.387 weight 0.873
    item osd.388 weight 0.873
    item osd.389 weight 0.873
    item osd.390 weight 0.873
    item osd.391 weight 0.873
}
host dt-1ap215-proxmox-07 {
    id -70    # do not change unnecessarily
    id -71 class hdd    # do not change unnecessarily
    id -72 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.392 weight 0.873
    item osd.393 weight 0.873
    item osd.394 weight 0.873
    item osd.395 weight 0.873
    item osd.396 weight 0.873
    item osd.397 weight 0.873
    item osd.398 weight 0.873
    item osd.399 weight 0.873
    item osd.400 weight 0.873
    item osd.401 weight 0.873
    item osd.402 weight 0.873
    item osd.403 weight 0.873
}
host dt-1ap215-proxmox-08 {
    id -73    # do not change unnecessarily
    id -74 class hdd    # do not change unnecessarily
    id -75 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.404 weight 0.873
    item osd.405 weight 0.873
    item osd.406 weight 0.873
    item osd.407 weight 0.873
    item osd.408 weight 0.873
    item osd.409 weight 0.873
    item osd.410 weight 0.873
    item osd.411 weight 0.873
    item osd.412 weight 0.873
    item osd.413 weight 0.873
    item osd.414 weight 0.873
    item osd.415 weight 0.873
}
host dt-1ap216-proxmox-05 {
    id -76    # do not change unnecessarily
    id -77 class hdd    # do not change unnecessarily
    id -78 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.416 weight 0.873
    item osd.417 weight 0.873
    item osd.418 weight 0.873
    item osd.419 weight 0.873
    item osd.420 weight 0.873
    item osd.421 weight 0.873
    item osd.422 weight 0.873
    item osd.423 weight 0.873
    item osd.424 weight 0.873
    item osd.425 weight 0.873
    item osd.426 weight 0.873
    item osd.427 weight 0.873
}
host dt-1ap216-proxmox-06 {
    id -79    # do not change unnecessarily
    id -80 class hdd    # do not change unnecessarily
    id -81 class ssd    # do not change unnecessarily
    # weight 10.476
    alg straw2
    hash 0    # rjenkins1
    item osd.428 weight 0.873
    item osd.429 weight 0.873
    item osd.430 weight 0.873
    item osd.431 weight 0.873
    item osd.432 weight 0.873
    item osd.433 weight 0.873
    item osd.434 weight 0.873
    item osd.435 weight 0.873
    item osd.436 weight 0.873
    item osd.437 weight 0.873
    item osd.438 weight 0.873
    item osd.439 weight 0.873
}
root ssd {
    id -82    # do not change unnecessarily
    id -83 class hdd    # do not change unnecessarily
    id -84 class ssd    # do not change unnecessarily
    # weight 104.780
    alg straw2
    hash 0    # rjenkins1
    item dt-1ap215-proxmox-01 weight 10.478
    item dt-1ap215-proxmox-02 weight 10.478
    item dt-1ap215-proxmox-03 weight 10.478
    item dt-1ap215-proxmox-04 weight 10.478
    item dt-1ap215-proxmox-05 weight 10.478
    item dt-1ap215-proxmox-06 weight 10.478
    item dt-1ap215-proxmox-07 weight 10.478
    item dt-1ap215-proxmox-08 weight 10.478
    item dt-1ap216-proxmox-05 weight 10.478
    item dt-1ap216-proxmox-06 weight 10.478
}

# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule ssd_replicated_rule {
    id 1
    type replicated
    min_size 1
    max_size 10
    step take ssd
    step chooseleaf firstn 0 type host
    step emit
}
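
In the map above, each bucket carries a `# weight` comment that should equal the sum of its `item` weights, for example 12 × 0.873 = 10.476 for an SSD host and 10 × 10.478 = 104.780 for `root ssd`. When editing a decompiled map by hand, a quick consistency check can catch a mistyped weight before recompiling. The following is only a minimal sketch: the helper names `parse_buckets` and `check_weights`, the tolerance value, and the abbreviated `sample` text are assumptions made for illustration and are not part of Ceph or `crushtool`.

```python
import re

def parse_buckets(text):
    """Return {bucket_name: (declared_weight, [item_weights])} from decompiled CRUSH text."""
    buckets = {}
    # Matches "<type> <name> { ... }"; bucket bodies contain no nested braces.
    pattern = re.compile(r"(\w+)\s+([\w.-]+)\s*\{(.*?)\}", re.S)
    for _type, name, body in pattern.findall(text):
        declared = re.search(r"#\s*weight\s+([\d.]+)", body)
        items = [float(w) for w in re.findall(r"item\s+\S+\s+weight\s+([\d.]+)", body)]
        buckets[name] = (float(declared.group(1)) if declared else None, items)
    return buckets

def check_weights(buckets, tolerance=0.01):
    """Map each bucket name to True if its item weights sum to the declared weight."""
    report = {}
    for name, (declared, items) in buckets.items():
        if declared is None:  # e.g. rule blocks have no "# weight" comment
            continue
        report[name] = abs(sum(items) - declared) <= tolerance
    return report

# Abbreviated sample rebuilt from two buckets of the map above (hypothetical excerpt).
osd_items = "\n".join(f"    item osd.{i} weight 0.873" for i in range(320, 332))
host_names = [f"dt-1ap215-proxmox-{n:02d}" for n in range(1, 9)]
host_names += ["dt-1ap216-proxmox-05", "dt-1ap216-proxmox-06"]
host_items = "\n".join(f"    item {h} weight 10.478" for h in host_names)
sample = (
    "host dt-1ap215-proxmox-01 {\n    id -52\n    # weight 10.476\n"
    f"    alg straw2\n    hash 0\n{osd_items}\n}}\n"
    "root ssd {\n    id -82\n    # weight 104.780\n"
    f"    alg straw2\n    hash 0\n{host_items}\n}}\n"
)

buckets = parse_buckets(sample)
report = check_weights(buckets)
print(report)
```

Note that the host bucket declares 10.476 while `root ssd` lists the same host at 10.478. The decompiled text shows weights to three decimal places, so a small gap like this is presumably a rounding effect rather than an error, which is why the sketch compares within a tolerance instead of testing exact equality.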
