Using Ceph: the RadosGW Object Storage Gateway

1. Introduction to the RadosGW Object Storage Gateway

http://docs.ceph.org.cn/radosgw/

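Characteristics of object storage:

- Data is not placed in a directory hierarchy; it lives at a single level in a flat address space.

- Applications identify each individual data object by a unique address.

- Each object can carry metadata that helps with retrieval.

- Access happens at the application level (not the user level) through a RESTful API.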

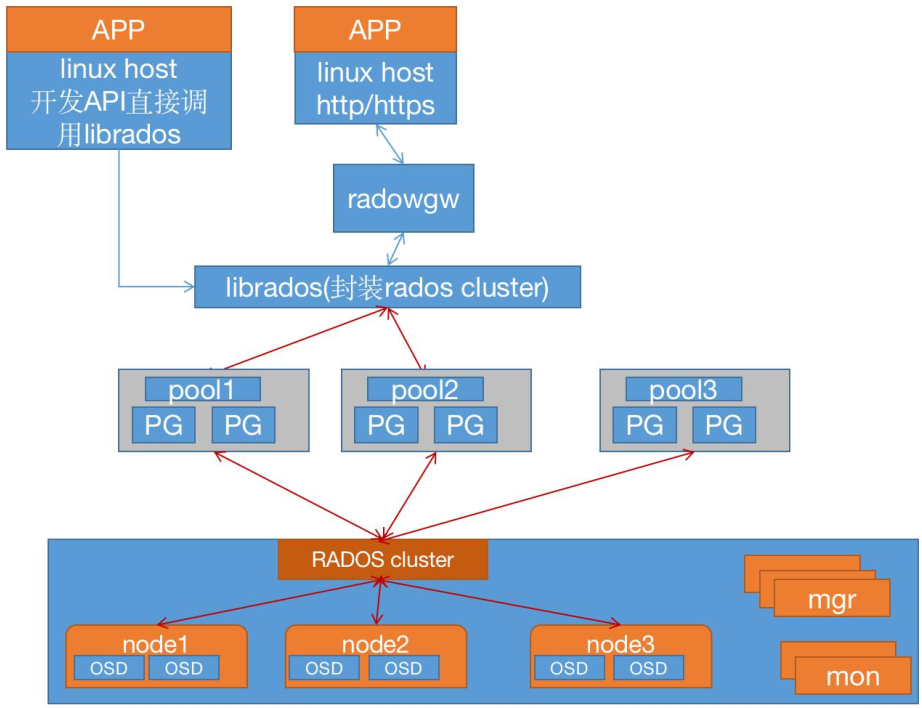

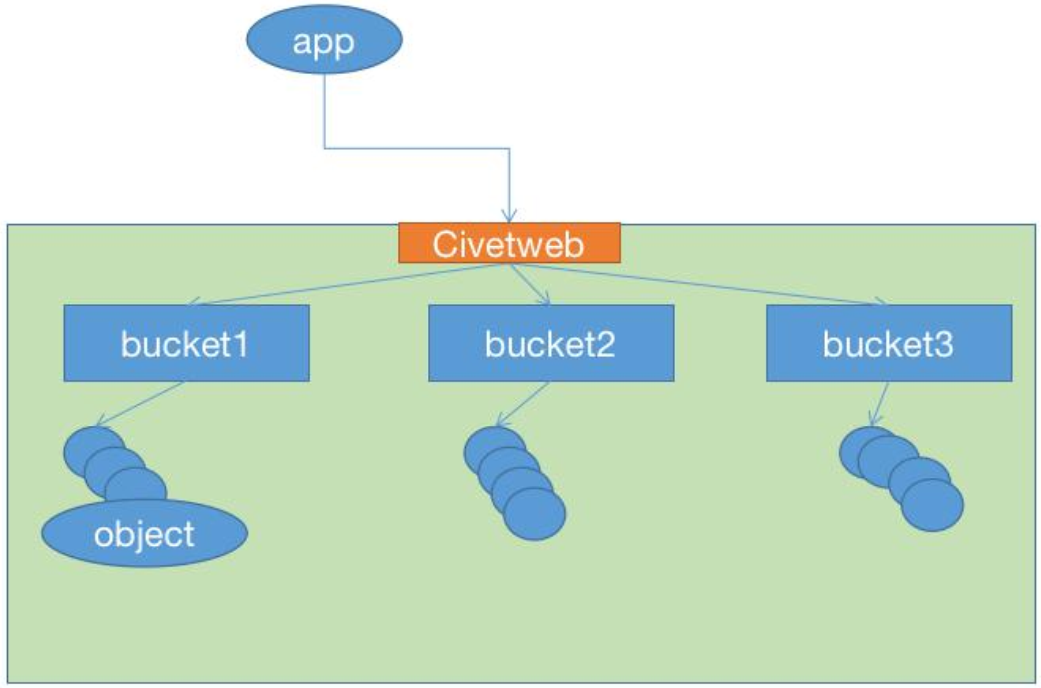

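RadosGW is one way of providing object storage (OSS, Object Storage Service) access. The RADOS gateway, also called the Ceph Object Gateway, RadosGW, or RGW, is a service that lets clients reach a Ceph cluster through standard object storage APIs; it supports the AWS S3 and Swift APIs. Since Ceph 0.80 (Firefly) it has used the embedded Civetweb web server (https://github.com/civetweb/civetweb) to answer API requests (recent Ceph releases use the Beast frontend instead). Clients talk to RGW over http/https through a RESTful API, and RGW in turn talks to the Ceph cluster through librados. An RGW client authenticates as an RGW user through the S3 or Swift API, and the gateway then authenticates to Ceph storage on the user's behalf using cephx.

S3 was launched by Amazon in 2006; the name stands for Simple Storage Service. S3 defined object storage and is its de facto standard. In a sense, S3 is object storage and object storage is S3: it dominates the market, and later object stores largely imitate it.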

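RadosGW storage characteristics

- Data is stored as objects through the object storage gateway; besides the data itself, each object also carries its own metadata.

- Objects are retrieved by Object ID. They cannot be accessed by mounting a file system and using a path plus file name; access is only possible through the API or through third-party clients (which are themselves wrappers around the API).

- Objects are not stored in a vertical directory tree but in a flat namespace. Amazon S3 calls this flat namespace a bucket, while Swift calls it a container.

- Neither buckets nor containers can be nested (a bucket cannot contain another bucket).

- A bucket can only be accessed after authorization; one account can be authorized on multiple buckets, with different permissions on each.

- It scales out easily and retrieves data quickly.

- Clients cannot mount it, and must specify the object name on every access.

- It is not well suited to workloads that modify or delete files very frequently.

Ceph uses buckets as the storage space that holds object data and isolates users from one another. Data is stored in buckets and permissions are granted per bucket, so a user can be given different permissions on different buckets to implement access control.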

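Bucket properties

- A bucket is the container that stores objects, and every object must belong to a bucket. Bucket attributes such as region, access permissions, and lifecycle can be set and modified; they apply directly to all objects in the bucket, so different management policies can be implemented by creating different buckets.

- The inside of a bucket is flat. There is no concept of file system directories; every object belongs directly to its bucket.

- Each user can own multiple buckets.

- A bucket name must be globally unique within the object storage service and cannot be changed after creation.

- There is no limit on the number of objects in a bucket.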
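Because a bucket is flat, the "folders" shown by S3 clients are only a listing convention based on key prefixes and a delimiter. The following is a minimal Python sketch of that idea; `list_level` and the sample keys are hypothetical names made up for illustration, not part of Ceph or any S3 SDK.

```python
def list_level(keys, prefix="", delimiter="/"):
    """Emulate a prefix/delimiter listing over a flat set of object keys.

    Returns (objects, common_prefixes) found directly under `prefix`.
    """
    objects, prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # The key continues past a delimiter: report only the "folder".
            prefixes.add(prefix + head + delimiter)
        else:
            objects.append(key)
    return objects, sorted(prefixes)


# A flat bucket: every object is a direct member, "/" is just a character.
bucket = {
    "index.html": b"",
    "logs/readme.txt": b"",
    "logs/2024/app.log": b"",
    "logs/2024/db.log": b"",
}

print(list_level(bucket))           # top level
print(list_level(bucket, "logs/"))  # "inside" logs/
```

Nothing is ever nested in the bucket itself; the hierarchy exists only in how the keys are grouped at listing time.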

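Bucket naming rules:

https://docs.amazonaws.cn/AmazonS3/latest/userguide/bucketnamingrules.html

- Names may contain only lowercase letters, digits, and hyphens (-).

- Names must begin and end with a lowercase letter or a digit.

- Names must be between 3 and 63 characters long.

- Names must not be formatted as an IP address.

- Names must be globally unique.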
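The rules above can be checked on the client before a create request is sent. This is a small sketch that encodes only the rules listed here; `is_valid_bucket_name` is a hypothetical helper, and global uniqueness cannot be checked locally, so it is left out.

```python
import ipaddress
import re

# Lowercase letters, digits and hyphens; first and last character alphanumeric.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9-]*[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the naming rules listed above."""
    if not 3 <= len(name) <= 63:
        return False
    if _NAME_RE.fullmatch(name) is None:
        return False
    try:
        # Redundant under this rule set (dots are already rejected above),
        # kept so the "no IP address format" rule is visible in the code.
        ipaddress.ip_address(name)
        return False
    except ValueError:
        return True


for candidate in ("my-bucket-01", "My-Bucket", "ab", "-abc", "192.168.1.1"):
    print(candidate, is_valid_bucket_name(candidate))
```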

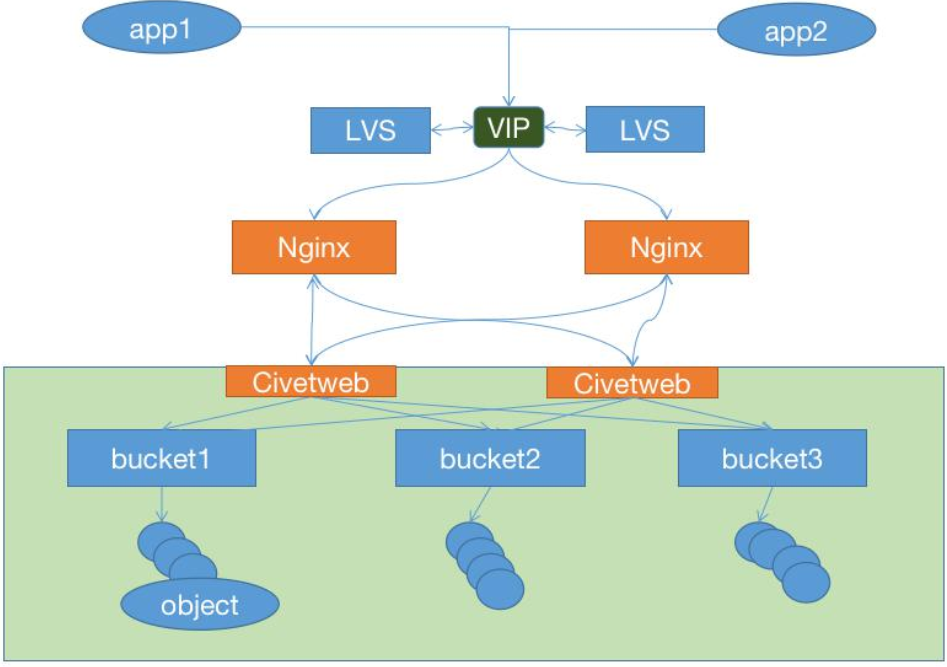

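radosgw architecture diagram

radosgw logical diagram

Object storage access models compared:

Amazon S3: provides user, bucket, and object, representing the user, the bucket, and the object. A bucket belongs to a user; access permissions on the namespace of each bucket can be set per user, and different users may be allowed to access the same bucket.

OpenStack Swift: provides user, container, and object, corresponding to the user, the bucket, and the object. It additionally gives user a parent component, account, which represents a project or tenant (an OpenStack user). One account can therefore contain one or more users, which share the same set of containers, and the account provides the namespace for those containers.

RadosGW: provides user, subuser, bucket, and object. The user corresponds to an S3 user and the subuser corresponds to a Swift user. Neither user nor subuser provides a namespace for buckets, so buckets belonging to different users cannot share a name. Since the Jewel release, RadosGW has offered tenant as an optional component that provides a namespace for users and buckets. RadosGW uses ACLs to give different users different permissions, for example:

- Read: read permission

- Write: write permission

- Readwrite: read and write permission

- full-control: full control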

Deploying the RadosGW service:

Deploy ceph-mgr1 and ceph-mgr2 as a highly available radosGW service.

Install the radosgw package and initialize the service:

    [root@ceph-mgr1 ~]# apt install radosgw -y
    [root@ceph-mgr2 ~]# apt install radosgw -y

    # On the ceph-deploy server, initialize ceph-mgr1 and ceph-mgr2 as radosGW services:
    [cephadmin@ceph-deploy ~]$ cd ceph-cluster/
    [cephadmin@ceph-deploy ceph-cluster]$ ceph-deploy rgw create ceph-mgr2
    [cephadmin@ceph-deploy ceph-cluster]$ ceph-deploy rgw create ceph-mgr1

Command output:

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy rgw create ceph-mgr1
    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy rgw create ceph-mgr1
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f36ede91850>
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] func : <function rgw at 0x7f36ede71b80>
    [ceph_deploy.cli][INFO ] rgw : [('ceph-mgr1', 'rgw.ceph-mgr1')]
    [ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts ceph-mgr1:rgw.ceph-mgr1
    [ceph-mgr1][DEBUG ] connection detected need for sudo
    [ceph-mgr1][DEBUG ] connected to host: ceph-mgr1
    [ceph_deploy.rgw][INFO ] Distro info: ubuntu 20.04 focal
    [ceph_deploy.rgw][DEBUG ] remote host will use systemd
    [ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to ceph-mgr1
    [ceph-mgr1][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.ceph-mgr1 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.ceph-mgr1/keyring
    [ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph-radosgw@rgw.ceph-mgr1
    [ceph-mgr1][INFO ] Running command: sudo systemctl start ceph-radosgw@rgw.ceph-mgr1
    [ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph.target
    [ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph-mgr1 and default port 7480

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy rgw create ceph-mgr2
    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy rgw create ceph-mgr2
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f3f4d0e7880>
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] func : <function rgw at 0x7f3f4d0c5b80>
    [ceph_deploy.cli][INFO ] rgw : [('ceph-mgr2', 'rgw.ceph-mgr2')]
    [ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts ceph-mgr2:rgw.ceph-mgr2
    [ceph-mgr2][DEBUG ] connection detected need for sudo
    [ceph-mgr2][DEBUG ] connected to host: ceph-mgr2
    [ceph_deploy.rgw][INFO ] Distro info: ubuntu 20.04 focal
    [ceph_deploy.rgw][DEBUG ] remote host will use systemd
    [ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to ceph-mgr2
    [ceph-mgr2][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.ceph-mgr2 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.ceph-mgr2/keyring
    [ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph-radosgw@rgw.ceph-mgr2
    [ceph-mgr2][WARNIN] Created symlink /etc/systemd/system/ceph-radosgw.target.wants/ceph-radosgw@rgw.ceph-mgr2.service → /lib/systemd/system/ceph-radosgw@.service.
    [ceph-mgr2][INFO ] Running command: sudo systemctl start ceph-radosgw@rgw.ceph-mgr2
    [ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph.target
    [ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host ceph-mgr2 and default port 7480

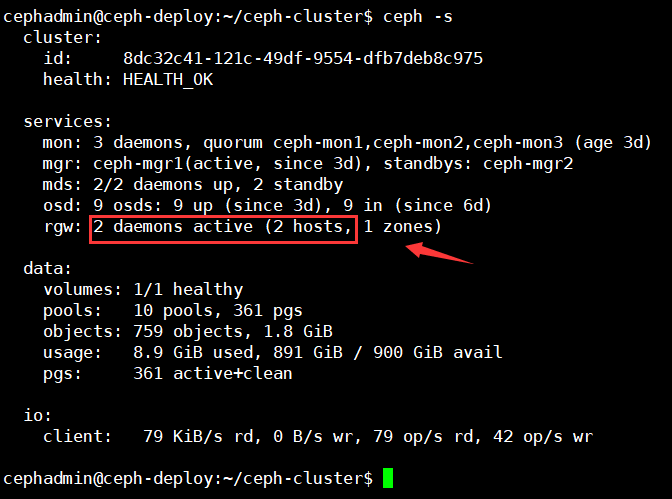

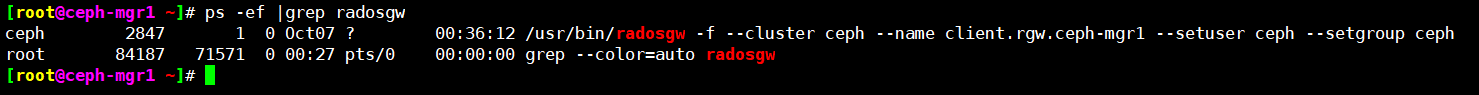

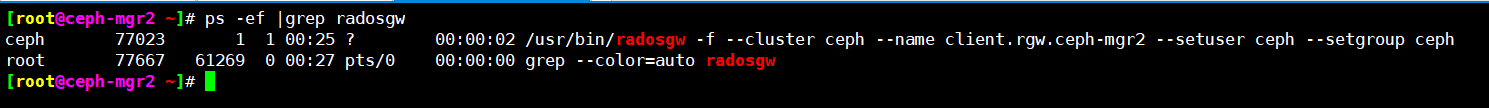

Verify the radosgw service status:

Verify the radosgw service process:

Pools used by radosgw:

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool ls
    device_health_metrics
    .rgw.root
    default.rgw.log
    default.rgw.control
    default.rgw.meta
    cephfs-metadata
    cephfs-data
    rbd-data1
    default.rgw.buckets.index
    default.rgw.buckets.data
    k8s-rbd-pool

Show the pool layout of the default radosgw zone:

    cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin zone get --rgw-zone=default --rgw-zonegroup=default
    {
        "id": "a55c81f4-16a0-4cec-b6f1-d345e054f568",
        "name": "default",
        "domain_root": "default.rgw.meta:root",
        "control_pool": "default.rgw.control",
        "gc_pool": "default.rgw.log:gc",
        "lc_pool": "default.rgw.log:lc",
        "log_pool": "default.rgw.log",
        "intent_log_pool": "default.rgw.log:intent",
        "usage_log_pool": "default.rgw.log:usage",
        "roles_pool": "default.rgw.meta:roles",
        "reshard_pool": "default.rgw.log:reshard",
        "user_keys_pool": "default.rgw.meta:users.keys",
        "user_email_pool": "default.rgw.meta:users.email",
        "user_swift_pool": "default.rgw.meta:users.swift",
        "user_uid_pool": "default.rgw.meta:users.uid",
        "otp_pool": "default.rgw.otp",
        "system_key": {
            "access_key": "",
            "secret_key": ""
        },
        "placement_pools": [
            {
                "key": "default-placement",
                "val": {
                    "index_pool": "default.rgw.buckets.index",
                    "storage_classes": {
                        "STANDARD": {
                            "data_pool": "default.rgw.buckets.data"
                        }
                    },
                    "data_extra_pool": "default.rgw.buckets.non-ec",
                    "index_type": 0
                }
            }
        ],
        "realm_id": "",
        "notif_pool": "default.rgw.log:notif"
    }

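RGW pool information:

- .rgw.root: holds realm information, such as the zone and zonegroup.

- default.rgw.log: log pool that records the various kinds of log information.

- default.rgw.control: system control pool; when data is updated, it is used to notify the other RGW instances to refresh their caches.

- default.rgw.meta: metadata pool. Different RADOS objects are stored under separate namespaces: users.uid (user UIDs and their bucket mappings), users.keys (user keys), users.email (user email addresses), users.swift (subusers), root (buckets), and others.

- default.rgw.buckets.index: stores the bucket-to-object index.

- default.rgw.buckets.data: stores the object data.

- default.rgw.buckets.non-ec: pool for extra data-related information (the zone's data_extra_pool).

- default.rgw.users.uid: pool for user information. In the zone output above, this role is filled by the users.uid namespace of default.rgw.meta.

- default.rgw.data.root: stores bucket metadata, corresponding to the RGWBucketInfo struct, such as the bucket name, bucket ID, and data_pool. In the zone output above, this role is filled by the root namespace of default.rgw.meta (domain_root).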
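Values such as `default.rgw.meta:users.uid` in the zone output combine a pool name and a namespace, separated by a colon. The sketch below groups the namespaces by pool from an abbreviated copy of that JSON; `pools_and_namespaces` is a hypothetical helper, and treating every `*_pool` field plus `domain_root` as a pool reference is an assumption based on the output shown in this article.

```python
import json

# Abbreviated copy of the `radosgw-admin zone get` output shown above.
ZONE_JSON = """
{
  "domain_root": "default.rgw.meta:root",
  "control_pool": "default.rgw.control",
  "gc_pool": "default.rgw.log:gc",
  "log_pool": "default.rgw.log",
  "user_keys_pool": "default.rgw.meta:users.keys",
  "user_uid_pool": "default.rgw.meta:users.uid",
  "otp_pool": "default.rgw.otp"
}
"""


def pools_and_namespaces(zone: dict) -> dict:
    """Map each pool name to the set of namespaces the zone uses inside it."""
    result = {}
    for field, value in zone.items():
        # Assumption: top-level string fields ending in "_pool", plus
        # "domain_root", are the ones that reference a pool.
        if not isinstance(value, str):
            continue
        if not (field.endswith("_pool") or field == "domain_root"):
            continue
        pool, _, namespace = value.partition(":")
        result.setdefault(pool, set())
        if namespace:
            result[pool].add(namespace)
    return result


layout = pools_and_namespaces(json.loads(ZONE_JSON))
for pool in sorted(layout):
    print(pool, sorted(layout[pool]))
```

This makes it easy to see that a handful of physical pools carry many logical roles through namespaces.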

    cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.meta crush_rule
    crush_rule: replicated_rule    # replicated pool by default
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.meta size
    size: 3    # default replica count
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.meta pgp_num
    pgp_num: 8    # default PGP count
    cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.meta pg_num
    pg_num: 8    # default PG count

Verify the RGW zone information:

    cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin zone get --rgw-zone=default
    {
        "id": "a55c81f4-16a0-4cec-b6f1-d345e054f568",
        "name": "default",
        "domain_root": "default.rgw.meta:root",
        "control_pool": "default.rgw.control",
        "gc_pool": "default.rgw.log:gc",
        "lc_pool": "default.rgw.log:lc",
        "log_pool": "default.rgw.log",
        "intent_log_pool": "default.rgw.log:intent",
        "usage_log_pool": "default.rgw.log:usage",
        "roles_pool": "default.rgw.meta:roles",
        "reshard_pool": "default.rgw.log:reshard",
        "user_keys_pool": "default.rgw.meta:users.keys",
        "user_email_pool": "default.rgw.meta:users.email",
        "user_swift_pool": "default.rgw.meta:users.swift",
        "user_uid_pool": "default.rgw.meta:users.uid",
        "otp_pool": "default.rgw.otp",
        "system_key": {
            "access_key": "",
            "secret_key": ""
        },
        "placement_pools": [
            {
                "key": "default-placement",
                "val": {
                    "index_pool": "default.rgw.buckets.index",
                    "storage_classes": {
                        "STANDARD": {
                            "data_pool": "default.rgw.buckets.data"
                        }
                    },
                    "data_extra_pool": "default.rgw.buckets.non-ec",
                    "index_type": 0
                }
            }
        ],
        "realm_id": "",
        "notif_pool": "default.rgw.log:notif"
    }

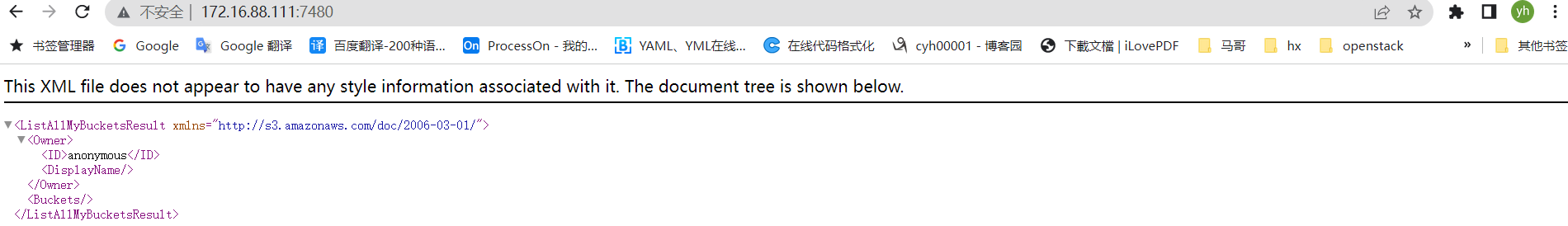

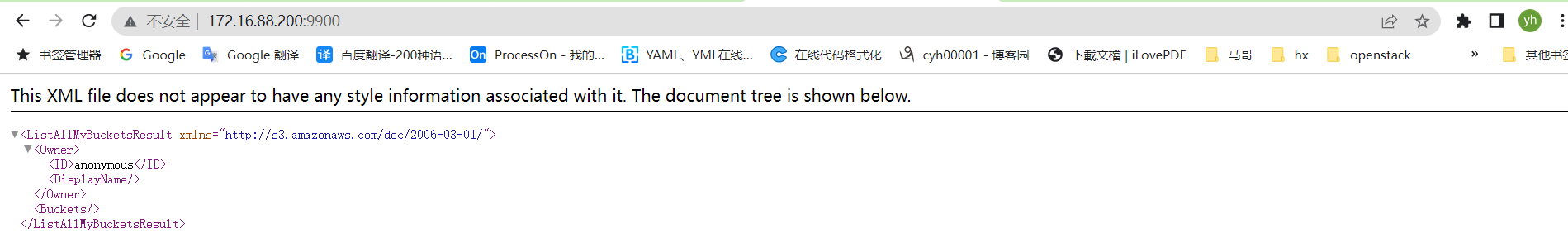

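Access the radosgw service:

2. High-availability configuration for radosgw

radosgw HTTP high availability:

Custom HTTP port:

The configuration file can be edited on the ceph-deploy server and pushed to all nodes, or edited separately on each radosgw server so that all of them end up with the same settings. Restart the RGW service afterwards.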

https://docs.ceph.com/en/latest/radosgw/frontends/

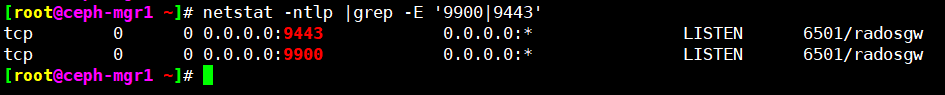

Configure ceph.conf on ceph-mgr1 and ceph-mgr2

    # vi /etc/ceph/ceph.conf
    [global]
    fsid = 8dc32c41-121c-49df-9554-dfb7deb8c975
    public_network = 172.16.88.0/24
    cluster_network = 192.168.122.0/24
    mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3
    mon_host = 172.16.88.101,172.16.88.102,172.16.88.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    mon clock drift allowed = 2
    mon clock drift warn backoff = 30

    [mds.ceph-mgr2]
    mds_standby_for_name = ceph-mgr1
    mds_standby_replay = true

    [mds.ceph-mgr1]
    mds_standby_for_name = ceph-mgr2
    mds_standby_replay = true

    [mds.ceph-mon3]
    mds_standby_for_name = ceph-mon2
    mds_standby_replay = true

    [mds.ceph-mon2]
    mds_standby_for_name = ceph-mon3
    mds_standby_replay = true

    [client.rgw.ceph-mgr1]
    rgw_host = ceph-mgr1
    rgw_frontends = civetweb port=9900

    [client.rgw.ceph-mgr2]
    rgw_host = ceph-mgr2
    rgw_frontends = civetweb port=9900

[root@ceph-mgr1 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr1.service

[root@ceph-mgr2 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

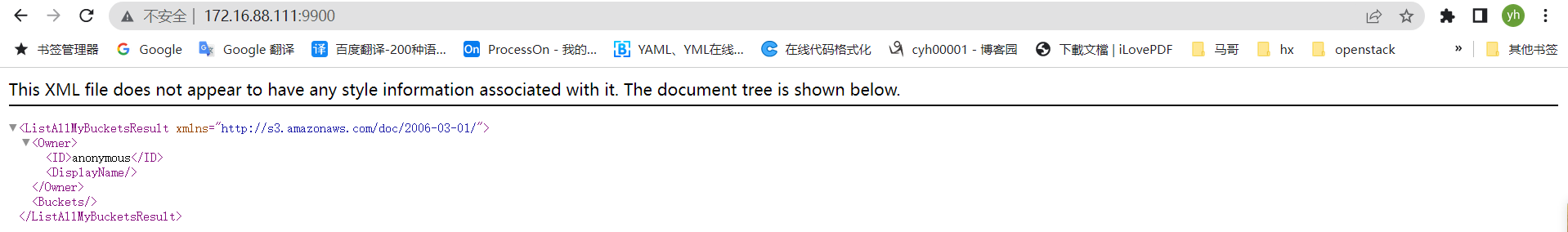

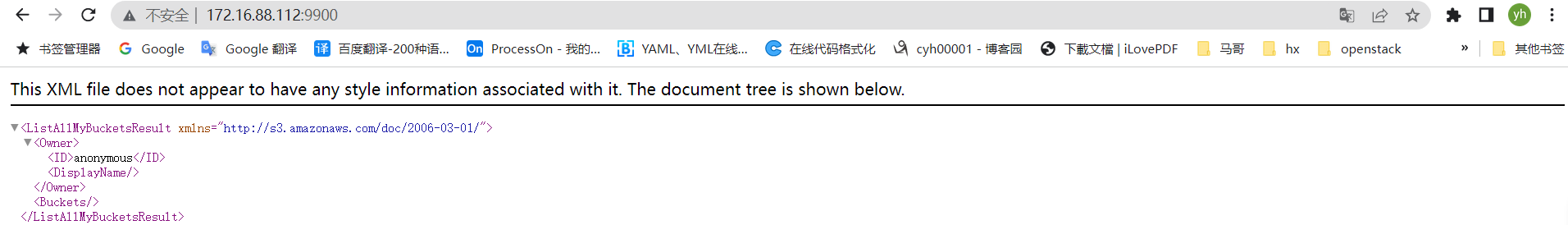

Access test

Install and configure the reverse proxy:

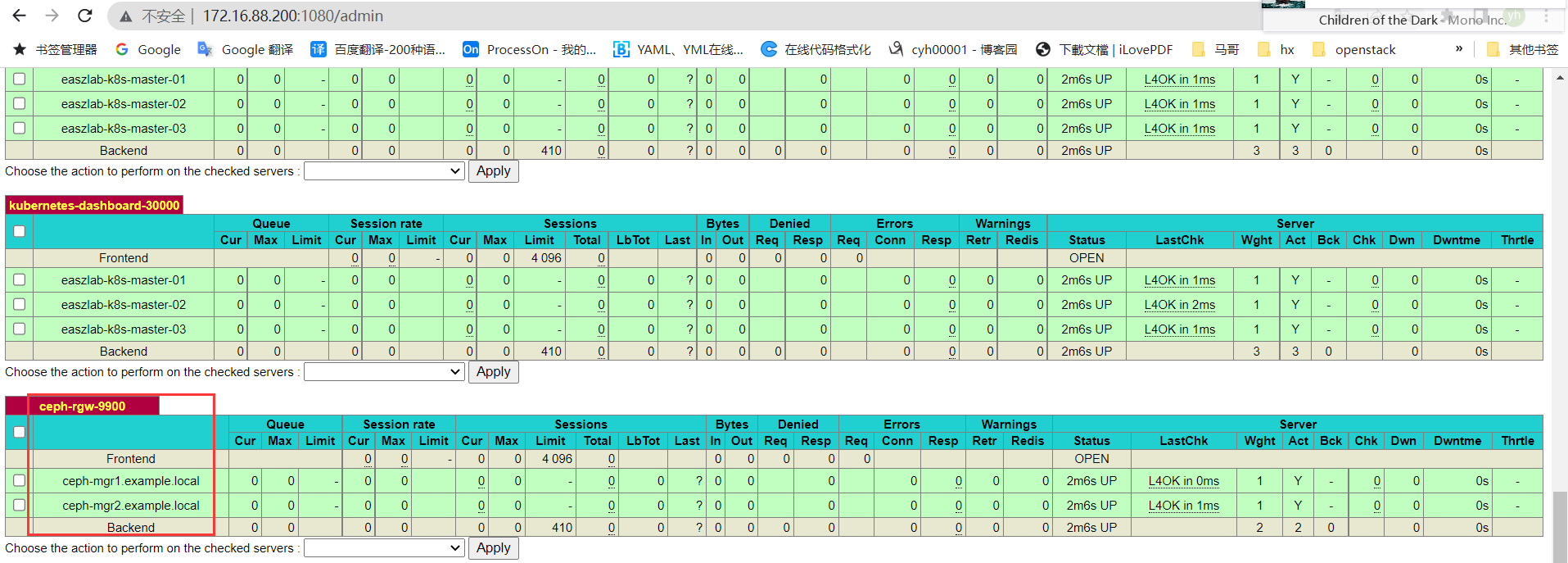

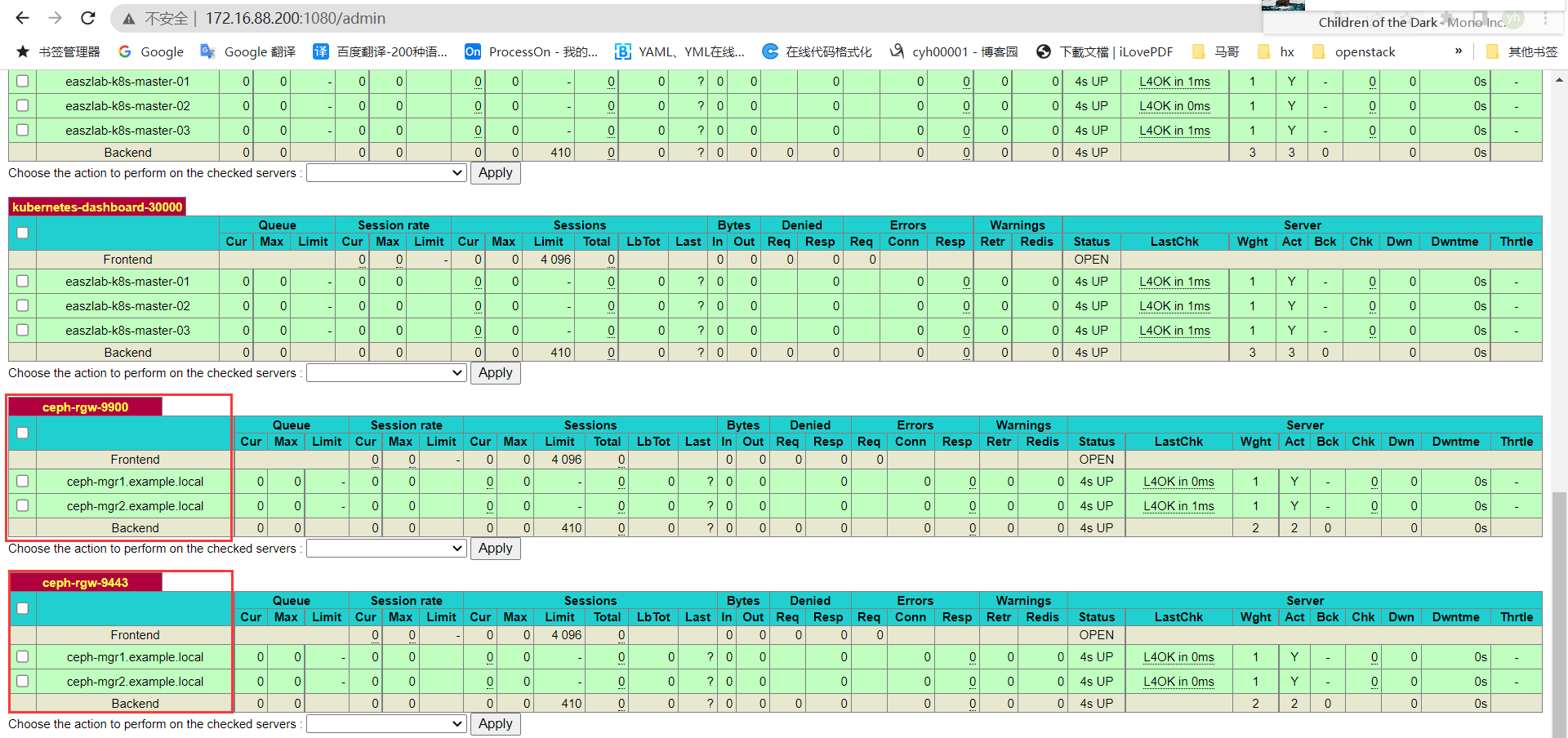

Add the following configuration on the keepalived + haproxy nodes

    root@easzlab-haproxy-keepalive-01:~# vi /etc/haproxy/haproxy.cfg
    root@easzlab-haproxy-keepalive-02:~# vi /etc/haproxy/haproxy.cfg

    listen ceph-rgw-9900
      bind 172.16.88.200:9900
      mode tcp
      server ceph-mgr1.example.local 172.16.88.111:9900 check inter 3s fall 3 rise 5
      server ceph-mgr2.example.local 172.16.88.112:9900 check inter 3s fall 3 rise 5
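The `check inter 3s fall 3 rise 5` options mean that each backend is probed every 3 seconds, marked down after 3 consecutive failed checks, and marked up again after 5 consecutive successful ones. The following sketch simulates only that counting logic; `simulate_health` is a hypothetical function written for illustration, not HAProxy code.

```python
def simulate_health(results, fall=3, rise=5, up=True):
    """Replay a sequence of check results (True = check passed).

    Returns the server state (True = up) after each check, applying the
    fall/rise thresholds to consecutive results that contradict the state.
    """
    streak = 0
    states = []
    for ok in results:
        if ok == up:
            # The result agrees with the current state: reset the counter.
            streak = 0
        else:
            streak += 1
            if streak >= (fall if up else rise):
                up = not up
                streak = 0
        states.append(up)
    return states


# Three failures in a row take the server out of rotation.
print(simulate_health([False, False, False]))
# A single success in between resets the failure count.
print(simulate_health([False, False, True, False]))
# A downed server needs five good checks before it comes back.
print(simulate_health([True] * 5, up=False))
```

With a 3 second interval, these thresholds imply roughly 9 seconds to detect a failed RGW node and about 15 seconds before a recovered node receives traffic again.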

Test the HTTP reverse proxy:

radosgw HTTPS:

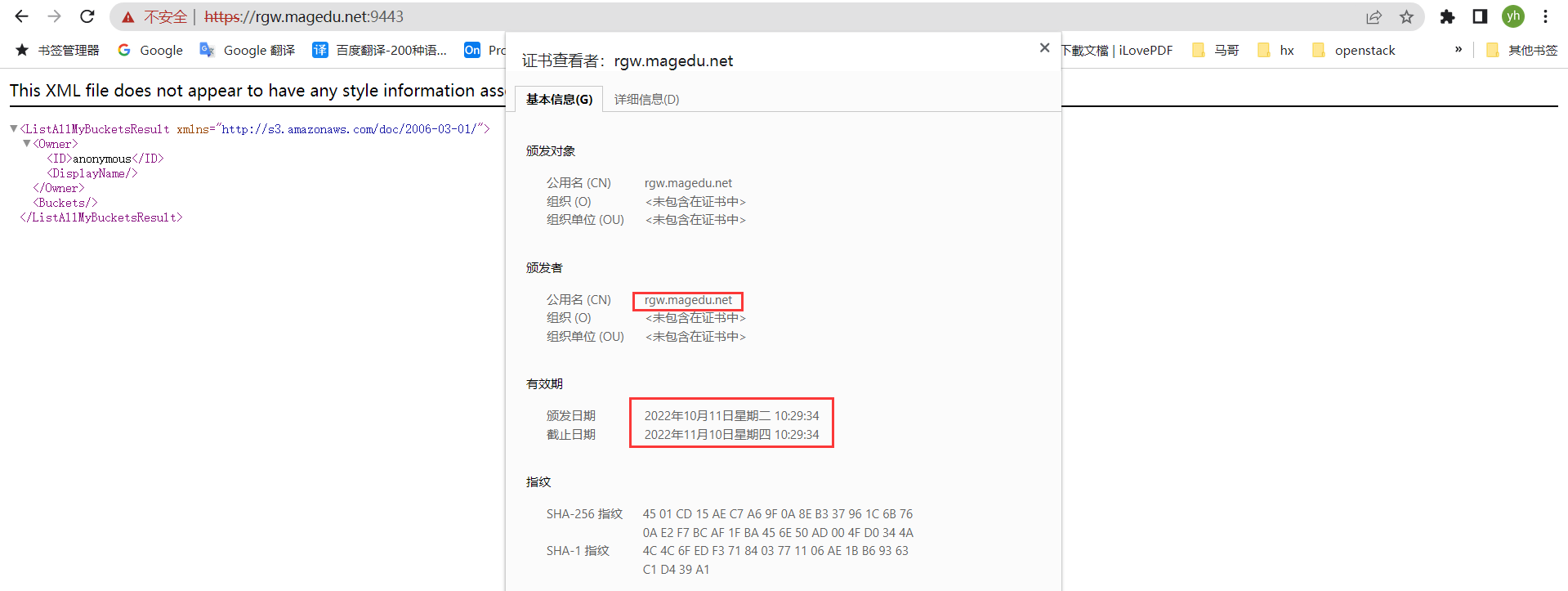

Generate a signed certificate on the RGW node and configure radosgw to enable SSL

Self-signed certificate:

    [root@ceph-mgr2 ~]# cd /etc/ceph/
    [root@ceph-mgr2 ceph]# mkdir certs
    [root@ceph-mgr2 ceph]# cd certs/
    [root@ceph-mgr2 certs]# openssl genrsa -out civetweb.key 2048
    Generating RSA private key, 2048 bit long modulus (2 primes)
    ............+++++
    ....+++++
    e is 65537 (0x010001)
    [root@ceph-mgr2 certs]# openssl req -new -x509 -key civetweb.key -out civetweb.crt -subj "/CN=rgw.magedu.net"
    [root@ceph-mgr2 certs]# cat civetweb.key civetweb.crt > civetweb.pem
    [root@ceph-mgr2 certs]# tree
    .
    ├── civetweb.crt
    ├── civetweb.key
    └── civetweb.pem

    0 directories, 3 files

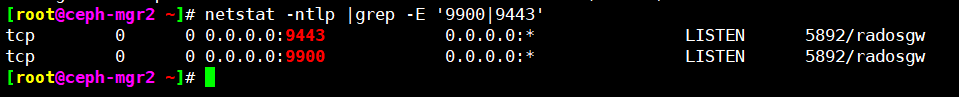

SSL configuration:

Copy the certificate files to ceph-mgr1

    [root@ceph-mgr2 ceph]# scp -r /etc/ceph/certs root@172.16.88.111:/etc/ceph

Edit /etc/ceph/ceph.conf

    [global]
    fsid = 8dc32c41-121c-49df-9554-dfb7deb8c975
    public_network = 172.16.88.0/24
    cluster_network = 192.168.122.0/24
    mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3
    mon_host = 172.16.88.101,172.16.88.102,172.16.88.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    mon clock drift allowed = 2
    mon clock drift warn backoff = 30

    [mds.ceph-mgr2]
    mds_standby_for_name = ceph-mgr1
    mds_standby_replay = true

    [mds.ceph-mgr1]
    mds_standby_for_name = ceph-mgr2
    mds_standby_replay = true

    [mds.ceph-mon3]
    mds_standby_for_name = ceph-mon2
    mds_standby_replay = true

    [mds.ceph-mon2]
    mds_standby_for_name = ceph-mon3
    mds_standby_replay = true

    [client.rgw.ceph-mgr1]
    rgw_host = ceph-mgr1
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"

    [client.rgw.ceph-mgr2]
    rgw_host = ceph-mgr2
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"
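In the `rgw_frontends` value, `port=9900+9443s` lists two listeners joined by `+`, and the trailing `s` marks 9443 as the SSL port. The sketch below splits such a value into its parts; `parse_frontends` is a hypothetical helper, and it assumes plain port numbers (no `ip:port` form) and option values without spaces.

```python
def parse_frontends(value: str):
    """Split an rgw_frontends value into (framework, options, ports).

    `ports` is a list of (port_number, uses_ssl) tuples.
    """
    framework, *pairs = value.strip().strip('"').split()
    options = dict(pair.split("=", 1) for pair in pairs if "=" in pair)
    ports = []
    for spec in options.get("port", "").split("+"):
        if spec:
            # A trailing "s" marks the listener as SSL/TLS.
            ports.append((int(spec.rstrip("s")), spec.endswith("s")))
    return framework, options, ports


framework, options, ports = parse_frontends(
    '"civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"'
)
print(framework)                   # civetweb
print(ports)                       # [(9900, False), (9443, True)]
print(options["ssl_certificate"])  # /etc/ceph/certs/civetweb.pem
```

A check like this can be useful before restarting the service, for example to confirm that an SSL port is only configured together with `ssl_certificate`.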

[root@ceph-mgr1 ceph]# systemctl restart ceph-radosgw@rgw.ceph-mgr1.service

[root@ceph-mgr2 ceph]# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

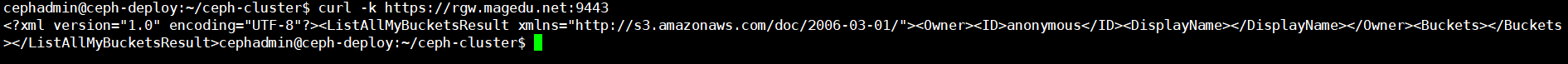

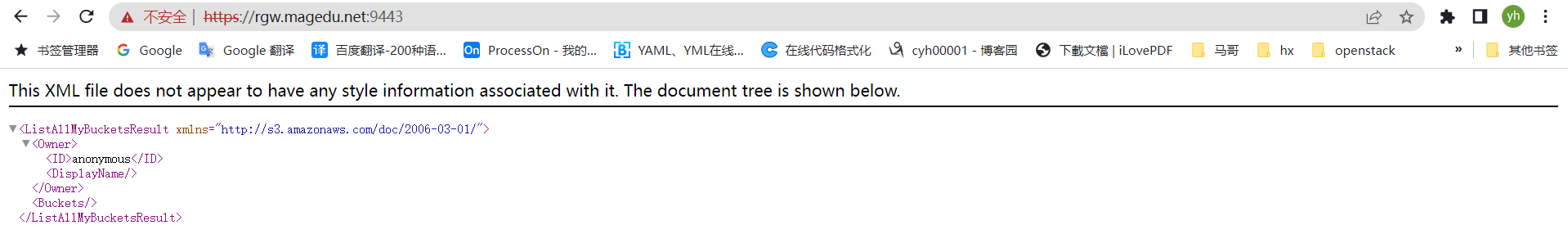

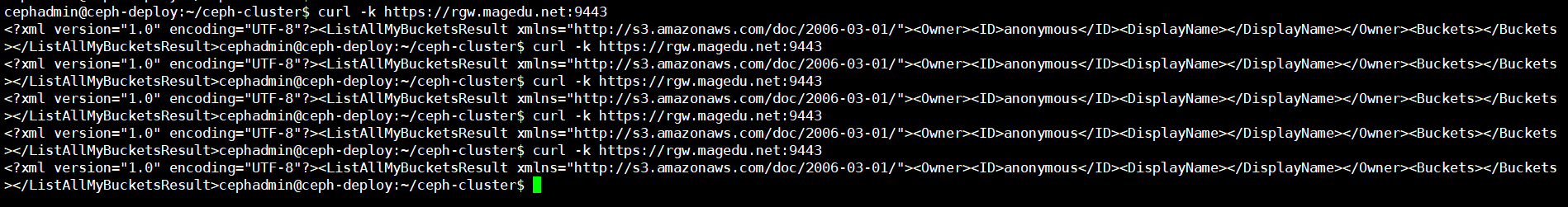

Verify access

Local test

    cephadmin@ceph-deploy:~/ceph-cluster$ sudo vi /etc/hosts

    172.16.88.112 rgw.magedu.net    # add this line

Access the HTTPS endpoint

Add HTTPS forwarding on the keepalived + haproxy proxy nodes

    listen ceph-rgw-9900
      bind 172.16.88.200:9900
      mode tcp
      server ceph-mgr1.example.local 172.16.88.111:9900 check inter 3s fall 3 rise 5
      server ceph-mgr2.example.local 172.16.88.112:9900 check inter 3s fall 3 rise 5

    listen ceph-rgw-9443
      bind 172.16.88.200:9443
      mode tcp
      server ceph-mgr1.example.local 172.16.88.111:9443 check inter 3s fall 3 rise 5
      server ceph-mgr2.example.local 172.16.88.112:9443 check inter 3s fall 3 rise 5

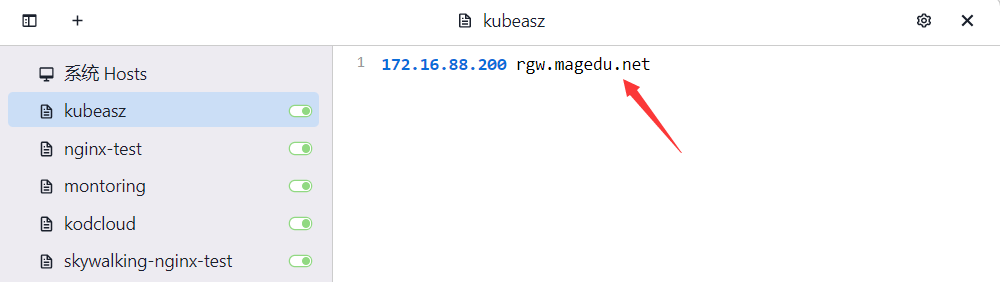

Add a hosts entry on the local Windows machine

3. Logging and other tuning options

Create the log directory on ceph-mgr1 and ceph-mgr2

    mkdir /var/log/radosgw

    chown ceph:ceph /var/log/radosgw

Edit /etc/ceph/ceph.conf on ceph-mgr1 and ceph-mgr2

    # vi /etc/ceph/ceph.conf

    [global]
    fsid = 8dc32c41-121c-49df-9554-dfb7deb8c975
    public_network = 172.16.88.0/24
    cluster_network = 192.168.122.0/24
    mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3
    mon_host = 172.16.88.101,172.16.88.102,172.16.88.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    mon clock drift allowed = 2
    mon clock drift warn backoff = 30

    [mds.ceph-mgr2]
    mds_standby_for_name = ceph-mgr1
    mds_standby_replay = true

    [mds.ceph-mgr1]
    mds_standby_for_name = ceph-mgr2
    mds_standby_replay = true

    [mds.ceph-mon3]
    mds_standby_for_name = ceph-mon2
    mds_standby_replay = true

    [mds.ceph-mon2]
    mds_standby_for_name = ceph-mon3
    mds_standby_replay = true

    [client.rgw.ceph-mgr1]
    rgw_host = ceph-mgr1
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem error_log_file=/var/log/radosgw/civetweb.error.log access_log_file=/var/log/radosgw/civetweb.access.log request_timeout_ms=30000 num_threads=200"

    [client.rgw.ceph-mgr2]
    rgw_host = ceph-mgr2
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem error_log_file=/var/log/radosgw/civetweb.error.log access_log_file=/var/log/radosgw/civetweb.access.log request_timeout_ms=30000 num_threads=200"

[root@ceph-mgr1 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr1.service

[root@ceph-mgr2 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

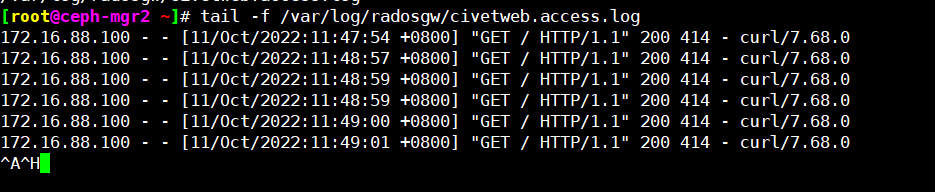

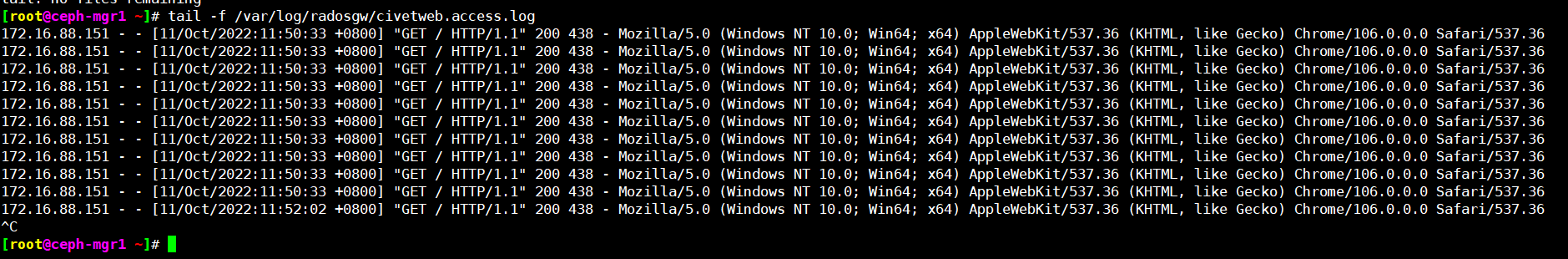

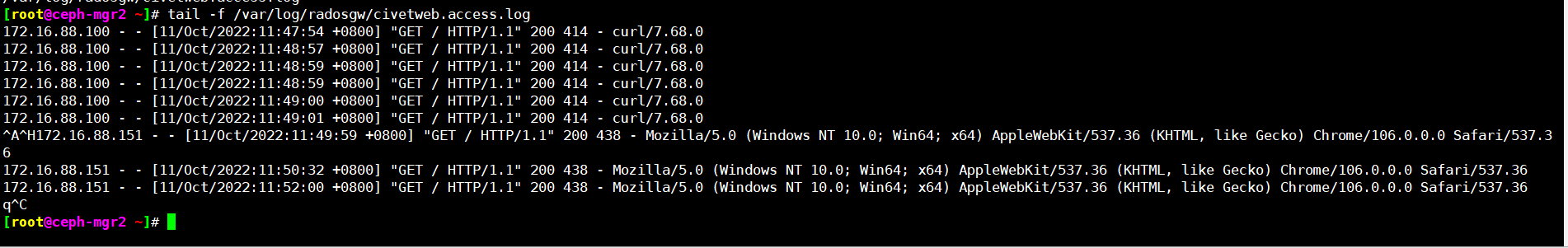

Verify logging

Refresh https://rgw.magedu.net:9443/ from the local Windows client

4. Testing reads and writes with a client (s3cmd)

RGW server configuration

In a real production environment, RGW1 and RGW2 use exactly the same configuration parameters.

Configure /etc/ceph/ceph.conf

    [global]
    fsid = 8dc32c41-121c-49df-9554-dfb7deb8c975
    public_network = 172.16.88.0/24
    cluster_network = 192.168.122.0/24
    mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3
    mon_host = 172.16.88.101,172.16.88.102,172.16.88.103
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    mon clock drift allowed = 2
    mon clock drift warn backoff = 30

    [mds.ceph-mgr2]
    mds_standby_for_name = ceph-mgr1
    mds_standby_replay = true

    [mds.ceph-mgr1]
    mds_standby_for_name = ceph-mgr2
    mds_standby_replay = true

    [mds.ceph-mon3]
    mds_standby_for_name = ceph-mon2
    mds_standby_replay = true

    [mds.ceph-mon2]
    mds_standby_for_name = ceph-mon3
    mds_standby_replay = true

    [client.rgw.ceph-mgr1]
    rgw_host = ceph-mgr1
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem error_log_file=/var/log/radosgw/civetweb.error.log access_log_file=/var/log/radosgw/civetweb.access.log request_timeout_ms=30000 num_threads=200"
    rgw_dns_name = rgw.magedu.net

    [client.rgw.ceph-mgr2]
    rgw_host = ceph-mgr2
    #rgw_frontends = civetweb port=9900
    rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem error_log_file=/var/log/radosgw/civetweb.error.log access_log_file=/var/log/radosgw/civetweb.access.log request_timeout_ms=30000 num_threads=200"
    rgw_dns_name = rgw.magedu.net

[root@ceph-mgr1 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr1.service

[root@ceph-mgr2 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

Create an RGW account

    cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin user create --uid="user1" --display-name="user1"
    {
        "user_id": "user1",
        "display_name": "user1",
        "email": "",
        "suspended": 0,
        "max_buckets": 1000,
        "subusers": [],
        "keys": [
            {
                "user": "user1",
                "access_key": "04XUIEYRYTDUXC332R7H",
                "secret_key": "uysQEmdYcp9UCv56UHimnMNKQwdiGFfuv4TsMPWy"
            }
        ],
        "swift_keys": [],
        "caps": [],
        "op_mask": "read, write, delete",
        "default_placement": "",
        "default_storage_class": "",
        "placement_tags": [],
        "bucket_quota": {
            "enabled": false,
            "check_on_raw": false,
            "max_size": -1,
            "max_size_kb": 0,
            "max_objects": -1
        },
        "user_quota": {
            "enabled": false,
            "check_on_raw": false,
            "max_size": -1,
            "max_size_kb": 0,
            "max_objects": -1
        },
        "temp_url_keys": [],
        "type": "rgw",
        "mfa_ids": []
    }
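The `keys` array in this output holds the S3 credentials that the client needs later. As a small sketch, they can be pulled out of the JSON programmatically instead of being copied by hand; `s3_credentials` is a hypothetical helper and the key values below are placeholders, not real credentials.

```python
import json

# Abbreviated user record in the shape printed by radosgw-admin,
# with placeholder key values.
USER_JSON = """
{
  "user_id": "user1",
  "display_name": "user1",
  "keys": [
    {
      "user": "user1",
      "access_key": "EXAMPLEACCESSKEY",
      "secret_key": "EXAMPLESECRETKEY"
    }
  ],
  "swift_keys": []
}
"""


def s3_credentials(user_json: str, index: int = 0):
    """Return (access_key, secret_key) for the S3 key pair at `index`."""
    user = json.loads(user_json)
    key = user["keys"][index]
    return key["access_key"], key["secret_key"]


access_key, secret_key = s3_credentials(USER_JSON)
print(access_key)
```

In practice the JSON text could come from capturing the output of `radosgw-admin user info --uid=user1` rather than from a string literal.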

Install the s3cmd client:

s3cmd is a command-line client for Ceph RGW that can create buckets and upload, download, and manage data in object storage.

    cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt-cache madison s3cmd
         s3cmd | 2.0.2-1ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-updates/universe amd64 Packages
         s3cmd | 2.0.2-1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal/universe amd64 Packages
    cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt install s3cmd

Configure the s3cmd runtime environment

Add name resolution on the client:

    cephadmin@ceph-deploy:~/ceph-cluster$ sudo vi /etc/hosts
    cephadmin@ceph-deploy:~/ceph-cluster$ cat /etc/hosts
    127.0.0.1 localhost
    127.0.1.1 magedu

    # The following lines are desirable for IPv6 capable hosts
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters

    172.16.88.100 ceph-deploy.example.local ceph-deploy
    172.16.88.101 ceph-mon1.example.local ceph-mon1
    172.16.88.102 ceph-mon2.example.local ceph-mon2
    172.16.88.103 ceph-mon3.example.local ceph-mon3
    172.16.88.111 ceph-mgr1.example.local ceph-mgr1
    172.16.88.112 ceph-mgr2.example.local ceph-mgr2
    172.16.88.121 ceph-node1.example.local ceph-node1
    172.16.88.122 ceph-node2.example.local ceph-node2
    172.16.88.123 ceph-node3.example.local ceph-node3
    172.16.88.200 rgw.magedu.net

Configure the command environment:

    [root@ceph-deploy ~]# s3cmd --configure

    Enter new values or accept defaults in brackets with Enter.
    Refer to user manual for detailed description of all options.

    Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.
    Access Key: 04XUIEYRYTDUXC332R7H
    Secret Key: uysQEmdYcp9UCv56UHimnMNKQwdiGFfuv4TsMPWy
    Default Region [US]:    # press Enter

    Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.
    S3 Endpoint [s3.amazonaws.com]: rgw.magedu.net:9900

    Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used
    if the target S3 system supports dns based buckets.
    DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: rgw.magedu.net:9900/%(bucket)

    Encryption password is used to protect your files from reading
    by unauthorized persons while in transfer to S3
    Encryption password: redhat    # any password will do
    Path to GPG program [/usr/bin/gpg]:    # press Enter

    When using secure HTTPS protocol all communication with Amazon S3
    servers is protected from 3rd party eavesdropping. This method is
    slower than plain HTTP, and can only be proxied with Python 2.7 or newer
    Use HTTPS protocol [Yes]: No

    On some networks all internet access must go through a HTTP proxy.
    Try setting it here if you can't connect to S3 directly
    HTTP Proxy server name:    # press Enter

    New settings:    # final configuration
      Access Key: 04XUIEYRYTDUXC332R7H
      Secret Key: uysQEmdYcp9UCv56UHimnMNKQwdiGFfuv4TsMPWy
      Default Region: US
      S3 Endpoint: rgw.magedu.net:9900
      DNS-style bucket+hostname:port template for accessing a bucket: rgw.magedu.net:9900/%(bucket)
      Encryption password: redhat
      Path to GPG program: /usr/bin/gpg
      Use HTTPS protocol: False
      HTTP Proxy server name:
      HTTP Proxy server port: 0

    Test access with supplied credentials? [Y/n] Y    # test access with these credentials
    Please wait, attempting to list all buckets...
    WARNING: Retrying failed request: /?delimiter=%2F (Remote end closed connection without response)
    WARNING: Waiting 3 sec...
    Success. Your access key and secret key worked fine :-)

    Now verifying that encryption works...
    Success. Encryption and decryption worked fine :-)

    Save settings? [y/N] Y    # save the configuration
    Configuration saved to '/root/.s3cfg'    # default config file path

[root@ceph-deploy ~]# cat /root/.s3cfg

    [default]
    access_key = 04XUIEYRYTDUXC332R7H
    access_token =
    add_encoding_exts =
    add_headers =
    bucket_location = US
    ca_certs_file =
    cache_file =
    check_ssl_certificate = True
    check_ssl_hostname = True
    cloudfront_host = cloudfront.amazonaws.com
    content_disposition =
    content_type =
    default_mime_type = binary/octet-stream
    delay_updates = False
    delete_after = False
    delete_after_fetch = False
    delete_removed = False
    dry_run = False
    enable_multipart = True
    encoding = UTF-8
    encrypt = False
    expiry_date =
    expiry_days =
    expiry_prefix =
    follow_symlinks = False
    force = False
    get_continue = False
    gpg_command = /usr/bin/gpg
    gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
    gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
    gpg_passphrase = redhat
    guess_mime_type = True
    host_base = rgw.magedu.net:9900
    host_bucket = rgw.magedu.net:9900/%(bucket)
    human_readable_sizes = False
    invalidate_default_index_on_cf = False
    invalidate_default_index_root_on_cf = True
    invalidate_on_cf = False
    kms_key =
    limit = -1
    limitrate = 0
    list_md5 = False
    log_target_prefix =
    long_listing = False
    max_delete = -1
    mime_type =
    multipart_chunk_size_mb = 15
    multipart_max_chunks = 10000
    preserve_attrs = True
    progress_meter = True
    proxy_host =
    proxy_port = 0
    put_continue = False
    recursive = False
    recv_chunk = 65536
    reduced_redundancy = False
    requester_pays = False
    restore_days = 1
    restore_priority = Standard
    secret_key = uysQEmdYcp9UCv56UHimnMNKQwdiGFfuv4TsMPWy
    send_chunk = 65536
    server_side_encryption = False
    signature_v2 = False
    signurl_use_https = False
    simpledb_host = sdb.amazonaws.com
    skip_existing = False
    socket_timeout = 300
    stats = False
    stop_on_error = False
    storage_class =
    throttle_max = 100
    upload_id =
    urlencoding_mode = normal
    use_http_expect = False
    use_https = False
    use_mime_magic = True
    verbosity = WARNING
    website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/
    website_error =
    website_index = index.html
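The `host_base` and `host_bucket` settings decide how a bucket is addressed: path style (`host/bucket/key`) or virtual-hosted style (`bucket.host/key`). The sketch below illustrates the difference under the assumption that the virtual-hosted form is chosen only when the template contains the `%(bucket)s` placeholder; `object_url` is a hypothetical helper and does not reproduce s3cmd's actual code.

```python
def object_url(host_base, host_bucket, bucket, key, use_https=False):
    """Build the request URL for an object in path or virtual-hosted style."""
    scheme = "https" if use_https else "http"
    if "%(bucket)s" in host_bucket:
        # Virtual-hosted style: the bucket name becomes part of the host name.
        host = host_bucket.replace("%(bucket)s", bucket)
        return f"{scheme}://{host}/{key}"
    # Path style: the bucket name is the first path segment.
    return f"{scheme}://{host_base}/{bucket}/{key}"


# Values from the configuration above; the bucket and key are made up.
print(object_url("rgw.magedu.net:9900", "rgw.magedu.net:9900/%(bucket)",
                 "mybucket", "a.txt"))
# A virtual-hosted template, for comparison.
print(object_url("rgw.magedu.net:9900", "%(bucket)s.rgw.magedu.net:9900",
                 "mybucket", "a.txt"))
```

Virtual-hosted addressing additionally needs every `<bucket>.rgw.magedu.net` name to resolve to the gateway (for example through a wildcard DNS record) and relies on `rgw_dns_name` on the server side, whereas path style works with the single hosts entry used in this walkthrough.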

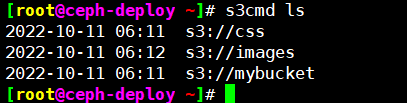

Verify data upload with the s3cmd command-line client

Show the help text

[root@ceph-deploy ~]# s3cmd --help Usage: s3cmd [options] COMMAND [parameters] S3cmd is a tool for managing objects in Amazon S3 storage. It allows for making and removing "buckets" and uploading, downloading and removing "objects" from these buckets. Options: -h, --help show this help message and exit --configure Invoke interactive (re)configuration tool. Optionally use as '--configure s3://some-bucket' to test access to a specific bucket instead of attempting to list them all. -c FILE, --config=FILE Config file name. Defaults to $HOME/.s3cfg --dump-config Dump current configuration after parsing config files and command line options and exit. --access_key=ACCESS_KEY AWS Access Key --secret_key=SECRET_KEY AWS Secret Key --access_token=ACCESS_TOKEN AWS Access Token -n, --dry-run Only show what should be uploaded or downloaded but don't actually do it. May still perform S3 requests to get bucket listings and other information though (only for file transfer commands) -s, --ssl Use HTTPS connection when communicating with S3. (default) --no-ssl Don't use HTTPS. -e, --encrypt Encrypt files before uploading to S3. --no-encrypt Don't encrypt files. -f, --force Force overwrite and other dangerous operations. --continue Continue getting a partially downloaded file (only for [get] command). --continue-put Continue uploading partially uploaded files or multipart upload parts. Restarts/parts files that don't have matching size and md5. Skips files/parts that do. Note: md5sum checks are not always sufficient to check (part) file equality. Enable this at your own risk. --upload-id=UPLOAD_ID UploadId for Multipart Upload, in case you want continue an existing upload (equivalent to --continue- put) and there are multiple partial uploads. Use s3cmd multipart [URI] to see what UploadIds are associated with the given URI. --skip-existing Skip over files that exist at the destination (only for [get] and [sync] commands). -r, --recursive Recursive upload, download or removal. 
--check-md5 Check MD5 sums when comparing files for [sync]. (default) --no-check-md5 Do not check MD5 sums when comparing files for [sync]. Only size will be compared. May significantly speed up transfer but may also miss some changed files. -P, --acl-public Store objects with ACL allowing read for anyone. --acl-private Store objects with default ACL allowing access for you only. --acl-grant=PERMISSION:EMAIL or USER_CANONICAL_ID Grant stated permission to a given amazon user. Permission is one of: read, write, read_acp, write_acp, full_control, all --acl-revoke=PERMISSION:USER_CANONICAL_ID Revoke stated permission for a given amazon user. Permission is one of: read, write, read_acp, write_acp, full_control, all -D NUM, --restore-days=NUM Number of days to keep restored file available (only for 'restore' command). --restore-priority=RESTORE_PRIORITY Priority for restoring files from S3 Glacier (only for 'restore' command). Choices available: bulk, standard, expedited --delete-removed Delete destination objects with no corresponding source file [sync] --no-delete-removed Don't delete destination objects. --delete-after Perform deletes AFTER new uploads when delete-removed is enabled [sync] --delay-updates *OBSOLETE* Put all updated files into place at end [sync] --max-delete=NUM Do not delete more than NUM files. [del] and [sync] --limit=NUM Limit number of objects returned in the response body (only for [ls] and [la] commands) --add-destination=ADDITIONAL_DESTINATIONS Additional destination for parallel uploads, in addition to last arg. May be repeated. --delete-after-fetch Delete remote objects after fetching to local file (only for [get] and [sync] commands). -p, --preserve Preserve filesystem attributes (mode, ownership, timestamps). Default for [sync] command. 
    --no-preserve
        Don't store FS attributes
    --exclude=GLOB
        Filenames and paths matching GLOB will be excluded from sync
    --exclude-from=FILE
        Read --exclude GLOBs from FILE
    --rexclude=REGEXP
        Filenames and paths matching REGEXP (regular expression) will be excluded from sync
    --rexclude-from=FILE
        Read --rexclude REGEXPs from FILE
    --include=GLOB
        Filenames and paths matching GLOB will be included even if previously excluded by one of --(r)exclude(-from) patterns
    --include-from=FILE
        Read --include GLOBs from FILE
    --rinclude=REGEXP
        Same as --include but uses REGEXP (regular expression) instead of GLOB
    --rinclude-from=FILE
        Read --rinclude REGEXPs from FILE
    --files-from=FILE
        Read list of source-file names from FILE. Use - to read from stdin.
    --region=REGION, --bucket-location=REGION
        Region to create bucket in. As of now the regions are: us-east-1, us-west-1, us-west-2, eu-west-1, eu-central-1, ap-northeast-1, ap-southeast-1, ap-southeast-2, sa-east-1
    --host=HOSTNAME
        HOSTNAME:PORT for S3 endpoint (default: s3.amazonaws.com, alternatives such as s3-eu-west-1.amazonaws.com). You should also set --host-bucket.
    --host-bucket=HOST_BUCKET
        DNS-style bucket+hostname:port template for accessing a bucket (default: %(bucket)s.s3.amazonaws.com)
    --reduced-redundancy, --rr
        Store object with 'Reduced redundancy'. Lower per-GB price. [put, cp, mv]
    --no-reduced-redundancy, --no-rr
        Store object without 'Reduced redundancy'. Higher per-GB price. [put, cp, mv]
    --storage-class=CLASS
        Store object with specified CLASS (STANDARD, STANDARD_IA, or REDUCED_REDUNDANCY). Lower per-GB price. [put, cp, mv]
    --access-logging-target-prefix=LOG_TARGET_PREFIX
        Target prefix for access logs (S3 URI) (for [cfmodify] and [accesslog] commands)
    --no-access-logging
        Disable access logging (for [cfmodify] and [accesslog] commands)
    --default-mime-type=DEFAULT_MIME_TYPE
        Default MIME-type for stored objects. Application default is binary/octet-stream.
    -M, --guess-mime-type
        Guess MIME-type of files by their extension or mime magic. Fall back to default MIME-Type as specified by --default-mime-type option
    --no-guess-mime-type
        Don't guess MIME-type and use the default type instead.
    --no-mime-magic
        Don't use mime magic when guessing MIME-type.
    -m MIME/TYPE, --mime-type=MIME/TYPE
        Force MIME-type. Override both --default-mime-type and --guess-mime-type.
    --add-header=NAME:VALUE
        Add a given HTTP header to the upload request. Can be used multiple times. For instance set 'Expires' or 'Cache-Control' headers (or both) using this option.
    --remove-header=NAME
        Remove a given HTTP header. Can be used multiple times. For instance, remove 'Expires' or 'Cache-Control' headers (or both) using this option. [modify]
    --server-side-encryption
        Specifies that server-side encryption will be used when putting objects. [put, sync, cp, modify]
    --server-side-encryption-kms-id=KMS_KEY
        Specifies the key id used for server-side encryption with AWS KMS-Managed Keys (SSE-KMS) when putting objects. [put, sync, cp, modify]
    --encoding=ENCODING
        Override autodetected terminal and filesystem encoding (character set). Autodetected: UTF-8
    --add-encoding-exts=EXTENSIONs
        Add encoding to these comma delimited extensions i.e. (css,js,html) when uploading to S3 )
    --verbatim
        Use the S3 name as given on the command line. No pre-processing, encoding, etc. Use with caution!
    --disable-multipart
        Disable multipart upload on files bigger than --multipart-chunk-size-mb
    --multipart-chunk-size-mb=SIZE
        Size of each chunk of a multipart upload. Files bigger than SIZE are automatically uploaded as multithreaded-multipart, smaller files are uploaded using the traditional method. SIZE is in Mega-Bytes, default chunk size is 15MB, minimum allowed chunk size is 5MB, maximum is 5GB.
    --list-md5
        Include MD5 sums in bucket listings (only for 'ls' command).
    -H, --human-readable-sizes
        Print sizes in human readable form (eg 1kB instead of 1234).
    --ws-index=WEBSITE_INDEX
        Name of index-document (only for [ws-create] command)
    --ws-error=WEBSITE_ERROR
        Name of error-document (only for [ws-create] command)
    --expiry-date=EXPIRY_DATE
        Indicates when the expiration rule takes effect. (only for [expire] command)
    --expiry-days=EXPIRY_DAYS
        Indicates the number of days after object creation the expiration rule takes effect. (only for [expire] command)
    --expiry-prefix=EXPIRY_PREFIX
        Identifying one or more objects with the prefix to which the expiration rule applies. (only for [expire] command)
    --progress
        Display progress meter (default on TTY).
    --no-progress
        Don't display progress meter (default on non-TTY).
    --stats
        Give some file-transfer stats.
    --enable
        Enable given CloudFront distribution (only for [cfmodify] command)
    --disable
        Disable given CloudFront distribution (only for [cfmodify] command)
    --cf-invalidate
        Invalidate the uploaded filed in CloudFront. Also see [cfinval] command.
    --cf-invalidate-default-index
        When using Custom Origin and S3 static website, invalidate the default index file.
    --cf-no-invalidate-default-index-root
        When using Custom Origin and S3 static website, don't invalidate the path to the default index file.
    --cf-add-cname=CNAME
        Add given CNAME to a CloudFront distribution (only for [cfcreate] and [cfmodify] commands)
    --cf-remove-cname=CNAME
        Remove given CNAME from a CloudFront distribution (only for [cfmodify] command)
    --cf-comment=COMMENT
        Set COMMENT for a given CloudFront distribution (only for [cfcreate] and [cfmodify] commands)
    --cf-default-root-object=DEFAULT_ROOT_OBJECT
        Set the default root object to return when no object is specified in the URL. Use a relative path, i.e. default/index.html instead of /default/index.html or s3://bucket/default/index.html (only for [cfcreate] and [cfmodify] commands)
    -v, --verbose
        Enable verbose output.
    -d, --debug
        Enable debug output.
    --version
        Show s3cmd version (2.0.2) and exit.
    -F, --follow-symlinks
        Follow symbolic links as if they are regular files
    --cache-file=FILE
        Cache FILE containing local source MD5 values
    -q, --quiet
        Silence output on stdout
    --ca-certs=CA_CERTS_FILE
        Path to SSL CA certificate FILE (instead of system default)
    --check-certificate
        Check SSL certificate validity
    --no-check-certificate
        Do not check SSL certificate validity
    --check-hostname
        Check SSL certificate hostname validity
    --no-check-hostname
        Do not check SSL certificate hostname validity
    --signature-v2
        Use AWS Signature version 2 instead of newer signature methods. Helpful for S3-like systems that don't have AWS Signature v4 yet.
    --limit-rate=LIMITRATE
        Limit the upload or download speed to amount bytes per second. Amount may be expressed in bytes, kilobytes with the k suffix, or megabytes with the m suffix
    --requester-pays
        Set the REQUESTER PAYS flag for operations
    -l, --long-listing
        Produce long listing [ls]
    --stop-on-error
        stop if error in transfer
    --content-disposition=CONTENT_DISPOSITION
        Provide a Content-Disposition for signed URLs, e.g., "inline; filename=myvideo.mp4"
    --content-type=CONTENT_TYPE
        Provide a Content-Type for signed URLs, e.g., "video/mp4"

    Commands:
      Make bucket
          s3cmd mb s3://BUCKET
      Remove bucket
          s3cmd rb s3://BUCKET
      List objects or buckets
          s3cmd ls [s3://BUCKET[/PREFIX]]
      List all object in all buckets
          s3cmd la
      Put file into bucket
          s3cmd put FILE [FILE...] s3://BUCKET[/PREFIX]
      Get file from bucket
          s3cmd get s3://BUCKET/OBJECT LOCAL_FILE
      Delete file from bucket
          s3cmd del s3://BUCKET/OBJECT
      Delete file from bucket (alias for del)
          s3cmd rm s3://BUCKET/OBJECT
      Restore file from Glacier storage
          s3cmd restore s3://BUCKET/OBJECT
      Synchronize a directory tree to S3 (checks files freshness using size and md5 checksum, unless overridden by options, see below)
          s3cmd sync LOCAL_DIR s3://BUCKET[/PREFIX] or s3://BUCKET[/PREFIX] LOCAL_DIR
      Disk usage by buckets
          s3cmd du [s3://BUCKET[/PREFIX]]
      Get various information about Buckets or Files
          s3cmd info s3://BUCKET[/OBJECT]
      Copy object
          s3cmd cp s3://BUCKET1/OBJECT1 s3://BUCKET2[/OBJECT2]
      Modify object metadata
          s3cmd modify s3://BUCKET1/OBJECT
      Move object
          s3cmd mv s3://BUCKET1/OBJECT1 s3://BUCKET2[/OBJECT2]
      Modify Access control list for Bucket or Files
          s3cmd setacl s3://BUCKET[/OBJECT]
      Modify Bucket Policy
          s3cmd setpolicy FILE s3://BUCKET
      Delete Bucket Policy
          s3cmd delpolicy s3://BUCKET
      Modify Bucket CORS
          s3cmd setcors FILE s3://BUCKET
      Delete Bucket CORS
          s3cmd delcors s3://BUCKET
      Modify Bucket Requester Pays policy
          s3cmd payer s3://BUCKET
      Show multipart uploads
          s3cmd multipart s3://BUCKET [Id]
      Abort a multipart upload
          s3cmd abortmp s3://BUCKET/OBJECT Id
      List parts of a multipart upload
          s3cmd listmp s3://BUCKET/OBJECT Id
      Enable/disable bucket access logging
          s3cmd accesslog s3://BUCKET
      Sign arbitrary string using the secret key
          s3cmd sign STRING-TO-SIGN
      Sign an S3 URL to provide limited public access with expiry
          s3cmd signurl s3://BUCKET/OBJECT <expiry_epoch|+expiry_offset>
      Fix invalid file names in a bucket
          s3cmd fixbucket s3://BUCKET[/PREFIX]
      Create Website from bucket
          s3cmd ws-create s3://BUCKET
      Delete Website
          s3cmd ws-delete s3://BUCKET
      Info about Website
          s3cmd ws-info s3://BUCKET
      Set or delete expiration rule for the bucket
          s3cmd expire s3://BUCKET
      Upload a lifecycle policy for the bucket
          s3cmd setlifecycle FILE s3://BUCKET
      Get a lifecycle policy for the bucket
          s3cmd getlifecycle s3://BUCKET
      Remove a lifecycle policy for the bucket
          s3cmd dellifecycle s3://BUCKET
      List CloudFront distribution points
          s3cmd cflist
      Display CloudFront distribution point parameters
          s3cmd cfinfo [cf://DIST_ID]
      Create CloudFront distribution point
          s3cmd cfcreate s3://BUCKET
      Delete CloudFront distribution point
          s3cmd cfdelete cf://DIST_ID
      Change CloudFront distribution point parameters
          s3cmd cfmodify cf://DIST_ID
      Display CloudFront invalidation request(s) status
          s3cmd cfinvalinfo cf://DIST_ID[/INVAL_ID]

    For more information, updates and news, visit the s3cmd website:
    http://s3tools.org
    [root@ceph-deploy ~]#

Create buckets to verify permissions:

A bucket is the container that holds objects. Before uploading an object of any type, you must first create a bucket.

    [root@ceph-deploy ~]# s3cmd mb s3://mybucket
    Bucket 's3://mybucket/' created
    [root@ceph-deploy ~]# s3cmd mb s3://css
    Bucket 's3://css/' created
    [root@ceph-deploy ~]# s3cmd mb s3://images
    Bucket 's3://images/' created
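Before running s3cmd mb, a candidate name can be checked against the bucket naming rules listed earlier in this article. The following is a minimal sketch of those rules only; the function name is_valid_bucket_name is illustrative, and the gateway itself may apply rules that differ from the ones encoded here.

```python
import re

# Rules taken from the naming list earlier in the article:
# lowercase letters, digits and hyphens only; must start and end with a
# lowercase letter or digit; total length between 3 and 63 characters.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if name satisfies the naming rules encoded above.

    Dots are not in the allowed character set, so names in IP address
    format (for example 192.168.1.1) are rejected as a side effect.
    """
    return _BUCKET_NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["mybucket", "css", "images", "My-Bucket", "ab", "192.168.1.1"]:
        print(candidate, is_valid_bucket_name(candidate))
```

Global uniqueness cannot be checked locally; that rule is only enforced when the bucket is actually created.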

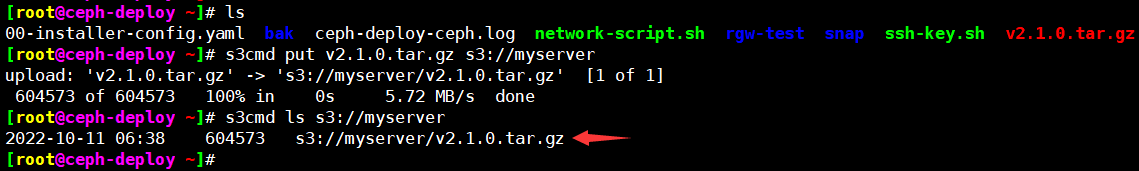

Upload test

    [root@ceph-deploy ~]# s3cmd put v2.1.0.tar.gz s3://myserver

Uploading a directory

    [root@ceph-deploy ~]# s3cmd put rgw-test s3://images
    ERROR: Parameter problem: Use --recursive to upload a directory: rgw-test
    [root@ceph-deploy ~]# s3cmd put --recursive rgw-test s3://images
    upload: 'rgw-test/bdlg.jfif' -> 's3://images/rgw-test/bdlg.jfif'  [1 of 7]
     337895 of 337895   100% in    0s     3.31 MB/s  done
    upload: 'rgw-test/nqtgls1.jpg' -> 's3://images/rgw-test/nqtgls1.jpg'  [2 of 7]
     6895166 of 6895166   100% in    0s    16.91 MB/s  done
    upload: 'rgw-test/nqtgls2.jpg' -> 's3://images/rgw-test/nqtgls2.jpg'  [3 of 7]
     5984471 of 5984471   100% in    0s    11.75 MB/s  done
    upload: 'rgw-test/nqtgls3.jpg' -> 's3://images/rgw-test/nqtgls3.jpg'  [4 of 7]
     6923235 of 6923235   100% in    0s    16.50 MB/s  done
    upload: 'rgw-test/nqtgls4.jpg' -> 's3://images/rgw-test/nqtgls4.jpg'  [5 of 7]
     4622031 of 4622031   100% in    0s    16.90 MB/s  done
    upload: 'rgw-test/yzyc1.jpg' -> 's3://images/rgw-test/yzyc1.jpg'  [6 of 7]
     3979758 of 3979758   100% in    0s    12.51 MB/s  done
    upload: 'rgw-test/yzyc2.jpg' -> 's3://images/rgw-test/yzyc2.jpg'  [7 of 7]
     3469897 of 3469897   100% in    0s    17.89 MB/s  done
    [root@ceph-deploy ~]# s3cmd ls s3://images
                           DIR   s3://images/jpg/
                           DIR   s3://images/rgw-test/
    [root@ceph-deploy ~]# s3cmd ls s3://images/rgw-test/    # list the uploaded files
    2022-10-11 06:45    337895   s3://images/rgw-test/bdlg.jfif
    2022-10-11 06:45   6895166   s3://images/rgw-test/nqtgls1.jpg
    2022-10-11 06:45   5984471   s3://images/rgw-test/nqtgls2.jpg
    2022-10-11 06:45   6923235   s3://images/rgw-test/nqtgls3.jpg
    2022-10-11 06:45   4622031   s3://images/rgw-test/nqtgls4.jpg
    2022-10-11 06:45   3979758   s3://images/rgw-test/yzyc1.jpg
    2022-10-11 06:45   3469897   s3://images/rgw-test/yzyc2.jpg

Download test

    [root@ceph-deploy ~]# s3cmd get s3://myserver/v2.1.0.tar.gz /tmp
    download: 's3://myserver/v2.1.0.tar.gz' -> '/tmp/v2.1.0.tar.gz'  [1 of 1]
     604573 of 604573   100% in    0s    22.83 MB/s  done
    [root@ceph-deploy ~]# ll -h /tmp/
    total 640K
    drwxrwxrwt 12 root root 4.0K Oct 11 14:41 ./
    drwxr-xr-x 19 root root 4.0K Jul 25 23:49 ../
    drwxrwxrwt  2 root root 4.0K Oct 11 00:30 .ICE-unix/
    drwxrwxrwt  2 root root 4.0K Oct 11 00:30 .Test-unix/
    drwxrwxrwt  2 root root 4.0K Oct 11 00:30 .X11-unix/
    drwxrwxrwt  2 root root 4.0K Oct 11 00:30 .XIM-unix/
    drwxrwxrwt  2 root root 4.0K Oct 11 00:30 .font-unix/
    drwx------  3 root root 4.0K Oct 11 00:31 snap.lxd/
    drwx------  3 root root 4.0K Oct 11 00:31 systemd-private-19527b40241540bda8cd30087e14e984-ModemManager.service-cOQ69f/
    drwx------  3 root root 4.0K Oct 11 00:31 systemd-private-19527b40241540bda8cd30087e14e984-systemd-logind.service-l4f6pi/
    drwx------  3 root root 4.0K Oct 11 00:31 systemd-private-19527b40241540bda8cd30087e14e984-systemd-resolved.service-wJO8ei/
    drwx------  3 root root 4.0K Oct 11 00:30 systemd-private-19527b40241540bda8cd30087e14e984-systemd-timesyncd.service-VCgbig/
    -rw-r--r--  1 root root 591K Oct 11 06:38 v2.1.0.tar.gz

Verifying data integrity

The MD5 checksum of the downloaded copy matches that of the original file:

    [root@ceph-deploy ~]# md5sum v2.1.0.tar.gz
    2e5b96084fe616c248b639c06a3b5e3c  v2.1.0.tar.gz
    [root@ceph-deploy ~]# md5sum /tmp/v2.1.0.tar.gz
    2e5b96084fe616c248b639c06a3b5e3c  /tmp/v2.1.0.tar.gz
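The same md5sum comparison can be scripted when many files need checking. This is a small sketch using only the Python standard library; the helper names md5_of_file and same_content are illustrative, and the two paths in the usage comment are the ones from the session above.

```python
import hashlib


def md5_of_file(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        # iter() with a sentinel stops when read() returns an empty bytes object
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_content(path_a, path_b):
    """Return True if both files have the same MD5 digest."""
    return md5_of_file(path_a) == md5_of_file(path_b)


# Example (paths from the session above):
#   same_content("v2.1.0.tar.gz", "/tmp/v2.1.0.tar.gz")
```

Reading in chunks keeps memory use flat for large archives, which is the main reason not to call read() on the whole file.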

Deleting a file

    [root@ceph-deploy ~]# s3cmd ls s3://images/
                           DIR   s3://images/jpg/
                           DIR   s3://images/rgw-test/
    [root@ceph-deploy ~]# s3cmd ls s3://images/jpg/
    2022-10-11 06:18   6895166   s3://images/jpg/nqtgls1.jpg
    2022-10-11 06:19   5984471   s3://images/jpg/nqtgls2.jpg
    [root@ceph-deploy ~]# s3cmd rm s3://images/jpg/nqtgls2.jpg
    delete: 's3://images/jpg/nqtgls2.jpg'
    [root@ceph-deploy ~]# s3cmd ls s3://images/jpg/
    2022-10-11 06:18   6895166   s3://images/jpg/nqtgls1.jpg

Managing buckets with a script

    [root@ceph-test-02 ~]# cat ceph-rgw-client.py

    # coding: utf-8
    # python 3.8
    from boto3.session import Session  # newer boto3 releases
    import os


    class objectclient():
        def __init__(self):
            access_key = '04XUIEYRYTDUXC332R7H'
            secret_key = 'uysQEmdYcp9UCv56UHimnMNKQwdiGFfuv4TsMPWy'
            self.session = Session(aws_access_key_id=access_key, aws_secret_access_key=secret_key)
            #self.url = 'http://172.16.88.112:7480'
            self.s3_client = self.session.client('s3', endpoint_url='http://172.16.88.200:9900')

        def get_bucket(self):
            # List the names of all buckets owned by this user
            buckets = [bucket['Name'] for bucket in self.s3_client.list_buckets()['Buckets']]
            print(buckets)
            return buckets

        def create_bucket(self):
            # Create a bucket by name; buckets are private by default
            #self.s3_client.create_bucket(Bucket='mytest1111111111')
            # Create a bucket with an explicit ACL
            # Valid ACLs: "private", "public-read", "public-read-write", "authenticated-read"
            self.s3_client.create_bucket(Bucket='20221011', ACL='public-read')

        def upload(self):
            file_list = os.listdir("./videos/")
            for name in file_list:
                print(name)
                resp = self.s3_client.put_object(
                    ContentType='video/mp4',
                    Bucket="video",      # target bucket
                    Key="%s" % name,     # object key after upload
                    Body=open("./videos/%s" % name, 'rb').read()
                )
                print(resp)
            #return resp
            #resp = self.s3_client.put_object(
            #    Bucket="20221011",   # target bucket
            #    Key='xxxxxx.txt',    # object key after upload
            #    Body=open("xxx/xxx/xxx.txt", 'rb').read()
            #)
            #print(resp)
            #return resp

        def download(self):
            resp = self.s3_client.get_object(
                Bucket='test-s3cmd',
                Key='xxxxxxxx.tar.gz'
            )
            with open('./xxx.tar.gz', 'wb') as f:  # local file to save to
                f.write(resp['Body'].read())


    if __name__ == "__main__":
        # boto3
        s3_boto3 = objectclient()
        # s3_boto3.create_bucket()   # create a bucket
        s3_boto3.get_bucket()        # list buckets
        # s3_boto3.upload()          # upload files
        # s3_boto3.download()        # download a file

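Uncomment the corresponding call in the main block to create a bucket, list buckets, upload files, or download a file:

    # s3_boto3.create_bucket()   # create a bucket
    # s3_boto3.get_bucket()      # list buckets
    # s3_boto3.upload()          # upload files
    # s3_boto3.download()        # download a file

Then run the script:

    [root@ceph-deploy ~]# python3 ceph-rgw-client.py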
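The upload method in the script reads each file fully into memory and hard-codes the content type. As a possible alternative, the sketch below walks a local directory and calls boto3's upload_file, which streams from disk, while mirroring the key layout that s3cmd put --recursive produced earlier (directory name as key prefix). The helper names iter_uploads and upload_directory are hypothetical, and s3_client is assumed to be a boto3 S3 client such as self.s3_client from the script.

```python
import mimetypes
import os


def iter_uploads(local_dir):
    """Yield (local_path, object_key) for every file under local_dir.

    Keys are prefixed with the directory's own name, e.g. the file
    rgw-test/a.jpg becomes the key 'rgw-test/a.jpg'.
    """
    base = os.path.basename(os.path.normpath(local_dir))
    for root, _dirs, files in os.walk(local_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            rel = os.path.relpath(path, local_dir)
            # Object keys always use forward slashes, whatever the local OS uses
            yield path, "/".join([base] + rel.split(os.sep))


def upload_directory(s3_client, bucket, local_dir):
    """Upload every file under local_dir; return the list of keys written."""
    uploaded = []
    for path, key in iter_uploads(local_dir):
        # Guess the MIME type from the extension; fall back to a generic type
        content_type = mimetypes.guess_type(path)[0] or "binary/octet-stream"
        s3_client.upload_file(path, bucket, key,
                              ExtraArgs={"ContentType": content_type})
        uploaded.append(key)
    return uploaded


# Example, assuming `client` was created as in the script above:
#   upload_directory(client, "video", "./videos")
```

Taking the client as a parameter keeps the helper independent of how credentials and the endpoint are configured.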

5. Example: allowing anonymous access to Ceph object storage

5.1 Authorization overview

https://docs.aws.amazon.com/zh_cn/AmazonS3/latest/userguide/example-bucket-policies.html

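- Resource: the target of the grant, such as buckets and objects. Required.

- Action: the operations being granted, such as CreateBucket, DeleteObject, GetObject, and PutObject. Required.

- Effect: whether the statement allows (Allow) or denies (Deny) the operation. Access to all resources is denied by default. Required.

- Principal: the account the grant applies to. Required.

- Condition: the condition under which the policy takes effect, such as the TLS version used for access. Optional; may be omitted.

    "Condition": {
      "NumericLessThan": {
        "s3:TlsVersion": 1.2
      }
    }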

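The elements above map directly onto a JSON document. As a rough sketch, a policy can be assembled in Python and then written to a file for s3cmd setpolicy. The helper name build_policy and its parameters are illustrative and not part of any library; the Version string is the one used in the policy files in this article.

```python
import json


def build_policy(bucket, actions, principal="*", effect="Allow", condition=None):
    """Build a single-statement bucket policy covering all objects in bucket."""
    statement = {
        "Effect": effect,
        "Principal": principal,
        "Action": list(actions),
        "Resource": [f"arn:aws:s3:::{bucket}/*"],
    }
    # Condition is optional, so it is only emitted when supplied
    if condition is not None:
        statement["Condition"] = condition
    return {"Version": "2012-10-17", "Statement": [statement]}


policy = build_policy("images", ["s3:GetObject"])
print(json.dumps(policy, indent=2))
```

Generating the file this way avoids hand-editing JSON when the same policy shape is needed for several buckets.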
5.2 Available actions

https://docs.aws.amazon.com/zh_cn/AmazonS3/latest/API/API_Operations.html

s3:AbortMultipartUpload

s3:CompleteMultipartUpload

s3:CopyObject

s3:CreateBucket

s3:CreateMultipartUpload

s3:DeleteBucket

s3:DeleteBucketAnalyticsConfiguration

s3:DeleteBucketCors

s3:DeleteBucketEncryption

s3:DeleteBucketIntelligentTieringConfiguration

s3:DeleteBucketInventoryConfiguration

s3:DeleteBucketLifecycle

s3:DeleteBucketMetricsConfiguration

s3:DeleteBucketOwnershipControls

s3:DeleteBucketPolicy

s3:DeleteBucketReplication

s3:DeleteBucketTagging

s3:DeleteBucketWebsite

s3:DeleteObject

s3:DeleteObjects

s3:DeleteObjectTagging

s3:DeletePublicAccessBlock

s3:GetBucketAccelerateConfiguration

s3:GetBucketAcl

s3:GetBucketAnalyticsConfiguration

s3:GetBucketCors

s3:GetBucketEncryption

s3:GetBucketIntelligentTieringConfiguration

s3:GetBucketInventoryConfiguration

s3:GetBucketLifecycle

s3:GetBucketLifecycleConfiguration

s3:GetBucketLocation

s3:GetBucketLogging

s3:GetBucketMetricsConfiguration

s3:GetBucketNotification

s3:GetBucketNotificationConfiguration

s3:GetBucketOwnershipControls

s3:GetBucketPolicy

s3:GetBucketPolicyStatus

s3:GetBucketReplication

s3:GetBucketRequestPayment

s3:GetBucketTagging

s3:GetBucketVersioning

s3:GetBucketWebsite

s3:GetObject

s3:GetObjectAcl

s3:GetObjectAttributes

s3:GetObjectLegalHold

s3:GetObjectLockConfiguration

s3:GetObjectRetention

s3:GetObjectTagging

s3:GetObjectTorrent

s3:GetPublicAccessBlock

s3:HeadBucket

s3:HeadObject

s3:ListBucketAnalyticsConfigurations

s3:ListBucketIntelligentTieringConfigurations

s3:ListBucketInventoryConfigurations

s3:ListBucketMetricsConfigurations

s3:ListBuckets

s3:ListMultipartUploads

s3:ListObjects

s3:ListObjectsV2

s3:ListObjectVersions

s3:ListParts

s3:PutBucketAccelerateConfiguration

s3:PutBucketAcl

s3:PutBucketAnalyticsConfiguration

s3:PutBucketCors

s3:PutBucketEncryption

s3:PutBucketIntelligentTieringConfiguration

s3:PutBucketInventoryConfiguration

s3:PutBucketLifecycle

s3:PutBucketLifecycleConfiguration

s3:PutBucketLogging

s3:PutBucketMetricsConfiguration

s3:PutBucketNotification

s3:PutBucketNotificationConfiguration

s3:PutBucketOwnershipControls

s3:PutBucketPolicy

s3:PutBucketReplication

s3:PutBucketRequestPayment

s3:PutBucketTagging

s3:PutBucketVersioning

s3:PutBucketWebsite

s3:PutObject

s3:PutObjectAcl

s3:PutObjectLegalHold

s3:PutObjectLockConfiguration

s3:PutObjectRetention

s3:PutObjectTagging

s3:PutPublicAccessBlock

s3:RestoreObject

s3:SelectObjectContent

s3:UploadPart

s3:UploadPartCopy

s3:WriteGetObjectResponse

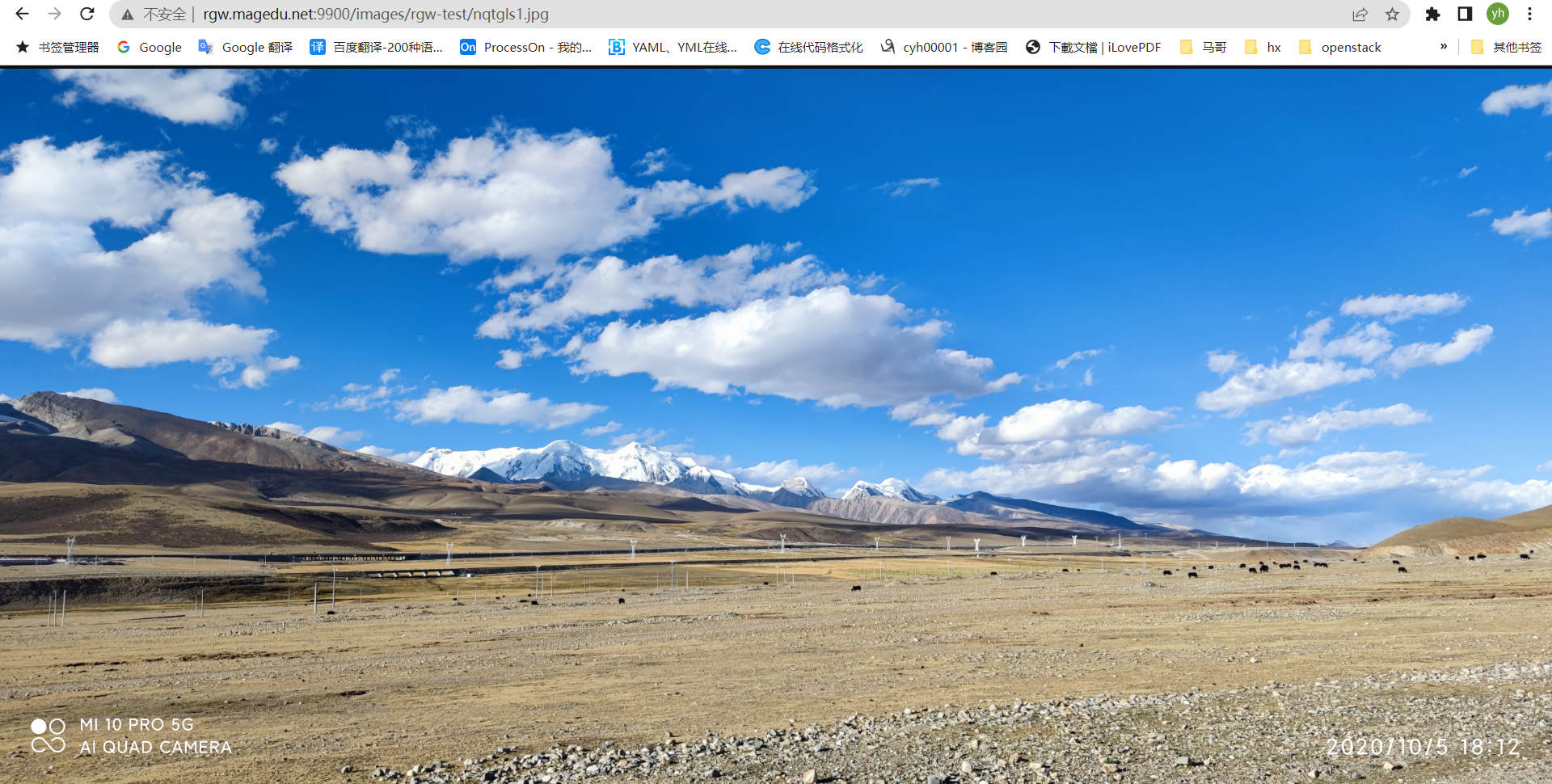

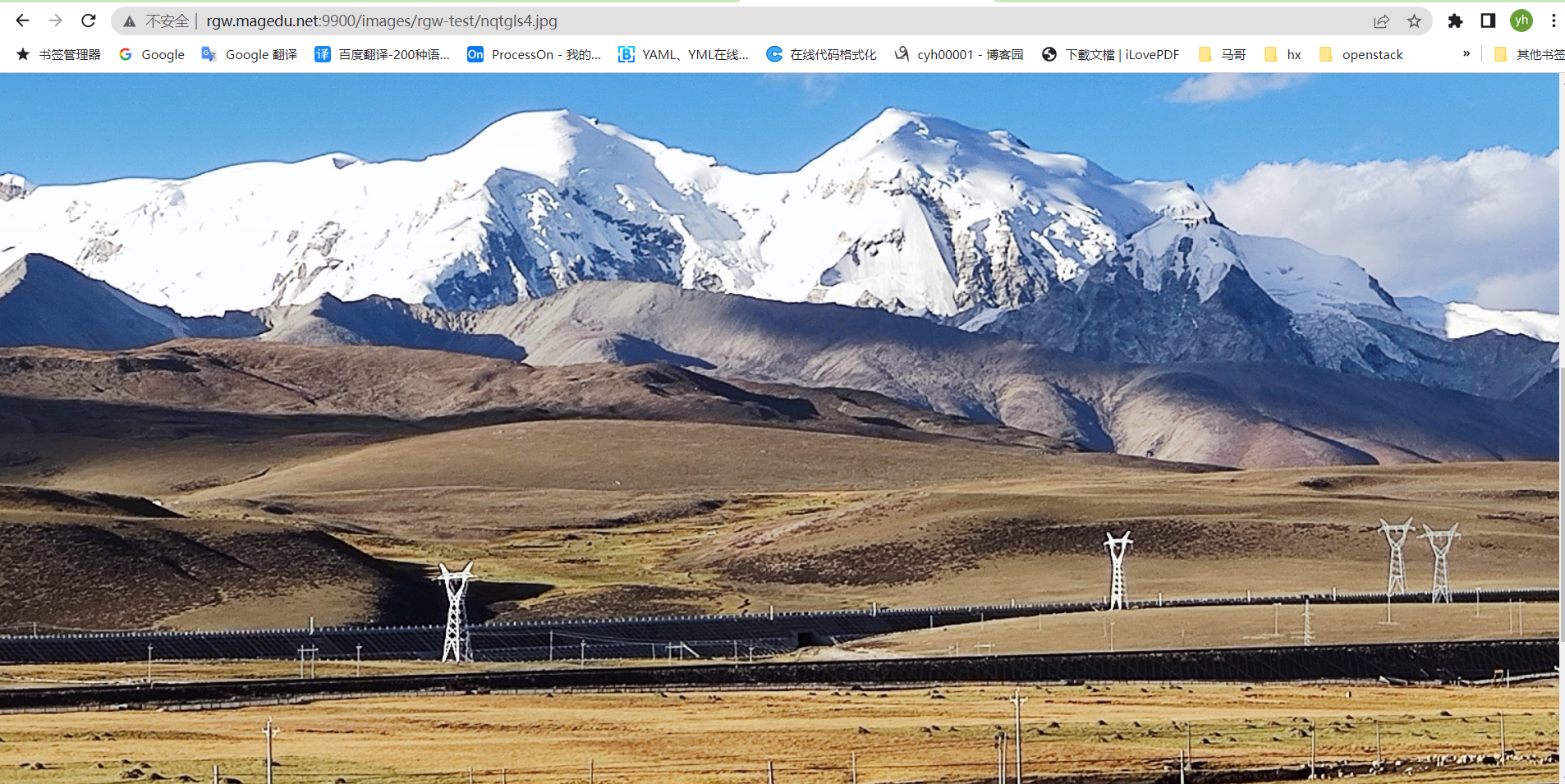

Granting anonymous users the GetObject permission:

    [root@ceph-deploy ~]# cat images-bucket-single_policy
    {
      "Version": "2012-10-17",
      "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": [
          "arn:aws:s3:::images/*"
        ]
      }]
    }
    [root@ceph-deploy ~]# s3cmd setpolicy images-bucket-single_policy s3://images
    s3://images/: Policy updated

    [root@ceph-deploy ~]# s3cmd ls s3://images/rgw-test/
    2022-10-11 06:45    337895   s3://images/rgw-test/bdlg.jfif
    2022-10-11 06:45   6895166   s3://images/rgw-test/nqtgls1.jpg
    2022-10-11 06:45   5984471   s3://images/rgw-test/nqtgls2.jpg
    2022-10-11 06:45   6923235   s3://images/rgw-test/nqtgls3.jpg
    2022-10-11 06:45   4622031   s3://images/rgw-test/nqtgls4.jpg
    2022-10-11 06:45   3979758   s3://images/rgw-test/yzyc1.jpg
    2022-10-11 06:45   3469897   s3://images/rgw-test/yzyc2.jpg

Access test
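One way to run this check without a browser is a plain unauthenticated HTTP GET. The sketch below builds a path-style URL (endpoint/bucket/key) and fetches it with the standard library. The endpoint http://172.16.88.200:9900 is taken from the boto3 script earlier and is only an assumption about where the gateway is reachable; object_url and fetch_anonymous are illustrative names.

```python
from urllib.parse import quote
from urllib.request import urlopen


def object_url(endpoint, bucket, key):
    """Build a path-style URL: <endpoint>/<bucket>/<key>."""
    # quote() keeps '/' by default, so key prefixes stay readable
    return f"{endpoint.rstrip('/')}/{quote(bucket)}/{quote(key)}"


def fetch_anonymous(endpoint, bucket, key, timeout=10):
    """GET an object with no credentials; return (HTTP status, body bytes)."""
    with urlopen(object_url(endpoint, bucket, key), timeout=timeout) as resp:
        return resp.status, resp.read()


# Assumed endpoint; replace with the address of your own gateway.
print(object_url("http://172.16.88.200:9900", "images", "rgw-test/bdlg.jfif"))
```

If the policy is in effect, fetch_anonymous should return status 200 for objects in the bucket; without the policy, urlopen raises an HTTPError for the denied request.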

5.3 Testing with the video bucket

    [root@ceph-deploy ~]# s3cmd mb s3://video    # create the video bucket
    Bucket 's3://video/' created
    [root@ceph-deploy ~]# mkdir video-test

    # Test videos to upload
    [root@ceph-deploy ~]# ll -h video-test/
    total 2.7M
    drwxr-xr-x  2 root root 4.0K Oct 11 20:52 ./
    drwx------ 10 root root 4.0K Oct 11 20:51 ../
    -rw-r--r--  1 root root 1.8M Oct 11 20:52 410a50fef9df7f06cbbe623f3057569f.mp4
    -rw-r--r--  1 root root 816K Oct 11 20:52 f9fc43f9c45b22f446657da53bf338a4.mp4
    [root@ceph-deploy ~]# s3cmd put --recursive video-test s3://video    # upload the whole directory
    upload: 'video-test/410a50fef9df7f06cbbe623f3057569f.mp4' -> 's3://video/video-test/410a50fef9df7f06cbbe623f3057569f.mp4'  [1 of 2]
     1882656 of 1882656   100% in    0s    12.75 MB/s  done
    upload: 'video-test/f9fc43f9c45b22f446657da53bf338a4.mp4' -> 's3://video/video-test/f9fc43f9c45b22f446657da53bf338a4.mp4'  [2 of 2]
     835506 of 835506   100% in    0s    11.89 MB/s  done
    [root@ceph-deploy ~]# s3cmd ls s3://video/video-test/    # list the uploaded files
    2022-10-11 12:53   1882656   s3://video/video-test/410a50fef9df7f06cbbe623f3057569f.mp4
    2022-10-11 12:53    835506   s3://video/video-test/f9fc43f9c45b22f446657da53bf338a4.mp4
    [root@ceph-deploy ~]# vi video-bucket-single_policy    # create the anonymous-access policy JSON for the video bucket
    [root@ceph-deploy ~]# cat video-bucket-single_policy
    {
      "Version": "2012-10-17",
      "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": [
          "arn:aws:s3:::video/*"
        ]
      }]
    }
    [root@ceph-deploy ~]# s3cmd setpolicy video-bucket-single_policy s3://video    # grant anonymous access to the video bucket
    s3://video/: Policy updated
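It may help to see what this statement does and does not cover. The sketch below is a deliberately simplified model of statement matching, not how the gateway evaluates policies: it ignores Principal, Condition, and explicit Deny, and it approximates wildcard matching with fnmatch. The function name statement_allows is hypothetical.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """Policy fields may be a single string or a list of strings."""
    return [value] if isinstance(value, str) else list(value)


def statement_allows(statement, action, resource_arn):
    """Simplified check: is this action on this ARN matched by an Allow statement?"""
    if statement.get("Effect") != "Allow":
        return False
    # Action names are compared case-insensitively in this model
    action_ok = any(fnmatchcase(action.lower(), pattern.lower())
                    for pattern in _as_list(statement["Action"]))
    resource_ok = any(fnmatchcase(resource_arn, pattern)
                      for pattern in _as_list(statement["Resource"]))
    return action_ok and resource_ok


video_statement = {
    "Effect": "Allow",
    "Principal": "*",
    "Action": "s3:GetObject",
    "Resource": ["arn:aws:s3:::video/*"],
}

# Reading an object under the bucket is covered by the statement
print(statement_allows(video_statement, "s3:GetObject",
                       "arn:aws:s3:::video/video-test/f9fc43f9c45b22f446657da53bf338a4.mp4"))  # True
# Listing the bucket itself is not: neither the action nor the ARN matches
print(statement_allows(video_statement, "s3:ListBucket", "arn:aws:s3:::video"))  # False
```

In other words, under this policy anonymous users can fetch an object whose key they already know, but the statement does not grant them a listing of the bucket.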