Using Ceph: A Detailed Guide to RBD

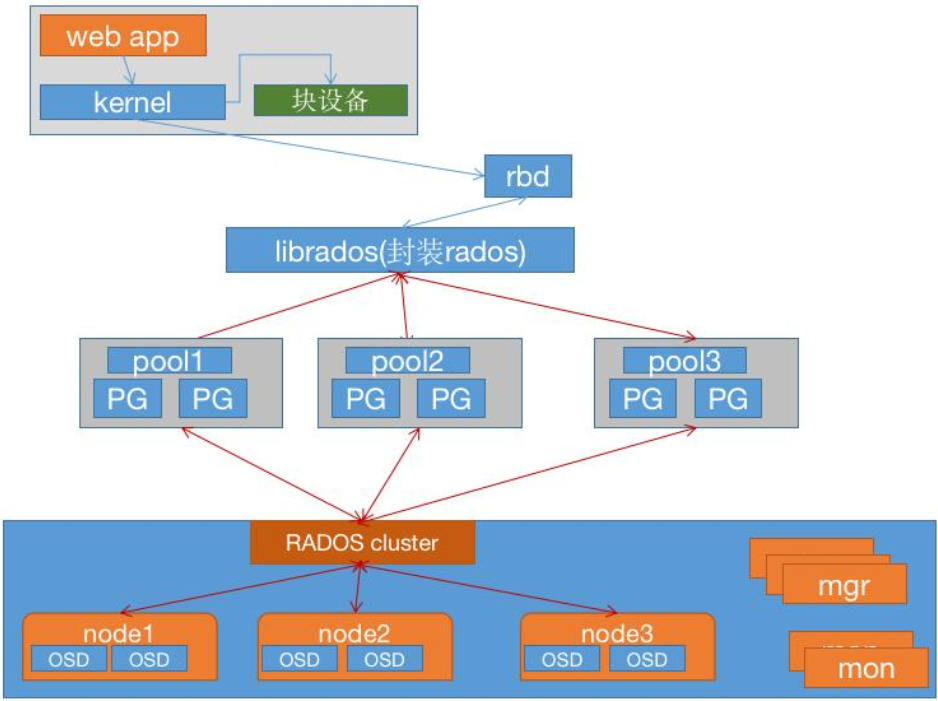

1. RBD Architecture and Overview

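Ceph can provide RADOSGW (the object storage gateway), RBD (block storage) and CephFS (file system storage) at the same time. RBD is short for RADOS Block Device, and RBD block storage is one of the most commonly used storage types. An RBD block device behaves like a disk and can be mounted. It supports snapshots, multiple replicas, cloning and consistency, and its data is striped across multiple OSDs in the Ceph cluster.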

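Striping automatically spreads I/O load across multiple physical disks. It splits a contiguous block of data into many small pieces and stores those pieces on different disks. Multiple processes can then access different parts of the data at the same time without contending for one disk. When the data is read sequentially, all the disks work in parallel, which gives the greatest possible I/O parallelism and therefore very good performance.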
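The following minimal shell sketch shows generic RAID-0-style striping. It is not Ceph's placement algorithm: RBD maps image offsets to RADOS objects, and CRUSH then places those objects on OSDs. The function name `stripe_location` and the 64 KiB stripe unit in the example are hypothetical. The sketch cuts a byte range into fixed-size stripe units and assigns the units round-robin across N disks.

```shell
#!/usr/bin/env bash
# Generic RAID-0-style striping, for illustration only (not Ceph's CRUSH logic).
# stripe_location OFFSET STRIPE_UNIT NDISKS -> prints "disk offset_on_that_disk"
stripe_location() {
  local offset=$1 unit=$2 ndisks=$3
  local chunk=$(( offset / unit ))   # index of the stripe unit holding this byte
  local disk=$(( chunk % ndisks ))   # units are assigned to disks round-robin
  local row=$(( chunk / ndisks ))    # number of full rounds before this unit
  local within=$(( offset % unit ))  # position inside the stripe unit
  echo "$disk $(( row * unit + within ))"
}
```

For example, `stripe_location 262154 65536 4` prints `0 65546`. Byte 262154 falls in the fifth 64 KiB unit (index 4). That unit wraps around to disk 0 and sits at offset 65546 on that disk. Consecutive units land on different disks, so a long sequential read keeps all four disks busy.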

2. Creating a Storage Pool

#Create the storage pool

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool create rbd-data1 32 32
pool 'rbd-data1' created

#Verify the storage pool
cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool ls
device_health_metrics
myrbd1
.rgw.root
default.rgw.log
default.rgw.control
default.rgw.meta
cephfs-metadata
cephfs-data
mypool
rbd-data1

#Enable the rbd application on the storage pool

osd pool application enable <poolname> <app> {--yes-i-really-mean-it} enable use of an application <app> [cephfs,rbd,rgw] on pool <poolname>

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool application enable rbd-data1 rbd
enabled application 'rbd' on pool 'rbd-data1'
cephadmin@ceph-deploy:~/ceph-cluster$ rbd pool init -p rbd-data1   #initialize the pool for RBD
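The three commands above can be wrapped in one function so the pool setup is repeatable. This is a sketch. The names `setup_rbd_pool` and `run` and the `DRY_RUN` variable are hypothetical. The default of 32 PGs mirrors the example above and is not a sizing recommendation. If `DRY_RUN` is set, the function prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# setup_rbd_pool POOL [PG_NUM] -> create the pool, tag it for rbd, initialise it
setup_rbd_pool() {
  local pool=$1 pg=${2:-32}
  run ceph osd pool create "$pool" "$pg" "$pg"      # pg_num and pgp_num
  run ceph osd pool application enable "$pool" rbd  # mark the pool as used by RBD
  run rbd pool init -p "$pool"                      # initialise RBD metadata in the pool
}
```

Usage: `DRY_RUN=1 setup_rbd_pool rbd-data1` previews the three commands. Run it without `DRY_RUN` to execute them.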

Create images

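An RBD pool cannot be used as a block device directly. You first create images in it as needed and use each image as a block device. The rbd command creates, lists and deletes the images in a pool. It also handles management tasks such as cloning images, creating snapshots, rolling an image back to a snapshot and listing snapshots. For example, the following commands create two images, data-img1 and data-img2, in the RBD pool rbd-data1.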

Create two images

cephadmin@ceph-deploy:~/ceph-cluster$ rbd create data-img1 --size 3G --pool rbd-data1 --image-format 2 --image-feature layering
cephadmin@ceph-deploy:~/ceph-cluster$ rbd create data-img2 --size 5G --pool rbd-data1 --image-format 2 --image-feature layering

Verify the images

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1
data-img1
data-img2

List details of multiple images

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l
NAME       SIZE   PARENT  FMT  PROT  LOCK
data-img1  3 GiB          2
data-img2  5 GiB          2

View detailed image information

cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img2 --pool rbd-data1 info
rbd image 'data-img2':
    size 5 GiB in 1280 objects
    order 22 (4 MiB objects)    #object size: each object is 2^22/1024/1024 = 4 MiB
    snapshot_count: 0
    id: 8b3f28c53db4    #image ID
    block_name_prefix: rbd_data.8b3f28c53db4    #name prefix of the 1280 objects that make up the 5 GiB above
    format: 2    #image format version
    features: layering    #features; layering supports layered snapshots with copy-on-write
    op_features:
    flags:
    create_timestamp: Sun Oct 9 13:59:31 2022
    access_timestamp: Sun Oct 9 13:59:31 2022
    modify_timestamp: Sun Oct 9 13:59:31 2022
cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering
    op_features:
    flags:
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022
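The `size`, `order` and `block_name_prefix` fields above are related. The sketch below assumes the default layout without striping v2, where each 4 MiB object holds one contiguous 4 MiB range of the image. Under that assumption, the object count is the image size divided by 2^order, rounded up. The object holding a given byte is its offset shifted right by `order`. The helper names are hypothetical. The `<block_name_prefix>.<16 hex digits>` naming pattern for format 2 data objects is also an assumption here. Check it with `rados -p rbd-data1 ls`, keeping in mind that RBD is thin-provisioned, so only objects that have been written actually exist.

```shell
#!/usr/bin/env bash
# rbd_objects SIZE_BYTES ORDER -> number of RADOS objects backing the image
rbd_objects() {
  local size=$1 order=$2
  local obj=$(( 1 << order ))            # object size in bytes (order 22 -> 4 MiB)
  echo $(( (size + obj - 1) / obj ))     # ceiling division
}

# rbd_object_name PREFIX OFFSET ORDER -> name of the object holding byte OFFSET
# (assumes the default layout: no striping v2, stripe unit == object size)
rbd_object_name() {
  printf '%s.%016x\n' "$1" $(( $2 >> $3 ))
}
```

With the values above, `rbd_objects 5368709120 22` gives 1280 for data-img2 and `rbd_objects 3221225472 22` gives 768 for data-img1. Both match the `info` output.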

Display image information in JSON format

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l --format json --pretty-format
[
    {
        "image": "data-img1",
        "id": "8b3958172543",
        "size": 3221225472,
        "format": 2
    },
    {
        "image": "data-img2",
        "id": "8b3f28c53db4",
        "size": 5368709120,
        "format": 2
    }
]

Other image features

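cephadmin@ceph-deploy:~/ceph-cluster$ rbd help feature enable
usage: rbd feature enable [--pool <pool>] [--namespace <namespace>]
                          [--image <image>]
                          [--journal-splay-width <journal-splay-width>]
                          [--journal-object-size <journal-object-size>]
                          [--journal-pool <journal-pool>]
                          <image-spec> <features> [<features> ...]

Enable the specified image feature.

Positional arguments
  <image-spec>              image specification
                            (example: [<pool-name>/[<namespace>/]]<image-name>)
  <features>                image features
                            [exclusive-lock, object-map, journaling]

Optional arguments
  -p [ --pool ] arg         pool name
  --namespace arg           namespace name
  --image arg               image name
  --journal-splay-width arg number of active journal objects
  --journal-object-size arg size of journal objects [4K <= size <= 64M]
  --journal-pool arg        pool for journal objects

#Feature overview
layering: supports layered image snapshots, used for snapshots and copy-on-write. You can snapshot an image, protect the snapshot and then clone new images from it. Parent and child images share object data through copy-on-write (COW).
striping: supports striping v2. It is similar to RAID 0, except that in Ceph the data is spread across different objects. It can improve performance for workloads with a lot of sequential reads and writes.
exclusive-lock: supports an exclusive lock, which restricts an image to one client at a time.
object-map: supports an object map (depends on exclusive-lock). It speeds up data import/export and used-space accounting. When enabled, it keeps a bitmap of all of the image's objects that records whether each object actually exists, which can speed up I/O in some scenarios.
fast-diff: quickly computes the differences between an image and its snapshots (depends on object-map).
deep-flatten: supports flattening snapshots. In snapshot management it is used to resolve dependencies between snapshots.
journaling: records every data modification in a journal, from which data can be recovered (depends on exclusive-lock). Enabling it increases disk I/O.
Features enabled by default in Jewel: layering, exclusive-lock, object-map, fast-diff, deep-flatten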
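The dependencies in the list above set the order in which features must be enabled. Disabling uses the reverse order. The following sketch encodes only the dependencies named in that list. `feature_requires` and `feature_chain` are hypothetical helpers, not rbd subcommands.

```shell
#!/usr/bin/env bash
# Direct prerequisite of each feature, as listed in the feature overview above.
feature_requires() {
  case "$1" in
    object-map|journaling) echo exclusive-lock ;;
    fast-diff)             echo object-map ;;
  esac
}

# feature_chain FEATURE -> prints FEATURE and all its prerequisites, one per
# line, prerequisites first (a valid order for `rbd feature enable`).
feature_chain() {
  local dep
  dep=$(feature_requires "$1")
  if [ -n "$dep" ]; then
    feature_chain "$dep"   # recurse so the deepest prerequisite is printed first
  fi
  echo "$1"
}
```

`feature_chain fast-diff` prints exclusive-lock, object-map and fast-diff in that order. Piping the output through `tac` gives an order in which the features can be disabled.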

Enabling image features

cephadmin@ceph-deploy:~/ceph-cluster$ rbd feature enable exclusive-lock --pool rbd-data1 --image data-img1
cephadmin@ceph-deploy:~/ceph-cluster$ rbd feature enable object-map --pool rbd-data1 --image data-img1
cephadmin@ceph-deploy:~/ceph-cluster$ rbd feature enable fast-diff --pool rbd-data1 --image data-img1   #on this newer Ceph release, enabling object-map also enabled fast-diff, so this command fails
rbd: failed to update image features: (22) Invalid argument
2022-10-09T14:12:29.206+0800 7f0c25f3d340 -1 librbd::Operations: one or more requested features are already enabled
cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering, exclusive-lock, object-map, fast-diff
    op_features:
    flags: object map invalid, fast diff invalid
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022
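The `flags: object map invalid, fast diff invalid` line above shows that the object map of this existing image is marked invalid after the feature is enabled. The sketch below enables the features in dependency order and then runs `rbd object-map rebuild` to regenerate the map. It assumes neither feature is enabled yet, because rbd refuses to enable a feature twice, as the error above shows. `enable_object_map`, `run` and `DRY_RUN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# enable_object_map POOL IMAGE
enable_object_map() {
  local spec="$1/$2"
  run rbd feature enable "$spec" exclusive-lock  # prerequisite of object-map
  run rbd feature enable "$spec" object-map      # fast-diff came along with it on the release above
  run rbd object-map rebuild "$spec"             # rebuild the map that was flagged invalid
}
```

After the rebuild, run `rbd info` again. The `flags:` line should no longer report the object map as invalid.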

Disabling image features

cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering, exclusive-lock, object-map, fast-diff
    op_features:
    flags: object map invalid, fast diff invalid
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022

#Disable a feature of a specific image in a specific pool

cephadmin@ceph-deploy:~/ceph-cluster$ rbd feature disable fast-diff --pool rbd-data1 --image data-img1

#Verify the image features

cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering, exclusive-lock    #object-map and fast-diff are gone
    op_features:
    flags:
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022

3. Configuring a Client to Use RBD

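Mount RBD on clients and verify that it works: first with the admin account on a CentOS client, then with a regular account on an Ubuntu client.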

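To mount and use Ceph RBD, a client needs the Ceph client package ceph-common. ceph-common is not in the default CentOS yum repositories, so a separate yum repository has to be configured.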

Configure the yum repository:
#yum install epel-release
#yum install https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/ceph-release-1-1.el7.noarch.rpm -y

#yum install ceph-common   #install the Ceph client

Mounting and using RBD on the client with the admin account

Test with the admin account:

#Copy the authentication files from the deploy server:

cephadmin@ceph-deploy:~/ceph-cluster$ sudo scp ceph.conf ceph.client.admin.keyring root@172.16.88.60:/etc/ceph

Map the image on the client

[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img1
rbd: sysfs write failed
RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable rbd-data1/data-img1 object-map fast-diff".
In some cases useful info is found in syslog - try "dmesg | tail".
rbd: map failed: (6) No such device or address   #the client kernel does not support some features; disable object-map on the Ceph admin node
cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering, exclusive-lock, object-map, fast-diff
    op_features:
    flags: object map invalid, fast diff invalid
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022

#On the admin node, disable the object-map feature of image data-img1

cephadmin@ceph-deploy:~/ceph-cluster$ rbd feature disable object-map --pool rbd-data1 --image data-img1
cephadmin@ceph-deploy:~/ceph-cluster$ rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 8b3958172543
    block_name_prefix: rbd_data.8b3958172543
    format: 2
    features: layering, exclusive-lock
    op_features:
    flags:
    create_timestamp: Sun Oct 9 13:59:19 2022
    access_timestamp: Sun Oct 9 13:59:19 2022
    modify_timestamp: Sun Oct 9 13:59:19 2022
[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img1
/dev/rbd0
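The map failure above comes from features that the client kernel does not support. The sketch below compares an image's feature list with the set a kernel supports and prints what would need to be disabled. The supported set is an input you must supply, because it varies by kernel version. In the example it is read off the error message for this CentOS 7 kernel, which asked only for object-map and fast-diff to be disabled. `unsupported_features` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# unsupported_features IMAGE_FEATURES KERNEL_SUPPORTED
# Both arguments are comma-separated feature lists. Spaces are ignored, so the
# "features:" line from `rbd info` can be pasted as-is. Prints the image
# features missing from the supported list, space-separated.
unsupported_features() {
  local image=${1// /} supported=${2// /} f out=""
  local IFS=,
  for f in $image; do
    case ",$supported," in
      *",$f,"*) ;;              # the kernel client supports this feature
      *) out="$out $f" ;;       # must be disabled before `rbd map`
    esac
  done
  echo "${out# }"
}
```

Usage, with the supported set assumed from the error message above:
`rbd feature disable rbd-data1/data-img1 $(unsupported_features "layering, exclusive-lock, object-map, fast-diff" "layering,exclusive-lock")`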

[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img2

/dev/rbd1

[root@ceph-client-test ~]#

Verify the images on the client

[root@ceph-client-test ~]# lsblk
NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sr0              11:0    1 1024M  0 rom
vda             252:0    0   50G  0 disk
├─vda1          252:1    0  500M  0 part /boot
└─vda2          252:2    0   49G  0 part
  └─centos-root 253:0    0   49G  0 lvm  /
rbd0            251:0    0    3G  0 disk
rbd1            251:16   0    5G  0 disk

Format the disks on the client and mount them

Format the RBD devices and mount them on the client:

[root@ceph-client-test ~]# mkfs.xfs /dev/rbd0
Discarding blocks...Done.
meta-data=/dev/rbd0              isize=512    agcount=8, agsize=98304 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=786432, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph-client-test ~]# mkfs.xfs /dev/rbd1
Discarding blocks...Done.
meta-data=/dev/rbd1              isize=512    agcount=8, agsize=163840 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=1310720, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph-client-test ~]# mkdir /data /data1 -p
[root@ceph-client-test ~]# mount /dev/rbd0 /data
[root@ceph-client-test ~]# mount /dev/rbd1 /data1
[root@ceph-client-test ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 3.9G     0  3.9G   0% /dev
tmpfs                    3.9G     0  3.9G   0% /dev/shm
tmpfs                    3.9G  8.6M  3.9G   1% /run
tmpfs                    3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/mapper/centos-root   49G  2.1G   47G   5% /
/dev/vda1                497M  186M  311M  38% /boot
tmpfs                    783M     0  783M   0% /run/user/0
/dev/rbd0                3.0G   33M  3.0G   2% /data
/dev/rbd1                5.0G   33M  5.0G   1% /data1
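The format-and-mount steps above can be made safe to re-run. The sketch below calls `mkfs.xfs` only when `blkid` finds no existing filesystem signature on the device, so running it again on an already formatted image skips the format step. `format_and_mount`, `run` and `DRY_RUN` are hypothetical names. XFS is used because it is the filesystem chosen above.

```shell
#!/usr/bin/env bash
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# format_and_mount DEVICE MOUNTPOINT
format_and_mount() {
  local dev=$1 mnt=$2
  # blkid prints the filesystem type, or nothing if the device is unformatted
  if [ -z "$(blkid -o value -s TYPE "$dev" 2>/dev/null)" ]; then
    run mkfs.xfs "$dev"
  fi
  run mkdir -p "$mnt"
  run mount "$dev" "$mnt"
}
```

Usage: `format_and_mount /dev/rbd0 /data`. Device names such as `/dev/rbd0` depend on mapping order, so check `rbd showmapped` first.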

Verify data writes from the client

Install Docker, create a MySQL container and verify that the container's data is written to /data, the path where the RBD image is mounted.

[root@ceph-client-test ~]# yum install -y yum-utils device-mapper-persistent-data lvm2
[root@ceph-client-test ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@ceph-client-test ~]# yum install -y docker-ce
[root@ceph-client-test ~]# systemctl enable --now docker
[root@ceph-client-test ~]# docker run --name mysql-server --restart=unless-stopped -it -d -p 3306:3306 -v /data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD="123456" mysql:8.0.26
Unable to find image 'mysql:8.0.26' locally
8.0.26: Pulling from library/mysql
b380bbd43752: Pull complete
f23cbf2ecc5d: Pull complete
30cfc6c29c0a: Pull complete
b38609286cbe: Pull complete
8211d9e66cd6: Pull complete
2313f9eeca4a: Pull complete
7eb487d00da0: Pull complete
a5d2b117a938: Pull complete
1f6cb474cd1c: Pull complete
896b3fd2ab07: Pull complete
532e67ebb376: Pull complete
233c7958b33f: Pull complete
Digest: sha256:5d52dc010398db422949f079c76e98f6b62230e5b59c0bf7582409d2c85abacb
Status: Downloaded newer image for mysql:8.0.26
955082efde41ebe44519dd1ed587eda5e3fcec956eb706ae20aa7f744ae044a8
[root@ceph-client-test ~]# docker ps -a
CONTAINER ID   IMAGE          COMMAND                  CREATED          STATUS          PORTS                                                  NAMES
955082efde41   mysql:8.0.26   "docker-entrypoint.s…"   17 seconds ago   Up 12 seconds   0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp   mysql-server
[root@ceph-client-test ~]# netstat -tnlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      894/sshd
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      1119/master
tcp        0      0 0.0.0.0:3306            0.0.0.0:*               LISTEN      3297/docker-proxy
tcp6       0      0 :::22                   :::*                    LISTEN      894/sshd
tcp6       0      0 ::1:25                  :::*                    LISTEN      1119/master
tcp6       0      0 :::3306                 :::*                    LISTEN      3304/docker-proxy
[root@ceph-client-test ~]# ll -h /data
total 188M
-rw-r----- 1 polkitd input   56 Oct  9 15:52 auto.cnf
-rw------- 1 polkitd input 1.7K Oct  9 15:52 ca-key.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 ca.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 client-cert.pem
-rw------- 1 polkitd input 1.7K Oct  9 15:52 client-key.pem
-rw-r----- 1 polkitd input 192K Oct  9 15:52 #ib_16384_0.dblwr
-rw-r----- 1 polkitd input 8.2M Oct  9 15:52 #ib_16384_1.dblwr
-rw-r----- 1 polkitd input  12M Oct  9 15:52 ibdata1
-rw-r----- 1 polkitd input  48M Oct  9 15:52 ib_logfile0
-rw-r----- 1 polkitd input  48M Oct  9 15:52 ib_logfile1
-rw-r----- 1 polkitd input  12M Oct  9 15:52 ibtmp1
drwxr-x--- 2 polkitd input  187 Oct  9 15:52 #innodb_temp
drwxr-x--- 2 polkitd input  143 Oct  9 15:52 mysql
-rw-r----- 1 polkitd input  19M Oct  9 15:52 mysql.ibd
drwxr-x--- 2 polkitd input 8.0K Oct  9 15:52 performance_schema
-rw------- 1 polkitd input 1.7K Oct  9 15:52 private_key.pem
-rw-r--r-- 1 polkitd input  452 Oct  9 15:52 public_key.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 server-cert.pem
-rw------- 1 polkitd input 1.7K Oct  9 15:52 server-key.pem
-rw-r----- 1 polkitd input  16M Oct  9 15:52 undo_001
-rw-r----- 1 polkitd input  16M Oct  9 15:52 undo_002
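Docker bind-mounts /data whether or not the RBD image is actually mounted there. If the `mount` step failed, MySQL would silently write to the local root filesystem instead. The sketch below checks the mount source with `findmnt` and can be run before `docker run`. `is_rbd_device` and `require_rbd_mount` are hypothetical helpers, and the `/dev/rbdN` pattern assumes kernel-mapped RBD devices as in this walkthrough.

```shell
#!/usr/bin/env bash
# is_rbd_device SOURCE -> exit status 0 if SOURCE names a kernel RBD device
# (/dev/rbd0, /dev/rbd1, ...), non-zero otherwise.
is_rbd_device() {
  case "$1" in
    /dev/rbd[0-9]*) return 0 ;;
    *)              return 1 ;;
  esac
}

# require_rbd_mount MOUNTPOINT -> fails unless MOUNTPOINT is backed by RBD
require_rbd_mount() {
  local src
  src=$(findmnt -n -o SOURCE "$1") || { echo "$1 is not a mount point" >&2; return 1; }
  is_rbd_device "$src" || { echo "$1 is on $src, not RBD" >&2; return 1; }
}
```

Usage: `require_rbd_mount /data && docker run ... -v /data:/var/lib/mysql ...`. This starts the container only when /data is on an RBD device.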

Verify MySQL access

Test database access and create a database

[root@ceph-deploy ~]# apt-get install mysql-common mysql-client -y
[root@ceph-deploy ~]# mysql -uroot -p123456 -h172.16.88.60
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 8
Server version: 8.0.26 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
4 rows in set (0.03 sec)

mysql> use mysql;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed
mysql> show tables;
+------------------------------------------------------+
| Tables_in_mysql                                      |
+------------------------------------------------------+
| columns_priv                                         |
| component                                            |
| db                                                   |
| default_roles                                        |
| engine_cost                                          |
| func                                                 |
| general_log                                          |
| global_grants                                        |
| gtid_executed                                        |
| help_category                                        |
| help_keyword                                         |
| help_relation                                        |
| help_topic                                           |
| innodb_index_stats                                   |
| innodb_table_stats                                   |
| password_history                                     |
| plugin                                               |
| procs_priv                                           |
| proxies_priv                                         |
| replication_asynchronous_connection_failover         |
| replication_asynchronous_connection_failover_managed |
| replication_group_configuration_version              |
| replication_group_member_actions                     |
| role_edges                                           |
| server_cost                                          |
| servers                                              |
| slave_master_info                                    |
| slave_relay_log_info                                 |
| slave_worker_info                                    |
| slow_log                                             |
| tables_priv                                          |
| time_zone                                            |
| time_zone_leap_second                                |
| time_zone_name                                       |
| time_zone_transition                                 |
| time_zone_transition_type                            |
| user                                                 |
+------------------------------------------------------+
37 rows in set (0.01 sec)

mysql>

Create a database

[root@ceph-deploy ~]# mysql -uroot -p123456 -h172.16.88.60
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 9
Server version: 8.0.26 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database mydatabase;
Query OK, 1 row affected (0.08 sec)

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mydatabase         |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
5 rows in set (0.01 sec)

mysql>

[root@ceph-client-test ~]# ll -h /data/
total 194M
-rw-r----- 1 polkitd input 1.6K Oct  9 15:53 955082efde41.err
-rw-r----- 1 polkitd input   56 Oct  9 15:52 auto.cnf
-rw-r----- 1 polkitd input 3.0M Oct  9 15:53 binlog.000001
-rw-r----- 1 polkitd input  359 Oct  9 16:02 binlog.000002
-rw-r----- 1 polkitd input   32 Oct  9 15:53 binlog.index
-rw------- 1 polkitd input 1.7K Oct  9 15:52 ca-key.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 ca.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 client-cert.pem
-rw------- 1 polkitd input 1.7K Oct  9 15:52 client-key.pem
-rw-r----- 1 polkitd input 192K Oct  9 16:02 #ib_16384_0.dblwr
-rw-r----- 1 polkitd input 8.2M Oct  9 15:52 #ib_16384_1.dblwr
-rw-r----- 1 polkitd input 5.6K Oct  9 15:53 ib_buffer_pool
-rw-r----- 1 polkitd input  12M Oct  9 16:02 ibdata1
-rw-r----- 1 polkitd input  48M Oct  9 16:02 ib_logfile0
-rw-r----- 1 polkitd input  48M Oct  9 15:52 ib_logfile1
-rw-r----- 1 polkitd input  12M Oct  9 15:53 ibtmp1
drwxr-x--- 2 polkitd input  187 Oct  9 15:53 #innodb_temp
drwxr-x--- 2 polkitd input    6 Oct  9 16:02 mydatabase
drwxr-x--- 2 polkitd input  143 Oct  9 15:52 mysql
-rw-r----- 1 polkitd input  30M Oct  9 16:02 mysql.ibd
drwxr-x--- 2 polkitd input 8.0K Oct  9 15:52 performance_schema
-rw------- 1 polkitd input 1.7K Oct  9 15:52 private_key.pem
-rw-r--r-- 1 polkitd input  452 Oct  9 15:52 public_key.pem
-rw-r--r-- 1 polkitd input 1.1K Oct  9 15:52 server-cert.pem
-rw------- 1 polkitd input 1.7K Oct  9 15:52 server-key.pem
drwxr-x--- 2 polkitd input   28 Oct  9 15:52 sys
-rw-r----- 1 polkitd input  16M Oct  9 15:55 undo_001
-rw-r----- 1 polkitd input  16M Oct  9 16:02 undo_002

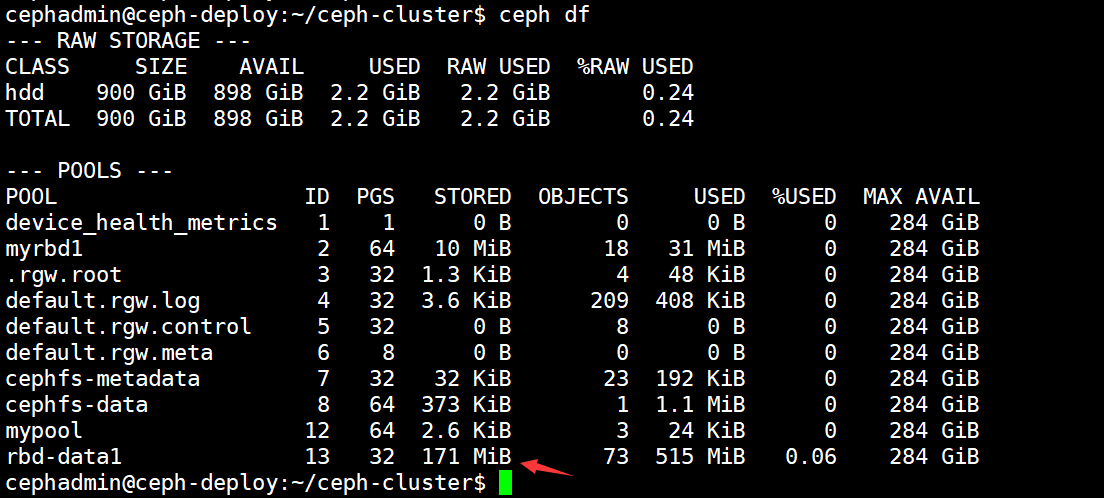

The pool's space usage shows that data has been written to RBD.

4. Mounting RBD on a Client with a Regular Account

Test mounting and using RBD from a client with a regular (non-admin) account.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth add client.cyh mon 'allow r' osd 'allow rwx pool=rbd-data1'
added key for client.cyh
cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth get client.cyh
[client.cyh]
    key = AQAbgkJjaHUMNxAAgEWwUwYqhGVnJEme3qa8EA==
    caps mon = "allow r"
    caps osd = "allow rwx pool=rbd-data1"
exported keyring for client.cyh
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-authtool --create-keyring ceph.client.cyh.keyring
creating ceph.client.cyh.keyring
cephadmin@ceph-deploy:~/ceph-cluster$ ceph auth get client.cyh -o ceph.client.cyh.keyring
exported keyring for client.cyh
cephadmin@ceph-deploy:~/ceph-cluster$ cat ceph.client.cyh.keyring
[client.cyh]
    key = AQAbgkJjaHUMNxAAgEWwUwYqhGVnJEme3qa8EA==
    caps mon = "allow r"
    caps osd = "allow rwx pool=rbd-data1"
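The four commands above (`auth add`, `auth get`, `ceph-authtool --create-keyring`, `auth get -o`) can be combined into a single `ceph auth get-or-create ... -o <file>` call. That call creates the user only if it does not already exist and writes its keyring to the file. The sketch below keeps the caps used above. The Ceph documentation also describes `profile rbd` caps (`mon 'profile rbd' osd 'profile rbd pool=rbd-data1'`) for RBD clients as an alternative. `create_rbd_user`, `run` and `DRY_RUN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# create_rbd_user NAME POOL -> create client.NAME restricted to POOL and
# write its keyring to ceph.client.NAME.keyring in the current directory
create_rbd_user() {
  local name=$1 pool=$2
  run ceph auth get-or-create "client.$name" \
    mon 'allow r' osd "allow rwx pool=$pool" \
    -o "ceph.client.$name.keyring"
}
```

Usage: `create_rbd_user cyh rbd-data1`. Then copy `ceph.conf` and the keyring to the client as shown below.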

Install the Ceph client

[root@ceph-test-02 ~]# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -
OK
[root@ceph-test-02 ~]# vim /etc/apt/sources.list
[root@ceph-test-02 ~]# cat /etc/apt/sources.list
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
# Pre-release (proposed) repository; enabling it is not recommended
# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main
[root@ceph-test-02 ~]# apt-get install ceph-common -y

Copy the regular user's authentication files to the client

cephadmin@ceph-deploy:~/ceph-cluster$ scp ceph.conf ceph.client.cyh.keyring root@172.16.88.61:/etc/ceph
The authenticity of host '172.16.88.61 (172.16.88.61)' can't be established.
ECDSA key fingerprint is SHA256:7AqksCNUj/VQZZW2kY+NX7KSFsGj1HMPJGqNEfKXHP4.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '172.16.88.61' (ECDSA) to the list of known hosts.
root@172.16.88.61's password:
ceph.conf                        100%  315   154.3KB/s   00:00
ceph.client.cyh.keyring          100%  122    97.2KB/s   00:00

Verify the permissions on the client

[root@ceph-test-02 ~]# cd /etc/ceph/
[root@ceph-test-02 ceph]# ll -h
total 20K
drwxr-xr-x   2 root root 4.0K Oct  9 16:25 ./
drwxr-xr-x 104 root root 4.0K Oct  9 16:23 ../
-rw-------   1 root root  122 Oct  9 16:25 ceph.client.cyh.keyring
-rw-r--r--   1 root root  315 Oct  9 16:25 ceph.conf
-rw-r--r--   1 root root   92 Mar 30  2022 rbdmap
[root@ceph-test-02 ceph]# ceph --user cyh -s
  cluster:
    id:     8dc32c41-121c-49df-9554-dfb7deb8c975
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 2d)
    mgr: ceph-mgr1(active, since 2d), standbys: ceph-mgr2
    mds: 1/1 daemons up
    osd: 9 osds: 9 up (since 2d), 9 in (since 4d)
    rgw: 1 daemon active (1 hosts, 1 zones)

  data:
    volumes: 1/1 healthy
    pools:   10 pools, 361 pgs
    objects: 339 objects, 199 MiB
    usage:   2.2 GiB used, 898 GiB / 900 GiB avail
    pgs:     361 active+clean

Map the RBD image

Map the RBD image with the regular user's permissions:


[root@ceph-test-02 ceph]# rbd --user cyh -p rbd-data1 map data-img2
/dev/rbd0

Verify the RBD device

[root@ceph-test-02 ceph]# fdisk -l /dev/rbd0
Disk /dev/rbd0: 5 GiB, 5368709120 bytes, 10485760 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Format and use the RBD image

[root@ceph-test-02 ceph]# mkfs.xfs /dev/rbd0
meta-data=/dev/rbd0              isize=512    agcount=8, agsize=163840 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1
data     =                       bsize=4096   blocks=1310720, imaxpct=25
         =                       sunit=16     swidth=16 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=16 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph-test-02 ceph]# mkdir /data
[root@ceph-test-02 ceph]# mount /dev/rbd0 /data/
[root@ceph-test-02 ceph]# cp /var/log/syslog /data/
[root@ceph-test-02 ceph]# ll -h /data/
total 40K
drwxr-xr-x  2 root root   20 Oct  9 16:33 ./
drwxr-xr-x 20 root root 4.0K Oct  9 16:32 ../
-rw-r-----  1 root root  35K Oct  9 16:33 syslog
[root@ceph-test-02 ceph]# df -Th
Filesystem                        Type      Size  Used Avail Use% Mounted on
udev                              devtmpfs  1.9G     0  1.9G   0% /dev
tmpfs                             tmpfs     394M  1.1M  393M   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4       48G  6.8G   39G  15% /
tmpfs                             tmpfs     2.0G     0  2.0G   0% /dev/shm
tmpfs                             tmpfs     5.0M     0  5.0M   0% /run/lock
tmpfs                             tmpfs     2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/loop0                        squashfs   62M   62M     0 100% /snap/core20/1587
/dev/loop1                        squashfs   68M   68M     0 100% /snap/lxd/21835
/dev/loop3                        squashfs   68M   68M     0 100% /snap/lxd/22753
/dev/loop2                        squashfs   64M   64M     0 100% /snap/core20/1623
/dev/loop5                        squashfs   47M   47M     0 100% /snap/snapd/16292
/dev/loop4                        squashfs   48M   48M     0 100% /snap/snapd/17029
/dev/vda2                         ext4      1.5G  106M  1.3G   8% /boot
tmpfs                             tmpfs     394M     0  394M   0% /run/user/0
/dev/rbd0                         xfs       5.0G   69M  5.0G   2% /data

#Verify the image status on the admin node

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls -p rbd-data1 -l
NAME       SIZE   PARENT  FMT  PROT  LOCK
data-img1  3 GiB          2          excl    #exclusive lock held: the image is mapped by a client
data-img2  5 GiB          2

Verify the Ceph kernel modules

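After an RBD image is mapped, the kernel automatically loads the libceph.ko module, which the rbd module uses (see the lsmod output below).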

Ubuntu

[root@ceph-test-02 ceph]# lsmod |grep ceph
libceph               327680  1 rbd
libcrc32c              16384  4 btrfs,xfs,raid456,libceph
[root@ceph-test-02 ceph]# modinfo libceph
filename:       /lib/modules/5.4.0-122-generic/kernel/net/ceph/libceph.ko
license:        GPL
description:    Ceph core library
author:         Patience Warnick <patience@newdream.net>
author:         Yehuda Sadeh <yehuda@hq.newdream.net>
author:         Sage Weil <sage@newdream.net>
srcversion:     915EC0D99CBE44982F02F3B
depends:        libcrc32c
retpoline:      Y
intree:         Y
name:           libceph
vermagic:       5.4.0-122-generic SMP mod_unload modversions
sig_id:         PKCS#7
signer:         Build time autogenerated kernel key
sig_key:        71:A4:C5:68:1B:4C:C6:E2:6D:11:37:59:EF:96:3F:B0:D9:4B:DD:F7
sig_hashalgo:   sha512
signature:      47:E5:CD:D6:5A:56:BF:99:0F:9B:44:08:6B:27:DE:5D:92:83:18:16:
                F9:44:11:E0:2E:FF:A2:04:4D:A0:87:18:86:8B:27:F6:43:F3:36:C8:
                57:1B:15:7A:C7:F6:D4:7F:EB:7F:53:A3:C4:FB:98:F1:08:0E:BA:BA:
                10:CB:75:4A:63:15:EC:DD:6A:6B:DD:EB:65:DC:88:0D:1F:7A:69:B5:
                C0:74:24:BB:1C:65:5F:53:D4:4F:68:51:BD:5F:9A:35:37:3D:C8:C0:
                05:DF:28:86:A5:2A:E3:83:BB:84:21:5D:F6:54:AA:FB:EE:30:05:AA:
                5C:A3:D1:1C:18:28:8B:7A:08:E2:A3:50:97:63:7D:B1:EE:3E:36:20:
                5B:E4:2C:38:64:6B:BD:E0:5B:19:23:79:A4:C9:85:E6:51:E6:CD:B2:
                22:D8:89:1F:E7:F7:19:68:3E:69:5B:B6:A9:1E:17:DC:CB:03:00:D7:
                AC:1E:58:77:D4:D0:B2:6A:6A:78:71:F4:EA:12:28:86:51:AD:C1:C4:
                5D:C6:B4:90:0B:28:1F:AD:AD:69:7A:34:0B:3D:75:DC:BD:DD:8C:62:
                EB:D6:99:91:C8:93:C0:E8:DD:8A:87:7F:0E:DD:62:61:27:98:DF:AF:
                96:5A:38:A1:3F:7A:99:AE:C1:9C:5C:2F:26:A8:A1:37:FD:03:DF:1B:
                34:07:02:2B:0A:0D:08:F7:D3:A3:E5:57:CE:4F:A5:0B:B1:12:CE:3E:
                FB:8C:17:30:F7:B2:03:27:97:ED:FF:9D:A3:E1:0F:D1:19:3C:11:68:
                60:CE:AD:3D:72:F1:CB:42:B6:BB:45:74:19:03:CC:05:9E:0A:FB:49:
                4A:87:61:5E:CD:76:75:21:30:72:E0:5E:86:73:B8:BD:79:F3:FC:80:
                35:C6:9F:87:F8:A8:00:D4:08:3E:C6:42:2B:C2:9A:03:22:83:E0:22:
                01:63:81:E8:D0:2C:DC:F6:5D:33:A0:4F:98:65:9F:5F:D7:7C:67:D3:
                7A:5E:7B:3E:EC:74:19:8C:77:55:62:47:A4:E4:9C:EA:06:9E:3A:4A:
                0F:94:29:27:33:C8:8E:A7:F1:E6:67:9D:C2:03:B6:75:3B:59:AA:5B:
                BB:E0:BB:0E:AB:EB:84:5C:3D:93:6C:8F:89:AE:53:F5:66:F7:2C:9F:
                E9:4A:74:12:92:5F:51:D9:CF:77:FF:BB:E7:0F:53:91:A4:AD:E7:83:
                28:10:93:FF:A0:7C:EF:65:38:5C:91:C0:91:AA:F1:6B:74:BE:05:A5:
                64:F2:FB:1F:3B:36:ED:9B:8F:83:9F:1F:CB:4A:93:A9:B9:03:9F:93:
                B1:B0:C1:0A:FF:8F:24:ED:13:E2:35:E2

CentOS

[root@ceph-client-test ~]# lsmod |grep ceph
libceph               310679  1 rbd
dns_resolver           13140  1 libceph
libcrc32c              12644  4 xfs,libceph,nf_nat,nf_conntrack
[root@ceph-client-test ~]# modinfo libceph
filename:       /lib/modules/3.10.0-1160.71.1.el7.x86_64/kernel/net/ceph/libceph.ko.xz
license:        GPL
description:    Ceph core library
author:         Patience Warnick <patience@newdream.net>
author:         Yehuda Sadeh <yehuda@hq.newdream.net>
author:         Sage Weil <sage@newdream.net>
retpoline:      Y
rhelversion:    7.9
srcversion:     F2C01C6CEEF088485EC699B
depends:        libcrc32c,dns_resolver
intree:         Y
vermagic:       3.10.0-1160.71.1.el7.x86_64 SMP mod_unload modversions
signer:         CentOS Linux kernel signing key
sig_key:        6D:A7:C2:41:B1:C9:99:25:3F:B3:B0:36:89:C0:D1:E3:BE:27:82:E4
sig_hashalgo:   sha256

5. Expanding an RBD Image

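An image can be grown. Shrinking is not recommended, because shrinking truncates the image and discards any data beyond the new size.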

#Current RBD image sizes

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls -p rbd-data1 -l
NAME       SIZE   PARENT  FMT  PROT  LOCK
data-img1  3 GiB          2          excl
data-img2  5 GiB          2

#Grow the RBD image

cephadmin@ceph-deploy:~/ceph-cluster$ rbd resize --pool rbd-data1 --image data-img2 --size 8G
Resizing image: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls -p rbd-data1 -l
NAME       SIZE   PARENT  FMT  PROT  LOCK
data-img1  3 GiB          2          excl
data-img2  8 GiB          2

Verify the image size on the client

[root@ceph-test-02 ceph]# df -h
Filesystem                         Size  Used Avail Use% Mounted on
udev                               1.9G     0  1.9G   0% /dev
tmpfs                              394M  1.1M  393M   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv   48G  6.8G   39G  15% /
tmpfs                              2.0G     0  2.0G   0% /dev/shm
tmpfs                              5.0M     0  5.0M   0% /run/lock
tmpfs                              2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/loop0                          62M   62M     0 100% /snap/core20/1587
/dev/loop1                          68M   68M     0 100% /snap/lxd/21835
/dev/loop3                          68M   68M     0 100% /snap/lxd/22753
/dev/loop2                          64M   64M     0 100% /snap/core20/1623
/dev/loop5                          47M   47M     0 100% /snap/snapd/16292
/dev/loop4                          48M   48M     0 100% /snap/snapd/17029
/dev/vda2                          1.5G  106M  1.3G   8% /boot
tmpfs                              394M     0  394M   0% /run/user/0
/dev/rbd0                          5.0G   69M  5.0G   2% /data    #the filesystem still reports 5.0G
[root@ceph-test-02 ceph]# fdisk -l /dev/rbd0
Disk /dev/rbd0: 8 GiB, 8589934592 bytes, 16777216 sectors    #the block device already reports 8 GiB
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 65536 bytes / 65536 bytes

# resize2fs /dev/rbd0    #for ext4: run on the node against the block device

# xfs_growfs /data/    #for XFS: run on the node against the mount point

[root@ceph-test-02 ceph]# xfs_growfs /data/
meta-data=/dev/rbd0              isize=512    agcount=8, agsize=163840 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1
data     =                       bsize=4096   blocks=1310720, imaxpct=25
         =                       sunit=16     swidth=16 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=16 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
data blocks changed from 1310720 to 2097152
[root@ceph-test-02 ceph]# df -h
Filesystem                         Size  Used Avail Use% Mounted on
udev                               1.9G     0  1.9G   0% /dev
tmpfs                              394M  1.1M  393M   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv   48G  6.8G   39G  15% /
tmpfs                              2.0G     0  2.0G   0% /dev/shm
tmpfs                              5.0M     0  5.0M   0% /run/lock
tmpfs                              2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/loop0                          62M   62M     0 100% /snap/core20/1587
/dev/loop1                          68M   68M     0 100% /snap/lxd/21835
/dev/loop3                          68M   68M     0 100% /snap/lxd/22753
/dev/loop2                          64M   64M     0 100% /snap/core20/1623
/dev/loop5                          47M   47M     0 100% /snap/snapd/16292
/dev/loop4                          48M   48M     0 100% /snap/snapd/17029
/dev/vda2                          1.5G  106M  1.3G   8% /boot
tmpfs                              394M     0  394M   0% /run/user/0
/dev/rbd0                          8.0G   90M  8.0G   2% /data
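The two commented commands above differ by filesystem type. XFS is grown through its mount point, while ext4 is grown through its block device. The sketch below picks the right command automatically. `grow_cmd` and `grow_fs` are hypothetical helpers. Only xfs and ext4 are handled, and any other type is reported rather than guessed. `findmnt` reads the filesystem type and source device of the mount point.

```shell
#!/usr/bin/env bash
# grow_cmd FSTYPE DEVICE MOUNTPOINT -> prints the command that grows the filesystem
grow_cmd() {
  case "$1" in
    xfs)  echo "xfs_growfs $3" ;;   # XFS is grown through its mount point
    ext4) echo "resize2fs $2" ;;    # ext4 is grown through the block device
    *)    return 1 ;;               # other filesystems: handle manually
  esac
}

# grow_fs MOUNTPOINT -> run after `rbd resize` to grow the mounted filesystem
grow_fs() {
  local mnt=$1 cmd
  if ! cmd=$(grow_cmd "$(findmnt -n -o FSTYPE "$mnt")" \
                      "$(findmnt -n -o SOURCE "$mnt")" "$mnt"); then
    echo "unsupported or unknown filesystem on $mnt" >&2
    return 1
  fi
  $cmd   # unquoted on purpose: split "tool argument" into two words
}
```

Usage on the node: `grow_fs /data`. Neither tool can shrink a filesystem, which matches the advice above not to shrink images.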

6. Automatic PV/PVC Expansion in Kubernetes

Create the StorageClass

root@easzlab-deploy:~/jiege-k8s/20221006# vim case6-ceph-storage-class.yaml
root@easzlab-deploy:~/jiege-k8s/20221006# cat case6-ceph-storage-class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-storage-class-k8s
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"  #set to "true" to make this the default StorageClass
provisioner: kubernetes.io/rbd
parameters:
  monitors: 172.16.88.101:6789,172.16.88.102:6789,172.16.88.103:6789
  adminId: admin
  adminSecretName: ceph-secret-admin
  adminSecretNamespace: default
  pool: k8s-rbd-pool
  userId: k8s
  userSecretName: ceph-secret-k8s
allowVolumeExpansion: true  #must be enabled, otherwise PVCs and PVs cannot be expanded
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case6-ceph-storage-class.yaml
storageclass.storage.k8s.io/ceph-storage-class-k8s created
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get sc -A
NAME                     PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
ceph-storage-class-k8s   kubernetes.io/rbd            Delete          Immediate           true                   10s
hdd-rook-ceph-block      rook-ceph.rbd.csi.ceph.com   Delete          Immediate           false                  51d
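The StorageClass references two secrets, `ceph-secret-admin` and `ceph-secret-k8s`, whose creation is not shown here. It also references a Ceph user `client.k8s` and a pool `k8s-rbd-pool`, both of which must already exist. The in-tree `kubernetes.io/rbd` provisioner expects secrets of type `kubernetes.io/rbd` that hold the Ceph key under the field `key`. The sketch below assumes that layout. `create_ceph_secret`, `run` and `DRY_RUN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Print the command instead of executing it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# create_ceph_secret NAME NAMESPACE KEY
# KEY is the bare Ceph key, e.g. the output of `ceph auth get-key client.admin`.
create_ceph_secret() {
  local name=$1 ns=$2 key=$3
  run kubectl create secret generic "$name" \
    --namespace "$ns" --type kubernetes.io/rbd \
    --from-literal=key="$key"
}
```

Usage, assuming `client.k8s` already exists:
`create_ceph_secret ceph-secret-admin default "$(ceph auth get-key client.admin)"`
`create_ceph_secret ceph-secret-k8s default "$(ceph auth get-key client.k8s)"`
For this provisioner, the user secret has to be in the same namespace as the PVCs that use it, which is `default` here.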

Create the PVC and PV

root@easzlab-deploy:~/jiege-k8s/20221006# vim case7-mysql-pvc.yaml
root@easzlab-deploy:~/jiege-k8s/20221006# cat case7-mysql-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ceph-storage-class-k8s
  resources:
    requests:
      storage: '10Gi'  #initial size: 10Gi
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case7-mysql-pvc.yaml
persistentvolumeclaim/mysql-data-pvc created
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pvc -A
NAMESPACE    NAME                                       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS             AGE
default      mysql-data-pvc                             Bound    pvc-1bd0a590-e760-4d5f-af1e-5af241f80b7e   10Gi       RWO            ceph-storage-class-k8s   9s
loki-logs    loki-logs                                  Bound    pvc-e87e18c6-4356-43e3-b818-6326b24bc903   200Gi      RWO            hdd-rook-ceph-block      23d
monitoring   alertmanager-main-db-alertmanager-main-0   Bound    pvc-3281b76b-4a92-4b10-bdc0-f1ca895818d4   100Gi      RWO            hdd-rook-ceph-block      24d
monitoring   alertmanager-main-db-alertmanager-main-1   Bound    pvc-7ecd33ac-562c-4436-bdb3-6232e85fb3de   100Gi      RWO            hdd-rook-ceph-block      24d
monitoring   alertmanager-main-db-alertmanager-main-2   Bound    pvc-6a5da2e4-0d06-47c8-aea3-699c6f10c0b4   100Gi      RWO            hdd-rook-ceph-block      24d
monitoring   grafana-storage                            Bound    pvc-d094b40f-4760-4ca9-89da-ae4888d2dd1e   100Gi      RWO            hdd-rook-ceph-block      24d
monitoring   prometheus-k8s-db-prometheus-k8s-0         Bound    pvc-ac89aa69-0be8-4bf6-8239-b607b05eb1a0   100Gi      RWO            hdd-rook-ceph-block      24d
monitoring   prometheus-k8s-db-prometheus-k8s-1         Bound    pvc-69ce6cfa-ece0-49e7-82f5-3cc8d6ceedf4   100Gi      RWO            hdd-rook-ceph-block      24d
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pv -A
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                                 STORAGECLASS             REASON   AGE
pvc-1bd0a590-e760-4d5f-af1e-5af241f80b7e   10Gi       RWO            Delete           Bound    default/mysql-data-pvc                                ceph-storage-class-k8s            17s
pvc-3281b76b-4a92-4b10-bdc0-f1ca895818d4   100Gi      RWO            Delete           Bound    monitoring/alertmanager-main-db-alertmanager-main-0   hdd-rook-ceph-block               24d
pvc-69ce6cfa-ece0-49e7-82f5-3cc8d6ceedf4   100Gi      RWO            Delete           Bound    monitoring/prometheus-k8s-db-prometheus-k8s-1         hdd-rook-ceph-block               24d
pvc-6a5da2e4-0d06-47c8-aea3-699c6f10c0b4   100Gi      RWO            Delete           Bound    monitoring/alertmanager-main-db-alertmanager-main-2   hdd-rook-ceph-block               24d
pvc-7ecd33ac-562c-4436-bdb3-6232e85fb3de   100Gi      RWO            Delete           Bound    monitoring/alertmanager-main-db-alertmanager-main-1   hdd-rook-ceph-block               24d
pvc-ac89aa69-0be8-4bf6-8239-b607b05eb1a0   100Gi      RWO            Delete           Bound    monitoring/prometheus-k8s-db-prometheus-k8s-0         hdd-rook-ceph-block               24d
pvc-d094b40f-4760-4ca9-89da-ae4888d2dd1e   100Gi      RWO            Delete           Bound    monitoring/grafana-storage                            hdd-rook-ceph-block               24d
pvc-e87e18c6-4356-43e3-b818-6326b24bc903   200Gi      RWO            Delete           Bound    loki-logs/loki-logs                                   hdd-rook-ceph-block               23d

Create a test pod that mounts the PVC

root@easzlab-deploy:~/jiege-k8s/20221006# vim case8-mysql-single.yaml
root@easzlab-deploy:~/jiege-k8s/20221006# cat case8-mysql-single.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: mysql:5.6.46
          name: mysql
          env:
            # Use secret in real usage
            - name: MYSQL_ROOT_PASSWORD
              value: magedu123456
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-data-pvc # mount the PVC created above
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: mysql-service-label
  name: mysql-service
spec:
  type: NodePort
  ports:
    - name: http
      port: 3306
      protocol: TCP
      targetPort: 3306
      nodePort: 33306
  selector:
    app: mysql
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case8-mysql-single.yaml
deployment.apps/mysql created
service/mysql-service created
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pod
NAME READY STATUS RESTARTS AGE
mysql-77d55bfdd8-pwrxk 1/1 Running 0 21s
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl exec -it mysql-77d55bfdd8-pwrxk bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@mysql-77d55bfdd8-pwrxk:/# df -Th
Filesystem Type Size Used Avail Use% Mounted on
overlay overlay 48G 21G 26G 45% /
tmpfs tmpfs 64M 0 64M 0% /dev
tmpfs tmpfs 7.9G 0 7.9G 0% /sys/fs/cgroup
shm tmpfs 64M 0 64M 0% /dev/shm
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 48G 21G 26G 45% /etc/hosts
/dev/rbd1 ext4 9.8G 116M 9.7G 2% /var/lib/mysql
tmpfs tmpfs 16G 12K 16G 1% /run/secrets/kubernetes.io/serviceaccount
tmpfs tmpfs 7.9G 0 7.9G 0% /proc/acpi
tmpfs tmpfs 7.9G 0 7.9G 0% /proc/scsi
tmpfs tmpfs 7.9G 0 7.9G 0% /sys/firmware
root@mysql-77d55bfdd8-pwrxk:/#

Test online expansion of the PVC

root@easzlab-deploy:~/jiege-k8s/20221006# vim case7-mysql-pvc.yaml
root@easzlab-deploy:~/jiege-k8s/20221006# cat case7-mysql-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ceph-storage-class-k8s
  resources:
    requests:
      storage: '20Gi' # change this value by hand
root@easzlab-deploy:~/jiege-k8s/20221006#
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl apply -f case7-mysql-pvc.yaml # re-apply the PVC manifest
persistentvolumeclaim/mysql-data-pvc configured
root@easzlab-deploy:~/jiege-k8s/20221006#
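You can also raise the request in place with kubectl patch instead of editing the manifest and re-applying it. Below is a minimal sketch that assumes the PVC's StorageClass (here ceph-storage-class-k8s) allows volume expansion. The function name `pvc_resize_patch` is hypothetical, and the function only builds the patch body.

```shell
#!/bin/sh
# Hypothetical helper: print a patch body that sets a PVC's storage request.
# Assumes the PVC's StorageClass has allowVolumeExpansion: true.
pvc_resize_patch() {
  local size="$1"   # new size as a Kubernetes quantity, e.g. 20Gi
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$size"
}

# Usage against a live cluster (not run here):
#   kubectl patch pvc mysql-data-pvc -p "$(pvc_resize_patch 20Gi)"
```

Kubernetes only lets a PVC's request grow. A value smaller than the current request is rejected.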

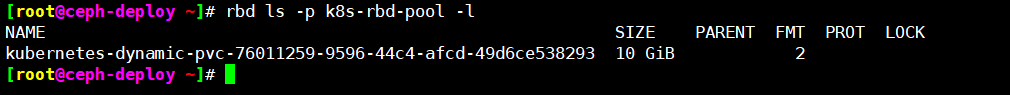

# Check whether the RBD image has been expanded automatically

[root@ceph-deploy ~]# rbd ls -p k8s-rbd-pool -l
NAME SIZE PARENT FMT PROT LOCK
kubernetes-dynamic-pvc-76011259-9596-44c4-afcd-49d6ce538293 10 GiB 2
[root@ceph-deploy ~]# rbd ls -p k8s-rbd-pool -l
NAME SIZE PARENT FMT PROT LOCK
kubernetes-dynamic-pvc-76011259-9596-44c4-afcd-49d6ce538293 20 GiB 2
[root@ceph-deploy ~]#
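A script can read the SIZE column for a single image instead of relying on a visual check of the listing. This is a minimal sketch that assumes the plain-text `rbd ls -l` layout shown above: a header line, then the image name followed by the size value and its unit. The name `rbd_image_size` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `rbd ls -l` output on stdin and print the SIZE
# column (value and unit, e.g. "20 GiB") of the image named in $1.
rbd_image_size() {
  awk -v img="$1" 'NR > 1 && $1 == img { print $2, $3 }'
}

# Usage on a host with Ceph admin access (not run here):
#   rbd ls -p k8s-rbd-pool -l |
#     rbd_image_size kubernetes-dynamic-pvc-76011259-9596-44c4-afcd-49d6ce538293
```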

# Verify that the PV and PVC have been expanded automatically

root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pv -A
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-1bd0a590-e760-4d5f-af1e-5af241f80b7e 20Gi RWO Delete Bound default/mysql-data-pvc ceph-storage-class-k8s 6m8s
pvc-3281b76b-4a92-4b10-bdc0-f1ca895818d4 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-0 hdd-rook-ceph-block 24d
pvc-69ce6cfa-ece0-49e7-82f5-3cc8d6ceedf4 100Gi RWO Delete Bound monitoring/prometheus-k8s-db-prometheus-k8s-1 hdd-rook-ceph-block 24d
pvc-6a5da2e4-0d06-47c8-aea3-699c6f10c0b4 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-2 hdd-rook-ceph-block 24d
pvc-7ecd33ac-562c-4436-bdb3-6232e85fb3de 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-1 hdd-rook-ceph-block 24d
pvc-ac89aa69-0be8-4bf6-8239-b607b05eb1a0 100Gi RWO Delete Bound monitoring/prometheus-k8s-db-prometheus-k8s-0 hdd-rook-ceph-block 24d
pvc-d094b40f-4760-4ca9-89da-ae4888d2dd1e 100Gi RWO Delete Bound monitoring/grafana-storage hdd-rook-ceph-block 24d
pvc-e87e18c6-4356-43e3-b818-6326b24bc903 200Gi RWO Delete Bound loki-logs/loki-logs hdd-rook-ceph-block 23d
root@easzlab-deploy:~/jiege-k8s/20221006#
root@easzlab-deploy:~/jiege-k8s/20221006#
root@easzlab-deploy:~/jiege-k8s/20221006# kubectl get pv -A
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-1bd0a590-e760-4d5f-af1e-5af241f80b7e 20Gi RWO Delete Bound default/mysql-data-pvc ceph-storage-class-k8s 7m2s
pvc-3281b76b-4a92-4b10-bdc0-f1ca895818d4 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-0 hdd-rook-ceph-block 24d
pvc-69ce6cfa-ece0-49e7-82f5-3cc8d6ceedf4 100Gi RWO Delete Bound monitoring/prometheus-k8s-db-prometheus-k8s-1 hdd-rook-ceph-block 24d
pvc-6a5da2e4-0d06-47c8-aea3-699c6f10c0b4 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-2 hdd-rook-ceph-block 24d
pvc-7ecd33ac-562c-4436-bdb3-6232e85fb3de 100Gi RWO Delete Bound monitoring/alertmanager-main-db-alertmanager-main-1 hdd-rook-ceph-block 24d
pvc-ac89aa69-0be8-4bf6-8239-b607b05eb1a0 100Gi RWO Delete Bound monitoring/prometheus-k8s-db-prometheus-k8s-0 hdd-rook-ceph-block 24d
pvc-d094b40f-4760-4ca9-89da-ae4888d2dd1e 100Gi RWO Delete Bound monitoring/grafana-storage hdd-rook-ceph-block 24d
pvc-e87e18c6-4356-43e3-b818-6326b24bc903 200Gi RWO Delete Bound loki-logs/loki-logs hdd-rook-ceph-block 23d
root@easzlab-deploy:~/jiege-k8s/20221006#
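After a PVC edit, the PV and the filesystem inside the pod may take a short while to report the new size. A small polling loop makes that wait explicit. This is a sketch under assumptions: `wait_for_output` is a hypothetical helper, and the usage line relies on kubectl's standard `-o jsonpath` output option.

```shell
#!/bin/sh
# Hypothetical helper: re-run a command until its output equals the expected
# string. Arguments: expected value, max attempts, delay in seconds, command...
wait_for_output() {
  local expected="$1" attempts="$2" delay="$3" i=1 out=""
  shift 3
  while [ "$i" -le "$attempts" ]; do
    out="$("$@")" || true          # keep polling even if the command fails once
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"               # wait only when another attempt follows
    fi
  done
  echo "gave up waiting for '$expected' (last output: '$out')" >&2
  return 1
}

# Usage (not run here):
#   wait_for_output 20Gi 30 2 kubectl get pv pvc-1bd0a590-e760-4d5f-af1e-5af241f80b7e \
#     -o jsonpath='{.spec.capacity.storage}'
```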

# Verify that the RBD-backed volume inside the pod has been expanded automatically

root@easzlab-deploy:~/jiege-k8s/20221006# kubectl exec -it mysql-77d55bfdd8-pwrxk bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@mysql-77d55bfdd8-pwrxk:/# df -Th
Filesystem Type Size Used Avail Use% Mounted on
overlay overlay 48G 21G 26G 45% /
tmpfs tmpfs 64M 0 64M 0% /dev
tmpfs tmpfs 7.9G 0 7.9G 0% /sys/fs/cgroup
shm tmpfs 64M 0 64M 0% /dev/shm
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 48G 21G 26G 45% /etc/hosts
/dev/rbd1 ext4 20G 116M 20G 1% /var/lib/mysql
tmpfs tmpfs 16G 12K 16G 1% /run/secrets/kubernetes.io/serviceaccount
tmpfs tmpfs 7.9G 0 7.9G 0% /proc/acpi
tmpfs tmpfs 7.9G 0 7.9G 0% /proc/scsi
tmpfs tmpfs 7.9G 0 7.9G 0% /sys/firmware
root@mysql-77d55bfdd8-pwrxk:/#

7. Expanding an existing PVC

Modify the existing StorageClass so that it sets allowVolumeExpansion: true

root@easzlab-deploy:~# vim hdd-sc.yaml
root@easzlab-deploy:~# cat hdd-sc.yaml
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: hdd-rook-ceph-block
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 2
  deviceClass: hdd
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: hdd-rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph
  pool: hdd-rook-ceph-block
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: xfs
reclaimPolicy: Delete
allowVolumeExpansion: true
root@easzlab-deploy:~#
root@easzlab-deploy:~# kubectl apply -f hdd-sc.yaml

Find the RBD image that backs the PV, then resize the image manually (the rbd commands run in the rook-ceph-tools pod)

root@easzlab-deploy:~# kubectl get pv pvc-e87e18c6-4356-43e3-b818-6326b24bc903 -oyaml |grep imageName
      imageName: csi-vol-26fb7ed3-37c2-11ed-951b-7a7c371bc34e
root@easzlab-deploy:~#
[rook@rook-ceph-tools-74f48bf875-7q86r /]$ rbd ls -p hdd-rook-ceph-block -l
NAME SIZE PARENT FMT PROT LOCK
csi-vol-0302b19e-3d40-11ed-91a2-122dbe21808f 50 GiB 2
csi-vol-1884ba5d-376f-11ed-951b-7a7c371bc34e 150 GiB 2
csi-vol-1c86bc9a-376c-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-26fb7ed3-37c2-11ed-951b-7a7c371bc34e 200 GiB 2
csi-vol-2cb371ad-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-2cd13e5d-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-2cf02bad-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-315a5cf7-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-3177941d-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-74985e43-3d6f-11ed-91a2-122dbe21808f 50 GiB 2
csi-vol-d4614a25-3d62-11ed-91a2-122dbe21808f 200 GiB 2
[rook@rook-ceph-tools-74f48bf875-7q86r /]$ rbd resize --pool hdd-rook-ceph-block --image csi-vol-26fb7ed3-37c2-11ed-951b-7a7c371bc34e --size 210G
Resizing image: 100% complete...done.
[rook@rook-ceph-tools-74f48bf875-7q86r /]$ rbd ls -p hdd-rook-ceph-block -l
NAME SIZE PARENT FMT PROT LOCK
csi-vol-0302b19e-3d40-11ed-91a2-122dbe21808f 50 GiB 2
csi-vol-1884ba5d-376f-11ed-951b-7a7c371bc34e 150 GiB 2
csi-vol-1c86bc9a-376c-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-26fb7ed3-37c2-11ed-951b-7a7c371bc34e 210 GiB 2
csi-vol-2cb371ad-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-2cd13e5d-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-2cf02bad-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-315a5cf7-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-3177941d-376f-11ed-951b-7a7c371bc34e 100 GiB 2
csi-vol-74985e43-3d6f-11ed-91a2-122dbe21808f 50 GiB 2
csi-vol-d4614a25-3d62-11ed-91a2-122dbe21808f 200 GiB 2
[rook@rook-ceph-tools-74f48bf875-7q86r /]$
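The `grep imageName` lookup can be scripted. Below is a minimal sketch: `pv_image_name` is a hypothetical helper that reads `kubectl get pv <name> -o yaml` output on stdin and prints the first `imageName:` value. On a live cluster, `kubectl get pv <name> -o jsonpath='{.spec.csi.volumeAttributes.imageName}'` reads the same field directly; the field path matches the PV YAML shown further below.

```shell
#!/bin/sh
# Hypothetical helper: print the RBD image name from PV YAML on stdin
# (the spec.csi.volumeAttributes.imageName field set by ceph-csi).
pv_image_name() {
  awk '$1 == "imageName:" { print $2; exit }'
}

# Usage (not run here). The first command runs where kubectl works; the
# rbd command runs inside the rook-ceph-tools pod, as in the transcript.
#   img=$(kubectl get pv pvc-e87e18c6-4356-43e3-b818-6326b24bc903 -o yaml | pv_image_name)
#   rbd resize --pool hdd-rook-ceph-block --image "$img" --size 210G
```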

Edit the PV and PVC so that their sizes match the resized image (see the fields marked in the output below)

root@easzlab-deploy:~# kubectl get pv pvc-e87e18c6-4356-43e3-b818-6326b24bc903 -oyaml
apiVersion: v1
kind: PersistentVolume
metadata:
  annotations:
    pv.kubernetes.io/provisioned-by: rook-ceph.rbd.csi.ceph.com
  creationTimestamp: "2022-09-19T02:24:13Z"
  finalizers:
  - kubernetes.io/pv-protection
  name: pvc-e87e18c6-4356-43e3-b818-6326b24bc903
  resourceVersion: "16829144"
  uid: 9ab7cfae-dccc-4ab2-a632-e11194f5e98b
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 210Gi # change this
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: loki-logs
    namespace: loki-logs
    resourceVersion: "11080574"
    uid: e87e18c6-4356-43e3-b818-6326b24bc903
  csi:
    driver: rook-ceph.rbd.csi.ceph.com
    fsType: xfs
    nodeStageSecretRef:
      name: rook-csi-rbd-node
      namespace: rook-ceph
    volumeAttributes:
      clusterID: rook-ceph
      imageFeatures: layering
      imageFormat: "2"
      imageName: csi-vol-26fb7ed3-37c2-11ed-951b-7a7c371bc34e
      journalPool: hdd-rook-ceph-block
      pool: hdd-rook-ceph-block
      storage.kubernetes.io/csiProvisionerIdentity: 1660911673386-8081-rook-ceph.rbd.csi.ceph.com
    volumeHandle: 0001-0009-rook-ceph-0000000000000003-26fb7ed3-37c2-11ed-951b-7a7c371bc34e
  persistentVolumeReclaimPolicy: Delete
  storageClassName: hdd-rook-ceph-block
  volumeMode: Filesystem
status:
  phase: Bound
root@easzlab-deploy:~#
root@easzlab-deploy:~# kubectl get pvc -n loki-logs loki-logs -oyaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"annotations":{},"name":"loki-logs","namespace":"loki-logs"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"200Gi"}},"storageClassName":"hdd-rook-ceph-block"}}
    pv.kubernetes.io/bind-completed: "yes"
    pv.kubernetes.io/bound-by-controller: "yes"
    volume.beta.kubernetes.io/storage-provisioner: rook-ceph.rbd.csi.ceph.com
    volume.kubernetes.io/storage-provisioner: rook-ceph.rbd.csi.ceph.com
  creationTimestamp: "2022-09-19T02:24:13Z"
  finalizers:
  - kubernetes.io/pvc-protection
  name: loki-logs
  namespace: loki-logs
  resourceVersion: "16829291"
  uid: e87e18c6-4356-43e3-b818-6326b24bc903
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 210Gi # change this
  storageClassName: hdd-rook-ceph-block
  volumeMode: Filesystem
  volumeName: pvc-e87e18c6-4356-43e3-b818-6326b24bc903
status:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 210Gi # change this
  phase: Bound
root@easzlab-deploy:~#
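In this procedure the image, the PV and the PVC are resized separately, so their sizes have to agree. Kubernetes `Gi` is a binary unit (2^30 bytes). The `G` suffix of `rbd resize --size` is binary too, which the resize above confirms: `--size 210G` is listed as `210 GiB`. So `210G` on the Ceph side matches `210Gi` in Kubernetes, whereas a Kubernetes `210G` (no `i`) would be decimal and therefore smaller. The helpers below make the comparison explicit. Their names are hypothetical, and they handle binary suffixes only.

```shell
#!/bin/sh
# Hypothetical helpers: convert sizes to bytes so the RBD image size and the
# PV/PVC size can be compared. Only binary suffixes are supported.

# Kubernetes quantity with a Ki/Mi/Gi/Ti suffix -> bytes.
k8s_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *Ti) echo $(( ${1%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
    *) echo "unsupported quantity: $1" >&2; return 1 ;;
  esac
}

# rbd --size value with an M/G/T suffix (binary units) -> bytes.
rbd_bytes() {
  case "$1" in
    *M) echo $(( ${1%M} * 1024 * 1024 )) ;;
    *G) echo $(( ${1%G} * 1024 * 1024 * 1024 )) ;;
    *T) echo $(( ${1%T} * 1024 * 1024 * 1024 * 1024 )) ;;
    *) echo "unsupported size: $1" >&2; return 1 ;;
  esac
}
```

For example, `k8s_bytes 210Gi` and `rbd_bytes 210G` both print 225485783040.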

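Delete the pod manually. When it is recreated and the volume is mounted again, the filesystem is expanded automatically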

root@easzlab-deploy:~# kubectl get pod -n loki-logs
NAME READY STATUS RESTARTS AGE
loki-logs-0 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-279hd 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-2ckcb 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-8h95x 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-dcqdr 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-n5pc5 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-rwcp4 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-s7tfb 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-wknd2 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-wlb9r 1/1 Running 1 (10d ago) 24d
root@easzlab-deploy:~# kubectl delete pod -n loki-logs loki-logs-0
pod "loki-logs-0" deleted
root@easzlab-deploy:~#
root@easzlab-deploy:~# kubectl get pod -n loki-logs
NAME READY STATUS RESTARTS AGE
loki-logs-0 1/1 Running 0 15m
loki-logs-promtail-279hd 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-2ckcb 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-8h95x 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-dcqdr 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-n5pc5 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-rwcp4 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-s7tfb 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-wknd2 1/1 Running 1 (10d ago) 24d
loki-logs-promtail-wlb9r 1/1 Running 1 (10d ago) 24d
root@easzlab-deploy:~# kubectl exec -it -n loki-logs loki-logs-0 sh # open a shell in the pod to check the new size
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
/ $ df -Th
Filesystem Type Size Used Available Use% Mounted on
overlay overlay 47.6G 20.2G 25.1G 45% /
tmpfs tmpfs 64.0M 0 64.0M 0% /dev
tmpfs tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 47.6G 20.2G 25.1G 45% /tmp
/dev/rbd2 xfs 209.9G 1.5G 208.4G 1% /data # now expanded to 210G
tmpfs tmpfs 15.3G 4.0K 15.3G 0% /etc/loki
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 47.6G 20.2G 25.1G 45% /etc/hosts
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 47.6G 20.2G 25.1G 45% /dev/termination-log
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 47.6G 20.2G 25.1G 45% /etc/hostname
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 47.6G 20.2G 25.1G 45% /etc/resolv.conf
shm tmpfs 64.0M 0 64.0M 0% /dev/shm
tmpfs tmpfs 15.3G 12.0K 15.3G 0% /run/secrets/kubernetes.io/serviceaccount
tmpfs tmpfs 7.8G 0 7.8G 0% /proc/acpi
tmpfs tmpfs 64.0M 0 64.0M 0% /proc/kcore
tmpfs tmpfs 64.0M 0 64.0M 0% /proc/keys
tmpfs tmpfs 64.0M 0 64.0M 0% /proc/timer_list
tmpfs tmpfs 64.0M 0 64.0M 0% /proc/sched_debug
tmpfs tmpfs 7.8G 0 7.8G 0% /proc/scsi
tmpfs tmpfs 7.8G 0 7.8G 0% /sys/firmware
/ $ ls -lh /data/
total 0
drwxrwsr-x 7 loki loki 120 Sep 19 02:28 loki
/ $ ls -lh /data/loki/ # check that the existing data is still there
total 8M
drwxrwsr-x 4 loki loki 41 Oct 13 02:56 boltdb-shipper-active
drwxrwsr-x 2 loki loki 6 Sep 27 03:28 boltdb-shipper-cache
drwxrwsr-x 2 loki loki 6 Oct 13 03:06 boltdb-shipper-compactor
drwxrwsr-x 3 loki loki 7.0M Oct 13 03:10 chunks
drwxrwsr-x 3 loki loki 47 Oct 13 03:10 wal
/ $

8. Mapping and mounting an RBD image automatically at boot

[root@ceph-test-02 ~]# cat /etc/rc.local
#!/bin/bash
rbd --user cyh -p rbd-data1 map data-img2
mount /dev/rbd0 /data/
[root@ceph-test-02 ~]#
[root@ceph-test-02 ~]# chmod a+x /etc/rc.local

Reboot the machine to verify

[root@ceph-test-02 ~]# cat /etc/rc.local
#!/bin/bash
rbd --user cyh -p rbd-data1 map data-img2
mount /dev/rbd0 /data/
[root@ceph-test-02 ~]# reboot
Connection closing...Socket close.
Connection closed by foreign host.
Disconnected from remote host(172.16.88.61:22) at 19:10:03.
Type `help' to learn how to use Xshell prompt.
[C:\~]$ ssh root@172.16.88.61
Connecting to 172.16.88.61:22...
Connection established.
To escape to local shell, press 'Ctrl+Alt+]'.
Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.4.0-122-generic x86_64)
 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/advantage
 System information as of Sun 09 Oct 2022 07:10:30 PM CST
 System load: 1.57 Processes: 168
 Usage of /: 14.1% of 47.56GB Users logged in: 0
 Memory usage: 5% IPv4 address for enp1s0: 172.16.88.61
 Swap usage: 0%
41 updates can be applied immediately.
To see these additional updates run: apt list --upgradable
New release '22.04.1 LTS' available.
Run 'do-release-upgrade' to upgrade to it.
Last login: Sun Oct 9 18:45:49 2022 from 172.16.1.10
[root@ceph-test-02 ~]#
[root@ceph-test-02 ~]# rbd showmapped # list mapped images
id pool namespace image snap device
0 rbd-data1 data-img2 - /dev/rbd0
[root@ceph-test-02 ~]# df -Th
Filesystem Type Size Used Avail Use% Mounted on
udev devtmpfs 1.9G 0 1.9G 0% /dev
tmpfs tmpfs 394M 1.1M 393M 1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4 48G 6.8G 39G 15% /
tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm
tmpfs tmpfs 5.0M 0 5.0M 0% /run/lock
tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup
/dev/loop1 squashfs 62M 62M 0 100% /snap/core20/1587
/dev/vda2 ext4 1.5G 106M 1.3G 8% /boot
/dev/loop0 squashfs 64M 64M 0 100% /snap/core20/1623
/dev/loop2 squashfs 68M 68M 0 100% /snap/lxd/21835
/dev/loop3 squashfs 68M 68M 0 100% /snap/lxd/22753
/dev/loop5 squashfs 48M 48M 0 100% /snap/snapd/17029
/dev/loop4 squashfs 47M 47M 0 100% /snap/snapd/16292
/dev/rbd0 xfs 8.0G 90M 8.0G 2% /data
tmpfs tmpfs 394M 0 394M 0% /run/user/0
[root@ceph-test-02 ~]#
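The rc.local approach works, but it hard-codes /dev/rbd0, and that device number can change if another image is mapped first. Ceph also provides an `rbdmap` service, which maps the images listed in `/etc/ceph/rbdmap` at boot. Ceph's udev rules also create stable links of the form `/dev/rbd/<pool>/<image>`. The sketch below only prints the two config lines such a setup needs. The helper name and the keyring path `/etc/ceph/ceph.client.cyh.keyring` are assumptions, so check both against your client before using the output. Per the rbdmap documentation, the fstab entry should carry `noauto` so that the regular boot-time mount does not run before the image is mapped.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/ceph/rbdmap entry and a matching
# /etc/fstab entry for one image. Arguments: pool image user mountpoint fstype.
rbdmap_entries() {
  local pool="$1" image="$2" user="$3" mnt="$4" fstype="$5"
  # rbdmap line: <pool>/<image> followed by map options (client id, keyring).
  # The keyring path follows the usual ceph.client.<id>.keyring naming (assumed).
  printf '%s/%s id=%s,keyring=/etc/ceph/ceph.client.%s.keyring\n' \
    "$pool" "$image" "$user" "$user"
  # fstab line: stable udev path; noauto leaves mounting to the rbdmap service.
  printf '/dev/rbd/%s/%s %s %s noauto 0 0\n' "$pool" "$image" "$mnt" "$fstype"
}

# Usage (not run here):
#   rbdmap_entries rbd-data1 data-img2 cyh /data xfs
#   # append line 1 to /etc/ceph/rbdmap and line 2 to /etc/fstab, then:
#   systemctl enable rbdmap
```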

9. Unmapping an RBD image

[root@ceph-test-02 ~]# umount /data
[root@ceph-test-02 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
udev 1.9G 0 1.9G 0% /dev
tmpfs 394M 1.1M 393M 1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv 48G 6.8G 39G 15% /
tmpfs 2.0G 0 2.0G 0% /dev/shm
tmpfs 5.0M 0 5.0M 0% /run/lock
tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup
/dev/loop1 62M 62M 0 100% /snap/core20/1587
/dev/vda2 1.5G 106M 1.3G 8% /boot
/dev/loop0 64M 64M 0 100% /snap/core20/1623
/dev/loop2 68M 68M 0 100% /snap/lxd/21835
/dev/loop3 68M 68M 0 100% /snap/lxd/22753
/dev/loop5 48M 48M 0 100% /snap/snapd/17029
/dev/loop4 47M 47M 0 100% /snap/snapd/16292
tmpfs 394M 0 394M 0% /run/user/0
[root@ceph-test-02 ~]# rbd --user shijie -p rbd-data1 unmap data-img2
[root@ceph-test-02 ~]# rbd showmapped
[root@ceph-test-02 ~]# vi /etc/rc.local
[root@ceph-test-02 ~]# cat /etc/rc.local
#!/bin/bash
[root@ceph-test-02 ~]#

[root@ceph-test-02 ~]# ll -h /dev/rbd0

ls: cannot access '/dev/rbd0': No such file or directory

[root@ceph-test-02 ~]#

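If rbd -p rbd-data1 unmap data-img2 fails (for example, when the same image is mapped more than once, as in the output below), unmap by device path instead, e.g. rbd unmap /dev/rbd2.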

[root@ceph-client-test ~]# rbd showmapped
id pool namespace image snap device
0 rbd-data1 data-img1 - /dev/rbd0
1 rbd-data1 data-img2 - /dev/rbd1
2 rbd-data1 data-img2 - /dev/rbd2
[root@ceph-client-test ~]# rbd unmap /dev/rbd2
[root@ceph-client-test ~]# rbd unmap /dev/rbd1
[root@ceph-client-test ~]# rbd showmapped
id pool namespace image snap device
0 rbd-data1 data-img1 - /dev/rbd0
[root@ceph-client-test ~]#
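In the transcript above, data-img2 was mapped twice (/dev/rbd1 and /dev/rbd2), and in that situation unmapping by device path is the dependable option. A small filter can list every device for one image. This sketch assumes the plain-text `rbd showmapped` layout shown above, in which the image name is the third field from the end and the device is the last field (the namespace column may be blank). `devices_for_image` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read `rbd showmapped` output on stdin and print every
# device that maps image $2 from pool $1.
# Columns: id pool namespace image snap device (namespace may be blank).
devices_for_image() {
  awk -v pool="$1" -v img="$2" \
    'NR > 1 && $2 == pool && $(NF - 2) == img { print $NF }'
}

# Usage (not run here): unmount first, then unmap each device.
#   rbd showmapped | devices_for_image rbd-data1 data-img2 |
#     while read -r dev; do rbd unmap "$dev"; done
```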

Deleting an RBD image

Deleting an image also deletes its data, and that data cannot be recovered, so take care when running this operation.

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls -p rbd-data1 -l
NAME SIZE PARENT FMT PROT LOCK
data-img1 3 GiB 2
data-img2 8 GiB 2
cephadmin@ceph-deploy:~/ceph-cluster$ rbd rm --pool rbd-data1 --image data-img2
Removing image: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls -p rbd-data1 -l
NAME SIZE PARENT FMT PROT LOCK
data-img1 3 GiB 2
cephadmin@ceph-deploy:~/ceph-cluster$

10. The RBD image trash

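Data in a deleted image cannot be recovered. A safer alternative is to move the image to the trash first and delete it from the trash only after confirming it is no longer needed.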

Restoring an image from the trash

# Images currently in the pool

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2

cephadmin@ceph-deploy:~/ceph-cluster$

cephadmin@ceph-deploy:~/ceph-cluster$ rbd create data-img2 --size 5G --pool rbd-data1 --image-format 2 --image-feature layering

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2

data-img2 5 GiB 2

cephadmin@ceph-deploy:~/ceph-cluster$

# On the client, map the image, mount it, and write some test data
[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img2
/dev/rbd1
[root@ceph-client-test ~]# mount /dev/rbd1 /data1
[root@ceph-client-test ~]# cd /data1
[root@ceph-client-test data1]# ls
[root@ceph-client-test data1]# ll -h
total 0
[root@ceph-client-test data1]#
[root@ceph-client-test data1]# cp /etc/passwd . # copy some existing files into the current directory
[root@ceph-client-test data1]# cp /etc/shadow .
[root@ceph-client-test data1]# dd if=/dev/zero of=./file-test bs=5M count=100 # create a 500 MB file
100+0 records in
100+0 records out
524288000 bytes (524 MB) copied, 0.698598 s, 750 MB/s
[root@ceph-client-test data1]# dd if=/dev/zero of=./file-test2 bs=5M count=100
100+0 records in
100+0 records out
524288000 bytes (524 MB) copied, 0.589398 s, 890 MB/s
[root@ceph-client-test data1]#
[root@ceph-client-test data1]# dd if=/dev/zero of=./file-test3 bs=5M count=100
100+0 records in
100+0 records out
524288000 bytes (524 MB) copied, 0.690832 s, 759 MB/s
[root@ceph-client-test data1]#
[root@ceph-client-test data1]# touch test-{1..9}.txt # create several empty files
[root@ceph-client-test data1]# ll -h
total 1.5G
-rw-r--r-- 1 root root 500M Oct 9 21:45 file-test
-rw-r--r-- 1 root root 500M Oct 9 21:46 file-test2
-rw-r--r-- 1 root root 500M Oct 9 21:48 file-test3
-rw-r--r-- 1 root root 854 Oct 9 21:45 passwd
---------- 1 root root 583 Oct 9 21:45 shadow
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-1.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-2.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-3.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-4.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-5.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-6.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-7.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-8.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-9.txt
[root@ceph-client-test data1]#

# Move the image to the trash
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash move --pool rbd-data1 --image data-img2
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash list --pool rbd-data1 # list the trash
8d78b3912c9 data-img2
cephadmin@ceph-deploy:~/ceph-cluster$

# data-img2 no longer appears in the pool
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l
NAME SIZE PARENT FMT PROT LOCK
data-img1 3 GiB 2
cephadmin@ceph-deploy:~/ceph-cluster$

# On the client the data is still visible, because the device is still mapped and mounted
[root@ceph-client-test ~]# ll -h /data1
total 1.5G
-rw-r--r-- 1 root root 500M Oct 9 21:45 file-test
-rw-r--r-- 1 root root 500M Oct 9 21:46 file-test2
-rw-r--r-- 1 root root 500M Oct 9 21:48 file-test3
-rw-r--r-- 1 root root 854 Oct 9 21:45 passwd
---------- 1 root root 583 Oct 9 21:45 shadow
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-1.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-2.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-3.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-4.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-5.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-6.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-7.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-8.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-9.txt
[root@ceph-client-test ~]#

# Unmount and unmap, then try to map the image again
[root@ceph-client-test ~]# umount /data1
[root@ceph-client-test ~]# rbd showmapped
id pool namespace image snap device
0 rbd-data1 data-img1 - /dev/rbd0
1 rbd-data1 data-img2 - /dev/rbd1
[root@ceph-client-test ~]# rbd unmap /dev/rbd1
[root@ceph-client-test ~]#
[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img2
rbd: error opening image data-img2: (2) No such file or directory
# This error is expected: data-img2 is now in the trash, so it can no longer be opened by name.
[root@ceph-client-test ~]#

# Restore the image from the trash
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash list --pool rbd-data1 # check the trash again and note the image ID
8d78b3912c9 data-img2
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l # confirm that data-img2 is not in rbd-data1
NAME SIZE PARENT FMT PROT LOCK
data-img1 3 GiB 2
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash restore --pool rbd-data1 --image data-img2 --image-id 8d78b3912c9 # restore the image from the trash
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1 -l
NAME SIZE PARENT FMT PROT LOCK
data-img1 3 GiB 2
data-img2 5 GiB 2 # data-img2 has been restored
cephadmin@ceph-deploy:~/ceph-cluster$

# Map and mount the image on the client again; the data is back
[root@ceph-client-test ~]# rbd -p rbd-data1 map data-img2
/dev/rbd1
[root@ceph-client-test ~]# mount /dev/rbd1 /data1
[root@ceph-client-test ~]# ll -h /data1/
total 1.5G
-rw-r--r-- 1 root root 500M Oct 9 21:45 file-test
-rw-r--r-- 1 root root 500M Oct 9 21:46 file-test2
-rw-r--r-- 1 root root 500M Oct 9 21:48 file-test3
-rw-r--r-- 1 root root 854 Oct 9 21:45 passwd
---------- 1 root root 583 Oct 9 21:45 shadow
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-1.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-2.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-3.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-4.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-5.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-6.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-7.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-8.txt
-rw-r--r-- 1 root root 0 Oct 9 21:47 test-9.txt
[root@ceph-client-test ~]#

If the image is no longer needed, delete it from the trash permanently with rbd trash remove.

[root@ceph-client-test ~]# umount /data1
[root@ceph-client-test ~]# rbd unmap /dev/rbd1
[root@ceph-client-test ~]#

cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash list --pool rbd-data1
8d78b3912c9 data-img2
cephadmin@ceph-deploy:~/ceph-cluster$
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash remove --pool rbd-data1 8d78b3912c9
Removing image: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd trash list --pool rbd-data1
cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool rbd-data1
data-img1
cephadmin@ceph-deploy:~/ceph-cluster$
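Both the restore and the remove step above identify the trashed image by its ID, not its name. Here is a minimal lookup that assumes the `<id> <name>` layout of `rbd trash list` shown above. `trash_id_for` is a hypothetical helper; if several trashed images share a name, it prints all of their IDs.

```shell
#!/bin/sh
# Hypothetical helper: read `rbd trash list` output on stdin ("<id> <name>"
# per line) and print the ID(s) of the image named in $1.
trash_id_for() {
  awk -v img="$1" '$2 == img { print $1 }'
}

# Usage (not run here):
#   id=$(rbd trash list --pool rbd-data1 | trash_id_for data-img2)
#   rbd trash restore --pool rbd-data1 --image data-img2 --image-id "$id"
```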

11. Image snapshots

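[cephadmin@ceph-deploy ceph-cluster]$ rbd help snap
snap create (snap add)        # create a snapshot
snap limit clear              # remove the snapshot-count limit of an image
snap limit set                # set the maximum number of snapshots for an image
snap list (snap ls)           # list snapshots
snap protect                  # protect a snapshot from being removed
snap purge                    # remove all unprotected snapshots
snap remove (snap rm)         # remove a snapshot
snap rename                   # rename a snapshot
snap rollback (snap revert)   # roll the image back to a snapshot
snap unprotect                # allow a snapshot to be removed (remove its protection)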

Current data on the client

[root@ceph-client-test data1]# touch test-{1..9}.txt

[root@ceph-client-test data1]# cp /etc/passwd .

[root@ceph-client-test data1]# cp /etc/shadow .

[root@ceph-client-test data1]# ll -h

total 8.0K

-rw-r--r-- 1 root root 854 Oct 9 22:43 passwd

---------- 1 root root 583 Oct 9 22:43 shadow

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-1.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-2.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-3.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-4.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-5.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-6.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-7.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-8.txt

-rw-r--r-- 1 root root 0 Oct 9 22:43 test-9.txt

[root@ceph-client-test data1]#

Create and verify a snapshot

cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap list --pool rbd-data1 --image data-img2
SNAPID NAME SIZE PROTECTED TIMESTAMP
4 img2-snap-20221009 5 GiB Sun Oct 9 22:45:04 2022
cephadmin@ceph-deploy:~/ceph-cluster$
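The snapshot name is typed several times (create, list, rollback, remove). Generating the dated name once and reusing it avoids mismatches between those commands. In this sketch, `snap_name` is a hypothetical helper, and the `<prefix>-snap-<YYYYMMDD>` pattern simply mirrors the names used here.

```shell
#!/bin/sh
# Hypothetical helper: build a snapshot name such as img2-snap-20221009.
# $1 = prefix, $2 = date as YYYYMMDD (defaults to today's date).
snap_name() {
  local prefix="$1" day="${2:-$(date +%Y%m%d)}"
  printf '%s-snap-%s\n' "$prefix" "$day"
}

# Usage (not run here):
#   snap=$(snap_name img2)
#   rbd snap create --pool rbd-data1 --image data-img2 --snap "$snap"
#   rbd snap rollback --pool rbd-data1 --image data-img2 --snap "$snap"
```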

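Delete the data, then roll the image back to the snapshot (unmount and unmap the image on the client before rolling back)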

[root@ceph-client-test data1]# ll -h
total 8.0K
-rw-r--r-- 1 root root 854 Oct 9 22:43 passwd
---------- 1 root root 583 Oct 9 22:43 shadow
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-1.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-2.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-3.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-4.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-5.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-6.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-7.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-8.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-9.txt
[root@ceph-client-test data1]# rm -fr ./*
[root@ceph-client-test data1]# ll -h
total 0
[root@ceph-client-test data1]# cd
[root@ceph-client-test ~]# umount /data1
[root@ceph-client-test ~]# rbd unmap /dev/rbd1
[root@ceph-client-test ~]#
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap rollback --pool rbd-data1 --image data-img2 --snap img2-snap-20221009
Rolling back to snapshot: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$

Verify the data on the client

[root@ceph-test-02 ~]# rbd --user cyh -p rbd-data1 map data-img2
/dev/rbd0
[root@ceph-test-02 ~]# mount /dev/rbd0 /data
[root@ceph-test-02 ~]# cd /data/
[root@ceph-test-02 data]# ll -h
total 12K
drwxr-xr-x 2 root root 196 Oct 9 22:43 ./
drwxr-xr-x 20 root root 4.0K Oct 9 16:32 ../
-rw-r--r-- 1 root root 854 Oct 9 22:43 passwd
---------- 1 root root 583 Oct 9 22:43 shadow
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-1.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-2.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-3.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-4.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-5.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-6.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-7.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-8.txt
-rw-r--r-- 1 root root 0 Oct 9 22:43 test-9.txt
[root@ceph-test-02 data]#

Delete a snapshot (removing the same snapshot a second time fails because it no longer exists)

cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap list --pool rbd-data1 --image data-img2
SNAPID NAME SIZE PROTECTED TIMESTAMP
4 img2-snap-20221009 5 GiB Sun Oct 9 22:45:04 2022
cephadmin@ceph-deploy:~/ceph-cluster$
cephadmin@ceph-deploy:~/ceph-cluster$
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap remove --pool rbd-data1 --image data-img2 --snap img2-snap-20221009
Removing snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap remove --pool rbd-data1 --image data-img2 --snap img2-snap-20221009
Removing snap: 0% complete...failed.
rbd: failed to remove snapshot: (2) No such file or directory
cephadmin@ceph-deploy:~/ceph-cluster$

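Limit the number of snapshots (with a limit of 3, creating a fourth snapshot fails with (122) Disk quota exceeded)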

cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap limit set --pool rbd-data1 --image data-img2 --limit 3
cephadmin@ceph-deploy:~/ceph-cluster$
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-1
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-2
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-3
Creating snap: 10% complete...failed.
rbd: failed to create snapshot: (122) Disk quota exceeded
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-4
Creating snap: 10% complete...failed.
rbd: failed to create snapshot: (122) Disk quota exceeded
cephadmin@ceph-deploy:~/ceph-cluster$
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap list --pool rbd-data1 --image data-img2
SNAPID NAME SIZE PROTECTED TIMESTAMP
6 img2-snap-20221009 5 GiB Sun Oct 9 22:58:59 2022
7 img2-snap-20221009-1 5 GiB Sun Oct 9 22:59:06 2022
8 img2-snap-20221009-2 5 GiB Sun Oct 9 22:59:11 2022
cephadmin@ceph-deploy:~/ceph-cluster$
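Once a limit is set, `rbd snap create` fails with `(122) Disk quota exceeded` as soon as the image already holds that many snapshots, so a script can check first. This sketch makes two assumptions. First, it expects the plain-text `rbd snap list` layout above: one header line, then one line per snapshot. Second, the caller passes in the limit, since that is the number chosen with `rbd snap limit set`. Both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: count snapshots in `rbd snap list` output on stdin
# (the first line is the SNAPID/NAME/... header).
snap_count() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }'
}

# Hypothetical helper: succeed if one more snapshot fits under limit $1.
# Reads the same `rbd snap list` output on stdin.
snap_has_room() {
  [ "$(snap_count)" -lt "$1" ]
}

# Usage (not run here):
#   if rbd snap list --pool rbd-data1 --image data-img2 | snap_has_room 3; then
#     rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-3
#   fi
```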

Clear the snapshot limit

cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap limit clear --pool rbd-data1 --image data-img2
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-3
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap create --pool rbd-data1 --image data-img2 --snap img2-snap-20221009-4
Creating snap: 100% complete...done.
cephadmin@ceph-deploy:~/ceph-cluster$ rbd snap list --pool rbd-data1 --image data-img2
SNAPID NAME SIZE PROTECTED TIMESTAMP
6 img2-snap-20221009 5 GiB Sun Oct 9 22:58:59 2022
7 img2-snap-20221009-1 5 GiB Sun Oct 9 22:59:06 2022
8 img2-snap-20221009-2 5 GiB Sun Oct 9 22:59:11 2022
13 img2-snap-20221009-3 5 GiB Sun Oct 9 23:01:57 2022
14 img2-snap-20221009-4 5 GiB Sun Oct 9 23:02:00 2022
cephadmin@ceph-deploy:~/ceph-cluster$
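With the limit cleared, snapshots keep accumulating unless something removes the old ones. Below is a minimal rotation sketch. It assumes the `rbd snap list` layout above and that a higher SNAPID means a newer snapshot. `snaps_to_prune` is a hypothetical helper that only prints names. Protected snapshots would still need `rbd snap unprotect` before they can be removed.

```shell
#!/bin/sh
# Hypothetical helper: read `rbd snap list` output on stdin and print the names
# of all snapshots except the newest $1, ordered by numeric SNAPID.
snaps_to_prune() {
  awk 'NR > 1 && NF > 1 { print $1, $2 }' |
    sort -n |
    awk -v keep="$1" '{ name[NR] = $2 }
      END { for (i = 1; i <= NR - keep; i++) print name[i] }'
}

# Usage (not run here): keep only the three newest snapshots of data-img2.
#   rbd snap list --pool rbd-data1 --image data-img2 | snaps_to_prune 3 |
#     while read -r s; do
#       rbd snap remove --pool rbd-data1 --image data-img2 --snap "$s"
#     done
```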