Deploying a Ceph cluster with cephadm

1. Environment preparation

1.1 Install Docker while building the VM template

[root@ubuntu-20-04 docker]# pwd
/root/docker
[root@ubuntu-20-04 docker]# ll -h
total 74M
drwxr-xr-x 3 root   root   4.0K Oct  5 14:21 ./
drwx------ 6 root   root   4.0K Oct  5 14:21 ../
-rw-r--r-- 1 root   root    647 Apr 11  2021 containerd.service
-rw-r--r-- 1 root   root    356 Jul 18 11:09 daemon.json
drwxrwxr-x 2 docker docker 4.0K Jun  6 23:03 docker/
-rw-r--r-- 1 root   root    62M Jun  7 08:42 docker-20.10.17.tgz
-rwxr-xr-x 1 root   root    12M Dec  7  2021 docker-compose-Linux-x86_64_1.28.6*
-rwxr-xr-x 1 root   root   2.9K Jul 21 07:29 docker-install.sh*
-rw-r--r-- 1 root   root   1.7K Apr 11  2021 docker.service
-rw-r--r-- 1 root   root    197 Apr 11  2021 docker.socket
-rw-r--r-- 1 root   root    454 Apr 11  2021 limits.conf
-rw-r--r-- 1 root   root    257 Apr 11  2021 sysctl.conf

bash docker-install.sh

+ pwd
+ DIR=/root/docker
+ PACKAGE_NAME=docker-20.10.17.tgz
+ DOCKER_FILE=/root/docker/docker-20.10.17.tgz
+ main
+ centos_install_docker
+ [ 0 -eq 0 ]
+ grep Kernel
+ cat /etc/redhat-release /etc/issue
cat: /etc/redhat-release: No such file or directory
+ /bin/echo Current system is , starting system initialization, docker-compose configuration and Docker installation
Current system is , starting system initialization, docker-compose configuration and Docker installation
+ sleep 1
+ systemctl stop firewalld
Failed to stop firewalld.service: Unit firewalld.service not loaded.
+ systemctl stop NetworkManager
+ systemctl disable NetworkManager
Removed /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.
Removed /etc/systemd/system/multi-user.target.wants/NetworkManager.service.
Removed /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.
+ echo NetworkManager
NetworkManager
+ sleep 1
+ sed -i s/SELINUX=enforcing/SELINUX=disabled/g /etc/sysconfig/selinux
sed: can't read /etc/sysconfig/selinux: No such file or directory
+ cp /root/docker/limits.conf /etc/security/limits.conf
+ cp /root/docker/sysctl.conf /etc/sysctl.conf
+ /bin/tar xvf /root/docker/docker-20.10.17.tgz
docker/
docker/docker-init
docker/containerd
docker/ctr
docker/runc
docker/dockerd
docker/docker-proxy
docker/containerd-shim
docker/docker
docker/containerd-shim-runc-v2
+ cp docker/containerd docker/containerd-shim docker/containerd-shim-runc-v2 docker/ctr docker/docker docker/docker-init docker/docker-proxy docker/dockerd docker/runc /usr/bin
+ mkdir /etc/docker
+ cp daemon.json /etc/docker
+ cp containerd.service /lib/systemd/system/containerd.service
+ cp docker.service /lib/systemd/system/docker.service
+ cp docker.socket /lib/systemd/system/docker.socket
+ cp /root/docker/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose
+ groupadd docker
+ useradd docker -s /sbin/nologin -g docker
+ [ 0 -ne 0 ]
+ usermod magedu -G docker
+ id -u magedu
id: ‘magedu’: no such user
usermod: user 'magedu' does not exist
+ systemctl enable containerd.service
Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.
+ systemctl restart containerd.service
+ systemctl enable docker.service
Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.
+ systemctl restart docker.service
+ systemctl enable docker.socket
Created symlink /etc/systemd/system/sockets.target.wants/docker.socket → /lib/systemd/system/docker.socket.
+ systemctl restart docker.socket
+ ubuntu_install_docker
+ [ 0 -eq 0 ]
+ grep Ubuntu /etc/issue
+ cat /etc/issue
+ /bin/echo Current system is Ubuntu 20.04.4 LTS \n \l, starting system initialization, docker-compose configuration and Docker installation
Ubuntu 20.04.4 LTS \n \l
Current system is Ubuntu 20.04.4 LTS \n \l, starting system initialization, docker-compose configuration and Docker installation
+ sleep 1
+ cp /root/docker/limits.conf /etc/security/limits.conf
+ cp /root/docker/sysctl.conf /etc/sysctl.conf
+ /bin/tar xvf /root/docker/docker-20.10.17.tgz
docker/
docker/docker-init
docker/containerd
docker/ctr
docker/runc
docker/dockerd
docker/docker-proxy
docker/containerd-shim
docker/docker
docker/containerd-shim-runc-v2
+ cp docker/containerd docker/containerd-shim docker/containerd-shim-runc-v2 docker/ctr docker/docker docker/docker-init docker/docker-proxy docker/dockerd docker/runc /usr/bin
cp: cannot create regular file '/usr/bin/containerd': Text file busy
cp: cannot create regular file '/usr/bin/dockerd': Text file busy
+ mkdir /etc/docker
mkdir: cannot create directory ‘/etc/docker’: File exists
+ cp containerd.service /lib/systemd/system/containerd.service
+ cp docker.service /lib/systemd/system/docker.service
+ cp docker.socket /lib/systemd/system/docker.socket
+ cp /root/docker/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose
+ /bin/echo Docker installation complete!
Docker installation complete!
+ sleep 1
+ groupadd docker
groupadd: group 'docker' already exists
+ [ 0 -ne 0 ]
+ usermod magedu -G docker
+ id -u magedu
id: ‘magedu’: no such user
usermod: user 'magedu' does not exist
+ systemctl enable containerd.service
+ systemctl restart containerd.service
+ systemctl enable docker.service
+ systemctl restart docker.service
+ systemctl enable docker.socket
+ systemctl restart docker.socket
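The trace shows both `centos_install_docker` and `ubuntu_install_docker` running on an Ubuntu host, which is where the `/etc/redhat-release`, `/etc/sysconfig/selinux`, and `Text file busy` errors come from. The contents of `docker-install.sh` are not shown here, so the following is only a sketch of how the script could pick a single branch, assuming `/etc/os-release` is present. The helper names `detect_os_family` and `choose_install_branch` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: choose exactly one install branch from the os-release ID field.
# detect_os_family and choose_install_branch are hypothetical helper names.

detect_os_family() {
    # Optional argument lets the caller point at a different file (useful for testing).
    local release_file="${1:-/etc/os-release}"
    local id
    # Extract the value of the ID= line (e.g. ID=ubuntu or ID="centos") and strip quotes.
    id="$(sed -n 's/^ID=//p' "$release_file" | tr -d '"' | head -n 1)"
    case "$id" in
        ubuntu|debian) echo "debian" ;;
        centos|rhel|rocky|almalinux|fedora) echo "redhat" ;;
        *) echo "unknown" ;;
    esac
}

choose_install_branch() {
    # Print the name of the one function that should run on this host.
    case "$(detect_os_family "$@")" in
        debian) echo "ubuntu_install_docker" ;;
        redhat) echo "centos_install_docker" ;;
        *) echo "unsupported" ;;
    esac
}
```

Inside the install script, `main` could then call `"$(choose_install_branch)"` instead of invoking both branches in sequence.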

1.2 Machine inventory

| IP | Hostname | vCPU | Memory | System disk | Data disks |
|---|---|---|---|---|---|
| 172.16.88.131 | cephadm-ceph-mon-01 | 2 | 4G | 50G | |
| 172.16.88.132 | cephadm-ceph-mon-02 | 2 | 4G | 50G | |
| 172.16.88.133 | cephadm-ceph-mon-03 | 2 | 4G | 50G | |
| 172.16.88.134 | cephadm-ceph-mgr-01 | 2 | 4G | 50G | |
| 172.16.88.135 | cephadm-ceph-mgr-02 | 2 | 4G | 50G | |
| 172.16.88.136 | cephadm-ceph-node-01 | 2 | 4G | 50G | 3*50G |
| 172.16.88.137 | cephadm-ceph-node-02 | 2 | 4G | 50G | 3*50G |
| 172.16.88.138 | cephadm-ceph-node-03 | 2 | 4G | 50G | 3*50G |
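The later steps address hosts by name, so every machine needs to resolve these names. How name resolution was set up is not shown in this post. The sketch below assumes plain `/etc/hosts` entries and appends them idempotently. The host names follow those used in the `ceph orch host add` commands later in this post, and the function name `add_hosts_entries` is hypothetical.

```shell
# Inventory as "IP hostname" pairs (names as used by the later host-add commands).
CEPH_HOSTS="172.16.88.131 cephadm-ceph-mon-01
172.16.88.132 cephadm-ceph-mon-02
172.16.88.133 cephadm-ceph-mon-03
172.16.88.134 cephadm-ceph-mgr-01
172.16.88.135 cephadm-ceph-mgr-02
172.16.88.136 cephadm-ceph-node-01
172.16.88.137 cephadm-ceph-node-02
172.16.88.138 cephadm-ceph-node-03"

# Append each entry to the given hosts file unless the hostname is already there.
add_hosts_entries() {
    local hosts_file="$1"
    local ip name
    while read -r ip name; do
        [ -n "$ip" ] || continue
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<EOF
$CEPH_HOSTS
EOF
}
```

Running `add_hosts_entries /etc/hosts` as root on each machine would add the eight entries; running it again changes nothing.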

1.3 Install cephadm on the mon-01 node

[root@cephadm-ceph-mon-01 ~]# wget https://mirrors.aliyun.com/ceph/debian-17.2.4/pool/main/c/ceph/cephadm_17.2.4-1focal_amd64.deb

[root@cephadm-ceph-mon-01 ~]# dpkg -i cephadm_17.2.4-1focal_amd64.deb

[root@cephadm-ceph-mon-01 ~]# cephadm pull    ## every host can fetch the image ahead of time with: docker pull quay.io/ceph/ceph:v17

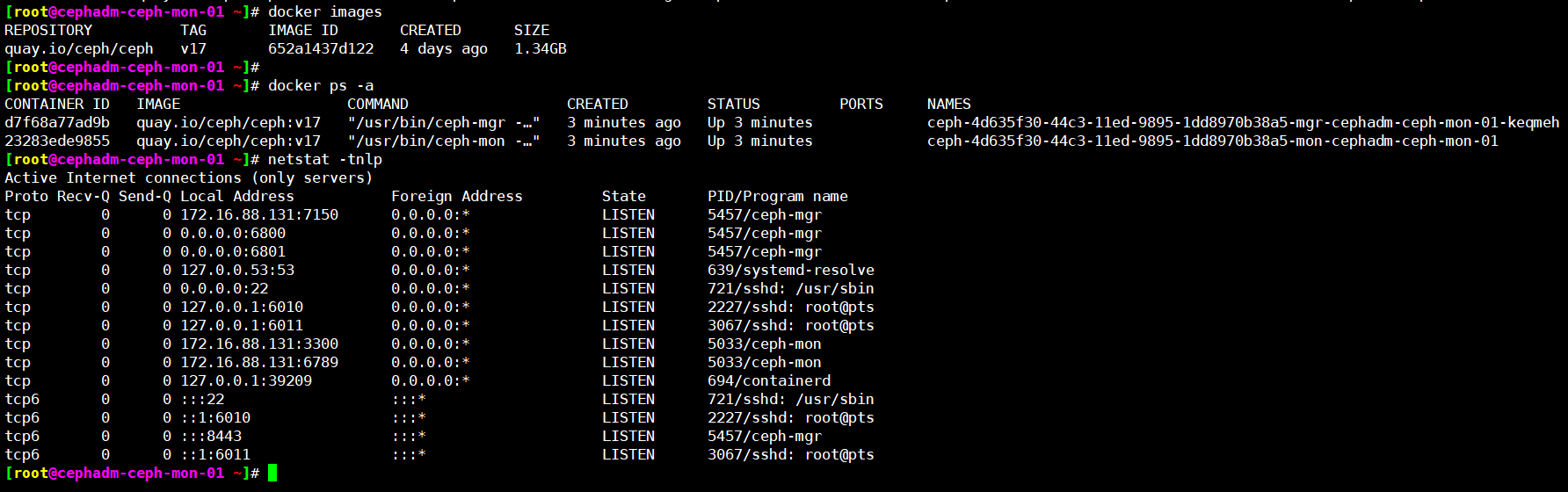

[root@cephadm-ceph-mon-01 ~]# cephadm pull
Pulling container image quay.io/ceph/ceph:v17...
{
    "ceph_version": "ceph version 17.2.4 (1353ed37dec8d74973edc3d5d5908c20ad5a7332) quincy (stable)",
    "image_id": "652a1437d122ef2016e6a87c4653bd58691e9255e3efb8f31a3a4df3f82c0636",
    "repo_digests": [
        "quay.io/ceph/ceph@sha256:ffc55676b680c2428f7feb2daa331050d8606e2cb43a86139dbb9a2e3b02488e"
    ]
}
[root@cephadm-ceph-mon-01 ~]# docker images
REPOSITORY          TAG   IMAGE ID       CREATED      SIZE
quay.io/ceph/ceph   v17   652a1437d122   4 days ago   1.34GB
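Pulling the image on every host in advance saves time when daemons are scheduled later. As a rough sketch, the loop below only prints one `docker pull` command per host so the list can be reviewed first. It assumes root SSH access to 172.16.88.131 through 172.16.88.138, and the function name `print_pull_commands` is hypothetical.

```shell
CEPH_IMAGE="quay.io/ceph/ceph:v17"

# Print (do not run) one pull command per host in the inventory.
print_pull_commands() {
    local i
    for i in $(seq 131 138); do
        printf 'ssh root@172.16.88.%s docker pull %s\n' "$i" "$CEPH_IMAGE"
    done
}
```

Once key-based SSH login works (see section 2.1), `print_pull_commands | bash` would execute the pulls one host at a time.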

1.4 Bootstrap the Ceph cluster

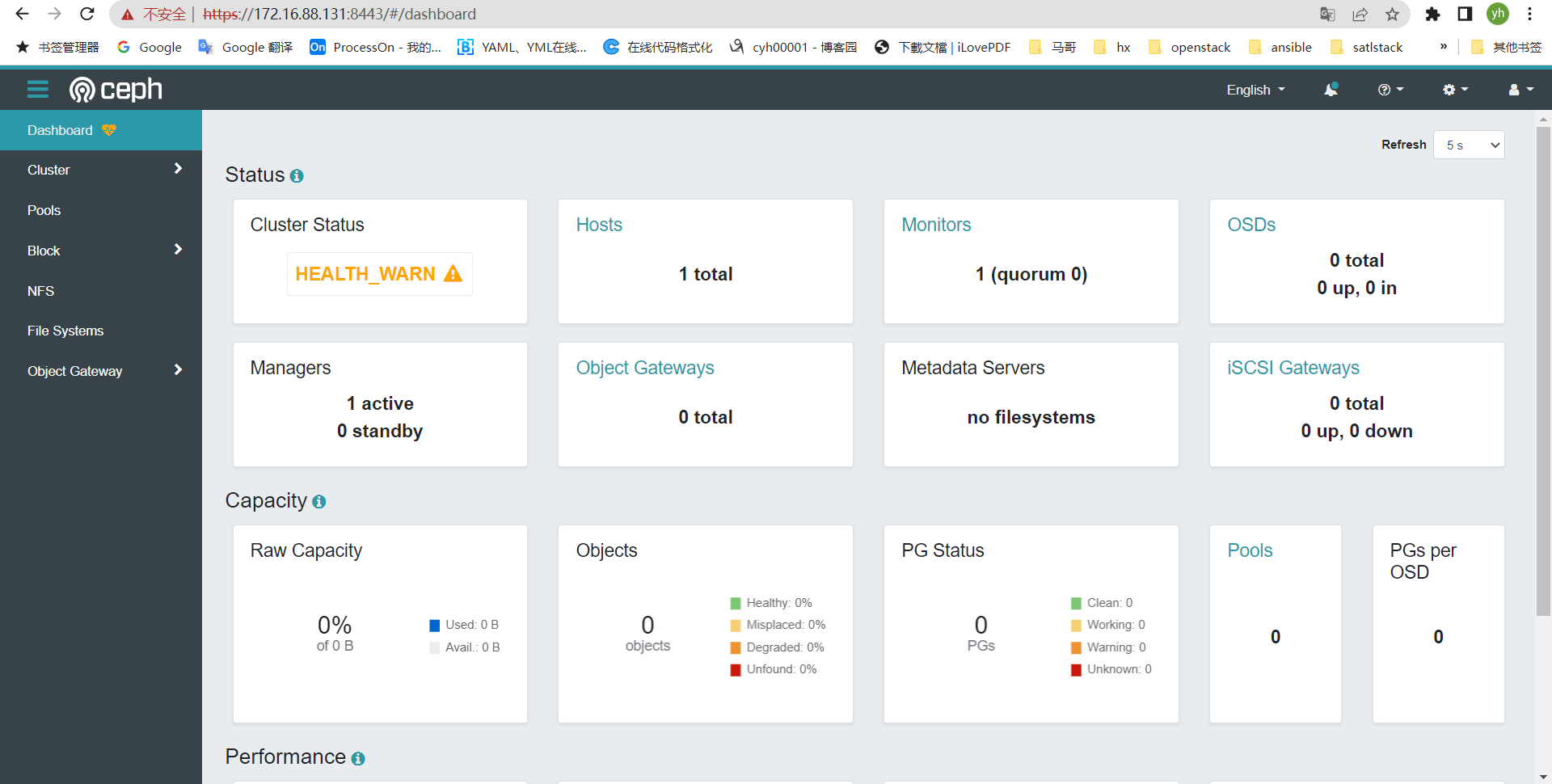

Bootstrapping installs the mon and mgr roles on the current node and deploys the prometheus, grafana, alertmanager, and node-exporter services.

[root@cephadm-ceph-mon-01 ~]# cephadm bootstrap --mon-ip 172.16.88.131 --cluster-network 172.16.88.0/24 --allow-fqdn-hostname

Creating directory /etc/ceph for ceph.conf
Verifying podman|docker is present...
Verifying lvm2 is present...
Verifying time synchronization is in place...
Unit systemd-timesyncd.service is enabled and running
Repeating the final host check...
docker (/usr/bin/docker) is present
systemctl is present
lvcreate is present
Unit systemd-timesyncd.service is enabled and running
Host looks OK
Cluster fsid: 4d635f30-44c3-11ed-9895-1dd8970b38a5
Verifying IP 172.16.88.131 port 3300 ...
Verifying IP 172.16.88.131 port 6789 ...
Mon IP `172.16.88.131` is in CIDR network `172.16.88.0/24`
Mon IP `172.16.88.131` is in CIDR network `172.16.88.0/24`
Pulling container image quay.io/ceph/ceph:v17...
Ceph version: ceph version 17.2.4 (1353ed37dec8d74973edc3d5d5908c20ad5a7332) quincy (stable)
Extracting ceph user uid/gid from container image...
Creating initial keys...
Creating initial monmap...
Creating mon...
Waiting for mon to start...
Waiting for mon...
mon is available
Assimilating anything we can from ceph.conf...
Generating new minimal ceph.conf...
Restarting the monitor...
Setting mon public_network to 172.16.88.0/24
Setting cluster_network to 172.16.88.0/24
Wrote config to /etc/ceph/ceph.conf
Wrote keyring to /etc/ceph/ceph.client.admin.keyring
Creating mgr...
Verifying port 9283 ...
Waiting for mgr to start...
Waiting for mgr...
mgr not available, waiting (1/15)...
mgr not available, waiting (2/15)...
mgr not available, waiting (3/15)...
mgr not available, waiting (4/15)...
mgr not available, waiting (5/15)...
mgr is available
Enabling cephadm module...
Waiting for the mgr to restart...
Waiting for mgr epoch 5...
mgr epoch 5 is available
Setting orchestrator backend to cephadm...
Generating ssh key...
Wrote public SSH key to /etc/ceph/ceph.pub
Adding key to root@localhost authorized_keys...
Adding host cephadm-ceph-mon-01...
Deploying mon service with default placement...
Deploying mgr service with default placement...
Deploying crash service with default placement...
Deploying prometheus service with default placement...
Deploying grafana service with default placement...
Deploying node-exporter service with default placement...
Deploying alertmanager service with default placement...
Enabling the dashboard module...
Waiting for the mgr to restart...
Waiting for mgr epoch 9...
mgr epoch 9 is available
Generating a dashboard self-signed certificate...
Creating initial admin user...
Fetching dashboard port number...
Ceph Dashboard is now available at:
URL: https://cephadm-ceph-mon-01:8443/
User: admin
Password: l59dve9gx6
Enabling client.admin keyring and conf on hosts with "admin" label
Saving cluster configuration to /var/lib/ceph/4d635f30-44c3-11ed-9895-1dd8970b38a5/config directory
Enabling autotune for osd_memory_target
You can access the Ceph CLI as following in case of multi-cluster or non-default config:
sudo /usr/sbin/cephadm shell --fsid 4d635f30-44c3-11ed-9895-1dd8970b38a5 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring
Or, if you are only running a single cluster on this host:
sudo /usr/sbin/cephadm shell
Please consider enabling telemetry to help improve Ceph:
ceph telemetry on
For more information see:
https://docs.ceph.com/docs/master/mgr/telemetry/
Bootstrap complete.

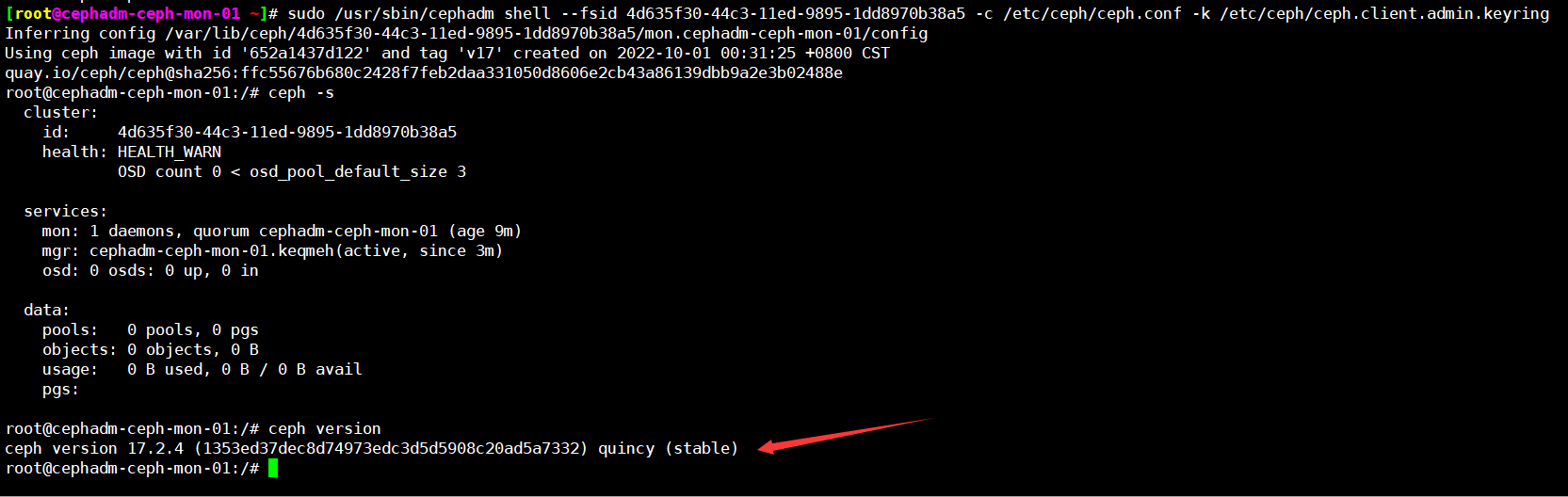

1.5 Enter the Ceph management shell

[root@cephadm-ceph-mon-01 ~]# sudo /usr/sbin/cephadm shell --fsid 4d635f30-44c3-11ed-9895-1dd8970b38a5 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

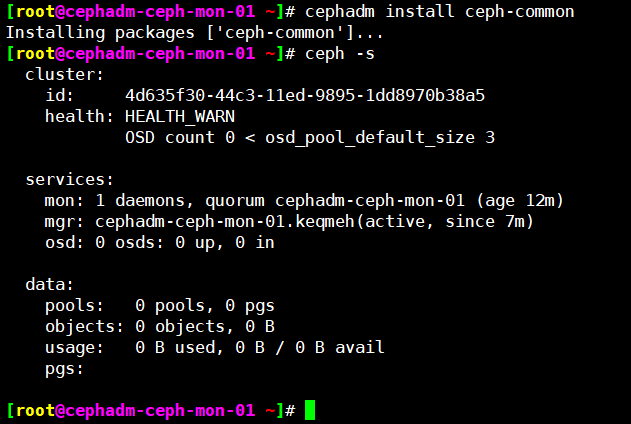

Install the Ceph client commands on the host

[root@cephadm-ceph-mon-01 ~]# cephadm install ceph-common

2. Adding nodes

2.1 Add the node hosts to the cluster

Create an SSH key pair and distribute it to the other machines

[root@cephadm-ceph-mon-01 ~]# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa
Your public key has been saved in /root/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:ytOO40r2/oMZYkD60/y2P9yMkmziWFAQUJ0d/2gN5cc root@cephadm-ceph-mon-01
The key's randomart image is:
+---[RSA 3072]----+
|.o+o o.. . |
| ..o .. o . |
| o . o . E |
|. .. = . |
| ..+ S o |
| o.=..+ |
| o+++*.+ |
| =..@+= o |
| ..oB**+o |
+----[SHA256]-----+

[root@cephadm-ceph-mon-01 ~]# apt-get install sshpass

[root@cephadm-ceph-mon-01 ~]# vi ssh-key.sh

#!/bin/bash
# Copy root's public key to every host in the inventory, using password login once.
for i in {131..138}; do
    sshpass -p 'redhat' ssh-copy-id -o StrictHostKeyChecking=no -i /root/.ssh/id_rsa -p 22 root@172.16.88.$i
done

[root@cephadm-ceph-mon-01 ~]# bash ssh-key.sh

Distribute the cluster's ceph.pub key to the other machines

[root@cephadm-ceph-mon-01 ceph]# for i in {132..138};do ssh-copy-id -f -i /etc/ceph/ceph.pub root@172.16.88.$i;done

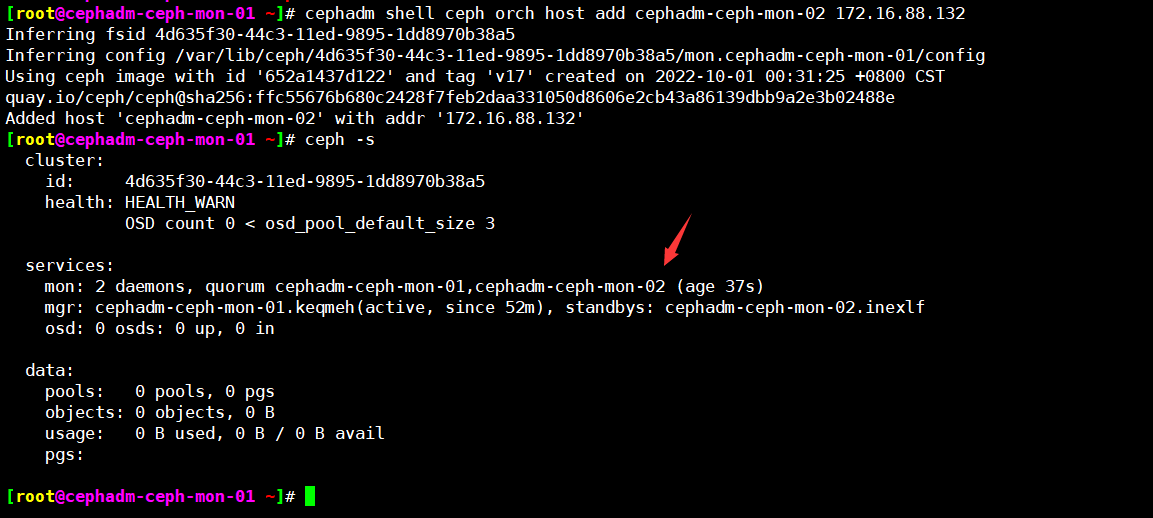

2.2 Add the ceph-mon nodes

[root@cephadm-ceph-mon-01 ~]# cephadm shell ceph orch host add cephadm-ceph-mon-02 172.16.88.132

Inferring fsid 4d635f30-44c3-11ed-9895-1dd8970b38a5
Inferring config /var/lib/ceph/4d635f30-44c3-11ed-9895-1dd8970b38a5/mon.cephadm-ceph-mon-01/config
Using ceph image with id '652a1437d122' and tag 'v17' created on 2022-10-01 00:31:25 +0800 CST
quay.io/ceph/ceph@sha256:ffc55676b680c2428f7feb2daa331050d8606e2cb43a86139dbb9a2e3b02488e
Added host 'cephadm-ceph-mon-02' with addr '172.16.88.132'

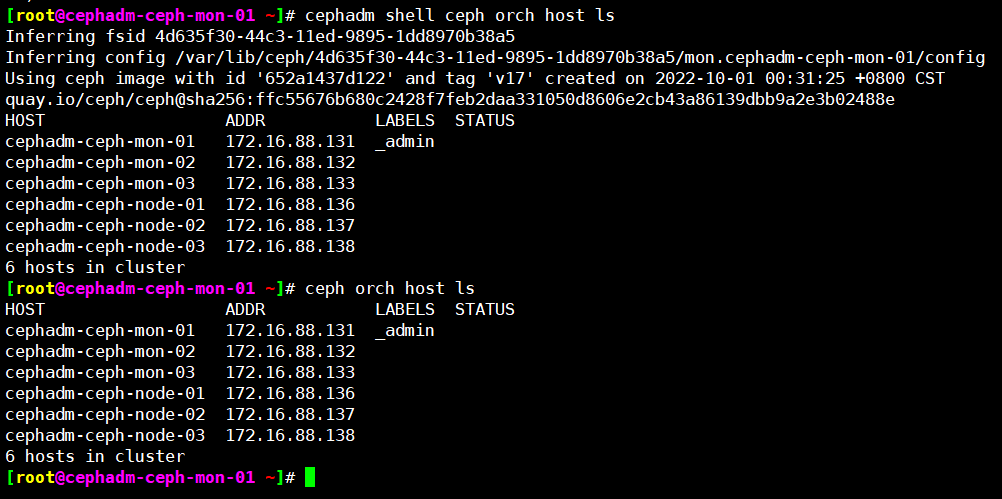

Continue by adding ceph-mon-03 and ceph-node-01/02/03

cephadm shell ceph orch host add cephadm-ceph-mon-03 172.16.88.133

cephadm shell ceph orch host add cephadm-ceph-node-01 172.16.88.136

cephadm shell ceph orch host add cephadm-ceph-node-02 172.16.88.137

cephadm shell ceph orch host add cephadm-ceph-node-03 172.16.88.138

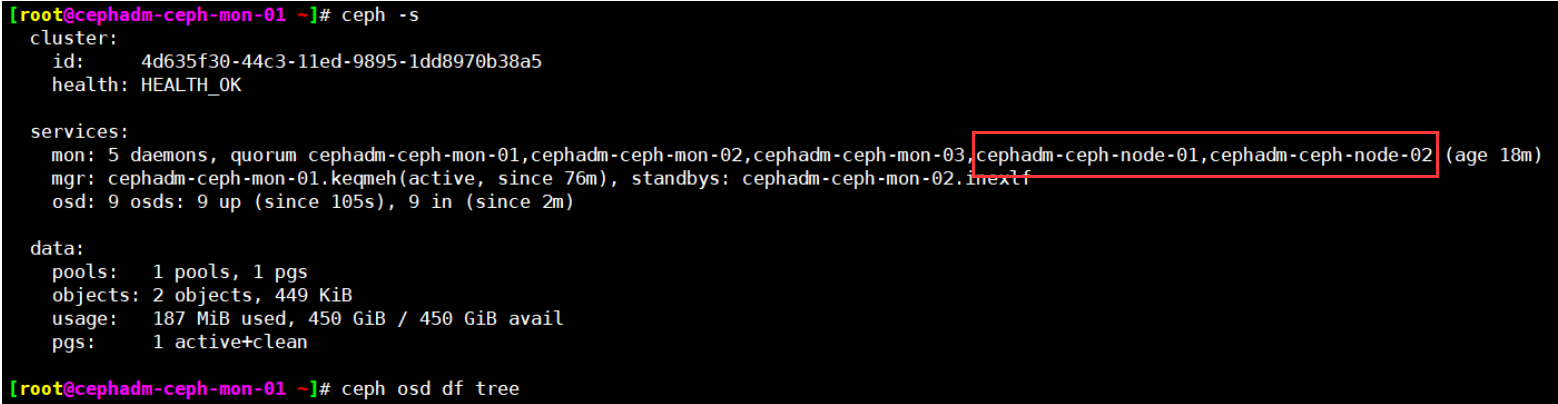

By default, cephadm deploys the mon service on hosts as they are added to the cluster.

2.3 Add the disks to the Ceph cluster
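The four `ceph orch host add` commands above follow one pattern, so they can be generated from the inventory. The sketch below prints the commands rather than running them. The function name `print_host_add_commands` is hypothetical, and the pairs are the ones used in this section.

```shell
# Print one "ceph orch host add <name> <ip>" line per remaining host.
print_host_add_commands() {
    local ip name
    while read -r ip name; do
        [ -n "$ip" ] || continue
        printf 'ceph orch host add %s %s\n' "$name" "$ip"
    done <<'EOF'
172.16.88.133 cephadm-ceph-mon-03
172.16.88.136 cephadm-ceph-node-01
172.16.88.137 cephadm-ceph-node-02
172.16.88.138 cephadm-ceph-node-03
EOF
}
```

With `ceph-common` installed on the host, the output can be piped to `bash`, and `ceph orch host ls` then lists the registered hosts.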

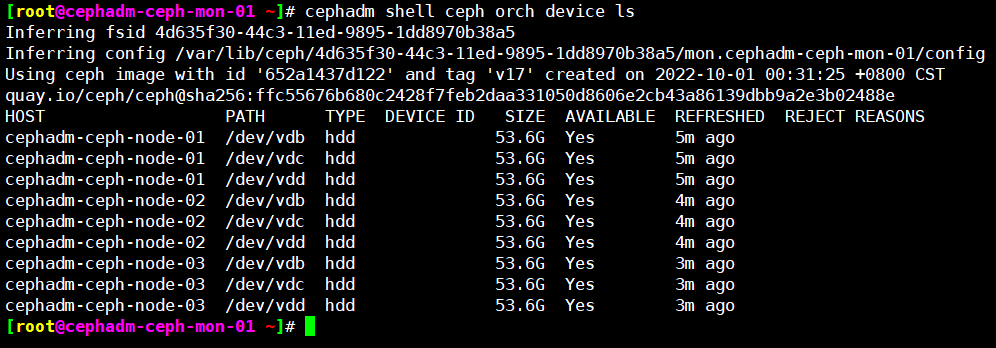

List the available disks

[root@cephadm-ceph-mon-01 ~]# cephadm shell ceph orch device ls

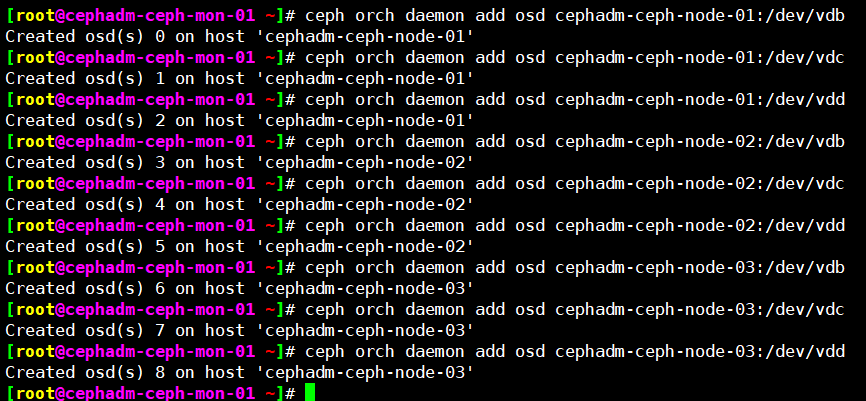

Add all disks on the node hosts to the Ceph cluster (three data disks per node, nine OSDs in total)

ceph orch daemon add osd cephadm-ceph-node-01:/dev/vdb
ceph orch daemon add osd cephadm-ceph-node-01:/dev/vdc
ceph orch daemon add osd cephadm-ceph-node-01:/dev/vdd
ceph orch daemon add osd cephadm-ceph-node-02:/dev/vdb
ceph orch daemon add osd cephadm-ceph-node-02:/dev/vdc
ceph orch daemon add osd cephadm-ceph-node-02:/dev/vdd
ceph orch daemon add osd cephadm-ceph-node-03:/dev/vdb
ceph orch daemon add osd cephadm-ceph-node-03:/dev/vdc
ceph orch daemon add osd cephadm-ceph-node-03:/dev/vdd
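Each of the three node hosts carries three 50G data disks, so the cluster ends up with nine OSDs (9 x 50G matches the 450 GiB raw capacity reported later by `ceph df`). A nested loop makes the host and device combinations explicit. This is a sketch that assumes the data disks appear as `/dev/vdb`, `/dev/vdc`, and `/dev/vdd` on every node, which should be confirmed against the `ceph orch device ls` output first. The function name is hypothetical.

```shell
# Print one OSD-creation command per (host, device) pair.
print_osd_add_commands() {
    local node dev
    for node in cephadm-ceph-node-01 cephadm-ceph-node-02 cephadm-ceph-node-03; do
        for dev in /dev/vdb /dev/vdc /dev/vdd; do
            printf 'ceph orch daemon add osd %s:%s\n' "$node" "$dev"
        done
    done
}
```

Reviewing the nine printed lines before piping them to `bash` helps catch a repeated or missing device.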

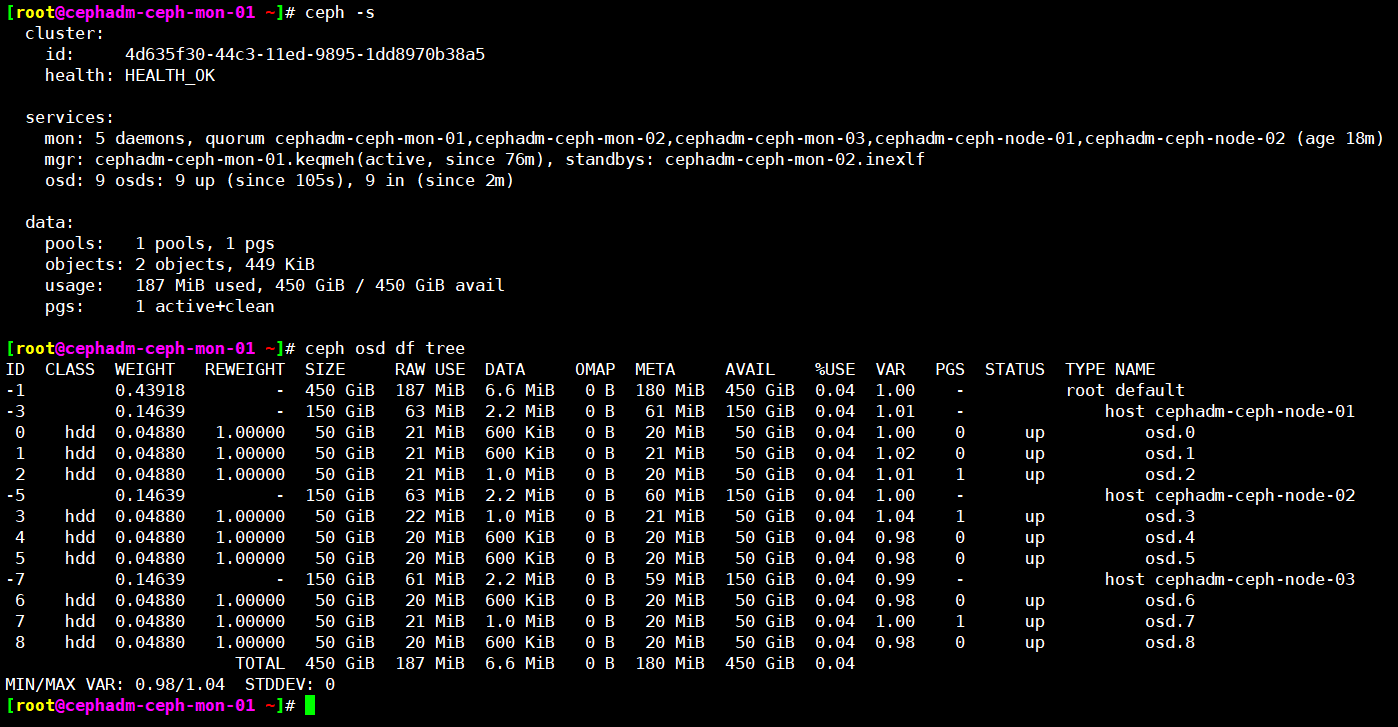

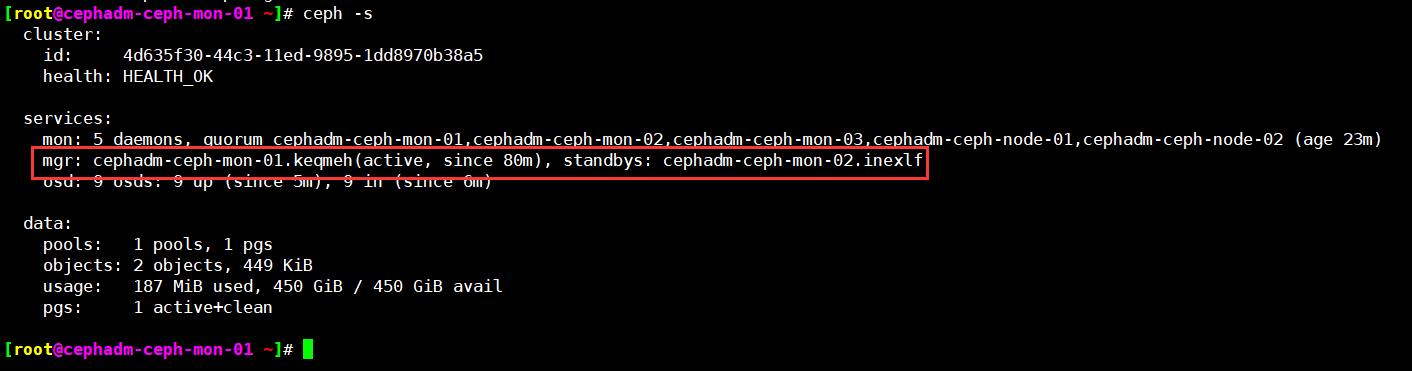

Verify the cluster status

2.4 Add dedicated ceph-mgr nodes

[root@cephadm-ceph-mon-01 ~]# cephadm shell ceph orch host add cephadm-ceph-mgr-01 172.16.88.134

[root@cephadm-ceph-mon-01 ~]# cephadm shell ceph orch host add cephadm-ceph-mgr-01 172.16.88.134
Inferring fsid 4d635f30-44c3-11ed-9895-1dd8970b38a5
Inferring config /var/lib/ceph/4d635f30-44c3-11ed-9895-1dd8970b38a5/mon.cephadm-ceph-mon-01/config
Using ceph image with id '652a1437d122' and tag 'v17' created on 2022-10-01 00:31:25 +0800 CST
quay.io/ceph/ceph@sha256:ffc55676b680c2428f7feb2daa331050d8606e2cb43a86139dbb9a2e3b02488e
Added host 'cephadm-ceph-mgr-01' with addr '172.16.88.134'
[root@cephadm-ceph-mon-01 ~]# cephadm shell ceph orch host add cephadm-ceph-mgr-02 172.16.88.135
Inferring fsid 4d635f30-44c3-11ed-9895-1dd8970b38a5
Inferring config /var/lib/ceph/4d635f30-44c3-11ed-9895-1dd8970b38a5/mon.cephadm-ceph-mon-01/config
Using ceph image with id '652a1437d122' and tag 'v17' created on 2022-10-01 00:31:25 +0800 CST
quay.io/ceph/ceph@sha256:ffc55676b680c2428f7feb2daa331050d8606e2cb43a86139dbb9a2e3b02488e
Added host 'cephadm-ceph-mgr-02' with addr '172.16.88.135'

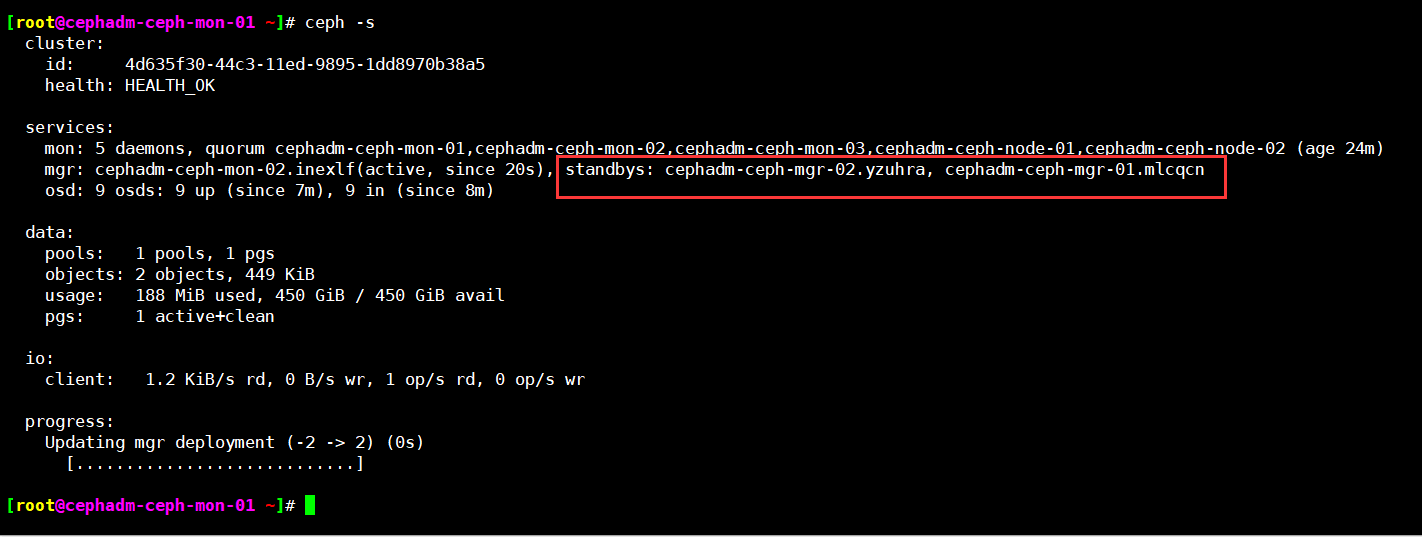

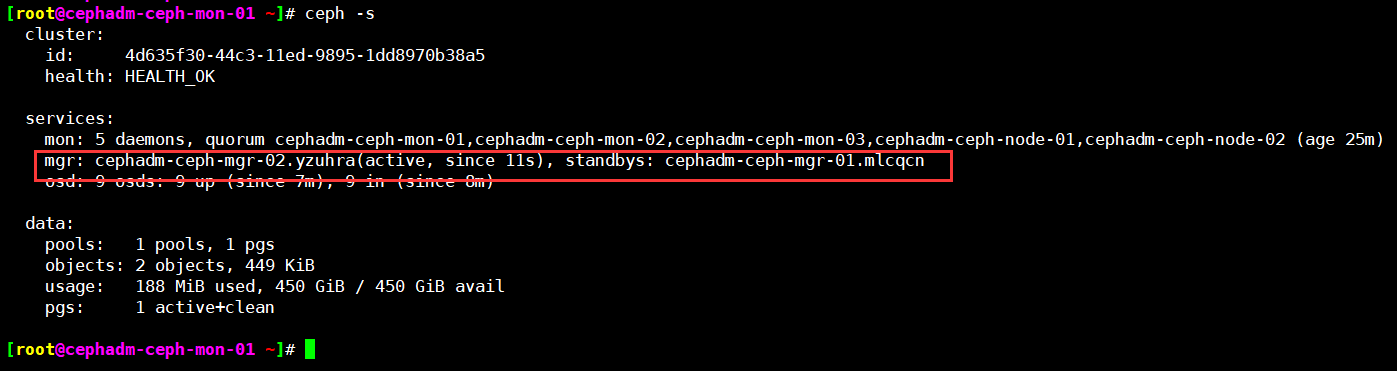

Move the mgr daemons to the dedicated mgr nodes

[root@cephadm-ceph-mon-01 ~]# ceph orch apply mgr cephadm-ceph-mgr-01,cephadm-ceph-mgr-02
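The same placement can also be kept as a service specification file, which is easier to version and reapply. The sketch below writes such a file; it was not part of the original procedure, and the file name `mgr.yaml` and the function name `write_mgr_spec` are hypothetical.

```shell
# Write a cephadm service spec that pins mgr daemons to the two dedicated hosts.
write_mgr_spec() {
    local out="${1:-mgr.yaml}"
    cat > "$out" <<'EOF'
service_type: mgr
placement:
  hosts:
    - cephadm-ceph-mgr-01
    - cephadm-ceph-mgr-02
EOF
}
```

After `write_mgr_spec mgr.yaml`, running `ceph orch apply -i mgr.yaml` should schedule the same placement as the one-line command, and `ceph -s` shows which mgr is active afterwards.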

3. Create a pool for testing

[root@cephadm-ceph-mon-01 ~]# ceph osd pool create rbd-data1 32 32    # create the rbd-data1 pool
pool 'rbd-data1' created
[root@cephadm-ceph-mon-01 ~]# ceph osd pool ls    # list all pools in the cluster
.mgr
rbd-data1
[root@cephadm-ceph-mon-01 ~]# ceph df
--- RAW STORAGE ---
CLASS     SIZE    AVAIL     USED  RAW USED  %RAW USED
hdd    450 GiB  450 GiB  190 MiB   190 MiB       0.04
TOTAL  450 GiB  450 GiB  190 MiB   190 MiB       0.04

--- POOLS ---
POOL       ID  PGS   STORED  OBJECTS     USED  %USED  MAX AVAIL
.mgr        1    1  449 KiB        2  1.3 MiB      0    142 GiB
rbd-data1   2   32      0 B        0      0 B      0    142 GiB
[root@cephadm-ceph-mon-01 ~]# ceph osd pool application enable rbd-data1 rbd    # enable the rbd application on the rbd-data1 pool
enabled application 'rbd' on pool 'rbd-data1'
[root@cephadm-ceph-mon-01 ~]# rbd pool init -p rbd-data1
[root@cephadm-ceph-mon-01 ~]# rbd create data-img1 --size 3G --pool rbd-data1 --image-format 2 --image-feature layering    # create a 3G image named data-img1 in the rbd-data1 pool
[root@cephadm-ceph-mon-01 ~]# rbd create data-img2 --size 3G --pool rbd-data1 --image-format 2 --image-feature layering
[root@cephadm-ceph-mon-01 ~]# rbd ls --pool rbd-data1
data-img1
data-img2
[root@cephadm-ceph-mon-01 ~]# rbd ls --pool rbd-data1 -l
NAME       SIZE   PARENT  FMT  PROT  LOCK
data-img1  3 GiB            2
data-img2  3 GiB            2
[root@cephadm-ceph-mon-01 ~]# rbd --image data-img2 --pool rbd-data1 info    # show details of the data-img2 image in rbd-data1
rbd image 'data-img2':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 39c13b224fb9
    block_name_prefix: rbd_data.39c13b224fb9
    format: 2
    features: layering
    op_features:
    flags:
    create_timestamp: Thu Oct 6 21:20:59 2022
    access_timestamp: Thu Oct 6 21:20:59 2022
    modify_timestamp: Thu Oct 6 21:20:59 2022
[root@cephadm-ceph-mon-01 ~]# rbd --image data-img1 --pool rbd-data1 info
rbd image 'data-img1':
    size 3 GiB in 768 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 39b7dc5e8e0a
    block_name_prefix: rbd_data.39b7dc5e8e0a
    format: 2
    features: layering
    op_features:
    flags:
    create_timestamp: Thu Oct 6 21:20:53 2022
    access_timestamp: Thu Oct 6 21:20:53 2022
    modify_timestamp: Thu Oct 6 21:20:53 2022

Map and mount the RBD image
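Two figures in the output above can be checked with simple arithmetic. `rbd info` reports `order 22`, meaning each object is 2^22 bytes (4 MiB), so a 3 GiB image spans 768 objects. `ceph df` reports 450 GiB raw; assuming the pool uses three replicas (the replica count is not shown in this post), the upper bound on usable space is 150 GiB, and the reported MAX AVAIL of 142 GiB is somewhat lower, presumably because Ceph reserves headroom before OSDs fill up. The function names below are hypothetical.

```shell
# Number of objects backing an RBD image: image size divided by object size (2^order bytes).
rbd_object_count() {
    local size_gib="$1" order="$2"
    local object_bytes=$((1 << order))
    echo $(( size_gib * 1024 * 1024 * 1024 / object_bytes ))
}

# Upper bound on usable capacity for a replicated pool: raw capacity divided by replica count.
replicated_capacity_gib() {
    local raw_gib="$1" replicas="$2"
    echo $(( raw_gib / replicas ))
}

rbd_object_count 3 22          # 3 GiB image, order 22
replicated_capacity_gib 450 3  # 450 GiB raw, assumed 3 replicas
```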

[root@cephadm-ceph-mon-01 ~]# rbd -p rbd-data1 map data-img1    # map the RBD image to a local block device
/dev/rbd0
[root@cephadm-ceph-mon-01 ~]# lsblk
NAME                      MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
loop0                       7:0    0 67.2M  1 loop /snap/lxd/21835
loop1                       7:1    0   47M  1 loop /snap/snapd/16292
loop2                       7:2    0 67.8M  1 loop /snap/lxd/22753
loop3                       7:3    0   62M  1 loop /snap/core20/1587
loop4                       7:4    0 63.2M  1 loop /snap/core20/1623
loop5                       7:5    0   48M  1 loop /snap/snapd/17029
rbd0                      251:0    0    3G  0 disk
vda                       252:0    0   50G  0 disk
├─vda1                    252:1    0    1M  0 part
├─vda2                    252:2    0  1.5G  0 part /boot
└─vda3                    252:3    0 48.5G  0 part
  └─ubuntu--vg-ubuntu--lv 253:0    0 48.5G  0 lvm  /
[root@cephadm-ceph-mon-01 ~]# mkdir /rbd-data
[root@cephadm-ceph-mon-01 ~]# mkfs.xfs /dev/rbd0
meta-data=/dev/rbd0              isize=512    agcount=8, agsize=98304 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1
data     =                       bsize=4096   blocks=786432, imaxpct=25
         =                       sunit=16     swidth=16 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=16 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@cephadm-ceph-mon-01 ~]# mount /dev/rbd0 /rbd-data/
[root@cephadm-ceph-mon-01 ~]# df -Th
Filesystem                        Type      Size  Used Avail Use% Mounted on
udev                              devtmpfs  1.9G     0  1.9G   0% /dev
tmpfs                             tmpfs     394M  1.7M  392M   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4       48G   12G   35G  25% /
tmpfs                             tmpfs     2.0G     0  2.0G   0% /dev/shm
tmpfs                             tmpfs     5.0M     0  5.0M   0% /run/lock
tmpfs                             tmpfs     2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/loop1                        squashfs   47M   47M     0 100% /snap/snapd/16292
/dev/loop0                        squashfs   68M   68M     0 100% /snap/lxd/21835
/dev/loop3                        squashfs   62M   62M     0 100% /snap/core20/1587
/dev/loop2                        squashfs   68M   68M     0 100% /snap/lxd/22753
/dev/vda2                         ext4      1.5G  205M  1.2G  15% /boot
/dev/loop5                        squashfs   48M   48M     0 100% /snap/snapd/17029
/dev/loop4                        squashfs   64M   64M     0 100% /snap/core20/1623
tmpfs                             tmpfs     394M     0  394M   0% /run/user/0
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/566d851e9c98bf7dc7fcfd9af57213657c3594981e9a90970dfcce54845b786c/merged
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/719c6850931528a0ccb13cd89f660a481f82923ac0d710874ed2fad8f58852e8/merged
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/3b2595d12e589f79a09ed36ea2e6760bfb73e04d866beb1352fe9230bc1e5520/merged
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/3f3e47fc108ba57d194a8dc55431e0c6d7bce1b4dddf2ffd4ac171193eafe63d/merged
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/53c9d28bf88152b45d148abdd80ed3a713a45c92c6f3c69452f7d8773a45928b/merged
overlay                           overlay    48G   12G   35G  25% /var/lib/docker/overlay2/8bf9de2d17ff69e1efc0463417f1d0ce5a4a85a56eea2c195f6470173b25f7e8/merged
/dev/rbd0                         xfs       3.0G   54M  3.0G   2% /rbd-data
[root@cephadm-ceph-mon-01 ~]# cd /rbd-data/
[root@cephadm-ceph-mon-01 rbd-data]# ls
[root@cephadm-ceph-mon-01 rbd-data]# touch test-{1..9}.txt
[root@cephadm-ceph-mon-01 rbd-data]# ll -h
total 4.0K
drwxr-xr-x  2 root root  168 Oct  6 21:29 ./
drwxr-xr-x 20 root root 4.0K Oct  6 21:27 ../
-rw-r--r--  1 root root    0 Oct  6 21:29 test-1.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-2.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-3.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-4.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-5.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-6.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-7.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-8.txt
-rw-r--r--  1 root root    0 Oct  6 21:29 test-9.txt

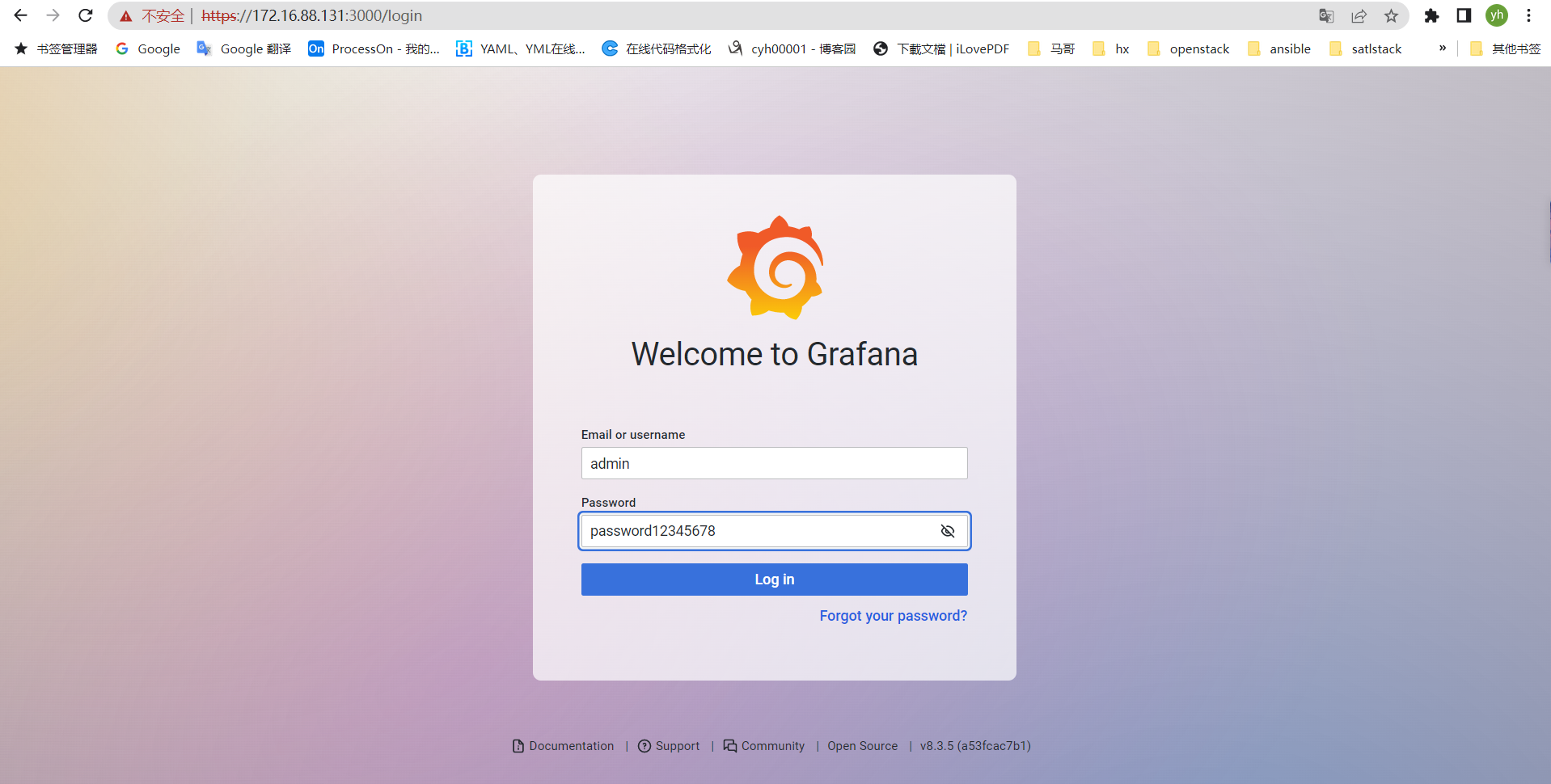

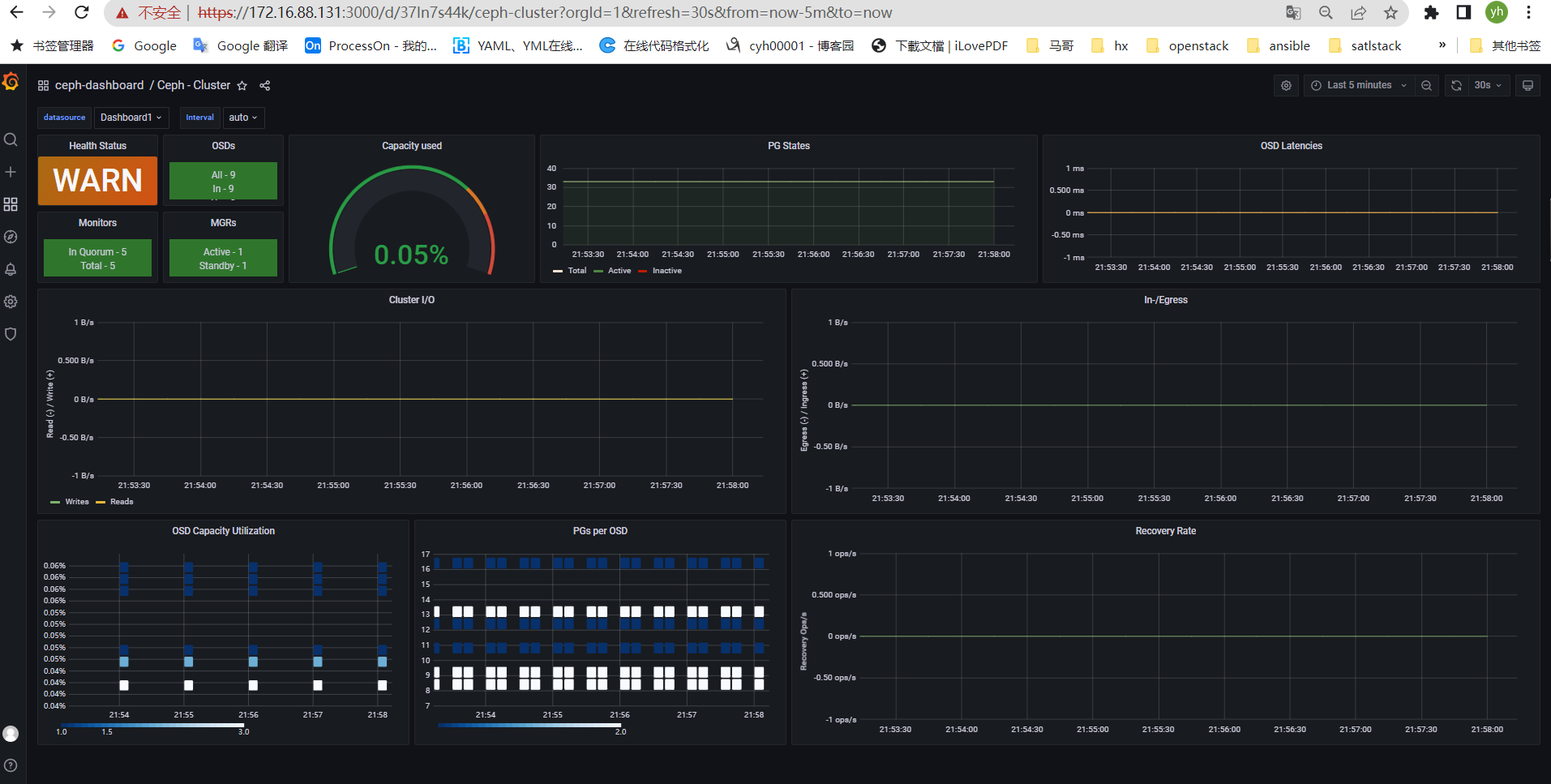

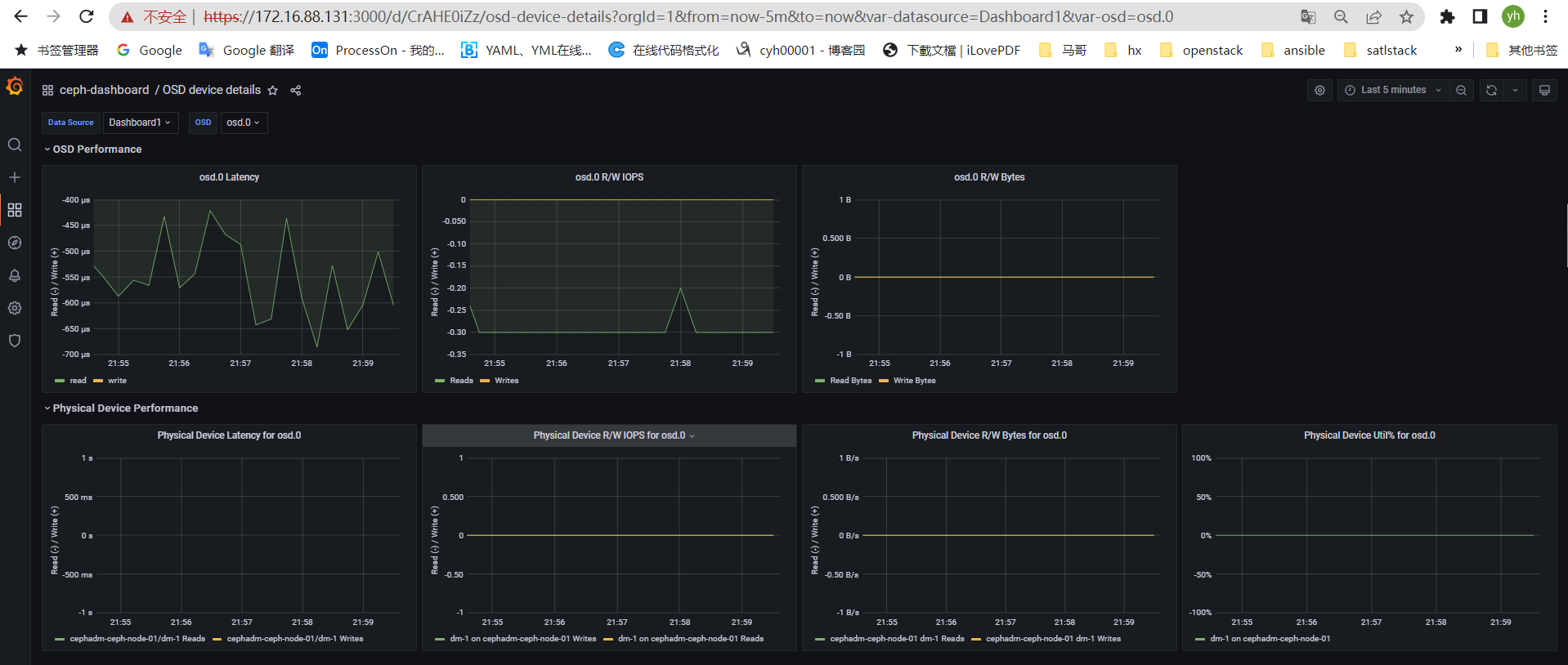

4. Verify the bundled Prometheus monitoring

Change the initial Grafana admin password

[root@cephadm-ceph-mon-01 ~]# vim grafana.yaml
[root@cephadm-ceph-mon-01 ~]# cat grafana.yaml
service_type: grafana
spec:
  initial_admin_password: password12345678
[root@cephadm-ceph-mon-01 ~]# ceph orch apply -i grafana.yaml
Scheduled grafana update...
[root@cephadm-ceph-mon-01 ~]# ceph orch redeploy grafana
Scheduled to redeploy grafana.cephadm-ceph-mon-01 on host 'cephadm-ceph-mon-01'
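When the step needs to be scripted rather than edited in vim, the same spec can be written non-interactively. The sketch below produces a file with the fields shown above and restricts its permissions, since it holds a plaintext password. The function name `write_grafana_spec` is hypothetical.

```shell
# Write a grafana service spec with the given initial admin password.
write_grafana_spec() {
    local out="$1" password="$2"
    if [ -z "$out" ] || [ -z "$password" ]; then
        echo "usage: write_grafana_spec <file> <password>" >&2
        return 1
    fi
    # Run in a subshell so the tighter umask does not leak into the caller.
    (
        umask 077
        cat > "$out" <<EOF
service_type: grafana
spec:
  initial_admin_password: $password
EOF
    )
}
```

For example, `write_grafana_spec grafana.yaml 'password12345678'` recreates the file from this section, after which `ceph orch apply -i grafana.yaml` and `ceph orch redeploy grafana` apply it as shown.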

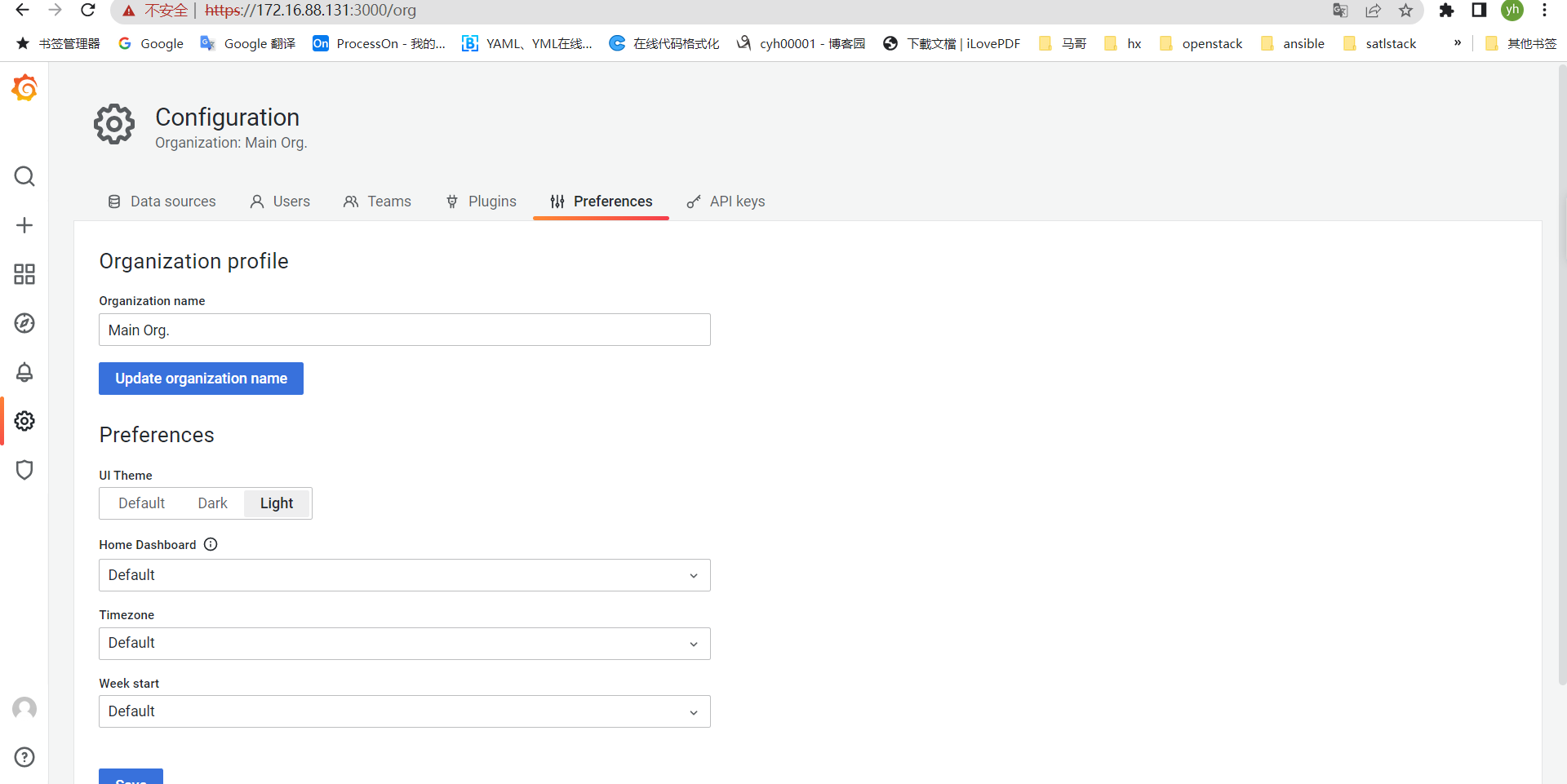

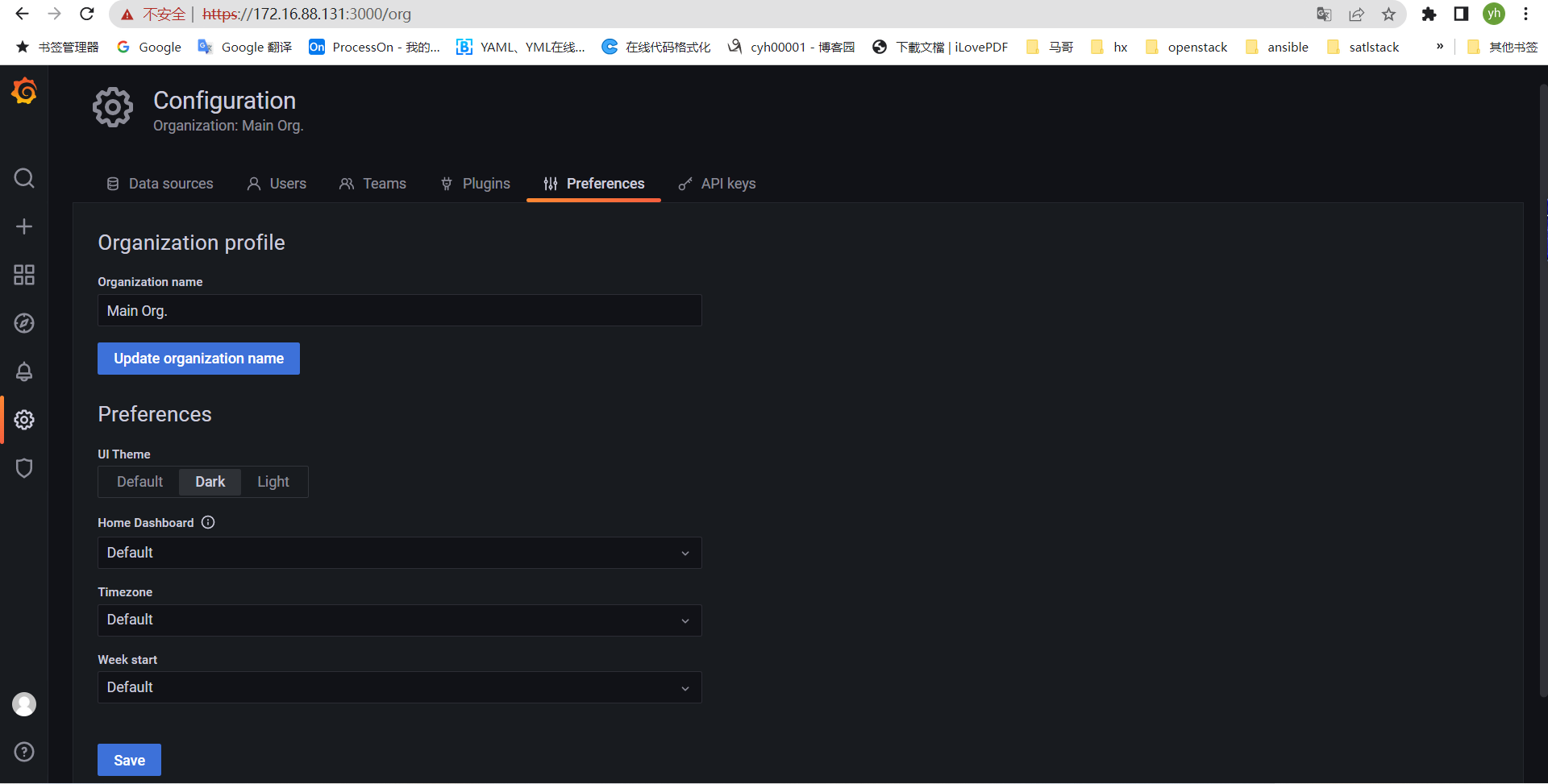

Adjusting the background color

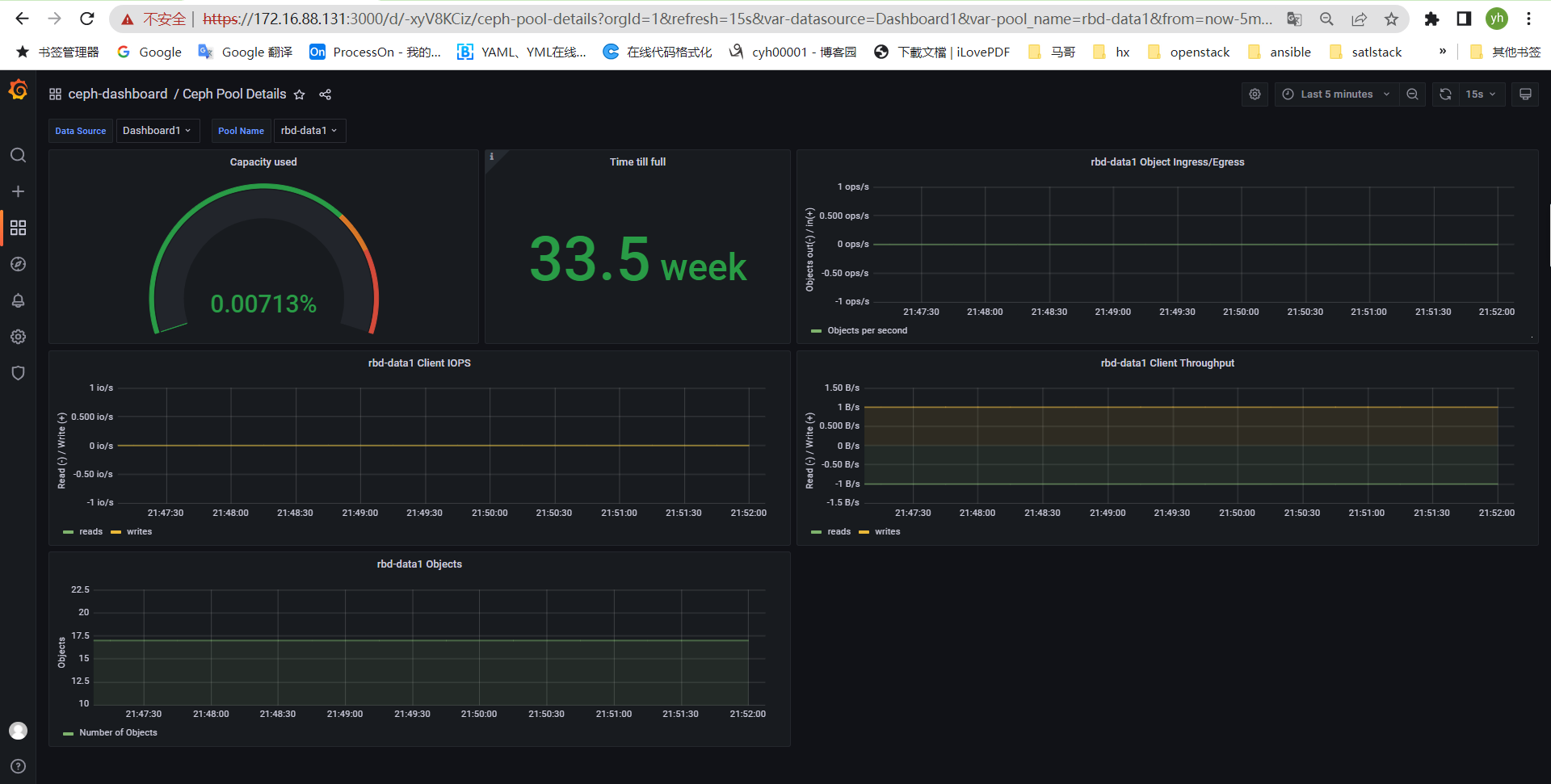

Host monitoring

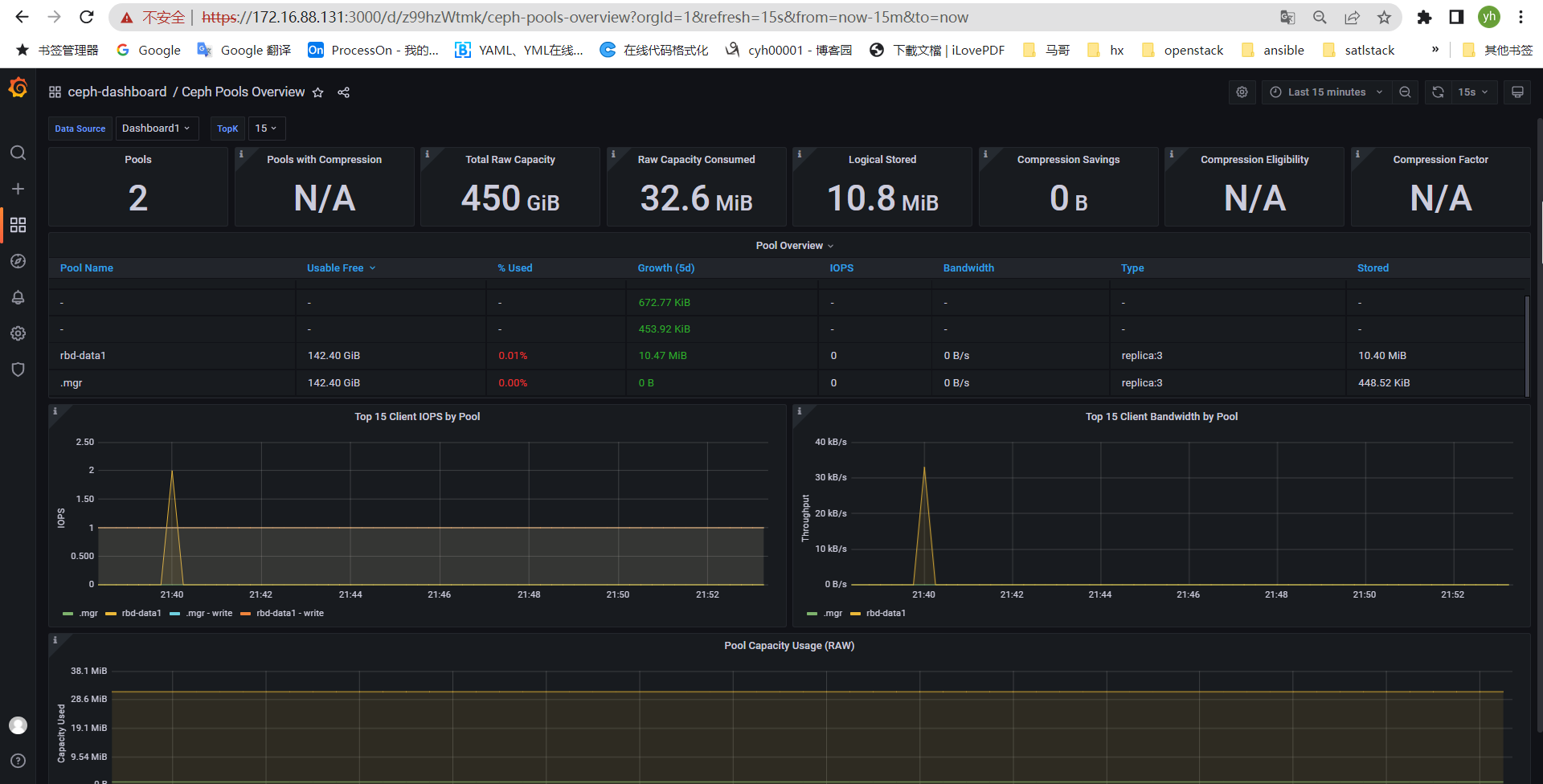

Pool monitoring
