Deploying Ceph 16.2.10 with ceph-deploy on Ubuntu 20.04

1. Introduction to Ceph

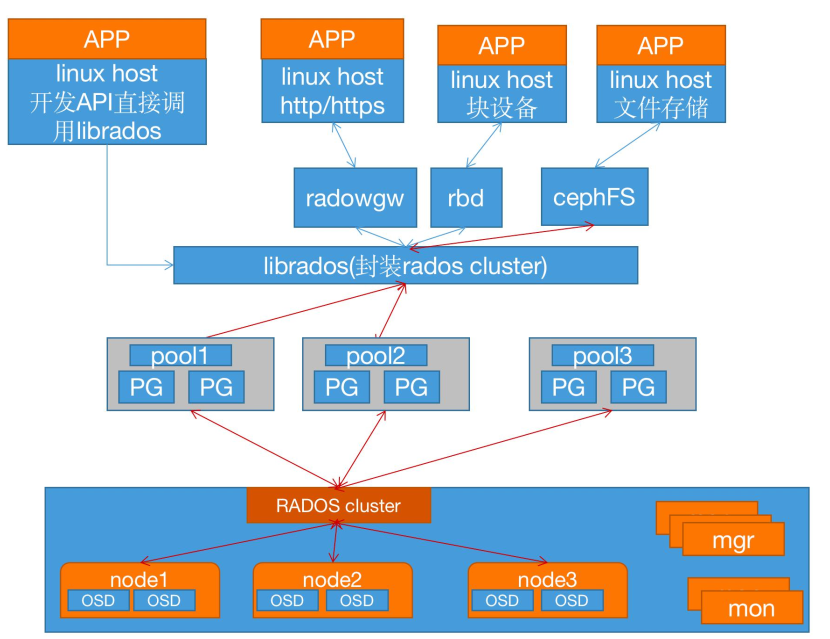

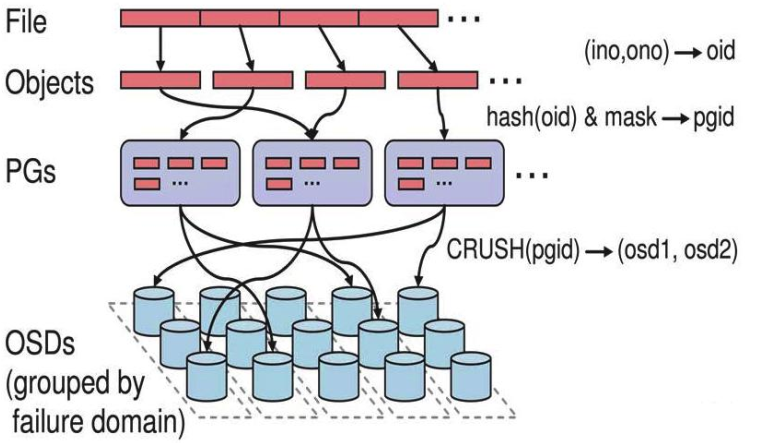

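Ceph is an open-source distributed storage system that provides object storage, block devices, and a file system at the same time. Ceph supports storage at EB scale (1 EB = 1,000,000,000 GB). Ceph splits every data stream it manages (a file, for example) into one or more objects of a fixed size (4 MiB by default) and reads and writes data with the object as the atomic, smallest indivisible unit. Ceph's underlying storage service is a storage cluster made up of multiple storage hosts. This cluster is called the RADOS (Reliable Autonomic Distributed Object Store) cluster: a reliable, autonomic, distributed object storage system.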
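The object-splitting arithmetic above can be illustrated with a small shell sketch. It assumes the 4 MiB default object size and simply performs a ceiling division; `objects_for_size` is a hypothetical helper, and real clients (RBD, CephFS) can be configured with other object sizes and striping settings.

```shell
# Default object size assumed here: 4 MiB (4 * 1024 * 1024 bytes)
OBJECT_SIZE=$((4 * 1024 * 1024))

# objects_for_size BYTES: ceiling of BYTES / OBJECT_SIZE, i.e. how many
# fixed-size objects a data stream of that length is cut into
objects_for_size() {
    size=$1
    if [ "$size" -le 0 ]; then
        echo 0
        return
    fi
    echo $(( (size + OBJECT_SIZE - 1) / OBJECT_SIZE ))
}
```

For example, `objects_for_size 10485760` (a 10 MiB file) prints 3: two full 4 MiB objects plus a final object holding the remaining 2 MiB.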

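librados is the API of the RADOS storage cluster, with client bindings for C/C++, Java, Python, Ruby, PHP, Go, and other languages.

Ceph role definitions

LIBRADOS, RADOSGW, RBD, and CephFS are collectively called the Ceph client interfaces. RADOSGW, RBD, and CephFS are built on top of the multi-language interfaces that LIBRADOS provides.

The components of a Ceph cluster: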

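- Several Ceph OSDs (Object Storage Daemons)

- At least one Ceph Monitor (an odd number: 1, 3, 5, 7, ...)

- Two or more Ceph Managers

- Highly available Ceph Metadata Servers (file-system metadata servers), additionally required when running Ceph File System clients

- RADOS cluster: the Ceph cluster made up of multiple storage hosts

- OSD (Object Storage Daemon): the storage space formed by the disks of each storage server

- Mon (Monitor): the Ceph monitor, which maintains the cluster state of OSDs and PGs. A Ceph cluster needs at least one mon, and the count is normally odd: one, three, five, seven, and so on.

- Mgr (Manager): tracks runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load.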
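Why an odd number of monitors: the monitors only operate while a strict majority of them (a Paxos quorum) is reachable. The shell sketch below prints the quorum size and the number of monitors that can fail for 1 to 5 monitors; `quorum_size` and `tolerated_failures` are hypothetical helper names.

```shell
# quorum_size N: smallest majority of N monitors (integer division rounds down)
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: monitors that may be lost while a majority survives
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
    echo "mons=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
```

With 4 monitors the cluster still survives only one failure, the same as with 3, so the fourth monitor adds cost without adding fault tolerance.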

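Monitor (ceph-mon), the Ceph monitor

A daemon running on a host that maintains maps of the cluster state, for example how many pools the cluster has, how many PGs each pool has, and how pools map to PGs. These maps are the monitor map, manager map, OSD map, MDS map, and CRUSH map, the critical cluster state that Ceph daemons need in order to coordinate with one another. Monitors are also responsible for authentication between daemons and clients (using the cephx protocol). At least three monitors are normally required for redundancy and high availability.

Managers (ceph-mgr):

A daemon running on a host. The Ceph Manager daemon (ceph-mgr) tracks runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. It also hosts Python-based modules that manage and expose cluster information, including the web-based Ceph Dashboard and a REST API. At least two managers are normally required for high availability.

Ceph OSDs (Object Storage Daemons, ceph-osd):

Store the data; typically each disk on a host is served by one OSD daemon. OSDs handle data replication, recovery, and rebalancing, and provide some monitoring information to the Ceph Monitors and Managers by checking the heartbeats of other Ceph OSD daemons. At least three Ceph OSDs are normally required for redundancy and high availability.

MDS (Ceph Metadata Server, ceph-mds):

Stores metadata on behalf of the Ceph File System (CephFS), which can in turn be exported over NFS/CIFS. Ceph Block Devices and Ceph Object Storage do not use the MDS.

The Ceph admin node:

1. The usual Ceph administration interface is a set of command-line tools such as rados, ceph, and rbd; an administrator can run management operations from a particular ceph-mon node.

2. A dedicated admin (deploy) node is recommended for configuring, upgrading, and maintaining Ceph. It simplifies permission management: only administrators have access to the admin node, which helps prevent accidental misoperations.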

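Ceph logical architecture

- Pool: a storage pool (a partition); its size depends on the underlying storage capacity.

- PG (placement group): a pool contains multiple PGs. Pools and PGs are both abstract logical concepts, and the number of PGs in a pool can be calculated with a formula.

- OSD (Object Storage Daemon): each disk is one OSD, and a host contains one or more OSDs.

- After the cluster is deployed, a pool must be created before any data can be written to Ceph. Before a file is stored, it is split into objects, and each object is placed by hashing: the hash of the object name, reduced modulo the pool's PG count, selects exactly one PG in one pool, and the CRUSH algorithm then maps that PG onto OSDs. (This is a plain hash-and-modulo step rather than a consistent-hash ring.) Each object is written to the primary OSD first, which then replicates it to the secondary OSDs for high availability.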
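The PG count formula and the object-to-PG step described above can be sketched in shell. `pg_count` applies the rule of thumb from older Ceph documentation, (OSDs × 100) / replica count rounded up to a power of two. `stable_mod` mirrors the logic of Ceph's `ceph_stable_mod()`, which is designed so that raising pg_num splits existing PGs instead of reshuffling every object. `object_to_pg` is hypothetical and illustrative only: it uses `cksum` (CRC32) as a stand-in for the rjenkins hash Ceph actually applies to object names, so its PG numbers will not match a real cluster, and the CRUSH step from PG to OSDs is not modeled.

```shell
# pg_count NUM_OSDS POOL_SIZE: (OSDs * 100) / replicas, rounded up to a power of two
pg_count() {
    target=$(( $1 * 100 / $2 ))
    pgs=1
    while [ "$pgs" -lt "$target" ]; do
        pgs=$(( pgs * 2 ))
    done
    echo "$pgs"
}

# stable_mod X B BMASK: same logic as Ceph's ceph_stable_mod();
# BMASK is the next power of two >= B, minus one
stable_mod() {
    if [ $(( $1 & $3 )) -lt "$2" ]; then
        echo $(( $1 & $3 ))
    else
        echo $(( $1 & ($3 >> 1) ))
    fi
}

# object_to_pg NAME PG_NUM: illustrative only; cksum (CRC32) stands in for
# the rjenkins hash that Ceph really uses
object_to_pg() {
    mask=1
    while [ "$mask" -lt "$2" ]; do
        mask=$(( mask * 2 ))
    done
    hash=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
    stable_mod "$hash" "$2" $(( mask - 1 ))
}
```

For the nine OSDs planned in 2.1 (3 nodes × 3 data disks) and 3 replicas, `pg_count 9 3` prints 512, a budget shared by all pools. Pacific enables the pg_autoscaler manager module by default, so this calculation is mostly useful for understanding what the autoscaler does.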

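Use cases for Ceph storage

Block storage:

Block storage must be formatted with a file system and mounted before use. Its compatibility with operating systems is relatively good, since it can be formatted with any file system the OS supports. Each service is usually given its own block device, so block storage is independent per service rather than shared: the Redis master and slave have separate block storage, each ZooKeeper node has its own, and the MySQL master and slave have separate block storage. Block storage also fits system disks and data disks for virtual machines in private and public clouds.

CephFS:

For scenarios that need data shared across multiple hosts, for example several nginx instances reading data that several Tomcat instances write to storage, use CephFS.

Object storage:

For data that is rarely changed, deleted, or modified, such as short videos and app downloads, use object storage.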

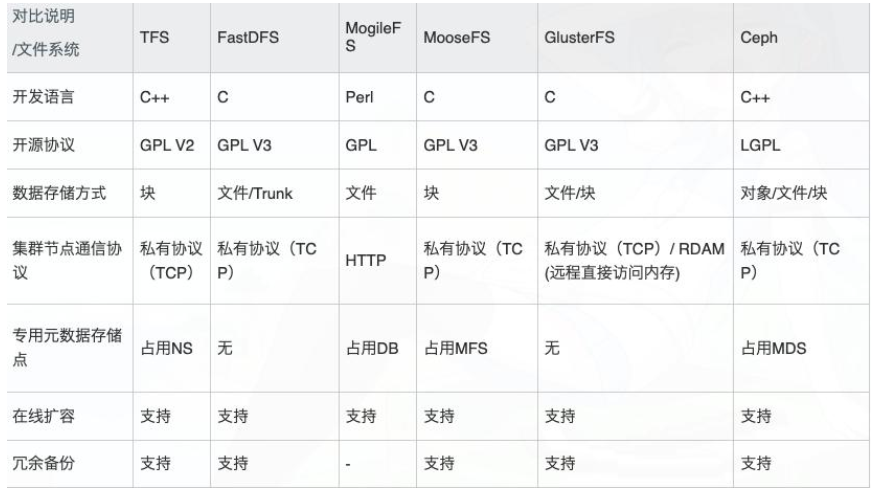

Comparison of Ceph features

2. Environment preparation

2.1 Machine environment

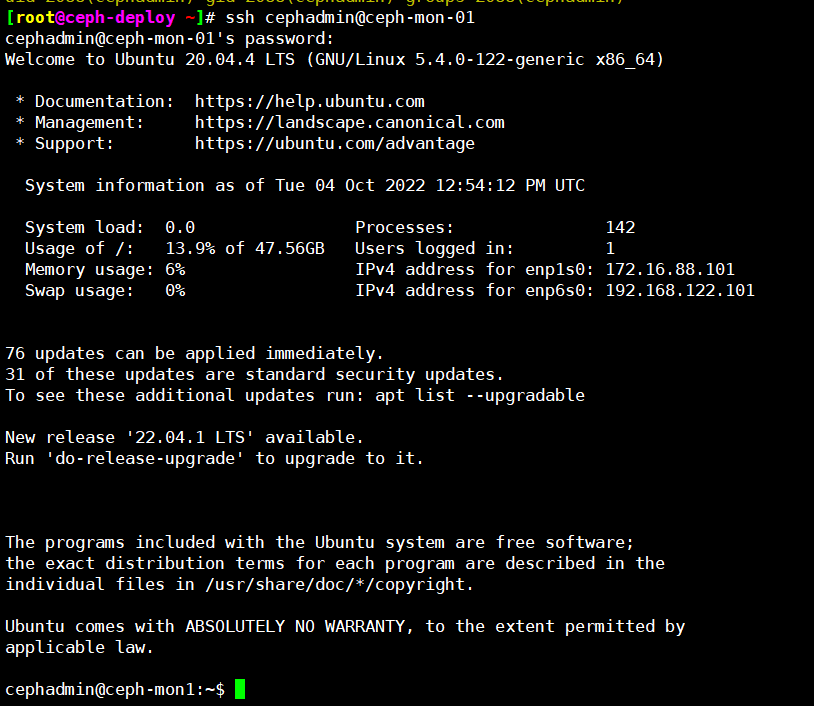

| IP (public / cluster) | FQDN | Hostname | CPU | Memory | System disk | Data disks |
|---|---|---|---|---|---|---|
| 172.16.88.100 / 192.168.122.100 | ceph-deploy.example.local | ceph-deploy | 2 vCPU | 4G | 50G | - |
| 172.16.88.101 / 192.168.122.101 | ceph-mon1.example.local | ceph-mon1 | 2 vCPU | 4G | 50G | - |
| 172.16.88.102 / 192.168.122.102 | ceph-mon2.example.local | ceph-mon2 | 2 vCPU | 4G | 50G | - |
| 172.16.88.103 / 192.168.122.103 | ceph-mon3.example.local | ceph-mon3 | 2 vCPU | 4G | 50G | - |
| 172.16.88.111 / 192.168.122.111 | ceph-mgr1.example.local | ceph-mgr1 | 2 vCPU | 4G | 50G | - |
| 172.16.88.112 / 192.168.122.112 | ceph-mgr2.example.local | ceph-mgr2 | 2 vCPU | 4G | 50G | - |
| 172.16.88.121 / 192.168.122.121 | ceph-node1.example.local | ceph-node1 | 2 vCPU | 4G | 50G | 3 × 100G |
| 172.16.88.122 / 192.168.122.122 | ceph-node2.example.local | ceph-node2 | 2 vCPU | 4G | 50G | 3 × 100G |
| 172.16.88.123 / 192.168.122.123 | ceph-node3.example.local | ceph-node3 | 2 vCPU | 4G | 50G | 3 × 100G |

Ceph management network (Ceph public network): 172.16.88.0/24

Ceph cluster network: 192.168.122.0/24
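ceph-deploy addresses the nodes by host name (ceph-mon1.example.local, ceph-node1, and so on), so every node must be able to resolve the others' names. The document does not show how this was configured; assuming /etc/hosts is used rather than DNS, this sketch generates the entries from the table above. `hosts_entries` is a hypothetical helper, and the names map to the public (172.16.88.0/24) addresses, which matches what `ceph-deploy new` resolved ceph-mon1 to in its log.

```shell
DOMAIN=example.local

# hosts_entries: print one /etc/hosts line per node (public IP, FQDN, short name)
hosts_entries() {
    while read -r ip name; do
        [ -n "$ip" ] || continue
        echo "$ip $name.$DOMAIN $name"
    done <<EOF
172.16.88.100 ceph-deploy
172.16.88.101 ceph-mon1
172.16.88.102 ceph-mon2
172.16.88.103 ceph-mon3
172.16.88.111 ceph-mgr1
172.16.88.112 ceph-mgr2
172.16.88.121 ceph-node1
172.16.88.122 ceph-node2
172.16.88.123 ceph-node3
EOF
}
```

On each node the output could be appended with `hosts_entries | sudo tee -a /etc/hosts`.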

2.2 Installing ceph-deploy 2.1.0

[root@ceph-deploy ~]# apt-get update

[root@ceph-deploy ~]# apt install python3-pip sshpass ansible -y

[root@ceph-deploy ~]# pip3 install git+https://github.com/ceph/ceph-deploy.git

Collecting git+https://github.com/ceph/ceph-deploy.git

Cloning https://github.com/ceph/ceph-deploy.git to /tmp/pip-req-build-7dsj_uqq

Running command git clone -q https://github.com/ceph/ceph-deploy.git /tmp/pip-req-build-7dsj_uqq

Collecting remoto>=1.1.4

Downloading remoto-1.2.1.tar.gz (18 kB)

Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from ceph-deploy==2.1.0) (45.2.0)

Collecting execnet

Downloading execnet-1.9.0-py2.py3-none-any.whl (39 kB)

Building wheels for collected packages: ceph-deploy, remoto

Building wheel for ceph-deploy (setup.py) ... done

Created wheel for ceph-deploy: filename=ceph_deploy-2.1.0-py3-none-any.whl size=120100 sha256=a40ee5c39c529b8a83aaf8ee45411e7686dd33c4aaa98201396be089ccf79b7a

Stored in directory: /tmp/pip-ephem-wheel-cache-bx3ucslp/wheels/c5/71/d4/95e790facb9f85aebcd09443a8a1ea69cda23237cf1ca74de0

Building wheel for remoto (setup.py) ... done

Created wheel for remoto: filename=remoto-1.2.1-py3-none-any.whl size=20056 sha256=b8966bc6801e3bd0d9c80dd5c13049eadd1840136cadf7284222c26981875570

Stored in directory: /root/.cache/pip/wheels/2e/40/7d/b2e089ff5311103963afb8d33695f0e0a88f4aace228ec5ae2

Successfully built ceph-deploy remoto

Installing collected packages: execnet, remoto, ceph-deploy

Attempting uninstall: ceph-deploy

Found existing installation: ceph-deploy 2.0.1

Uninstalling ceph-deploy-2.0.1:

Successfully uninstalled ceph-deploy-2.0.1

Successfully installed ceph-deploy-2.1.0 execnet-1.9.0 remoto-1.2.1

If the network is slow, you can follow https://www.cnblogs.com/valeb/p/16131422.html instead: clone the source tree and upgrade/install it separately:

git clone https://github.com/ceph/ceph-deploy.git

cd ceph-deploy

python3 setup.py install

2.3 Adding the Ceph apt repository

[root@ceph-deploy ~]# vi /etc/ansible/hosts

[root@ceph-deploy ~]# cat /etc/ansible/hosts

[vm]
172.16.88.100
172.16.88.101
172.16.88.102
172.16.88.103
172.16.88.111
172.16.88.112
172.16.88.121
172.16.88.122
172.16.88.123

[root@ceph-deploy ~]# ansible 'vm' -m shell -a "apt install -y apt-transport-https ca-certificates curl software-properties-common"

[root@ceph-deploy ~]# ansible 'vm' -m shell -a "wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | apt-key add -"

[root@ceph-deploy ~]# ansible 'vm' -m shell -a "apt-add-repository 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main'"

Verify the newly added repository

[root@ceph-deploy ~]# cat /etc/apt/sources.list

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
# Pre-release (proposed) repository; enabling it is not recommended
# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main
# deb-src https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ focal main
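To confirm that the Ceph repository line is active (and not just the commented deb-src line), a small grep-based check can help. `has_active_repo` is a hypothetical helper; it looks only at uncommented binary `deb` lines and treats the fragment as a plain substring.

```shell
# has_active_repo FILE FRAGMENT: succeed if FILE has an uncommented binary
# "deb" line whose text contains FRAGMENT (fixed string, not a regex)
has_active_repo() {
    grep -E '^[[:space:]]*deb[[:space:]]' "$1" | grep -qF "$2"
}
```

For example, `has_active_repo /etc/apt/sources.list ceph/debian-pacific && echo "pacific repo enabled"`. After `apt-get update`, `apt-cache policy ceph-mon` should then list 16.2.10-1focal from the mirror as a candidate.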

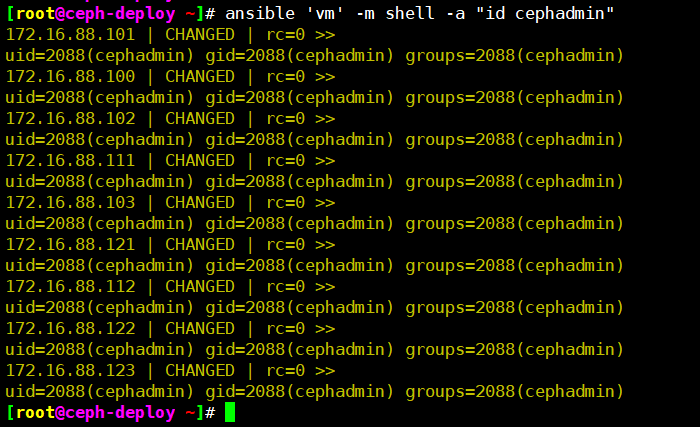

2.4 Creating the cephadmin user

[root@ceph-deploy ~]# ansible 'vm' -m shell -a "groupadd -r -g 2088 cephadmin && useradd -r -m -s /bin/bash -u 2088 -g 2088 cephadmin && echo cephadmin:123456 | chpasswd"

Grant cephadmin sudo privileges

[root@ceph-deploy ~]# ansible 'vm' -m shell -a "echo 'cephadmin ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers"

Verify that cephadmin has sudo privileges

[root@ceph-deploy ~]# cat /etc/sudoers
#
# This file MUST be edited with the 'visudo' command as root.
#
# Please consider adding local content in /etc/sudoers.d/ instead of
# directly modifying this file.
#
# See the man page for details on how to write a sudoers file.
#
Defaults env_reset
Defaults mail_badpass
Defaults secure_path="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin"

# Host alias specification

# User alias specification

# Cmnd alias specification

# User privilege specification
root ALL=(ALL:ALL) ALL

# Members of the admin group may gain root privileges
%admin ALL=(ALL) ALL

# Allow members of group sudo to execute any command
%sudo ALL=(ALL:ALL) ALL

# See sudoers(5) for more information on "#include" directives:

#includedir /etc/sudoers.d
cephadmin ALL=(ALL) NOPASSWD: ALL
[root@ceph-deploy ~]# su cephadmin
cephadmin@ceph-deploy:/root$ sudo su -
[root@ceph-deploy ~]#
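The sudoers header itself recommends putting local rules in /etc/sudoers.d/ rather than appending to /etc/sudoers, where a typo can lock out sudo entirely. A sketch of that alternative: write the same rule into a drop-in file with mode 0440 and syntax-check it with `visudo -cf` before installing it. `write_sudoers_dropin` is a hypothetical helper that takes the target directory as an argument, so it can be tried in a scratch directory first.

```shell
# write_sudoers_dropin DIR USER: install DIR/USER containing a NOPASSWD rule,
# mode 0440; on a real host DIR is /etc/sudoers.d and this runs as root
write_sudoers_dropin() {
    dir=$1 user=$2
    tmp=$(mktemp) || return 1
    printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$tmp"
    chmod 0440 "$tmp"
    # Syntax-check before installing; only attempted as root, where visudo can run
    if [ "$(id -u)" -eq 0 ] && command -v visudo >/dev/null 2>&1; then
        if ! visudo -cf "$tmp" >/dev/null; then
            rm -f "$tmp"
            return 1
        fi
    fi
    install -m 0440 "$tmp" "$dir/$user"
    rm -f "$tmp"
}
```

On a node this would be called as root with `write_sudoers_dropin /etc/sudoers.d cephadmin`. The file is named after the user because sudo skips drop-in files whose names contain a dot.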

2.5 Creating an SSH key for cephadmin and pushing it to the nodes

[root@ceph-deploy ~]# su cephadmin
cephadmin@ceph-deploy:/root$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/cephadmin/.ssh/id_rsa):
Created directory '/home/cephadmin/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/cephadmin/.ssh/id_rsa
Your public key has been saved in /home/cephadmin/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:tsn/qkbMI0gZhyphFWodg7j5ixM/dpunmbf8o47rMYo cephadmin@ceph-deploy.example.local
The key's randomart image is:
+---[RSA 3072]----+
|. o=.. |
|ooo = . |
|.* o + |
|= . o |
| o . . oS |
|. . . .o=o |
| + .o o+. |
|o.=..Oo o. |
|Eo.+XB==oooo. |
+----[SHA256]-----+
cephadmin@ceph-deploy:/root$

cephadmin@ceph-deploy:~$ vi ssh-key-push.sh

cephadmin@ceph-deploy:~$ cat ssh-key-push.sh

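#!/bin/bash
# Push the key to the nine node addresses from the table in 2.1
# ({100..123} would also try 15 unused addresses)
for i in 100 101 102 103 111 112 121 122 123; do
    sshpass -p '123456' ssh-copy-id -o StrictHostKeyChecking=no -i /home/cephadmin/.ssh/id_rsa -p 22 cephadmin@172.16.88.$i
done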
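After the key push, it is worth confirming that every node accepts the key and grants passwordless sudo, since ceph-deploy relies on both (its log in 3.2 shows it running `ssh -CT -o BatchMode=yes <host> true` and then detecting the need for sudo). This is a dry-run sketch; `run` and `check_access` are hypothetical helpers, and with the default `DRY_RUN=1` the commands are only printed.

```shell
# Public IPs of the nine nodes from the table in 2.1
HOSTS="172.16.88.100 172.16.88.101 172.16.88.102 172.16.88.103
172.16.88.111 172.16.88.112 172.16.88.121 172.16.88.122 172.16.88.123"

# run CMD...: print the command when DRY_RUN=1 (the default), execute it otherwise
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# check_access: BatchMode=yes makes ssh fail instead of asking for a password,
# and sudo -n fails instead of prompting, so any leftover prompt shows up as an error
check_access() {
    for ip in $HOSTS; do
        run ssh -o BatchMode=yes -o ConnectTimeout=5 "cephadmin@$ip" sudo -n true \
            || echo "FAILED: $ip" >&2
    done
}
```

`check_access` alone prints the nine commands; `DRY_RUN=0 check_access` actually runs them as cephadmin on the deploy node and reports any host that still prompts.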

3. Deploying the Ceph cluster

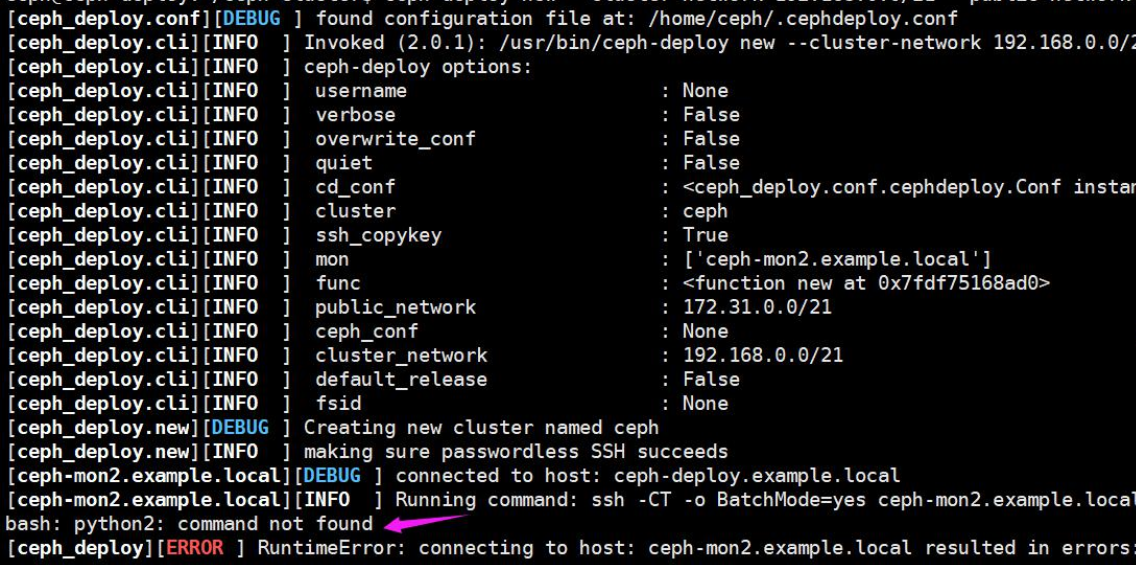

3.1 Initializing the mon node from the admin node

cephadmin@ceph-deploy:~$ mkdir ceph-cluster && cd ceph-cluster

cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt-get install python2.7 -y

cephadmin@ceph-deploy:~/ceph-cluster$ sudo ln -sv /usr/bin/python2.7 /usr/bin/python2

3.2 Creating the ceph-mon1 node

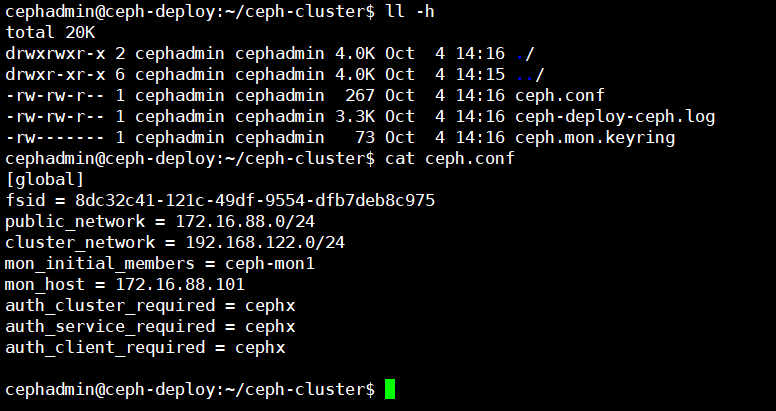

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy new --cluster-network 192.168.122.0/24 --public-network 172.16.88.0/24 ceph-mon1.example.local

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy new --cluster-network 192.168.122.0/24 --public-network 172.16.88.0/24 ceph-mon1.example.local
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] mon : ['ceph-mon1.example.local']
[ceph_deploy.cli][INFO ] ssh_copykey : True
[ceph_deploy.cli][INFO ] fsid : None
[ceph_deploy.cli][INFO ] cluster_network : 192.168.122.0/24
[ceph_deploy.cli][INFO ] public_network : 172.16.88.0/24
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f092bd5d6a0>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function new at 0x7f092bd488b0>
[ceph_deploy.new][DEBUG ] Creating new cluster named ceph
[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds
[ceph-mon1.example.local][DEBUG ] connected to host: ceph-deploy.example.local
[ceph-mon1.example.local][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-mon1.example.local true
[ceph-mon1.example.local][DEBUG ] connection detected need for sudo
[ceph-mon1.example.local][DEBUG ] connected to host: ceph-mon1.example.local
[ceph-mon1.example.local][INFO ] Running command: sudo /bin/ip link show
[ceph-mon1.example.local][INFO ] Running command: sudo /bin/ip addr show
[ceph-mon1.example.local][DEBUG ] IP addresses found: ['172.16.88.101', '192.168.122.101']
[ceph_deploy.new][DEBUG ] Resolving host ceph-mon1.example.local
[ceph_deploy.new][DEBUG ] Monitor ceph-mon1 at 172.16.88.101
[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-mon1']
[ceph_deploy.new][DEBUG ] Monitor addrs are ['172.16.88.101']
[ceph_deploy.new][DEBUG ] Creating a random mon key...
[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...
[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...
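`ceph-deploy new` leaves ceph.conf and ceph.mon.keyring in ~/ceph-cluster (see the last lines of the log). The generated ceph.conf is expected to carry the fsid and the two networks passed on the command line; the hypothetical helper below reads a value back from an INI-style ceph.conf to check them. It treats spaces and underscores in option names as equivalent, as Ceph does, so `public network` and `public_network` both match.

```shell
# conf_get FILE SECTION KEY: print the value of KEY in [SECTION] of an INI-style file
conf_get() {
    awk -v section="$2" -v key="$3" '
        function norm(s) { gsub(/[ _]+/, "_", s); return s }
        /^[ \t]*\[/ {
            cur = $0
            sub(/^[ \t]*\[[ \t]*/, "", cur)
            sub(/[ \t]*][ \t]*$/, "", cur)
            next
        }
        cur == section && index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (norm(k) == norm(key)) { print v; exit }
        }
    ' "$1"
}
```

For example, `conf_get ~/ceph-cluster/ceph.conf global public_network` should print 172.16.88.0/24 if the flags were written as expected.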

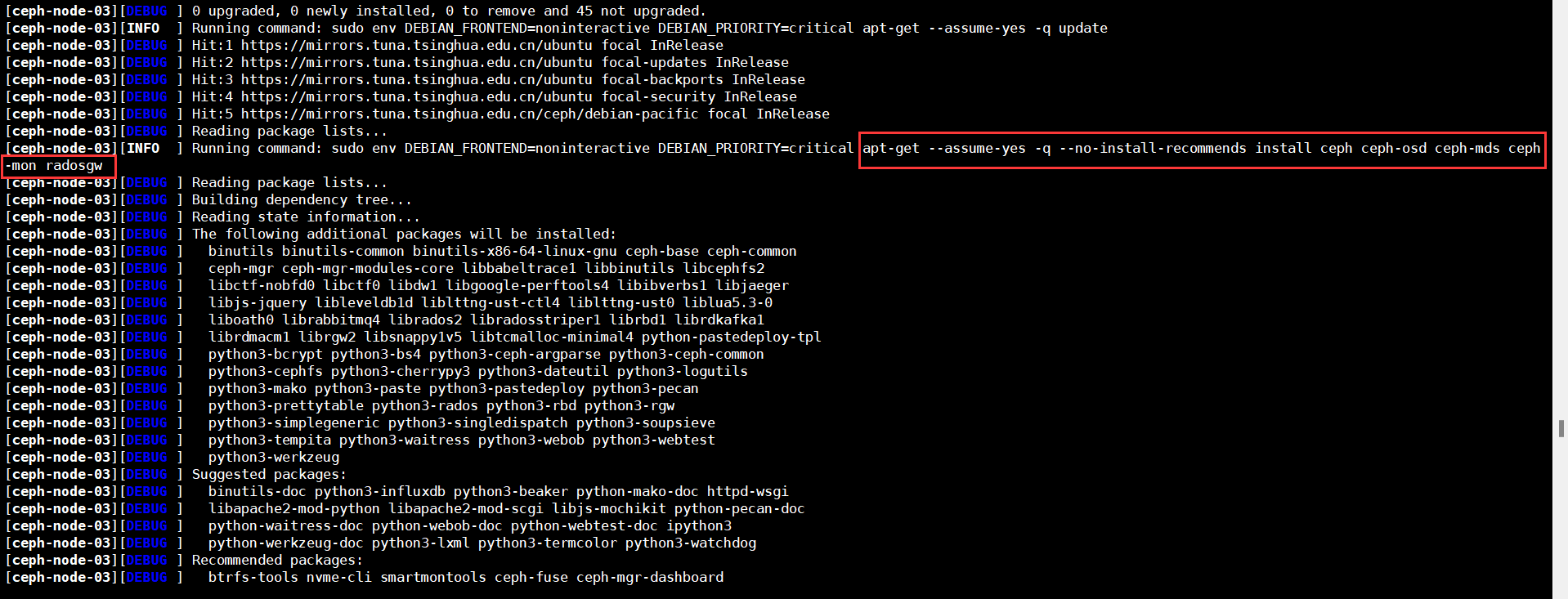

3.3 Initializing the ceph-node nodes

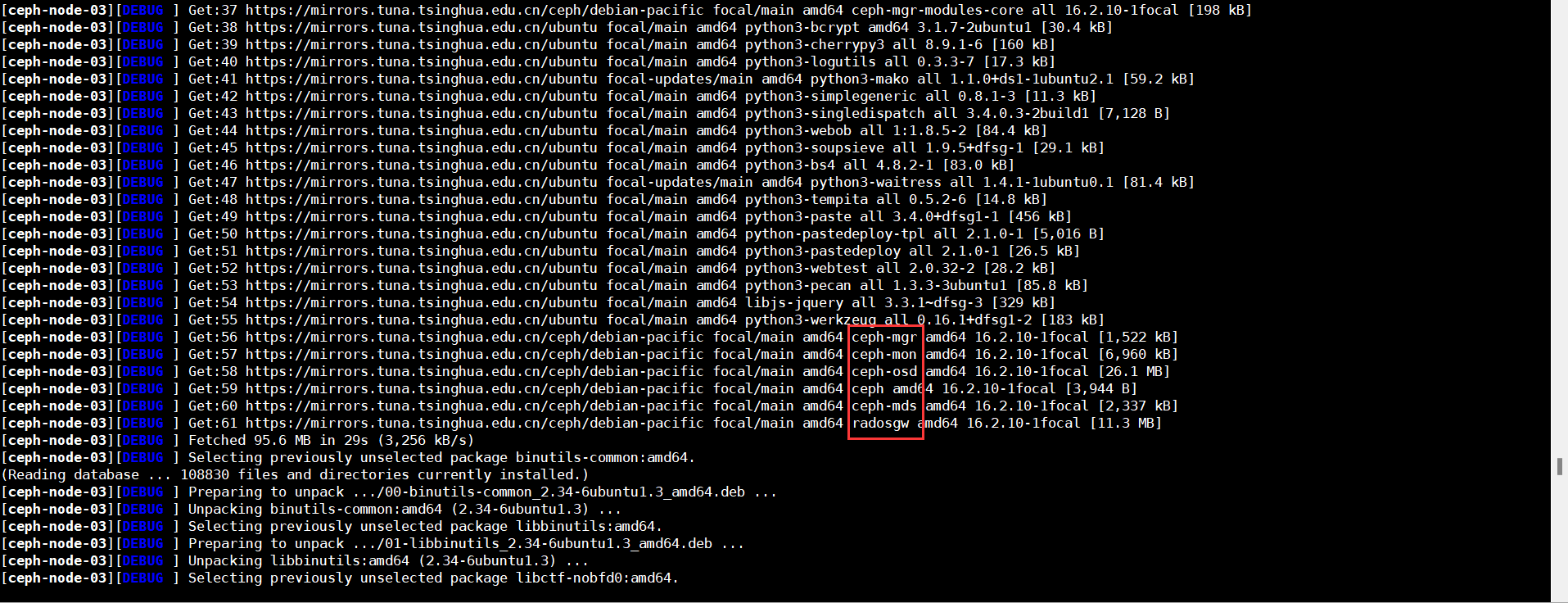

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node1 ceph-node2 ceph-node3

This step installs ceph-base, ceph-common, and related packages on the specified ceph-node hosts, serially, one server at a time.

3.4 Installing the ceph-mon nodes

[root@ceph-mon1 ~]# apt-cache madison ceph-mon
  ceph-mon | 16.2.10-1focal | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal/main amd64 Packages
  ceph-mon | 15.2.16-0ubuntu0.20.04.1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-updates/main amd64 Packages
  ceph-mon | 15.2.12-0ubuntu0.20.04.1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-security/main amd64 Packages
  ceph-mon | 15.2.1-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal/main amd64 Packages
[root@ceph-mon1 ~]#

[root@ceph-mon1 ~]# apt install ceph-mon -y

[root@ceph-mon2 ~]# apt install ceph-mon -y

[root@ceph-mon3 ~]# apt install ceph-mon -y
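The madison output shows that the Ubuntu archive also carries 15.2.x (Octopus) builds of ceph-mon. A plain `apt install` already picks 16.2.10-1focal because it is the highest version, but the version can be pinned explicitly so a mirror change cannot silently install another release. `pick_version` is a hypothetical helper that selects the first version served from a repository URL containing a given fragment.

```shell
# pick_version PACKAGE URL_FRAGMENT: read `apt-cache madison` output on stdin and
# print the first version of PACKAGE whose source URL contains URL_FRAGMENT
pick_version() {
    awk -F'|' -v pkg="$1" -v src="$2" '
        {
            name = $1; ver = $2; url = $3
            gsub(/[ \t]/, "", name)
            gsub(/[ \t]/, "", ver)
            if (name == pkg && index(url, src) > 0) { print ver; exit }
        }
    '
}
```

Usage on a mon node might look like `ver=$(apt-cache madison ceph-mon | pick_version ceph-mon debian-pacific) && apt install -y "ceph-mon=$ver"`; apt may then also need matching versions of dependencies such as ceph-base and ceph-common.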

Add ceph-mon to the Ceph cluster

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy mon create-initial
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : create-initial
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f85985253d0>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function mon at 0x7f8598507040>
[ceph_deploy.cli][INFO ] keyrings : None
[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-mon1
[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-mon1 ...
[ceph-mon1][DEBUG ] connection detected need for sudo
[ceph-mon1][DEBUG ] connected to host: ceph-mon1
[ceph_deploy.mon][INFO ] distro info: ubuntu 20.04 focal
[ceph-mon1][DEBUG ] determining if provided host has same hostname in remote
[ceph-mon1][DEBUG ] deploying mon to ceph-mon1
[ceph-mon1][DEBUG ] remote hostname: ceph-mon1
[ceph-mon1][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-mon1/done
[ceph-mon1][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-mon1/done
[ceph-mon1][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-mon1.mon.keyring
[ceph-mon1][INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph-mon1 --keyring /var/lib/ceph/tmp/ceph-ceph-mon1.mon.keyring --setuser 64045 --setgroup 64045
[ceph-mon1][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-mon1.mon.keyring
[ceph-mon1][INFO ] Running command: sudo systemctl enable ceph.target
[ceph-mon1][INFO ] Running command: sudo systemctl enable ceph-mon@ceph-mon1
[ceph-mon1][WARNIN] Created symlink /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph-mon1.service → /lib/systemd/system/ceph-mon@.service.
[ceph-mon1][INFO ] Running command: sudo systemctl start ceph-mon@ceph-mon1
[ceph-mon1][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon1.asok mon_status
[ceph-mon1][DEBUG ] ********************************************************************************
[ceph-mon1][DEBUG ] status for monitor: mon.ceph-mon1
[ceph-mon1][DEBUG ] {
[ceph-mon1][DEBUG ]   "election_epoch": 3,
[ceph-mon1][DEBUG ]   "extra_probe_peers": [],
[ceph-mon1][DEBUG ]   "feature_map": {
[ceph-mon1][DEBUG ]     "mon": [
[ceph-mon1][DEBUG ]       {
[ceph-mon1][DEBUG ]         "features": "0x3f01cfb9fffdffff",
[ceph-mon1][DEBUG ]         "num": 1,
[ceph-mon1][DEBUG ]         "release": "luminous"
[ceph-mon1][DEBUG ]       }
[ceph-mon1][DEBUG ]     ]
[ceph-mon1][DEBUG ]   },
[ceph-mon1][DEBUG ]   "features": {
[ceph-mon1][DEBUG ]     "quorum_con": "4540138297136906239",
[ceph-mon1][DEBUG ]     "quorum_mon": [
[ceph-mon1][DEBUG ]       "kraken",
[ceph-mon1][DEBUG ]       "luminous",
[ceph-mon1][DEBUG ]       "mimic",
[ceph-mon1][DEBUG ]       "osdmap-prune",
[ceph-mon1][DEBUG ]       "nautilus",
[ceph-mon1][DEBUG ]       "octopus",
[ceph-mon1][DEBUG ]       "pacific",
[ceph-mon1][DEBUG ]       "elector-pinging"
[ceph-mon1][DEBUG ]     ],
[ceph-mon1][DEBUG ]     "required_con": "2449958747317026820",
[ceph-mon1][DEBUG ]     "required_mon": [
[ceph-mon1][DEBUG ]       "kraken",
[ceph-mon1][DEBUG ]       "luminous",
[ceph-mon1][DEBUG ]       "mimic",
[ceph-mon1][DEBUG ]       "osdmap-prune",
[ceph-mon1][DEBUG ]       "nautilus",
[ceph-mon1][DEBUG ]       "octopus",
[ceph-mon1][DEBUG ]       "pacific",
[ceph-mon1][DEBUG ]       "elector-pinging"
[ceph-mon1][DEBUG ]     ]
[ceph-mon1][DEBUG ]   },
[ceph-mon1][DEBUG ]   "monmap": {
[ceph-mon1][DEBUG ]     "created": "2022-10-04T14:24:27.817567Z",
[ceph-mon1][DEBUG ]     "disallowed_leaders: ": "",
[ceph-mon1][DEBUG ]     "election_strategy": 1,
[ceph-mon1][DEBUG ]     "epoch": 1,
[ceph-mon1][DEBUG ]     "features": {
[ceph-mon1][DEBUG ]       "optional": [],
[ceph-mon1][DEBUG ]       "persistent": [
[ceph-mon1][DEBUG ]         "kraken",
[ceph-mon1][DEBUG ]         "luminous",
[ceph-mon1][DEBUG ]         "mimic",
[ceph-mon1][DEBUG ]         "osdmap-prune",
[ceph-mon1][DEBUG ]         "nautilus",
[ceph-mon1][DEBUG ]         "octopus",
[ceph-mon1][DEBUG ]         "pacific",
[ceph-mon1][DEBUG ]         "elector-pinging"
[ceph-mon1][DEBUG ]       ]
[ceph-mon1][DEBUG ]     },
[ceph-mon1][DEBUG ]     "fsid": "8dc32c41-121c-49df-9554-dfb7deb8c975",
[ceph-mon1][DEBUG ]     "min_mon_release": 16,
[ceph-mon1][DEBUG ]     "min_mon_release_name": "pacific",
[ceph-mon1][DEBUG ]     "modified": "2022-10-04T14:24:27.817567Z",
[ceph-mon1][DEBUG ]     "mons": [
[ceph-mon1][DEBUG ]       {
[ceph-mon1][DEBUG ]         "addr": "172.16.88.101:6789/0",
[ceph-mon1][DEBUG ]         "crush_location": "{}",
[ceph-mon1][DEBUG ]         "name": "ceph-mon1",
[ceph-mon1][DEBUG ]         "priority": 0,
[ceph-mon1][DEBUG ]         "public_addr": "172.16.88.101:6789/0",
[ceph-mon1][DEBUG ]         "public_addrs": {
[ceph-mon1][DEBUG ]           "addrvec": [
[ceph-mon1][DEBUG ]             {
[ceph-mon1][DEBUG ]               "addr": "172.16.88.101:3300",
[ceph-mon1][DEBUG ]               "nonce": 0,
[ceph-mon1][DEBUG ]               "type": "v2"
[ceph-mon1][DEBUG ]             },
[ceph-mon1][DEBUG ]             {
[ceph-mon1][DEBUG ]               "addr": "172.16.88.101:6789",
[ceph-mon1][DEBUG ]               "nonce": 0,
[ceph-mon1][DEBUG ]               "type": "v1"
[ceph-mon1][DEBUG ]             }
[ceph-mon1][DEBUG ]           ]
[ceph-mon1][DEBUG ]         },
[ceph-mon1][DEBUG ]         "rank": 0,
[ceph-mon1][DEBUG ]         "weight": 0
[ceph-mon1][DEBUG ]       }
[ceph-mon1][DEBUG ]     ],
[ceph-mon1][DEBUG ]     "stretch_mode": false,
[ceph-mon1][DEBUG ]     "tiebreaker_mon": ""
[ceph-mon1][DEBUG ]   },
[ceph-mon1][DEBUG ]   "name": "ceph-mon1",
[ceph-mon1][DEBUG ]   "outside_quorum": [],
[ceph-mon1][DEBUG ]   "quorum": [
[ceph-mon1][DEBUG ]     0
[ceph-mon1][DEBUG ]   ],
[ceph-mon1][DEBUG ]   "quorum_age": 2,
[ceph-mon1][DEBUG ]   "rank": 0,
[ceph-mon1][DEBUG ]   "state": "leader",
[ceph-mon1][DEBUG ]   "stretch_mode": false,
[ceph-mon1][DEBUG ]   "sync_provider": []
[ceph-mon1][DEBUG ] }
[ceph-mon1][DEBUG ] ********************************************************************************
[ceph-mon1][INFO ] monitor: mon.ceph-mon1 is running
[ceph-mon1][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon1.asok mon_status
[ceph_deploy.mon][INFO ] processing monitor mon.ceph-mon1
[ceph-mon1][DEBUG ] connection detected need for sudo
[ceph-mon1][DEBUG ] connected to host: ceph-mon1
[ceph-mon1][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon1.asok mon_status
[ceph_deploy.mon][INFO ] mon.ceph-mon1 monitor has reached quorum!
[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum
[ceph_deploy.mon][INFO ] Running gatherkeys...
[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmpzsxa8eq8
[ceph-mon1][DEBUG ] connection detected need for sudo
[ceph-mon1][DEBUG ] connected to host: ceph-mon1
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph-mon1.asok mon_status
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon1/keyring auth get client.admin
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon1/keyring auth get client.bootstrap-mds
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon1/keyring auth get client.bootstrap-mgr
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon1/keyring auth get client.bootstrap-osd
[ceph-mon1][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon1/keyring auth get client.bootstrap-rgw
[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring
[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring
[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring
[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists
[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring
[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring
[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpzsxa8eq8

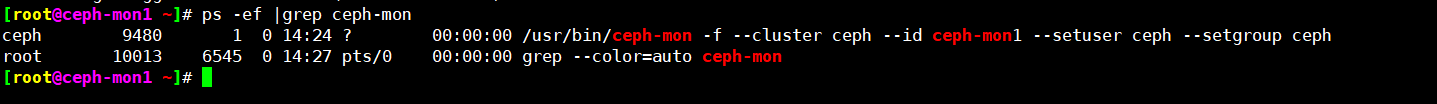

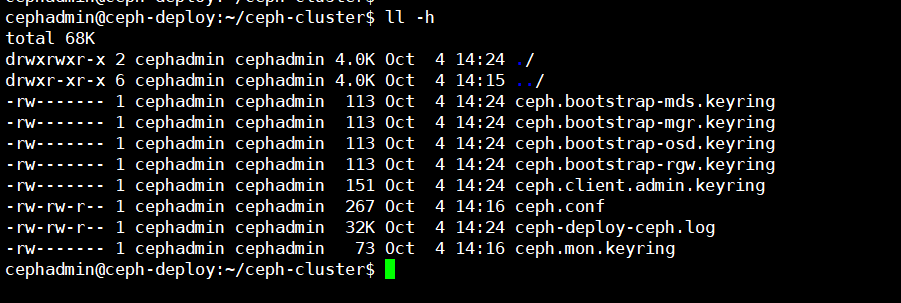

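Verify ceph-mon

Verify that the ceph-mon service was automatically installed and started on the designated mon node. The initialization directory on the ceph-deploy node now also contains keyring files for client.admin and for the bootstrap-mds, bootstrap-mgr, bootstrap-osd, and bootstrap-rgw services. These files grant the highest level of access to the Ceph cluster, so keep them secure.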
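The keyrings gathered by ceph-deploy (ceph.client.admin.keyring, ceph.bootstrap-*.keyring, and ceph.mon.keyring, per the gatherkeys log) can be locked down to owner-only access. This sketch assumes the files are owned by cephadmin, which created them by running ceph-deploy, so ceph-deploy can still read them afterwards; `lock_keyrings` is a hypothetical helper.

```shell
# lock_keyrings DIR: set every *.keyring file in DIR to mode 0600 and list it
lock_keyrings() {
    for f in "$1"/*.keyring; do
        [ -e "$f" ] || continue   # glob matched nothing and stayed literal
        chmod 600 "$f"
        echo "$f"
    done
}
```

Run it as `lock_keyrings ~/ceph-cluster`; ceph.conf is left untouched because it holds no secrets.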

3.5 Distributing the admin key to the node hosts

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-node1 ceph-node2 ceph-node3

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy admin ceph-node1 ceph-node2 ceph-node3
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph-node1', 'ceph-node2', 'ceph-node3']
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7faf34cbd070>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function admin at 0x7faf352a7310>
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node1
[ceph-node1][DEBUG ] connection detected need for sudo
[ceph-node1][DEBUG ] connected to host: ceph-node1
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node2
[ceph-node2][DEBUG ] connection detected need for sudo
[ceph-node2][DEBUG ] connected to host: ceph-node2
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node3
[ceph-node3][DEBUG ] connection detected need for sudo
[ceph-node3][DEBUG ] connected to host: ceph-node3

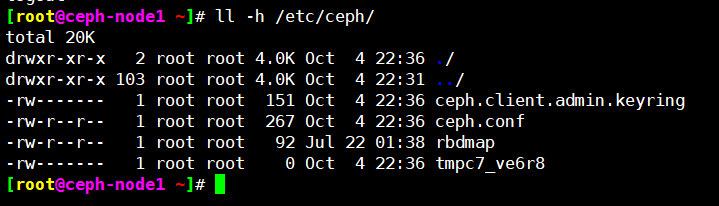

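Verify the keys on the node hosts

For security, the keyring file is owned by the root user and root group by default. If a non-root user (here cephadmin) should also be able to run ceph commands, that user must be granted access: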

[root@ceph-node1 ~]# apt-get install acl -y

[root@ceph-node1 ~]# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

[root@ceph-node2 ~]# apt-get install acl -y

[root@ceph-node2 ~]# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

[root@ceph-node3 ~]# apt-get install acl -y

[root@ceph-node3 ~]# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring
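The same two commands were repeated by hand on ceph-node1 through ceph-node3. A dry-run loop over SSH can do it in one pass from the deploy node and print the resulting ACL with getfacl. `grant_keyring_acl` is hypothetical and assumes the passwordless SSH and sudo set up in 2.4 and 2.5; read access alone is normally enough for the ceph CLI, and `rw` simply mirrors the commands above.

```shell
NODES="ceph-node1 ceph-node2 ceph-node3"
KEYRING=/etc/ceph/ceph.client.admin.keyring

# run CMD...: print the command when DRY_RUN=1 (the default), execute it otherwise
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# grant_keyring_acl: install acl, grant cephadmin access to the admin keyring, show the ACL
grant_keyring_acl() {
    for node in $NODES; do
        run ssh "cephadmin@$node" \
            "sudo apt-get install -y acl && sudo setfacl -m u:cephadmin:rw $KEYRING && getfacl $KEYRING"
    done
}
```

`DRY_RUN=0 grant_keyring_acl` runs it for real; each getfacl listing should then contain a `user:cephadmin:rw-` entry.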

3.6 Deploying the ceph-mgr nodes

[root@ceph-mgr1 ~]# apt install ceph-mgr -y

[root@ceph-mgr2 ~]# apt install ceph-mgr -y

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr1

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy mgr create ceph-mgr1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f07e50a4640>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f07e50df700>
[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr1', 'ceph-mgr1')]
[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr1:ceph-mgr1
[ceph-mgr1][DEBUG ] connection detected need for sudo
[ceph-mgr1][DEBUG ] connected to host: ceph-mgr1
[ceph_deploy.mgr][INFO ] Distro info: ubuntu 20.04 focal
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr1
[ceph-mgr1][WARNIN] mgr keyring does not exist yet, creating one
[ceph-mgr1][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr1/keyring
[ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr1
[ceph-mgr1][WARNIN] Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr1.service → /lib/systemd/system/ceph-mgr@.service.
[ceph-mgr1][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr1
[ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph.target

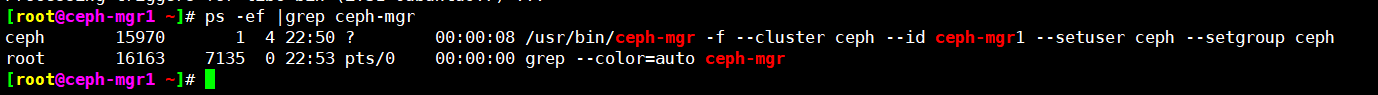

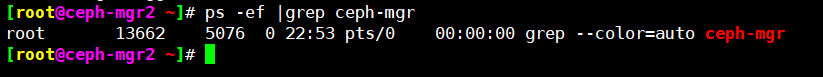

Verify ceph-mgr

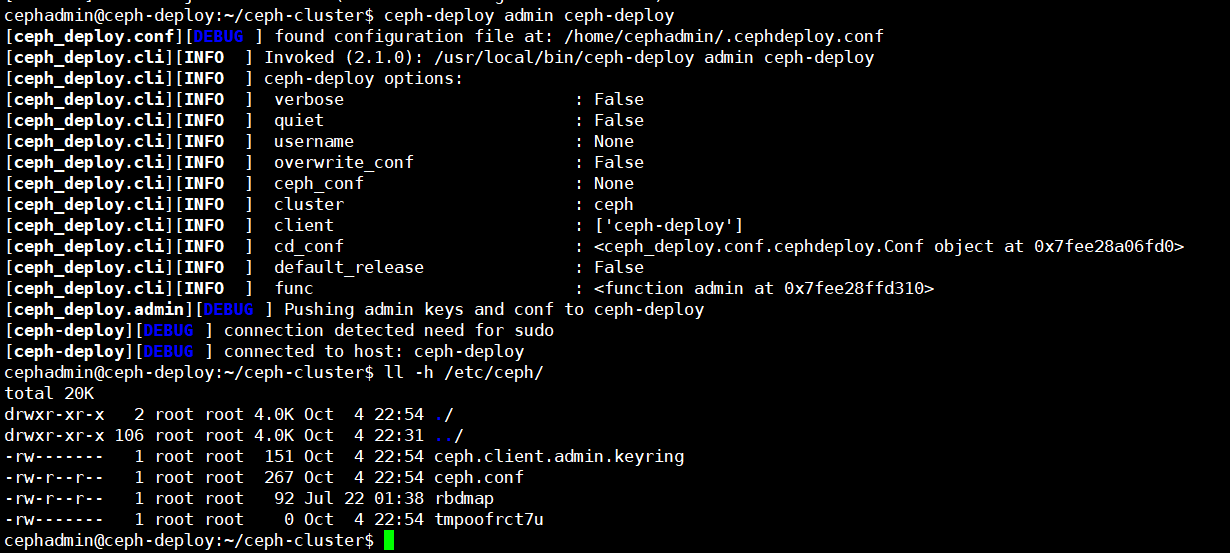

3.7 Configuring ceph-deploy to manage the Ceph cluster

cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt install acl -y

cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt install ceph-common

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-deploy # push a copy of the admin key to this node itself

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy admin ceph-deploy
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph-deploy']
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7fee28a06fd0>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function admin at 0x7fee28ffd310>
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-deploy
[ceph-deploy][DEBUG ] connection detected need for sudo
[ceph-deploy][DEBUG ] connected to host: ceph-deploy

cephadmin@ceph-deploy:~/ceph-cluster$ sudo setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

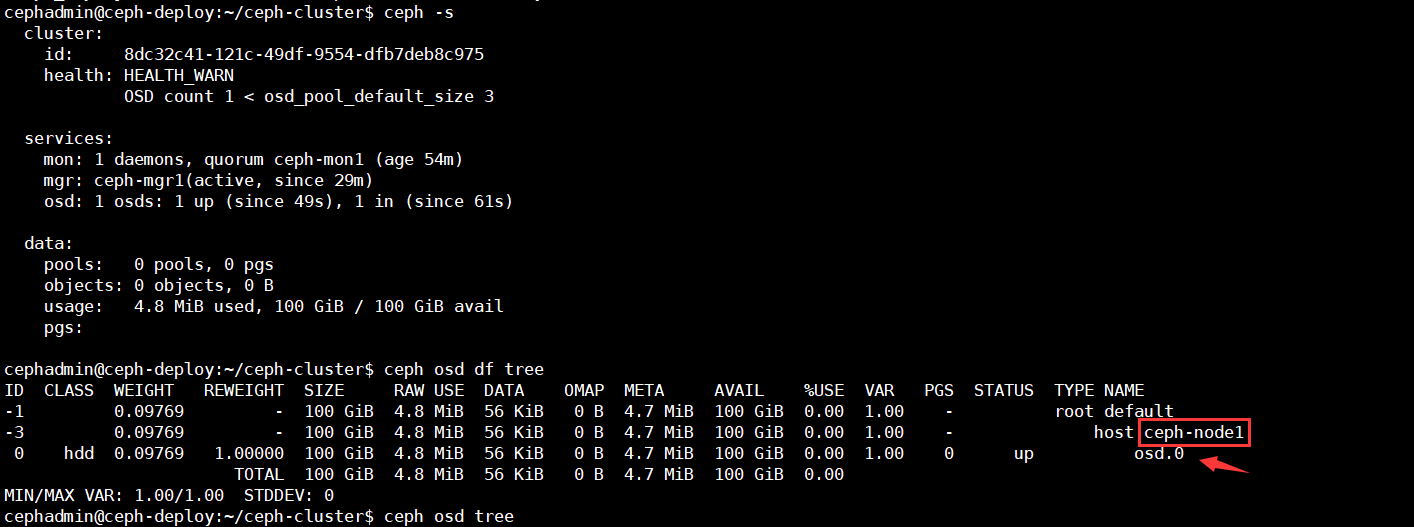

cephadmin@ceph-deploy:~/ceph-cluster$ ceph -s
  cluster:
    id:     8dc32c41-121c-49df-9554-dfb7deb8c975
    health: HEALTH_WARN
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 1 daemons, quorum ceph-mon1 (age 38m)
    mgr: ceph-mgr1(active, since 13m)
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

cephadmin@ceph-deploy:~/ceph-cluster$

Fixing the "mon is allowing insecure global_id reclaim" warning:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph config set mon auth_allow_insecure_global_id_reclaim false

cephadmin@ceph-deploy:~/ceph-cluster$ ceph -s
  cluster:
    id:     8dc32c41-121c-49df-9554-dfb7deb8c975
    health: HEALTH_WARN
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 1 daemons, quorum ceph-mon1 (age 40m)
    mgr: ceph-mgr1(active, since 14m)
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

cephadmin@ceph-deploy:~/ceph-cluster$
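When these checks are scripted, the health status and the active manager can be extracted from the `ceph -s` text shown above. The helpers below are hypothetical and depend on that plain-text layout, which may change between releases; `ceph -s --format json` is the more robust source for automation, and `ceph health detail` explains each warning.

```shell
# health_of: print the health status from `ceph -s` text on stdin
health_of() {
    awk '$1 == "health:" { print $2; exit }'
}

# active_mgr: print the name of the active manager from `ceph -s` text on stdin
active_mgr() {
    sed -n 's/^[[:space:]]*mgr:[[:space:]]*\([^(]*\)(active.*/\1/p' | head -n 1
}
```

For example, `ceph -s | health_of` prints HEALTH_WARN for the output above until the OSDs are added, and `ceph -s | active_mgr` prints ceph-mgr1.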

3.8 Initializing the storage nodes

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node1 ## before wiping the disks, install the basic Ceph runtime on the node from the deploy node

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy install --release pacific ceph-node1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] stable : None
[ceph_deploy.cli][INFO ] release : pacific
[ceph_deploy.cli][INFO ] testing : None
[ceph_deploy.cli][INFO ] dev : master
[ceph_deploy.cli][INFO ] dev_commit : None
[ceph_deploy.cli][INFO ] install_mon : False
[ceph_deploy.cli][INFO ] install_mgr : False
[ceph_deploy.cli][INFO ] install_mds : False
[ceph_deploy.cli][INFO ] install_rgw : False
[ceph_deploy.cli][INFO ] install_osd : False
[ceph_deploy.cli][INFO ] install_tests : False
[ceph_deploy.cli][INFO ] install_common : False
[ceph_deploy.cli][INFO ] install_all : False
[ceph_deploy.cli][INFO ] adjust_repos : True
[ceph_deploy.cli][INFO ] repo : False
[ceph_deploy.cli][INFO ] host : ['ceph-node1']
[ceph_deploy.cli][INFO ] local_mirror : None
[ceph_deploy.cli][INFO ] repo_url : None
[ceph_deploy.cli][INFO ] gpg_url : None
[ceph_deploy.cli][INFO ] nogpgcheck : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7ff822a91190>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] version_kind : stable
[ceph_deploy.cli][INFO ] func : <function install at 0x7ff822aca5e0>
[ceph_deploy.install][DEBUG ] Installing stable version pacific on cluster ceph hosts ceph-node1
[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-node1 ...
[ceph-node1][DEBUG ] connection detected need for sudo
[ceph-node1][DEBUG ] connected to host: ceph-node1
[ceph_deploy.install][INFO ] Distro info: ubuntu 20.04 focal
[ceph-node1][INFO ] installing Ceph on ceph-node1
[ceph-node1][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q update
[ceph-node1][DEBUG ] Hit:1 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal InRelease
[ceph-node1][DEBUG ] Hit:2 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-updates InRelease
[ceph-node1][DEBUG ] Hit:3 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-backports InRelease
[ceph-node1][DEBUG ] Hit:4 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-security InRelease
[ceph-node1][DEBUG ] Hit:5 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal InRelease
[ceph-node1][DEBUG ] Reading package lists...
[ceph-node1][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install ca-certificates apt-transport-https
[ceph-node1][DEBUG ] Reading package lists...
[ceph-node1][DEBUG ] Building dependency tree...
[ceph-node1][DEBUG ] Reading state information...
[ceph-node1][DEBUG ] ca-certificates is already the newest version (20211016~20.04.1).
[ceph-node1][DEBUG ] apt-transport-https is already the newest version (2.0.9).
[ceph-node1][DEBUG ] 0 upgraded, 0 newly installed, 0 to remove and 69 not upgraded.
[ceph-node1][INFO ] Running command: sudo wget -O release.asc https://download.ceph.com/keys/release.asc
[ceph-node1][WARNIN] --2022-10-04 23:08:19-- https://download.ceph.com/keys/release.asc
[ceph-node1][WARNIN] Resolving download.ceph.com (download.ceph.com)... 158.69.68.124
[ceph-node1][WARNIN] Connecting to download.ceph.com (download.ceph.com)|158.69.68.124|:443... connected.
[ceph-node1][WARNIN] HTTP request sent, awaiting response... 200 OK
[ceph-node1][WARNIN] Length: 1645 (1.6K) [application/octet-stream]
[ceph-node1][WARNIN] Saving to: 'release.asc'
[ceph-node1][WARNIN]
[ceph-node1][WARNIN] 0K . 100% 81.3M=0s
[ceph-node1][WARNIN]
[ceph-node1][WARNIN] 2022-10-04 23:08:21 (81.3 MB/s) - 'release.asc' saved [1645/1645]
[ceph-node1][WARNIN]
[ceph-node1][INFO ] Running command: sudo apt-key add release.asc
[ceph-node1][WARNIN] Warning: apt-key output should not be parsed (stdout is not a terminal)
[ceph-node1][DEBUG ] OK
[ceph-node1][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q update
[ceph-node1][DEBUG ] Hit:1 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal InRelease
[ceph-node1][DEBUG ] Hit:2 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-updates InRelease
[ceph-node1][DEBUG ] Hit:3 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-backports InRelease
[ceph-node1][DEBUG ] Hit:4 https://mirrors.tuna.tsinghua.edu.cn/ubuntu focal-security InRelease
[ceph-node1][DEBUG ] Hit:5 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal InRelease
[ceph-node1][DEBUG ] Get:6 https://download.ceph.com/debian-pacific focal InRelease [8571 B]
[ceph-node1][DEBUG ] Get:7 https://download.ceph.com/debian-pacific focal/main amd64 Packages [17.0 kB]
[ceph-node1][DEBUG ] Fetched 25.6 kB in 4s (6622 B/s)
[ceph-node1][DEBUG ] Reading package lists...
[ceph-node1][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install -o Dpkg::Options::=--force-confnew ceph ceph-osd ceph-mds ceph-mon radosgw
[ceph-node1][DEBUG ] Reading package lists...
[ceph-node1][DEBUG ] Building dependency tree...
[ceph-node1][DEBUG ] Reading state information...
[ceph-node1][DEBUG ] ceph is already the newest version (16.2.10-1focal).
[ceph-node1][DEBUG ] ceph-mds is already the newest version (16.2.10-1focal).
[ceph-node1][DEBUG ] ceph-mon is already the newest version (16.2.10-1focal).
[ceph-node1][DEBUG ] ceph-osd is already the newest version (16.2.10-1focal).
[ceph-node1][DEBUG ] radosgw is already the newest version (16.2.10-1focal).
[ceph-node1][DEBUG ] 0 upgraded, 0 newly installed, 0 to remove and 69 not upgraded.
[ceph-node1][INFO ] Running command: sudo ceph --version
[ceph-node1][DEBUG ] ceph version 16.2.10 (45fa1a083152e41a408d15505f594ec5f1b4fe17) pacific (stable)
cephadmin@ceph-deploy:~/ceph-cluster$

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node2

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node3

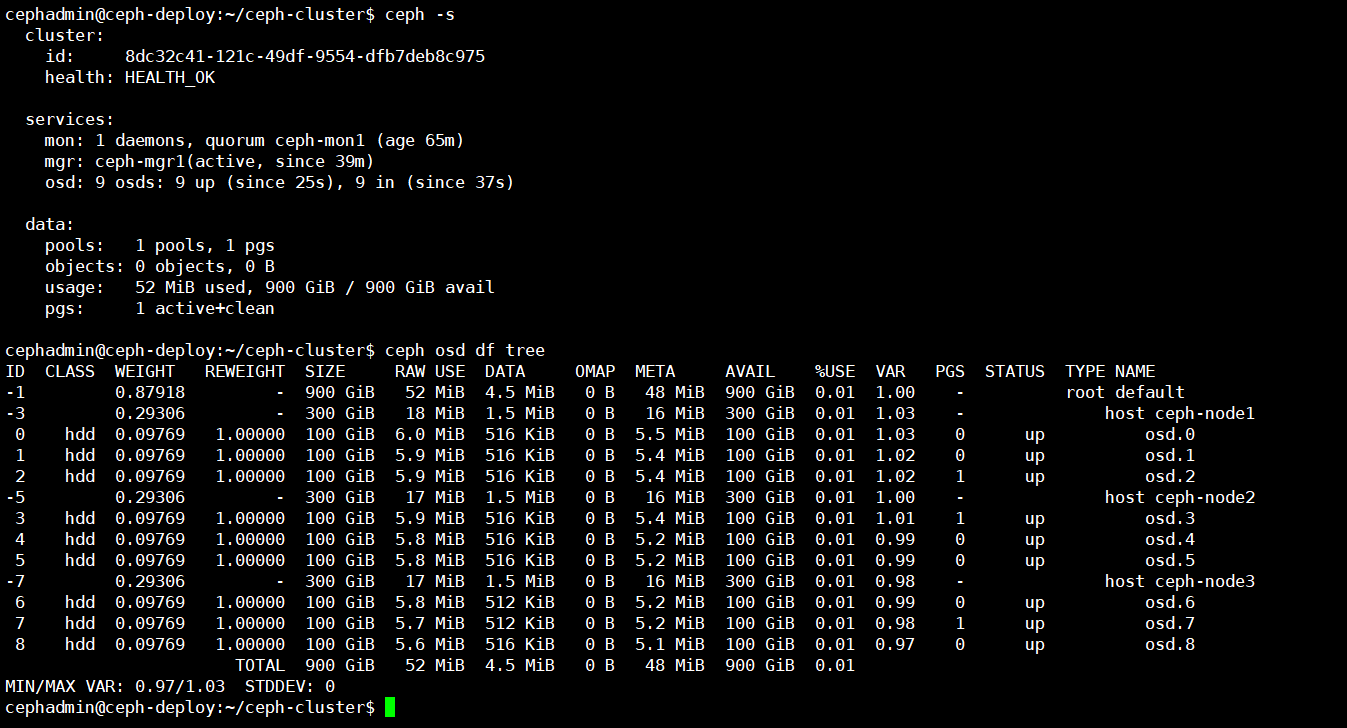

Add OSDs

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy osd create ceph-node1 --data /dev/vdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy osd create ceph-node1 --data /dev/vdb
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f216c3135b0>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function osd at 0x7f216c3413a0>
[ceph_deploy.cli][INFO ] data : /dev/vdb
[ceph_deploy.cli][INFO ] journal : None
[ceph_deploy.cli][INFO ] zap_disk : False
[ceph_deploy.cli][INFO ] fs_type : xfs
[ceph_deploy.cli][INFO ] dmcrypt : False
[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
[ceph_deploy.cli][INFO ] filestore : None
[ceph_deploy.cli][INFO ] bluestore : None
[ceph_deploy.cli][INFO ] block_db : None
[ceph_deploy.cli][INFO ] block_wal : None
[ceph_deploy.cli][INFO ] host : ceph-node1
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdb
[ceph-node1][DEBUG ] connection detected need for sudo
[ceph-node1][DEBUG ] connected to host: ceph-node1
[ceph_deploy.osd][INFO ] Distro info: ubuntu 20.04 focal
[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-node1
[ceph-node1][WARNIN] osd keyring does not exist yet, creating one
[ceph-node1][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdb
[ceph-node1][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
[ceph-node1][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 1664c48e-0fdc-4166-b2b4-a4bbed39e758
[ceph-node1][WARNIN] Running command: vgcreate --force --yes ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6 /dev/vdb
[ceph-node1][WARNIN] stdout: Physical volume "/dev/vdb" successfully created.
[ceph-node1][WARNIN] stdout: Volume group "ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6" successfully created
[ceph-node1][WARNIN] Running command: lvcreate --yes -l 25599 -n osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758 ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6
[ceph-node1][WARNIN] stdout: Logical volume "osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758" created.
[ceph-node1][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key
[ceph-node1][WARNIN] Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
[ceph-node1][WARNIN] --> Executable selinuxenabled not in PATH: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin
[ceph-node1][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6/osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-1
[ceph-node1][WARNIN] Running command: /usr/bin/ln -s /dev/ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6/osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758 /var/lib/ceph/osd/ceph-0/block
[ceph-node1][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
[ceph-node1][WARNIN] stderr: 2022-10-04T23:18:25.099+0800 7f2d87b9d700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
[ceph-node1][WARNIN] 2022-10-04T23:18:25.099+0800 7f2d87b9d700 -1 AuthRegistry(0x7f2d8005b868) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx
[ceph-node1][WARNIN] stderr: got monmap epoch 1
[ceph-node1][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQC/TjxjKuoSDhAAtMJyExZqbJWoTuTpU1dtYw==
[ceph-node1][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring
[ceph-node1][WARNIN] added entity osd.0 auth(key=AQC/TjxjKuoSDhAAtMJyExZqbJWoTuTpU1dtYw==)
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
[ceph-node1][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 1664c48e-0fdc-4166-b2b4-a4bbed39e758 --setuser ceph --setgroup ceph
[ceph-node1][WARNIN] stderr: 2022-10-04T23:18:25.947+0800 7fb2821d7080 -1 bluestore(/var/lib/ceph/osd/ceph-0/) _read_fsid unparsable uuid
[ceph-node1][WARNIN] --> ceph-volume lvm prepare successful for: /dev/vdb
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[ceph-node1][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6/osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758 --path /var/lib/ceph/osd/ceph-0 --no-mon-config
[ceph-node1][WARNIN] Running command: /usr/bin/ln -snf /dev/ceph-d6691ee3-0f71-416b-bc97-188c3718b2c6/osd-block-1664c48e-0fdc-4166-b2b4-a4bbed39e758 /var/lib/ceph/osd/ceph-0/block
[ceph-node1][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-1
[ceph-node1][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[ceph-node1][WARNIN] Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-1664c48e-0fdc-4166-b2b4-a4bbed39e758
[ceph-node1][WARNIN] stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-1664c48e-0fdc-4166-b2b4-a4bbed39e758.service → /lib/systemd/system/ceph-volume@.service.
[ceph-node1][WARNIN] Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
[ceph-node1][WARNIN] stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /lib/systemd/system/ceph-osd@.service.
[ceph-node1][WARNIN] Running command: /usr/bin/systemctl start ceph-osd@0
[ceph-node1][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0
[ceph-node1][WARNIN] --> ceph-volume lvm create successful for: /dev/vdb
[ceph-node1][INFO ] checking OSD status...
[ceph-node1][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json
[ceph_deploy.osd][DEBUG ] Host ceph-node1 is now ready for osd use.
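As the last lines of the log show, ceph-deploy finishes by running ceph osd stat --format=json. A small helper along these lines can confirm, after each osd create or once all disks are added, that the expected number of OSDs exist and are all up. It is only a sketch: it assumes the JSON contains integer num_osds and num_up_osds fields (check this against your own output), and osd_field and check_osds_up are names made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: check that the cluster has the expected number of OSDs, all up.
# Assumption: `ceph osd stat --format=json` prints a JSON object with integer
# fields "num_osds" and "num_up_osds" (verify against your own output).
# Usage (on a node holding the admin keyring):
#   ceph osd stat --format=json | check_osds_up 9

# Print the integer value of JSON key $1 read from stdin (empty if absent).
osd_field() {
  tr -d '\n' | sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p"
}

# Succeed only if there are exactly $1 OSDs and every one of them is up.
check_osds_up() {
  local expected=$1 json total up
  json=$(cat)
  total=$(printf '%s' "$json" | osd_field num_osds)
  up=$(printf '%s' "$json" | osd_field num_up_osds)
  echo "OSDs: total=${total:-?} up=${up:-?} expected=$expected"
  [ -n "$total" ] && [ "$total" -eq "$expected" ] && [ "$up" = "$total" ]
}
```

Right after the first OSD the expected count is 1; with all three disks on all three nodes it should be 9.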

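Continue adding OSDs for the remaining disks and nodes. The full list for this lab (data disks /dev/vdb, /dev/vdc and /dev/vdd on each of ceph-node1 to ceph-node3) is below; the first command was already run above, so it can be skipped: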

ceph-deploy osd create ceph-node1 --data /dev/vdb
ceph-deploy osd create ceph-node1 --data /dev/vdc
ceph-deploy osd create ceph-node1 --data /dev/vdd
ceph-deploy osd create ceph-node2 --data /dev/vdb
ceph-deploy osd create ceph-node2 --data /dev/vdc
ceph-deploy osd create ceph-node2 --data /dev/vdd
ceph-deploy osd create ceph-node3 --data /dev/vdb
ceph-deploy osd create ceph-node3 --data /dev/vdc
ceph-deploy osd create ceph-node3 --data /dev/vdd
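Typing nine near-identical commands is error-prone, so the same list can be generated with a loop. This is a minimal sketch that assumes the host and disk names of this lab (ceph-node1 to ceph-node3, each with empty disks /dev/vdb, /dev/vdc, /dev/vdd); create_osds, NODES, DISKS, SKIP and APPLY are hypothetical names. By default it only prints the commands, which makes it easy to review the list before touching any disk.

```shell
#!/usr/bin/env bash
# Sketch: create the remaining OSDs, one `ceph-deploy osd create` per disk.
# Assumptions (from this article's lab, adjust for yours): hosts ceph-node1..3,
# each with empty data disks /dev/vdb /dev/vdc /dev/vdd; run as cephadmin
# from ~/ceph-cluster on the deploy node.
# Default is a dry run that prints the commands; set APPLY=1 to execute them.

NODES="ceph-node1 ceph-node2 ceph-node3"
DISKS="/dev/vdb /dev/vdc /dev/vdd"
# This OSD was already created earlier in the section, so it is skipped.
SKIP="ceph-node1:/dev/vdb"

create_osds() {
  local node disk
  for node in $NODES; do
    for disk in $DISKS; do
      if [ "$node:$disk" = "$SKIP" ]; then
        continue
      fi
      if [ "${APPLY:-0}" = 1 ]; then
        # Stop at the first failure rather than carrying on with later disks.
        ceph-deploy osd create "$node" --data "$disk" || return 1
      else
        echo "ceph-deploy osd create $node --data $disk"
      fi
    done
  done
}

create_osds
```

Once the printed list looks right, save the snippet as a script (any name) and run it again with APPLY=1 set in the environment.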

4. Scale out ceph-mon and ceph-mgr

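4.1 Scale out ceph-mon

Install the ceph-mon package on the new monitor hosts first, then add them from the deploy node: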

[root@ceph-mon2 ~]# apt install ceph-mon

[root@ceph-mon3 ~]# apt install ceph-mon

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mon add ceph-mon2

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy mon add ceph-mon2
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : add
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f23fe8fc850>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function mon at 0x7f23fe8e3040>
[ceph_deploy.cli][INFO ] address : None
[ceph_deploy.cli][INFO ] mon : ['ceph-mon2']
[ceph_deploy.mon][INFO ] ensuring configuration of new mon host: ceph-mon2
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-mon2
[ceph-mon2][DEBUG ] connection detected need for sudo
[ceph-mon2][DEBUG ] connected to host: ceph-mon2
[ceph_deploy.mon][DEBUG ] Adding mon to cluster ceph, host ceph-mon2
[ceph_deploy.mon][DEBUG ] using mon address by resolving host: 172.16.88.102
[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-mon2 ...
[ceph-mon2][DEBUG ] connection detected need for sudo
[ceph-mon2][DEBUG ] connected to host: ceph-mon2
[ceph_deploy.mon][INFO ] distro info: ubuntu 20.04 focal
[ceph-mon2][DEBUG ] determining if provided host has same hostname in remote
[ceph-mon2][DEBUG ] adding mon to ceph-mon2
[ceph-mon2][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-mon2/done
[ceph-mon2][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-mon2/done
[ceph-mon2][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-mon2.mon.keyring
[ceph-mon2][INFO ] Running command: sudo ceph --cluster ceph mon getmap -o /var/lib/ceph/tmp/ceph.ceph-mon2.monmap
[ceph-mon2][WARNIN] got monmap epoch 1
[ceph-mon2][INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph-mon2 --monmap /var/lib/ceph/tmp/ceph.ceph-mon2.monmap --keyring /var/lib/ceph/tmp/ceph-ceph-mon2.mon.keyring --setuser 64045 --setgroup 64045
[ceph-mon2][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-mon2.mon.keyring
[ceph-mon2][INFO ] Running command: sudo systemctl enable ceph.target
[ceph-mon2][INFO ] Running command: sudo systemctl enable ceph-mon@ceph-mon2
[ceph-mon2][WARNIN] Created symlink /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph-mon2.service → /lib/systemd/system/ceph-mon@.service.
[ceph-mon2][INFO ] Running command: sudo systemctl start ceph-mon@ceph-mon2
[ceph-mon2][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon2.asok mon_status
[ceph-mon2][WARNIN] ceph-mon2 is not defined in `mon initial members`
[ceph-mon2][WARNIN] monitor ceph-mon2 does not exist in monmap
[ceph-mon2][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon2.asok mon_status
[ceph-mon2][DEBUG ] ********************************************************************************
[ceph-mon2][DEBUG ] status for monitor: mon.ceph-mon2
[ceph-mon2][DEBUG ] {
[ceph-mon2][DEBUG ]   "election_epoch": 0,
[ceph-mon2][DEBUG ]   "extra_probe_peers": [],
[ceph-mon2][DEBUG ]   "feature_map": {
[ceph-mon2][DEBUG ]     "mon": [
[ceph-mon2][DEBUG ]       {
[ceph-mon2][DEBUG ]         "features": "0x3f01cfb9fffdffff",
[ceph-mon2][DEBUG ]         "num": 1,
[ceph-mon2][DEBUG ]         "release": "luminous"
[ceph-mon2][DEBUG ]       }
[ceph-mon2][DEBUG ]     ]
[ceph-mon2][DEBUG ]   },
[ceph-mon2][DEBUG ]   "features": {
[ceph-mon2][DEBUG ]     "quorum_con": "0",
[ceph-mon2][DEBUG ]     "quorum_mon": [],
[ceph-mon2][DEBUG ]     "required_con": "2449958197560098820",
[ceph-mon2][DEBUG ]     "required_mon": [
[ceph-mon2][DEBUG ]       "kraken",
[ceph-mon2][DEBUG ]       "luminous",
[ceph-mon2][DEBUG ]       "mimic",
[ceph-mon2][DEBUG ]       "osdmap-prune",
[ceph-mon2][DEBUG ]       "nautilus",
[ceph-mon2][DEBUG ]       "octopus",
[ceph-mon2][DEBUG ]       "pacific",
[ceph-mon2][DEBUG ]       "elector-pinging"
[ceph-mon2][DEBUG ]     ]
[ceph-mon2][DEBUG ]   },
[ceph-mon2][DEBUG ]   "monmap": {
[ceph-mon2][DEBUG ]     "created": "2022-10-04T14:24:27.817567Z",
[ceph-mon2][DEBUG ]     "disallowed_leaders: ": "",
[ceph-mon2][DEBUG ]     "election_strategy": 1,
[ceph-mon2][DEBUG ]     "epoch": 1,
[ceph-mon2][DEBUG ]     "features": {
[ceph-mon2][DEBUG ]       "optional": [],
[ceph-mon2][DEBUG ]       "persistent": [
[ceph-mon2][DEBUG ]         "kraken",
[ceph-mon2][DEBUG ]         "luminous",
[ceph-mon2][DEBUG ]         "mimic",
[ceph-mon2][DEBUG ]         "osdmap-prune",
[ceph-mon2][DEBUG ]         "nautilus",
[ceph-mon2][DEBUG ]         "octopus",
[ceph-mon2][DEBUG ]         "pacific",
[ceph-mon2][DEBUG ]         "elector-pinging"
[ceph-mon2][DEBUG ]       ]
[ceph-mon2][DEBUG ]     },
[ceph-mon2][DEBUG ]     "fsid": "8dc32c41-121c-49df-9554-dfb7deb8c975",
[ceph-mon2][DEBUG ]     "min_mon_release": 16,
[ceph-mon2][DEBUG ]     "min_mon_release_name": "pacific",
[ceph-mon2][DEBUG ]     "modified": "2022-10-04T14:24:27.817567Z",
[ceph-mon2][DEBUG ]     "mons": [
[ceph-mon2][DEBUG ]       {
[ceph-mon2][DEBUG ]         "addr": "172.16.88.101:6789/0",
[ceph-mon2][DEBUG ]         "crush_location": "{}",
[ceph-mon2][DEBUG ]         "name": "ceph-mon1",
[ceph-mon2][DEBUG ]         "priority": 0,
[ceph-mon2][DEBUG ]         "public_addr": "172.16.88.101:6789/0",
[ceph-mon2][DEBUG ]         "public_addrs": {
[ceph-mon2][DEBUG ]           "addrvec": [
[ceph-mon2][DEBUG ]             {
[ceph-mon2][DEBUG ]               "addr": "172.16.88.101:3300",
[ceph-mon2][DEBUG ]               "nonce": 0,
[ceph-mon2][DEBUG ]               "type": "v2"
[ceph-mon2][DEBUG ]             },
[ceph-mon2][DEBUG ]             {
[ceph-mon2][DEBUG ]               "addr": "172.16.88.101:6789",
[ceph-mon2][DEBUG ]               "nonce": 0,
[ceph-mon2][DEBUG ]               "type": "v1"
[ceph-mon2][DEBUG ]             }
[ceph-mon2][DEBUG ]           ]
[ceph-mon2][DEBUG ]         },
[ceph-mon2][DEBUG ]         "rank": 0,
[ceph-mon2][DEBUG ]         "weight": 0
[ceph-mon2][DEBUG ]       }
[ceph-mon2][DEBUG ]     ],
[ceph-mon2][DEBUG ]     "stretch_mode": false,
[ceph-mon2][DEBUG ]     "tiebreaker_mon": ""
[ceph-mon2][DEBUG ]   },
[ceph-mon2][DEBUG ]   "name": "ceph-mon2",
[ceph-mon2][DEBUG ]   "outside_quorum": [],
[ceph-mon2][DEBUG ]   "quorum": [],
[ceph-mon2][DEBUG ]   "rank": -1,
[ceph-mon2][DEBUG ]   "state": "probing",
[ceph-mon2][DEBUG ]   "stretch_mode": false,
[ceph-mon2][DEBUG ]   "sync_provider": []
[ceph-mon2][DEBUG ] }
[ceph-mon2][DEBUG ] ********************************************************************************
[ceph-mon2][INFO ] monitor: mon.ceph-mon2 is currently at the state of probing

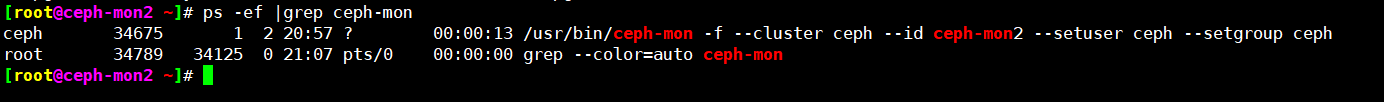

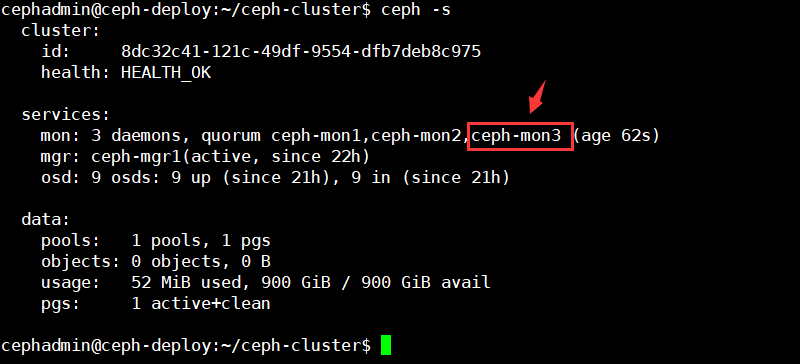

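Verify that ceph-mon2 was added successfully. Right after being added it reports the probing state (last line of the log above); it should then move on and join the quorum after a short while.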
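A monitor that has joined the quorum reports leader or peon instead of probing. The sketch below polls the new monitor's admin socket with the same mon_status call ceph-deploy uses in the log until that happens. It assumes it runs on the monitor host itself with sudo rights and that the socket path follows the /var/run/ceph/ceph-mon.<id>.asok pattern seen above; mon_state, wait_mon_in_quorum and the 5-second interval are arbitrary choices for this example.

```shell
#!/usr/bin/env bash
# Sketch: wait until a newly added monitor leaves "probing" and joins quorum.
# Assumptions: run on the monitor host (e.g. ceph-mon2) with sudo; the admin
# socket is /var/run/ceph/ceph-mon.<id>.asok as in the ceph-deploy log.
# Usage: wait_mon_in_quorum ceph-mon2 120

# Extract the top-level "state" field from mon_status JSON read on stdin.
mon_state() {
  tr -d '\n' | sed -n 's/.*"state"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Poll every 5 s for up to $2 seconds (default 120); leader/peon = in quorum.
wait_mon_in_quorum() {
  local id=$1 timeout=${2:-120} waited=0 state
  while [ "$waited" -lt "$timeout" ]; do
    state=$(sudo ceph --admin-daemon "/var/run/ceph/ceph-mon.$id.asok" mon_status 2>/dev/null | mon_state)
    case "$state" in
      leader|peon)
        echo "mon.$id joined the quorum (state: $state)"
        return 0
        ;;
    esac
    echo "mon.$id state: ${state:-unknown}, waiting..."
    sleep 5
    waited=$((waited + 5))
  done
  echo "mon.$id did not join the quorum within ${timeout}s" >&2
  return 1
}
```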

Next, add ceph-mon3 the same way

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mon add ceph-mon3

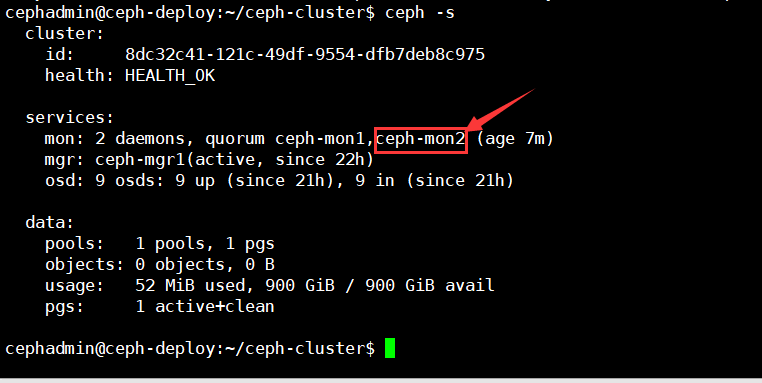

Check the ceph-mon quorum status

cephadmin@ceph-deploy:~/ceph-cluster$ ceph quorum_status --format json-pretty

{
    "election_epoch": 12,
    "quorum": [
        0,
        1,
        2
    ],
    "quorum_names": [
        "ceph-mon1",
        "ceph-mon2",
        "ceph-mon3"
    ],
    "quorum_leader_name": "ceph-mon1",    # current leader node
    "quorum_age": 194,
    "features": {
        "quorum_con": "4540138297136906239",
        "quorum_mon": [
            "kraken",
            "luminous",
            "mimic",
            "osdmap-prune",
            "nautilus",
            "octopus",
            "pacific",
            "elector-pinging"
        ]
    },
    "monmap": {
        "epoch": 3,
        "fsid": "8dc32c41-121c-49df-9554-dfb7deb8c975",
        "modified": "2022-10-05T13:06:49.042131Z",
        "created": "2022-10-04T14:24:27.817567Z",
        "min_mon_release": 16,
        "min_mon_release_name": "pacific",
        "election_strategy": 1,
        "disallowed_leaders: ": "",
        "stretch_mode": false,
        "tiebreaker_mon": "",
        "features": {
            "persistent": [
                "kraken",
                "luminous",
                "mimic",
                "osdmap-prune",
                "nautilus",
                "octopus",
                "pacific",
                "elector-pinging"
            ],
            "optional": []
        },
        "mons": [
            {
                "rank": 0,
                "name": "ceph-mon1",
                "public_addrs": {
                    "addrvec": [
                        {
                            "type": "v2",
                            "addr": "172.16.88.101:3300",
                            "nonce": 0
                        },
                        {
                            "type": "v1",
                            "addr": "172.16.88.101:6789",
                            "nonce": 0
                        }
                    ]
                },
                "addr": "172.16.88.101:6789/0",
                "public_addr": "172.16.88.101:6789/0",
                "priority": 0,
                "weight": 0,
                "crush_location": "{}"
            },
            {
                "rank": 1,
                "name": "ceph-mon2",
                "public_addrs": {
                    "addrvec": [
                        {
                            "type": "v2",
                            "addr": "172.16.88.102:3300",
                            "nonce": 0
                        },
                        {
                            "type": "v1",
                            "addr": "172.16.88.102:6789",
                            "nonce": 0
                        }
                    ]
                },
                "addr": "172.16.88.102:6789/0",
                "public_addr": "172.16.88.102:6789/0",
                "priority": 0,
                "weight": 0,
                "crush_location": "{}"
            },
            {
                "rank": 2,
                "name": "ceph-mon3",
                "public_addrs": {
                    "addrvec": [
                        {
                            "type": "v2",
                            "addr": "172.16.88.103:3300",
                            "nonce": 0
                        },
                        {
                            "type": "v1",
                            "addr": "172.16.88.103:6789",
                            "nonce": 0
                        }
                    ]
                },
                "addr": "172.16.88.103:6789/0",
                "public_addr": "172.16.88.103:6789/0",
                "priority": 0,
                "weight": 0,
                "crush_location": "{}"
            }
        ]
    }
}
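Reading the whole quorum_status dump by eye works for three monitors, but the field that matters is quorum_names. A small check like the following fails if any expected monitor is missing from it. It is a sketch that parses the JSON with sed so it works without jq; it assumes quorum_names holds a flat list of quoted names as in the output above, and check_quorum is a made-up helper name.

```shell
#!/usr/bin/env bash
# Sketch: assert that every expected monitor appears in "quorum_names".
# Assumption: input is the JSON from `ceph quorum_status --format json`
# (json-pretty also works); parsed with sed so jq is not required.
# Usage: ceph quorum_status --format json | check_quorum ceph-mon1 ceph-mon2 ceph-mon3

check_quorum() {
  local json names mon missing=0
  # Join all lines so pretty-printed JSON can be matched by one regex.
  json=$(tr -d '\n')
  # Keep only the text between the brackets of "quorum_names": [ ... ].
  names=$(printf '%s' "$json" | sed -n 's/.*"quorum_names"[[:space:]]*:[[:space:]]*\[\([^]]*\)].*/\1/p')
  for mon in "$@"; do
    # Match the quoted name so ceph-mon1 does not also match ceph-mon10.
    case "$names" in
      *"\"$mon\""*) echo "OK: $mon is in quorum" ;;
      *) echo "MISSING: $mon is not in quorum"; missing=1 ;;
    esac
  done
  return "$missing"
}
```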

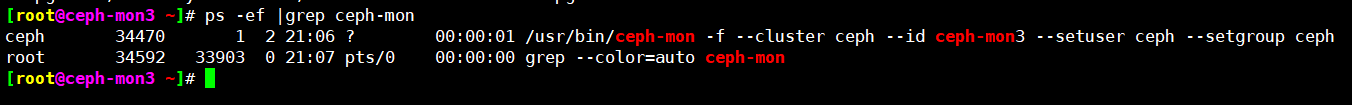

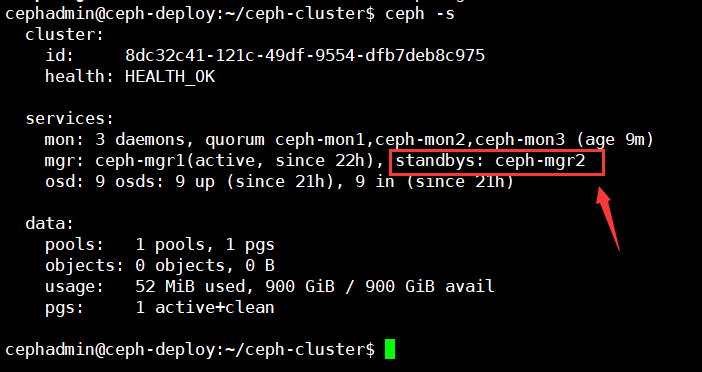

4.2 Scale out ceph-mgr

[root@ceph-mgr2 ~]# apt install ceph-mgr

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr2

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy mgr create ceph-mgr2
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f79b4b986a0>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f79b4bd0700>
[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr2', 'ceph-mgr2')]
[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr2:ceph-mgr2
[ceph-mgr2][DEBUG ] connection detected need for sudo
[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2
[ceph_deploy.mgr][INFO ] Distro info: ubuntu 20.04 focal
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr2
[ceph-mgr2][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr2 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr2/keyring
[ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr2
[ceph-mgr2][WARNIN] Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr2.service → /lib/systemd/system/ceph-mgr@.service.
[ceph-mgr2][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr2
[ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph.target

Push the configuration file and the admin keyring to ceph-mgr2

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-mgr2

[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy admin ceph-mgr2
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph-mgr2']
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7fab8d716130>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function admin at 0x7fab8dd01310>
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-mgr2
[ceph-mgr2][DEBUG ] connection detected need for sudo
[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2

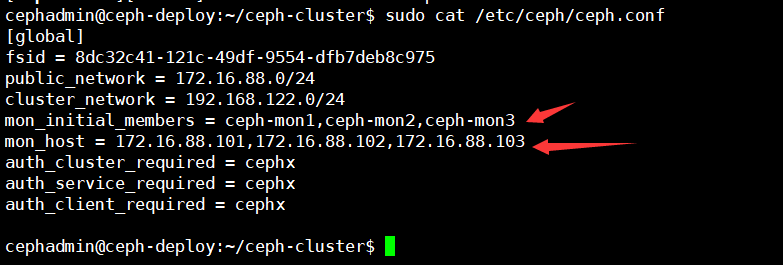

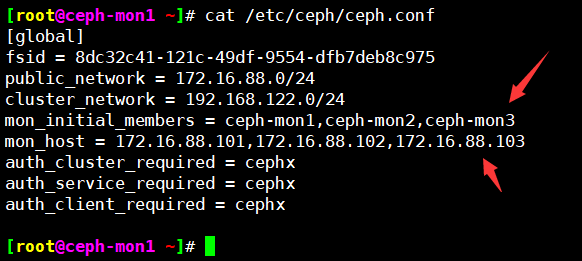
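Ceph runs one active manager at a time; additional managers wait as standbys and take over if the active one fails. To confirm from the command line that ceph-mgr2 came up as a standby, the output of ceph mgr dump --format json can be inspected. The sketch below assumes the active daemon is named in the active_name field and standbys are listed as objects with a name field; it is a rough string match rather than a real JSON parser, and mgr_role is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report whether a given mgr daemon is active, standby or absent.
# Assumption: input is `ceph mgr dump --format json`, where the active mgr is
# named in "active_name" and standbys are objects with a "name" field.
# Usage: ceph mgr dump --format json | mgr_role ceph-mgr2

mgr_role() {
  local name=$1 json
  # Join lines; accept both compact ("k":"v") and pretty ("k": "v") JSON.
  json=$(tr -d '\n')
  case "$json" in
    *"\"active_name\":\"$name\""* | *"\"active_name\": \"$name\""*)
      echo active ;;
    *"\"name\":\"$name\""* | *"\"name\": \"$name\""*)
      echo standby ;;
    *)
      echo absent
      return 1 ;;
  esac
}
```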

4.3 Add the new ceph-mon nodes to ceph.conf

Edit ceph.conf and add the new monitors to mon_initial_members and mon_host:

cephadmin@ceph-deploy:~/ceph-cluster$ vi ceph.conf

cephadmin@ceph-deploy:~/ceph-cluster$ cat ceph.conf

[global]
fsid = 8dc32c41-121c-49df-9554-dfb7deb8c975
public_network = 172.16.88.0/24
cluster_network = 192.168.122.0/24
mon_initial_members = ceph-mon1,ceph-mon2,ceph-mon3
mon_host = 172.16.88.101,172.16.88.102,172.16.88.103
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
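Because mon_initial_members and mon_host are edited by hand here, a quick consistency check before pushing the file can catch a missing name or address. This sketch assumes plain comma-separated values with underscore key names, exactly as in the file above; it does not understand the bracketed [v2:...,v1:...] address syntax or the space-separated key spelling. conf_get, count_items and check_mon_lists are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: after adding monitors, check that mon_initial_members and mon_host
# list the same number of entries (one address per monitor name).
# Assumptions: keys use underscores and values are plain comma-separated
# lists, as in the ceph.conf above.
# Usage: check_mon_lists ~/ceph-cluster/ceph.conf

# Print the value of key $2 from file $1; the first match wins.
conf_get() {
  sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

# Count the non-empty comma-separated items in $1 (prints 0 for none).
count_items() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -c '[^[:space:]]' || true
}

check_mon_lists() {
  local conf=${1:-ceph.conf} names hosts n_names n_hosts
  names=$(conf_get "$conf" mon_initial_members)
  hosts=$(conf_get "$conf" mon_host)
  n_names=$(count_items "$names")
  n_hosts=$(count_items "$hosts")
  if [ "$n_names" -ne "$n_hosts" ] || [ "$n_names" -eq 0 ]; then
    echo "mismatch: mon_initial_members=[$names] mon_host=[$hosts]" >&2
    return 1
  fi
  echo "ok: $n_names monitors in both mon_initial_members and mon_host"
}
```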

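Push the updated file to /etc/ceph/ceph.conf on all nodes (--overwrite-conf is needed because a different ceph.conf already exists there)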

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-deploy ceph-mon{1,2,3} ceph-mgr{1,2} ceph-node{1,2,3}

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.1.0): /usr/local/bin/ceph-deploy --overwrite-conf config push ceph-deploy ceph-mon1 ceph-mon2 ceph-mon3 ceph-mgr1 ceph-mgr2 ceph-node1 ceph-node2 ceph-node3
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] overwrite_conf : True
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] subcommand : push
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf object at 0x7f428a56f400>
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] func : <function config at 0x7f428a5f3790>
[ceph_deploy.cli][INFO ] client : ['ceph-deploy', 'ceph-mon1', 'ceph-mon2', 'ceph-mon3', 'ceph-mgr1', 'ceph-mgr2', 'ceph-node1', 'ceph-node2', 'ceph-node3']
[ceph_deploy.config][DEBUG ] Pushing config to ceph-deploy
[ceph-deploy][DEBUG ] connection detected need for sudo
[ceph-deploy][DEBUG ] connected to host: ceph-deploy
[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon1
[ceph-mon1][DEBUG ] connection detected need for sudo
[ceph-mon1][DEBUG ] connected to host: ceph-mon1
[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon2
[ceph-mon2][DEBUG ] connection detected need for sudo
[ceph-mon2][DEBUG ] connected to host: ceph-mon2
[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon3
[ceph-mon3][DEBUG ] connection detected need for sudo
[ceph-mon3][DEBUG ] connected to host: ceph-mon3
[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr1
[ceph-mgr1][DEBUG ] connection detected need for sudo
[ceph-mgr1][DEBUG ] connected to host: ceph-mgr1
[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr2
[ceph-mgr2][DEBUG ] connection detected need for sudo
[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2
[ceph_deploy.config][DEBUG ] Pushing config to ceph-node1
[ceph-node1][DEBUG ] connection detected need for sudo
[ceph-node1][DEBUG ] connected to host: ceph-node1
[ceph_deploy.config][DEBUG ] Pushing config to ceph-node2
[ceph-node2][DEBUG ] connection detected need for sudo
[ceph-node2][DEBUG ] connected to host: ceph-node2
[ceph_deploy.config][DEBUG ] Pushing config to ceph-node3
[ceph-node3][DEBUG ] connection detected need for sudo
[ceph-node3][DEBUG ] connected to host: ceph-node3
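ceph-deploy only reports that it connected to each host, so it can be worth confirming that the file really is identical everywhere. The sketch below compares md5 checksums of /etc/ceph/ceph.conf over SSH. It assumes the same host list as the push command above and password-less SSH from the deploy node, which ceph-deploy already relies on; HOSTS, collect_sums and all_same are made-up names.

```shell
#!/usr/bin/env bash
# Sketch: confirm /etc/ceph/ceph.conf is identical on every host after
# `ceph-deploy --overwrite-conf config push`.
# Assumptions: host list copied from the push command above; cephadmin can
# SSH to each host without a password.
# Usage: collect_sums | tee /dev/stderr | all_same && echo "ceph.conf is in sync"

HOSTS="ceph-deploy ceph-mon1 ceph-mon2 ceph-mon3 ceph-mgr1 ceph-mgr2 ceph-node1 ceph-node2 ceph-node3"

# Print one "<md5> <host>" line per host, or "unreachable <host>" on failure.
collect_sums() {
  local h sum
  for h in $HOSTS; do
    sum=$(ssh "$h" md5sum /etc/ceph/ceph.conf 2>/dev/null | cut -d' ' -f1)
    echo "${sum:-unreachable} $h"
  done
}

# Read "<sum> <host>" lines on stdin; succeed only if every host answered
# and all checksums are identical.
all_same() {
  local lines distinct
  lines=$(cat)
  if printf '%s\n' "$lines" | grep -q '^unreachable '; then
    return 1
  fi
  distinct=$(printf '%s\n' "$lines" | cut -d' ' -f1 | sort -u | wc -l)
  [ -n "$lines" ] && [ "$distinct" -eq 1 ]
}
```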
