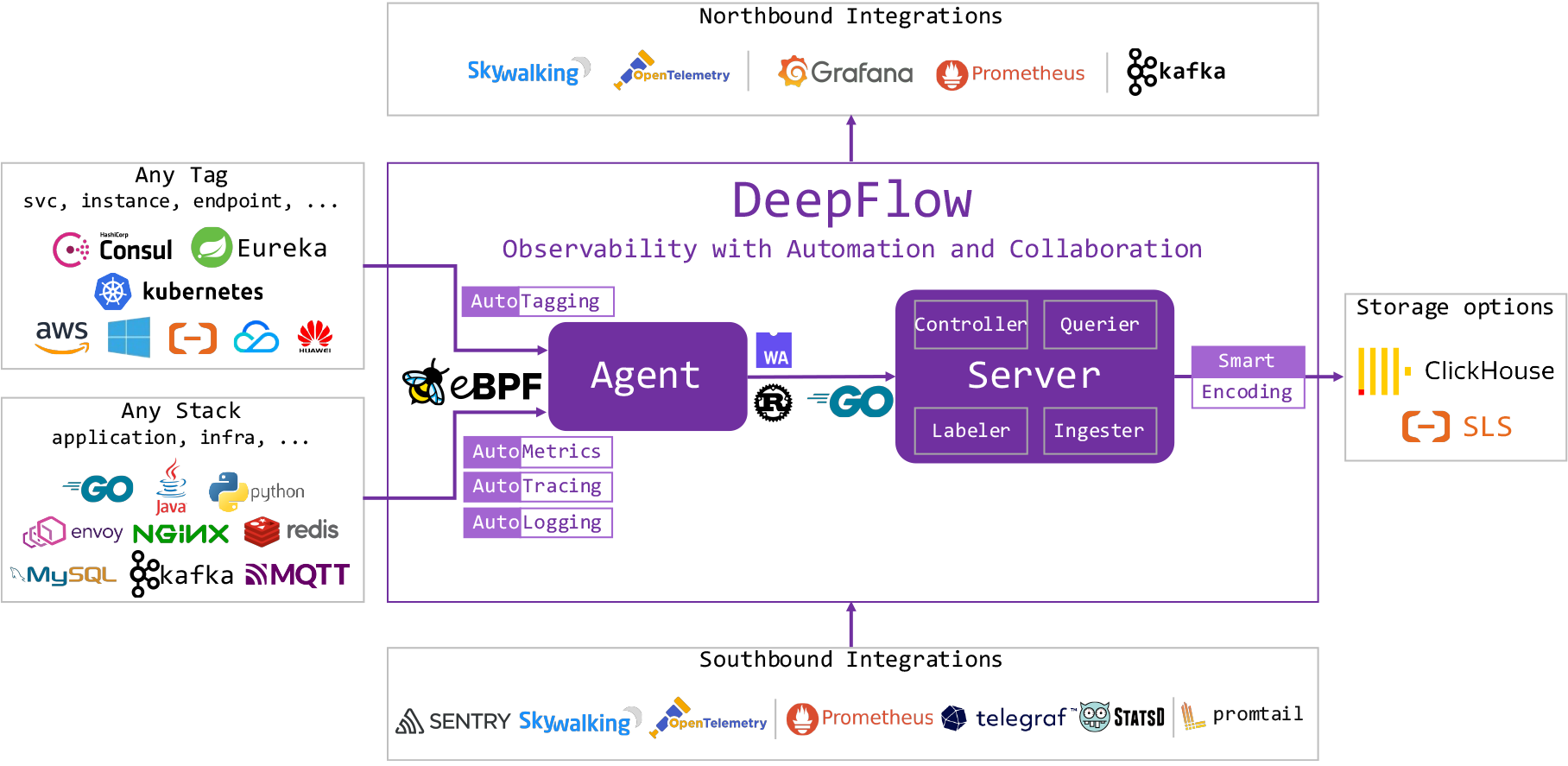

Deploying DeepFlow on a k8s cluster

Related documentation

Official documentation: https://www.deepflowys.com/docs/zh/release-notes/release-6.1.1/

GitHub: https://github.com/deepflowys/deepflow

Official online demo: https://ce-demo.deepflow.yunshan.net/d/Application_K8s_Pod_Map/application-k8s-pod-map?orgId=1

I. Installing with sealos

For a sealos-based deployment, CentOS 7 is recommended. CentOS 8 has not been tested yet, so it may still have pitfalls.

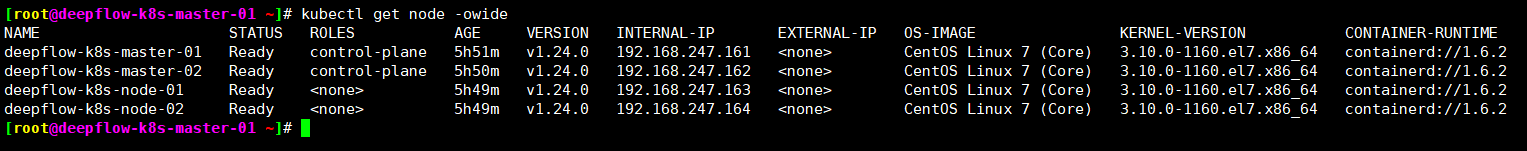

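Machine preparation

| IP | Hostname | vCPU | Memory | Disk |
| --- | --- | --- | --- | --- |
| 192.168.247.161 | deepflow-k8s-master-01 | 2 | 4G | 50G |
| 192.168.247.162 | deepflow-k8s-master-02 | 2 | 4G | 50G |
| 192.168.247.163 | deepflow-k8s-node-01 | 2 | 4G | 50G |
| 192.168.247.164 | deepflow-k8s-node-02 | 2 | 4G | 50G |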

Installation

Download the sealos tool:

    wget https://github.com/labring/sealos/releases/download/v4.0.0/sealos_4.0.0_linux_amd64.tar.gz \
      && tar zxvf sealos_4.0.0_linux_amd64.tar.gz sealos && chmod +x sealos && mv sealos /usr/bin

Deploy the k8s cluster with sealos and install DeepFlow into it in the same run (`-p` is the SSH password of the root user on the listed hosts):

    sealos run \
      labring/kubernetes:v1.24.0 \
      labring/calico:v3.22.1 \
      labring/helm:v3.8.2 \
      labring/openebs:v1.9.0 \
      labring/deepflow:v6.1.1 \
      --masters 192.168.247.161,192.168.247.162 \
      --nodes 192.168.247.163,192.168.247.164 \
      -p 'redhat'

    [root@deepflow-k8s-master-01 ~]# kubectl get pod -A
    NAMESPACE         NAME                                             READY   STATUS    RESTARTS        AGE
    calico-system     calico-kube-controllers-6b44b54755-2fq8r         1/1     Running   8 (5m9s ago)    5h48m
    calico-system     calico-node-6d4mp                                1/1     Running   1 (4h44m ago)   5h48m
    calico-system     calico-node-c9tnd                                1/1     Running   1 (4h44m ago)   5h48m
    calico-system     calico-node-flqz8                                1/1     Running   2               5h48m
    calico-system     calico-node-fr4k4                                1/1     Running   1 (4h44m ago)   5h48m
    calico-system     calico-typha-5bfcb46fb8-lw4zn                    1/1     Running   1 (4h44m ago)   5h48m
    calico-system     calico-typha-5bfcb46fb8-zqrx9                    1/1     Running   1 (4h44m ago)   5h48m
    deepflow          deepflow-agent-8vnxw                             1/1     Running   6 (109m ago)    5h47m
    deepflow          deepflow-agent-qplwz                             1/1     Running   5 (4m29s ago)   5h48m
    deepflow          deepflow-app-7949c986dc-ddz9z                    1/1     Running   1 (4h44m ago)   5h49m
    deepflow          deepflow-clickhouse-0                            1/1     Running   1 (4h44m ago)   5h49m
    deepflow          deepflow-grafana-6dd7c459d5-h4swc                1/1     Running   6 (4h43m ago)   5h49m
    deepflow          deepflow-mysql-6967788cdb-frgd9                  1/1     Running   1 (4h44m ago)   5h49m
    deepflow          deepflow-server-0                                2/2     Running   8 (4h42m ago)   5h49m
    kube-system       coredns-6d4b75cb6d-2w24v                         1/1     Running   1 (4h44m ago)   5h51m
    kube-system       coredns-6d4b75cb6d-5xljp                         1/1     Running   1 (4h44m ago)   5h51m
    kube-system       etcd-deepflow-k8s-master-01                      1/1     Running   2 (6m29s ago)   5h52m
    kube-system       etcd-deepflow-k8s-master-02                      1/1     Running   1 (4h44m ago)   5h50m
    kube-system       kube-apiserver-deepflow-k8s-master-01            1/1     Running   4 (4m48s ago)   5h52m
    kube-system       kube-apiserver-deepflow-k8s-master-02            1/1     Running   1 (4h44m ago)   5h50m
    kube-system       kube-controller-manager-deepflow-k8s-master-01   1/1     Running   2 (4h44m ago)   5h52m
    kube-system       kube-controller-manager-deepflow-k8s-master-02   1/1     Running   1 (4h44m ago)   5h50m
    kube-system       kube-proxy-bv9s8                                 1/1     Running   1 (4h44m ago)   5h50m
    kube-system       kube-proxy-dp8ms                                 1/1     Running   1 (4h44m ago)   5h49m
    kube-system       kube-proxy-dxdnb                                 1/1     Running   1 (4h44m ago)   5h51m
    kube-system       kube-proxy-jj7zs                                 1/1     Running   1 (4h44m ago)   5h49m
    kube-system       kube-scheduler-deepflow-k8s-master-01            1/1     Running   2 (4h44m ago)   5h52m
    kube-system       kube-scheduler-deepflow-k8s-master-02            1/1     Running   1 (4h44m ago)   5h50m
    kube-system       kube-sealyun-lvscare-deepflow-k8s-node-01        1/1     Running   1 (4h44m ago)   5h49m
    kube-system       kube-sealyun-lvscare-deepflow-k8s-node-02        1/1     Running   1 (4h44m ago)   5h49m
    openebs           openebs-localpv-provisioner-7b7b4c7b7d-n92jz     1/1     Running   8 (5m40s ago)   5h49m
    openebs           openebs-ndm-cluster-exporter-54cf95c4f7-xslhc    1/1     Running   2 (4h43m ago)   5h49m
    openebs           openebs-ndm-node-exporter-pfhz4                  1/1     Running   1 (4h44m ago)   5h47m
    openebs           openebs-ndm-node-exporter-qnrd4                  1/1     Running   2 (4h43m ago)   5h48m
    openebs           openebs-ndm-operator-6566d67cf6-krv9f            1/1     Running   2 (4h42m ago)   5h49m
    tigera-operator   tigera-operator-d7957f5cc-ff428                  1/1     Running   5 (8m5s ago)    5h49m
    [root@deepflow-k8s-master-01 ~]#
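With this many pods it is easier to let a filter point out the ones that need attention than to scan the listing by eye. A minimal sketch, assuming the column layout of `kubectl get pod -A` (READY is the third column, STATUS the fourth); the function name `list_unhealthy_pods` is hypothetical and not part of sealos, kubectl or DeepFlow:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pod -A` output on stdin and prints
# namespace, name, READY and STATUS for every pod that is not "Running"
# or whose READY column is not "n/n" (for example 0/1).
list_unhealthy_pods() {
  awk 'NR > 1 {                      # skip the header line
    split($3, r, "/")                # READY column, e.g. "2/2" -> r[1]=2, r[2]=2
    if ($4 != "Running" || r[1] != r[2]) print $1, $2, $3, $4
  }'
}
```

Usage: `kubectl get pod -A | list_unhealthy_pods` prints nothing for a healthy cluster such as the one listed above. Pods of finished Jobs (STATUS `Completed`) would also be reported, so expect them on clusters that run Jobs.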

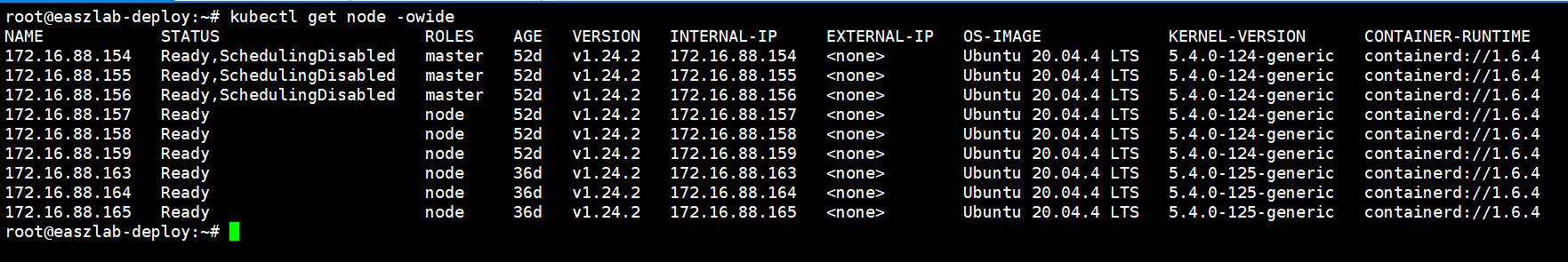

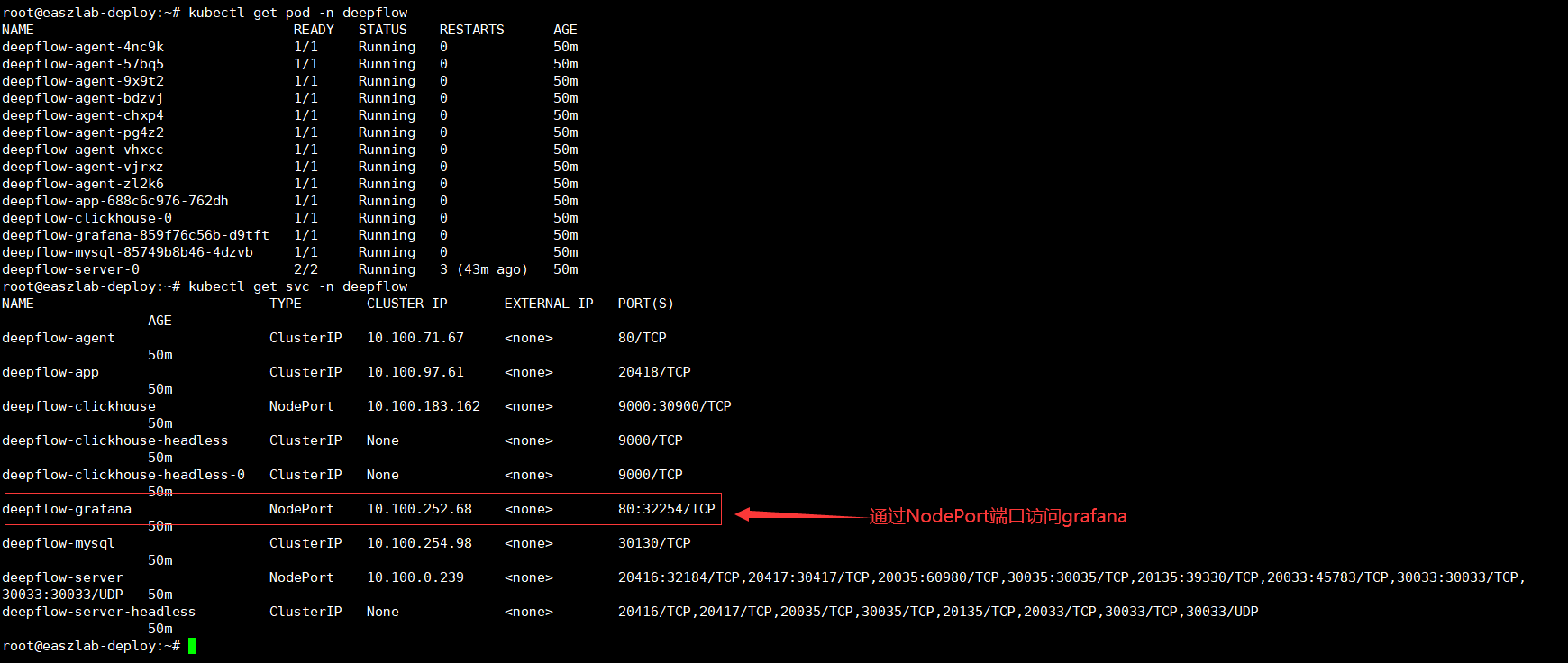

II. Standard installation with helm

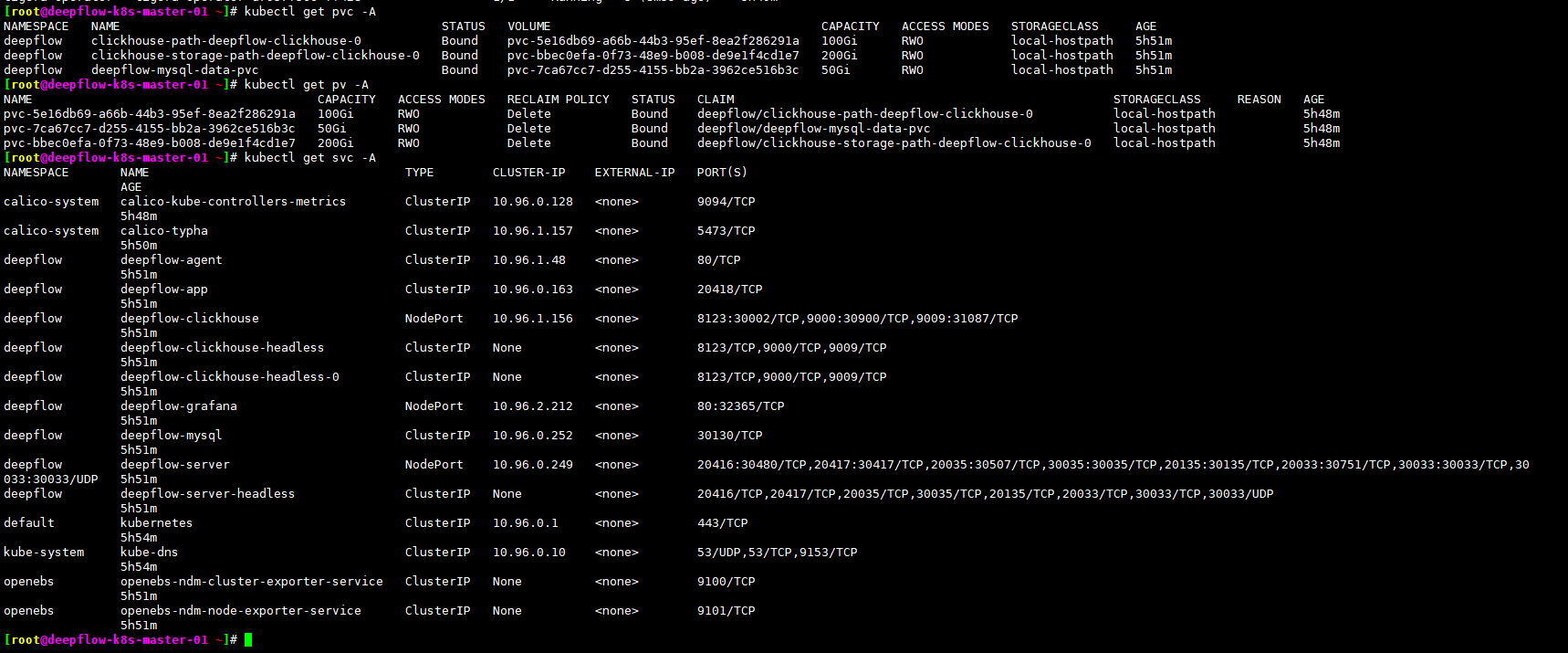

Below, DeepFlow is deployed into the k8s cluster with helm.

Install the helm tool:

    curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
    chmod 700 get_helm.sh
    ./get_helm.sh

    # helm version
    version.BuildInfo{Version:"v3.9.4", GitCommit:"dbc6d8e20fe1d58d50e6ed30f09a04a77e4c68db", GitTreeState:"clean", GoVersion:"go1.17.13"}

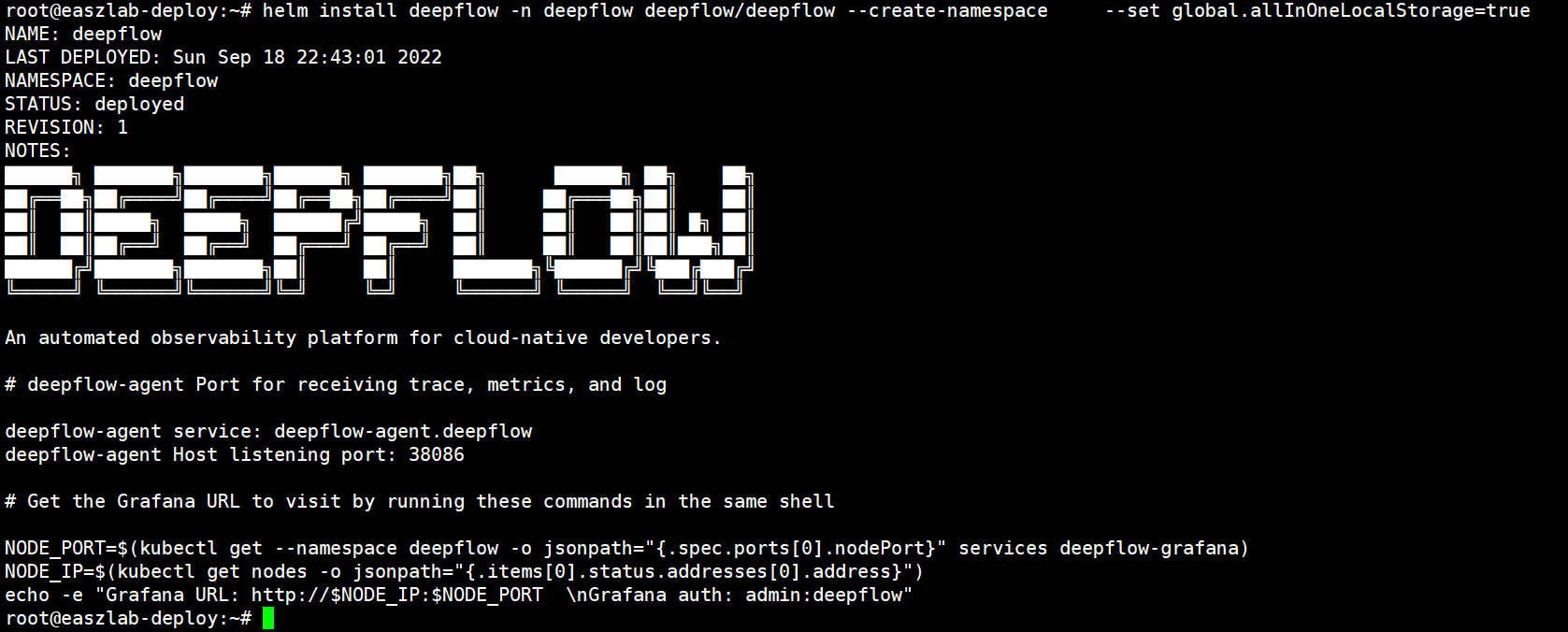

    helm repo add deepflow https://deepflowys.github.io/deepflow
    helm repo update deepflow
    helm install deepflow -n deepflow deepflow/deepflow --create-namespace --set global.allInOneLocalStorage=true

`--set global.allInOneLocalStorage=true`: use this option when the cluster has no storage; the data is then kept in a directory on the host machine.
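The same option can be kept in a values file instead of on the command line, which is easier to reuse across reinstalls. A sketch under the assumption that the file is named `values-local-storage.yaml` (the name is only an example); Helm's `--set global.allInOneLocalStorage=true` corresponds to the nested YAML key shown here:

```shell
# Write the values-file equivalent of `--set global.allInOneLocalStorage=true`.
# The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
cat > values-local-storage.yaml <<'EOF'
global:
  allInOneLocalStorage: true
EOF
```

It is then passed with `-f`: `helm install deepflow -n deepflow deepflow/deepflow --create-namespace -f values-local-storage.yaml`.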

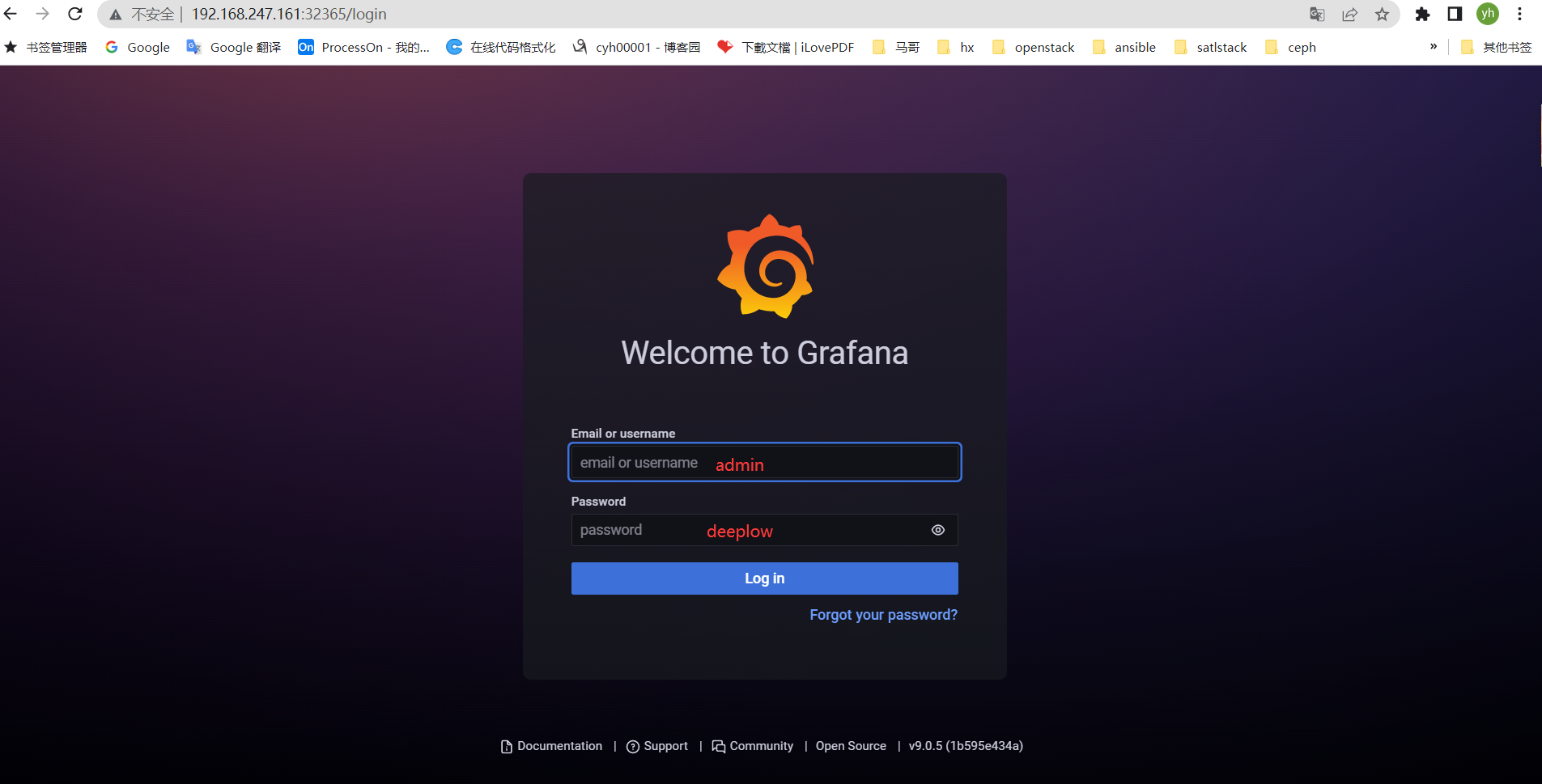

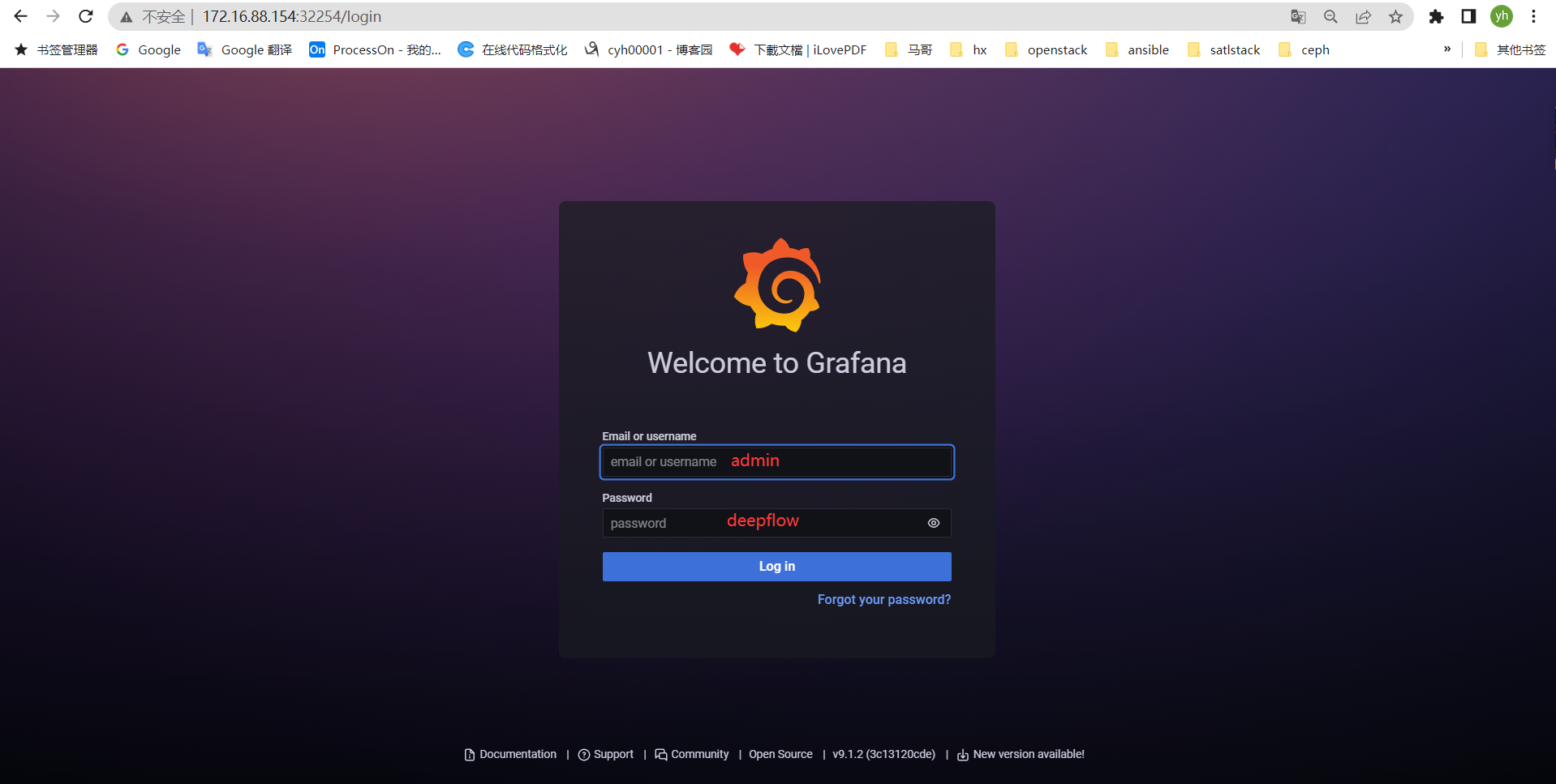

Grafana is reachable at http://172.16.88.154:32254 (username: admin, password: deepflow).

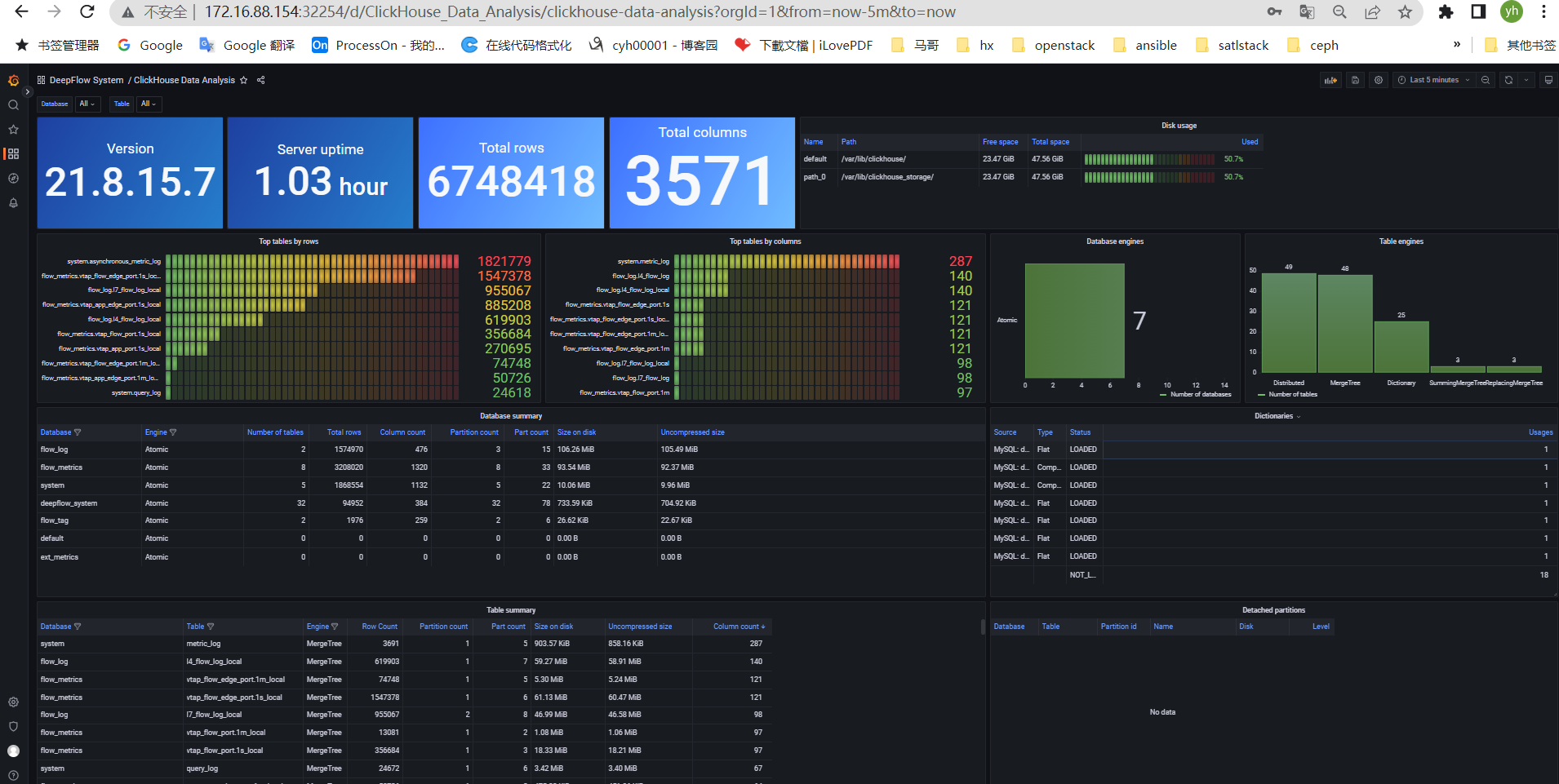

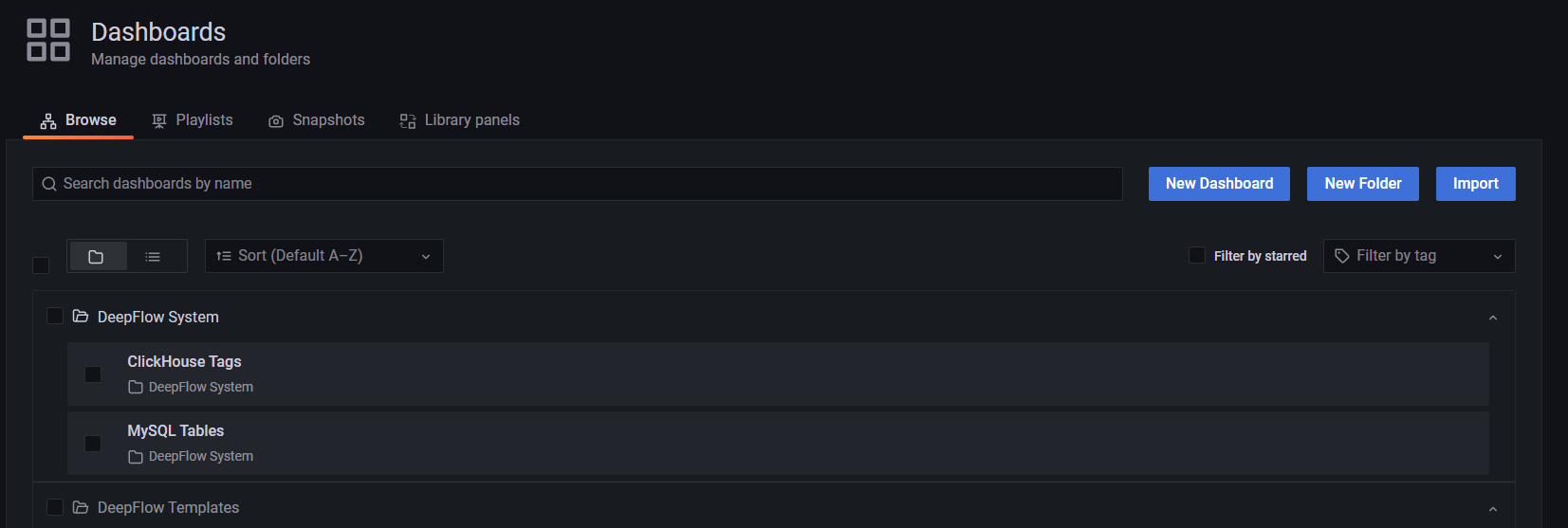

ClickHouse data analysis dashboard

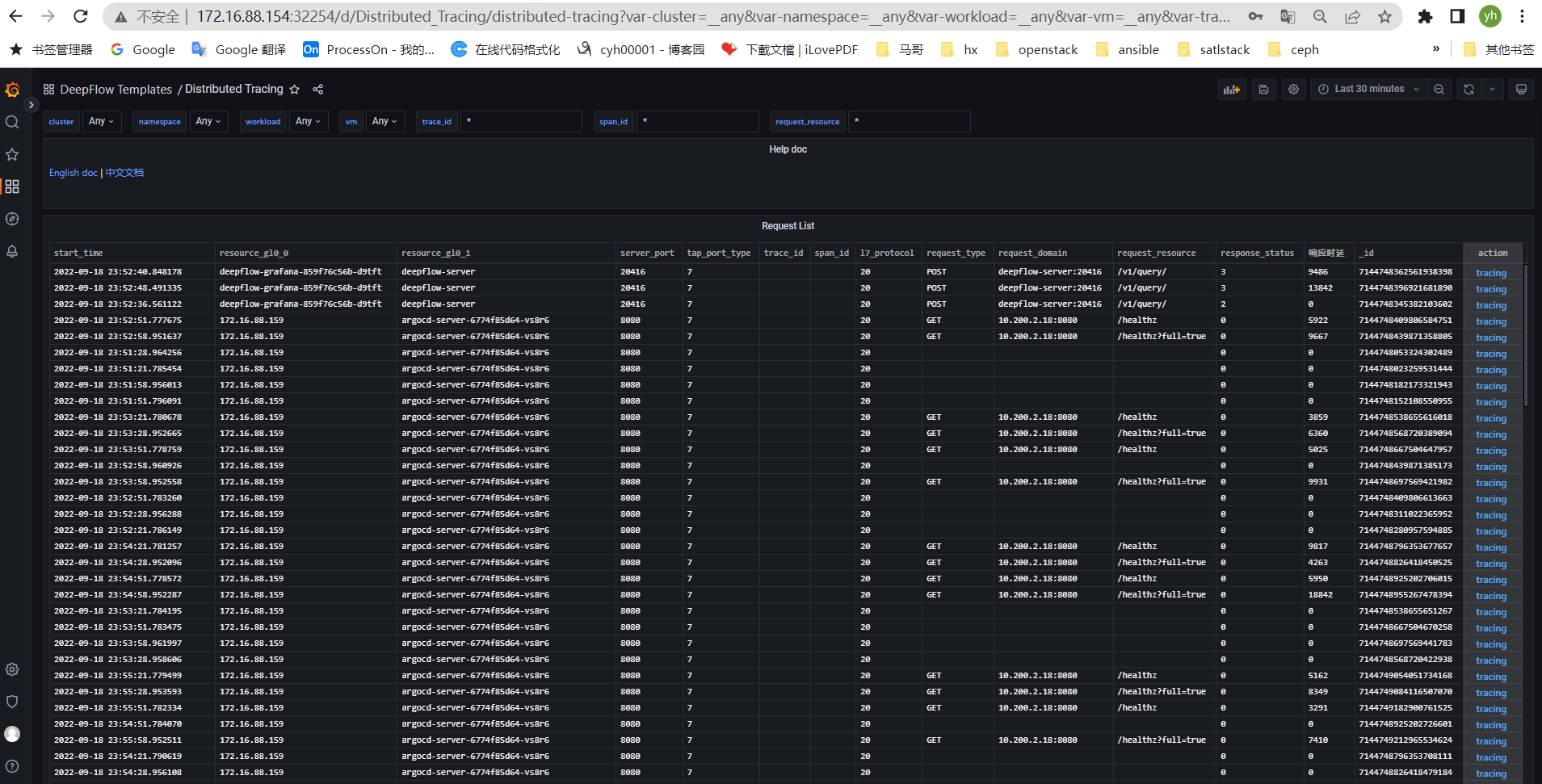

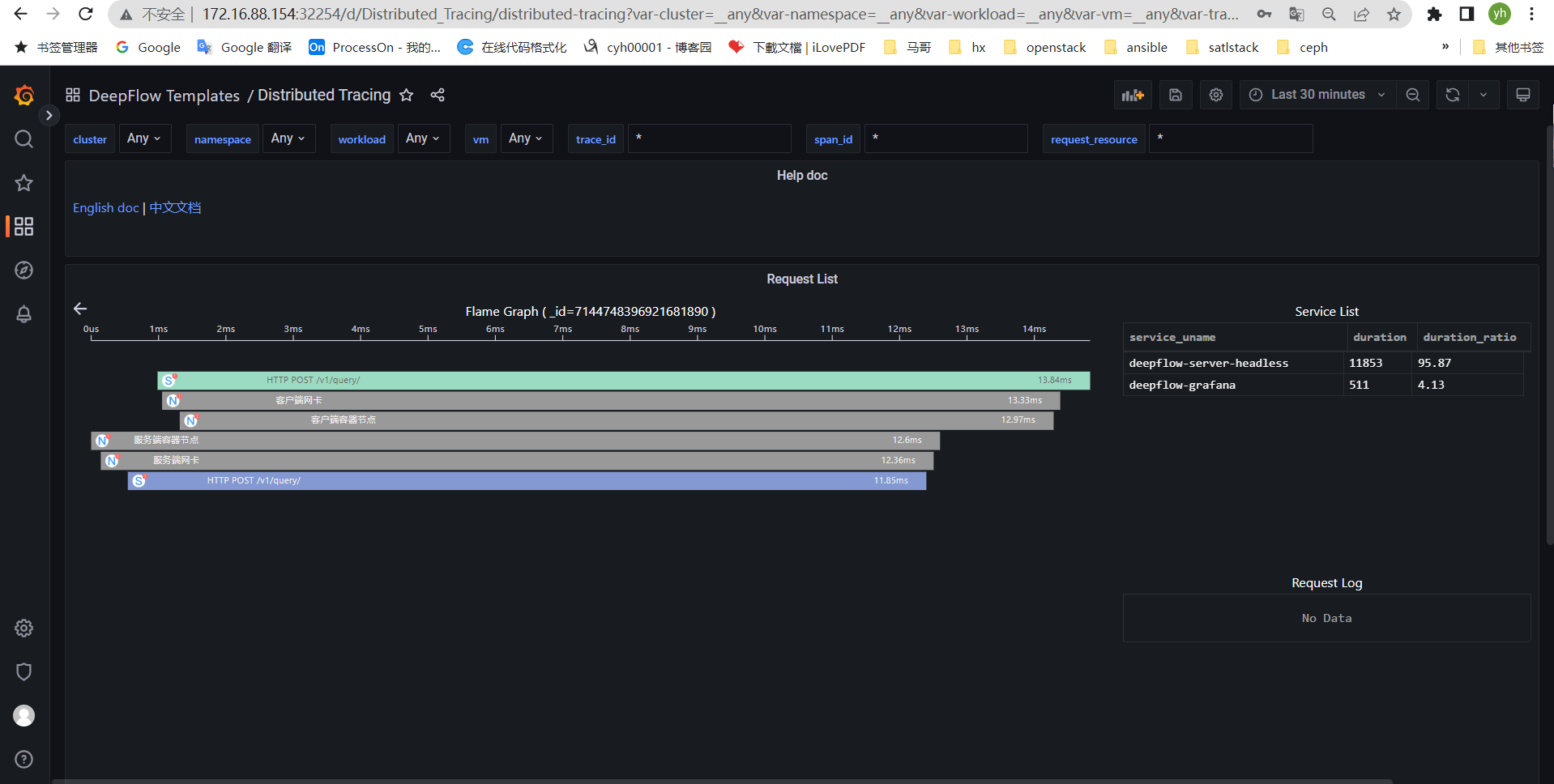

Distributed tracing view

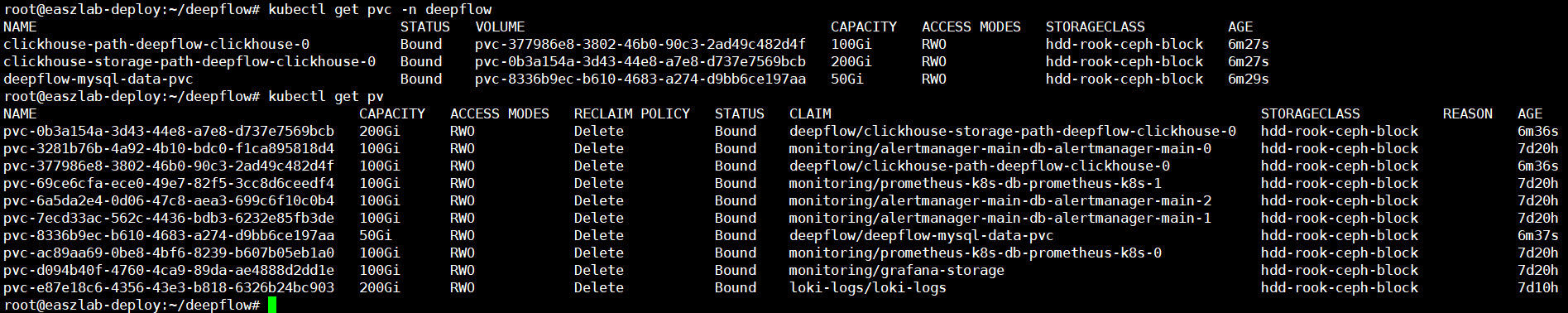

III. Installing with helm and a specified storage class

    helm install deepflow -n deepflow deepflow/deepflow --create-namespace --set global.allInOneLocalStorage=true   # use a local directory on the node as storage

    helm install deepflow -n deepflow deepflow/deepflow --create-namespace --set global.storageClass=hdd-rook-ceph-block   # use a storage class

Check the storage classes available in the k8s cluster:

    root@easzlab-deploy:~/deepflow# kubectl get sc -A
    NAME                  PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    hdd-rook-ceph-block   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           false                  34d
    root@easzlab-deploy:~/deepflow#

Create a custom values file that sets the storage class:

    cat values-custom.yaml
    global:
      storageClass: hdd-rook-ceph-block
      replicas: 1
      image:
        repository: registry.cn-beijing.aliyuncs.com/deepflow-ce
    grafana:
      image:
        repository: registry.cn-beijing.aliyuncs.com/deepflow-ce/grafana
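The values file above is only shown with `cat`; one way to create it is a quoted heredoc, so that the shell expands nothing inside it. A sketch that reproduces exactly the content shown (nothing else is assumed besides the file name used in this article):

```shell
# Create values-custom.yaml with the storage class and image registry settings.
cat > values-custom.yaml <<'EOF'
global:
  storageClass: hdd-rook-ceph-block
  replicas: 1
  image:
    repository: registry.cn-beijing.aliyuncs.com/deepflow-ce
grafana:
  image:
    repository: registry.cn-beijing.aliyuncs.com/deepflow-ce/grafana
EOF
```

After installation, `helm get values deepflow -n deepflow` prints the user-supplied values recorded for the release, which is a quick way to confirm that the storage class was picked up.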

Install with helm using this values file:

    root@easzlab-deploy:~/deepflow# helm install deepflow -n deepflow deepflow/deepflow -f ./values-custom.yaml --create-namespace
    NAME: deepflow
    LAST DEPLOYED: Mon Sep 26 20:42:47 2022
    NAMESPACE: deepflow
    STATUS: deployed
    REVISION: 1
    NOTES:
    ██████╗ ███████╗███████╗██████╗ ███████╗██╗      ██████╗ ██╗    ██╗
    ██╔══██╗██╔════╝██╔════╝██╔══██╗██╔════╝██║     ██╔═══██╗██║    ██║
    ██║  ██║█████╗  █████╗  ██████╔╝█████╗  ██║     ██║   ██║██║ █╗ ██║
    ██║  ██║██╔══╝  ██╔══╝  ██╔═══╝ ██╔══╝  ██║     ██║   ██║██║███╗██║
    ██████╔╝███████╗███████╗██║     ██║     ███████╗╚██████╔╝╚███╔███╔╝
    ╚═════╝ ╚══════╝╚══════╝╚═╝     ╚═╝     ╚══════╝ ╚═════╝  ╚══╝╚══╝
    An automated observability platform for cloud-native developers.
    # deepflow-agent Port for receiving trace, metrics, and log
    deepflow-agent service: deepflow-agent.deepflow
    deepflow-agent Host listening port: 38086
    # Get the Grafana URL to visit by running these commands in the same shell
    NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)
    NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")
    echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"
    root@easzlab-deploy:~/deepflow# NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)
    root@easzlab-deploy:~/deepflow# NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")
    root@easzlab-deploy:~/deepflow# echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"
    Grafana URL: http://172.16.88.154:63164
    Grafana auth: admin:deepflow
    root@easzlab-deploy:~/deepflow#

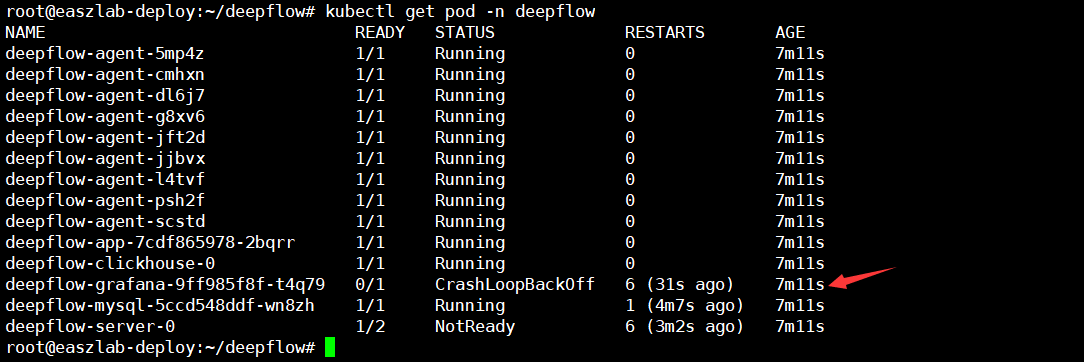

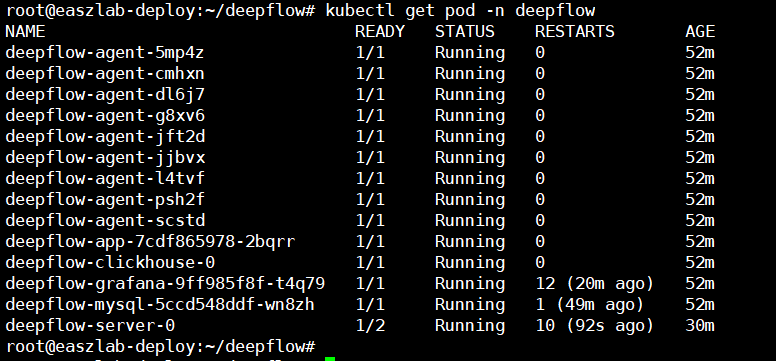

Check the pod status:

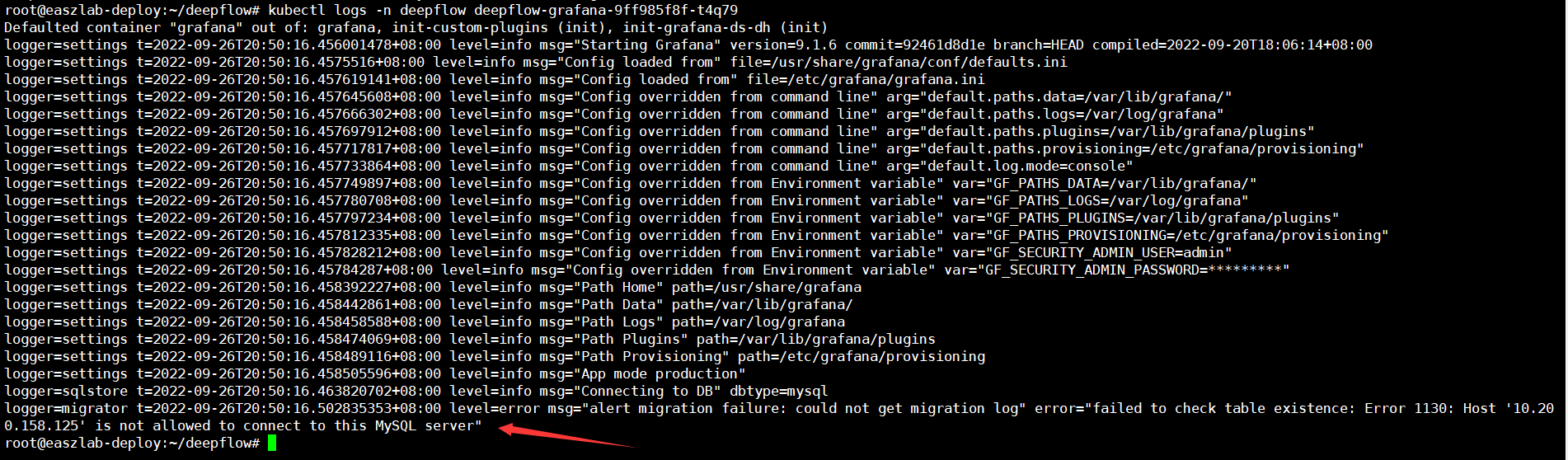

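The logs show that connections to the database are refused.

Solution: enter the MySQL pod, replace the `root@localhost` account with `root@'%'` (password `deepflow`) so that the other DeepFlow pods can log in, and create the `grafana` database: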

    root@easzlab-deploy:~/deepflow# kubectl exec -it -n deepflow deepflow-mysql-5ccd548ddf-wn8zh bash
    kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
    root@deepflow-mysql-5ccd548ddf-wn8zh:/# mysql
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 64
    Server version: 8.0.26 MySQL Community Server - GPL
    Copyright (c) 2000, 2021, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    4 rows in set (0.05 sec)
    mysql> select User,Host from mysql.user;
    +------------------+-----------+
    | User             | Host      |
    +------------------+-----------+
    | mysql.infoschema | localhost |
    | mysql.session    | localhost |
    | mysql.sys        | localhost |
    | root             | localhost |
    +------------------+-----------+
    4 rows in set (0.02 sec)
    mysql> use mysql;
    Reading table information for completion of table and column names
    You can turn off this feature to get a quicker startup with -A
    Database changed
    mysql>
    mysql> drop user root@localhost;
    Query OK, 0 rows affected (0.17 sec)
    mysql> create user root@'%' identified by 'deepflow';
    Query OK, 0 rows affected (0.18 sec)
    mysql> grant all on *.* to root@'%';
    Query OK, 0 rows affected (0.18 sec)
    mysql> select User,Host from mysql.user;
    +------------------+-----------+
    | User             | Host      |
    +------------------+-----------+
    | root             | %         |
    | mysql.infoschema | localhost |
    | mysql.session    | localhost |
    | mysql.sys        | localhost |
    +------------------+-----------+
    5 rows in set (0.02 sec)
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    4 rows in set (0.03 sec)
    mysql> create database grafana;
    Query OK, 1 row affected (0.32 sec)
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | deepflow           |
    | information_schema |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    5 rows in set (0.07 sec)
    mysql> flush privileges;
    Query OK, 0 rows affected (0.16 sec)
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | deepflow           |
    | grafana            |
    | information_schema |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    6 rows in set (0.02 sec)
    mysql>
    mysql> exit
    Bye
    root@deepflow-mysql-5ccd548ddf-wn8zh:/#
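The same fix can be applied non-interactively by piping the statements into the mysql client inside the pod, which also avoids the deprecated `kubectl exec POD COMMAND` form. A sketch under these assumptions: the function name is hypothetical, the Deployment behind the pod is named `deepflow-mysql` (as the pod name suggests), and the `IF EXISTS` / `IF NOT EXISTS` guards are additions not present in the session above so that a partial earlier run does not make the statements fail:

```shell
# Hypothetical helper: prints the statements typed interactively above.
# $1 is the password for root@'%' (defaults to "deepflow", as above).
deepflow_mysql_fix_sql() {
  local password="${1:-deepflow}"
  cat <<EOF
DROP USER IF EXISTS 'root'@'localhost';
CREATE USER IF NOT EXISTS 'root'@'%' IDENTIFIED BY '${password}';
GRANT ALL ON *.* TO 'root'@'%';
CREATE DATABASE IF NOT EXISTS grafana;
FLUSH PRIVILEGES;
EOF
}
```

Usage: `deepflow_mysql_fix_sql | kubectl exec -i -n deepflow deploy/deepflow-mysql -- mysql`. Like the interactive session, this relies on the `mysql` client in the pod logging in as `root@localhost` without a password, so it is meant as a one-shot replacement for the steps above.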

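IV. Installing a newer DeepFlow release (6.1.8)

Official documentation: https://deepflow.io/docs/zh/install/production-deployment/

Important: avoid building the external Ceph cluster on virtual machines if you can. Otherwise SQL queries become too slow and deepflow-server keeps logging errors such as `[ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 52.472549499s`, while its health checks return HTTP 503 and the pod is restarted.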
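Because the reported time cost grows with every health check, the largest value shows how far the migration got before a restart. A minimal sketch for extracting it, assuming the log format shown in this article (`MySQL migration now, time cost: <Go duration>`, for example `52.472549499s` or `1m22.471516255s`); the function name is hypothetical:

```shell
# Hypothetical helper: reads deepflow-server log text on stdin and prints the
# longest "MySQL migration now" time cost, converted to seconds (1 decimal).
max_migration_seconds() {
  grep -o 'MySQL migration now, time cost: [0-9hms.]*' |
    awk '{
      d = $NF; secs = 0                       # e.g. d = "1m22.471516255s"
      if (match(d, /^[0-9]+h/)) { secs += substr(d, 1, RLENGTH - 1) * 3600; d = substr(d, RLENGTH + 1) }
      if (match(d, /^[0-9]+m/)) { secs += substr(d, 1, RLENGTH - 1) * 60;   d = substr(d, RLENGTH + 1) }
      if (match(d, /^[0-9.]+s$/)) { secs += substr(d, 1, RLENGTH - 1) }
      if (secs > max) max = secs
    }
    END { if (NR) printf "%.1f\n", max }'
}
```

Usage: `kubectl logs -n deepflow deploy/deepflow-server | max_migration_seconds`. Comparing the result across restarts (`kubectl logs --previous`) shows whether the migration ever gets further.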

    [root@easzlab-deploy deepflow]# kubectl get pod -n deepflow -owide
    NAME                                READY   STATUS    RESTARTS      AGE   IP               NODE            NOMINATED NODE   READINESS GATES
    deepflow-agent-5msqh                1/1     Running   0             31m   172.16.88.162    172.16.88.162   <none>           <none>
    deepflow-agent-ddz7l                1/1     Running   0             31m   172.16.88.158    172.16.88.158   <none>           <none>
    deepflow-agent-gcfcq                1/1     Running   0             31m   172.16.88.163    172.16.88.163   <none>           <none>
    deepflow-agent-l7b57                1/1     Running   0             31m   172.16.88.157    172.16.88.157   <none>           <none>
    deepflow-agent-pm66h                1/1     Running   0             31m   172.16.88.161    172.16.88.161   <none>           <none>
    deepflow-agent-pmz4z                1/1     Running   0             31m   172.16.88.165    172.16.88.165   <none>           <none>
    deepflow-agent-rdng6                1/1     Running   0             31m   172.16.88.159    172.16.88.159   <none>           <none>
    deepflow-agent-s8qs9                1/1     Running   0             31m   172.16.88.164    172.16.88.164   <none>           <none>
    deepflow-agent-zbr2p                1/1     Running   0             31m   172.16.88.160    172.16.88.160   <none>           <none>
    deepflow-app-6fb6bfb4d4-55rb4       1/1     Running   0             31m   10.200.245.20    172.16.88.165   <none>           <none>
    deepflow-clickhouse-0               1/1     Running   0             31m   10.200.105.146   172.16.88.164   <none>           <none>
    deepflow-grafana-7b7f96cd8b-c2g4z   1/1     Running   0             10m   10.200.2.12      172.16.88.162   <none>           <none>
    deepflow-mysql-59ff455658-dflbs     1/1     Running   0             31m   10.200.105.145   172.16.88.164   <none>           <none>
    deepflow-server-bcc6775fd-5v4wk     0/1     Running   3 (83s ago)   10m   10.200.104.204   172.16.88.163   <none>           <none>
    [root@easzlab-deploy deepflow]# kubectl describe pod -n deepflow deepflow-server-bcc6775fd-5v4wk
    Name:         deepflow-server-bcc6775fd-5v4wk
    Namespace:    deepflow
    Priority:     0
    Node:         172.16.88.163/172.16.88.163
    Start Time:   Thu, 30 Mar 2023 11:49:49 +0800
    Labels:       app=deepflow
                  app.kubernetes.io/instance=deepflow
                  app.kubernetes.io/name=deepflow
                  component=deepflow-server
                  pod-template-hash=bcc6775fd
    Annotations:  checksum/config: 7acb2382734ca32852fb60271de5118f4c68e4bd6ffae011eac0fe4a49ec43d2
                  checksum/customConfig: 4ff52e62cf5cd5ad85965433837ca2a53b6ce00d7e582c173303b4cfa57f87a7
    Status:       Running
    IP:           10.200.104.204
    IPs:
      IP:           10.200.104.204
    Controlled By:  ReplicaSet/deepflow-server-bcc6775fd
    Containers:
      deepflow-server:
        Container ID:   containerd://1d14843c17dd6c9de6de8b94054b95d23ca5fefaf68dbc7b718c5bfeaffd30a0
        Image:          registry.cn-beijing.aliyuncs.com/deepflow-ce/deepflow-server:v6.2.5
        Image ID:       registry.cn-beijing.aliyuncs.com/deepflow-ce/deepflow-server@sha256:24a5c255db238e27b5b9404977e8ad91db9825ea5f16db92b08252f54d6043e6
        Ports:          20417/TCP, 20035/TCP, 20135/TCP, 20416/TCP
        Host Ports:     0/TCP, 0/TCP, 0/TCP, 0/TCP
        State:          Running
          Started:      Thu, 30 Mar 2023 11:59:12 +0800
        Last State:     Terminated
          Reason:       Error
          Exit Code:    2
          Started:      Thu, 30 Mar 2023 11:57:12 +0800
          Finished:     Thu, 30 Mar 2023 11:59:10 +0800
        Ready:          False
        Restart Count:  3
        Liveness:       http-get http://:server/v1/health/ delay=15s timeout=1s period=20s #success=1 #failure=6
        Readiness:      http-get http://:server/v1/health/ delay=15s timeout=1s period=10s #success=1 #failure=10
        Environment:
          K8S_NODE_IP_FOR_DEEPFLOW:     (v1:status.hostIP)
          K8S_NODE_NAME_FOR_DEEPFLOW:   (v1:spec.nodeName)
          K8S_POD_NAME_FOR_DEEPFLOW:   deepflow-server-bcc6775fd-5v4wk (v1:metadata.name)
          K8S_POD_IP_FOR_DEEPFLOW:      (v1:status.podIP)
          K8S_NAMESPACE_FOR_DEEPFLOW:  deepflow (v1:metadata.namespace)
          TZ:                          Asia/Shanghai
        Mounts:
          /etc/server.yaml from server-config (rw,path="server.yaml")
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-dp75w (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      server-config:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      deepflow
        Optional:  false
      kube-api-access-dp75w:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                   From               Message
      ----     ------     ----                  ----               -------
      Normal   Scheduled  10m                   default-scheduler  Successfully assigned deepflow/deepflow-server-bcc6775fd-5v4wk to 172.16.88.163
      Normal   Pulled     7m24s                 kubelet            Successfully pulled image "registry.cn-beijing.aliyuncs.com/deepflow-ce/deepflow-server:v6.2.5" in 3m23.113684166s
      Normal   Created    7m24s                 kubelet            Created container deepflow-server
      Normal   Started    7m24s                 kubelet            Started container deepflow-server
      Normal   Pulling    5m28s (x2 over 10m)   kubelet            Pulling image "registry.cn-beijing.aliyuncs.com/deepflow-ce/deepflow-server:v6.2.5"
      Warning  Unhealthy  5m28s (x6 over 7m8s)  kubelet            Liveness probe failed: HTTP probe failed with statuscode: 503
      Normal   Killing    5m28s                 kubelet            Container deepflow-server failed liveness probe, will be restarted
      Normal   Pulled     5m26s                 kubelet            Successfully pulled image "registry.cn-beijing.aliyuncs.com/deepflow-ce/deepflow-server:v6.2.5" in 1.925934489s
      Warning  Unhealthy  38s (x43 over 7m8s)   kubelet            Readiness probe failed: HTTP probe failed with statuscode: 503
    [root@easzlab-deploy deepflow]# kubectl logs -n deepflow deepflow-server-bcc6775fd-5v4wk
    2023/03/30 11:59:12 ENV K8S_NODE_NAME_FOR_DEEPFLOW=172.16.88.163; K8S_NODE_IP_FOR_DEEPFLOW=172.16.88.163; K8S_POD_NAME_FOR_DEEPFLOW=deepflow-server-bcc6775fd-5v4wk; K8S_POD_IP_FOR_DEEPFLOW=10.200.104.204; K8S_NAMESPACE_FOR_DEEPFLOW=deepflow
    2023-03-30 11:59:12.752 [INFO] [querier] querier.go:46 ==================== Launching DeepFlow-Server-Querier ====================
    2023-03-30 11:59:12.754 [INFO] [kubernetes] watcher.gen.go:1004 Endpoints watcher starting
    2023-03-30 11:59:12.754 [INFO] [querier] querier.go:47 querier config: log-file: /var/log/querier.log log-level: info listen-port: 20416 clickhouse: user-name: default user-password: "" host: deepflow-clickhouse port: 9000 timeout: 60 connect-timeout: 2 max-connection: 20 deepflow-app: host: deepflow-app port: "20418" prometheus: series-limit: 100 auto-tagging-prefix: df_ language: en otel-endpoint: http://172.16.88.163:38086/api/v1/otel/trace limit: "10000" time-fill-limit: 20
    2023-03-30 11:59:12.755 [INFO] [controller] controller.go:72 ==================== Launching DeepFlow-Server-Controller ====================
    2023-03-30 11:59:12.755 [INFO] [controller] controller.go:73 controller config: log-file: /var/log/controller.log log-level: info listen-port: 20417 listen-node-port: 30417 master-controller-name: "" grpc-max-message-length: 104857600 grpc-port: "20035" ingester-port: "20033" grpc-node-port: "30035" kubeconfig: "" election-name: deepflow-server reporting-disabled: false billing-method: license pod-cluster-internal-ip-to-ingester: 0 df-web-service: enabled: false host: df-web port: 20106 timeout: 30 mysql: database: deepflow host: deepflow-mysql port: 30130 user-name: root user-password: deepflow timeout: 30 redis: resource_api_database: 1 resource_api_expire_interval: 3600 dimension_resource_database: 2 host: [] port: 6379 password: deepflow timeout: 30 enabled: false cluster_enabled: false clickhouse: database: flow_tag host: deepflow-clickhouse port: 9000 user-name: default user-password: "" timeout: 30 roze: port: 20106 timeout: 60 spec: vtap_group_max: 1000 vtap_max_per_group: 10000 az_max_per_server: 10 data_source_max: 15 data_source_retention_time_max: 1000 monitor: health_check_interval: 60 health_check_port: 30417 health_check_handle_channel_len: 1000 license_check_interval: 60 vtap_check_interval: 60 exception_time_frame: 3600 auto_rebalance_vtap: true rebalance_check_interval: 300 warrant: warrant: warrant port: 20413 timeout: 30 manager: cloud_config_check_interval: 60 task: resource_recorder_interval: 60 cloud: cloud_gather_interval: 30 kubernetes_gather_interval: 30 aliyun_region_name: cn-beijing huawei_domain_name: myhuaweicloud.com genesis_default_vpc: default_vpc hostname_to_ip_file: /etc/hostname_to_ip.csv dns_enable: false http_timeout: 30 debug_enabled: false recorder: cache_refresh_interval: 60 deleted_resource_clean_interval: 24 deleted_resource_retention_time: 168 resource_max_id_0: 64000 resource_max_id_1: 499999 genesis: aging_time: 86400 vinterface_aging_time: 300 host_ips: [] local_ip_ranges: [] exclude_ip_ranges: [] queue_length: 60 data_persistence_interval: 60 multi_ns_mode: false single_vpc_mode: false statsd: enabled: true host: 127.0.0.1 port: 20033 trisolaris: listen-port: "20014" loglevel: info tsdb-ip: "" chrony: host: ntp.aliyun.com port: 123 timeout: 1 self-update-url: grpc remote-api-timeout: 30 trident-type-for-unkonw-vtap: 3 platform-vips: [] node-type: master region-domain-prefix: "" clear-kubernetes-time: 600 nodeip: "" vtapcache-refresh-interval: 300 metadata-refresh-interval: 60 node-refresh-interval: 60 gpid-refresh-interval: 9 vtap-auto-register: true default-tap-mode: 0 billingmethod: license grpcport: 20035 ingesterport: 20033 podclusterinternaliptoingester: 0 grpcmaxmessagelength: 104857600 tagrecorder: timeout: 60 mysql_batch_size: 5000
    [GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
     - using env: export GIN_MODE=release
     - using code: gin.SetMode(gin.ReleaseMode)
    [GIN-debug] GET /v1/health/ --> github.com/deepflowio/deepflow/server/controller/http/router.HealthRouter.func1 (3 handlers)
    2023-03-30 11:59:12.757 [INFO] [election] election.go:161 election id is 172.16.88.163/172.16.88.163/deepflow-server-bcc6775fd-5v4wk/10.200.104.204
    [GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.
    Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.
    [GIN-debug] Listening and serving HTTP on :20417
    I0330 11:59:12.759852 1 leaderelection.go:248] attempting to acquire leader lease deepflow/deepflow-server...
    2023-03-30 11:59:12.760 [WARN] [grpc] grpc_session.go:96 Sync from server 127.0.0.1 failed, reason: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:20035: connect: connection refused"
    2023-03-30 11:59:12.760 [INFO] [config] grpc_server_instrance.go:83 New ServerInstranceInfo ips:[127.0.0.1] port:20035 rpcMaxMsgSize:41943040
    2023-03-30 11:59:12.761 [WARN] [config] config.go:245 get clickhouse endpoints(deepflow-clickhouse) failed, err: get server pod names empty
    2023-03-30 11:59:12.764 [WARN] [config] grpc_server_instrance.go:78 No reachable server
    2023-03-30 11:59:12.806 [INFO] [kubernetes] watcher.gen.go:1024 Endpoints watcher started
    I0330 11:59:12.829860 1 leaderelection.go:258] successfully acquired lease deepflow/deepflow-server
    2023-03-30 11:59:12.830 [INFO] [election] election.go:204 172.16.88.163/172.16.88.163/deepflow-server-bcc6775fd-5v4wk/10.200.104.204 is the leader
    2023-03-30 11:59:12.831 [INFO] [querier] querier.go:61 init opentelemetry: otel-endpoint(http://172.16.88.163:38086/api/v1/otel/trace)
    [GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
     - using env: export GIN_MODE=release
     - using code: gin.SetMode(gin.ReleaseMode)
    [GIN-debug] POST /v1/query/ --> github.com/deepflowio/deepflow/server/querier/router.executeQuery.func1 (6 handlers)
    [GIN-debug] POST /api/v1/prom/read --> github.com/deepflowio/deepflow/server/querier/router.promReader.func1 (6 handlers)
    [GIN-debug] GET /prom/api/v1/query --> github.com/deepflowio/deepflow/server/querier/router.promQuery.func1 (6 handlers)
    [GIN-debug] GET /prom/api/v1/query_range --> github.com/deepflowio/deepflow/server/querier/router.promQueryRange.func1 (6 handlers)
    [GIN-debug] POST /prom/api/v1/query --> github.com/deepflowio/deepflow/server/querier/router.promQuery.func1 (6 handlers)
    [GIN-debug] POST /prom/api/v1/query_range --> github.com/deepflowio/deepflow/server/querier/router.promQueryRange.func1 (6 handlers)
    [GIN-debug] GET /prom/api/v1/label/:labelName/values --> github.com/deepflowio/deepflow/server/querier/router.promTagValuesReader.func1 (6 handlers)
    [GIN-debug] GET /prom/api/v1/series --> github.com/deepflowio/deepflow/server/querier/router.promSeriesReader.func1 (6 handlers)
    [GIN-debug] POST /prom/api/v1/series --> github.com/deepflowio/deepflow/server/querier/router.promSeriesReader.func1 (6 handlers)
    [GIN-debug] GET /api/traces/:traceId --> github.com/deepflowio/deepflow/server/querier/router.tempoTraceReader.func1 (6 handlers)
    [GIN-debug] GET /api/echo --> github.com/deepflowio/deepflow/server/querier/router.QueryRouter.func1 (6 handlers)
    [GIN-debug] GET /api/search/tags --> github.com/deepflowio/deepflow/server/querier/router.tempoTagsReader.func1 (6 handlers)
    [GIN-debug] GET /api/search/tag/:tagName/values --> github.com/deepflowio/deepflow/server/querier/router.tempoTagValuesReader.func1 (6 handlers)
    [GIN-debug] GET /api/search --> github.com/deepflowio/deepflow/server/querier/router.tempoSearchReader.func1 (6 handlers)
    [GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.
    Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.
    [GIN-debug] Listening and serving HTTP on :20416
    2023-03-30 11:59:17.757 [INFO] [router.health] health.go:55 stage: Election init, time cost: 5.000423839s
    2023-03-30 11:59:17.796 [INFO] [db.migrator.mysql] migrator.go:79 upgrade if db version is not the latest
    2023-03-30 11:59:17.805 [INFO] [db.migrator.mysql] migrator.go:86 current db version: , expected db version: 6.2.1.22
    2023-03-30 11:59:17.805 [INFO] [db.mysql.common] utils.go:105 drop database deepflow
    2023-03-30 11:59:17.813 [INFO] [election] election.go:147 check leader finish, leader is 172.16.88.163/172.16.88.163/deepflow-server-bcc6775fd-5v4wk/10.200.104.204
    2023-03-30 11:59:30.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 12.472132086s
    2023-03-30 11:59:30.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 12.472055134s
    2023-03-30 11:59:30.232 [GIN] 172.16.88.163 GET /v1/health/ 503 750.858µs
    2023-03-30 11:59:30.232 [GIN] 172.16.88.163 GET /v1/health/ 503 949.143µs
    2023-03-30 11:59:34.187 [INFO] [db.mysql.common] utils.go:110 create database deepflow
    2023-03-30 11:59:34.366 [INFO] [db.mysql.common] utils.go:126 init db tables with rollback
    2023-03-30 11:59:34.367 [INFO] [db.mysql.common] utils.go:136 init db tables start
    2023-03-30 11:59:40.230 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 22.471276888s
    2023-03-30 11:59:40.230 [GIN] 172.16.88.163 GET /v1/health/ 503 538.565µs
    2023-03-30 11:59:42.762 [WARN] [config] config.go:245 get clickhouse endpoints(deepflow-clickhouse) failed, err: get server pod names empty
    2023-03-30 11:59:50.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 32.471778559s
    2023-03-30 11:59:50.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 32.471912982s
    2023-03-30 11:59:50.231 [GIN] 172.16.88.163 GET /v1/health/ 503 520.962µs
    2023-03-30 11:59:50.231 [GIN] 172.16.88.163 GET /v1/health/ 503 866.484µs
    2023-03-30 12:00:00.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 42.47262619s
    2023-03-30 12:00:00.232 [GIN] 172.16.88.163 GET /v1/health/ 503 607.332µs
    2023-03-30 12:00:10.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 52.471911077s
    2023-03-30 12:00:10.231 [GIN] 172.16.88.163 GET /v1/health/ 503 513.009µs
    2023-03-30 12:00:10.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 52.472549499s
    2023-03-30 12:00:10.232 [GIN] 172.16.88.163 GET /v1/health/ 503 437.088µs
    2023-03-30 12:00:12.761 [WARN] [config] watcher.go:110 get server pod names empty
    2023-03-30 12:00:12.764 [WARN] [config] config.go:245 get clickhouse endpoints(deepflow-clickhouse) failed, err: get server pod names empty
    2023-03-30 12:00:12.767 [WARN] [config] grpc_server_instrance.go:78 No reachable server
    2023-03-30 12:00:20.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 1m2.471786565s
    2023-03-30 12:00:20.231 [GIN] 172.16.88.163 GET /v1/health/ 503 513.956µs
    2023-03-30 12:00:30.230 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 1m12.471526187s
    2023-03-30 12:00:30.231 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 1m12.471822195s
    2023-03-30 12:00:30.231 [GIN] 172.16.88.163 GET /v1/health/ 503 504.17µs
    2023-03-30 12:00:30.231 [GIN] 172.16.88.163 GET /v1/health/ 503 301.3µs
    2023-03-30 12:00:37.668 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 1m19.9092799s
    2023-03-30 12:00:37.669 [GIN] 172.16.88.163 GET /v1/health/ 503 879.732µs
    2023-03-30 12:00:40.230 [ERRO] [router.health] health.go:44 server is in stage: MySQL migration now, time cost: 1m22.471516255s
    2023-03-30 12:00:40.231 [GIN] 172.16.88.163 GET /v1/health/ 503 694.19µs
    2023-03-30 12:00:42.765 [WARN] [config] config.go:245 get clickhouse endpoints(deepflow-clickhouse) failed, err: get server pod names empty
    [root@easzlab-deploy deepflow]#
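The probe settings in the `kubectl describe` output explain the restart loop: kubelet restarts a container after `#failure` consecutive failed liveness checks, and the k-th check runs at `delay + (k-1) * period` seconds after start. A hypothetical helper that does this arithmetic (the name is illustrative, not a Kubernetes tool):

```shell
# Hypothetical helper: seconds after container start at which kubelet kills a
# container whose liveness probe never succeeds.
# Args: initialDelaySeconds periodSeconds failureThreshold
liveness_kill_after() {
  local delay="$1" period="$2" failures="$3"
  # The failureThreshold-th consecutive failure happens at delay + (failures-1)*period.
  echo $(( delay + (failures - 1) * period ))
}
```

`liveness_kill_after 15 20 6` gives 115, which is close to the roughly 116 s between the `Started` (7m24s) and `Killing` (5m28s) events, while the logs show the MySQL migration still running about 88 s after start. A migration that cannot finish within this window is interrupted, and each new start begins again with `drop database deepflow`, so slow storage can keep the server in this loop.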

If the cluster has no storage, OpenEBS can be used:

    kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml
    # make openebs-hostpath the default storage class
    kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
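To confirm that the patch took effect: `kubectl get sc` prints `(default)` right after the name of the default storage class. A minimal sketch of a helper (hypothetical name) that extracts that name from the listing:

```shell
# Hypothetical helper: reads `kubectl get sc` output on stdin and prints the
# name of the storage class marked "(default)".
default_storage_class() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}
```

Usage: `kubectl get sc | default_storage_class` should print `openebs-hostpath` after the patch above.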

Prepare external Ceph storage; see: https://www.cnblogs.com/cyh00001/p/16754053.html

Create the Ceph secrets in the deepflow namespace:

    [root@easzlab-deploy deepflow]# cat case3-secret-client-k8s.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-k8s
      namespace: deepflow
    type: "kubernetes.io/rbd"
    data:
      key: QVFDZjFmNWpVUitsTUJBQWN6bDZHRmE3VGY4VEkydENVTDNPYnc9PQ==
    [root@easzlab-deploy deepflow]#
    [root@easzlab-deploy deepflow]# cat case5-secret-admin.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-admin
      namespace: deepflow
    type: "kubernetes.io/rbd"
    data:
      key: QVFCMnlQNWpKZFNpTFJBQWFVUGIzdzRjdFNKRXpHaXpiZHpUakE9PQ==
    [root@easzlab-deploy deepflow]#
    [root@easzlab-deploy deepflow]# kubectl apply -f case3-secret-client-k8s.yaml -f case5-secret-admin.yaml
    secret/ceph-secret-k8s created
    secret/ceph-secret-admin created
    [root@easzlab-deploy deepflow]#
    [root@easzlab-deploy deepflow]# kubectl get secret -A
    NAMESPACE              NAME                              TYPE                                  DATA   AGE
    argocd                 argocd-initial-admin-secret       Opaque                                1      26d
    argocd                 argocd-notifications-secret       Opaque                                0      26d
    argocd                 argocd-secret                     Opaque                                5      26d
    deepflow               ceph-secret-admin                 kubernetes.io/rbd                     1      14s
    deepflow               ceph-secret-k8s                   kubernetes.io/rbd                     1      14s
    deepflow               deepflow-grafana                  Opaque                                3      8m59s
    deepflow               sh.helm.release.v1.deepflow.v1    helm.sh/release.v1                    1      8m59s
    default                ceph-secret-admin                 kubernetes.io/rbd                     1      28d
    default                ceph-secret-k8s                   kubernetes.io/rbd                     1      28d
    kube-system            calico-etcd-secrets               Opaque                                3      29d
    kubernetes-dashboard   dashboard-admin-user              kubernetes.io/service-account-token   3      29d
    kubernetes-dashboard   kubernetes-dashboard-certs        Opaque                                0      29d
    kubernetes-dashboard   kubernetes-dashboard-csrf         Opaque                                1      29d
    kubernetes-dashboard   kubernetes-dashboard-key-holder   Opaque                                2      29d
    velero-system          cloud-credentials                 Opaque                                1      28d
    velero-system          velero-restic-credentials         Opaque                                1      28d
    [root@easzlab-deploy deepflow]#
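The `key` field in these Secrets is the Ceph key, base64-encoded. A sketch of a helper (hypothetical name `ceph_rbd_secret`) that renders the same kind of manifest from a plain key, so the encoding step cannot be forgotten; the key itself comes from the Ceph side, for example `ceph auth get-key client.admin` for the admin secret (the client used for `ceph-secret-k8s` is set up in the referenced Ceph guide and is not shown here):

```shell
# Hypothetical helper: prints a kubernetes.io/rbd Secret manifest.
# Args: secret name, namespace, plain (not yet base64-encoded) Ceph key.
ceph_rbd_secret() {
  local name="$1" namespace="$2" key="$3"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: ${namespace}
type: "kubernetes.io/rbd"
data:
  key: $(printf '%s' "$key" | base64 | tr -d '\n')
EOF
}
```

Usage: `ceph_rbd_secret ceph-secret-admin deepflow "$(ceph auth get-key client.admin)" | kubectl apply -f -`. Alternatively, `kubectl create secret generic ceph-secret-admin --type=kubernetes.io/rbd --from-literal=key=<plain key> -n deepflow` performs the base64 encoding itself.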

Install the new DeepFlow release with helm and connect it to Ceph:

    helm repo add deepflow https://deepflow-ce.oss-cn-beijing.aliyuncs.com/chart/stable
    helm repo update deepflow

    cat << EOF > values-custom.yaml
    global:
      image:
        repository: registry.cn-beijing.aliyuncs.com/deepflow-ce
    grafana:
      image:
        repository: registry.cn-beijing.aliyuncs.com/deepflow-ce/grafana
    EOF
    helm upgrade --install deepflow -n deepflow deepflow/deepflow --version 6.1.8 --create-namespace --set global.storageClass=ceph-storage-class-k8s -f ./values-custom.yaml

Installation result:

    [root@easzlab-deploy deepflow]# helm upgrade --install deepflow -n deepflow deepflow/deepflow --version 6.1.8 --create-namespace --set global.storageClass=ceph-storage-class-k8s -f ./values-custom.yaml
    Release "deepflow" does not exist. Installing it now.
    NAME: deepflow
    LAST DEPLOYED: Wed Mar 29 15:05:23 2023
    NAMESPACE: deepflow
    STATUS: deployed
    REVISION: 1
    NOTES:
    ██████╗ ███████╗███████╗██████╗ ███████╗██╗      ██████╗ ██╗    ██╗
    ██╔══██╗██╔════╝██╔════╝██╔══██╗██╔════╝██║     ██╔═══██╗██║    ██║
    ██║  ██║█████╗  █████╗  ██████╔╝█████╗  ██║     ██║   ██║██║ █╗ ██║
    ██║  ██║██╔══╝  ██╔══╝  ██╔═══╝ ██╔══╝  ██║     ██║   ██║██║███╗██║
    ██████╔╝███████╗███████╗██║     ██║     ███████╗╚██████╔╝╚███╔███╔╝
    ╚═════╝ ╚══════╝╚══════╝╚═╝     ╚═╝     ╚══════╝ ╚═════╝  ╚══╝╚══╝
    An automated observability platform for cloud-native developers.
    # deepflow-agent Port for receiving trace, metrics, and log
    deepflow-agent service: deepflow-agent.deepflow
    deepflow-agent Host listening port: 38086
    # Get the Grafana URL to visit by running these commands in the same shell
    NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)
    NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")
    echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"
    [root@easzlab-deploy deepflow]# NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)
    [root@easzlab-deploy deepflow]# NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")
    [root@easzlab-deploy deepflow]# echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"
    Grafana URL: http://172.16.88.157:54634
    Grafana auth: admin:deepflow
    [root@easzlab-deploy deepflow]#
    [root@easzlab-deploy deepflow]# kubectl get pod -n deepflow
    NAME                                READY   STATUS    RESTARTS      AGE
    deepflow-agent-442qb                1/1     Running   0             27m
    deepflow-agent-4kn7r                1/1     Running   0             27m
    deepflow-agent-6fqzw                1/1     Running   0             27m
    deepflow-agent-fbhxd                1/1     Running   0             27m
    deepflow-agent-h9fj6                1/1     Running   0             27m
    deepflow-agent-l5xxr                1/1     Running   0             27m
    deepflow-agent-n25kd                1/1     Running   0             27m
    deepflow-agent-n468w                1/1     Running   0             27m
    deepflow-agent-nr56c                1/1     Running   0             27m
    deepflow-app-66df954b64-2h2hg       1/1     Running   0             27m
    deepflow-clickhouse-0               1/1     Running   0             27m
    deepflow-grafana-59845f7896-25hss   1/1     Running   7 (18m ago)   27m
    deepflow-mysql-59ff455658-x8s5d     1/1     Running   0             27m
    deepflow-server-5dd8dbc45c-zv86t    1/1     Running   9 (6s ago)    27m
    [root@easzlab-deploy deepflow]#
    [root@easzlab-deploy deepflow]# kubectl get pvc -n deepflow
    NAME                                            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS             AGE
    clickhouse-path-deepflow-clickhouse-0           Bound    pvc-be079aa5-8974-4a17-9881-2da1f9fc4d75   100Gi      RWO            ceph-storage-class-k8s   28m
    clickhouse-storage-path-deepflow-clickhouse-0   Bound    pvc-d08700bf-811f-4ab3-a040-25efb2f051f2   200Gi      RWO            ceph-storage-class-k8s   28m
    deepflow-mysql-data-pvc                         Bound    pvc-753daa24-8c78-412b-b3f8-98d2c248c6c0   50Gi       RWO            ceph-storage-class-k8s   28m
    [root@easzlab-deploy deepflow]# kubectl get pv -n deepflow
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                                    STORAGECLASS             REASON   AGE
    pvc-753daa24-8c78-412b-b3f8-98d2c248c6c0   50Gi       RWO            Delete           Bound    deepflow/deepflow-mysql-data-pvc                         ceph-storage-class-k8s            28m
    pvc-be079aa5-8974-4a17-9881-2da1f9fc4d75   100Gi      RWO            Delete           Bound    deepflow/clickhouse-path-deepflow-clickhouse-0           ceph-storage-class-k8s            28m
    pvc-d08700bf-811f-4ab3-a040-25efb2f051f2   200Gi      RWO            Delete           Bound    deepflow/clickhouse-storage-path-deepflow-clickhouse-0   ceph-storage-class-k8s            28m
    [root@easzlab-deploy deepflow]#
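Here all three claims are Bound to volumes from ceph-storage-class-k8s. When the storage class is missing or misconfigured, claims stay Pending and the ClickHouse and MySQL pods that mount them cannot start. A minimal sketch of a helper (hypothetical name) that lists such claims, assuming the single-namespace layout of `kubectl get pvc -n deepflow` (NAME first, STATUS second):

```shell
# Hypothetical helper: reads `kubectl get pvc -n <ns>` output on stdin and
# prints name and status of every claim that is not "Bound".
unbound_pvcs() {
  awk 'NR > 1 && $2 != "Bound" { print $1, $2 }'
}
```

Usage: `kubectl get pvc -n deepflow | unbound_pvcs` prints nothing for the output above.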
