Building an ELK and Kafka Log Collection Environment

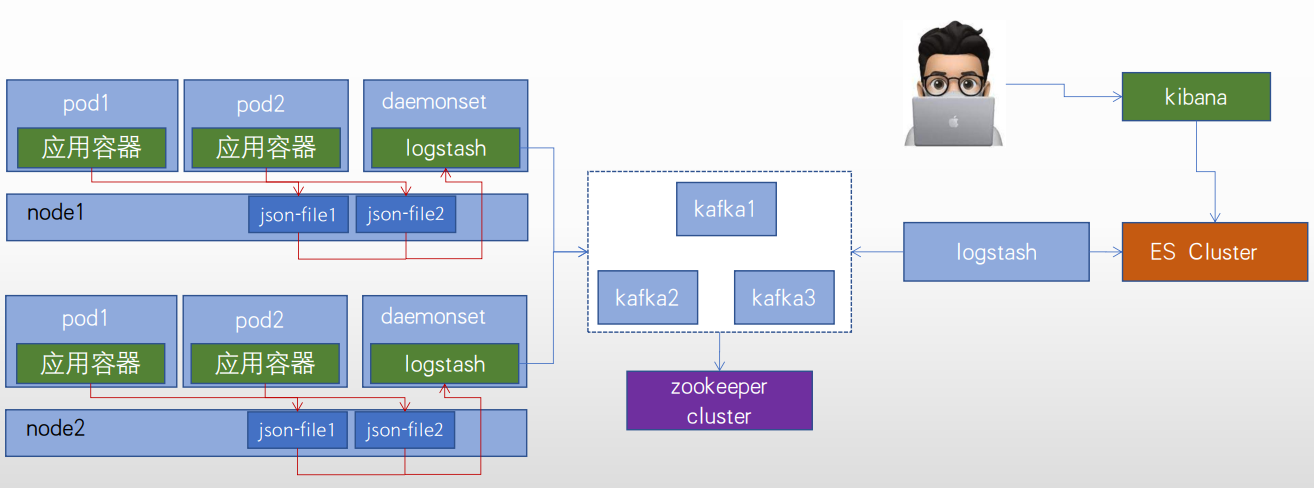

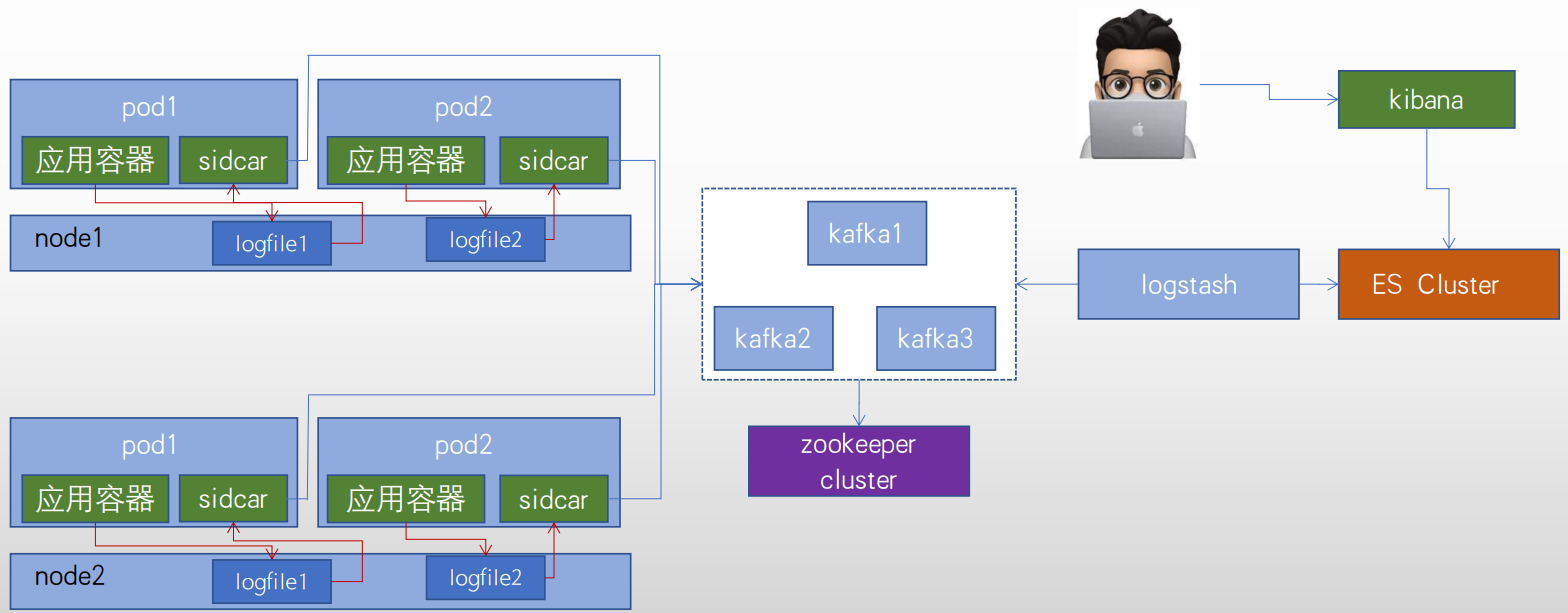

Logical deployment architecture diagram

1. Environment Preparation

Kubernetes cluster

    172.16.88.154 easzlab-k8s-master-01
    172.16.88.155 easzlab-k8s-master-02
    172.16.88.156 easzlab-k8s-master-03
    172.16.88.157 easzlab-k8s-node-01
    172.16.88.158 easzlab-k8s-node-02
    172.16.88.159 easzlab-k8s-node-03
    172.16.88.160 easzlab-k8s-etcd-01
    172.16.88.161 easzlab-k8s-etcd-02
    172.16.88.162 easzlab-k8s-etcd-03

Elasticsearch cluster

    172.16.88.173 easzlab-es-01
    172.16.88.174 easzlab-es-02
    172.16.88.175 easzlab-es-03

ZooKeeper + Kafka cluster

    172.16.88.176 easzlab-zk-kafka-01 easzlab-kibana-01
    172.16.88.177 easzlab-zk-kafka-02
    172.16.88.178 easzlab-zk-kafka-03

Logstash relay node

    172.16.88.179 easzlab-logstash

2. Installing the Elasticsearch Cluster

2.1 Download and install Elasticsearch

Download the Elasticsearch package:

    wget https://mirrors.tuna.tsinghua.edu.cn/elasticstack/apt/7.x/pool/main/e/elasticsearch/elasticsearch-7.12.1-amd64.deb

Copy it to the other nodes:

    scp elasticsearch-7.12.1-amd64.deb root@172.16.88.174:/root
    scp elasticsearch-7.12.1-amd64.deb root@172.16.88.175:/root

Install the package on every node:

    dpkg -i elasticsearch-7.12.1-amd64.deb

2.2 Configure the Elasticsearch cluster nodes

    [root@easzlab-es-01 ~]# egrep -v "^$|^#" /etc/elasticsearch/elasticsearch.yml
    cluster.name: magedu-cluster-01
    node.name: easzlab-es-01
    path.data: /var/lib/elasticsearch
    path.logs: /var/log/elasticsearch
    network.host: 172.16.88.173
    http.port: 9200
    discovery.seed_hosts: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    cluster.initial_master_nodes: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    action.destructive_requires_name: true

    [root@easzlab-es-02 ~]# egrep -v "^$|^#" /etc/elasticsearch/elasticsearch.yml
    cluster.name: magedu-cluster-01
    node.name: easzlab-es-02
    path.data: /var/lib/elasticsearch
    path.logs: /var/log/elasticsearch
    network.host: 172.16.88.174
    http.port: 9200
    discovery.seed_hosts: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    cluster.initial_master_nodes: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    action.destructive_requires_name: true

    [root@easzlab-es-03 ~]# egrep -v "^$|^#" /etc/elasticsearch/elasticsearch.yml
    cluster.name: magedu-cluster-01
    node.name: easzlab-es-03
    path.data: /var/lib/elasticsearch
    path.logs: /var/log/elasticsearch
    network.host: 172.16.88.175
    http.port: 9200
    discovery.seed_hosts: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    cluster.initial_master_nodes: ["172.16.88.173", "172.16.88.174", "172.16.88.175"]
    action.destructive_requires_name: true

Start the service on each node:

    systemctl enable --now elasticsearch.service
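The three elasticsearch.yml files differ only in node.name and network.host, so they can be generated from one template instead of being edited by hand. The following is a minimal sketch under that assumption; render_es_config is a hypothetical helper name, not part of Elasticsearch.

```shell
#!/bin/sh
# Print an elasticsearch.yml for one node of this cluster.
# Only node.name ($1) and network.host ($2) vary between nodes.
# render_es_config is a hypothetical helper, not an Elasticsearch tool.
render_es_config() {
    node_name=$1
    node_ip=$2
    seeds='["172.16.88.173", "172.16.88.174", "172.16.88.175"]'
    cat <<EOF
cluster.name: magedu-cluster-01
node.name: ${node_name}
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: ${node_ip}
http.port: 9200
discovery.seed_hosts: ${seeds}
cluster.initial_master_nodes: ${seeds}
action.destructive_requires_name: true
EOF
}

# Preview the file for the second node on stdout.
render_es_config easzlab-es-02 172.16.88.174
```

To apply it, the output could be redirected to /etc/elasticsearch/elasticsearch.yml on the matching node before the service is started.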

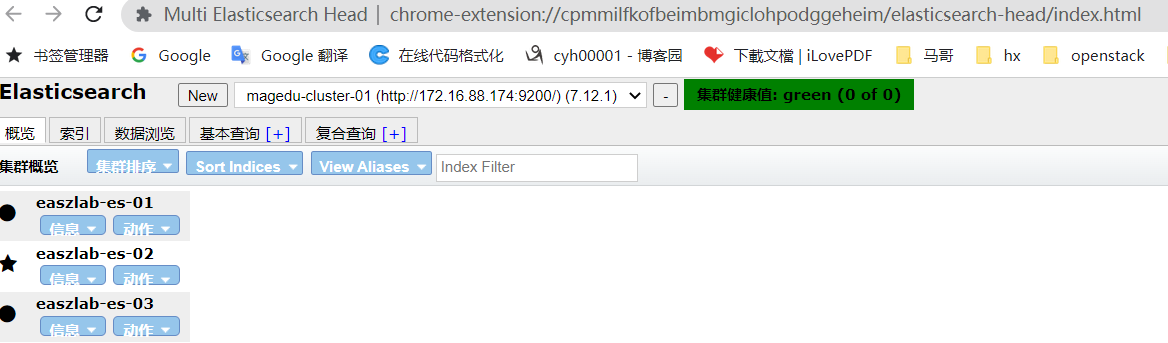

2.3 Verify the Elasticsearch cluster
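One way to verify the cluster from the command line is the _cluster/health API, whose JSON response carries a status field (green, yellow, or red). The sketch below extracts that field; health_status is a hypothetical helper name, and the sample response is hand-written for illustration rather than captured from this cluster.

```shell
#!/bin/sh
# Extract the "status" field (green / yellow / red) from the JSON returned by
# Elasticsearch's _cluster/health API, read from stdin.
# health_status is a hypothetical helper name.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Typical use against the first node of this cluster (needs network access):
#   curl -s http://172.16.88.173:9200/_cluster/health | health_status
#   curl -s 'http://172.16.88.173:9200/_cat/nodes?v'

# Offline demonstration with a hand-written sample response:
echo '{"cluster_name":"magedu-cluster-01","status":"green","number_of_nodes":3}' | health_status
# prints: green
```

A green status with three nodes listed by _cat/nodes would indicate that all three nodes have joined the cluster.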

3. Installing the Kibana Dashboard

3.1 Download and install Kibana

Download the Kibana package:

    wget https://mirrors.tuna.tsinghua.edu.cn/elasticstack/apt/7.x/pool/main/k/kibana/kibana-7.12.1-amd64.deb

Install Kibana:

    dpkg -i kibana-7.12.1-amd64.deb

3.2 Configure and start Kibana

    [root@easzlab-es-01 ~]# egrep -v "^$|^#" /etc/kibana/kibana.yml
    server.port: 5601
    server.host: "172.16.88.173"
    elasticsearch.hosts: ["http://172.16.88.174:9200"]
    i18n.locale: "zh-CN"
    [root@easzlab-es-01 ~]# systemctl enable --now kibana.service

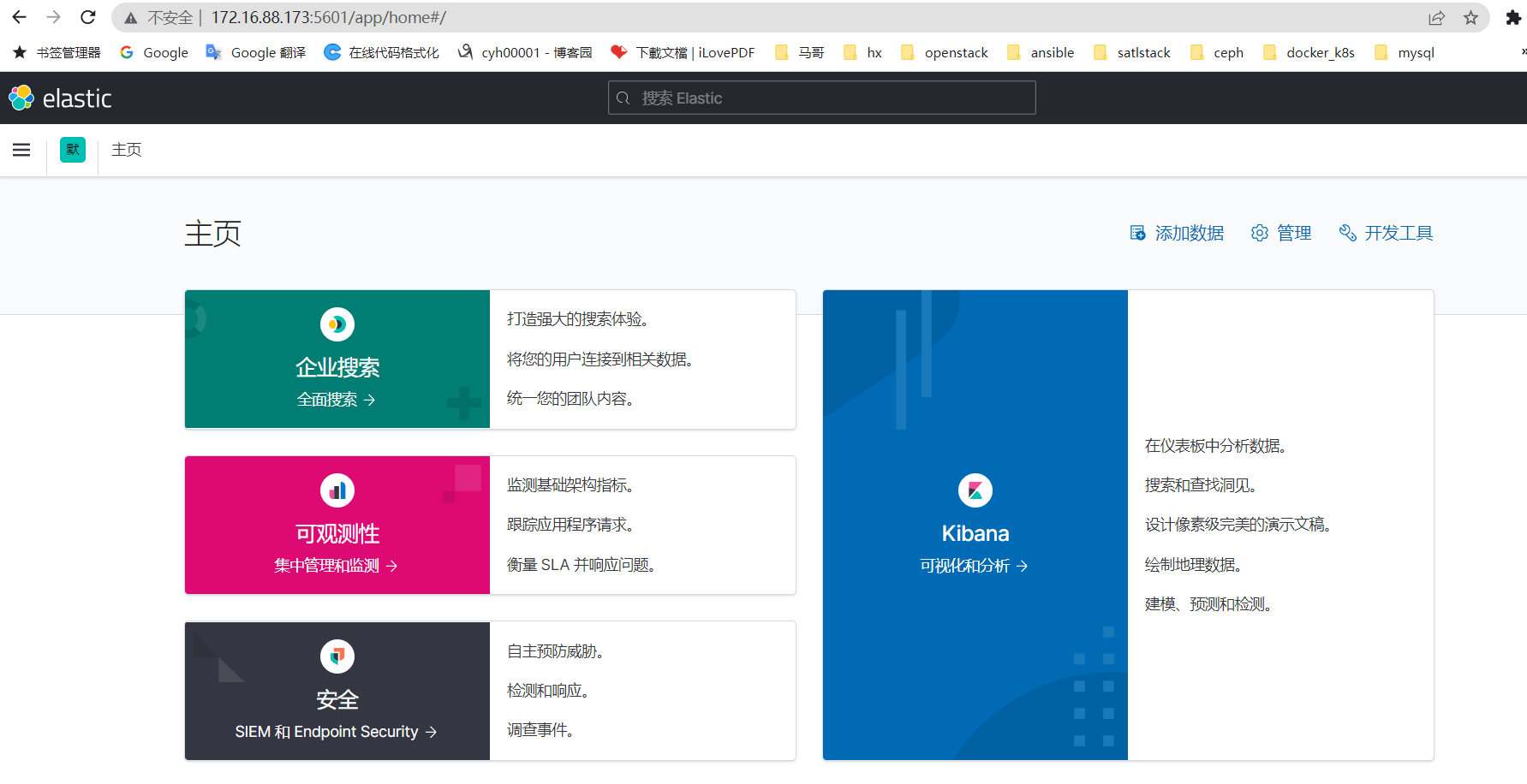

3.3 Check that the Kibana web UI is reachable

http://172.16.88.173:5601

3.4 Check Kibana's connection to the Elasticsearch cluster

4. Configuring the ZooKeeper + Kafka Cluster


4.1 Prepare the environment

Download the ZooKeeper package:

    wget https://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

Install the required JDK:

    apt update && apt-get install openjdk-8-jdk -y

Create the software directory, unpack the archive there, and create a symlink:

    mkdir /apps
    cp apache-zookeeper-3.6.3-bin.tar.gz /apps && cd /apps && tar -xf apache-zookeeper-3.6.3-bin.tar.gz
    ln -sv /apps/apache-zookeeper-3.6.3-bin /apps/zookeeper

Create the ZooKeeper data directory:

    mkdir /data/zookeeper -p

4.2 Configure ZooKeeper

Configure zoo.cfg:

    [root@easzlab-zk-kafka-01 conf]# egrep -v "^#|^$" zoo.cfg
    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data/zookeeper
    clientPort=2181
    server.1=172.16.88.176:2888:3888
    server.2=172.16.88.177:2888:3888
    server.3=172.16.88.178:2888:3888

Copy zoo.cfg to the other nodes:

    scp /apps/zookeeper/conf/zoo.cfg root@172.16.88.177:/apps/zookeeper/conf/
    scp /apps/zookeeper/conf/zoo.cfg root@172.16.88.178:/apps/zookeeper/conf/

Set each node's server ID. The value must match the node's server.N entry in zoo.cfg:

    [root@easzlab-zk-kafka-01 ~]# echo 1 > /data/zookeeper/myid
    [root@easzlab-zk-kafka-02 ~]# echo 2 > /data/zookeeper/myid
    [root@easzlab-zk-kafka-03 ~]# echo 3 > /data/zookeeper/myid
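Because each myid has to agree with the server.N line for that host in zoo.cfg, the ID can be derived from the file rather than typed by hand. This is a small sketch of that idea; myid_for_ip is a hypothetical helper name, and it assumes the server.N=host:port:port line format used in this document.

```shell
#!/bin/sh
# Print the ZooKeeper server id that zoo.cfg assigns to a given address.
# Usage: myid_for_ip <path-to-zoo.cfg> <ip-or-hostname>
# myid_for_ip is a hypothetical helper, not part of ZooKeeper.
myid_for_ip() {
    cfg=$1
    ip=$2
    # Lines of interest look like: server.2=172.16.88.177:2888:3888
    awk -F= -v ip="$ip" '
        /^server\.[0-9]+=/ {
            split($2, parts, ":")          # parts[1] is the host part
            if (parts[1] == ip) {
                sub(/^server\./, "", $1)   # keep only the numeric id
                print $1
                exit
            }
        }' "$cfg"
}

# Example use on the second node, after zoo.cfg has been copied over:
#   myid_for_ip /apps/zookeeper/conf/zoo.cfg 172.16.88.177 > /data/zookeeper/myid
```

The function prints nothing when the address is not listed, so an empty myid file is a sign that zoo.cfg and the node address disagree.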

4.3 Start the ZooKeeper cluster

    [root@easzlab-zk-kafka-01 ~]# /apps/zookeeper/bin/zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [root@easzlab-zk-kafka-02 ~]# /apps/zookeeper/bin/zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [root@easzlab-zk-kafka-03 ~]# /apps/zookeeper/bin/zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

4.4 Check each node's role

    [root@easzlab-zk-kafka-01 ~]# /apps/zookeeper/bin/zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower
    [root@easzlab-zk-kafka-02 ~]# /apps/zookeeper/bin/zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: leader    # this node is the leader
    [root@easzlab-zk-kafka-03 ~]# /apps/zookeeper/bin/zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /apps/zookeeper/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower

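4.5 Install the Kafka cluster

Download the package:

    wget https://downloads.apache.org/kafka/3.2.1/kafka_2.13-3.2.1.tgz

Copy the package to the other nodes:

    scp kafka_2.13-3.2.1.tgz root@172.16.88.177:/apps
    scp kafka_2.13-3.2.1.tgz root@172.16.88.178:/apps

On all three ZooKeeper + Kafka nodes, unpack the package (with the archive placed in /apps) and create a symlink:

    cd /apps
    tar -xf kafka_2.13-3.2.1.tgz
    ln -sv /apps/kafka_2.13-3.2.1 /apps/kafka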

4.6 Configure Kafka

    [root@easzlab-zk-kafka-01 config]# egrep -v "^#|^$" /apps/kafka/config/server.properties
    broker.id=1
    listeners=PLAINTEXT://172.16.88.176:9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/data/kafka-logs
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connect=172.16.88.176:2181,172.16.88.177:2181,172.16.88.178:2181
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0

    [root@easzlab-zk-kafka-02 config]# egrep -v "^$|^#" /apps/kafka/config/server.properties
    broker.id=2
    listeners=PLAINTEXT://172.16.88.177:9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/data/kafka-logs
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connect=172.16.88.176:2181,172.16.88.177:2181,172.16.88.178:2181
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0

    [root@easzlab-zk-kafka-03 config]# egrep -v "^$|^#" /apps/kafka/config/server.properties
    broker.id=3
    listeners=PLAINTEXT://172.16.88.178:9092
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/data/kafka-logs
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connect=172.16.88.176:2181,172.16.88.177:2181,172.16.88.178:2181
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0
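Across the three brokers only broker.id and listeners change, so a shared server.properties can be copied to every node and then patched per host. The following sketch shows one way to do that with sed; set_broker_identity is a hypothetical helper name, and it assumes the file already contains a broker.id line and a listeners line (possibly commented out, as in the stock file).

```shell
#!/bin/sh
# Rewrite broker.id and listeners in a Kafka server.properties file,
# leaving every other line untouched.
# Usage: set_broker_identity <file> <broker-id> <broker-ip>
# set_broker_identity is a hypothetical helper, not part of Kafka.
set_broker_identity() {
    file=$1
    broker_id=$2
    broker_ip=$3
    # The second expression also matches a commented-out "#listeners=" line,
    # but not "advertised.listeners", because the pattern is anchored at ^.
    sed -e "s|^broker\.id=.*|broker.id=${broker_id}|" \
        -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://${broker_ip}:9092|" \
        "$file" > "${file}.new" && mv "${file}.new" "$file"
}

# Example use on the second broker:
#   set_broker_identity /apps/kafka/config/server.properties 2 172.16.88.177
```

Writing to a temporary file and moving it into place avoids relying on sed -i, whose syntax differs between platforms.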

4.7 Start Kafka

    /apps/kafka/bin/kafka-server-start.sh -daemon /apps/kafka/config/server.properties

Check that the Kafka port (9092) is listening on each node:

    [root@easzlab-zk-kafka-01 ~]# netstat -ntlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 20958/sshd: root@pt
    tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 675/systemd-resolve
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 835/sshd: /usr/sbin
    tcp6 0 0 ::1:6010 :::* LISTEN 20958/sshd: root@pt
    tcp6 0 0 :::41563 :::* LISTEN 6576/java
    tcp6 0 0 172.16.88.176:9092 :::* LISTEN 20765/java
    tcp6 0 0 :::2181 :::* LISTEN 6576/java
    tcp6 0 0 :::33961 :::* LISTEN 20765/java
    tcp6 0 0 172.16.88.176:3888 :::* LISTEN 6576/java
    tcp6 0 0 :::8080 :::* LISTEN 6576/java
    tcp6 0 0 :::22 :::* LISTEN 835/sshd: /usr/sbin

    [root@easzlab-zk-kafka-02 ~]# netstat -tnlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 667/systemd-resolve
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 812/sshd: /usr/sbin
    tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 20448/sshd: root@pt
    tcp6 0 0 172.16.88.177:3888 :::* LISTEN 6436/java
    tcp6 0 0 :::8080 :::* LISTEN 6436/java
    tcp6 0 0 :::36533 :::* LISTEN 20283/java
    tcp6 0 0 :::22 :::* LISTEN 812/sshd: /usr/sbin
    tcp6 0 0 ::1:6010 :::* LISTEN 20448/sshd: root@pt
    tcp6 0 0 172.16.88.177:9092 :::* LISTEN 20283/java
    tcp6 0 0 :::2181 :::* LISTEN 6436/java
    tcp6 0 0 172.16.88.177:2888 :::* LISTEN 6436/java
    tcp6 0 0 :::41259 :::* LISTEN 6436/java

    [root@easzlab-zk-kafka-03 ~]# netstat -tnlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 670/systemd-resolve
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 827/sshd: /usr/sbin
    tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 20715/sshd: root@pt
    tcp6 0 0 :::44271 :::* LISTEN 6336/java
    tcp6 0 0 172.16.88.178:3888 :::* LISTEN 6336/java
    tcp6 0 0 :::8080 :::* LISTEN 6336/java
    tcp6 0 0 :::35571 :::* LISTEN 20129/java
    tcp6 0 0 :::22 :::* LISTEN 827/sshd: /usr/sbin
    tcp6 0 0 ::1:6010 :::* LISTEN 20715/sshd: root@pt
    tcp6 0 0 172.16.88.178:9092 :::* LISTEN 20129/java
    tcp6 0 0 :::2181 :::* LISTEN 6336/java
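Scanning the full netstat listing by eye is error-prone, so the check can be narrowed to the one port of interest. The sketch below reads netstat -ntl style output from stdin and succeeds only if the given port is in LISTEN state; is_listening is a hypothetical helper name, and it assumes the local address is the fourth column, as in the listings in this section.

```shell
#!/bin/sh
# Succeed (exit 0) if the given port appears as a LISTEN socket in
# `netstat -ntl` style output read from stdin, otherwise exit 1.
# is_listening is a hypothetical helper name.
is_listening() {
    port=$1
    awk -v port="$port" '
        /LISTEN/ {
            # Column 4 is the local address, e.g. 172.16.88.176:9092 or :::2181;
            # the port is whatever follows the last colon.
            n = split($4, a, ":")
            if (a[n] == port) found = 1
        }
        END { exit (found ? 0 : 1) }'
}

# Example use on a broker:
#   netstat -ntl | is_listening 9092 && echo "Kafka is listening on 9092"
```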

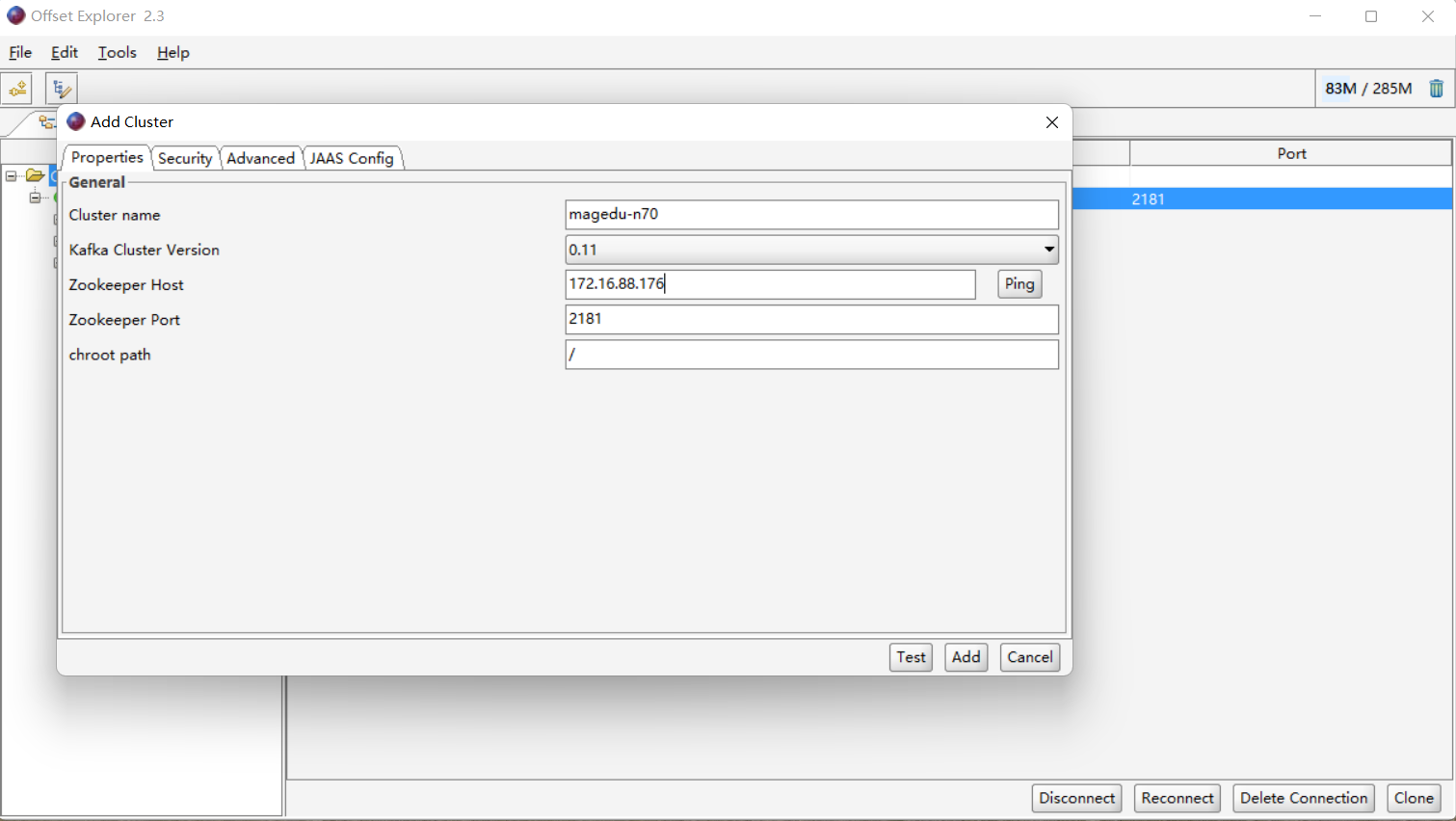

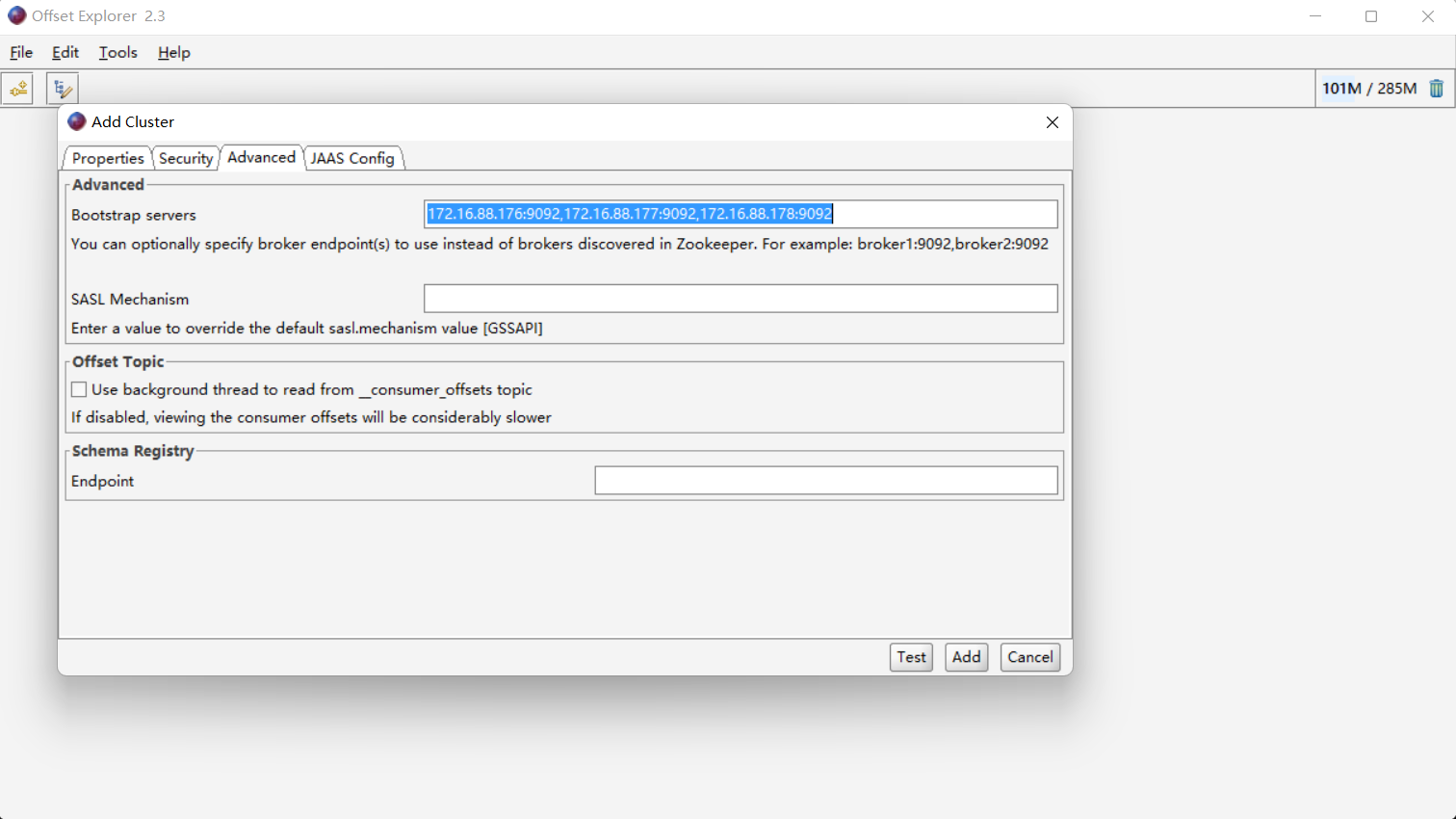

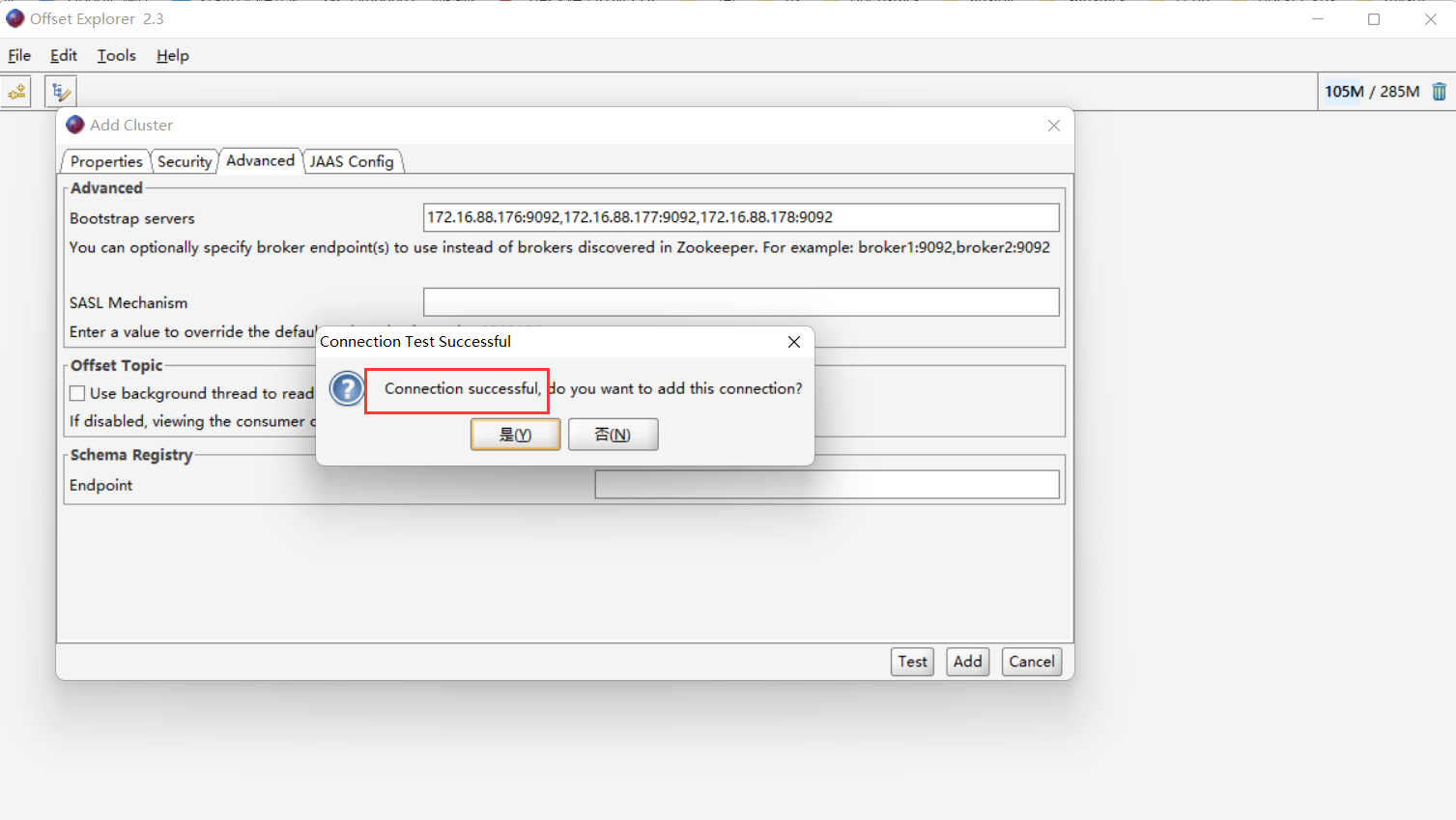

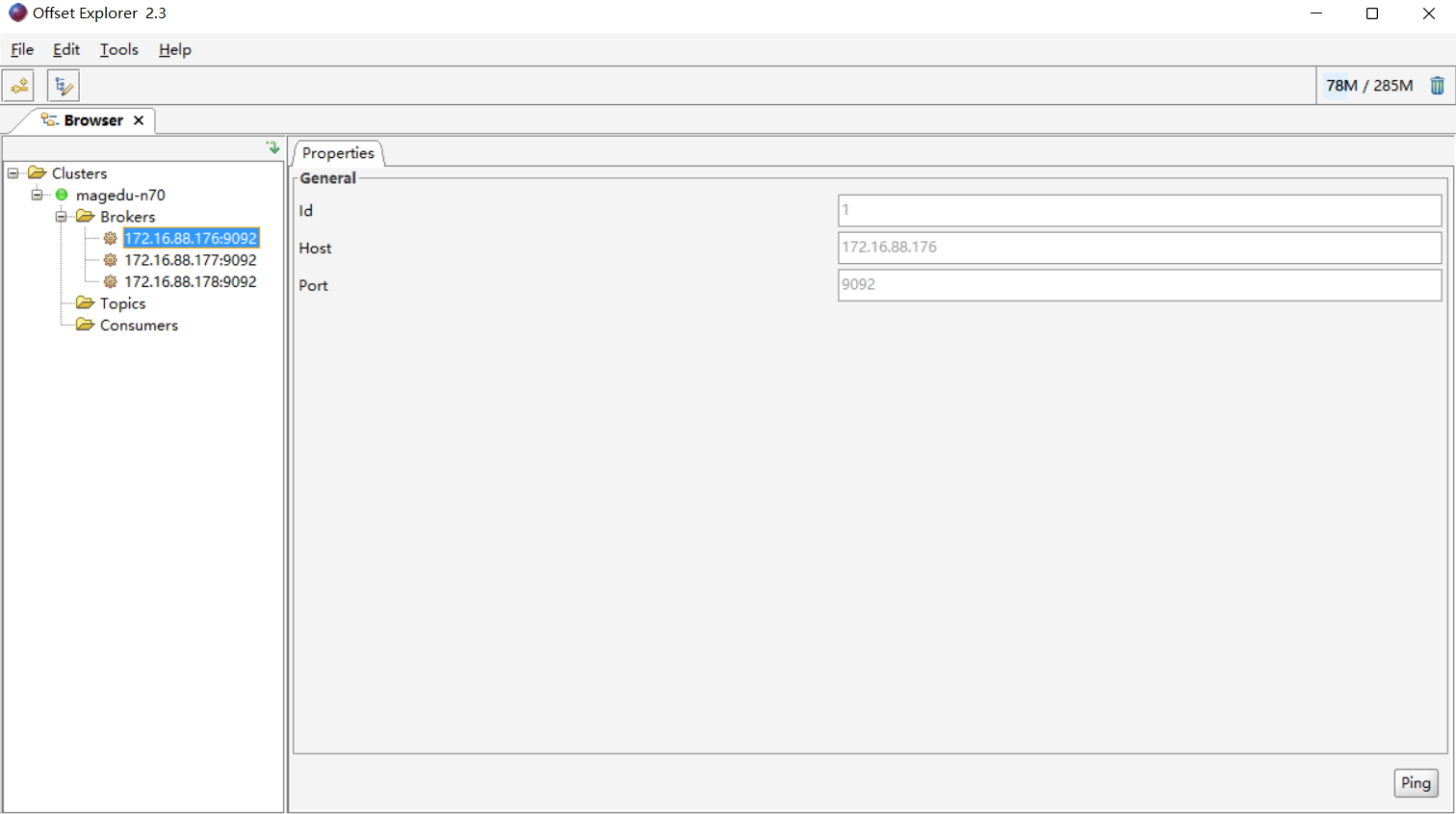

4.8 Download and install a Kafka client tool

    wget https://www.kafkatool.com/download2/offsetexplorer_64bit.exe

Configure the client tool and connect it to the Kafka cluster.

5. Preparing the Logstash Image


5.1 Prepare the Logstash image files

    [root@easzlab-images-02 1.logstash-image-Dockerfile]# ls
    build-commond.sh  Dockerfile  logstash.conf  logstash.yml
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat Dockerfile
    FROM logstash:7.12.1
    USER root
    WORKDIR /usr/share/logstash
    ADD logstash.yml /usr/share/logstash/config/logstash.yml
    ADD logstash.conf /usr/share/logstash/pipeline/logstash.conf
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat logstash.conf
    input {
      file {
        #path => "/var/lib/docker/containers/*/*-json.log" #docker
        path => "/var/log/pods/*/*/*.log"
        start_position => "beginning"
        type => "jsonfile-daemonset-applog"
      }
      file {
        path => "/var/log/*.log"
        start_position => "beginning"
        type => "jsonfile-daemonset-syslog"
      }
    }
    output {
      if [type] == "jsonfile-daemonset-applog" {
        kafka {
          bootstrap_servers => "${KAFKA_SERVER}"
          topic_id => "${TOPIC_ID}"
          batch_size => 16384  # batch size, in bytes, for each send to Kafka
          codec => "${CODEC}"
        }
      }
      if [type] == "jsonfile-daemonset-syslog" {
        kafka {
          bootstrap_servers => "${KAFKA_SERVER}"
          topic_id => "${TOPIC_ID}"
          batch_size => 16384
          codec => "${CODEC}"  # system logs are not in JSON format
        }
      }
    }
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat logstash.yml
    http.host: "0.0.0.0"
    #xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat build-commond.sh
    #!/bin/bash
    nerdctl build -t harbor.magedu.net/baseimages/logstash:v7.12.1-json-file-log-v1 .
    nerdctl push harbor.magedu.net/baseimages/logstash:v7.12.1-json-file-log-v1

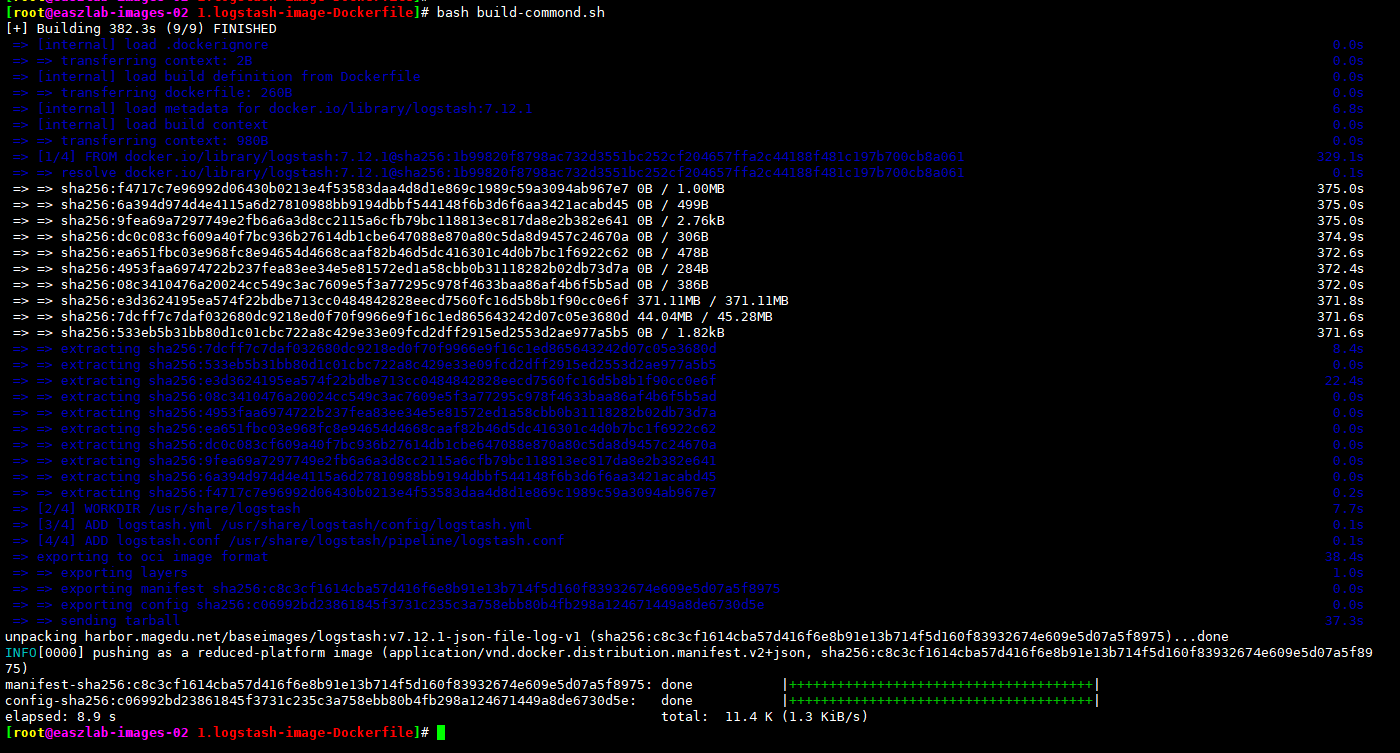

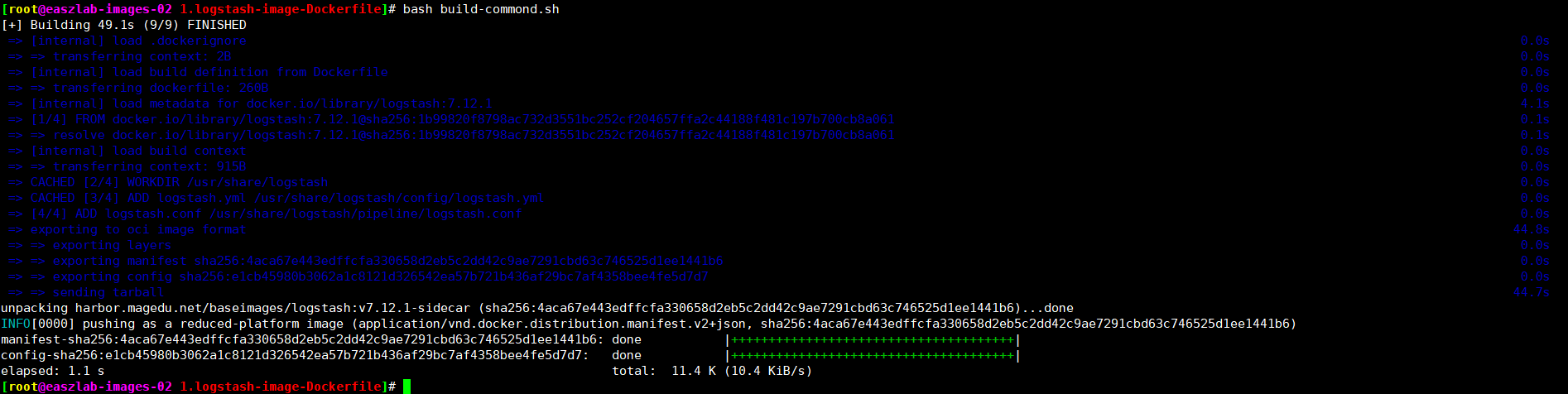

5.2 Build the Logstash image

5.5 Prepare the Logstash DaemonSet manifest

    cat 2.DaemonSet-logstash.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: logstash-elasticsearch
      namespace: kube-system
      labels:
        k8s-app: logstash-logging
    spec:
      selector:
        matchLabels:
          name: logstash-elasticsearch
      template:
        metadata:
          labels:
            name: logstash-elasticsearch
        spec:
          tolerations:
          # this toleration is to have the daemonset runnable on master nodes
          # remove it if your masters can't run pods
          - key: node-role.kubernetes.io/master
            operator: Exists
            effect: NoSchedule
          containers:
          - name: logstash-elasticsearch
            image: harbor.magedu.net/baseimages/logstash:v7.12.1-json-file-log-v1
            env:
            - name: "KAFKA_SERVER"
              value: "172.16.88.176:9092,172.16.88.177:9092,172.16.88.178:9092"
            - name: "TOPIC_ID"
              value: "jsonfile-log-topic"
            - name: "CODEC"
              value: "json"
    #        resources:
    #          limits:
    #            cpu: 1000m
    #            memory: 1024Mi
    #          requests:
    #            cpu: 500m
    #            memory: 1024Mi
            volumeMounts:
            - name: varlog                    # volume for the host's system logs
              mountPath: /var/log             # mount point for the host's system logs
            - name: varlibdockercontainers    # volume for container logs; must match the collection path in logstash.conf
              #mountPath: /var/lib/docker/containers   # mount path when using docker
              mountPath: /var/log/pods        # mount path when using containerd; must match the Logstash collection path
              readOnly: false
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log                  # system logs on the host
          - name: varlibdockercontainers
            hostPath:
              #path: /var/lib/docker/containers   # container log path on the host when using docker
              path: /var/log/pods             # container log path on the host when using containerd

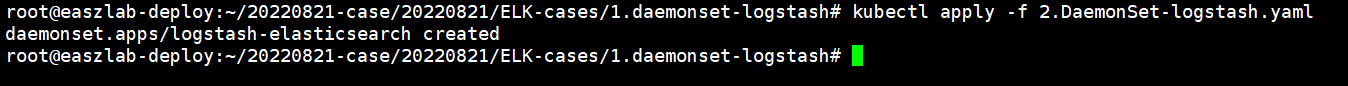

5.6 Deploy Logstash

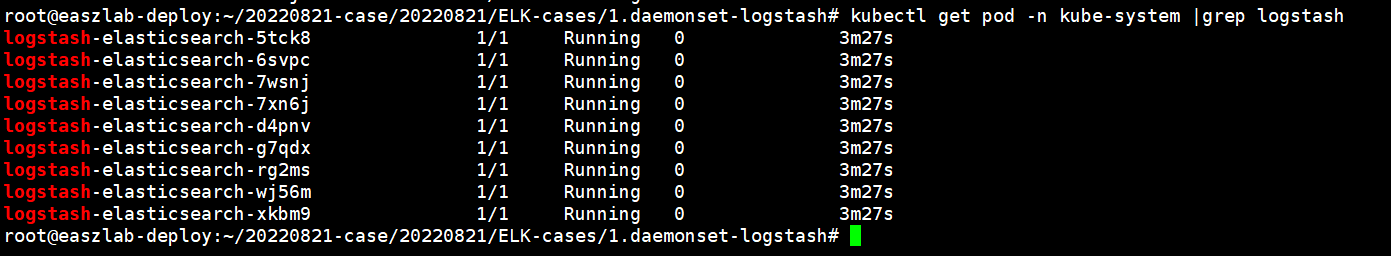

5.7 Verify the Kafka cluster with the client tool

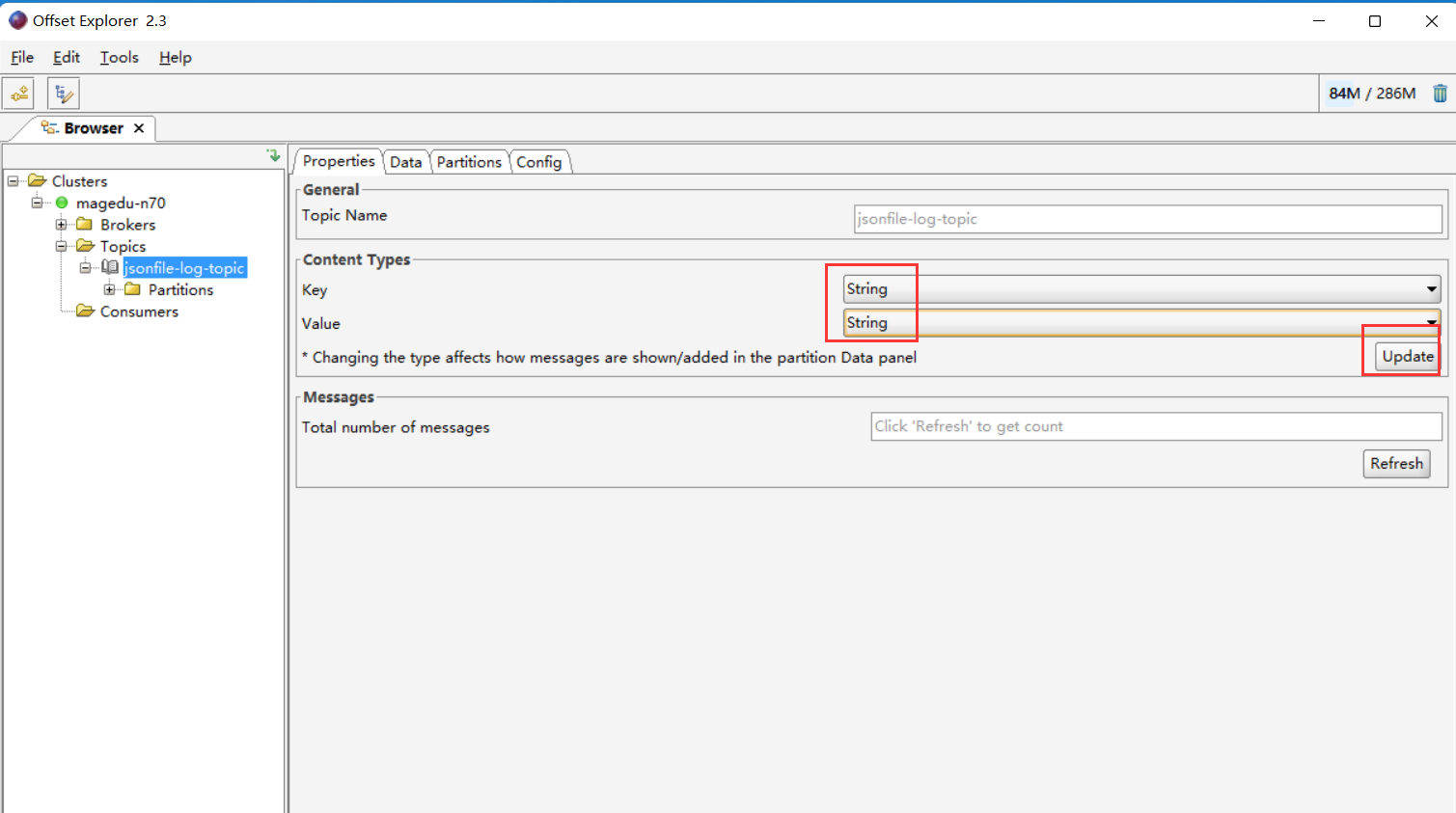

In the client tool, set the data display type to String.

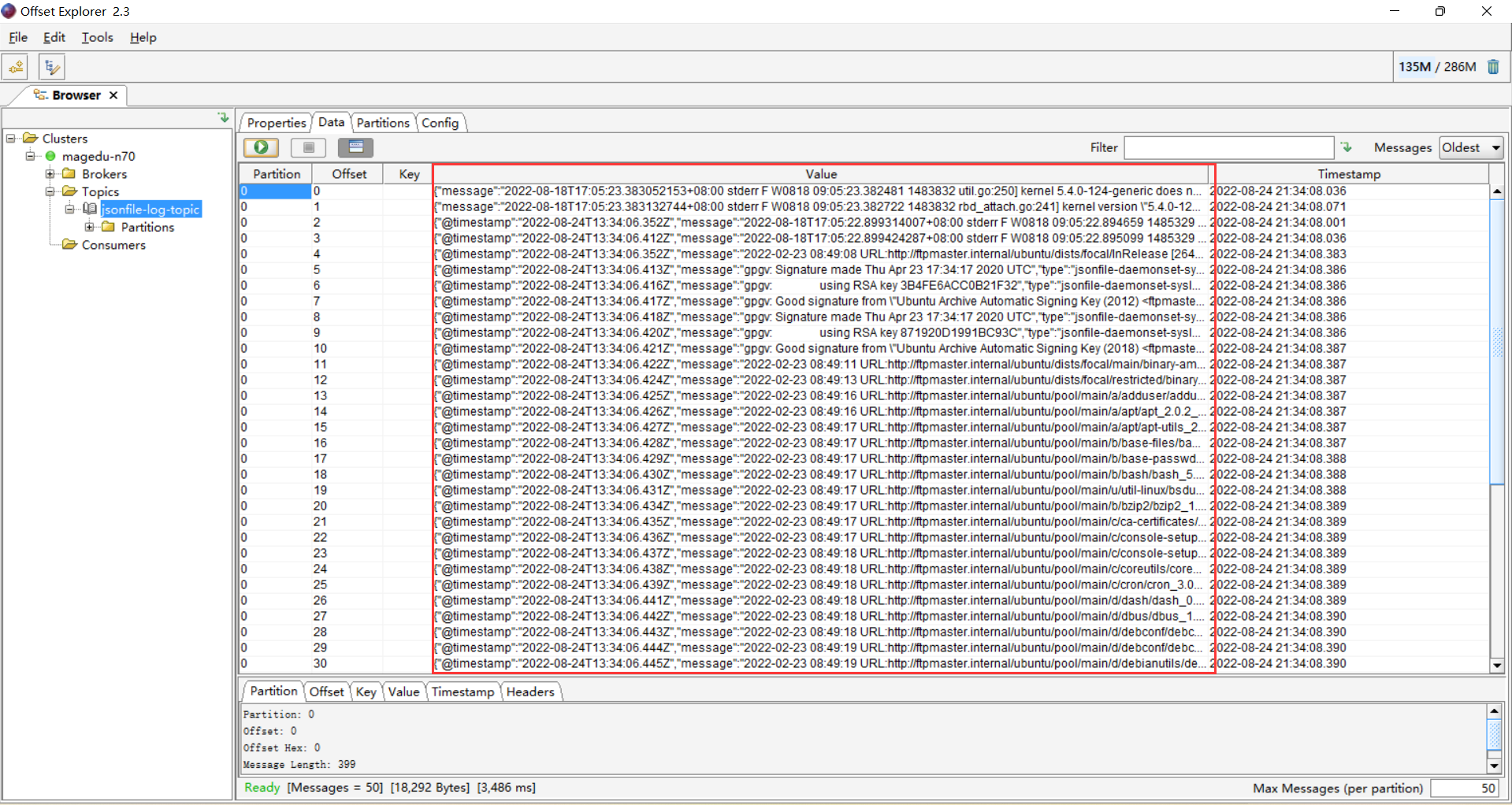

Viewing the data again, it now displays correctly.
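The same verification can also be done without a GUI, using the command-line tools that ship with Kafka under /apps/kafka/bin. The sketch below wraps two of them; list_topics and peek_topic are hypothetical helper names, and KAFKA_HOME and BOOTSTRAP default to the paths and brokers used in this document.

```shell
#!/bin/sh
# Defaults taken from this document; override via the environment if needed.
KAFKA_HOME=${KAFKA_HOME:-/apps/kafka}
BOOTSTRAP=${BOOTSTRAP:-172.16.88.176:9092,172.16.88.177:9092,172.16.88.178:9092}

# List all topics known to the cluster (hypothetical helper name).
list_topics() {
    "$KAFKA_HOME/bin/kafka-topics.sh" --bootstrap-server "$BOOTSTRAP" --list
}

# Print the first N messages (default 5) of a topic, then exit
# (hypothetical helper name).
peek_topic() {
    topic=$1
    count=${2:-5}
    "$KAFKA_HOME/bin/kafka-console-consumer.sh" --bootstrap-server "$BOOTSTRAP" \
        --topic "$topic" --from-beginning --max-messages "$count"
}

# Example use on any Kafka node:
#   list_topics
#   peek_topic jsonfile-log-topic 3
```

If jsonfile-log-topic is listed and peek_topic prints JSON events, the DaemonSet is delivering logs to Kafka.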

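6. Deploying the Logstash Relay Node

Install Logstash on a virtual machine and have it forward the collected data to the Elasticsearch cluster.

6.1 Download and install

Download the package:

    wget https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/apt/pool/main/l/logstash/logstash-7.12.1-amd64.deb

Install OpenJDK:

    apt update && apt-get install openjdk-8-jdk -y

Install the Logstash package:

    dpkg -i logstash-7.12.1-amd64.deb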

6.2 Configure the Logstash pipeline file

    cat logsatsh-daemonset-jsonfile-kafka-to-es.conf

    input {
      kafka {
        bootstrap_servers => "172.16.88.176:9092,172.16.88.177:9092,172.16.88.178:9092"
        topics => ["jsonfile-log-topic"]
        codec => "json"
      }
    }
    output {
      #if [fields][type] == "app1-access-log" {
      if [type] == "jsonfile-daemonset-applog" {
        elasticsearch {
          hosts => ["172.16.88.173:9200","172.16.88.174:9200"]
          index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "jsonfile-daemonset-syslog" {
        elasticsearch {
          hosts => ["172.16.88.173:9200","172.16.88.174:9200"]
          index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
        }
      }
    }
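Before starting the service, the pipeline file can be syntax-checked with Logstash's --config.test_and_exit option and only then copied into place. This is a sketch under the assumption that the Debian package layout is in use (binary under /usr/share/logstash/bin, pipelines loaded from /etc/logstash/conf.d); install_pipeline is a hypothetical helper name.

```shell
#!/bin/sh
# Paths assume the layout of the Logstash .deb package; override if yours differs.
LOGSTASH_BIN=${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}
CONF_DIR=${CONF_DIR:-/etc/logstash/conf.d}

# Validate a pipeline file and copy it into conf.d only if it parses.
# install_pipeline is a hypothetical helper name.
install_pipeline() {
    conf=$1
    "$LOGSTASH_BIN" --path.settings /etc/logstash --config.test_and_exit -f "$conf" || return 1
    cp "$conf" "$CONF_DIR/"
}

# Example use on the Logstash node:
#   install_pipeline logsatsh-daemonset-jsonfile-kafka-to-es.conf
```

A non-zero return means the file failed validation and nothing was copied, so a broken pipeline never reaches the running service.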

6.3 Start the Logstash service

systemctl enable --now logstash.service

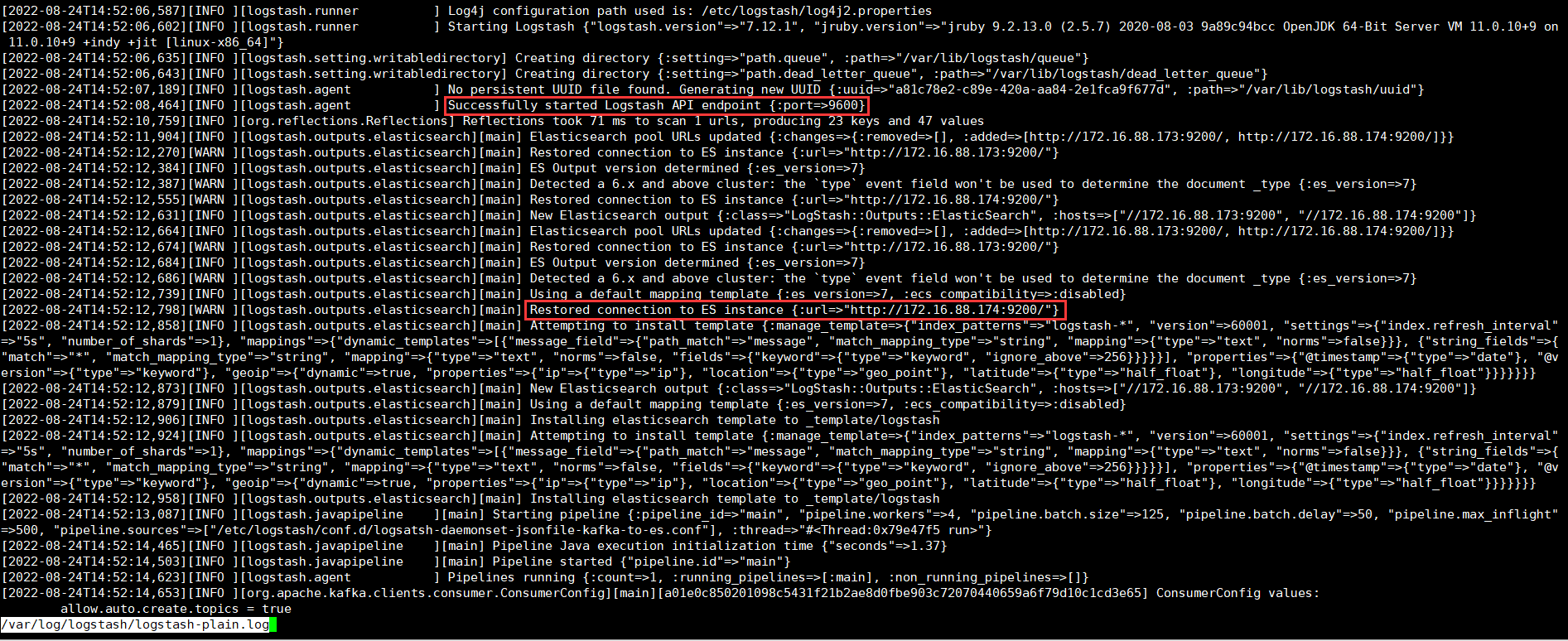

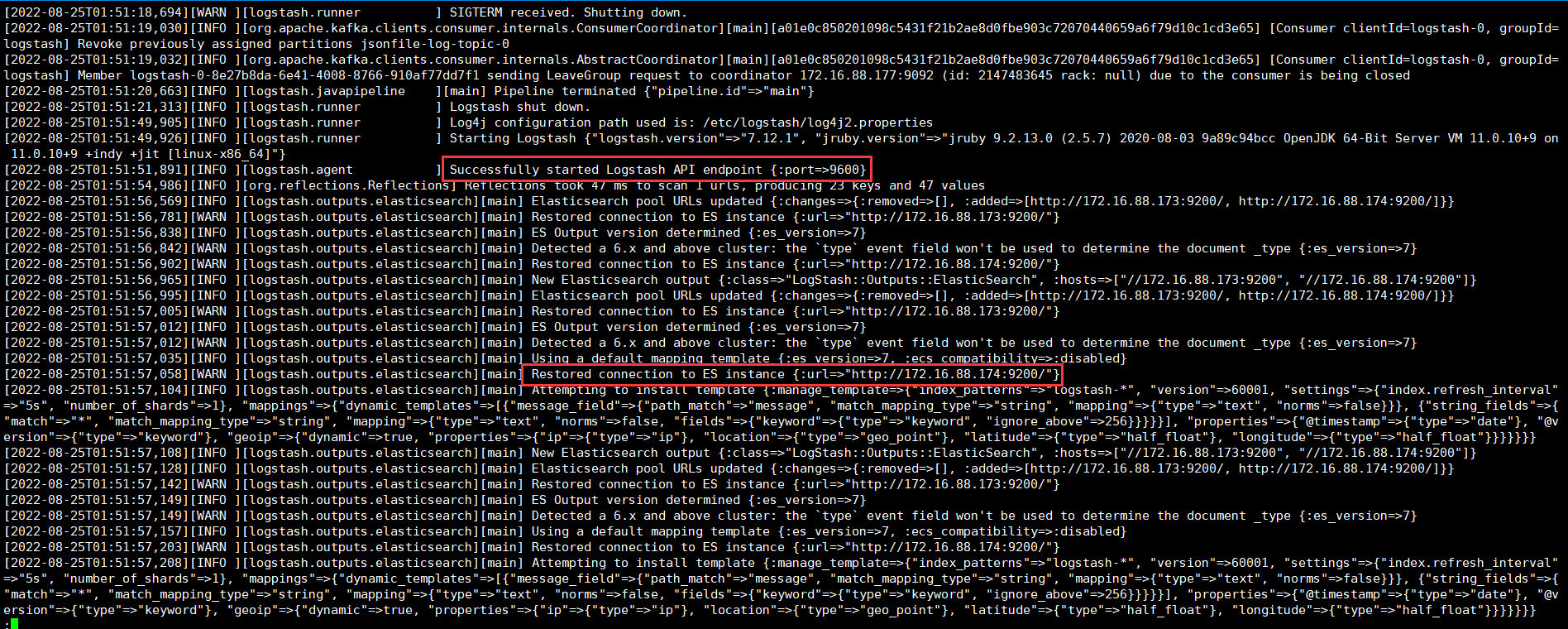

Check the log:

    less /var/log/logstash/logstash-plain.log

If it shows Successfully started Logstash API endpoint {:port=>9600}, Logstash has started successfully.

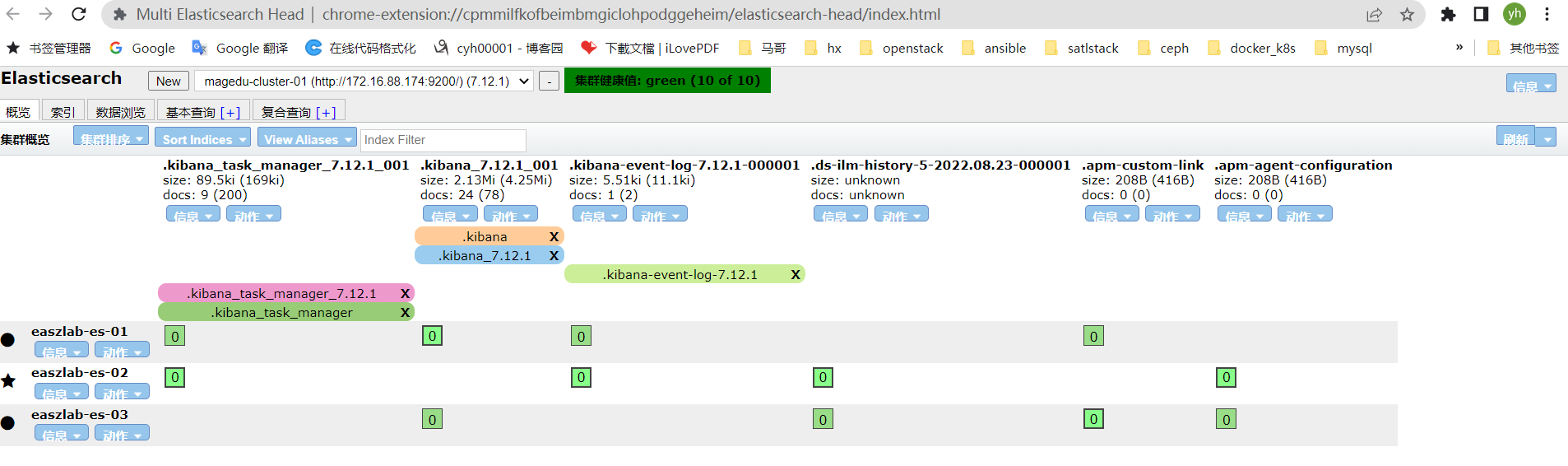

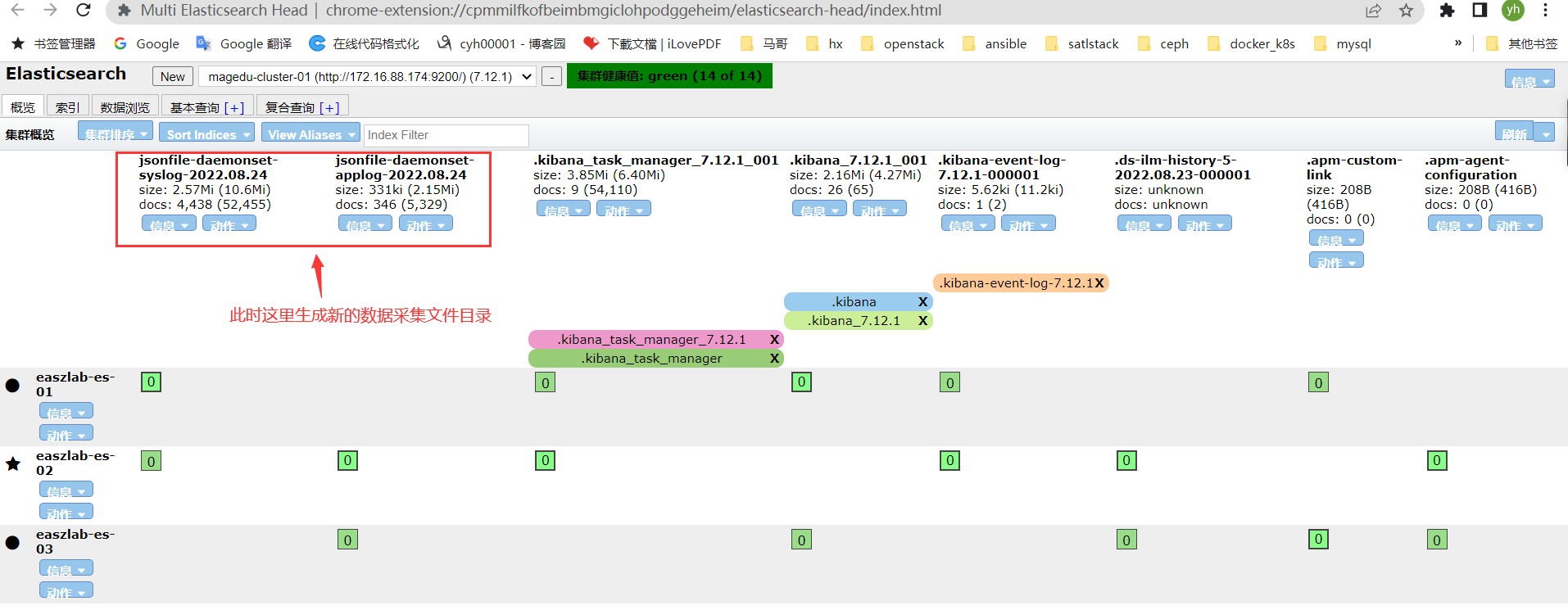

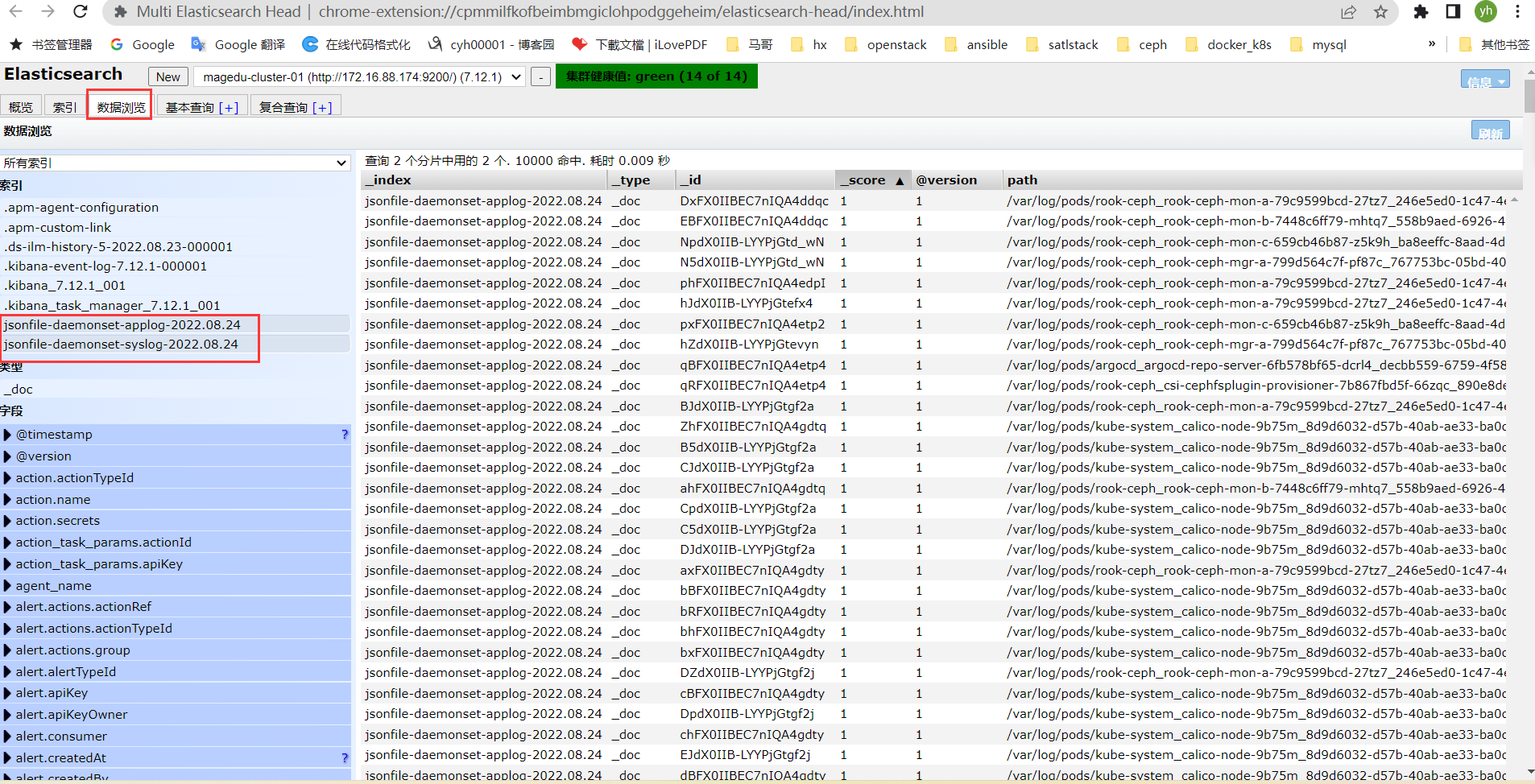

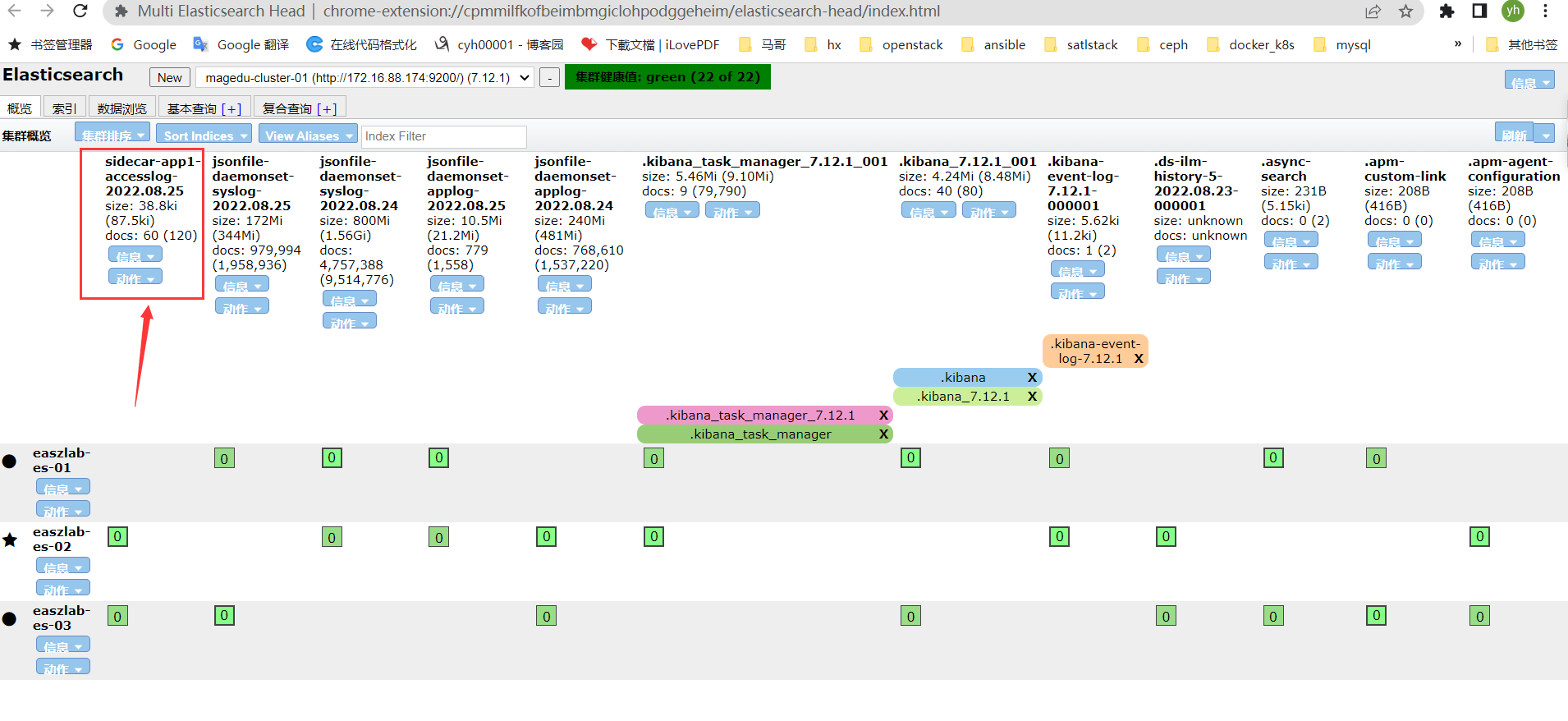

Check whether data has arrived in the Elasticsearch cluster and whether it is the data we collected.
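A command-line alternative for this check is the _cat/indices API, which lists every index in the cluster. The sketch below keeps only the indices that the two pipeline outputs are expected to create; daemonset_indices is a hypothetical helper name, and the pattern follows the index names configured in 6.2.

```shell
#!/bin/sh
# From `_cat/indices?h=index` output on stdin, keep only the daily indices
# written by the applog and syslog outputs, sorted by name.
# daemonset_indices is a hypothetical helper name.
daemonset_indices() {
    awk '{ print $1 }' \
        | grep -E '^jsonfile-daemonset-(applog|syslog)-[0-9]{4}\.[0-9]{2}\.[0-9]{2}$' \
        | sort
}

# Example use (needs network access to the cluster):
#   curl -s 'http://172.16.88.173:9200/_cat/indices?h=index' | daemonset_indices
```

An empty result suggests that no events have been indexed yet, in which case the Logstash log and the Kafka topic are the next places to look.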

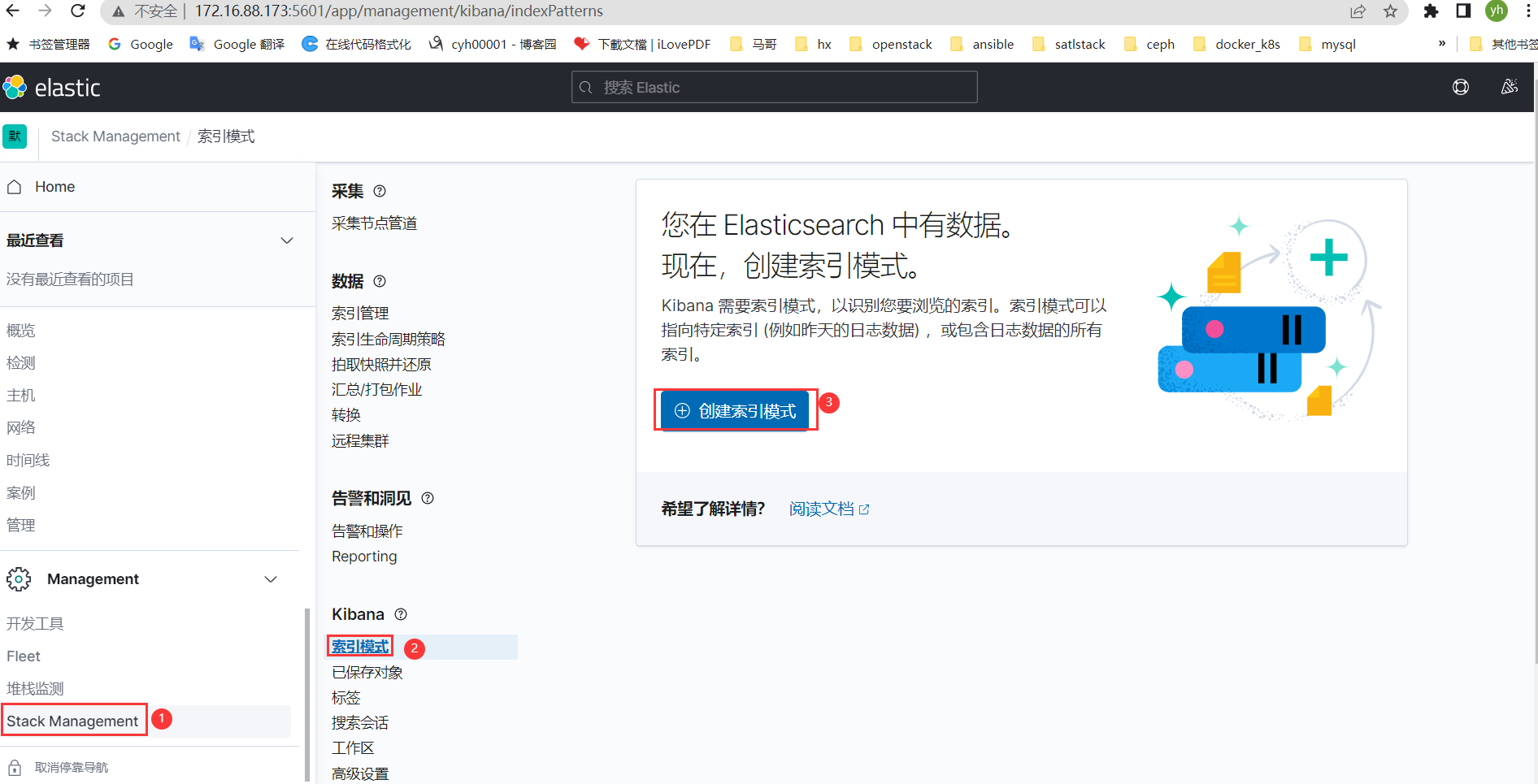

6.4 Configure log display in Kibana

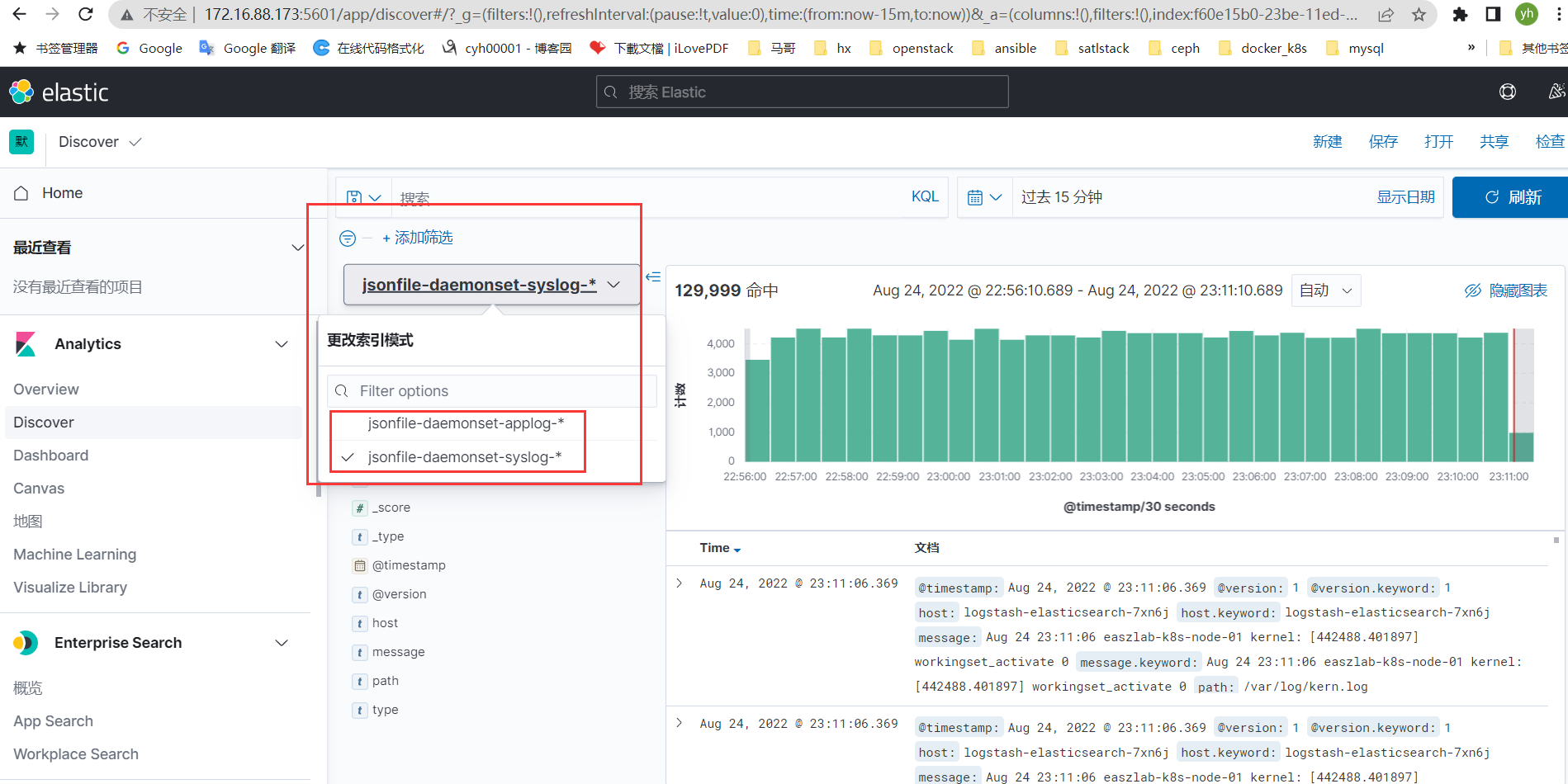

Add jsonfile-daemonset-syslog in the same way.

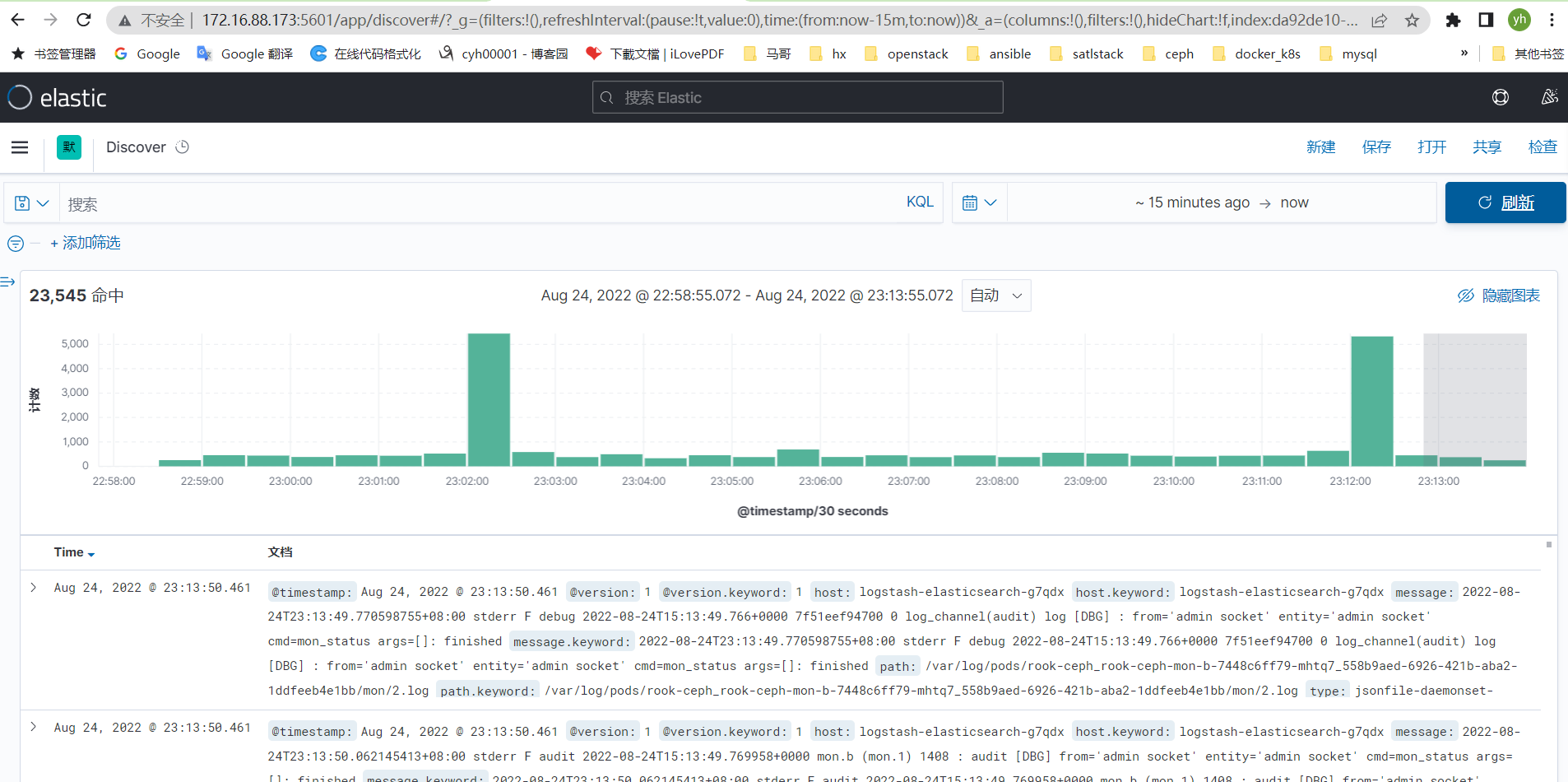

At this point the logs are being collected.

7. Log Collection with the Sidecar Pattern

7.1 Prepare the build files for the logstash-sidecar container

    [root@easzlab-images-02 1.logstash-image-Dockerfile]# ls
    build-commond.sh  Dockerfile  logstash.conf  logstash.yml
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat build-commond.sh
    #!/bin/bash
    nerdctl build -t harbor.magedu.net/baseimages/logstash:v7.12.1-sidecar .
    nerdctl push harbor.magedu.net/baseimages/logstash:v7.12.1-sidecar
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat Dockerfile
    FROM logstash:7.12.1
    USER root
    WORKDIR /usr/share/logstash
    #RUN rm -rf config/logstash-sample.conf
    ADD logstash.yml /usr/share/logstash/config/logstash.yml
    ADD logstash.conf /usr/share/logstash/pipeline/logstash.conf
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat logstash.conf
    input {
      file {
        path => "/var/log/applog/catalina.out"
        start_position => "beginning"
        type => "app1-sidecar-catalina-log"
      }
      file {
        path => "/var/log/applog/localhost_access_log.*.txt"
        start_position => "beginning"
        type => "app1-sidecar-access-log"
      }
    }
    output {
      if [type] == "app1-sidecar-catalina-log" {
        kafka {
          bootstrap_servers => "${KAFKA_SERVER}"
          topic_id => "${TOPIC_ID}"
          batch_size => 16384  # batch size, in bytes, for each send to Kafka
          codec => "${CODEC}"
        }
      }
      if [type] == "app1-sidecar-access-log" {
        kafka {
          bootstrap_servers => "${KAFKA_SERVER}"
          topic_id => "${TOPIC_ID}"
          batch_size => 16384
          codec => "${CODEC}"
        }
      }
    }
    [root@easzlab-images-02 1.logstash-image-Dockerfile]# cat logstash.yml
    http.host: "0.0.0.0"
    #xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

7.2 Build the image

7.3 Prepare the test pod manifests

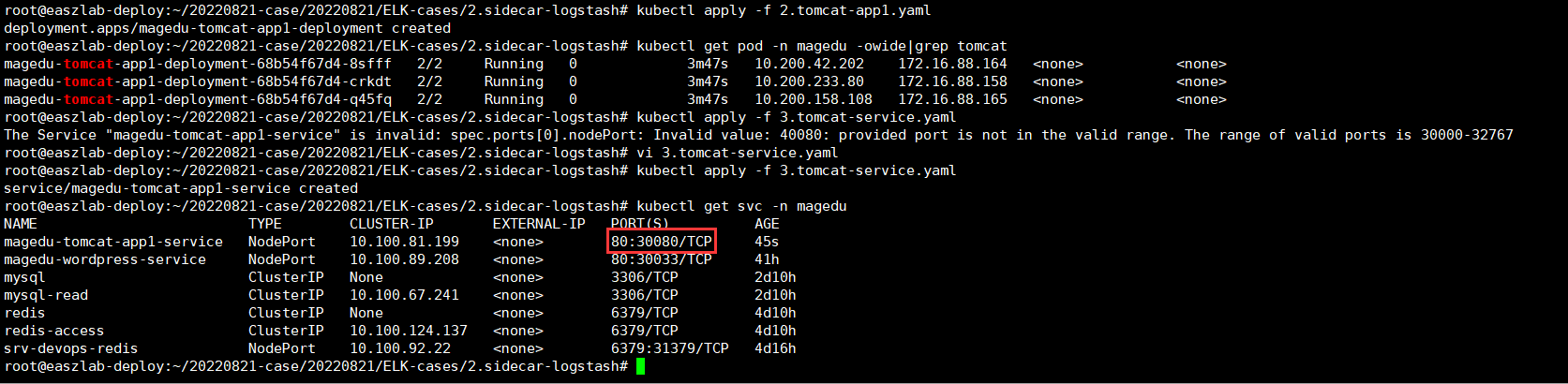

    #cat 2.tomcat-app1.yaml
    kind: Deployment
    #apiVersion: extensions/v1beta1
    apiVersion: apps/v1
    metadata:
      labels:
        app: magedu-tomcat-app1-deployment-label
      name: magedu-tomcat-app1-deployment   # name of this deployment
      namespace: magedu
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: magedu-tomcat-app1-selector
      template:
        metadata:
          labels:
            app: magedu-tomcat-app1-selector
        spec:
          containers:
          - name: sidecar-container
            image: harbor.magedu.net/baseimages/logstash:v7.12.1-sidecar
            imagePullPolicy: IfNotPresent
            #imagePullPolicy: Always
            env:
            - name: "KAFKA_SERVER"
              value: "172.16.88.176:9092,172.16.88.177:9092,172.16.88.178:9092"
            - name: "TOPIC_ID"
              value: "tomcat-app1-topic"
            - name: "CODEC"
              value: "json"
            volumeMounts:
            - name: applogs
              mountPath: /var/log/applog
          - name: magedu-tomcat-app1-container
            image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/tomcat-app1:v1
            imagePullPolicy: IfNotPresent
            #imagePullPolicy: Always
            ports:
            - containerPort: 8080
              protocol: TCP
              name: http
            env:
            - name: "password"
              value: "123456"
            - name: "age"
              value: "18"
            resources:
              limits:
                cpu: 1
                memory: "512Mi"
              requests:
                cpu: 500m
                memory: "512Mi"
            volumeMounts:
            - name: applogs
              mountPath: /apps/tomcat/logs
            startupProbe:
              httpGet:
                path: /myapp/index.html
                port: 8080
              initialDelaySeconds: 5   # delay the first probe by 5s
              failureThreshold: 3      # consecutive failures before the probe is marked failed
              periodSeconds: 3         # interval between probes
            readinessProbe:
              httpGet:
                #path: /monitor/monitor.html
                path: /myapp/index.html
                port: 8080
              initialDelaySeconds: 5
              periodSeconds: 3
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 3
            livenessProbe:
              httpGet:
                #path: /monitor/monitor.html
                path: /myapp/index.html
                port: 8080
              initialDelaySeconds: 5
              periodSeconds: 3
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 3
          volumes:
          - name: applogs   # emptyDir shared by the application container and the sidecar, so the sidecar can collect the application's logs
            emptyDir: {}

    #cat 3.tomcat-service.yaml
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: magedu-tomcat-app1-service-label
      name: magedu-tomcat-app1-service
      namespace: magedu
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 8080
        nodePort: 40080
      selector:
        app: magedu-tomcat-app1-selector

7.3 Deploy the Tomcat pods with the sidecar

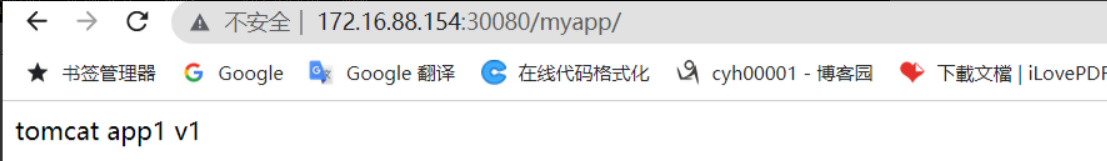

7.4 Verify that the pods are reachable

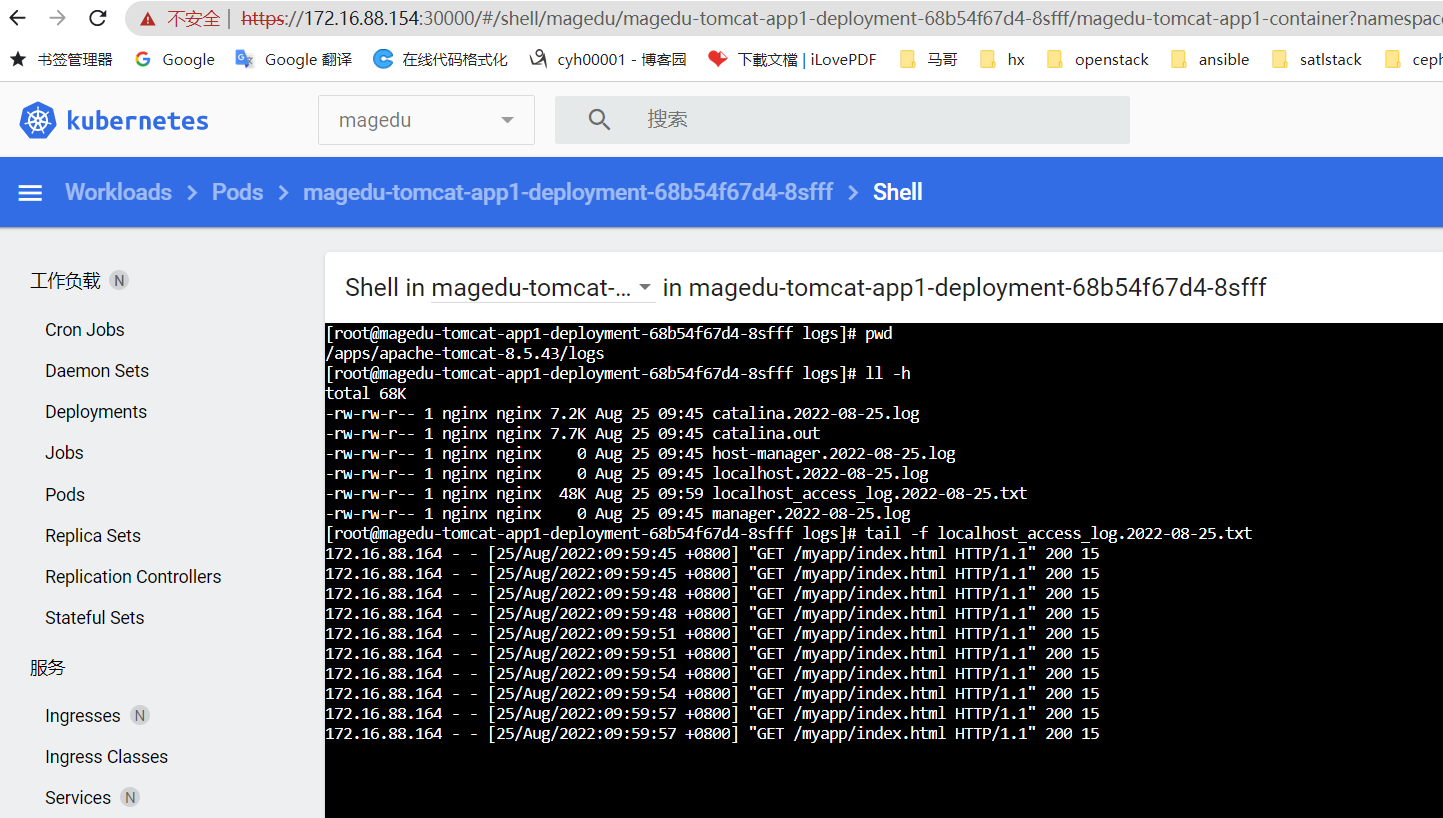

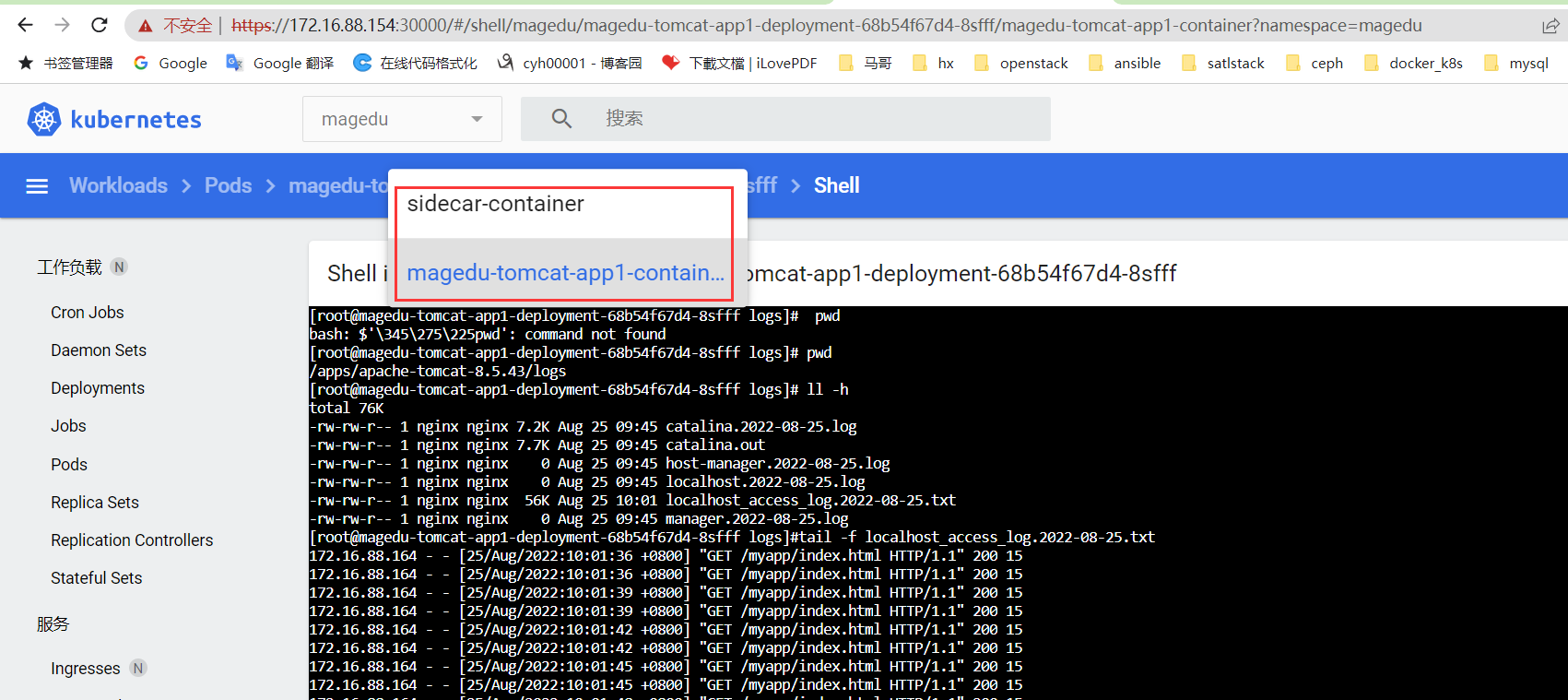

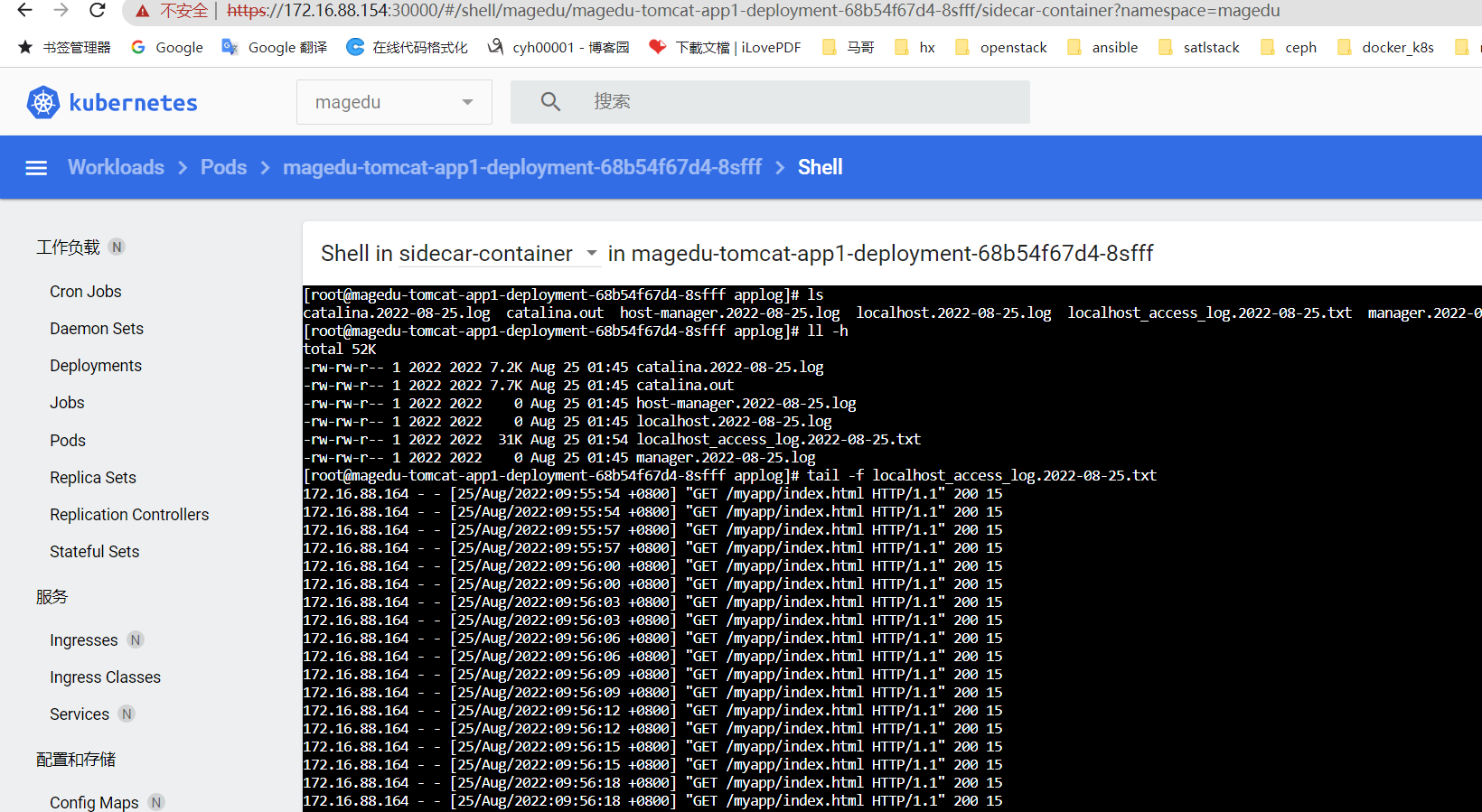

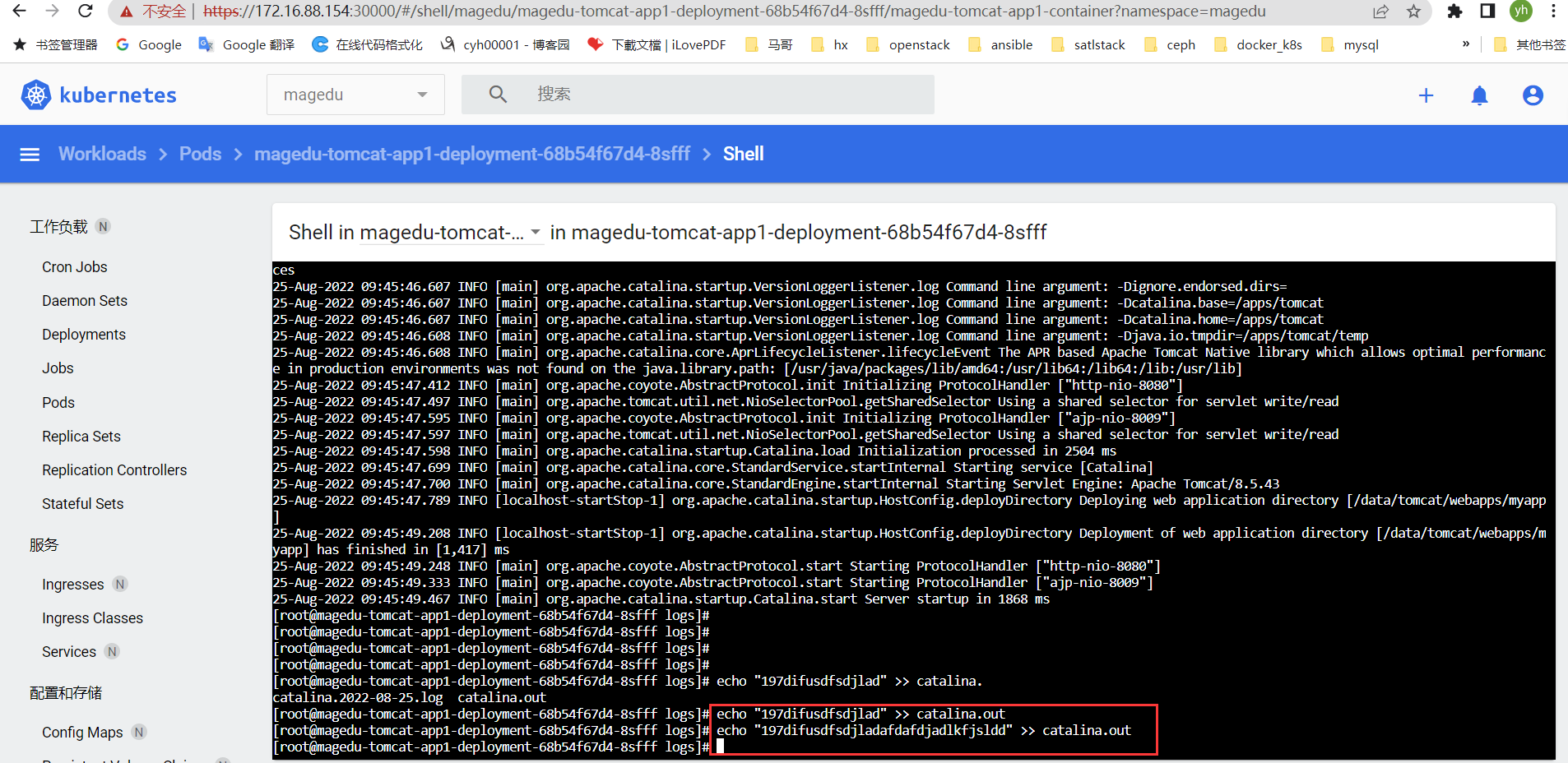

Check the container logs and whether the sidecar container has collected them.

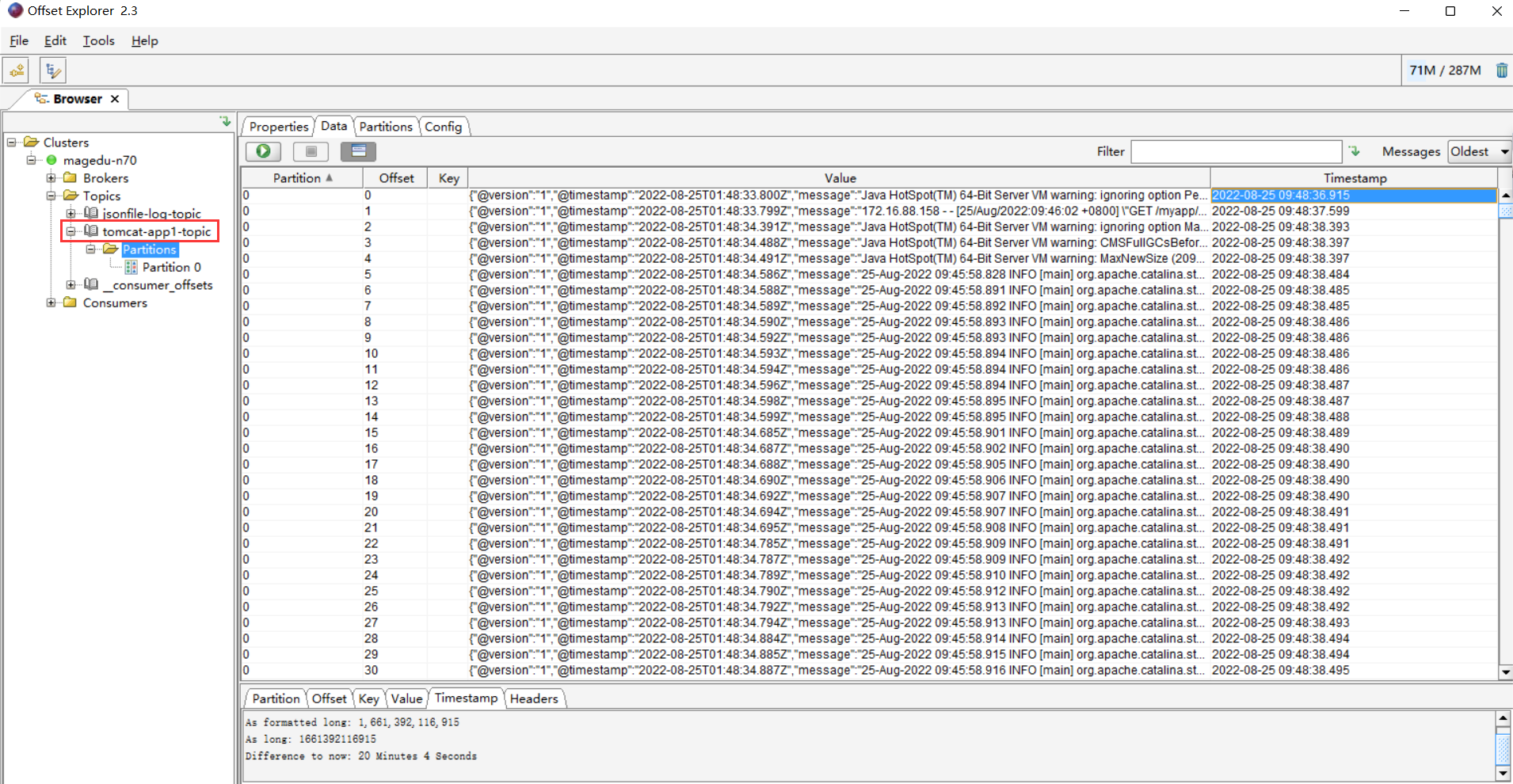

The Kafka cluster has now received the corresponding logs as well.

7.5 Configure the Logstash node to forward the sidecar logs

    cat logsatsh-sidecar-kafka-to-es.conf

    input {
      kafka {
        bootstrap_servers => "172.16.88.176:9092,172.16.88.177:9092,172.16.88.178:9092"
        topics => ["tomcat-app1-topic"]
        codec => "json"
      }
    }
    output {
      #if [fields][type] == "app1-access-log" {
      if [type] == "app1-sidecar-access-log" {
        elasticsearch {
          hosts => ["172.16.88.173:9200","172.16.88.174:9200"]
          index => "sidecar-app1-accesslog-%{+YYYY.MM.dd}"
        }
      }
      #if [fields][type] == "app1-catalina-log" {
      if [type] == "app1-sidecar-catalina-log" {
        elasticsearch {
          hosts => ["172.16.88.173:9200","172.16.88.174:9200"]
          index => "sidecar-app1-catalinalog-%{+YYYY.MM.dd}"
        }
      }
      # stdout {
      #   codec => rubydebug
      # }
    }

Restart Logstash and check the log to confirm that it started normally:

    systemctl restart logstash.service
    less /var/log/logstash/logstash-plain.log

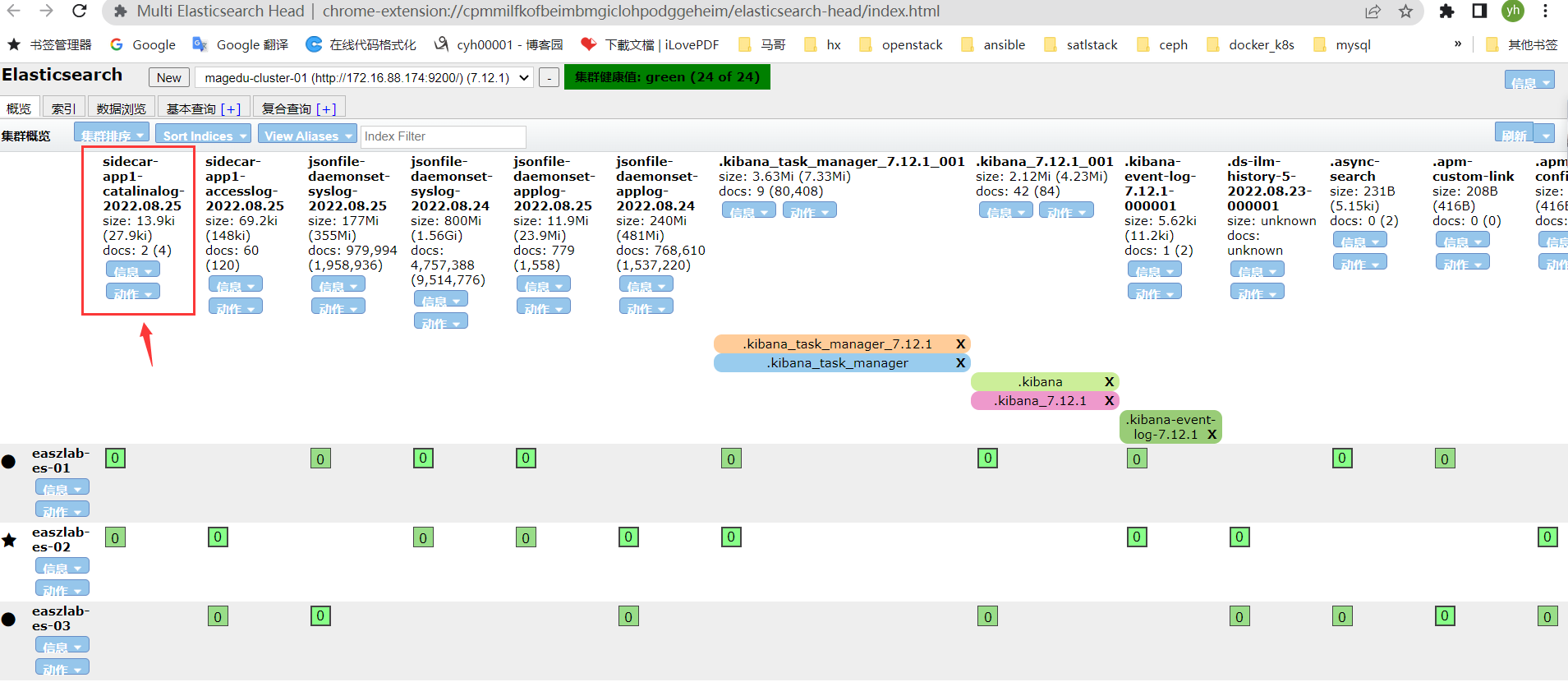

Check whether new indices have been created in the Elasticsearch cluster.

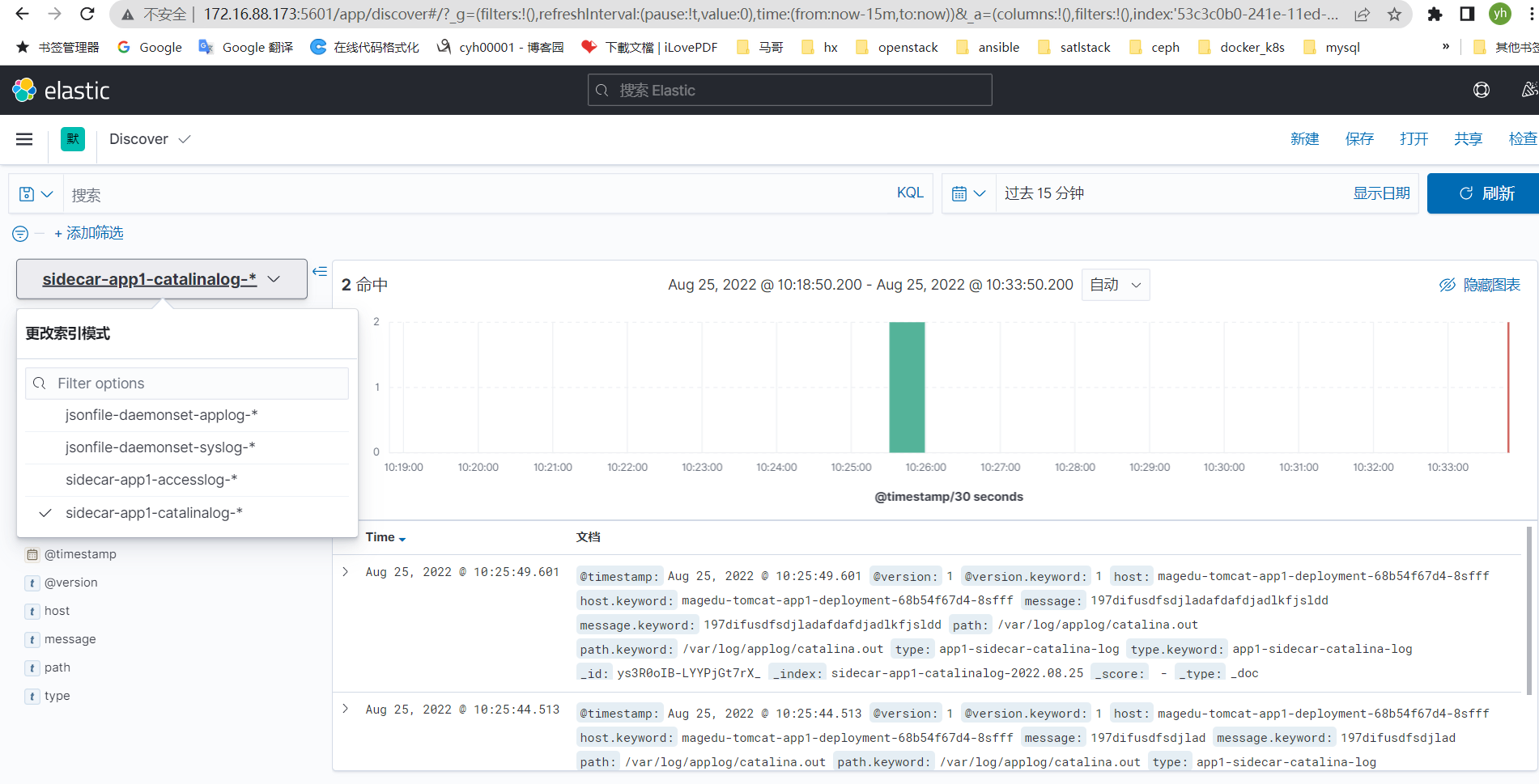

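Because no new catalina log entries have been generated, the Elasticsearch cluster has no catalina index yet. Writing a log entry by hand causes the index to be created automatically.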
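One possible way to write such an entry is to append a line to catalina.out inside the Tomcat container with kubectl exec. The sketch below assumes the namespace, deployment, and container names from the manifest in 7.3, and the Tomcat log path /apps/tomcat/logs that the container mounts; append_catalina_line is a hypothetical helper name.

```shell
#!/bin/sh
# Names taken from the Deployment manifest in this document; override if needed.
NAMESPACE=${NAMESPACE:-magedu}
DEPLOYMENT=${DEPLOYMENT:-magedu-tomcat-app1-deployment}
CONTAINER=${CONTAINER:-magedu-tomcat-app1-container}

# Append one line to catalina.out inside the Tomcat container of one pod
# of the deployment. append_catalina_line is a hypothetical helper name.
append_catalina_line() {
    message=$1
    # The message is passed as a positional argument ($1 of the inner sh)
    # so that quotes or spaces in it need no extra escaping.
    kubectl -n "$NAMESPACE" exec "deploy/$DEPLOYMENT" -c "$CONTAINER" -- \
        sh -c 'echo "$1" >> /apps/tomcat/logs/catalina.out' sh "$message"
}

# Example use from a machine with kubectl access to the cluster:
#   append_catalina_line "manual test entry for the catalina index"
```

Since the log directory is an emptyDir shared with the sidecar, the new line should be picked up from /var/log/applog/catalina.out on the sidecar side.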


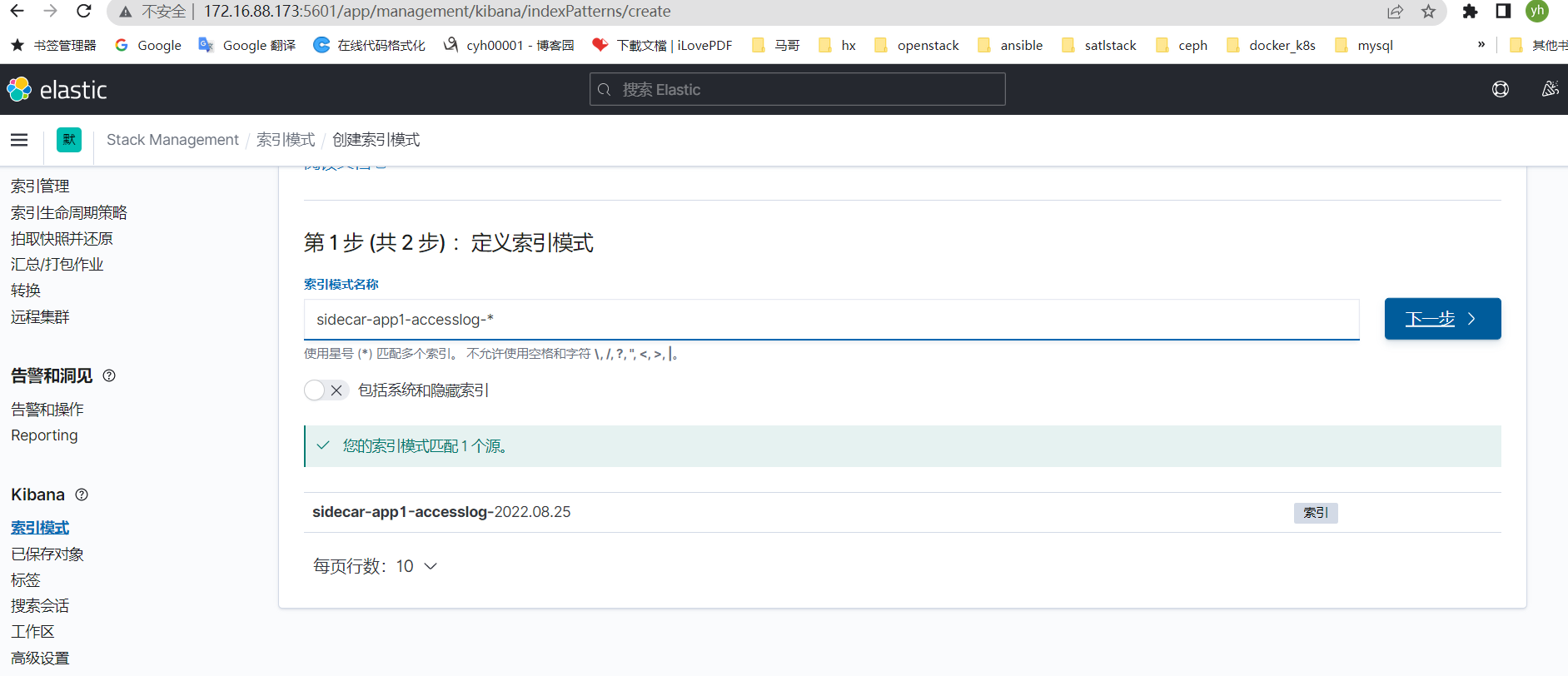

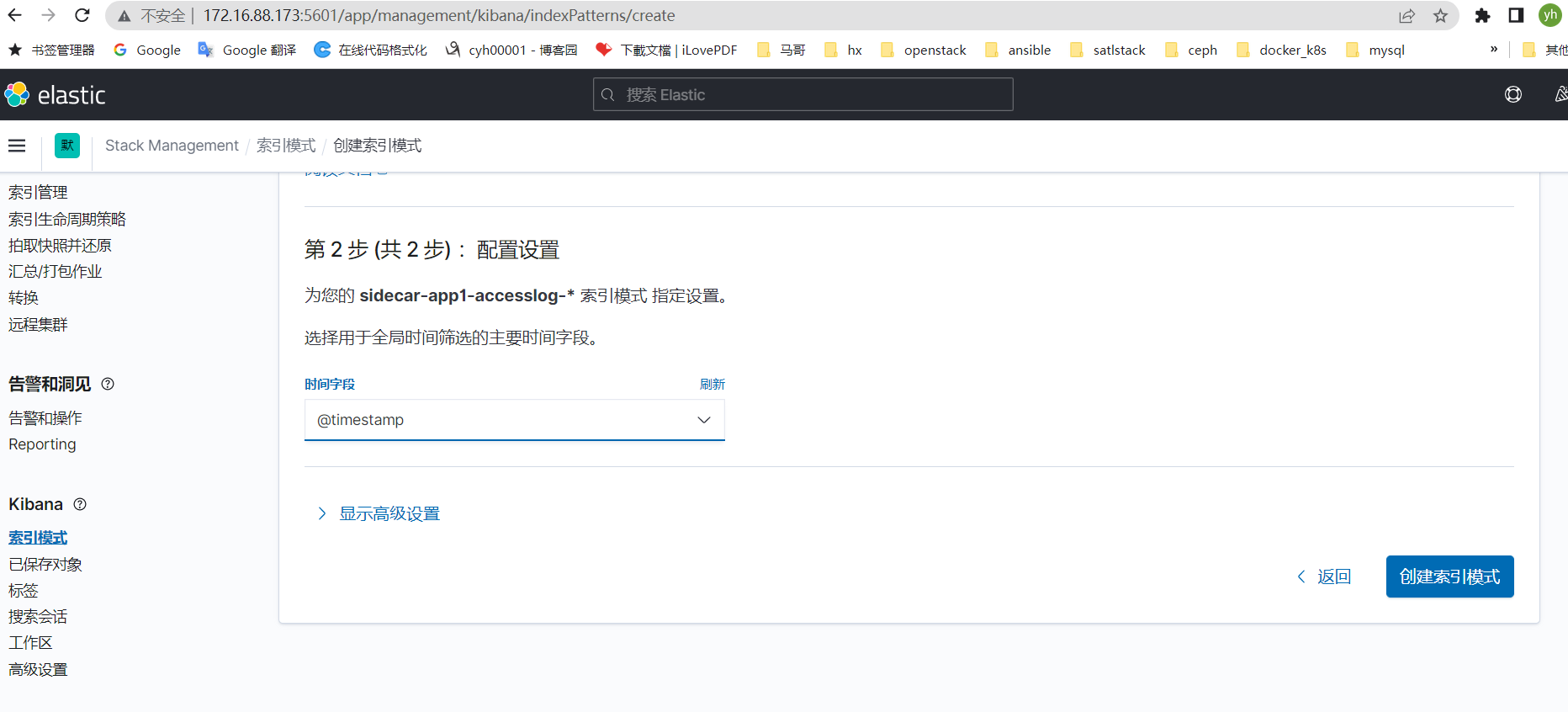

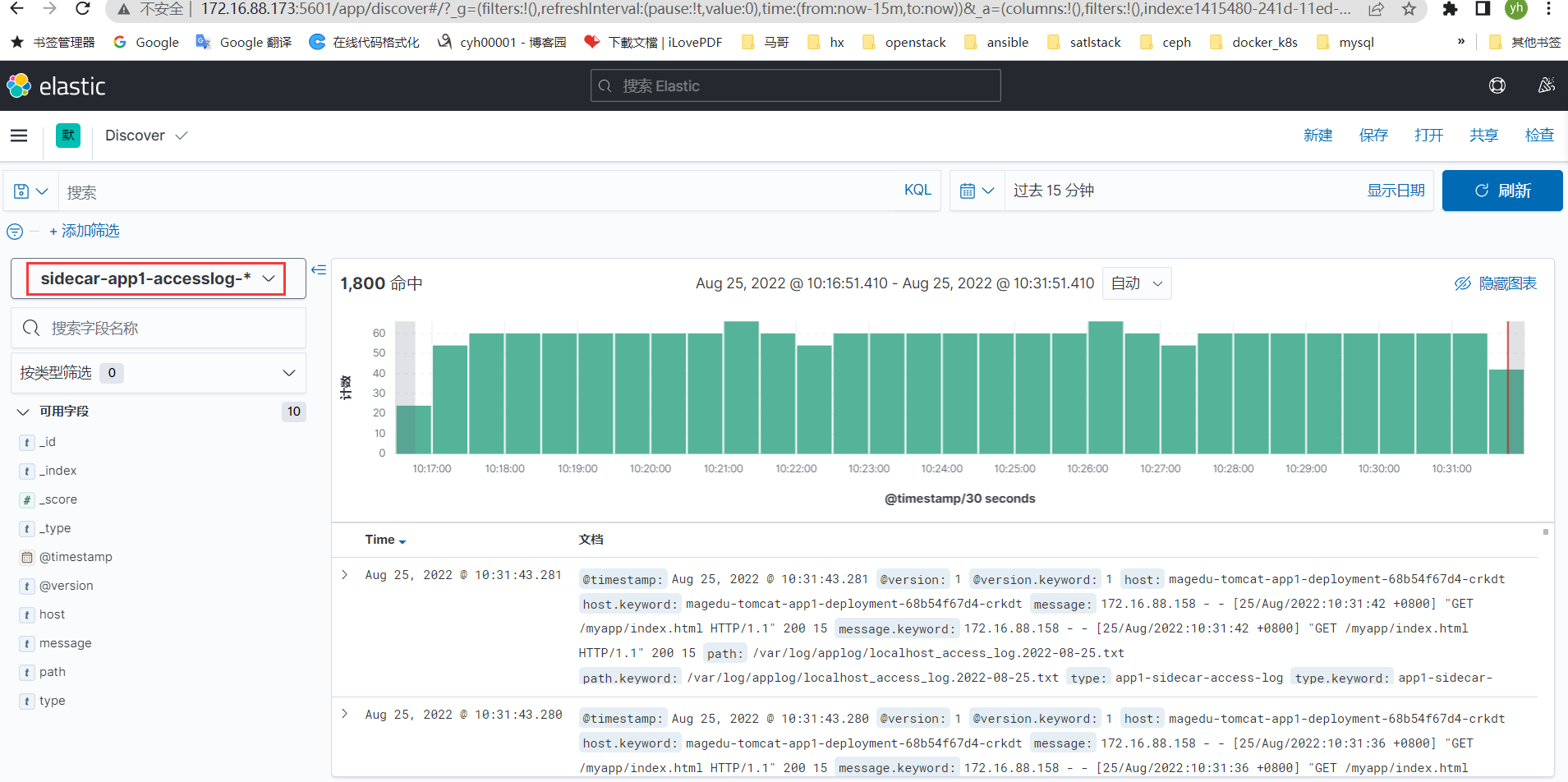

7.6 Configure Kibana
