Deploying prometheus, node-exporter, and fluentd-elasticsearch with DaemonSet

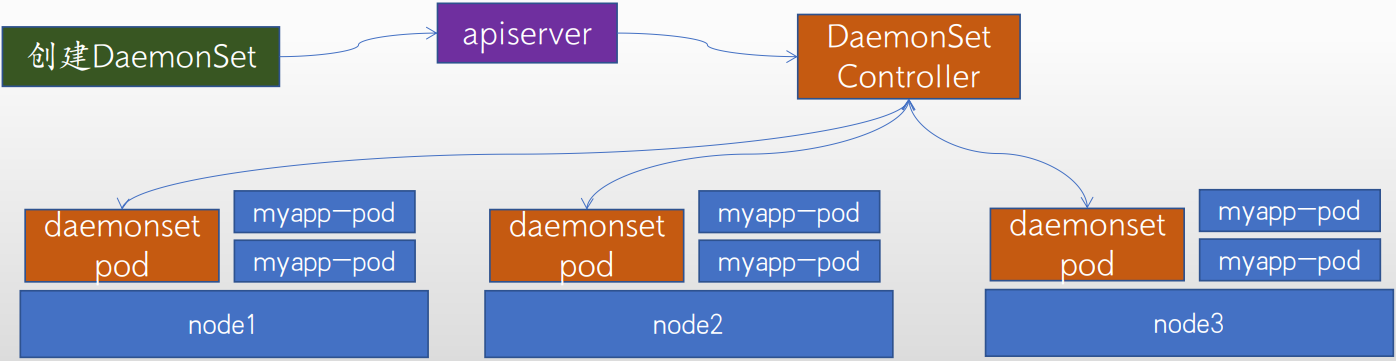

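1. Introduction to DaemonSet

A DaemonSet runs a copy of the same pod on each node in the cluster. When a new node joins the cluster, the same pod is created on it; when a node is removed from the cluster, Kubernetes reclaims that node's pod. Deleting the DaemonSet controller deletes all the pods it created.

Official documentation: https://kubernetes.io/zh/docs/concepts/workloads/controllers/daemonset/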
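
The behaviour described above can be pictured as a small reconciliation step. The following is only an illustrative sketch, not the actual controller code: the function name `reconcile`, the node names, and the pod names are all hypothetical.

```python
def reconcile(nodes, pods_by_node):
    """Compare the nodes in the cluster with the nodes that already run the pod.

    Returns (nodes that need a pod created, nodes whose pod should be removed).
    """
    desired = set(nodes)          # every node should run exactly one copy
    current = set(pods_by_node)   # nodes that currently run a copy
    to_create = sorted(desired - current)
    to_delete = sorted(current - desired)
    return to_create, to_delete


# Hypothetical cluster state: node-b and node-c just joined, node-d was removed.
nodes = ["node-a", "node-b", "node-c"]
pods = {"node-a": "exporter-1", "node-d": "exporter-4"}

create, delete = reconcile(nodes, pods)
print(create)  # ['node-b', 'node-c']
print(delete)  # ['node-d']
```

Deleting the DaemonSet corresponds to an empty desired set, in which case every existing pod lands in the removal list.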

2. Deploying prometheus and node-exporter with DaemonSet

Install and deploy node-exporter

    root@easzlab-deploy:~/jiege-k8s/20220911# cat case2-daemonset-deploy-node-exporter.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: monitor
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          tolerations:
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
          containers:
          - image: prom/node-exporter:v1.3.1
            imagePullPolicy: IfNotPresent
            name: prometheus-node-exporter
            ports:
            - containerPort: 9100
              hostPort: 9100
              protocol: TCP
              name: metrics
            volumeMounts:
            - mountPath: /host/proc
              name: proc
            - mountPath: /host/sys
              name: sys
            - mountPath: /host
              name: rootfs
            args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: sys
            hostPath:
              path: /sys
          - name: rootfs
            hostPath:
              path: /
          hostNetwork: true
          hostPID: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: "true"
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: monitor
    spec:
      type: NodePort
      ports:
      - name: http
        port: 9100
        nodePort: 39100
        protocol: TCP
      selector:
        k8s-app: node-exporter
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl create ns monitor
    namespace/monitor created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl apply -f case2-daemonset-deploy-node-exporter.yaml
    daemonset.apps/node-exporter created
    service/node-exporter created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl get pod -n monitor -owide
    NAME                  READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
    node-exporter-4ctcn   1/1     Running   0          42s   172.16.88.160   172.16.88.160   <none>           <none>
    node-exporter-8d8q5   1/1     Running   0          42s   172.16.88.162   172.16.88.162   <none>           <none>
    node-exporter-9wrcr   1/1     Running   0          42s   172.16.88.164   172.16.88.164   <none>           <none>
    node-exporter-js5h7   1/1     Running   0          42s   172.16.88.161   172.16.88.161   <none>           <none>
    node-exporter-qgfjb   1/1     Running   0          42s   172.16.88.163   172.16.88.163   <none>           <none>
    node-exporter-rdzfr   1/1     Running   0          42s   172.16.88.165   172.16.88.165   <none>           <none>
    node-exporter-tqnbd   1/1     Running   0          42s   172.16.88.159   172.16.88.159   <none>           <none>
    node-exporter-v2ts5   1/1     Running   0          42s   172.16.88.157   172.16.88.157   <none>           <none>
    node-exporter-zp2xf   1/1     Running   0          42s   172.16.88.158   172.16.88.158   <none>           <none>
    root@easzlab-deploy:~/jiege-k8s/20220911#

Install the Prometheus ConfigMap configuration file

    root@easzlab-deploy:~/jiege-k8s/20220911# cat case3-1-prometheus-cfg.yaml
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus-config
      namespace: monitor
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 10s
          evaluation_interval: 1m
        scrape_configs:
        - job_name: 'kubernetes-node'
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        - job_name: 'kubernetes-node-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-apiserver'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_service_name
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl apply -f case3-1-prometheus-cfg.yaml
    configmap/prometheus-config created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl get configmap -n monitor
    NAME                 DATA   AGE
    istio-ca-root-cert   1      4m10s
    kube-root-ca.crt     1      4m10s
    prometheus-config    1      25s
    root@easzlab-deploy:~/jiege-k8s/20220911#
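
To see what the two `__address__` rewrite rules in this configuration do, here is a rough Python imitation of the Prometheus `replace` action. It is a sketch under assumptions, not Prometheus code: the helper `relabel_replace` is hypothetical, it relies on Prometheus anchoring relabel regexes to the whole value, and the `10.200.2.24:8080` address with a `9100` port annotation is made-up sample input (the node-exporter Service above only sets `prometheus.io/scrape`).

```python
import re


def relabel_replace(value, regex, replacement):
    """Imitate the 'replace' action on one already-joined source value.

    Returns the rewritten value, or None when the regex does not match
    (Prometheus then leaves the target label untouched).
    """
    match = re.fullmatch(regex, value)  # relabel regexes match the full value
    if match is None:
        return None
    # Translate Prometheus-style $1 / ${1} references into Python's \g<1>.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


# 'kubernetes-node' job: point the kubelet address at node-exporter's port.
print(relabel_replace("172.16.88.160:10250", r"(.*):10250", "${1}:9100"))
# 172.16.88.160:9100

# 'kubernetes-service-endpoints' job: source labels are joined with ';'.
port_rule = r"([^:]+)(?::\d+)?;(\d+)"
print(relabel_replace("10.200.2.24:8080;9100", port_rule, "$1:$2"))
# 10.200.2.24:9100

# Without a port annotation the joined value ends in ';' and nothing matches.
print(relabel_replace("172.16.88.160:9100;", port_rule, "$1:$2"))
# None
```

The last case suggests why the node-exporter endpoints keep their discovered address: with no port annotation on the Service, the rule does not match and `__address__` stays as it was.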

Install the Prometheus server

    [root@easzlab-k8s-node-01 ~]# mkdir -p /data/prometheusdata
    [root@easzlab-k8s-node-01 ~]# chmod 777 /data/prometheusdata
    [root@easzlab-k8s-node-01 ~]# chown 65534.65534 /data/prometheusdata -R
    [root@easzlab-k8s-node-01 ~]#
    root@easzlab-deploy:~/jiege-k8s/20220911# cat case3-2-prometheus-deployment.yaml
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus-server
      namespace: monitor
      labels:
        app: prometheus
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
          component: server
        #matchExpressions:
        #- {key: app, operator: In, values: [prometheus]}
        #- {key: component, operator: In, values: [server]}
      template:
        metadata:
          labels:
            app: prometheus
            component: server
          annotations:
            prometheus.io/scrape: 'false'
        spec:
          nodeName: 172.16.88.160
          serviceAccountName: monitor
          containers:
          - name: prometheus
            image: prom/prometheus:v2.31.2
            imagePullPolicy: IfNotPresent
            command:
            - prometheus
            - --config.file=/etc/prometheus/prometheus.yml
            - --storage.tsdb.path=/prometheus
            - --storage.tsdb.retention=720h
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/prometheus/prometheus.yml
              name: prometheus-config
              subPath: prometheus.yml
            - mountPath: /prometheus/
              name: prometheus-storage-volume
          volumes:
          - name: prometheus-config
            configMap:
              name: prometheus-config
              items:
              - key: prometheus.yml
                path: prometheus.yml
                mode: 0644
          - name: prometheus-storage-volume
            hostPath:
              path: /data/prometheusdata
              type: Directory
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl create serviceaccount monitor -n monitor
    serviceaccount/monitor created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl create clusterrolebinding monitor-clusterrolebinding -n monitor --clusterrole=cluster-admin --serviceaccount=monitor:monitor
    clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl apply -f case3-2-prometheus-deployment.yaml
    deployment.apps/prometheus-server created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl get deployment -A
    NAMESPACE              NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
    argocd                 argocd-applicationset-controller   1/1     1            1           3d17h
    argocd                 argocd-dex-server                  1/1     1            1           3d17h
    argocd                 argocd-notifications-controller    1/1     1            1           3d17h
    argocd                 argocd-redis-ha-haproxy            3/3     3            3           3d17h
    argocd                 argocd-repo-server                 2/2     2            2           3d17h
    argocd                 argocd-server                      2/2     2            2           3d17h
    istio-system           istio-egressgateway                1/1     1            1           3d15h
    istio-system           istio-ingressgateway               1/1     1            1           3d15h
    istio-system           istiod                             1/1     1            1           3d15h
    istio                  details-v1                         1/1     1            1           3d15h
    istio                  productpage-v1                     1/1     1            1           3d15h
    istio                  ratings-v1                         1/1     1            1           3d15h
    istio                  reviews-v1                         1/1     1            1           3d15h
    istio                  reviews-v2                         1/1     1            1           3d15h
    istio                  reviews-v3                         1/1     1            1           3d15h
    kube-system            calico-kube-controllers            1/1     1            1           3d22h
    kube-system            coredns                            2/2     2            2           3d22h
    kube-system            kuboard                            1/1     1            1           3d22h
    kube-system            metrics-server                     1/1     1            1           3d17h
    kubernetes-dashboard   dashboard-metrics-scraper          1/1     1            1           3d19h
    kubernetes-dashboard   kubernetes-dashboard               1/1     1            1           3d19h
    monitor                prometheus-server                  1/1     1            1           85s
    velero-system          velero                             1/1     1            1           3d19h
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl get pod -n monitor
    NAME                                 READY   STATUS    RESTARTS   AGE
    node-exporter-4ctcn                  1/1     Running   0          17m
    node-exporter-8d8q5                  1/1     Running   0          17m
    node-exporter-9wrcr                  1/1     Running   0          17m
    node-exporter-js5h7                  1/1     Running   0          17m
    node-exporter-qgfjb                  1/1     Running   0          17m
    node-exporter-rdzfr                  1/1     Running   0          17m
    node-exporter-tqnbd                  1/1     Running   0          17m
    node-exporter-v2ts5                  1/1     Running   0          17m
    node-exporter-zp2xf                  1/1     Running   0          17m
    prometheus-server-6d59f75647-p6tm8   1/1     Running   0          87s
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case5#

Install the Prometheus Service

    root@easzlab-deploy:~/jiege-k8s/20220911# cat case3-3-prometheus-svc.yaml
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: monitor
      labels:
        app: prometheus
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30090
        protocol: TCP
      selector:
        app: prometheus
        component: server
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl apply -f case3-3-prometheus-svc.yaml
    service/prometheus created
    root@easzlab-deploy:~/jiege-k8s/20220911# kubectl get svc -n monitor
    NAME            TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    node-exporter   NodePort   10.100.170.33   <none>        9100:39100/TCP   24m
    prometheus      NodePort   10.100.132.57   <none>        9090:30090/TCP   8s
    root@easzlab-deploy:~/jiege-k8s/20220911#

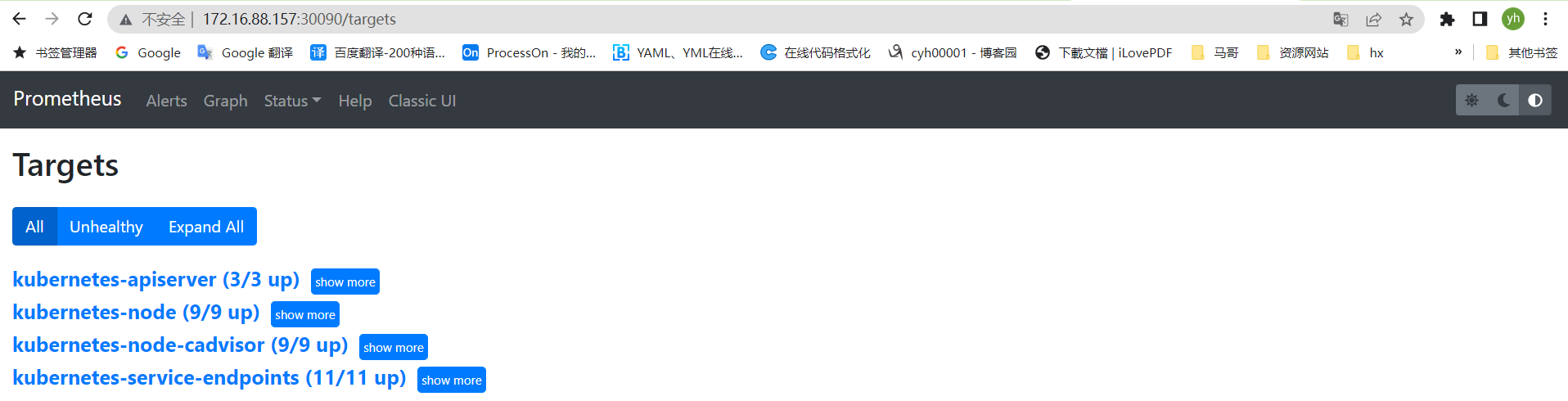

Verification

3. Installing fluentd-elasticsearch with DaemonSet

    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# docker pull quay.io/fluentd_elasticsearch/fluentd:v2.5.2
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# docker tag quay.io/fluentd_elasticsearch/fluentd:v2.5.2 harbor.magedu.net/fluentd_elasticsearch/fluentd:v2.5.2
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# docker push harbor.magedu.net/fluentd_elasticsearch/fluentd:v2.5.2
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# vi daemonset.yaml
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# cat daemonset.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      namespace: kube-system
      labels:
        k8s-app: fluentd-logging
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          tolerations:
          # this toleration is to have the daemonset runnable on master nodes
          # remove it if your masters can't run pods
          - key: node-role.kubernetes.io/master
            operator: Exists
            effect: NoSchedule
          containers:
          - name: fluentd-elasticsearch
            image: harbor.magedu.net/fluentd_elasticsearch/fluentd:v2.5.2
            resources:
              limits:
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 200Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# kubectl apply -f daemonset.yaml
    daemonset.apps/fluentd-elasticsearch created
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# kubectl get pod -n kube-system -owide
    NAME                                       READY   STATUS    RESTARTS        AGE     IP               NODE            NOMINATED NODE   READINESS GATES
    calico-kube-controllers-5c8bb696bb-5nrbl   1/1     Running   3 (2d18h ago)   3d23h   172.16.88.160    172.16.88.160   <none>           <none>
    calico-node-68k76                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.160    172.16.88.160   <none>           <none>
    calico-node-bz8pp                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.158    172.16.88.158   <none>           <none>
    calico-node-cfl2g                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.163    172.16.88.163   <none>           <none>
    calico-node-hsrfh                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.159    172.16.88.159   <none>           <none>
    calico-node-j8kgf                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.161    172.16.88.161   <none>           <none>
    calico-node-kdb5j                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.164    172.16.88.164   <none>           <none>
    calico-node-mqmhp                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.165    172.16.88.165   <none>           <none>
    calico-node-x6ctf                          1/1     Running   2 (2d18h ago)   3d21h   172.16.88.162    172.16.88.162   <none>           <none>
    calico-node-xh79g                          1/1     Running   2               3d21h   172.16.88.157    172.16.88.157   <none>           <none>
    coredns-69548bdd5f-9md6c                   1/1     Running   2 (2d18h ago)   3d22h   10.200.104.209   172.16.88.163   <none>           <none>
    coredns-69548bdd5f-n6rvg                   1/1     Running   2 (2d18h ago)   3d22h   10.200.2.24      172.16.88.162   <none>           <none>
    fluentd-elasticsearch-54shp                1/1     Running   0               46s     10.200.233.100   172.16.88.161   <none>           <none>
    fluentd-elasticsearch-8x2pz                1/1     Running   0               46s     10.200.2.28      172.16.88.162   <none>           <none>
    fluentd-elasticsearch-kjtx8                1/1     Running   0               46s     10.200.105.165   172.16.88.164   <none>           <none>
    fluentd-elasticsearch-knvjc                1/1     Running   0               47s     10.200.141.193   172.16.88.158   <none>           <none>
    fluentd-elasticsearch-np9bz                1/1     Running   0               47s     10.200.174.65    172.16.88.159   <none>           <none>
    fluentd-elasticsearch-q225t                1/1     Running   0               47s     10.200.104.236   172.16.88.163   <none>           <none>
    fluentd-elasticsearch-rdz9b                1/1     Running   0               47s     10.200.40.202    172.16.88.160   <none>           <none>
    fluentd-elasticsearch-rvdh7                1/1     Running   0               46s     10.200.126.129   172.16.88.157   <none>           <none>
    fluentd-elasticsearch-zvqdx                1/1     Running   0               46s     10.200.245.31    172.16.88.165   <none>           <none>
    kuboard-7dc6ffdd7c-njhnb                   1/1     Running   2 (2d18h ago)   3d22h   10.200.245.26    172.16.88.165   <none>           <none>
    metrics-server-686ff776cf-dbgq6            1/1     Running   4 (2d18h ago)   3d17h   10.200.245.25    172.16.88.165   <none>           <none>
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7#

    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7# kubectl get ds -A
    NAMESPACE     NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    kube-system   calico-node             9         9         9       9            9           kubernetes.io/os=linux   4d
    kube-system   fluentd-elasticsearch   9         9         9       9            9           <none>                   17s
    root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case7#
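
The `kubectl get ds -A` listing is healthy when READY equals DESIRED for every DaemonSet. As a small convenience, that comparison could be scripted roughly as follows; the helper name `unready_daemonsets` is hypothetical, and the sketch assumes the plain-text column layout shown in the listing above.

```python
def unready_daemonsets(table):
    """Return names of DaemonSets whose READY count is below DESIRED.

    `table` is the plain-text output of `kubectl get ds -A`.
    """
    rows = [line.split() for line in table.strip().splitlines() if line.strip()]
    header, body = rows[0], rows[1:]
    # These columns come before "NODE SELECTOR", so the space inside that
    # header does not shift their positions.
    name_i = header.index("NAME")
    desired_i = header.index("DESIRED")
    ready_i = header.index("READY")
    return [r[name_i] for r in body if int(r[ready_i]) < int(r[desired_i])]


# Output copied from the listing above.
sample = """
NAMESPACE     NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
kube-system   calico-node             9         9         9       9            9           kubernetes.io/os=linux   4d
kube-system   fluentd-elasticsearch   9         9         9       9            9           <none>                   17s
"""

print(unready_daemonsets(sample))  # []
```

An empty list means every node already runs a ready copy of each DaemonSet pod, which matches the nine fluentd-elasticsearch pods listed earlier.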
