Installing a highly available Kubernetes (1.24.2) cluster from binaries with easzlab

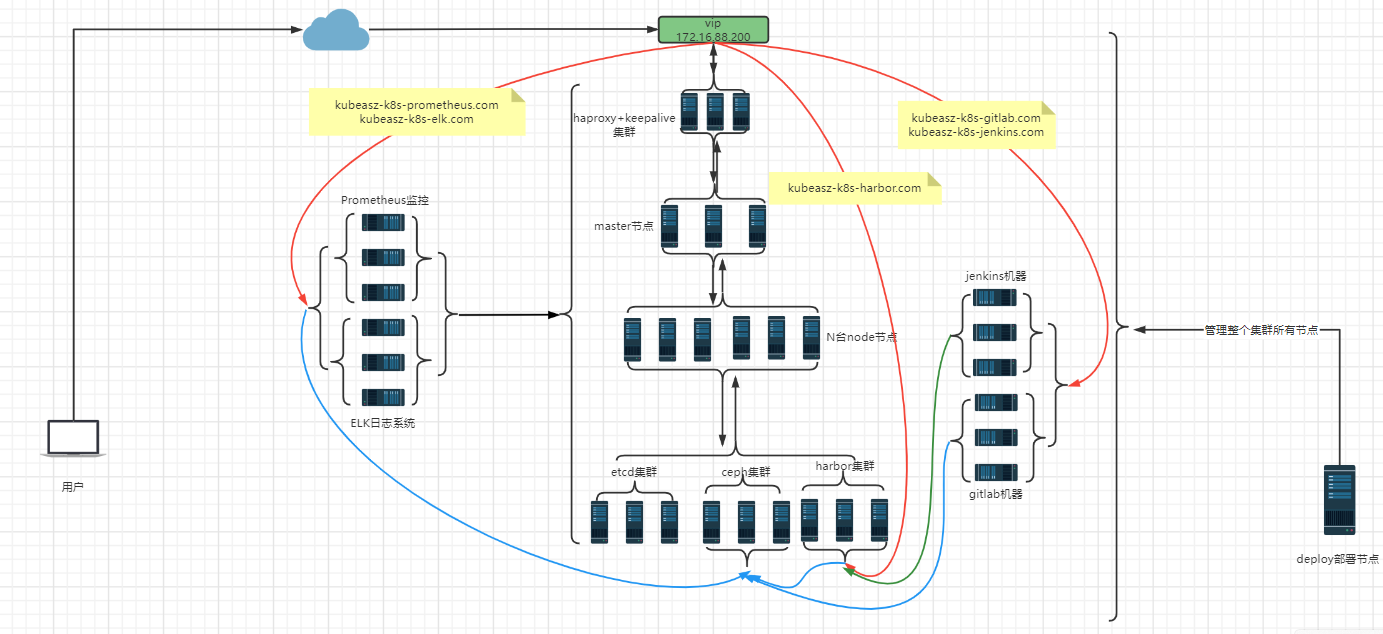

easzlab deployment architecture

I. Prepare the machines

    # Deployment machine
    172.16.88.150 easzlab-deploy

    # Load-balancer cluster
    172.16.88.151 easzlab-haproxy-keepalive-01
    172.16.88.152 easzlab-haproxy-keepalive-02
    172.16.88.153 easzlab-haproxy-keepalive-03

    172.16.88.200 k8s-vip   # VIP used as the entry point of the k8s cluster

    # k8s etcd cluster
    172.16.88.154 easzlab-k8s-etcd-01
    172.16.88.155 easzlab-k8s-etcd-02
    172.16.88.156 easzlab-k8s-etcd-03

    # k8s cluster nodes
    172.16.88.157 easzlab-k8s-master-01
    172.16.88.158 easzlab-k8s-master-02
    172.16.88.159 easzlab-k8s-master-03
    172.16.88.160 easzlab-k8s-node-01
    172.16.88.161 easzlab-k8s-node-02
    172.16.88.162 easzlab-k8s-node-03
    172.16.88.163 easzlab-k8s-node-04
    172.16.88.164 easzlab-k8s-node-05
    172.16.88.165 easzlab-k8s-node-06

    # Other nodes
    172.16.88.166 easzlab-k8s-harbor-01
    172.16.88.170 easzlab-k8s-minio-01   # scheduled backups of k8s cluster data
    172.16.88.172 easzlab-images-02      # builds and packages business images
    172.16.88.190 easzlab-k8s-harbor-nginx-01 harbor.magedu.net   # nginx reverse proxy in front of Harbor

    # Ceph cluster
    172.16.88.100 ceph-deploy.example.local ceph-deploy
    172.16.88.101 ceph-mon1.example.local ceph-mon1
    172.16.88.102 ceph-mon2.example.local ceph-mon2
    172.16.88.103 ceph-mon3.example.local ceph-mon3
    172.16.88.111 ceph-mgr1.example.local ceph-mgr1
    172.16.88.111 ceph-mgr2.example.local ceph-mgr2
    172.16.88.121 ceph-node1.example.local ceph-node1
    172.16.88.122 ceph-node2.example.local ceph-node2
    172.16.88.123 ceph-node3.example.local ceph-node3
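/etc/hosts allows two names to share one IP. In a host plan like this, though, a repeated IP may be a copy-paste slip: ceph-mgr1 and ceph-mgr2 above both map to 172.16.88.111. The sketch below spots such repeats before the list is distributed. `find_duplicate_ips` is a hypothetical helper name. It reports every IP that appears on more than one non-comment line, so treat its output as something to double-check rather than as an error.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every IP that appears on more than one
# non-comment line of a hosts-style listing read from stdin.
find_duplicate_ips() {
  # Strip inline comments (this also re-splits the fields), skip lines that
  # end up empty, and count the first field (the IP) of each remaining line.
  awk '{ sub(/#.*/, "") } NF { seen[$1]++ } END { for (ip in seen) if (seen[ip] > 1) print ip }' | sort
}
```

Usage: `find_duplicate_ips < /etc/hosts`. Any IP it prints deserves a second look.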

Machine initialization script

    #!/bin/bash
    # Configure the apt sources (Tsinghua mirror, Ubuntu 20.04 "focal")
    cat > /etc/apt/sources.list <<EOF
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse
    EOF

    # Install the required packages
    apt-get update && apt-get install -y wget curl chrony net-tools python3 tcpdump sshpass

    # Configure time synchronization. On Ubuntu, chronyd reads /etc/chrony/chrony.conf.
    # The commandkey/generatecommandkey directives are dropped because current
    # chrony releases no longer support them.
    cat > /etc/chrony/chrony.conf <<EOF
    server ntp.aliyun.com iburst
    stratumweight 0
    driftfile /var/lib/chrony/drift
    rtcsync
    makestep 10 3
    bindcmdaddress 127.0.0.1
    bindcmdaddress ::1
    keyfile /etc/chrony/chrony.keys
    logchange 0.5
    logdir /var/log/chrony
    EOF

    # Enable chrony and restart it so the new configuration is loaded
    systemctl enable chrony && systemctl restart chrony

    # Disable the firewall
    systemctl stop ufw.service && systemctl disable ufw.service

    # Set the time zone
    timedatectl set-timezone "Asia/Shanghai"

    # Flush the iptables rules
    iptables -F && iptables -X && iptables -Z

    # Turn swap off now and comment out the swap entry in /etc/fstab
    swapoff -a
    sed -i 's@^/swap.img@#/swap.img@' /etc/fstab
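The sed line above only matches an fstab entry that starts with /swap.img, the swap file an Ubuntu server install typically creates. The sketch below is a slightly more general, re-runnable variant. It comments out any active entry whose filesystem type (the third field) is `swap`. `disable_swap_in_fstab` is a hypothetical name, and the function assumes entries do not begin with whitespace.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file. Lines that already start with '#' are not matched, so re-running the
# function is safe.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Field 1 must not start with '#'; field 3 (the filesystem type) must be "swap".
  sed -i -E 's@^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$@#\1@' "$fstab"
}
```

Run `swapoff -a` as before, then `disable_swap_in_fstab /etc/fstab`, so swap also stays off after a reboot.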

Configure the cluster host information with Ansible

    # Ansible inventory used to change the host names in bulk
    [vm1]
    172.16.88.154 hostname=easzlab-k8s-etcd-01 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.155 hostname=easzlab-k8s-etcd-02 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.156 hostname=easzlab-k8s-etcd-03 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.157 hostname=easzlab-k8s-master-01 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.158 hostname=easzlab-k8s-master-02 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.159 hostname=easzlab-k8s-master-03 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.160 hostname=easzlab-k8s-node-01 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.161 hostname=easzlab-k8s-node-02 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.162 hostname=easzlab-k8s-node-03 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.163 hostname=easzlab-k8s-node-04 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.164 hostname=easzlab-k8s-node-05 ansible_ssh_port=22 ansible_ssh_pass=redhat
    172.16.88.165 hostname=easzlab-k8s-node-06 ansible_ssh_port=22 ansible_ssh_pass=redhat

    [vm]
    172.16.88.[151:156]

    root@easzlab-deploy:~# cat name.yaml
    ---
    - hosts: vm1
      remote_user: root
      tasks:
        - name: change name
          raw: "echo {{hostname|quote}} > /etc/hostname"
        - name: set the running hostname
          shell: hostname {{hostname|quote}}

    # Change the host names in bulk (the target group comes from "hosts: vm1" in the playbook)
    root@easzlab-deploy:~# ansible-playbook ./name.yaml

    # Script that writes /etc/hosts on every node
    root@easzlab-deploy:~# cat add-hosts.sh
    #!/bin/bash
    cat > /etc/hosts <<EOF
    127.0.0.1 localhost
    127.0.1.1 magedu
    # The following lines are desirable for IPv6 capable hosts
    ::1     ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters
    172.16.88.151 easzlab-haproxy-keepalive-01
    172.16.88.152 easzlab-haproxy-keepalive-02
    172.16.88.153 easzlab-haproxy-keepalive-03
    172.16.88.154 easzlab-k8s-etcd-01
    172.16.88.155 easzlab-k8s-etcd-02
    172.16.88.156 easzlab-k8s-etcd-03
    172.16.88.157 easzlab-k8s-master-01
    172.16.88.158 easzlab-k8s-master-02
    172.16.88.159 easzlab-k8s-master-03
    172.16.88.160 easzlab-k8s-node-01
    172.16.88.161 easzlab-k8s-node-02
    172.16.88.162 easzlab-k8s-node-03
    172.16.88.163 easzlab-k8s-node-04
    172.16.88.164 easzlab-k8s-node-05
    172.16.88.165 easzlab-k8s-node-06
    EOF

    # Push the hosts file to the nodes in bulk
    root@easzlab-deploy:~# ansible 'vm' -m script -a "./add-hosts.sh"
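The [vm1] inventory repeats one pattern per host and stores the root password in plain text. The sketch below renders those lines from "IP hostname" pairs and leaves the password out, so it can be supplied at run time with `--ask-pass`. `make_inventory` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render an Ansible INI inventory group from
# "IP hostname" pairs on stdin. ansible_ssh_pass is intentionally omitted.
make_inventory() {
  local group="$1" ip name
  echo "[$group]"
  while read -r ip name; do
    # Skip blank lines and comment lines
    case "$ip" in ''|'#'*) continue ;; esac
    echo "$ip hostname=$name ansible_ssh_port=22"
  done
}
```

Usage, with hypothetical file names: `make_inventory vm1 < hostnames.txt > inventory.ini`, then `ansible-playbook -i inventory.ini --ask-pass ./name.yaml`.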

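II. Install the load balancers

2.1 Configure keepalived

Install haproxy and keepalived on each of the three haproxy+keepalived nodes, then copy the sample configuration as a starting point:

    apt-get install haproxy keepalived -y
    cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf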

keepalived.conf on the MASTER node:

    ! Configuration File for keepalived
    global_defs {
        router_id easzlab-lvs
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 1
        weight -30
        fall 3
        rise 2
        timeout 2
    }

    vrrp_instance VI_1 {
        state MASTER
        interface enp1s0
        garp_master_delay 10
        smtp_alert
        virtual_router_id 51
        priority 100        # note the priority: 100 here, 90 and 80 on the backups
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass easzlab111
        }
        virtual_ipaddress {
            172.16.88.200 label enp1s0:1
        }
        track_script {
            check_haproxy
        }
    }

keepalived.conf on the backup-01 node:

    ! Configuration File for keepalived
    global_defs {
        router_id easzlab-lvs
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 1
        weight -30
        fall 3
        rise 2
        timeout 2
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface enp1s0
        garp_master_delay 10
        smtp_alert
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass easzlab111
        }
        virtual_ipaddress {
            172.16.88.200 label enp1s0:1
        }
        track_script {
            check_haproxy
        }
    }

keepalived.conf on the backup-02 node:

    ! Configuration File for keepalived
    global_defs {
        router_id easzlab-lvs
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 1
        weight -30
        fall 3
        rise 2
        timeout 2
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface enp1s0
        garp_master_delay 10
        smtp_alert
        virtual_router_id 51
        priority 80
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass easzlab111
        }
        virtual_ipaddress {
            172.16.88.200 label enp1s0:1
        }
        track_script {
            check_haproxy
        }
    }
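The three configurations differ only in `state` and `priority` (100, 90, 80). The `weight -30` in `vrrp_script` decides failover. While the tracked script is in the failed state (after `fall 3` consecutive failures), keepalived lowers the node's priority by 30, and the node with the highest priority holds the VIP. check_haproxy.sh first tries to restart haproxy, so it only reports failure when that restart also fails. The bash model below illustrates the arithmetic. It is a simplification that ignores preemption settings and tie-breaking by IP address, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified model of keepalived's VRRP priority for this setup.
WEIGHT=-30   # from "weight -30" in vrrp_script check_haproxy

# Effective priority: the base priority, lowered by |WEIGHT| while the
# tracked script is failing.
effective_priority() {
  local base="$1" check="$2"
  if [ "$check" = "ok" ]; then
    echo "$base"
  else
    echo $(( base + WEIGHT ))
  fi
}

# Read "name base-priority ok|fail" lines and print the node that would
# hold the VIP, together with its effective priority.
elect_vip_owner() {
  local best_name="" best_prio=-1 name base check prio
  while read -r name base check; do
    [ -z "$name" ] && continue
    prio="$(effective_priority "$base" "$check")"
    if [ "$prio" -gt "$best_prio" ]; then
      best_name="$name"
      best_prio="$prio"
    fi
  done
  echo "$best_name $best_prio"
}
```

Suppose haproxy is down on the MASTER and cannot be restarted. Its priority drops to 100 - 30 = 70, below backup-01's 90, so the VIP moves to backup-01. If backup-01 were failing as well (90 - 30 = 60), backup-02 at 80 would take over. The weight therefore has to be larger than the gap between neighbouring priorities (10 here).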

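haproxy check script. It is referenced by vrrp_script on all three nodes, so create it on each of them and make it executable:

    root@easzlab-haproxy-keepalive-03:/etc/keepalived# cat check_haproxy.sh
    #!/bin/bash
    # If haproxy is not running, try to restart it; a failed restart makes the check fail
    /usr/bin/killall -0 haproxy || systemctl restart haproxy
    root@easzlab-haproxy-keepalive-03:/etc/keepalived# chmod +x check_haproxy.sh

Start keepalived:

    systemctl restart keepalived && systemctl enable keepalived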

2.2 Configure haproxy

    root@easzlab-haproxy-keepalive-01:~# cat /etc/haproxy/haproxy.cfg
    ########### Global settings ###########
    global
        log 127.0.0.1 local0
        log 127.0.0.1 local1 notice
        daemon
        nbproc 1                  # number of processes
        maxconn 4096              # maximum number of connections
        user haproxy              # run as this user
        group haproxy             # run as this group
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        # Default SSL material locations
        ca-base /etc/ssl/certs
        crt-base /etc/ssl/private
        # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
        ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
        ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
        ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

    ########### Defaults ###########
    defaults
        log global
        mode http                 # default mode { tcp|http|health }
        option httplog            # use the HTTP log format
        option dontlognull        # do not log connections that carry no data (e.g. health checks)
        retries 2                 # mark a server unavailable after 2 failed connection attempts
        # option forwardfor       # pass the client's real IP to the backend
        option httpclose          # actively close the HTTP connection after each request
        option abortonclose       # under heavy load, drop queued requests whose client has already gone away
        maxconn 4096              # maximum number of connections
        timeout connect 5m        # connect timeout
        timeout client 1m         # client timeout
        timeout server 31m        # server timeout
        timeout check 10s         # health-check timeout
        balance roundrobin        # load-balancing algorithm: round robin

    ########### Statistics page ###########
    listen stats
        bind 172.16.88.200:1080
        mode http
        option httplog
        log 127.0.0.1 local0 err
        maxconn 10                # maximum number of connections
        stats refresh 30s
        stats uri /admin          # status page at http://172.16.88.200:1080/admin
        stats realm Haproxy\ Statistics
        stats auth admin:admin    # user name and password: admin/admin
        stats hide-version        # hide the haproxy version
        stats admin if TRUE       # allow enabling/disabling servers from the page

    ########### K8S ###########
    listen k8s_api_nodes_6443
        bind 172.16.88.200:6443
        mode tcp
        server easzlab-k8s-master-01 172.16.88.157:6443 check inter 2000 fall 3 rise 5
        server easzlab-k8s-master-02 172.16.88.158:6443 check inter 2000 fall 3 rise 5
        server easzlab-k8s-master-03 172.16.88.159:6443 check inter 2000 fall 3 rise 5
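The `listen k8s_api_nodes_6443` backends must point at the three masters (172.16.88.157-159 in the host plan), not at the etcd nodes. Generating the `server` lines from a single name/IP list keeps names and addresses in sync. The sketch below copies the health-check parameters from the configuration above; `render_apiserver_backends` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print haproxy "server" lines for the kube-apiserver
# backend from "name ip" pairs on stdin.
render_apiserver_backends() {
  local name ip
  while read -r name ip; do
    [ -z "$name" ] && continue
    # Same check parameters as in haproxy.cfg: probe every 2000 ms,
    # 3 failures mark the server down, 5 successes bring it back up.
    printf '    server %s %s:6443 check inter 2000 fall 3 rise 5\n' "$name" "$ip"
  done
}
```

Paste the output into the `listen` section. Then validate the file with `haproxy -c -f /etc/haproxy/haproxy.cfg` before restarting haproxy.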

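Copy haproxy.cfg to the other two haproxy nodes:

    root@easzlab-haproxy-keepalive-01:~# scp /etc/haproxy/haproxy.cfg root@172.16.88.152:/etc/haproxy/
    root@easzlab-haproxy-keepalive-01:~# scp /etc/haproxy/haproxy.cfg root@172.16.88.153:/etc/haproxy/

Configure and start haproxy on all three nodes. haproxy binds to the VIP 172.16.88.200, which only exists on the node currently holding it. net.ipv4.ip_nonlocal_bind lets haproxy start on the other nodes as well:

    echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf && sysctl -p
    systemctl enable --now haproxy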
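To confirm the setting took effect on a haproxy node, `sysctl -n net.ipv4.ip_nonlocal_bind` should print `1`. The sketch below performs the same check from a script by reading /proc/sys directly. `sysctl_is` and the `SYSCTL_ROOT` override are hypothetical names; the override exists only so the function can be tested against a fake directory tree.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if kernel parameter KEY currently equals EXPECTED.
# The dotted key maps to a file under /proc/sys, e.g.
# net.ipv4.ip_nonlocal_bind -> /proc/sys/net/ipv4/ip_nonlocal_bind.
sysctl_is() {
  local key="$1" expected="$2"
  local root="${SYSCTL_ROOT:-/proc/sys}"   # hypothetical override for testing
  local path="$root/${key//.//}"           # replace every "." with "/"
  [ -r "$path" ] || return 2               # unknown key or not readable
  [ "$(cat "$path")" = "$expected" ]
}
```

Usage: `sysctl_is net.ipv4.ip_nonlocal_bind 1 || echo "haproxy cannot bind the VIP on this node"`.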

III. Install Harbor

See also: https://www.cnblogs.com/cyh00001/p/16495946.html

3.1 Install docker and docker-compose

    root@easzlab-k8s-harbor-01:~# mkdir /docker
    root@easzlab-k8s-harbor-01:~# tar -xf docker-20.10.17-binary-install.tar.gz   # download this package in advance
    root@easzlab-k8s-harbor-01:~# ll -h docker/
    total 225M
    drwxr-xr-x 3 root root   4.0K Jul 26 19:21 ./
    drwxr-xr-x 7 root root   4.0K Oct 15 10:57 ../
    -rwxr-xr-x 1 root root    38M Jul 26 19:21 containerd*
    -rw-r--r-- 1 root root    647 Apr 11  2021 containerd.service
    -rwxr-xr-x 1 root root   7.3M Jul 26 19:21 containerd-shim*
    -rwxr-xr-x 1 root root   9.5M Jul 26 19:21 containerd-shim-runc-v2*
    -rwxr-xr-x 1 root root    23M Jul 26 19:21 ctr*
    -rw-r--r-- 1 root root    356 Jul 18 19:09 daemon.json
    drwxrwxr-x 2 1000 docker 4.0K Jun  7 07:03 docker/
    -rw-r--r-- 1 root root    62M Jun  7 16:42 docker-20.10.17.tgz
    -rwxr-xr-x 1 root root    12M Dec  7  2021 docker-compose-Linux-x86_64_1.28.6*
    -rwxr-xr-x 1 root root    58M Jul 26 19:21 dockerd*
    -rwxr-xr-x 1 root root   689K Jul 26 19:21 docker-init*
    -rwxr-xr-x 1 root root   2.9K Jul 21 15:29 docker-install.sh*
    -rwxr-xr-x 1 root root   2.5M Jul 26 19:21 docker-proxy*
    -rw-r--r-- 1 root root   1.7K Apr 11  2021 docker.service
    -rw-r--r-- 1 root root    197 Apr 11  2021 docker.socket
    -rw-r--r-- 1 root root    454 Apr 11  2021 limits.conf
    -rwxr-xr-x 1 root root    14M Jul 26 19:21 runc*
    -rw-r--r-- 1 root root    257 Apr 11  2021 sysctl.conf
    root@easzlab-k8s-harbor-01:~# cd docker/
    root@easzlab-k8s-harbor-01:~/docker# cat docker-install.sh
    #!/bin/bash
    DIR=`pwd`
    PACKAGE_NAME="docker-20.10.17.tgz"
    DOCKER_FILE=${DIR}/${PACKAGE_NAME}

    centos_install_docker(){
      grep "Kernel" /etc/issue &> /dev/null
      if [ $? -eq 0 ];then
        /bin/echo "Current system is `cat /etc/redhat-release`; starting system initialization, docker-compose setup and docker installation" && sleep 1
        systemctl stop firewalld && systemctl disable firewalld && echo "firewall disabled" && sleep 1
        systemctl stop NetworkManager && systemctl disable NetworkManager && echo "NetworkManager disabled" && sleep 1
        sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux && setenforce 0 && echo "selinux disabled" && sleep 1
        \cp ${DIR}/limits.conf /etc/security/limits.conf
        \cp ${DIR}/sysctl.conf /etc/sysctl.conf
        /bin/tar xvf ${DOCKER_FILE}
        \cp docker/* /usr/bin
        mkdir -p /etc/docker && \cp daemon.json /etc/docker
        \cp containerd.service /lib/systemd/system/containerd.service
        \cp docker.service /lib/systemd/system/docker.service
        \cp docker.socket /lib/systemd/system/docker.socket
        \cp ${DIR}/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose
        groupadd docker && useradd docker -s /sbin/nologin -g docker
        id -u magedu &> /dev/null
        if [ $? -ne 0 ];then
          useradd magedu
          usermod magedu -G docker
        else
          usermod magedu -G docker
        fi
        systemctl enable containerd.service && systemctl restart containerd.service
        systemctl enable docker.service && systemctl restart docker.service
        systemctl enable docker.socket && systemctl restart docker.socket
      fi
    }

    ubuntu_install_docker(){
      grep "Ubuntu" /etc/issue &> /dev/null
      if [ $? -eq 0 ];then
        /bin/echo "Current system is `cat /etc/issue`; starting system initialization, docker-compose setup and docker installation" && sleep 1
        \cp ${DIR}/limits.conf /etc/security/limits.conf
        \cp ${DIR}/sysctl.conf /etc/sysctl.conf
        /bin/tar xvf ${DOCKER_FILE}
        \cp docker/* /usr/bin
        mkdir -p /etc/docker && \cp daemon.json /etc/docker
        \cp containerd.service /lib/systemd/system/containerd.service
        \cp docker.service /lib/systemd/system/docker.service
        \cp docker.socket /lib/systemd/system/docker.socket
        \cp ${DIR}/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose
        #ulimit -n 1000000
        #/bin/su -c - jack "ulimit -n 1000000"
        /bin/echo "docker installation complete!" && sleep 1
        groupadd docker && useradd docker -r -m -s /sbin/nologin -g docker
        id -u magedu &> /dev/null
        if [ $? -ne 0 ];then
          groupadd -r magedu
          useradd -r -m -g magedu magedu
          usermod magedu -G docker
        else
          usermod magedu -G docker
        fi
        systemctl enable containerd.service && systemctl restart containerd.service
        systemctl enable docker.service && systemctl restart docker.service
        systemctl enable docker.socket && systemctl restart docker.socket
      fi
    }

    main(){
      centos_install_docker
      ubuntu_install_docker
    }

    main
    root@easzlab-k8s-harbor-01:~/docker# vi daemon.json
    root@easzlab-k8s-harbor-01:~/docker# cat daemon.json
    {
      "graph": "/var/lib/docker",
      "storage-driver": "overlay2",
      "registry-mirrors": ["https://9916w1ow.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "live-restore": false,
      "log-opts": {
        "max-file": "5",
        "max-size": "100m"
      }
    }
    root@easzlab-k8s-harbor-01:~/docker# bash docker-install.sh   # install docker and docker-compose

Prepare the Harbor installation environment

Download the Harbor installation package:

    mkdir /apps
    wget https://github.com/goharbor/harbor/releases/download/v2.5.3/harbor-offline-installer-v2.5.3.tgz
    tar -xvzf harbor-offline-installer-v2.5.3.tgz -C /apps
    mkdir /apps/harbor/certs
    cd /apps/harbor && cp harbor.yml.tmpl harbor.yml

Edit harbor.yml. HTTPS stays commented out because the nginx reverse proxy in 3.4 terminates TLS and forwards plain HTTP to Harbor:

    root@easzlab-k8s-harbor-01:/apps/harbor# egrep "^$|^#|^[[:space:]]+#" -v harbor.yml
    hostname: harbor.magedu.net
    http:
      port: 80
    #https:
    #  port: 443
    #  certificate: /apps/harbor/certs/magedu.net.crt
    #  private_key: /apps/harbor/certs/magedu.net.key
    harbor_admin_password: Harbor12345
    database:
      password: root123
      max_idle_conns: 100
      max_open_conns: 900
    data_volume: /data   # note: this is where the images are stored
    trivy:
      ignore_unfixed: false
      skip_update: false
      offline_scan: false
      insecure: false
    jobservice:
      max_job_workers: 10
    notification:
      webhook_job_max_retry: 10
    chart:
      absolute_url: disabled
    log:
      level: info
      local:
        rotate_count: 50
        rotate_size: 200M
        location: /var/log/harbor
    _version: 2.5.0
    proxy:
      http_proxy:
      https_proxy:
      no_proxy:
      components:
        - core
        - jobservice
        - trivy
    upload_purging:
      enabled: true
      age: 168h
      interval: 24h
      dryrun: false
    root@easzlab-k8s-harbor-01:/apps/harbor#

3.2 Create the CA certificate

    # Create the directory for the certificates
    root@easzlab-k8s-harbor-01:~# mkdir -p /apps/harbor/certs/
    root@easzlab-k8s-harbor-01:~# cd /apps/harbor/certs/
    # Generate the CA private key
    openssl genrsa -out ca.key 4096
    # Generate the CA certificate
    openssl req -x509 -new -nodes -sha512 -days 3650 \
      -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=magedu.com" \
      -key ca.key -out ca.crt
    # Generate the server private key
    openssl genrsa -out magedu.net.key 4096
    # Generate the certificate signing request (CSR)
    openssl req -sha512 -new \
      -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=magedu.net" \
      -key magedu.net.key -out magedu.net.csr
    # Generate an x509 v3 extension file
    cat > v3.ext <<-EOF
    authorityKeyIdentifier=keyid,issuer
    basicConstraints=CA:FALSE
    keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
    extendedKeyUsage = serverAuth
    subjectAltName = @alt_names

    [alt_names]
    DNS.1=magedu.com
    DNS.2=harbor.magedu.net
    DNS.3=harbor.magedu.local
    EOF
    # Use v3.ext to issue the certificate for the Harbor host
    openssl x509 -req -sha512 -days 3650 -extfile v3.ext \
      -CA ca.crt -CAkey ca.key -CAcreateserial \
      -in magedu.net.csr -out magedu.net.crt

When these commands finish, the directory also contains ca.srl (the serial-number file written by -CAcreateserial) and the magedu.net.csr request file.
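Before handing magedu.net.crt to nginx, check two things: that it chains to ca.crt, and that its subjectAltName contains the name clients will use (harbor.magedu.net). The sketch below assumes OpenSSL 1.1.1 or later. `verify_chain` and `cert_has_san` are hypothetical helper names that wrap the standard `openssl verify` and `openssl x509 -text` commands.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the Harbor server certificate.

# Succeeds (and prints "<cert>: OK") if CERT is signed by CA_CERT.
verify_chain() {
  local ca_cert="$1" cert="$2"
  openssl verify -CAfile "$ca_cert" "$cert"
}

# Succeeds if CERT's subjectAltName lists DNS:<name>.
cert_has_san() {
  local cert="$1" name="$2"
  # -text prints the SANs as "DNS:a, DNS:b, ...". Requiring a comma or end of
  # line after the name stops a prefix such as "harbor.magedu" from matching
  # a longer entry. (Dots in NAME act as regex wildcards, harmless here.)
  openssl x509 -in "$cert" -noout -text | grep -Eq "DNS:${name}(,|\$)"
}
```

Usage, from /apps/harbor/certs: `verify_chain ca.crt magedu.net.crt` should print `magedu.net.crt: OK`, and `cert_has_san magedu.net.crt harbor.magedu.net` should exit with status 0.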

3.3 Install Harbor

Run the prepare script to check the Harbor configuration:

    ./prepare

Sample output:

    [root@docker-compose-harbor harbor]# ./prepare
    prepare base dir is set to /opt/harbor/harbor
    Generated configuration file: /config/portal/nginx.conf
    Generated configuration file: /config/log/logrotate.conf
    Generated configuration file: /config/log/rsyslog_docker.conf
    Generated configuration file: /config/nginx/nginx.conf
    Generated configuration file: /config/core/env
    Generated configuration file: /config/core/app.conf
    Generated configuration file: /config/registry/config.yml
    Generated configuration file: /config/registryctl/env
    Generated configuration file: /config/registryctl/config.yml
    Generated configuration file: /config/db/env
    Generated configuration file: /config/jobservice/env
    Generated configuration file: /config/jobservice/config.yml
    Generated and saved secret to file: /data/secret/keys/secretkey
    Successfully called func: create_root_cert
    Generated configuration file: /compose_location/docker-compose.yml
    Clean up the input dir
    [root@docker-compose-harbor harbor]#

Run install.sh to install Harbor, enabling the Trivy scanner and ChartMuseum:

    ./install.sh --with-trivy --with-chartmuseum

    # ./install.sh --with-trivy --with-chartmuseum

    [Step 0]: checking if docker is installed ...
    Note: docker version: 20.10.17

    [Step 1]: checking docker-compose is installed ...
    Note: docker-compose version: 1.28.6

    [Step 2]: loading Harbor images ...
    eb50d8bbd990: Loading layer [==================================================>]  7.668MB/7.668MB
    04e75300c772: Loading layer [==================================================>]  7.362MB/7.362MB
    e6830bb442bf: Loading layer [==================================================>]      1MB/1MB
    Loaded image: goharbor/harbor-portal:v2.5.3
    7e761f0c6325: Loading layer [==================================================>]  8.898MB/8.898MB
    bea2d99bdd9a: Loading layer [==================================================>]  3.584kB/3.584kB
    7635b8507a3f: Loading layer [==================================================>]   2.56kB/2.56kB
    5374b1e2b14a: Loading layer [==================================================>]  78.75MB/78.75MB
    3c111582434e: Loading layer [==================================================>]  5.632kB/5.632kB
    c634a4d49b0c: Loading layer [==================================================>]  102.9kB/102.9kB
    4edf106f0e4f: Loading layer [==================================================>]  15.87kB/15.87kB
    732b0f7f2241: Loading layer [==================================================>]  79.66MB/79.66MB
    8191a56b80ca: Loading layer [==================================================>]   2.56kB/2.56kB
    Loaded image: goharbor/harbor-core:v2.5.3
    005d5db57e06: Loading layer [==================================================>]  119.7MB/119.7MB
    0e3d87aacbc9: Loading layer [==================================================>]  3.072kB/3.072kB
    b4e26556ed44: Loading layer [==================================================>]   59.9kB/59.9kB
    55f587609a73: Loading layer [==================================================>]  61.95kB/61.95kB
    Loaded image: goharbor/redis-photon:v2.5.3
    Loaded image: goharbor/prepare:v2.5.3
    a86a26c0452a: Loading layer [==================================================>]  1.096MB/1.096MB
    1025dfd257d2: Loading layer [==================================================>]  5.889MB/5.889MB
    cd51e6d945dd: Loading layer [==================================================>]  168.8MB/168.8MB
    c68c45fe177d: Loading layer [==================================================>]  16.58MB/16.58MB
    fa18680022f9: Loading layer [==================================================>]  4.096kB/4.096kB
    9f470cfcecff: Loading layer [==================================================>]  6.144kB/6.144kB
    d9d256f40e6f: Loading layer [==================================================>]  3.072kB/3.072kB
    f02862555d46: Loading layer [==================================================>]  2.048kB/2.048kB
    8cc2449c3a33: Loading layer [==================================================>]   2.56kB/2.56kB
    53e7545b8c1b: Loading layer [==================================================>]   2.56kB/2.56kB
    62fbef76d294: Loading layer [==================================================>]   2.56kB/2.56kB
    7e2d721c6c91: Loading layer [==================================================>]  8.704kB/8.704kB
    Loaded image: goharbor/harbor-db:v2.5.3
    7b5e699985f2: Loading layer [==================================================>]  5.755MB/5.755MB
    17bb7303d841: Loading layer [==================================================>]  90.86MB/90.86MB
    146be4872a18: Loading layer [==================================================>]  3.072kB/3.072kB
    7f44df31c7df: Loading layer [==================================================>]  4.096kB/4.096kB
    ec5f15201a56: Loading layer [==================================================>]  91.65MB/91.65MB
    Loaded image: goharbor/chartmuseum-photon:v2.5.3
    25ed0962037c: Loading layer [==================================================>]  8.898MB/8.898MB
    96bf61ca4a6d: Loading layer [==================================================>]  3.584kB/3.584kB
    faed05a35aaa: Loading layer [==================================================>]   2.56kB/2.56kB
    6b2cce967e64: Loading layer [==================================================>]   90.8MB/90.8MB
    47d73d2ec8c4: Loading layer [==================================================>]  91.59MB/91.59MB
    Loaded image: goharbor/harbor-jobservice:v2.5.3
    1b8a5b56dd8f: Loading layer [==================================================>]  5.755MB/5.755MB
    ef6a1d16e324: Loading layer [==================================================>]  4.096kB/4.096kB
    60cf083bf2b3: Loading layer [==================================================>]  17.34MB/17.34MB
    54308a335bf1: Loading layer [==================================================>]  3.072kB/3.072kB
    b507f0c5f1e1: Loading layer [==================================================>]  29.17MB/29.17MB
    79b24972e581: Loading layer [==================================================>]  47.31MB/47.31MB
    Loaded image: goharbor/harbor-registryctl:v2.5.3
    35239a1e0d7a: Loading layer [==================================================>]  7.668MB/7.668MB
    Loaded image: goharbor/nginx-photon:v2.5.3
    e0776ca3d7c2: Loading layer [==================================================>]   5.75MB/5.75MB
    c90a80564f89: Loading layer [==================================================>]  8.543MB/8.543MB
    86c0504b8fcb: Loading layer [==================================================>]  14.47MB/14.47MB
    abde74115d1a: Loading layer [==================================================>]  29.29MB/29.29MB
    3ad37faaa958: Loading layer [==================================================>]  22.02kB/22.02kB
    1d3c37158629: Loading layer [==================================================>]  14.47MB/14.47MB
    Loaded image: goharbor/notary-signer-photon:v2.5.3
    fa27c9d81dc3: Loading layer [==================================================>]    127MB/127MB
    9ca66cb9252f: Loading layer [==================================================>]  3.584kB/3.584kB
    09ce0e15f5ba: Loading layer [==================================================>]  3.072kB/3.072kB
    d0ba49c5841f: Loading layer [==================================================>]   2.56kB/2.56kB
    04623512f2e5: Loading layer [==================================================>]  3.072kB/3.072kB
    083acf89058c: Loading layer [==================================================>]  3.584kB/3.584kB
    5f2000f524c8: Loading layer [==================================================>]  20.99kB/20.99kB
    Loaded image: goharbor/harbor-log:v2.5.3
    425045210126: Loading layer [==================================================>]  8.898MB/8.898MB
    a0ef3ff89e82: Loading layer [==================================================>]  21.05MB/21.05MB
    7facb153a2bf: Loading layer [==================================================>]  4.608kB/4.608kB
    ca36c2356dc0: Loading layer [==================================================>]  21.84MB/21.84MB
    Loaded image: goharbor/harbor-exporter:v2.5.3
    abd4886cf446: Loading layer [==================================================>]  5.755MB/5.755MB
    a662294ced4c: Loading layer [==================================================>]  4.096kB/4.096kB
    e1e02d95f798: Loading layer [==================================================>]  3.072kB/3.072kB
    54535cb3135b: Loading layer [==================================================>]  17.34MB/17.34MB
    a8556cd12eb5: Loading layer [==================================================>]  18.13MB/18.13MB
    Loaded image: goharbor/registry-photon:v2.5.3
    01427a3d3d67: Loading layer [==================================================>]   5.75MB/5.75MB
    5cd7cb12cabb: Loading layer [==================================================>]  8.543MB/8.543MB
    564dcad1be91: Loading layer [==================================================>]  15.88MB/15.88MB
    b3020f432a85: Loading layer [==================================================>]  29.29MB/29.29MB
    05bbf70fd214: Loading layer [==================================================>]  22.02kB/22.02kB
    7cb2819f6977: Loading layer [==================================================>]  15.88MB/15.88MB
    Loaded image: goharbor/notary-server-photon:v2.5.3
    8cc02d219629: Loading layer [==================================================>]  6.283MB/6.283MB
    09856854b73c: Loading layer [==================================================>]  4.096kB/4.096kB
    c53bbce8e1c4: Loading layer [==================================================>]  3.072kB/3.072kB
    ca0011850458: Loading layer [==================================================>]  91.21MB/91.21MB
    0e7337dca995: Loading layer [==================================================>]  12.65MB/12.65MB
    c1e6b3a22dfd: Loading layer [==================================================>]  104.6MB/104.6MB
    Loaded image: goharbor/trivy-adapter-photon:v2.5.3

    [Step 3]: preparing environment ...

    [Step 4]: preparing harbor configs ...
    prepare base dir is set to /apps/harbor
    Clearing the configuration file: /config/core/app.conf
    Clearing the configuration file: /config/core/env
    Clearing the configuration file: /config/registry/config.yml
    Clearing the configuration file: /config/registry/passwd
    Clearing the configuration file: /config/portal/nginx.conf
    Clearing the configuration file: /config/log/logrotate.conf
    Clearing the configuration file: /config/log/rsyslog_docker.conf
    Clearing the configuration file: /config/nginx/nginx.conf
    Clearing the configuration file: /config/db/env
    Clearing the configuration file: /config/jobservice/config.yml
    Clearing the configuration file: /config/jobservice/env
    Clearing the configuration file: /config/registryctl/config.yml
    Clearing the configuration file: /config/registryctl/env
    Generated configuration file: /config/portal/nginx.conf
    Generated configuration file: /config/log/logrotate.conf
    Generated configuration file: /config/log/rsyslog_docker.conf
    Generated configuration file: /config/nginx/nginx.conf
    Generated configuration file: /config/core/env
    Generated configuration file: /config/core/app.conf
    Generated configuration file: /config/registry/config.yml
    Generated configuration file: /config/registryctl/env
    Generated configuration file: /config/registryctl/config.yml
    Generated configuration file: /config/db/env
    Generated configuration file: /config/jobservice/env
    Generated configuration file: /config/jobservice/config.yml
    loaded secret from file: /data/secret/keys/secretkey
    Generated configuration file: /config/trivy-adapter/env
    Generated configuration file: /config/chartserver/env
    Generated configuration file: /compose_location/docker-compose.yml
    Clean up the input dir

    [Step 5]: starting Harbor ...
    Creating network "harbor_harbor" with the default driver
    Creating network "harbor_harbor-chartmuseum" with the default driver
    Creating harbor-log ... done
    Creating registry ... done
    Creating harbor-db ... done
    Creating chartmuseum ... done
    Creating registryctl ... done
    Creating harbor-portal ... done
    Creating redis ... done
    Creating trivy-adapter ... done
    Creating harbor-core ... done
    Creating harbor-jobservice ... done
    Creating nginx ... done
    ✔ ----Harbor has been installed and started successfully.----

    root@easzlab-k8s-harbor-01:/apps/harbor# docker images
    REPOSITORY                      TAG       IMAGE ID       CREATED        SIZE
    goharbor/harbor-exporter        v2.5.3    d9a8cfa37cf8   3 months ago   87.2MB
    goharbor/chartmuseum-photon     v2.5.3    788b207156ad   3 months ago   225MB
    goharbor/redis-photon           v2.5.3    5dc5331f3de8   3 months ago   154MB
    goharbor/trivy-adapter-photon   v2.5.3    27798821348a   3 months ago   251MB
    goharbor/notary-server-photon   v2.5.3    c686413b72ce   3 months ago   112MB
    goharbor/notary-signer-photon   v2.5.3    a3bc1def3f94   3 months ago   109MB
    goharbor/harbor-registryctl     v2.5.3    942de6829d43   3 months ago   136MB
    goharbor/registry-photon        v2.5.3    fb1278854b91   3 months ago   77.9MB
    goharbor/nginx-photon           v2.5.3    91877cbc147a   3 months ago   44.3MB
    goharbor/harbor-log             v2.5.3    ca36fb3b68a6   3 months ago   161MB
    goharbor/harbor-jobservice      v2.5.3    75e6a7496590   3 months ago   227MB
    goharbor/harbor-core            v2.5.3    93a775677473   3 months ago   203MB
    goharbor/harbor-portal          v2.5.3    d78f9bbad9ee   3 months ago   52.6MB
    goharbor/harbor-db              v2.5.3    bd50ae1eccdf   3 months ago   224MB
    goharbor/prepare                v2.5.3    15102b9ebde6   3 months ago   166MB
    root@easzlab-k8s-harbor-01:/apps/harbor# docker-compose ps -a
          Name                     Command                  State                 Ports
    ------------------------------------------------------------------------------------------------------------------------------------------------
    harbor-core         /harbor/entrypoint.sh            Up (healthy)
    harbor-db           /docker-entrypoint.sh 96 13      Up (healthy)
    harbor-jobservice   /harbor/entrypoint.sh            Up (healthy)
    harbor-log          /bin/sh -c /usr/local/bin/ ...   Up (healthy)   127.0.0.1:1514->10514/tcp
    harbor-portal       nginx -g daemon off;             Up (healthy)
    nginx               nginx -g daemon off;             Up (healthy)   0.0.0.0:80->8080/tcp,:::80->8080/tcp, 0.0.0.0:443->8443/tcp,:::443->8443/tcp
    redis               redis-server /etc/redis.conf     Up (healthy)
    registry            /home/harbor/entrypoint.sh       Up (healthy)
    registryctl         /home/harbor/start.sh            Up (healthy)
    root@easzlab-k8s-harbor-01:/apps/harbor# netstat -tnlp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
    tcp        0      0 127.0.0.1:34179         0.0.0.0:*               LISTEN      830/containerd
    tcp        0      0 127.0.0.1:1514          0.0.0.0:*               LISTEN      1381/docker-proxy
    tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      1/init
    tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      1425/docker-proxy
    tcp        0      0 127.0.0.53:53           0.0.0.0:*               LISTEN      715/systemd-resolve
    tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      930/sshd: /usr/sbin
    tcp        0      0 127.0.0.1:6010          0.0.0.0:*               LISTEN      962550/sshd: root@p
    tcp        0      0 0.0.0.0:443             0.0.0.0:*               LISTEN      1402/docker-proxy
    tcp6       0      0 :::111                  :::*                    LISTEN      1/init
    tcp6       0      0 :::80                   :::*                    LISTEN      1432/docker-proxy
    tcp6       0      0 :::22                   :::*                    LISTEN      930/sshd: /usr/sbin
    tcp6       0      0 ::1:6010                :::*                    LISTEN      962550/sshd: root@p
    tcp6       0      0 :::443                  :::*                    LISTEN      1409/docker-proxy
    root@easzlab-k8s-harbor-01:/apps/harbor#

3.4 Configure an nginx reverse proxy for Harbor

Install nginx:

    # Build and install nginx from source
    [root@easzlab-k8s-harbor-nginx-01 ~]# wget http://nginx.org/download/nginx-1.20.2.tar.gz
    [root@easzlab-k8s-harbor-nginx-01 ~]# mkdir -p /apps/nginx
    [root@easzlab-k8s-harbor-nginx-01 ~]# tar -xf nginx-1.20.2.tar.gz
    [root@easzlab-k8s-harbor-nginx-01 ~]# cd nginx-1.20.2/
    [root@easzlab-k8s-harbor-nginx-01 nginx-1.20.2]# ls
    CHANGES  CHANGES.ru  LICENSE  README  auto  conf  configure  contrib  html  man  src
    # Install the build dependencies
    [root@easzlab-k8s-harbor-nginx-01 nginx-1.20.2]# apt update && apt -y install gcc make libpcre3 libpcre3-dev openssl libssl-dev zlib1g-dev
    [root@easzlab-k8s-harbor-nginx-01 nginx-1.20.2]# ./configure --prefix=/apps/nginx \
      --with-http_ssl_module \
      --with-http_v2_module \
      --with-http_realip_module \
      --with-http_stub_status_module \
      --with-http_gzip_static_module \
      --with-pcre \
      --with-stream \
      --with-stream_ssl_module \
      --with-stream_realip_module
    [root@easzlab-k8s-harbor-nginx-01 nginx-1.20.2]# make && make install

# Copy the certificate issued for Harbor to the nginx proxy node (the target directory must exist first)
[root@easzlab-k8s-harbor-nginx-01 ~]# mkdir -p /apps/nginx/certs
root@easzlab-k8s-harbor-01:/apps/harbor/certs# scp magedu.net.crt magedu.net.key root@172.16.88.190:/apps/nginx/certs
[root@easzlab-k8s-harbor-nginx-01 ~]# ll -h /apps/nginx/certs
total 20K
drwxr-xr-x 2 root root 4.0K Oct 15 16:00 ./
drwxr-xr-x 7 1001 1001 4.0K Oct 15 15:58 ../
-rw-r--r-- 1 root root 2.1K Oct 15 16:00 magedu.net.crt
-rw------- 1 root root 3.2K Oct 15 16:00 magedu.net.key
[root@easzlab-k8s-harbor-nginx-01 ~]#
[root@easzlab-k8s-harbor-nginx-01 ~]# egrep -v "^$|^#|^[[:space:]]+#" /apps/nginx/conf/nginx.conf
worker_processes  1;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
    client_max_body_size 2000m;  # largest request body, i.e. largest single upload to Harbor: about 2 GB
    server {
        listen       80;
        listen       443 ssl;
        server_name  harbor.magedu.net;
        ssl_certificate /apps/nginx/certs/magedu.net.crt;
        ssl_certificate_key /apps/nginx/certs/magedu.net.key;
        ssl_session_cache shared:sslcache:20m;
        ssl_session_timeout 10m;
        location / {
            proxy_pass http://172.16.88.166;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}
[root@easzlab-k8s-harbor-nginx-01 conf]# /apps/nginx/sbin/nginx -t
nginx: the configuration file /apps/nginx/conf/nginx.conf syntax is ok
nginx: configuration file /apps/nginx/conf/nginx.conf test is successful
[root@easzlab-k8s-harbor-nginx-01 conf]# /apps/nginx/sbin/nginx
[root@easzlab-k8s-harbor-nginx-01 conf]# netstat -tnlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 127.0.0.53:53           0.0.0.0:*               LISTEN      629/systemd-resolve
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      786/sshd: /usr/sbin
tcp        0      0 0.0.0.0:443             0.0.0.0:*               LISTEN      9702/nginx: master
tcp        0      0 127.0.0.1:6011          0.0.0.0:*               LISTEN      1231/sshd: root@pts
tcp        0      0 127.0.0.1:6012          0.0.0.0:*               LISTEN      8978/sshd: root@pts
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      9702/nginx: master
tcp6       0      0 :::22                   :::*                    LISTEN      786/sshd: /usr/sbin
tcp6       0      0 ::1:6011                :::*                    LISTEN      1231/sshd: root@pts
tcp6       0      0 ::1:6012                :::*                    LISTEN      8978/sshd: root@pts
[root@easzlab-k8s-harbor-nginx-01 conf]#
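The server block above can also be rendered from a few variables, which makes it easier to reuse for another domain or backend. This is only a sketch. The function name `render_harbor_proxy` is hypothetical, and the two `proxy_set_header` lines are a commonly used addition that is *not* part of the configuration above. Drop them to reproduce the original exactly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an nginx server block that terminates TLS for a
# Harbor domain and proxies plain HTTP to the Harbor backend.
# $1 = domain, $2 = backend address, $3 = cert directory, $4 = cert base name
render_harbor_proxy() {
  local domain=$1 backend=$2 certdir=$3 name=$4
  cat <<EOF
server {
    listen 80;
    listen 443 ssl;
    server_name ${domain};
    ssl_certificate ${certdir}/${name}.crt;
    ssl_certificate_key ${certdir}/${name}.key;
    ssl_session_cache shared:sslcache:20m;
    ssl_session_timeout 10m;
    location / {
        # assumption: forward the original Host and scheme to the backend
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-Proto \$scheme;
        proxy_pass http://${backend};
    }
}
EOF
}
```

Usage: `render_harbor_proxy harbor.magedu.net 172.16.88.166 /apps/nginx/certs magedu.net`. Paste the output inside the `http {}` block, then run `/apps/nginx/sbin/nginx -t` before reloading.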

Configure local name resolution (add this entry on every cluster node)
root@easzlab-deploy:~# ansible 'vm' -m shell -a "echo '172.16.88.190 easzlab-k8s-harbor-nginx-01 harbor.magedu.net' >> /etc/hosts"
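The `echo ... >> /etc/hosts` command adds a duplicate line every time it is re-run. The sketch below is a minimal idempotent variant with a hypothetical function name. It appends the entry only when that exact line is not already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a hosts line only if that exact line is absent.
# $1 = hosts file (normally /etc/hosts), $2 = full line, e.g. "IP name1 name2"
add_hosts_entry() {
  local file=$1 entry=$2
  # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
  if ! grep -qxF -- "$entry" "$file" 2>/dev/null; then
    printf '%s\n' "$entry" >> "$file"
  fi
}
```

With Ansible itself, the `lineinfile` module gives the same idempotency without a helper:

`ansible 'vm' -m lineinfile -a "path=/etc/hosts line='172.16.88.190 easzlab-k8s-harbor-nginx-01 harbor.magedu.net'"`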

Test the login from a VM (the deploy host)
root@easzlab-deploy:~# mkdir /etc/docker/certs.d/harbor.magedu.net -p
root@easzlab-deploy:~# echo "172.16.88.190 easzlab-k8s-harbor-nginx-01 harbor.magedu.net" >> /etc/hosts
root@easzlab-k8s-harbor-01:~# scp magedu.net.crt root@172.16.88.150:/etc/docker/certs.d/harbor.magedu.net
root@easzlab-deploy:~# docker login harbor.magedu.net
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
root@easzlab-deploy:~#

Edit the hosts file on the local Windows machine (C:\Windows\System32\drivers\etc\hosts)

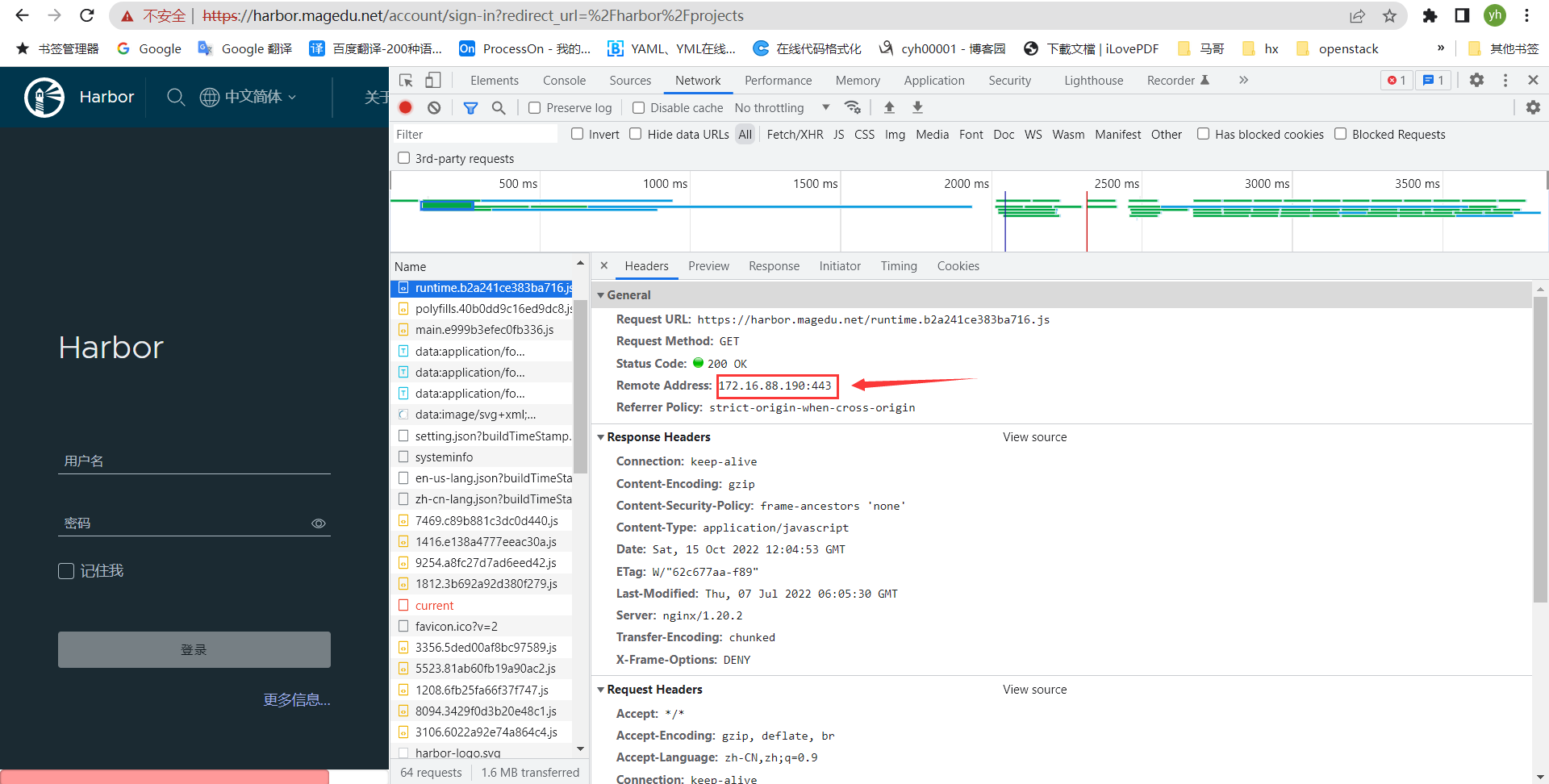

Verify the Harbor nginx reverse proxy

4. Install the k8s cluster

4.1 Download and install easzlab

Generate a local SSH key pair: ssh-keygen

Push the public key to the other nodes
cat ssh-key-push.sh
#!/bin/bash
for i in {151..165}; do
  sshpass -p 'redhat' ssh-copy-id -o StrictHostKeyChecking=no -i /root/.ssh/id_rsa -p 22 root@172.16.88.$i
done
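A slightly more defensive version of the push script is sketched below. It is a rewrite based on assumptions, not the author's script. It creates the key non-interactively only if the key is missing, and it reads the password from the `SSHPASS` environment variable (`sshpass -e`) instead of putting it on the command line. `push_keys` and the `KEY`/`DRY_RUN` variables are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical rewrite of ssh-key-push.sh.
# $1 = first three octets, $2 = first host number, $3 = last host number
# KEY = private key path (default /root/.ssh/id_rsa); DRY_RUN=1 only prints commands.
# The password is expected in $SSHPASS (read by `sshpass -e`).
push_keys() {
  local prefix=$1 first=$2 last=$3 i
  local key=${KEY:-/root/.ssh/id_rsa} run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  # create an RSA key without a passphrase only when none exists yet
  [ -f "$key" ] || $run ssh-keygen -t rsa -N '' -f "$key"
  for ((i = first; i <= last; i++)); do
    $run sshpass -e ssh-copy-id -o StrictHostKeyChecking=no -i "$key.pub" -p 22 "root@${prefix}.${i}"
  done
}
```

Usage: `export SSHPASS='redhat'; push_keys 172.16.88 151 165`. Run it with `DRY_RUN=1` first to review the generated commands.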

Download the ezdown helper script (this example uses kubeasz 3.3.1):
export release=3.3.1
wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown
chmod +x ./ezdown

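Download the kubeasz code, binaries and default container images (run ./ezdown without arguments to see all of its options):
# inside mainland China
./ezdown -D
# outside mainland China
./ezdown -D -m standard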

4.2 Run ezdown

root@easzlab-deploy:~# ./ezdown -D

Installation log

root@easzlab-deploy:~# ./ezdown -D 2022-07-26 13:59:10 INFO Action begin: download_all 2022-07-26 13:59:10 INFO downloading docker binaries, version 20.10.16 --2022-07-26 13:59:10-- https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/static/stable/x86_64/docker-20.10.16.tgz Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.15.130 Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.15.130|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 64969189 (62M) [application/octet-stream] Saving to: ‘docker-20.10.16.tgz’ docker-20.10.16.tgz 100%[===================================================================================================================>] 61.96M 503KB/s in 2m 37s 2022-07-26 14:01:48 (404 KB/s) - ‘docker-20.10.16.tgz’ saved [64969189/64969189] Unit docker.service could not be found. 2022-07-26 14:01:52 DEBUG generate docker service file 2022-07-26 14:01:52 DEBUG generate docker config: /etc/docker/daemon.json 2022-07-26 14:01:52 DEBUG prepare register mirror for CN 2022-07-26 14:01:52 DEBUG enable and start docker Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /etc/systemd/system/docker.service. 
2022-07-26 14:01:57 INFO downloading kubeasz: 3.3.1 2022-07-26 14:01:57 DEBUG run a temporary container Unable to find image 'easzlab/kubeasz:3.3.1' locally 3.3.1: Pulling from easzlab/kubeasz 540db60ca938: Downloading d037ddac5dde: Download complete 05d0edf52df4: Download complete 54d94e388fb8: Download complete b25964b87dc1: Download complete aedfadb13329: Download complete 8f6f8140f32b: Download complete 3.3.1: Pulling from easzlab/kubeasz 540db60ca938: Pull complete d037ddac5dde: Pull complete 05d0edf52df4: Pull complete 54d94e388fb8: Pull complete b25964b87dc1: Pull complete aedfadb13329: Pull complete 8f6f8140f32b: Pull complete Digest: sha256:c0cfc314c4caea45a7582a5e03b090901177c4c48210c3df8b209f5b03045f70 Status: Downloaded newer image for easzlab/kubeasz:3.3.1 6f732877c0c3e5be0eb9f451819023fc17b93eb4b2e1c63617c9e18418fbd69e 2022-07-26 14:03:17 DEBUG cp kubeasz code from the temporary container 2022-07-26 14:03:18 DEBUG stop&remove temporary container temp_easz 2022-07-26 14:03:18 INFO downloading kubernetes: v1.24.2 binaries v1.24.2: Pulling from easzlab/kubeasz-k8s-bin 1b7ca6aea1dd: Pull complete d2339c028cfd: Pull complete Digest: sha256:1a41943faa18d7a69e243f4cd9b7b6f1cd7268be7c6358587170c3d3e9e1a34c Status: Downloaded newer image for easzlab/kubeasz-k8s-bin:v1.24.2 docker.io/easzlab/kubeasz-k8s-bin:v1.24.2 2022-07-26 14:04:52 DEBUG run a temporary container a36f7611827da95de06b219b439110444d7dd7503c41f57a2d4c73b5a999f75d 2022-07-26 14:04:53 DEBUG cp k8s binaries 2022-07-26 14:04:57 DEBUG stop&remove temporary container temp_k8s_bin 2022-07-26 14:04:59 INFO downloading extral binaries kubeasz-ext-bin:1.2.0 1.2.0: Pulling from easzlab/kubeasz-ext-bin 1b7ca6aea1dd: Already exists 4a494a9b7425: Pull complete b11479c0b3c6: Pull complete 0351e344774e: Pull complete 1c1e5d29db2d: Pull complete Digest: sha256:a40f30978cca518503811db70ec7734b98ab4378a5c06546bf22de37900f252d Status: Downloaded newer image for easzlab/kubeasz-ext-bin:1.2.0 
docker.io/easzlab/kubeasz-ext-bin:1.2.0 2022-07-26 14:06:40 DEBUG run a temporary container 9ff8cb304bfeb6bead68c5e65fc7bccea68ccd23741fd3ebd4196197fb2e19ad 2022-07-26 14:06:41 DEBUG cp extral binaries 2022-07-26 14:06:45 DEBUG stop&remove temporary container temp_ext_bin 2: Pulling from library/registry 79e9f2f55bf5: Pull complete 0d96da54f60b: Pull complete 5b27040df4a2: Pull complete e2ead8259a04: Pull complete 3790aef225b9: Pull complete Digest: sha256:169211e20e2f2d5d115674681eb79d21a217b296b43374b8e39f97fcf866b375 Status: Downloaded newer image for registry:2 docker.io/library/registry:2 2022-07-26 14:07:20 INFO start local registry ... 2b7c1f661614c2947fcd0072719a9300e9da4a2ab8a70583be7725dd8182f8ec 2022-07-26 14:07:21 INFO download default images, then upload to the local registry v3.19.4: Pulling from calico/cni f3894d312a4e: Pull complete 8244094b678e: Pull complete 45b915a54b66: Pull complete Digest: sha256:a866562105d3c18486879d313830d8b4918e8ba25ccd23b7dd84d65093d03c62 Status: Downloaded newer image for calico/cni:v3.19.4 docker.io/calico/cni:v3.19.4 v3.19.4: Pulling from calico/pod2daemon-flexvol 99aa522a8a66: Pull complete beb35b03ed9b: Pull complete 8c61f8de6c67: Pull complete 622403455de3: Pull complete a26eec45c530: Pull complete b02e2914a61e: Pull complete 91f16e6ede78: Pull complete Digest: sha256:d698fbda7a2e895ad45b478ab0b5fdd572cd80629e558dbfcf6e401c6ee6275e Status: Downloaded newer image for calico/pod2daemon-flexvol:v3.19.4 docker.io/calico/pod2daemon-flexvol:v3.19.4 v3.19.4: Pulling from calico/kube-controllers 0a1506fb14ea: Pull complete 6abc1e849f8f: Pull complete 0cfea6002588: Pull complete 91d785239eb0: Pull complete Digest: sha256:b15521e60d8bb04a501fe0ef4bf791fc8c164a175dd49a2328fb3f2b89838a68 Status: Downloaded newer image for calico/kube-controllers:v3.19.4 docker.io/calico/kube-controllers:v3.19.4 v3.19.4: Pulling from calico/node 7563b432e373: Pull complete f1ad2d4094a4: Pull complete Digest: 
sha256:df027832d91944516046f6baf3f6e74c5130046d2c56f88dc96296681771bc6a Status: Downloaded newer image for calico/node:v3.19.4 docker.io/calico/node:v3.19.4 The push refers to repository [easzlab.io.local:5000/calico/cni] e190560973d0: Pushed 237eb7dff52b: Pushed 7bdb7ca6a5a4: Pushed v3.19.4: digest: sha256:9e1da653e987232cf18df3eb6967c9555a1235d212189b3e4c26f6f9d1601297 size: 946 The push refers to repository [easzlab.io.local:5000/calico/pod2daemon-flexvol] 0312eef4fc3a: Pushed aeeffe0f6b8b: Pushed 672e236e33e9: Pushed e5816bd252f3: Pushed e29ee4bf6f3f: Pushed 9dd9977906c2: Pushed cdc78476cc38: Pushed v3.19.4: digest: sha256:152415638f6cc10fcbc2095069c5286df262c591422fb2608a14c7eee554c259 size: 1788 The push refers to repository [easzlab.io.local:5000/calico/kube-controllers] 568d0e1941e4: Pushed 7094539af214: Pushed 44bbcee30afb: Pushed e47767779496: Pushed v3.19.4: digest: sha256:214b5384028bac797ff16531d71d28f7d658ef3a26837db6bf5466bc5f113bfd size: 1155 The push refers to repository [easzlab.io.local:5000/calico/node] f03078b73155: Pushed 14ec913b26f5: Pushed v3.19.4: digest: sha256:393ff601623e04e685add605920e6c984a1ac74e23cc4232cec7f5013ba8caad size: 737 1.9.3: Pulling from coredns/coredns d92bdee79785: Pull complete f2401d57212f: Pull complete Digest: sha256:8e352a029d304ca7431c6507b56800636c321cb52289686a581ab70aaa8a2e2a Status: Downloaded newer image for coredns/coredns:1.9.3 docker.io/coredns/coredns:1.9.3 The push refers to repository [easzlab.io.local:5000/coredns/coredns] df1818f16337: Pushed 256bc5c338a6: Pushed 1.9.3: digest: sha256:bdb36ee882c13135669cfc2bb91c808a33926ad1a411fee07bd2dc344bb8f782 size: 739 1.21.1: Pulling from easzlab/k8s-dns-node-cache 20b09fbd3037: Downloading af833073aa95: Download complete 1.21.1: Pulling from easzlab/k8s-dns-node-cache 20b09fbd3037: Pull complete af833073aa95: Pull complete Digest: sha256:04c4f6b1f2f2f72441dadcea1c8eec611af4d963315187ceb04b939d1956782f Status: Downloaded newer image for 
easzlab/k8s-dns-node-cache:1.21.1 docker.io/easzlab/k8s-dns-node-cache:1.21.1 The push refers to repository [easzlab.io.local:5000/easzlab/k8s-dns-node-cache] 8391095a8344: Pushed 87b6a930c8d0: Pushed 1.21.1: digest: sha256:04c4f6b1f2f2f72441dadcea1c8eec611af4d963315187ceb04b939d1956782f size: 741 v2.5.1: Pulling from kubernetesui/dashboard d1d01ae59b08: Pull complete a25bff2a339f: Pull complete Digest: sha256:cc746e7a0b1eec0db01cbabbb6386b23d7af97e79fa9e36bb883a95b7eb96fe2 Status: Downloaded newer image for kubernetesui/dashboard:v2.5.1 docker.io/kubernetesui/dashboard:v2.5.1 The push refers to repository [easzlab.io.local:5000/kubernetesui/dashboard] e98b3744f758: Pushed dab46c9f5775: Pushed v2.5.1: digest: sha256:0c82e96241aa683fe2f8fbdf43530e22863ac8bfaddb0d7d30b4e3a639d4e8c5 size: 736 v1.0.8: Pulling from kubernetesui/metrics-scraper 978be80e3ee3: Pull complete 5866d2c04d96: Pull complete Digest: sha256:76049887f07a0476dc93efc2d3569b9529bf982b22d29f356092ce206e98765c Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.8 docker.io/kubernetesui/metrics-scraper:v1.0.8 The push refers to repository [easzlab.io.local:5000/kubernetesui/metrics-scraper] bcec7eb9e567: Pushed d01384fea991: Pushed v1.0.8: digest: sha256:43227e8286fd379ee0415a5e2156a9439c4056807e3caa38e1dd413b0644807a size: 736 v0.5.2: Pulling from easzlab/metrics-server e8614d09b7be: Pull complete 334ef31a5c43: Pull complete Digest: sha256:6879d1d3e42c06fa383aed4988fc8f39901d46fb29d25a5b41c88f9d4b6854b1 Status: Downloaded newer image for easzlab/metrics-server:v0.5.2 docker.io/easzlab/metrics-server:v0.5.2 The push refers to repository [easzlab.io.local:5000/easzlab/metrics-server] b2839a50be1a: Pushed 6d75f23be3dd: Pushed v0.5.2: digest: sha256:6879d1d3e42c06fa383aed4988fc8f39901d46fb29d25a5b41c88f9d4b6854b1 size: 739 3.7: Pulling from easzlab/pause 7582c2cc65ef: Pull complete Digest: sha256:445a99db22e9add9bfb15ddb1980861a329e5dff5c88d7eec9cbf08b6b2f4eb1 Status: Downloaded newer image 
for easzlab/pause:3.7 docker.io/easzlab/pause:3.7 The push refers to repository [easzlab.io.local:5000/easzlab/pause] 1cb555415fd3: Pushed 3.7: digest: sha256:445a99db22e9add9bfb15ddb1980861a329e5dff5c88d7eec9cbf08b6b2f4eb1 size: 526 3.3.1: Pulling from easzlab/kubeasz Digest: sha256:c0cfc314c4caea45a7582a5e03b090901177c4c48210c3df8b209f5b03045f70 Status: Image is up to date for easzlab/kubeasz:3.3.1 docker.io/easzlab/kubeasz:3.3.1 2022-07-26 14:20:38 INFO Action successed: download_all root@easzlab-deploy:~# root@easzlab-deploy:~# root@easzlab-deploy:~# docker images REPOSITORY TAG IMAGE ID CREATED SIZE easzlab/kubeasz 3.3.1 0c40d5a6cf5d 3 weeks ago 164MB easzlab/kubeasz-k8s-bin v1.24.2 9f87128471fd 3 weeks ago 498MB easzlab/kubeasz-ext-bin 1.2.0 e0ac3a77cea7 4 weeks ago 485MB kubernetesui/metrics-scraper v1.0.8 115053965e86 8 weeks ago 43.8MB easzlab.io.local:5000/kubernetesui/metrics-scraper v1.0.8 115053965e86 8 weeks ago 43.8MB coredns/coredns 1.9.3 5185b96f0bec 8 weeks ago 48.8MB easzlab.io.local:5000/coredns/coredns 1.9.3 5185b96f0bec 8 weeks ago 48.8MB easzlab/pause 3.7 221177c6082a 4 months ago 711kB easzlab.io.local:5000/easzlab/pause 3.7 221177c6082a 4 months ago 711kB kubernetesui/dashboard v2.5.1 7fff914c4a61 4 months ago 243MB easzlab.io.local:5000/kubernetesui/dashboard v2.5.1 7fff914c4a61 4 months ago 243MB calico/node v3.19.4 172a034f7297 5 months ago 155MB easzlab.io.local:5000/calico/node v3.19.4 172a034f7297 5 months ago 155MB calico/pod2daemon-flexvol v3.19.4 054ddbbe5975 5 months ago 20MB easzlab.io.local:5000/calico/pod2daemon-flexvol v3.19.4 054ddbbe5975 5 months ago 20MB easzlab.io.local:5000/calico/cni v3.19.4 84358b137f83 5 months ago 146MB calico/cni v3.19.4 84358b137f83 5 months ago 146MB calico/kube-controllers v3.19.4 0db60d880d2d 5 months ago 60.6MB easzlab.io.local:5000/calico/kube-controllers v3.19.4 0db60d880d2d 5 months ago 60.6MB easzlab/metrics-server v0.5.2 f73640fb5061 8 months ago 64.3MB 
easzlab.io.local:5000/easzlab/metrics-server v0.5.2 f73640fb5061 8 months ago 64.3MB registry 2 b8604a3fe854 8 months ago 26.2MB easzlab/k8s-dns-node-cache 1.21.1 5bae806f8f12 10 months ago 104MB easzlab.io.local:5000/easzlab/k8s-dns-node-cache 1.21.1 5bae806f8f12 10 months ago 104MB root@easzlab-deploy:~#

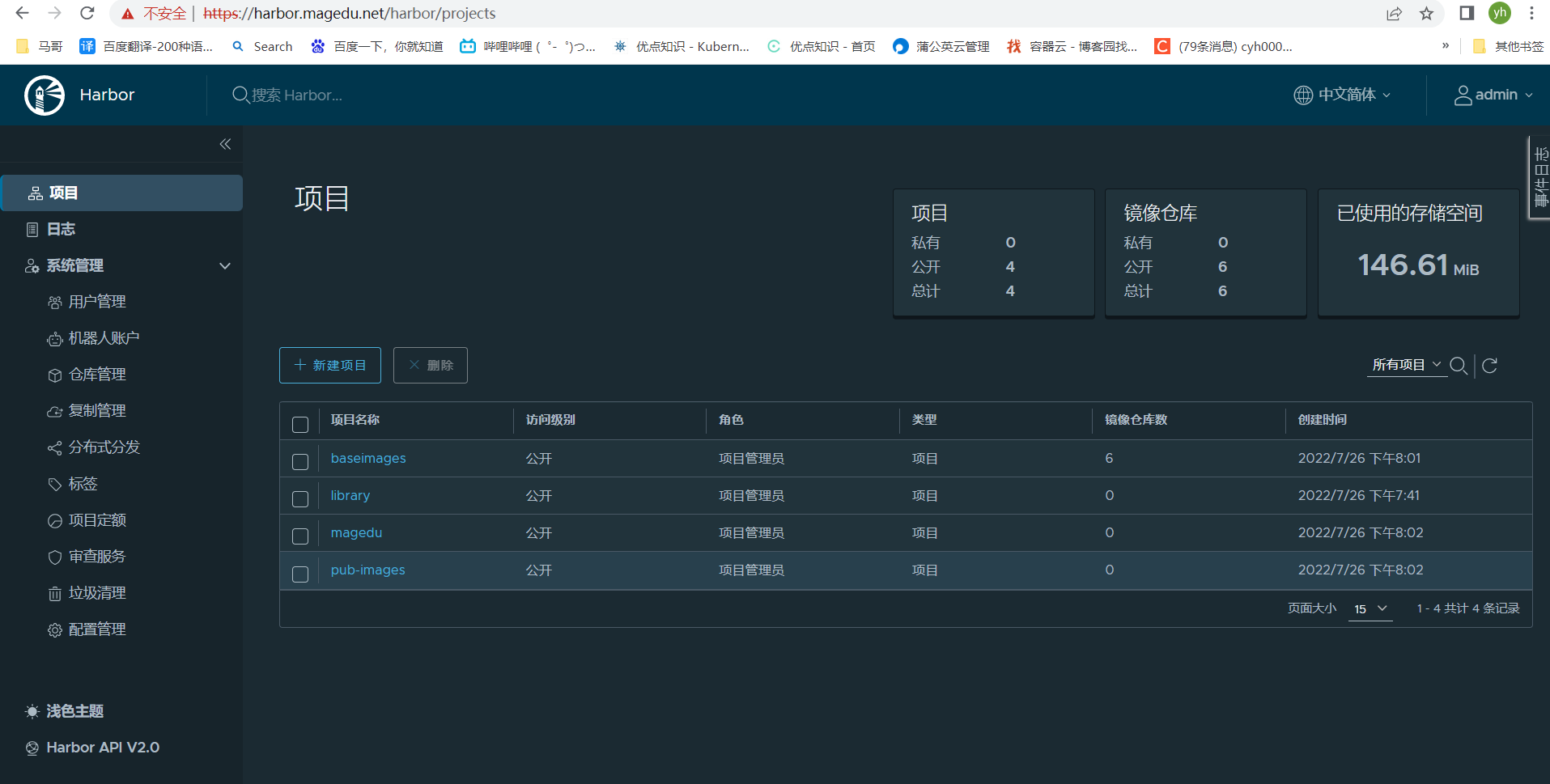

Re-tag the following images and push them to the Harbor registry (the baseimages project must already exist in Harbor)

docker tag easzlab.io.local:5000/coredns/coredns:1.9.3 harbor.magedu.net/baseimages/coredns:1.9.3
docker tag easzlab.io.local:5000/easzlab/pause:3.7 harbor.magedu.net/baseimages/pause:3.7
docker tag easzlab.io.local:5000/calico/node:v3.19.4 harbor.magedu.net/baseimages/calico/node:v3.19.4
docker tag easzlab.io.local:5000/calico/pod2daemon-flexvol:v3.19.4 harbor.magedu.net/baseimages/calico/pod2daemon-flexvol:v3.19.4
docker tag easzlab.io.local:5000/calico/cni:v3.19.4 harbor.magedu.net/baseimages/calico/cni:v3.19.4
docker tag easzlab.io.local:5000/calico/kube-controllers:v3.19.4 harbor.magedu.net/baseimages/calico/kube-controllers:v3.19.4
docker push harbor.magedu.net/baseimages/coredns:1.9.3
docker push harbor.magedu.net/baseimages/pause:3.7
docker push harbor.magedu.net/baseimages/calico/node:v3.19.4
docker push harbor.magedu.net/baseimages/calico/pod2daemon-flexvol:v3.19.4
docker push harbor.magedu.net/baseimages/calico/cni:v3.19.4
docker push harbor.magedu.net/baseimages/calico/kube-controllers:v3.19.4
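The twelve commands above follow one pattern: tag `<source>` as `<target>`, then push `<target>`. The source-to-target mapping is not uniform (coredns and pause lose their namespace, calico keeps it), so the sketch below keeps the explicit pairs from above in a list instead of deriving them. `IMAGES`, `retag_and_push` and `DRY_RUN` are hypothetical names. It assumes `docker login harbor.magedu.net` has already succeeded.

```shell
#!/usr/bin/env bash
# Source/target pairs copied from the commands above.
IMAGES='
easzlab.io.local:5000/coredns/coredns:1.9.3 harbor.magedu.net/baseimages/coredns:1.9.3
easzlab.io.local:5000/easzlab/pause:3.7 harbor.magedu.net/baseimages/pause:3.7
easzlab.io.local:5000/calico/node:v3.19.4 harbor.magedu.net/baseimages/calico/node:v3.19.4
easzlab.io.local:5000/calico/pod2daemon-flexvol:v3.19.4 harbor.magedu.net/baseimages/calico/pod2daemon-flexvol:v3.19.4
easzlab.io.local:5000/calico/cni:v3.19.4 harbor.magedu.net/baseimages/calico/cni:v3.19.4
easzlab.io.local:5000/calico/kube-controllers:v3.19.4 harbor.magedu.net/baseimages/calico/kube-controllers:v3.19.4
'

# retag_and_push: read "<source> <target>" pairs on stdin, tag and push each one,
# stop at the first failure. DRY_RUN=1 only prints the commands.
retag_and_push() {
  local run="" src dst
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  while read -r src dst; do
    if [ -z "$src" ]; then
      continue  # skip blank lines
    fi
    $run docker tag "$src" "$dst" && $run docker push "$dst" || return 1
  done
}
```

Usage: `retag_and_push <<<"$IMAGES"`. Unlike the original, this pushes each image right after tagging it. The end result in Harbor is the same.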

4.3 Install the k8s cluster

Create a cluster configuration instance

root@easzlab-deploy:~# ./ezdown -S   # run kubeasz inside a container
2022-07-26 14:31:36 INFO Action begin: start_kubeasz_docker
2022-07-26 14:31:36 INFO try to run kubeasz in a container
2022-07-26 14:31:36 DEBUG get host IP: 172.16.88.150
7f0761e0970b84dde16b8ef50823cfaf92d008e0951a2328660d39c229150c05
2022-07-26 14:31:37 INFO Action successed: start_kubeasz_docker

root@easzlab-deploy:~# docker exec -it kubeasz ezctl new k8s-01   # create a new cluster named k8s-01
2022-07-26 06:31:47 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01
2022-07-26 06:31:47 DEBUG set versions
2022-07-26 06:31:47 DEBUG cluster k8s-01: files successfully created.
2022-07-26 06:31:47 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'
2022-07-26 06:31:47 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'
root@easzlab-deploy:~#

Edit config.yml

/etc/kubeasz/clusters/k8s-01/config.yml

############################
# prepare
############################
# Install system packages offline or online (offline|online)
INSTALL_SOURCE: "online"

# Optional OS security hardening: github.com/dev-sec/ansible-collection-hardening
OS_HARDEN: false

############################
# role:deploy
############################
# default: ca will expire in 100 years
# default: certs issued by the ca will expire in 50 years
CA_EXPIRY: "876000h"
CERT_EXPIRY: "438000h"

# kubeconfig parameters
CLUSTER_NAME: "cluster1"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version
K8S_VER: "1.24.2"

############################
# role:etcd
############################
# A separate WAL directory avoids disk I/O contention and improves performance
ETCD_DATA_DIR: "/var/lib/etcd"
ETCD_WAL_DIR: ""

############################
# role:runtime [containerd,docker]
############################
# ------------------------------------------- containerd
# [.] enable container registry mirrors
ENABLE_MIRROR_REGISTRY: true

# [containerd] sandbox (pause) image
SANDBOX_IMAGE: "harbor.magedu.net/baseimages/pause:3.7"

# [containerd] persistent storage directory for containers
CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker
# [docker] container storage directory
DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful API
ENABLE_REMOTE_API: false

# [docker] trusted HTTP registries
INSECURE_REG: '["http://easzlab.io.local:5000","harbor.magedu.net"]'

############################
# role:kube-master
############################
# Certificate hosts for the k8s master nodes; multiple IPs and domain names can be added (e.g. a public IP and domain)
MASTER_CERT_HOSTS:
  - "172.16.88.200"
  - "api.myserver.com"
  #- "www.test.com"

# Mask length of the pod subnet on each node (determines the maximum number of pod IPs a node can allocate)
# If flannel runs with --kube-subnet-mgr, it reads this setting to assign each node's pod subnet
# https://github.com/coreos/flannel/issues/847
NODE_CIDR_LEN: 24

############################
# role:kube-node
############################
# Kubelet root directory
KUBELET_ROOT_DIR: "/var/lib/kubelet"

# maximum number of pods per node
MAX_PODS: 500

# Resources reserved for k8s components (kubelet, kube-proxy, dockerd, etc.)
# see templates/kubelet-config.yaml.j2 for the values
KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown you the system's resource usage;
# the reservation should also grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values
# The system reservation assumes a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines
# Also, apiserver and other components briefly use a lot of resources during cluster installation, so reserve at least 1g of memory
SYS_RESERVED_ENABLED: "no"

############################
# role:network [flannel,calico,cilium,kube-ovn,kube-router]
############################
# ------------------------------------------- flannel
# [flannel] flannel backend, e.g. "host-gw", "vxlan"
FLANNEL_BACKEND: "vxlan"
DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"
flannelVer: "v0.15.1"
flanneld_image: "easzlab.io.local:5000/easzlab/flannel:{{ flannelVer }}"

# ------------------------------------------- calico
# [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints
CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP peers connect over this address. It can be set manually or auto-detected
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico network backend: brid, vxlan, none
CALICO_NETWORKING_BACKEND: "brid"

# [calico] whether calico uses route reflectors
# recommended when the cluster has more than 50 nodes
CALICO_RR_ENABLED: false

# CALICO_RR_NODES: nodes that act as route reflectors; defaults to the cluster master nodes if unset
# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]
CALICO_RR_NODES: []

# [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]
calico_ver: "v3.19.4"

# [calico] calico major.minor version
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium
# [cilium] image version
cilium_ver: "1.11.6"
cilium_connectivity_check: true
cilium_hubble_enabled: false
cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn
# [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node
OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tarball
kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router
# [kube-router] public clouds have restrictions and generally need ipinip always on; in your own environment this can be "subnet"
OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch
FIREWALL_ENABLE: true

# [kube-router] kube-router image version
kube_router_ver: "v0.3.1"
busybox_ver: "1.28.4"

############################
# role:cluster-addon
############################
# install coredns automatically
dns_install: "no"
corednsVer: "1.9.3"
ENABLE_LOCAL_DNS_CACHE: false
dnsNodeCacheVer: "1.21.1"
# local dns cache address
LOCAL_DNS_CACHE: "169.254.20.10"

# install metrics server automatically
metricsserver_install: "no"
metricsVer: "v0.5.2"

# install dashboard automatically
dashboard_install: "no"
dashboardVer: "v2.5.1"
dashboardMetricsScraperVer: "v1.0.8"

# install prometheus automatically
prom_install: "no"
prom_namespace: "monitor"
prom_chart_ver: "35.5.1"

# install nfs-provisioner automatically
nfs_provisioner_install: "no"
nfs_provisioner_namespace: "kube-system"
nfs_provisioner_ver: "v4.0.2"
nfs_storage_class: "managed-nfs-storage"
nfs_server: "192.168.1.10"
nfs_path: "/data/nfs"

# install network-check automatically
network_check_enabled: false
network_check_schedule: "*/5 * * * *"

############################
# role:harbor
############################
# harbor version, full version number
HARBOR_VER: "v2.1.3"
HARBOR_DOMAIN: "harbor.easzlab.io.local"
HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
HARBOR_SELF_SIGNED_CERT: true

# install extra component
HARBOR_WITH_NOTARY: false
HARBOR_WITH_TRIVY: false
HARBOR_WITH_CLAIR: false
HARBOR_WITH_CHARTMUSEUM: true
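Instead of editing config.yml by hand, you can set individual top-level keys with `sed`. This is handy when the same changes must be repeated for another cluster. `set_top_level_key` is a hypothetical helper with several limits. It only rewrites an existing `KEY:` line that starts at column 0. It does not handle YAML lists such as `MASTER_CERT_HOSTS`. It also assumes the value contains no `|`, `&` or backslash characters, because those are special in a `sed` replacement. The source does not say which keys were changed from the kubeasz defaults. The usage lines below just reuse values shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the value of an existing top-level "KEY:" line.
# $1 = YAML file, $2 = key, $3 = new value exactly as it should appear (quotes included)
set_top_level_key() {
  local file=$1 key=$2 value=$3
  if ! grep -q "^${key}:" "$file"; then
    echo "key not found: ${key}" >&2
    return 1
  fi
  # GNU sed in-place edit; "|" is the delimiter, so the value must not contain it
  sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
}
```

Usage: `set_top_level_key /etc/kubeasz/clusters/k8s-01/config.yml SANDBOX_IMAGE '"harbor.magedu.net/baseimages/pause:3.7"'` or `set_top_level_key /etc/kubeasz/clusters/k8s-01/config.yml MAX_PODS 500`.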

Edit the hosts inventory

/etc/kubeasz/clusters/k8s-01/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)
[etcd]
172.16.88.154
172.16.88.155
172.16.88.156

# master node(s)
[kube_master]
172.16.88.157
172.16.88.158
172.16.88.159

# work node(s)
[kube_node]
172.16.88.160
172.16.88.161
172.16.88.162
172.16.88.163
172.16.88.164
172.16.88.165

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
[harbor]
#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside
[ex_lb]
#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443
#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster
[chrony]
#192.168.1.1

[all:vars]
# --------- Main Variables ---------------
# Secure port for apiservers
SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd
# if k8s version >= 1.24, docker is not supported
CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking
SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking
CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range
NODE_PORT_RANGE="30000-65535"

# Cluster DNS Domain
CLUSTER_DNS_DOMAIN="magedu.local"

# -------- Additional Variables (don't change the default value right now) ---
# Binaries Directory
bin_dir="/opt/kube/bin"

# Deploy Directory (kubeasz workspace)
base_dir="/etc/kubeasz"

# Directory for a specific cluster
cluster_dir="{{ base_dir }}/clusters/k8s-01"

# CA and other components cert/key Directory
ca_dir="/etc/kubernetes/ssl"
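The inventory comment requires an odd number of etcd members. The sketch below counts the active (uncommented) hosts of a group in this INI-style file and checks that rule. `count_group_hosts` and `check_etcd_odd` are hypothetical names, and the parsing covers only the simple layout shown above.

```shell
#!/usr/bin/env bash
# count_group_hosts FILE GROUP: number of non-empty, non-comment lines in [GROUP]
count_group_hosts() {
  local file=$1 group=$2
  awk -v g="[$group]" '
    /^\[/ { in_group = ($0 == g); next }   # a section header starts a new group
    in_group && NF && $1 !~ /^#/ { n++ }   # count real host lines only
    END { print n + 0 }
  ' "$file"
}

# check_etcd_odd FILE: succeed only when [etcd] has an odd number of hosts
check_etcd_odd() {
  local n
  n=$(count_group_hosts "$1" etcd)
  [ $((n % 2)) -eq 1 ]
}
```

Usage: `check_etcd_odd /etc/kubeasz/clusters/k8s-01/hosts || echo "etcd member count is even"`.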

Change the image paths in the Calico manifest template

root@easzlab-deploy:/etc/kubeasz# grep image roles/calico/templates/calico-v3.19.yaml.j2
          image: easzlab.io.local:5000/calico/cni:{{ calico_ver }}
          image: easzlab.io.local:5000/calico/pod2daemon-flexvol:{{ calico_ver }}
          image: easzlab.io.local:5000/calico/node:{{ calico_ver }}
          image: easzlab.io.local:5000/calico/kube-controllers:{{ calico_ver }}
root@easzlab-deploy:/etc/kubeasz# sed -i 's@easzlab.io.local:5000@harbor.magedu.net/baseimages@g' roles/calico/templates/calico-v3.19.yaml.j2
root@easzlab-deploy:/etc/kubeasz# grep image roles/calico/templates/calico-v3.19.yaml.j2
          image: harbor.magedu.net/baseimages/calico/cni:{{ calico_ver }}
          image: harbor.magedu.net/baseimages/calico/pod2daemon-flexvol:{{ calico_ver }}
          image: harbor.magedu.net/baseimages/calico/node:{{ calico_ver }}
          image: harbor.magedu.net/baseimages/calico/kube-controllers:{{ calico_ver }}
root@easzlab-deploy:/etc/kubeasz#

ezctl setup options

root@easzlab-deploy:/etc/kubeasz# ./ezctl setup k8s-01 --help
Usage: ezctl setup <cluster> <step>
available steps:
    01  prepare            to prepare CA/certs & kubeconfig & other system settings
    02  etcd               to setup the etcd cluster
    03  container-runtime  to setup the container runtime(docker or containerd)
    04  kube-master        to setup the master nodes
    05  kube-node          to setup the worker nodes
    06  network            to setup the network plugin
    07  cluster-addon      to setup other useful plugins
    90  all                to run 01~07 all at once
    10  ex-lb              to install external loadbalance for accessing k8s from outside
    11  harbor             to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01  (or ./ezctl setup test-k8s prepare)
          ./ezctl setup test-k8s 02  (or ./ezctl setup test-k8s etcd)
          ./ezctl setup test-k8s all
          ./ezctl setup test-k8s 04 -t restart_master
root@easzlab-deploy:/etc/kubeasz#
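Besides `all`, you can run the steps listed in the help output one at a time, which makes it easier to see which stage failed. The sketch below uses a hypothetical `run_steps` wrapper, where `DRY_RUN=1` only prints the commands. It assumes it is run from /etc/kubeasz, where `ezctl` lives.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run ezctl setup steps 01..07 one by one and stop at the first failure.
# $1 = cluster name; DRY_RUN=1 only prints the commands.
run_steps() {
  local cluster=$1 step run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  for step in 01 02 03 04 05 06 07; do
    if ! $run ./ezctl setup "$cluster" "$step"; then
      echo "step ${step} failed" >&2
      return 1
    fi
  done
}
```

Usage: `cd /etc/kubeasz && run_steps k8s-01`. After fixing a failed step, you can rerun that single step as `./ezctl setup k8s-01 <step>`.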

Install the cluster

root@easzlab-deploy:/etc/kubeasz# ./ezctl setup k8s-01 all   # run from /etc/kubeasz, where ezctl lives

4.4 Configure trust between k8s and Harbor

Distribute the Harbor certificates to the k8s cluster nodes
root@easzlab-deploy:~# ansible 'vm' -m shell -a "mkdir -p /etc/containerd/certs.d/harbor.magedu.net"   # create the Harbor certificate directory on the master and node hosts
root@easzlab-k8s-harbor-01:/apps/harbor/certs# for i in {157..165}; do scp ca.crt magedu.net.crt magedu.net.key root@172.16.88.$i:/etc/containerd/certs.d/harbor.magedu.net; done

Edit /etc/containerd/config.toml to add the Harbor registry and its credentials (the lines around the harbor entries are shown below)
[root@easzlab-k8s-master-01 ~]# cat -n /etc/containerd/config.toml | grep -C 10 harbor
    51      disable_tcp_service = true
    52      enable_selinux = false
    53      enable_tls_streaming = false
    54      enable_unprivileged_icmp = false
    55      enable_unprivileged_ports = false
    56      ignore_image_defined_volumes = false
    57      max_concurrent_downloads = 3
    58      max_container_log_line_size = 16384
    59      netns_mounts_under_state_dir = false
    60      restrict_oom_score_adj = false
    61      sandbox_image = "harbor.magedu.net/baseimages/pause:3.7"
    62      selinux_category_range = 1024
    63      stats_collect_period = 10
    64      stream_idle_timeout = "4h0m0s"
    65      stream_server_address = "127.0.0.1"
    66      stream_server_port = "0"
    67      systemd_cgroup = false
    68      tolerate_missing_hugetlb_controller = true
    69      unset_seccomp_profile = ""
    70
    71      [plugins."io.containerd.grpc.v1.cri".cni]
--
   145          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."easzlab.io.local:5000"]
   146            endpoint = ["http://easzlab.io.local:5000"]
   147          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
   148            endpoint = ["https://docker.mirrors.ustc.edu.cn", "http://hub-mirror.c.163.com"]
   149          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."gcr.io"]
   150            endpoint = ["https://gcr.mirrors.ustc.edu.cn"]
   151          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]
   152            endpoint = ["https://gcr.mirrors.ustc.edu.cn/google-containers/"]
   153          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."quay.io"]
   154            endpoint = ["https://quay.mirrors.ustc.edu.cn"]
   155          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.magedu.net"]
   156            endpoint = ["https://harbor.magedu.net"]
   157          [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.magedu.net".tls]
   158            insecure_skip_verify = true
   159          [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.magedu.net".auth]
   160            username = "admin"
   161            password = "Harbor12345"
   162
   163      [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
   164        tls_cert_file = ""
   165        tls_key_file = ""
   166
   167    [plugins."io.containerd.internal.v1.opt"]
   168      path = "/opt/containerd"
   169
[root@easzlab-k8s-master-01 ~]#
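Before copying this file to the other nodes, it can help to check that it really contains the Harbor entries. The sketch below uses a hypothetical `check_containerd_harbor` function. It only greps for the section headers and the sandbox image shown above and does not parse TOML. Note that `insecure_skip_verify = true` makes the CRI plugin skip TLS verification for harbor.magedu.net. As far as this excerpt shows, image pulls by kubelet therefore do not depend on the certificates copied to /etc/containerd/certs.d.

```shell
#!/usr/bin/env bash
# Hypothetical check: does a containerd config.toml contain the Harbor mirror,
# its TLS/auth sections and the expected sandbox image? (plain grep, no TOML parsing)
# $1 = config file, $2 = registry host, $3 = sandbox image
check_containerd_harbor() {
  local file=$1 host=$2 sandbox=$3 rc=0 pattern
  for pattern in \
      "registry.mirrors.\"${host}\"]" \
      "registry.configs.\"${host}\".tls]" \
      "registry.configs.\"${host}\".auth]" \
      "sandbox_image = \"${sandbox}\""; do
    if ! grep -qF -- "$pattern" "$file"; then
      echo "not found: ${pattern}"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_containerd_harbor /etc/containerd/config.toml harbor.magedu.net harbor.magedu.net/baseimages/pause:3.7`.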

Copy the edited config.toml over the one on the other nodes
[root@easzlab-k8s-master-01 ~]# for i in {158..165}; do scp /etc/containerd/config.toml root@172.16.88.$i:/etc/containerd/; done

Restart containerd on all cluster nodes
root@easzlab-deploy:~# ansible 'vm' -m shell -a "systemctl restart containerd.service"

Verification

Verify on a master node
[root@easzlab-k8s-master-01 ~]# nerdctl login https://harbor.magedu.net
Enter Username: admin
Enter Password:
WARNING: Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@easzlab-k8s-master-01 ~]#

Verify on a worker node
[root@easzlab-k8s-node-01 ~]# nerdctl login https://harbor.magedu.net
Enter Username: admin
Enter Password:
WARNING: Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@easzlab-k8s-node-01 ~]#
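`nerdctl login` shows that the credentials work, but kubelet pulls images through containerd's CRI plugin, which uses the `registry.configs` settings above. A more direct check is to pull an image with `crictl` on each node. The sketch makes two assumptions. First, `crictl` is on each node's non-interactive PATH; kubeasz's bin_dir is /opt/kube/bin, so use the full path if it is not. Second, the deploy host has root SSH access to the nodes. `pull_on_nodes` is a hypothetical name, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical check: pull one image from Harbor through the CRI on each node.
# $1 = image, remaining args = node IPs; DRY_RUN=1 only prints the commands.
pull_on_nodes() {
  local image=$1 node run="" rc=0
  shift
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  for node in "$@"; do
    if ! $run ssh "root@${node}" crictl pull "$image"; then
      echo "pull failed on ${node}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `pull_on_nodes harbor.magedu.net/baseimages/pause:3.7 172.16.88.{157..165}`.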

5. Install add-on components

5.1 Install CoreDNS

Upload coredns-v1.9.3.yaml

root@easzlab-deploy:~/jiege-k8s# cat coredns-v1.9.3.yaml

# __MACHINE_GENERATED_WARNING__ apiVersion: v1 kind: ServiceAccount metadata: name: coredns namespace: kube-system labels: kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: labels: kubernetes.io/bootstrapping: rbac-defaults addonmanager.kubernetes.io/mode: Reconcile name: system:coredns rules: - apiGroups: - "" resources: - endpoints - services - pods - namespaces verbs: - list - watch - apiGroups: - "" resources: - nodes verbs: - get - apiGroups: - discovery.k8s.io resources: - endpointslices verbs: - list - watch --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: annotations: rbac.authorization.kubernetes.io/autoupdate: "true" labels: kubernetes.io/bootstrapping: rbac-defaults addonmanager.kubernetes.io/mode: EnsureExists name: system:coredns roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:coredns subjects: - kind: ServiceAccount name: coredns namespace: kube-system --- apiVersion: v1 kind: ConfigMap metadata: name: coredns namespace: kube-system labels: addonmanager.kubernetes.io/mode: EnsureExists data: Corefile: | .:53 { errors health { lameduck 5s } ready kubernetes magedu.local in-addr.arpa ip6.arpa { pods insecure fallthrough in-addr.arpa ip6.arpa ttl 30 } prometheus :9153 #forward . /etc/resolv.conf { forward . 223.6.6.6 { max_concurrent 1000 } cache 600 loop reload loadbalance } myserver.online { forward . 172.16.88.254:53 } --- apiVersion: apps/v1 kind: Deployment metadata: name: coredns namespace: kube-system labels: k8s-app: kube-dns kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile kubernetes.io/name: "CoreDNS" spec: # replicas: not specified here: # 1. In order to make Addon Manager do not reconcile this replicas parameter. # 2. Default is 1. # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on. 
replicas: 2 strategy: type: RollingUpdate rollingUpdate: maxUnavailable: 1 selector: matchLabels: k8s-app: kube-dns template: metadata: labels: k8s-app: kube-dns spec: securityContext: seccompProfile: type: RuntimeDefault priorityClassName: system-cluster-critical serviceAccountName: coredns affinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - weight: 100 podAffinityTerm: labelSelector: matchExpressions: - key: k8s-app operator: In values: ["kube-dns"] topologyKey: kubernetes.io/hostname tolerations: - key: "CriticalAddonsOnly" operator: "Exists" nodeSelector: kubernetes.io/os: linux containers: - name: coredns image: harbor.magedu.net/baseimages/coredns:1.9.3 imagePullPolicy: IfNotPresent resources: limits: memory: 256Mi cpu: 200m requests: cpu: 100m memory: 70Mi args: [ "-conf", "/etc/coredns/Corefile" ] volumeMounts: - name: config-volume mountPath: /etc/coredns readOnly: true ports: - containerPort: 53 name: dns protocol: UDP - containerPort: 53 name: dns-tcp protocol: TCP - containerPort: 9153 name: metrics protocol: TCP livenessProbe: httpGet: path: /health port: 8080 scheme: HTTP initialDelaySeconds: 60 timeoutSeconds: 5 successThreshold: 1 failureThreshold: 5 readinessProbe: httpGet: path: /ready port: 8181 scheme: HTTP securityContext: allowPrivilegeEscalation: false capabilities: add: - NET_BIND_SERVICE drop: - all readOnlyRootFilesystem: true dnsPolicy: Default volumes: - name: config-volume configMap: name: coredns items: - key: Corefile path: Corefile --- apiVersion: v1 kind: Service metadata: name: kube-dns namespace: kube-system annotations: prometheus.io/port: "9153" prometheus.io/scrape: "true" labels: k8s-app: kube-dns kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile kubernetes.io/name: "CoreDNS" spec: selector: k8s-app: kube-dns clusterIP: 10.100.0.2 ports: - name: dns port: 53 protocol: UDP - name: dns-tcp port: 53 protocol: TCP - name: metrics port: 9153 protocol: TCP

Install CoreDNS

root@easzlab-deploy:~# kubectl apply -f coredns-v1.9.3.yaml
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created
root@easzlab-deploy:~#
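A quick way to check the new DNS service is to resolve a Service name from a throwaway pod. The cluster domain here is `magedu.local` (CLUSTER_DNS_DOMAIN), so the API server Service is `kubernetes.default.svc.magedu.local`. It should normally resolve to the first address of SERVICE_CIDR, 10.100.0.1, via the DNS server 10.100.0.2. The helper names and the `busybox:1.28` image are assumptions. Any image with a working `nslookup` will do.

```shell
#!/usr/bin/env bash
# svc_fqdn SERVICE NAMESPACE DOMAIN: in-cluster FQDN of a Service
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "$3"
}

# dns_test NAME: resolve NAME from a temporary pod that is deleted afterwards.
# IMAGE overrides the (assumed) busybox image; DRY_RUN=1 only prints the command.
dns_test() {
  local name=$1 run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  $run kubectl run dnstest --rm -it --restart=Never --image="${IMAGE:-busybox:1.28}" -- nslookup "$name"
}
```

Usage: `dns_test "$(svc_fqdn kubernetes default magedu.local)"`.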

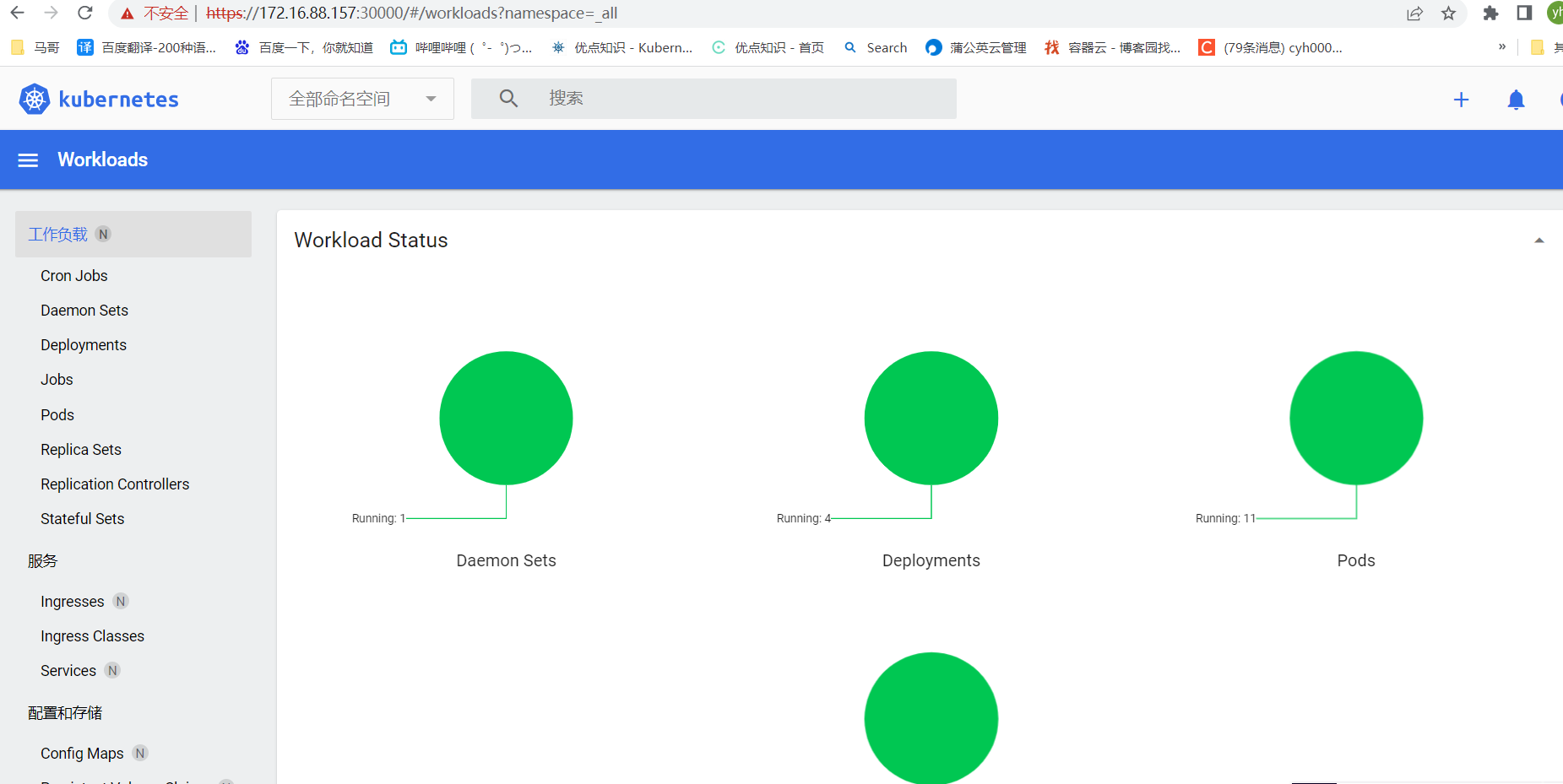

5.2 Install the Dashboard

admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
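On Kubernetes 1.24 a ServiceAccount no longer gets a token Secret automatically, so `admin-user` has no ready-made token for the Dashboard login. There are two options. The first is a short-lived token from `kubectl -n kubernetes-dashboard create token admin-user`. The second is a long-lived token Secret bound to the ServiceAccount. The helper below prints such a Secret manifest. The function name and the `<name>-token` Secret name are hypothetical choices.

```shell
#!/usr/bin/env bash
# sa_token_secret SA NAMESPACE: print a Secret manifest that asks Kubernetes to
# populate a long-lived token for the given ServiceAccount.
sa_token_secret() {
  local name=$1 ns=$2
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}-token
  namespace: ${ns}
  annotations:
    kubernetes.io/service-account.name: ${name}
type: kubernetes.io/service-account-token
EOF
}
```

Usage: `sa_token_secret admin-user kubernetes-dashboard | kubectl apply -f -`. Then read the token with `kubectl -n kubernetes-dashboard get secret admin-user-token -o jsonpath='{.data.token}' | base64 -d`.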

dashboard-v2.6.0.yaml

```yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.6.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.8
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
          - mountPath: /tmp
            name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
```

admin-secret.yaml

```yaml
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: dashboard-admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
```

Installation

```shell
root@easzlab-deploy:~/dashboard# ls
admin-secret.yaml  admin-user.yaml  dashboard-v2.6.0.yaml
root@easzlab-deploy:~/dashboard# kubectl apply -f dashboard-v2.6.0.yaml -f admin-user.yaml -f admin-secret.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
secret/dashboard-admin-user created
root@easzlab-deploy:~/dashboard#
root@easzlab-deploy:~/dashboard# kubectl get secret -A
NAMESPACE              NAME                              TYPE                                  DATA   AGE
kube-system            calico-etcd-secrets               Opaque                                3      17h
kubernetes-dashboard   dashboard-admin-user              kubernetes.io/service-account-token   3      15s
kubernetes-dashboard   kubernetes-dashboard-certs        Opaque                                0      16s
kubernetes-dashboard   kubernetes-dashboard-csrf         Opaque                                1      15s
kubernetes-dashboard   kubernetes-dashboard-key-holder   Opaque                                0      15s
root@easzlab-deploy:~/dashboard#
root@easzlab-deploy:~/dashboard# kubectl describe secrets -n kubernetes-dashboard dashboard-admin
Name:         dashboard-admin-user
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 68844120-9cfb-4e49-a070-875c67d14bb7

Type:  kubernetes.io/service-account-token

Data
====
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6InB2MkRvUy1fdm5rZHpsM25iV2N3cWN4b01pXzZJMjhQZ3Y2YWQ4aXVLWGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNjg4NDQxMjAtOWNmYi00ZTQ5LWEwNzAtODc1YzY3ZDE0YmI3Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.X3BxmfZfLRbA4JPdnu-FE_2pn59bXFD9TDr_YLpIauN5cdT9htNa-tujTSKMukwx538f4CGM_kMLGF1HOcSF6R6gDub76hf_jES3WHeG3XmlOCXjlI77orLt5LbaAf6m_Y14JcAipB30mZHXtPArcBGZzuH3cZ-Qa9fQ3iFO2Cj38w6FQzShx5tkiWpuWR_0UswSjGDo2Q664fWvzPhSKyhOx7BBGACWlUYBUuVwZVZOyHrMFk8XkSBjE8GdMlajgAhH1Ri6M0A51_ErvugXInkNWvCTZOqzHGEG059qY_Oz4t0aZrWfxS1XZi4PKiq1nDaQAbNLfyOKSb5mbmS1Lg
ca.crt:     1302 bytes
namespace:  20 bytes
root@easzlab-deploy:~/dashboard# kubectl get pod -A -owide
NAMESPACE              NAME                                          READY   STATUS    RESTARTS   AGE     IP              NODE            NOMINATED NODE   READINESS GATES
kube-system            calico-kube-controllers-5c8bb696bb-69kws      1/1     Running   0          67m     172.16.88.159   172.16.88.159   <none>           <none>
kube-system            calico-node-cjx4k                             1/1     Running   0          67m     172.16.88.154   172.16.88.154   <none>           <none>
kube-system            calico-node-mr977                             1/1     Running   0          67m     172.16.88.158   172.16.88.158   <none>           <none>
kube-system            calico-node-q7jbn                             1/1     Running   0          67m     172.16.88.159   172.16.88.159   <none>           <none>
kube-system            calico-node-rf9fv                             1/1     Running   0          67m     172.16.88.156   172.16.88.156   <none>           <none>
kube-system            calico-node-th8dt                             1/1     Running   0          67m     172.16.88.157   172.16.88.157   <none>           <none>
kube-system            calico-node-xlhbr                             1/1     Running   0          67m     172.16.88.155   172.16.88.155   <none>           <none>
kube-system            coredns-69548bdd5f-k7qwt                      1/1     Running   0          42m     10.200.40.193   172.16.88.157   <none>           <none>
kube-system            coredns-69548bdd5f-xvkbc                      1/1     Running   0          42m     10.200.233.65   172.16.88.158   <none>           <none>
kubernetes-dashboard   dashboard-metrics-scraper-8c47d4b5d-hp47z     1/1     Running   0          2m53s   10.200.2.7      172.16.88.159   <none>           <none>
kubernetes-dashboard   kubernetes-dashboard-5676d8b865-t9j6r         1/1     Running   0          2m53s   10.200.40.197   172.16.88.157   <none>           <none>
root@easzlab-deploy:~/dashboard# kubectl get svc -A
NAMESPACE              NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
default                kubernetes                  ClusterIP   10.100.0.1       <none>        443/TCP                  18h
kube-system            kube-dns                    ClusterIP   10.100.0.2       <none>        53/UDP,53/TCP,9153/TCP   78m
kubernetes-dashboard   dashboard-metrics-scraper   ClusterIP   10.100.228.111   <none>        8000/TCP                 3m5s
kubernetes-dashboard   kubernetes-dashboard        NodePort    10.100.212.143   <none>        443:30000/TCP            3m6s
root@easzlab-deploy:~/dashboard#
```
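As of Kubernetes 1.24, a token Secret is no longer generated automatically for each ServiceAccount. That is why admin-secret.yaml creates a `kubernetes.io/service-account-token` Secret annotated with `kubernetes.io/service-account.name: "admin-user"`. The token controller then fills it in, which shows up as DATA 3 in the `kubectl get secret -A` output. The token itself is a JWT. To double-check which identity it carries before logging in, you can decode its middle (payload) segment. The sketch below does this with a hypothetical function, `jwt_payload`, which uses only `cut`, `tr` and GNU coreutils `base64`. It decodes but does not verify the signature. For the token above, the `sub` claim should read `system:serviceaccount:kubernetes-dashboard:admin-user`.

```shell
# Hypothetical helper: print the JSON payload (second segment) of a JWT.
# It only decodes the payload; it does NOT verify the signature.
jwt_payload() {
  local seg
  # JWTs use base64url: map '_' and '-' back to the standard '/' and '+'.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url also strips '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Usage: `jwt_payload "$(kubectl -n kubernetes-dashboard get secret dashboard-admin-user -o jsonpath='{.data.token}' | base64 -d)"`. The `.data.token` field is itself base64-encoded inside the Secret, so it is decoded once before being passed in.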

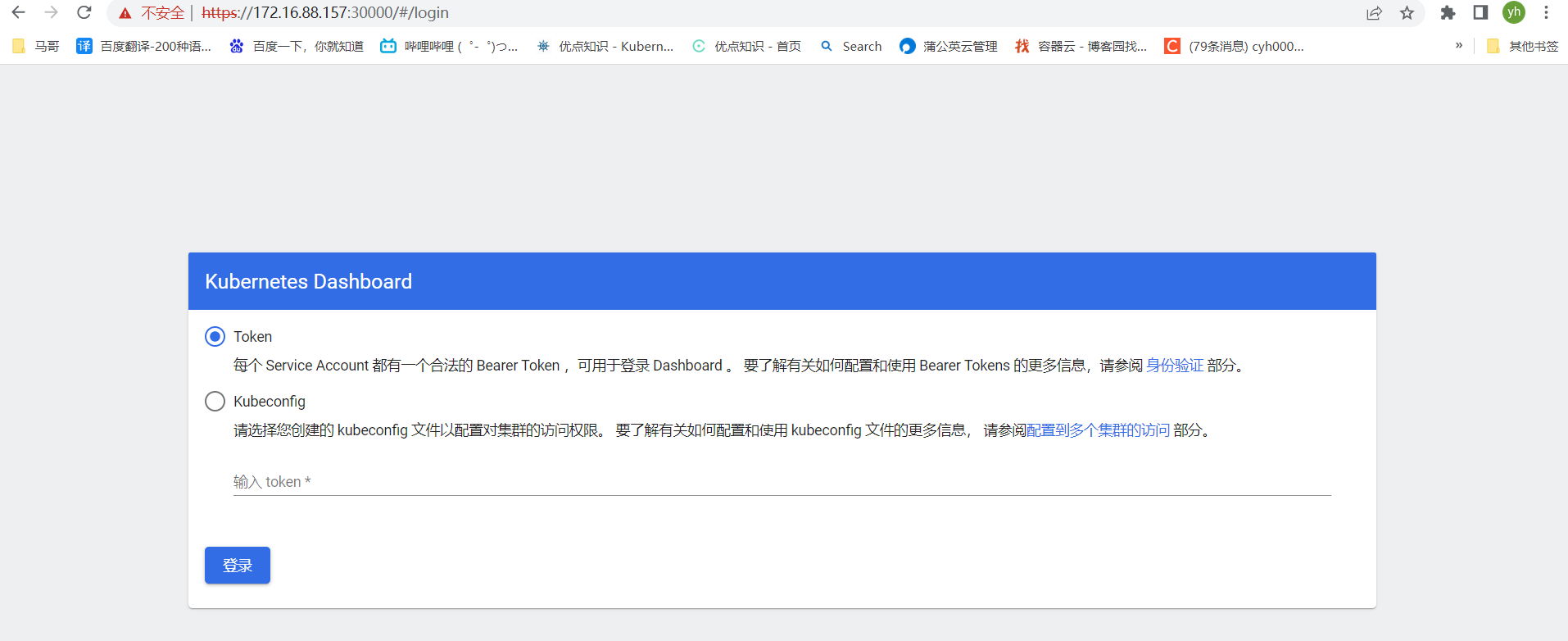

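Access the Dashboard from a browser through the NodePort, e.g. https://172.16.88.157:30000 (any cluster node's IP works). The certificate is self-signed because of --auto-generate-certificates, so accept the browser warning, then log in with the token printed above.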

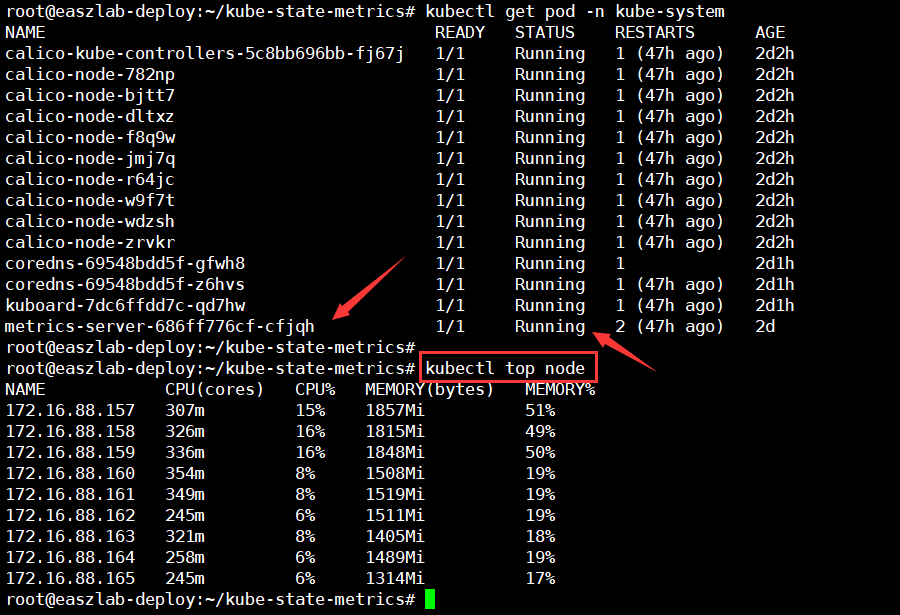

5.3 Install metrics-server

Prepare the installation manifest

GitHub release page: https://github.com/kubernetes-sigs/metrics-server/releases/tag/v0.6.1

```shell
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml
```
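The CoreDNS manifest earlier pulls its image from the private registry harbor.magedu.net/baseimages. If the cluster nodes cannot reach the upstream registry, the downloaded components.yaml can be rewritten the same way. This is a minimal sketch under several assumptions. First, the v0.6.1 manifest references `k8s.gcr.io/metrics-server/metrics-server:v0.6.1`; confirm this with `grep image: components.yaml`. Second, the image has already been pushed to the hypothetical path `harbor.magedu.net/baseimages/metrics-server:v0.6.1`. Third, the function name `retag_image` is made up for this example. The sketch relies on GNU `sed -i`, as shipped with Ubuntu.

```shell
# Hypothetical helper: replace one container image reference in a manifest.
# $1 = manifest file, $2 = image to replace, $3 = replacement image.
retag_image() {
  # Fail loudly instead of silently doing nothing when the old image is absent.
  grep -qF "image: $2" "$1" || { echo "image $2 not found in $1" >&2; return 1; }
  # '#' is the sed delimiter so the '/' characters in image paths need no escaping.
  # Note: '.' in $2 is a regex wildcard here, which is harmless for image names.
  sed -i "s#image: $2#image: $3#" "$1"
}
```

Usage: `retag_image components.yaml k8s.gcr.io/metrics-server/metrics-server:v0.6.1 harbor.magedu.net/baseimages/metrics-server:v0.6.1`. The `grep` guard means a mistyped tag returns an error rather than leaving the file unchanged without notice.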

root@easzlab-deploy:~/kube-state-metrics# cat components.yaml