A simple Hadoop MapReduce example

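This example counts how many times each space-separated word occurs. (The way Main.main launches the job is the Hadoop 2.x style; Hadoop 1.x does it differently.)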
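Before the Hadoop code, here is a rough plain-Java sketch of what the job computes, with no Hadoop dependency. The class name LocalWordCount and the two sample lines are made up for illustration, and unlike a real job everything runs in one process. Empty tokens are skipped in this sketch.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical class for illustration only; it is not part of the project.
public class LocalWordCount {

    // "Map" step: emit one (word, 1) pair per space-separated token.
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) { // empty tokens are skipped in this sketch
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1L));
            }
        }
        return pairs;
    }

    // "Shuffle" step: group the values by key, with keys kept in sorted order.
    static Map<String, List<Long>> shuffle(List<Map.Entry<String, Long>> pairs) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (Map.Entry<String, Long> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // "Reduce" step: sum the values collected for one key.
    static long reduce(List<Long> values) {
        long sum = 0L;
        for (long value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs the three steps in order over all input lines.
    static Map<String, Long> run(List<String> lines) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> entry : shuffle(pairs).entrySet()) {
            counts.put(entry.getKey(), reduce(entry.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // Sample input lines are made up.
        System.out.println(run(Arrays.asList("hello world", "hello hadoop")));
        // prints: {hadoop=1, hello=2, world=1}
    }
}
```

In the real job the map and reduce steps run on different machines and the framework does the grouping, but the result per word should be the same.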

Directory structure:

The project uses Maven; the dependency is shown below.

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.8.5</version>
</dependency>

Main.java:

package com.zyk.test;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class Main {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        // Let Hadoop consume its generic options (-D, -conf, ...) and keep the rest.
        GenericOptionsParser optionParser = new GenericOptionsParser(conf, args);
        String[] remainingArgs = optionParser.getRemainingArgs();
        if ((remainingArgs.length != 2) && (remainingArgs.length != 4)) {
            System.err.println("Usage: wordcount <in> <out> [-skip skipPatternFile]");
            System.exit(2);
        }

        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(Main.class);
        job.setMapperClass(WordMap.class);
        // job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(WordReduce.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        //FileInputFormat.addInputPath(job, new Path("/wd/in"));
        //FileOutputFormat.setOutputPath(job, new Path("/wd/out"));

        // Separate the optional "-skip <file>" pair from the input and output paths.
        // Note: WordMap never reads the cached skip file, so -skip does not change the counts.
        List<String> otherArgs = new ArrayList<String>();
        for (int i = 0; i < remainingArgs.length; ++i) {
            if ("-skip".equals(remainingArgs[i])) {
                job.addCacheFile(new Path(remainingArgs[++i]).toUri());
                job.getConfiguration().setBoolean("wordcount.skip.patterns", true);
            } else {
                otherArgs.add(remainingArgs[i]);
            }
        }

        FileInputFormat.addInputPath(job, new Path(otherArgs.get(0)));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs.get(1)));

        // Block until the job finishes; exit code 0 on success, 1 on failure.
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
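The argument loop in Main is easier to follow on its own. Below is a minimal plain-Java sketch of the same splitting logic, without any Hadoop classes. ArgSplitSketch, Parsed and the file name patterns.txt are made up for illustration. The sketch also adds a guard that Main does not have: in Main, a trailing -skip with no value would run past the end of the array.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class for illustration; it is not part of the project.
public class ArgSplitSketch {

    // Holds the result of splitting the arguments.
    static final class Parsed {
        final List<String> paths = new ArrayList<>();
        String skipFile; // stays null when -skip is not given
    }

    static Parsed parse(String[] remainingArgs) {
        Parsed parsed = new Parsed();
        for (int i = 0; i < remainingArgs.length; ++i) {
            if ("-skip".equals(remainingArgs[i])) {
                // Guard added in this sketch only: fail clearly when -skip has no value.
                if (i + 1 >= remainingArgs.length) {
                    throw new IllegalArgumentException("-skip needs a file argument");
                }
                parsed.skipFile = remainingArgs[++i];
            } else {
                parsed.paths.add(remainingArgs[i]);
            }
        }
        return parsed;
    }

    public static void main(String[] args) {
        // "patterns.txt" is a made-up file name.
        Parsed parsed = parse(new String[] {"/wd/in", "-skip", "patterns.txt", "/wd/out4"});
        System.out.println(parsed.paths + " skip=" + parsed.skipFile);
        // prints: [/wd/in, /wd/out4] skip=patterns.txt
    }
}
```

Because the -skip pair is pulled out wherever it appears, the input and output paths keep their relative order regardless of where -skip is placed.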

WordMap.java:

package com.zyk.test;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordMap extends Mapper<LongWritable, Text, Text, LongWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // key is the byte offset of the line in the file; value is the line itself.
        String[] words = value.toString().split(" ");
        for (String word : words) {
            // Consecutive spaces produce empty strings; skip them so they are not counted as words.
            if (word.isEmpty()) {
                continue;
            }
            // Emit (word, 1) for every occurrence.
            context.write(new Text(word), new LongWritable(1));
        }
    }
}
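One detail of the mapper deserves a closer look: split(" ") splits on every single space, so a line with two spaces in a row produces an empty string, and tabs are not treated as separators at all. The sketch below compares it with java.util.StringTokenizer, which splits on any run of whitespace. TokenizeSketch and its method names are made up for illustration; this is an alternative to consider, not what the mapper above does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical class for illustration; it is not part of the project.
public class TokenizeSketch {

    // Same splitting rule as the mapper: one token boundary per single space.
    static List<String> splitOnSingleSpace(String line) {
        return Arrays.asList(line.split(" "));
    }

    // Alternative: StringTokenizer treats any run of spaces, tabs or newlines as one separator.
    static List<String> tokenize(String line) {
        List<String> words = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            words.add(tokenizer.nextToken());
        }
        return words;
    }

    public static void main(String[] args) {
        System.out.println(splitOnSingleSpace("a  b")); // prints: [a, , b]
        System.out.println(tokenize("a  b\tc"));        // prints: [a, b, c]
    }
}
```

With split(" "), the empty string in the middle would be written out as a word of its own unless it is filtered before calling context.write.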

WordReduce.java:

package com.zyk.test;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordReduce extends Reducer<Text, LongWritable, Text, LongWritable> {

    @Override
    protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
        // Sum the counts emitted for this word.
        long sum = 0L;
        for (LongWritable value : values) {
            sum += value.get();
        }
        // Emit (word, total).
        context.write(key, new LongWritable(sum));
    }
}
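Main leaves the combiner line commented out. The IntSumReducer it names works on IntWritable values in the stock Hadoop examples, so it would not match the LongWritable values emitted here. Since the reduce step is a plain sum, summing each mapper's values first and then summing those partial results gives the same total. That suggests WordReduce itself could be registered with job.setCombinerClass(WordReduce.class), though that is untested in this post. The plain-Java sketch below only checks the arithmetic; CombinerSketch and the sample values are made up for illustration.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical class for illustration; it is not part of the project.
public class CombinerSketch {

    // Same arithmetic as the reduce step: add up all values for one word.
    static long sum(List<Long> values) {
        long total = 0L;
        for (long value : values) {
            total += value;
        }
        return total;
    }

    public static void main(String[] args) {
        // Made-up values: the same word seen 3 times by one mapper and 2 times by another.
        List<Long> fromMapper1 = Arrays.asList(1L, 1L, 1L);
        List<Long> fromMapper2 = Arrays.asList(1L, 1L);

        // Without a combiner the reducer receives all five 1s.
        long withoutCombiner = sum(Arrays.asList(1L, 1L, 1L, 1L, 1L));
        // With a combiner each mapper pre-sums, and the reducer receives 3 and 2.
        long withCombiner = sum(Arrays.asList(sum(fromMapper1), sum(fromMapper2)));

        System.out.println(withoutCombiner + " " + withCombiner); // prints: 5 5
    }
}
```

The benefit of a combiner is less data sent from mappers to reducers; the counts themselves should not change.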

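content.txt is the file to upload to HDFS as the job input. I won't provide its contents; just copy some text from any web page.

Then package the project as a jar and run it on Hadoop (the last two arguments are the input and output paths). Before running, copy the file to be counted into the /wd/in directory on HDFS. The output directory must not already exist, or the job will fail.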

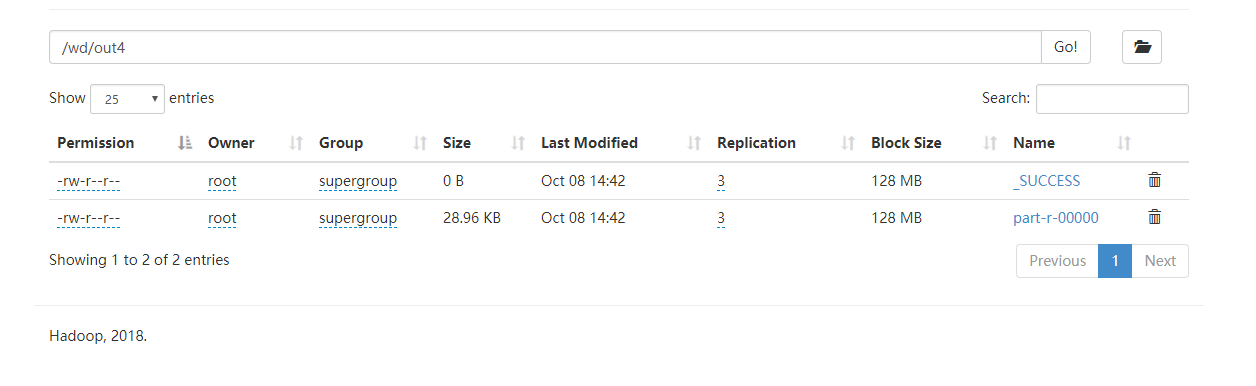

./hadoop jar /tools/wd.jar com.zyk.test.Main /wd/in /wd/out4

Result:

posted on 2018-10-08 14:35 zhangyukun