grpc-gateway Tutorial

1. Preface

grpc-gateway is an open-source Go project. For what gRPC is, and how to install gRPC with Go on Windows, see my two earlier articles.

1.1 protoc and protobuf parameters explained

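If you followed those two articles, protoc and protobuf should already be installed. Since protoc is used throughout this tutorial, let's start with an annotated example: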

```bash
#!/usr/bin/env bash

protoDir="../proto"
outDir="../proto"

# Compile the google.api protos
protoc -I ${protoDir}/ ${protoDir}/google/api/*.proto \
  --go_out ${outDir} \
  --go_opt paths=source_relative

# Compile our own protos
protoc -I ${protoDir}/ ${protoDir}/*.proto \
  --go_out ${outDir}/pb \
  --go_opt paths=source_relative \
  --go-grpc_out ${outDir}/pb \
  --go-grpc_opt paths=source_relative \
  --go-grpc_opt require_unimplemented_servers=false \
  --grpc-gateway_out ${outDir}/pb \
  --grpc-gateway_opt logtostderr=true \
  --grpc-gateway_opt paths=source_relative \
  --grpc-gateway_opt generate_unbound_methods=true \
  --openapiv2_out ${outDir}/pb \
  --openapiv2_opt logtostderr=true
```

Parameters:

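- -I or --proto_path: the directory in which protoc looks for .proto files, including the ones we import (such as google/api/annotations.proto). It can be given more than once, and the directories are searched in order; if it is omitted, the current working directory is used.
- --go_out: the output directory for the generated *.pb.go files. Other languages have equivalent flags, such as --java_out and --csharp_out.
- --go_opt: paths=source_relative places the files generated by --go_out relative to the location of the source .proto file, instead of in a directory derived from its go_package.
- --go-grpc_out: the output directory for the generated *_grpc.pb.go files.
- --go-grpc_opt:
  - paths=source_relative: the same as above, for the files generated by --go-grpc_out.
  - require_unimplemented_servers=false: the default is true, which adds an extra unexported method, mustEmbedUnimplementedXxxServer, to the generated server interface, so every server implementation must embed UnimplementedXxxServer (Xxx being the service name). Setting it to false removes that requirement.
- --grpc-gateway_out: uses the protoc-gen-grpc-gateway.exe plugin to generate the *.pb.gw.go files.
- --grpc-gateway_opt:
  - logtostderr=true: write the plugin's log output to stderr.
  - paths=source_relative: the same as above, for the files generated by --grpc-gateway_out.
  - generate_unbound_methods=true: also generate gateway handlers for methods that have no google.api.http annotation in the proto file.
- --openapiv2_out: uses the protoc-gen-openapiv2.exe plugin to generate the *.swagger.json files; --openapiv2_opt passes options to it in the same way.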

There are many more options; run protoc --help to list them, each with a detailed description in English.
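The effect of paths=source_relative in the list above is easiest to see by computing where the output file lands. The function below is a simplified model of protoc-gen-go's two path modes (paths=import, the default, and paths=source_relative), assuming protoFile is given relative to the -I directory; it ignores the module= option and other edge cases. The function name outputPath is hypothetical; the input is the google/api/annotations.proto file and go_package used in this tutorial.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// outputPath models where protoc-gen-go writes the .pb.go file for protoFile
// (relative to the -I directory) under outDir:
//   - paths=import (default): the directory comes from the go_package import path.
//   - paths=source_relative: the directory mirrors the .proto file's own location.
func outputPath(outDir, protoFile, goPackage string, sourceRelative bool) string {
	base := strings.TrimSuffix(path.Base(protoFile), ".proto") + ".pb.go"
	if sourceRelative {
		return path.Join(outDir, path.Dir(protoFile), base)
	}
	// go_package may be "import/path;name"; only the import path matters here.
	importPath, _, _ := strings.Cut(goPackage, ";")
	return path.Join(outDir, importPath, base)
}

func main() {
	const goPkg = "google.golang.org/genproto/googleapis/api/annotations;annotations"
	fmt.Println(outputPath("../proto", "google/api/annotations.proto", goPkg, false))
	fmt.Println(outputPath("../proto", "google/api/annotations.proto", goPkg, true))
}
```

With the default mode the file would end up under ../proto/google.golang.org/..., which is why the script passes paths=source_relative to keep the generated files next to their .proto sources.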

1.2 OpenSSL certificates

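gRPC runs over HTTP/2. For convenience we create our own CA and issue certificates from it (certificates are not strictly required; gRPC also works without TLS).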

1.2.1 Generating the CA root certificate

- Create a ca.conf file containing the certificate configuration:

```ini
[ req ]
default_bits = 4096
distinguished_name = req_distinguished_name

[ req_distinguished_name ]
countryName = Country Name (2 letter code)
countryName_default = CN
stateOrProvinceName = State or Province Name (full name)
stateOrProvinceName_default = GuangDong
localityName = Locality Name (eg, city)
localityName_default = ShenZhen
organizationName = Organization Name (eg, company)
organizationName_default = Sheld
commonName = Common Name (e.g. server FQDN or YOUR name)
commonName_max = 64
commonName_default = grpc.demo
```

- Generate the CA private key:

```shell
openssl genrsa -out ca.key 4096
```

openssl genrsa generates an RSA private key and writes it to ca.key; 4096 is the key length in bits.

- Create a certificate signing request, producing ca.csr:

```shell
openssl req -new -sha256 -out ca.csr -key ca.key -config ca.conf
```

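openssl req here creates a certificate request: -new generates a new request, -sha256 signs it with the SHA-256 digest, -out sets the output file name, -key gives the private key, and -config points to the configuration file.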

```text
# Output of the command. Because the defaults are set in ca.conf, just press Enter at every prompt.
$ openssl req -new -sha256 -out ca.csr -key ca.key -config ca.conf
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [CN]:
State or Province Name (full name) [GuangDong]:
Locality Name (eg, city) [ShenZhen]:
Organization Name (eg, company) [Sheld]:
Common Name (e.g. server FQDN or YOUR name) [grpc.demo]:
```

- Sign the request with the CA's own key to produce the CA certificate ca.crt:

```shell
openssl x509 -req -days 3650 -in ca.csr -signkey ca.key -out ca.crt
```

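Parameters: x509 is OpenSSL's certificate tool; -req says the input (-in) is a certificate request rather than a certificate, -signkey self-signs it with the given private key, and -days 3650 sets the validity period to 3650 days, i.e. 10 years.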

1.2.2 Generating the end-entity (server) certificate

- Create server.conf:

```ini
[ req ]
default_bits = 2048
distinguished_name = req_distinguished_name
req_extensions = req_ext

[ req_distinguished_name ]
countryName = Country Name (2 letter code)
countryName_default = CN
stateOrProvinceName = State or Province Name (full name)
stateOrProvinceName_default = GuangDong
localityName = Locality Name (eg, city)
localityName_default = ShenZhen
organizationName = Organization Name (eg, company)
organizationName_default = Sheld
commonName = Common Name (e.g. server FQDN or YOUR name)
commonName_max = 64
commonName_default = grpc.demo

[ req_ext ]
subjectAltName = @alt_names

[alt_names]
DNS.1 = grpc.demo
IP = 127.0.0.1
```

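```shell
# Generate the server private key (2048 bits)
openssl genrsa -out server.key 2048

# Create the certificate signing request from server.conf
openssl req -new -sha256 -out server.csr -key server.key -config server.conf

# Sign the request with the CA; -CAcreateserial creates the serial-number file
# if it does not exist, and -extensions/-extfile add the SANs from [ req_ext ]
openssl x509 \
  -req \
  -days 3650 \
  -CA ca.crt \
  -CAkey ca.key \
  -CAcreateserial \
  -in server.csr \
  -out server.pem \
  -extensions req_ext \
  -extfile server.conf
```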

2.1 Introduction and installation

Introduction

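grpc-gateway is a plugin for protoc, Google's protocol buffers compiler. It reads protobuf service definitions and generates a reverse-proxy server that translates a RESTful HTTP API into gRPC. The server is generated from the google.api.http annotations in the service definitions. With grpc-gateway you can serve both gRPC and RESTful APIs.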


grpc-gateway source code: https://github.com/grpc-ecosystem/grpc-gateway

grpc-gateway official documentation: https://grpc-ecosystem.github.io/grpc-gateway/

grpc-gateway official tutorials: https://grpc-ecosystem.github.io/grpc-gateway/docs/tutorials/

grpc-gateway demo: https://github.com/iamrajiv/helloworld-grpc-gateway

The official project provides a helloworld-grpc-gateway demo on GitHub. It is very simple, only a few lines of code, and worth reading first.

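The image below, taken from the official site, shows the idea clearly: you define a proto file, and from it grpc-gateway generates a reverse-proxy layer, the Reverse Proxy that is the core of the whole project. Its goal is to expose the entire gRPC service through an HTTP+JSON interface, and generating the reverse proxy only takes a small amount of configuration in the service definition to attach HTTP semantics.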

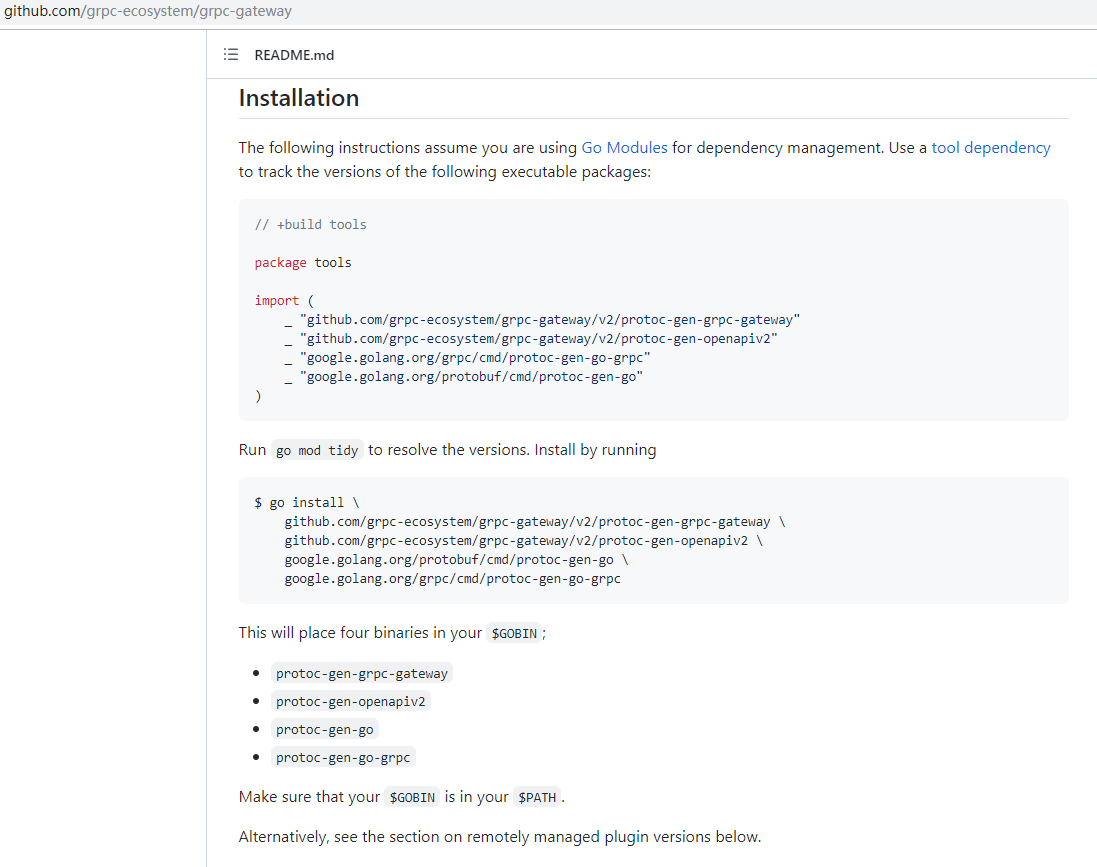

Installation

This tutorial uses the master branch of grpc-gateway, i.e. v2. Tutorials in older blog posts may be based on v1, which differs slightly.

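```shell
go install google.golang.org/protobuf/cmd/protoc-gen-go@latest
go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@latest
go install github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-grpc-gateway@latest
go install github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-openapiv2@latest
```

For v2 the module path must include /v2; without it Go resolves the v1 module, which has no protoc-gen-openapiv2 (v1 ships protoc-gen-swagger instead). The name@version form of go install requires Go 1.16 or later.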

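After installation, the following executables appear in $GOPATH/bin (or in $GOBIN, if it is set):

protoc-gen-go.exe, protoc-gen-go-grpc.exe,

protoc-gen-grpc-gateway.exe, protoc-gen-openapiv2.exe

(The .exe suffix applies on Windows.) This directory must be on PATH, because protoc looks the plugins up there by name.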

Note: grpc-gateway has two major versions, v1 and v2; the default master branch is now v2.

The README.md of the GitHub project is also worth reading; it explains the installation steps and usage in detail.

The steps below largely follow the official guide:

2.2 Defining the gRPC service in proto

Define your gRPC service using protocol buffers

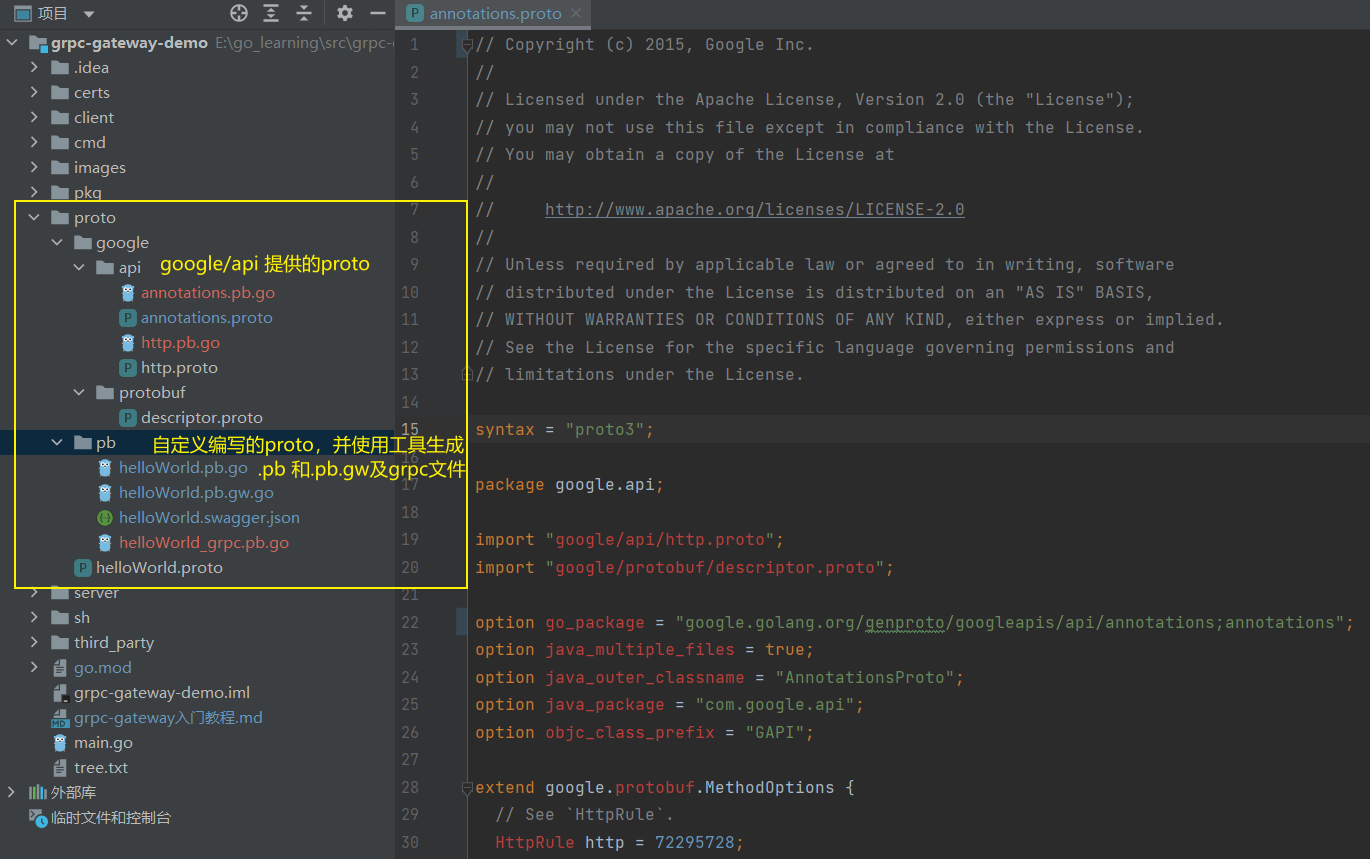

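Unlike the proto files we wrote before, this time we need google/api/annotations.proto. It can be downloaded from the googleapis project at https://github.com/googleapis/googleapis/tree/master/google/api . Because annotations.proto imports google/api/http.proto and google/protobuf/descriptor.proto, download all three files and store them in a directory layout that matches their import paths (descriptor.proto is not part of googleapis; it comes from the protocolbuffers/protobuf repository and also ships in the include directory of the protoc release). Here is the directory structure of the demo project.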

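As shown in the figure, the project is named grpc-gateway-demo. In the proto folder, HelloWorld.proto is the file for this demo; next to it are a google folder and a pb folder. The google folder holds annotations.proto, http.proto and descriptor.proto, and the pb folder holds the pb.go files we are about to generate.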

1. Google's proto files

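The proto directory contains a google/api directory with two API description files provided by Google (annotations.proto and http.proto). They support grpc-gateway's HTTP transcoding by defining the HTTP option that extends Protocol Buffers.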

annotations.proto:

```proto
// Copyright (c) 2015, Google Inc.
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

syntax = "proto3";

package google.api;

import "google/api/http.proto";
import "google/protobuf/descriptor.proto";

option go_package = "google.golang.org/genproto/googleapis/api/annotations;annotations";
option java_multiple_files = true;
option java_outer_classname = "AnnotationsProto";
option java_package = "com.google.api";
option objc_class_prefix = "GAPI";

extend google.protobuf.MethodOptions {
  // See `HttpRule`.
  HttpRule http = 72295728;
}
```

http.proto:

```proto
// Copyright 2015 Google LLC
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

syntax = "proto3";

package google.api;

option cc_enable_arenas = true;
option go_package = "google.golang.org/genproto/googleapis/api/annotations;annotations";
option java_multiple_files = true;
option java_outer_classname = "HttpProto";
option java_package = "com.google.api";
option objc_class_prefix = "GAPI";

// Defines the HTTP configuration for an API service. It contains a list of
// [HttpRule][google.api.HttpRule], each specifying the mapping of an RPC method
// to one or more HTTP REST API methods.
message Http {
  // A list of HTTP configuration rules that apply to individual API methods.
  //
  // **NOTE:** All service configuration rules follow "last one wins" order.
  repeated HttpRule rules = 1;

  // When set to true, URL path parameters will be fully URI-decoded except in
  // cases of single segment matches in reserved expansion, where "%2F" will be
  // left encoded.
  //
  // The default behavior is to not decode RFC 6570 reserved characters in multi
  // segment matches.
  bool fully_decode_reserved_expansion = 2;
}

// # gRPC Transcoding
//
// gRPC Transcoding is a feature for mapping between a gRPC method and one or
// more HTTP REST endpoints. It allows developers to build a single API service
// that supports both gRPC APIs and REST APIs. Many systems, including [Google
// APIs](https://github.com/googleapis/googleapis),
// [Cloud Endpoints](https://cloud.google.com/endpoints), [gRPC
// Gateway](https://github.com/grpc-ecosystem/grpc-gateway),
// and [Envoy](https://github.com/envoyproxy/envoy) proxy support this feature
// and use it for large scale production services.
//
// `HttpRule` defines the schema of the gRPC/REST mapping. The mapping specifies
// how different portions of the gRPC request message are mapped to the URL
// path, URL query parameters, and HTTP request body. It also controls how the
// gRPC response message is mapped to the HTTP response body. `HttpRule` is
// typically specified as an `google.api.http` annotation on the gRPC method.
//
// Each mapping specifies a URL path template and an HTTP method. The path
// template may refer to one or more fields in the gRPC request message, as long
// as each field is a non-repeated field with a primitive (non-message) type.
// The path template controls how fields of the request message are mapped to
// the URL path.
//
// Example:
//
//     service Messaging {
//       rpc GetMessage(GetMessageRequest) returns (Message) {
//         option (google.api.http) = {
//             get: "/v1/{name=messages/*}"
//         };
//       }
//     }
//     message GetMessageRequest {
//       string name = 1; // Mapped to URL path.
//     }
//     message Message {
//       string text = 1; // The resource content.
//     }
//
// This enables an HTTP REST to gRPC mapping as below:
//
// HTTP | gRPC
// -----|-----
// `GET /v1/messages/123456` | `GetMessage(name: "messages/123456")`
//
// Any fields in the request message which are not bound by the path template
// automatically become HTTP query parameters if there is no HTTP request body.
// For example:
//
//     service Messaging {
//       rpc GetMessage(GetMessageRequest) returns (Message) {
//         option (google.api.http) = {
//             get:"/v1/messages/{message_id}"
//         };
//       }
//     }
//     message GetMessageRequest {
//       message SubMessage {
//         string subfield = 1;
//       }
//       string message_id = 1; // Mapped to URL path.
//       int64 revision = 2;    // Mapped to URL query parameter `revision`.
//       SubMessage sub = 3;    // Mapped to URL query parameter `sub.subfield`.
//     }
//
// This enables a HTTP JSON to RPC mapping as below:
//
// HTTP | gRPC
// -----|-----
// `GET /v1/messages/123456?revision=2&sub.subfield=foo` |
// `GetMessage(message_id: "123456" revision: 2 sub: SubMessage(subfield:
// "foo"))`
//
// Note that fields which are mapped to URL query parameters must have a
// primitive type or a repeated primitive type or a non-repeated message type.
// In the case of a repeated type, the parameter can be repeated in the URL
// as `...?param=A&param=B`. In the case of a message type, each field of the
// message is mapped to a separate parameter, such as
// `...?foo.a=A&foo.b=B&foo.c=C`.
//
// For HTTP methods that allow a request body, the `body` field
// specifies the mapping. Consider a REST update method on the
// message resource collection:
//
//     service Messaging {
//       rpc UpdateMessage(UpdateMessageRequest) returns (Message) {
//         option (google.api.http) = {
//           patch: "/v1/messages/{message_id}"
//           body: "message"
//         };
//       }
//     }
//     message UpdateMessageRequest {
//       string message_id = 1; // mapped to the URL
//       Message message = 2;   // mapped to the body
//     }
//
// The following HTTP JSON to RPC mapping is enabled, where the
// representation of the JSON in the request body is determined by
// protos JSON encoding:
//
// HTTP | gRPC
// -----|-----
// `PATCH /v1/messages/123456 { "text": "Hi!" }` | `UpdateMessage(message_id:
// "123456" message { text: "Hi!" })`
//
// The special name `*` can be used in the body mapping to define that
// every field not bound by the path template should be mapped to the
// request body. This enables the following alternative definition of
// the update method:
//
//     service Messaging {
//       rpc UpdateMessage(Message) returns (Message) {
//         option (google.api.http) = {
//           patch: "/v1/messages/{message_id}"
//           body: "*"
//         };
//       }
//     }
//     message Message {
//       string message_id = 1;
//       string text = 2;
//     }
//
//
// The following HTTP JSON to RPC mapping is enabled:
//
// HTTP | gRPC
// -----|-----
// `PATCH /v1/messages/123456 { "text": "Hi!" }` | `UpdateMessage(message_id:
// "123456" text: "Hi!")`
//
// Note that when using `*` in the body mapping, it is not possible to
// have HTTP parameters, as all fields not bound by the path end in
// the body. This makes this option more rarely used in practice when
// defining REST APIs. The common usage of `*` is in custom methods
// which don't use the URL at all for transferring data.
//
// It is possible to define multiple HTTP methods for one RPC by using
// the `additional_bindings` option. Example:
//
//     service Messaging {
//       rpc GetMessage(GetMessageRequest) returns (Message) {
//         option (google.api.http) = {
//           get: "/v1/messages/{message_id}"
//           additional_bindings {
//             get: "/v1/users/{user_id}/messages/{message_id}"
//           }
//         };
//       }
//     }
//     message GetMessageRequest {
//       string message_id = 1;
//       string user_id = 2;
//     }
//
// This enables the following two alternative HTTP JSON to RPC mappings:
//
// HTTP | gRPC
// -----|-----
// `GET /v1/messages/123456` | `GetMessage(message_id: "123456")`
// `GET /v1/users/me/messages/123456` | `GetMessage(user_id: "me" message_id:
// "123456")`
//
// ## Rules for HTTP mapping
//
// 1. Leaf request fields (recursive expansion nested messages in the request
//    message) are classified into three categories:
//    - Fields referred by the path template. They are passed via the URL path.
//    - Fields referred by the [HttpRule.body][google.api.HttpRule.body]. They are passed via the HTTP
//      request body.
//    - All other fields are passed via the URL query parameters, and the
//      parameter name is the field path in the request message. A repeated
//      field can be represented as multiple query parameters under the same
//      name.
// 2. If [HttpRule.body][google.api.HttpRule.body] is "*", there is no URL query parameter, all fields
//    are passed via URL path and HTTP request body.
// 3. If [HttpRule.body][google.api.HttpRule.body] is omitted, there is no HTTP request body, all
//    fields are passed via URL path and URL query parameters.
//
// ### Path template syntax
//
//     Template = "/" Segments [ Verb ] ;
//     Segments = Segment { "/" Segment } ;
//     Segment = "*" | "**" | LITERAL | Variable ;
//     Variable = "{" FieldPath [ "=" Segments ] "}" ;
//     FieldPath = IDENT { "." IDENT } ;
//     Verb = ":" LITERAL ;
//
// The syntax `*` matches a single URL path segment. The syntax `**` matches
// zero or more URL path segments, which must be the last part of the URL path
// except the `Verb`.
//
// The syntax `Variable` matches part of the URL path as specified by its
// template. A variable template must not contain other variables. If a variable
// matches a single path segment, its template may be omitted, e.g. `{var}`
// is equivalent to `{var=*}`.
//
// The syntax `LITERAL` matches literal text in the URL path. If the `LITERAL`
// contains any reserved character, such characters should be percent-encoded
// before the matching.
//
// If a variable contains exactly one path segment, such as `"{var}"` or
// `"{var=*}"`, when such a variable is expanded into a URL path on the client
// side, all characters except `[-_.~0-9a-zA-Z]` are percent-encoded. The
// server side does the reverse decoding. Such variables show up in the
// [Discovery
// Document](https://developers.google.com/discovery/v1/reference/apis) as
// `{var}`.
//
// If a variable contains multiple path segments, such as `"{var=foo/*}"`
// or `"{var=**}"`, when such a variable is expanded into a URL path on the
// client side, all characters except `[-_.~/0-9a-zA-Z]` are percent-encoded.
// The server side does the reverse decoding, except "%2F" and "%2f" are left
// unchanged. Such variables show up in the
// [Discovery
// Document](https://developers.google.com/discovery/v1/reference/apis) as
// `{+var}`.
//
// ## Using gRPC API Service Configuration
//
// gRPC API Service Configuration (service config) is a configuration language
// for configuring a gRPC service to become a user-facing product. The
// service config is simply the YAML representation of the `google.api.Service`
// proto message.
//
// As an alternative to annotating your proto file, you can configure gRPC
// transcoding in your service config YAML files. You do this by specifying a
// `HttpRule` that maps the gRPC method to a REST endpoint, achieving the same
// effect as the proto annotation. This can be particularly useful if you
// have a proto that is reused in multiple services. Note that any transcoding
// specified in the service config will override any matching transcoding
// configuration in the proto.
//
// Example:
//
//     http:
//       rules:
//         # Selects a gRPC method and applies HttpRule to it.
//         - selector: example.v1.Messaging.GetMessage
//           get: /v1/messages/{message_id}/{sub.subfield}
//
// ## Special notes
//
// When gRPC Transcoding is used to map a gRPC to JSON REST endpoints, the
// proto to JSON conversion must follow the [proto3
// specification](https://developers.google.com/protocol-buffers/docs/proto3#json).
//
// While the single segment variable follows the semantics of
// [RFC 6570](https://tools.ietf.org/html/rfc6570) Section 3.2.2 Simple String
// Expansion, the multi segment variable **does not** follow RFC 6570 Section
// 3.2.3 Reserved Expansion. The reason is that the Reserved Expansion
// does not expand special characters like `?` and `#`, which would lead
// to invalid URLs. As the result, gRPC Transcoding uses a custom encoding
// for multi segment variables.
//
// The path variables **must not** refer to any repeated or mapped field,
// because client libraries are not capable of handling such variable expansion.
//
// The path variables **must not** capture the leading "/" character. The reason
// is that the most common use case "{var}" does not capture the leading "/"
// character. For consistency, all path variables must share the same behavior.
//
// Repeated message fields must not be mapped to URL query parameters, because
// no client library can support such complicated mapping.
//
// If an API needs to use a JSON array for request or response body, it can map
// the request or response body to a repeated field. However, some gRPC
// Transcoding implementations may not support this feature.
message HttpRule {
  // Selects a method to which this rule applies.
  //
  // Refer to [selector][google.api.DocumentationRule.selector] for syntax details.
  string selector = 1;

  // Determines the URL pattern is matched by this rules. This pattern can be
  // used with any of the {get|put|post|delete|patch} methods. A custom method
  // can be defined using the 'custom' field.
  oneof pattern {
    // Maps to HTTP GET. Used for listing and getting information about
    // resources.
    string get = 2;

    // Maps to HTTP PUT. Used for replacing a resource.
    string put = 3;

    // Maps to HTTP POST. Used for creating a resource or performing an action.
    string post = 4;

    // Maps to HTTP DELETE. Used for deleting a resource.
    string delete = 5;

    // Maps to HTTP PATCH. Used for updating a resource.
    string patch = 6;

    // The custom pattern is used for specifying an HTTP method that is not
    // included in the `pattern` field, such as HEAD, or "*" to leave the
    // HTTP method unspecified for this rule. The wild-card rule is useful
    // for services that provide content to Web (HTML) clients.
    CustomHttpPattern custom = 8;
  }

  // The name of the request field whose value is mapped to the HTTP request
  // body, or `*` for mapping all request fields not captured by the path
  // pattern to the HTTP body, or omitted for not having any HTTP request body.
  //
  // NOTE: the referred field must be present at the top-level of the request
  // message type.
  string body = 7;

  // Optional. The name of the response field whose value is mapped to the HTTP
  // response body. When omitted, the entire response message will be used
  // as the HTTP response body.
  //
  // NOTE: The referred field must be present at the top-level of the response
  // message type.
  string response_body = 12;

  // Additional HTTP bindings for the selector. Nested bindings must
  // not contain an `additional_bindings` field themselves (that is,
  // the nesting may only be one level deep).
  repeated HttpRule additional_bindings = 11;
}

// A custom pattern is used for defining custom HTTP verb.
message CustomHttpPattern {
  // The name of this custom HTTP verb.
  string kind = 1;

  // The path matched by this custom verb.
  string path = 2;
}
```

descriptor.proto:

```proto
// Protocol Buffers - Google's data interchange format
// Copyright 2008 Google Inc. All rights reserved.
// https://developers.google.com/protocol-buffers/
//
// Redistribution and use in source and binary forms, with or without
// modification, are permitted provided that the following conditions are
// met:
//
//     * Redistributions of source code must retain the above copyright
// notice, this list of conditions and the following disclaimer.
//     * Redistributions in binary form must reproduce the above
// copyright notice, this list of conditions and the following disclaimer
// in the documentation and/or other materials provided with the
// distribution.
//     * Neither the name of Google Inc. nor the names of its
// contributors may be used to endorse or promote products derived from
// this software without specific prior written permission.
//
// THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS
// "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT
// LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR
// A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT
// OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,
// SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT
// LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,
// DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY
// THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT
// (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE
// OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

// Author: kenton@google.com (Kenton Varda)
//  Based on original Protocol Buffers design by
//  Sanjay Ghemawat, Jeff Dean, and others.
//
// The messages in this file describe the definitions found in .proto files.
// A valid .proto file can be translated directly to a FileDescriptorProto
// without any other information (e.g. without reading its imports).

syntax = "proto2";

package google.protobuf;

option go_package = "github.com/golang/protobuf/protoc-gen-go/descriptor;descriptor";
option java_package = "com.google.protobuf";
option java_outer_classname = "DescriptorProtos";
option csharp_namespace = "Google.Protobuf.Reflection";
option objc_class_prefix = "GPB";
option cc_enable_arenas = true;

// descriptor.proto must be optimized for speed because reflection-based
// algorithms don't work during bootstrapping.
option optimize_for = SPEED;

// The protocol compiler can output a FileDescriptorSet containing the .proto
// files it parses.
message FileDescriptorSet {
  repeated FileDescriptorProto file = 1;
}

// Describes a complete .proto file.
message FileDescriptorProto {
  optional string name = 1;     // file name, relative to root of source tree
  optional string package = 2;  // e.g. "foo", "foo.bar", etc.

  // Names of files imported by this file.
  repeated string dependency = 3;
  // Indexes of the public imported files in the dependency list above.
  repeated int32 public_dependency = 10;
  // Indexes of the weak imported files in the dependency list.
  // For Google-internal migration only. Do not use.
  repeated int32 weak_dependency = 11;

  // All top-level definitions in this file.
  repeated DescriptorProto message_type = 4;
  repeated EnumDescriptorProto enum_type = 5;
  repeated ServiceDescriptorProto service = 6;
  repeated FieldDescriptorProto extension = 7;

  optional FileOptions options = 8;

  // This field contains optional information about the original source code.
  // You may safely remove this entire field without harming runtime
  // functionality of the descriptors -- the information is needed only by
  // development tools.
  optional SourceCodeInfo source_code_info = 9;

  // The syntax of the proto file.
  // The supported values are "proto2" and "proto3".
  optional string syntax = 12;
}

// Describes a message type.
message DescriptorProto {
  optional string name = 1;

  repeated FieldDescriptorProto field = 2;
  repeated FieldDescriptorProto extension = 6;

  repeated DescriptorProto nested_type = 3;
  repeated EnumDescriptorProto enum_type = 4;

  message ExtensionRange {
    optional int32 start = 1;
    optional int32 end = 2;

    optional ExtensionRangeOptions options = 3;
  }
  repeated ExtensionRange extension_range = 5;

  repeated OneofDescriptorProto oneof_decl = 8;

  optional MessageOptions options = 7;

  // Range of reserved tag numbers. Reserved tag numbers may not be used by
  // fields or extension ranges in the same message. Reserved ranges may
  // not overlap.
  message ReservedRange {
    optional int32 start = 1; // Inclusive.
    optional int32 end = 2;   // Exclusive.
  }
  repeated ReservedRange reserved_range = 9;
  // Reserved field names, which may not be used by fields in the same message.
  // A given name may only be reserved once.
  repeated string reserved_name = 10;
}

message ExtensionRangeOptions {
  // The parser stores options it doesn't recognize here. See above.
  repeated UninterpretedOption uninterpreted_option = 999;

  // Clients can define custom options in extensions of this message. See above.
  extensions 1000 to max;
}

// Describes a field within a message.
message FieldDescriptorProto {
  enum Type {
    // 0 is reserved for errors.
    // Order is weird for historical reasons.
    TYPE_DOUBLE = 1;
    TYPE_FLOAT = 2;
    // Not ZigZag encoded. Negative numbers take 10 bytes. Use TYPE_SINT64 if
    // negative values are likely.
    TYPE_INT64 = 3;
    TYPE_UINT64 = 4;
    // Not ZigZag encoded. Negative numbers take 10 bytes. Use TYPE_SINT32 if
    // negative values are likely.
    TYPE_INT32 = 5;
    TYPE_FIXED64 = 6;
    TYPE_FIXED32 = 7;
    TYPE_BOOL = 8;
    TYPE_STRING = 9;
    // Tag-delimited aggregate.
    // Group type is deprecated and not supported in proto3. However, Proto3
    // implementations should still be able to parse the group wire format and
    // treat group fields as unknown fields.
    TYPE_GROUP = 10;
    TYPE_MESSAGE = 11;  // Length-delimited aggregate.

    // New in version 2.
    TYPE_BYTES = 12;
    TYPE_UINT32 = 13;
    TYPE_ENUM = 14;
    TYPE_SFIXED32 = 15;
    TYPE_SFIXED64 = 16;
    TYPE_SINT32 = 17;  // Uses ZigZag encoding.
    TYPE_SINT64 = 18;  // Uses ZigZag encoding.
  };

  enum Label {
    // 0 is reserved for errors
    LABEL_OPTIONAL = 1;
    LABEL_REQUIRED = 2;
    LABEL_REPEATED = 3;
  };

  optional string name = 1;
  optional int32 number = 3;
  optional Label label = 4;

  // If type_name is set, this need not be set. If both this and type_name
  // are set, this must be one of TYPE_ENUM, TYPE_MESSAGE or TYPE_GROUP.
  optional Type type = 5;

  // For message and enum types, this is the name of the type. If the name
  // starts with a '.', it is fully-qualified. Otherwise, C++-like scoping
  // rules are used to find the type (i.e. first the nested types within this
  // message are searched, then within the parent, on up to the root
  // namespace).
  optional string type_name = 6;

  // For extensions, this is the name of the type being extended. It is
  // resolved in the same manner as type_name.
  optional string extendee = 2;

  // For numeric types, contains the original text representation of the value.
  // For booleans, "true" or "false".
  // For strings, contains the default text contents (not escaped in any way).
  // For bytes, contains the C escaped value. All bytes >= 128 are escaped.
  // TODO(kenton): Base-64 encode?
  optional string default_value = 7;

  // If set, gives the index of a oneof in the containing type's oneof_decl
  // list. This field is a member of that oneof.
  optional int32 oneof_index = 9;

  // JSON name of this field. The value is set by protocol compiler. If the
  // user has set a "json_name" option on this field, that option's value
  // will be used. Otherwise, it's deduced from the field's name by converting
  // it to camelCase.
  optional string json_name = 10;

  optional FieldOptions options = 8;
}

// Describes a oneof.
message OneofDescriptorProto {
  optional string name = 1;
  optional OneofOptions options = 2;
}

// Describes an enum type.
message EnumDescriptorProto {
  optional string name = 1;

  repeated EnumValueDescriptorProto value = 2;

  optional EnumOptions options = 3;

  // Range of reserved numeric values. Reserved values may not be used by
  // entries in the same enum. Reserved ranges may not overlap.
  //
  // Note that this is distinct from DescriptorProto.ReservedRange in that it
  // is inclusive such that it can appropriately represent the entire int32
  // domain.
  message EnumReservedRange {
    optional int32 start = 1; // Inclusive.
    optional int32 end = 2;   // Inclusive.
  }

  // Range of reserved numeric values. Reserved numeric values may not be used
  // by enum values in the same enum declaration. Reserved ranges may not
  // overlap.
  repeated EnumReservedRange reserved_range = 4;

  // Reserved enum value names, which may not be reused. A given name may only
  // be reserved once.
  repeated string reserved_name = 5;
}

// Describes a value within an enum.
message EnumValueDescriptorProto {
  optional string name = 1;
  optional int32 number = 2;

  optional EnumValueOptions options = 3;
}

// Describes a service.
message ServiceDescriptorProto {
  optional string name = 1;
  repeated MethodDescriptorProto method = 2;

  optional ServiceOptions options = 3;
}

// Describes a method of a service.
message MethodDescriptorProto {
  optional string name = 1;

  // Input and output type names. These are resolved in the same way as
  // FieldDescriptorProto.type_name, but must refer to a message type.
  optional string input_type = 2;
  optional string output_type = 3;

  optional MethodOptions options = 4;

  // Identifies if client streams multiple client messages
  optional bool client_streaming = 5 [default=false];
  // Identifies if server streams multiple server messages
  optional bool server_streaming = 6 [default=false];
}


// ===================================================================
// Options

// Each of the definitions above may have "options" attached. These are
// just annotations which may cause code to be generated slightly differently
// or may contain hints for code that manipulates protocol messages.
//
// Clients may define custom options as extensions of the *Options messages.
// These extensions may not yet be known at parsing time, so the parser cannot
// store the values in them. Instead it stores them in a field in the *Options
// message called uninterpreted_option. This field must have the same name
// across all *Options messages. We then use this field to populate the
// extensions when we build a descriptor, at which point all protos have been
// parsed and so all extensions are known.
//
// Extension numbers for custom options may be chosen as follows:
// * For options which will only be used within a single application or
//   organization, or for experimental options, use field numbers 50000
//   through 99999. It is up to you to ensure that you do not use the
//   same number for multiple options.
// * For options which will be published and used publicly by multiple
//   independent entities, e-mail protobuf-global-extension-registry@google.com
//   to reserve extension numbers. Simply provide your project name (e.g.
//   Objective-C plugin) and your project website (if available) -- there's no
//   need to explain how you intend to use them. Usually you only need one
//   extension number. You can declare multiple options with only one extension
//   number by putting them in a sub-message. See the Custom Options section of
//   the docs for examples:
//   https://developers.google.com/protocol-buffers/docs/proto#options
//   If this turns out to be popular, a web service will be set up
//   to automatically assign option numbers.

message FileOptions {

  // Sets the Java package where classes generated from this .proto will be
  // placed. By default, the proto package is used, but this is often
  // inappropriate because proto packages do not normally start with backwards
  // domain names.
  optional string java_package = 1;


  // If set, all the classes from the .proto file are wrapped in a single
  // outer class with the given name. This applies to both Proto1
  // (equivalent to the old "--one_java_file" option) and Proto2 (where
  // a .proto always translates to a single class, but you may want to
  // explicitly choose the class name).
  optional string java_outer_classname = 8;

  // If set true, then the Java code generator will generate a separate .java
  // file for each top-level message, enum, and service defined in the .proto
  // file. Thus, these types will *not* be nested inside the outer class
  // named by java_outer_classname. However, the outer class will still be
  // generated to contain the file's getDescriptor() method as well as any
  // top-level extensions defined in the file.
  optional bool java_multiple_files = 10 [default=false];

  // This option does nothing.
  optional bool java_generate_equals_and_hash = 20 [deprecated=true];

  // If set true, then the Java2 code generator will generate code that
  // throws an exception whenever an attempt is made to assign a non-UTF-8
  // byte sequence to a string field.
  // Message reflection will do the same.
  // However, an extension field still accepts non-UTF-8 byte sequences.
  // This option has no effect on when used with the lite runtime.
  optional bool java_string_check_utf8 = 27 [default=false];


  // Generated classes can be optimized for speed or code size.
  enum OptimizeMode {
    SPEED = 1;         // Generate complete code for parsing, serialization,
                       // etc.
    CODE_SIZE = 2;     // Use ReflectionOps to implement these methods.
    LITE_RUNTIME = 3;  // Generate code using MessageLite and the lite runtime.
  }
  optional OptimizeMode optimize_for = 9 [default=SPEED];

  // Sets the Go package where structs generated from this .proto will be
  // placed. If omitted, the Go package will be derived from the following:
  //   - The basename of the package import path, if provided.
  //   - Otherwise, the package statement in the .proto file, if present.
  //   - Otherwise, the basename of the .proto file, without extension.
  optional string go_package = 11;

  // Should generic services be generated in each language? "Generic" services
  // are not specific to any particular RPC system. They are generated by the
  // main code generators in each language (without additional plugins).
  // Generic services were the only kind of service generation supported by
  // early versions of google.protobuf.
  //
  // Generic services are now considered deprecated in favor of using plugins
  // that generate code specific to your particular RPC system. Therefore,
  // these default to false. Old code which depends on generic services should
  // explicitly set them to true.
  optional bool cc_generic_services = 16 [default=false];
  optional bool java_generic_services = 17 [default=false];
  optional bool py_generic_services = 18 [default=false];
  optional bool php_generic_services = 42 [default=false];

  // Is this file deprecated?
  // Depending on the target platform, this can emit Deprecated annotations
  // for everything in the file, or it will be completely ignored; in the very
  // least, this is a formalization for deprecating files.
  optional bool deprecated = 23 [default=false];

  // Enables the use of arenas for the proto messages in this file. This applies
  // only to generated classes for C++.
  optional bool cc_enable_arenas = 31 [default=false];


  // Sets the objective c class prefix which is prepended to all objective c
  // generated classes from this .proto. There is no default.
  optional string objc_class_prefix = 36;

  // Namespace for generated classes; defaults to the package.
  optional string csharp_namespace = 37;

  // By default Swift generators will take the proto package and CamelCase it
  // replacing '.' with underscore and use that to prefix the types/symbols
  // defined. When this options is provided, they will use this value instead
  // to prefix the types/symbols defined.
```
optional string swift_prefix = 39; // Sets the php class prefix which is prepended to all php generated classes // from this .proto. Default is empty. optional string php_class_prefix = 40; // Use this option to change the namespace of php generated classes. Default // is empty. When this option is empty, the package name will be used for // determining the namespace. optional string php_namespace = 41; // Use this option to change the namespace of php generated metadata classes. // Default is empty. When this option is empty, the proto file name will be used // for determining the namespace. optional string php_metadata_namespace = 44; // Use this option to change the package of ruby generated classes. Default // is empty. When this option is not set, the package name will be used for // determining the ruby package. optional string ruby_package = 45; // The parser stores options it doesn't recognize here. // See the documentation for the "Options" section above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. // See the documentation for the "Options" section above. extensions 1000 to max; reserved 38; } message MessageOptions { // Set true to use the old proto1 MessageSet wire format for extensions. // This is provided for backwards-compatibility with the MessageSet wire // format. You should not use this for any other reason: It's less // efficient, has fewer features, and is more complicated. // // The message must be defined exactly as follows: // message Foo { // option message_set_wire_format = true; // extensions 4 to max; // } // Note that the message cannot have any defined fields; MessageSets only // have extensions. // // All extensions of your type must be singular messages; e.g. they cannot // be int32s, enums, or repeated messages. // // Because this is an option, the above two restrictions are not enforced by // the protocol compiler. 
optional bool message_set_wire_format = 1 [default=false]; // Disables the generation of the standard "descriptor()" accessor, which can // conflict with a field of the same name. This is meant to make migration // from proto1 easier; new code should avoid fields named "descriptor". optional bool no_standard_descriptor_accessor = 2 [default=false]; // Is this message deprecated? // Depending on the target platform, this can emit Deprecated annotations // for the message, or it will be completely ignored; in the very least, // this is a formalization for deprecating messages. optional bool deprecated = 3 [default=false]; // Whether the message is an automatically generated map entry type for the // maps field. // // For maps fields: // map<KeyType, ValueType> map_field = 1; // The parsed descriptor looks like: // message MapFieldEntry { // option map_entry = true; // optional KeyType key = 1; // optional ValueType value = 2; // } // repeated MapFieldEntry map_field = 1; // // Implementations may choose not to generate the map_entry=true message, but // use a native map in the target language to hold the keys and values. // The reflection APIs in such implementions still need to work as // if the field is a repeated message field. // // NOTE: Do not set the option in .proto files. Always use the maps syntax // instead. The option should only be implicitly set by the proto compiler // parser. optional bool map_entry = 7; reserved 8; // javalite_serializable reserved 9; // javanano_as_lite // The parser stores options it doesn't recognize here. See above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } message FieldOptions { // The ctype option instructs the C++ code generator to use a different // representation of the field than it normally would. See the specific // options below. 
This option is not yet implemented in the open source // release -- sorry, we'll try to include it in a future version! optional CType ctype = 1 [default = STRING]; enum CType { // Default mode. STRING = 0; CORD = 1; STRING_PIECE = 2; } // The packed option can be enabled for repeated primitive fields to enable // a more efficient representation on the wire. Rather than repeatedly // writing the tag and type for each element, the entire array is encoded as // a single length-delimited blob. In proto3, only explicit setting it to // false will avoid using packed encoding. optional bool packed = 2; // The jstype option determines the JavaScript type used for values of the // field. The option is permitted only for 64 bit integral and fixed types // (int64, uint64, sint64, fixed64, sfixed64). A field with jstype JS_STRING // is represented as JavaScript string, which avoids loss of precision that // can happen when a large value is converted to a floating point JavaScript. // Specifying JS_NUMBER for the jstype causes the generated JavaScript code to // use the JavaScript "number" type. The behavior of the default option // JS_NORMAL is implementation dependent. // // This option is an enum to permit additional types to be added, e.g. // goog.math.Integer. optional JSType jstype = 6 [default = JS_NORMAL]; enum JSType { // Use the default type. JS_NORMAL = 0; // Use JavaScript strings. JS_STRING = 1; // Use JavaScript numbers. JS_NUMBER = 2; } // Should this field be parsed lazily? Lazy applies only to message-type // fields. It means that when the outer message is initially parsed, the // inner message's contents will not be parsed but instead stored in encoded // form. The inner message will actually be parsed when it is first accessed. // // This is only a hint. Implementations are free to choose whether to use // eager or lazy parsing regardless of the value of this option. 
However, // setting this option true suggests that the protocol author believes that // using lazy parsing on this field is worth the additional bookkeeping // overhead typically needed to implement it. // // This option does not affect the public interface of any generated code; // all method signatures remain the same. Furthermore, thread-safety of the // interface is not affected by this option; const methods remain safe to // call from multiple threads concurrently, while non-const methods continue // to require exclusive access. // // // Note that implementations may choose not to check required fields within // a lazy sub-message. That is, calling IsInitialized() on the outer message // may return true even if the inner message has missing required fields. // This is necessary because otherwise the inner message would have to be // parsed in order to perform the check, defeating the purpose of lazy // parsing. An implementation which chooses not to check required fields // must be consistent about it. That is, for any particular sub-message, the // implementation must either *always* check its required fields, or *never* // check its required fields, regardless of whether or not the message has // been parsed. optional bool lazy = 5 [default=false]; // Is this field deprecated? // Depending on the target platform, this can emit Deprecated annotations // for accessors, or it will be completely ignored; in the very least, this // is a formalization for deprecating fields. optional bool deprecated = 3 [default=false]; // For Google-internal migration only. Do not use. optional bool weak = 10 [default=false]; // The parser stores options it doesn't recognize here. See above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; reserved 4; // removed jtype } message OneofOptions { // The parser stores options it doesn't recognize here. See above. 
repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } message EnumOptions { // Set this option to true to allow mapping different tag names to the same // value. optional bool allow_alias = 2; // Is this enum deprecated? // Depending on the target platform, this can emit Deprecated annotations // for the enum, or it will be completely ignored; in the very least, this // is a formalization for deprecating enums. optional bool deprecated = 3 [default=false]; reserved 5; // javanano_as_lite // The parser stores options it doesn't recognize here. See above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } message EnumValueOptions { // Is this enum value deprecated? // Depending on the target platform, this can emit Deprecated annotations // for the enum value, or it will be completely ignored; in the very least, // this is a formalization for deprecating enum values. optional bool deprecated = 1 [default=false]; // The parser stores options it doesn't recognize here. See above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } message ServiceOptions { // Note: Field numbers 1 through 32 are reserved for Google's internal RPC // framework. We apologize for hoarding these numbers to ourselves, but // we were already using them long before we decided to release Protocol // Buffers. // Is this service deprecated? // Depending on the target platform, this can emit Deprecated annotations // for the service, or it will be completely ignored; in the very least, // this is a formalization for deprecating services. optional bool deprecated = 33 [default=false]; // The parser stores options it doesn't recognize here. See above. 
repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } message MethodOptions { // Note: Field numbers 1 through 32 are reserved for Google's internal RPC // framework. We apologize for hoarding these numbers to ourselves, but // we were already using them long before we decided to release Protocol // Buffers. // Is this method deprecated? // Depending on the target platform, this can emit Deprecated annotations // for the method, or it will be completely ignored; in the very least, // this is a formalization for deprecating methods. optional bool deprecated = 33 [default=false]; // Is this method side-effect-free (or safe in HTTP parlance), or idempotent, // or neither? HTTP based RPC implementation may choose GET verb for safe // methods, and PUT verb for idempotent methods instead of the default POST. enum IdempotencyLevel { IDEMPOTENCY_UNKNOWN = 0; NO_SIDE_EFFECTS = 1; // implies idempotent IDEMPOTENT = 2; // idempotent, but may have side effects } optional IdempotencyLevel idempotency_level = 34 [default=IDEMPOTENCY_UNKNOWN]; // The parser stores options it doesn't recognize here. See above. repeated UninterpretedOption uninterpreted_option = 999; // Clients can define custom options in extensions of this message. See above. extensions 1000 to max; } // A message representing a option the parser does not recognize. This only // appears in options protos created by the compiler::Parser class. // DescriptorPool resolves these when building Descriptor objects. Therefore, // options protos in descriptor objects (e.g. returned by Descriptor::options(), // or produced by Descriptor::CopyTo()) will never have UninterpretedOptions // in them. message UninterpretedOption { // The name of the uninterpreted option. Each string represents a segment in // a dot-separated name. 
is_extension is true iff a segment represents an // extension (denoted with parentheses in options specs in .proto files). // E.g.,{ ["foo", false], ["bar.baz", true], ["qux", false] } represents // "foo.(bar.baz).qux". message NamePart { required string name_part = 1; required bool is_extension = 2; } repeated NamePart name = 2; // The value of the uninterpreted option, in whatever type the tokenizer // identified it as during parsing. Exactly one of these should be set. optional string identifier_value = 3; optional uint64 positive_int_value = 4; optional int64 negative_int_value = 5; optional double double_value = 6; optional bytes string_value = 7; optional string aggregate_value = 8; } // =================================================================== // Optional source code info // Encapsulates information about the original source file from which a // FileDescriptorProto was generated. message SourceCodeInfo { // A Location identifies a piece of source code in a .proto file which // corresponds to a particular definition. This information is intended // to be useful to IDEs, code indexers, documentation generators, and similar // tools. // // For example, say we have a file like: // message Foo { // optional string foo = 1; // } // Let's look at just the field definition: // optional string foo = 1; // ^ ^^ ^^ ^ ^^^ // a bc de f ghi // We have the following locations: // span path represents // [a,i) [ 4, 0, 2, 0 ] The whole field definition. // [a,b) [ 4, 0, 2, 0, 4 ] The label (optional). // [c,d) [ 4, 0, 2, 0, 5 ] The type (string). // [e,f) [ 4, 0, 2, 0, 1 ] The name (foo). // [g,h) [ 4, 0, 2, 0, 3 ] The number (1). // // Notes: // - A location may refer to a repeated field itself (i.e. not to any // particular index within it). This is used whenever a set of elements are // logically enclosed in a single code segment. 
For example, an entire // extend block (possibly containing multiple extension definitions) will // have an outer location whose path refers to the "extensions" repeated // field without an index. // - Multiple locations may have the same path. This happens when a single // logical declaration is spread out across multiple places. The most // obvious example is the "extend" block again -- there may be multiple // extend blocks in the same scope, each of which will have the same path. // - A location's span is not always a subset of its parent's span. For // example, the "extendee" of an extension declaration appears at the // beginning of the "extend" block and is shared by all extensions within // the block. // - Just because a location's span is a subset of some other location's span // does not mean that it is a descendent. For example, a "group" defines // both a type and a field in a single declaration. Thus, the locations // corresponding to the type and field and their components will overlap. // - Code which tries to interpret locations should probably be designed to // ignore those that it doesn't understand, as more types of locations could // be recorded in the future. repeated Location location = 1; message Location { // Identifies which part of the FileDescriptorProto was defined at this // location. // // Each element is a field number or an index. They form a path from // the root FileDescriptorProto to the place where the definition. For // example, this path: // [ 4, 3, 2, 7, 1 ] // refers to: // file.message_type(3) // 4, 3 // .field(7) // 2, 7 // .name() // 1 // This is because FileDescriptorProto.message_type has field number 4: // repeated DescriptorProto message_type = 4; // and DescriptorProto.field has field number 2: // repeated FieldDescriptorProto field = 2; // and FieldDescriptorProto.name has field number 1: // optional string name = 1; // // Thus, the above path gives the location of a field name. 
If we removed // the last element: // [ 4, 3, 2, 7 ] // this path refers to the whole field declaration (from the beginning // of the label to the terminating semicolon). repeated int32 path = 1 [packed=true]; // Always has exactly three or four elements: start line, start column, // end line (optional, otherwise assumed same as start line), end column. // These are packed into a single field for efficiency. Note that line // and column numbers are zero-based -- typically you will want to add // 1 to each before displaying to a user. repeated int32 span = 2 [packed=true]; // If this SourceCodeInfo represents a complete declaration, these are any // comments appearing before and after the declaration which appear to be // attached to the declaration. // // A series of line comments appearing on consecutive lines, with no other // tokens appearing on those lines, will be treated as a single comment. // // leading_detached_comments will keep paragraphs of comments that appear // before (but not connected to) the current element. Each paragraph, // separated by empty lines, will be one comment element in the repeated // field. // // Only the comment content is provided; comment markers (e.g. //) are // stripped out. For block comments, leading whitespace and an asterisk // will be stripped from the beginning of each line other than the first. // Newlines are included in the output. // // Examples: // // optional int32 foo = 1; // Comment attached to foo. // // Comment attached to bar. // optional int32 bar = 2; // // optional string baz = 3; // // Comment attached to baz. // // Another line attached to baz. // // // Comment attached to qux. // // // // Another line attached to qux. // optional double qux = 4; // // // Detached comment for corge. This is not leading or trailing comments // // to qux or corge because there are blank lines separating it from // // both. // // // Detached comment for corge paragraph 2. 
// // optional string corge = 5; // /* Block comment attached // * to corge. Leading asterisks // * will be removed. */ // /* Block comment attached to // * grault. */ // optional int32 grault = 6; // // // ignored detached comments. optional string leading_comments = 3; optional string trailing_comments = 4; repeated string leading_detached_comments = 6; } } // Describes the relationship between generated code and its original source // file. A GeneratedCodeInfo message is associated with only one generated // source file, but may contain references to different source .proto files. message GeneratedCodeInfo { // An Annotation connects some span of text in generated code to an element // of its generating .proto file. repeated Annotation annotation = 1; message Annotation { // Identifies the element in the original source .proto file. This field // is formatted the same as SourceCodeInfo.Location.path. repeated int32 path = 1 [packed=true]; // Identifies the filesystem path to the original source .proto. optional string source_file = 2; // Identifies the starting offset in bytes in the generated code // that relates to the identified object. optional int32 begin = 3; // Identifies the ending offset in bytes in the generated code that // relates to the identified offset. The end offset should be one past // the last relevant byte (so the length of the text = end - begin). optional int32 end = 4; } }

2. Custom proto file

syntax = "proto3";

package proto;

option go_package = "../proto/pb";

import "google/api/annotations.proto";

service HelloWorld {
  rpc SayHelloWorld(HelloWorldRequest) returns (HelloWorldResponse) {
    option (google.api.http) = {
      post: "/hello/say"
      body: "*"
    };
  }
}

message HelloWorldRequest {
  string name = 1;
}

message HelloWorldResponse {
  string message = 1;
}

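This differs slightly from the proto files we wrote before: because we also want grpc-gateway to serve HTTP requests, the code adds the block highlighted in the image below, which maps the SayHelloWorld RPC to an HTTP POST route, /hello/say.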

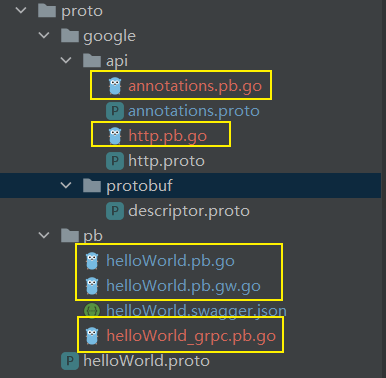

2.3 Generate the pb and pb.gw files

Generate gRPC stubs

After writing the proto file, use the protoc plugins to generate the .pb.go and .pb.gw.go files we need. The command was already shown in section 1.1; here it is again:

#!/usr/bin/env bash
protoDir="../proto"
outDir="../proto"

# Compile the google.api protos
protoc -I ${protoDir}/ ${protoDir}/google/api/*.proto \
  --go_out ${outDir} \
  --go_opt paths=source_relative

# Compile the custom protos
protoc -I ${protoDir}/ ${protoDir}/*.proto \
  --go_out ${outDir}/pb \
  --go_opt paths=source_relative \
  --go-grpc_out ${outDir}/pb \
  --go-grpc_opt paths=source_relative \
  --go-grpc_opt require_unimplemented_servers=false \
  --grpc-gateway_out ${outDir}/pb \
  --grpc-gateway_opt logtostderr=true \
  --grpc-gateway_opt paths=source_relative \
  --grpc-gateway_opt generate_unbound_methods=true \
  --openapiv2_out ${outDir}/pb \
  --openapiv2_opt logtostderr=true

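Based on the output directories and the go_package option in the proto file, the command writes the generated files (.pb.go, _grpc.pb.go, .pb.gw.go and .swagger.json) into the corresponding directory.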

2.4 Implement the service

Implement your service in gRPC as usual.

With the proto file in place, the next step is the server's business logic. First, create helloWorld.go in the server directory and implement our interface:

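package server

import (
	"context"

	"grpc-gateway-demo/proto/pb"
)

// helloWorldService implements pb.HelloWorldServer. Because the stubs were
// generated with require_unimplemented_servers=false, it does not need to
// embed pb.UnimplementedHelloWorldServer.
type helloWorldService struct{}

func NewHelloWorldService() *helloWorldService {
	return &helloWorldService{}
}

// SayHelloWorld handles the SayHelloWorld RPC; through the gateway it is
// also reachable as POST /hello/say.
func (h *helloWorldService) SayHelloWorld(ctx context.Context, r *pb.HelloWorldRequest) (*pb.HelloWorldResponse, error) {
	return &pb.HelloWorldResponse{
		Message: r.GetName() + " says: hello",
	}, nil
}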

2.5 Write the reverse-proxy server

Write an entrypoint for the HTTP reverse-proxy server

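With the groundwork done (writing the proto file, generating .pb.go and .pb.gw.go, and implementing the service), writing the reverse-proxy server is the most important part. It brings together gRPC, grpc-gateway, TLS certificates and some networking knowledge.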

2.5.1 Create a util directory under pkg and add grpc.go

package util

import (
	"net/http"
	"strings"

	"google.golang.org/grpc"
)

// GrpcHandlerFunc decides whether a request comes from a gRPC client or is a
// RESTful API request, and dispatches it to the matching ServeHTTP handler.
// r.ProtoMajor == 2 means the request must arrive over HTTP/2.
func GrpcHandlerFunc(grpcServer *grpc.Server, otherHandler http.Handler) http.Handler {
	if otherHandler == nil {
		return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			grpcServer.ServeHTTP(w, r)
		})
	}
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.ProtoMajor == 2 && strings.Contains(r.Header.Get("Content-Type"), "application/grpc") {
			grpcServer.ServeHTTP(w, r)
		} else {
			otherHandler.ServeHTTP(w, r)
		}
	})
}

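GrpcHandlerFunc decides whether a request comes from an RPC client or is a RESTful API request. Because grpc-gateway adds a reverse-proxy layer, the same port can handle both kinds of request, and each is dispatched to its own ServeHTTP handler. r.ProtoMajor == 2 requires the request to use HTTP/2, and strings.Contains(r.Header.Get("Content-Type"), "application/grpc") matches requests sent by gRPC clients.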
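The routing rule can be tried without grpc-go, because *grpc.Server is itself an http.Handler. In this sketch, mixedHandler applies the same test as GrpcHandlerFunc but accepts any http.Handler for the gRPC side, and label is a hypothetical helper that only writes its name, so you can see which branch handled each fake request.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// mixedHandler uses the same condition as util.GrpcHandlerFunc, but takes any
// http.Handler for the gRPC side (*grpc.Server satisfies http.Handler).
func mixedHandler(grpcHandler, otherHandler http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.ProtoMajor == 2 && strings.Contains(r.Header.Get("Content-Type"), "application/grpc") {
			grpcHandler.ServeHTTP(w, r)
			return
		}
		otherHandler.ServeHTTP(w, r)
	})
}

// label returns a handler that writes its own name.
func label(name string) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, name)
	})
}

// route sends a fake request through h and returns which handler answered.
func route(h http.Handler, protoMajor int, contentType string) string {
	req := httptest.NewRequest(http.MethodPost, "/hello/say", nil)
	req.ProtoMajor = protoMajor
	req.Header.Set("Content-Type", contentType)
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Body.String()
}

func main() {
	h := mixedHandler(label("grpc"), label("gateway"))
	fmt.Println(route(h, 2, "application/grpc"))       // grpc
	fmt.Println(route(h, 2, "application/grpc+proto")) // grpc
	fmt.Println(route(h, 2, "application/json"))       // gateway
	fmt.Println(route(h, 1, "application/grpc"))       // gateway
}
```

Note the last case: a gRPC content type over HTTP/1.1 still goes to the gateway, which is why the server must negotiate HTTP/2 for gRPC clients.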

2.5.2 Add tls.go to the util directory under pkg

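In this project we generated our own certificate to secure the HTTPS connections. Plain HTTP and gRPC without TLS work too: the code below uses grpc.WithTransportCredentials(), and if you don't need secure transport you can use grpc.WithInsecure() instead (deprecated in newer grpc-go releases in favor of grpc.WithTransportCredentials(insecure.NewCredentials())).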

tls.go reads the certificate files; the code is as follows:

package util

import (
	"crypto/tls"
	"log"
	"os"

	"golang.org/x/net/http2"
)

// GetTLSConfig builds the TLS configuration from the server.pem certificate
// and server.key private key files.
//   - tls.X509KeyPair parses a public/private key pair from PEM-encoded data
//   - tls.Certificate holds one or more certificates (the chain) and the key
//   - http2.NextProtoTLS is the ALPN protocol name for HTTP/2 over TLS ("h2")
func GetTLSConfig(certPemPath, certKeyPath string) *tls.Config {
	cert, err := os.ReadFile(certPemPath)
	if err != nil {
		log.Printf("Read cert file err: %v\n", err)
	}
	key, err := os.ReadFile(certKeyPath)
	if err != nil {
		log.Printf("Read key file err: %v\n", err)
	}
	pair, err := tls.X509KeyPair(cert, key)
	if err != nil {
		log.Printf("TLS KeyPair err: %v\n\n", err)
	}
	return &tls.Config{
		Certificates: []tls.Certificate{pair},
		NextProtos:   []string{http2.NextProtoTLS},
	}
}

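- tls.X509KeyPair: parses a public/private key pair from a pair of PEM-encoded data and returns it on success.
- http2.NextProtoTLS: the NPN/ALPN protocol name ("h2") negotiated during the TLS handshake to select HTTP/2.
- tls.Certificate: holds one or more certificates. The X509KeyPair function we call to parse the PEM data is declared as func X509KeyPair(certPEMBlock, keyPEMBlock []byte) (Certificate, error), so its return value is a Certificate.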

In short, the function reads the PEM certificate files and turns them into a tls.Config used for HTTP/2.
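To try what GetTLSConfig does without certificate files on disk, the sketch below (standard library only) creates a throwaway self-signed certificate for grpc.demo in memory, parses it with tls.X509KeyPair, and advertises "h2", the value of http2.NextProtoTLS. The helper names selfSignedPEM and tlsConfigFromPEM are illustrative; unlike GetTLSConfig, tlsConfigFromPEM returns the parse error instead of only logging it.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/tls"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"fmt"
	"math/big"
	"time"
)

// selfSignedPEM creates a throwaway self-signed certificate for host and
// returns it and its private key as PEM, the kind of data in server.pem/server.key.
func selfSignedPEM(host string) (certPEM, keyPEM []byte, err error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(1),
		Subject:      pkix.Name{CommonName: host},
		DNSNames:     []string{host},
		NotBefore:    time.Now().Add(-time.Hour),
		NotAfter:     time.Now().Add(24 * time.Hour),
		KeyUsage:     x509.KeyUsageDigitalSignature,
		ExtKeyUsage:  []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth},
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	keyDER, err := x509.MarshalECPrivateKey(key)
	if err != nil {
		return nil, nil, err
	}
	certPEM = pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: der})
	keyPEM = pem.EncodeToMemory(&pem.Block{Type: "EC PRIVATE KEY", Bytes: keyDER})
	return certPEM, keyPEM, nil
}

// tlsConfigFromPEM mirrors GetTLSConfig but takes PEM bytes and returns errors.
func tlsConfigFromPEM(certPEM, keyPEM []byte) (*tls.Config, error) {
	pair, err := tls.X509KeyPair(certPEM, keyPEM)
	if err != nil {
		return nil, err
	}
	return &tls.Config{
		Certificates: []tls.Certificate{pair},
		NextProtos:   []string{"h2"}, // same value as http2.NextProtoTLS
	}, nil
}

func main() {
	certPEM, keyPEM, err := selfSignedPEM("grpc.demo")
	if err != nil {
		panic(err)
	}
	cfg, err := tlsConfigFromPEM(certPEM, keyPEM)
	if err != nil {
		panic(err)
	}
	leaf, err := x509.ParseCertificate(cfg.Certificates[0].Certificate[0])
	if err != nil {
		panic(err)
	}
	fmt.Println(leaf.DNSNames, cfg.NextProtos) // [grpc.demo] [h2]

	// Bad key data is rejected, so this error is worth checking.
	_, err = tlsConfigFromPEM(certPEM, []byte("not a key"))
	fmt.Println(err != nil) // true
}
```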

2.5.3 Create server.go in the server directory

package server

import (
	"context"
	"crypto/tls"
	"log"
	"net"
	"net/http"
	"path"
	"strings"

	assetfs "github.com/elazarl/go-bindata-assetfs"
	"github.com/grpc-ecosystem/grpc-gateway/v2/runtime"
	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials"
	"google.golang.org/grpc/reflection"

	"grpc-gateway-demo/pkg/ui/data/swagger"
	"grpc-gateway-demo/pkg/util"
	"grpc-gateway-demo/proto/pb"
)

var (
	Port        string
	CertName    string
	CertPemPath string
	CertKeyPath string
	SwaggerDir  string
)

func Server() (err error) {
	log.Println(Port, CertName, CertPemPath, CertKeyPath)

	// Listen on the local network address
	address := ":" + Port
	conn, err := net.Listen("tcp", address)
	if err != nil {
		log.Printf("TCP Listen err: %v\n", err)
		return err
	}

	tlsConfig := util.GetTLSConfig(CertPemPath, CertKeyPath)
	server := createInternalServer(address, tlsConfig)

	log.Printf("gRPC and https listen on:%s\n", Port)

	newListener := tls.NewListener(conn, tlsConfig)
	if err = server.Serve(newListener); err != nil {
		log.Printf("Listen and Server err: %v\n", err)
		return err
	}
	return nil
}

// createInternalServer creates the gRPC server and the grpc-gateway and
// returns an http.Server that serves both.
func createInternalServer(address string, tlsConfig *tls.Config) *http.Server {
	var opts []grpc.ServerOption

	// grpc server
	cred, err := credentials.NewServerTLSFromFile(CertPemPath, CertKeyPath)
	if err != nil {
		log.Printf("Failed to create server TLS credentials: %v\n", err)
	}
	opts = append(opts, grpc.Creds(cred))
	server := grpc.NewServer(opts...)

	// reflection register
	reflection.Register(server)

	// register grpc pb
	pb.RegisterHelloWorldServer(server, NewHelloWorldService())

	// gateway server
	ctx := context.Background()
	newCred, err := credentials.NewClientTLSFromFile(CertPemPath, CertName)
	if err != nil {
		log.Printf("Failed to create client TLS credentials: %v\n", err)
	}
	dialOpt := []grpc.DialOption{grpc.WithTransportCredentials(newCred)}
	gateWayMux := runtime.NewServeMux()

	// register grpc-gateway pb
	if err := pb.RegisterHelloWorldHandlerFromEndpoint(ctx, gateWayMux, address, dialOpt); err != nil {
		log.Printf("Failed to register gateway server: %v\n", err)
	}

	mux := http.NewServeMux()
	mux.Handle("/", gateWayMux)

	// register swagger
	//mux.HandleFunc("/swagger/", swaggerFile)
	//swaggerUI(mux)

	return &http.Server{
		Addr:      address,
		Handler:   util.GrpcHandlerFunc(server, mux),
		TLSConfig: tlsConfig,
	}
}

// swaggerFile serves the swagger.json files.
func swaggerFile(w http.ResponseWriter, r *http.Request) {
	if !strings.HasSuffix(r.URL.Path, "swagger.json") {
		log.Printf("Not Found: %s", r.URL.Path)
		http.NotFound(w, r)
		return
	}

	p := strings.TrimPrefix(r.URL.Path, "/swagger/")
	name := path.Join(SwaggerDir, p)
	log.Printf("Serving swagger-file: %s", name)
	http.ServeFile(w, r, name)
}

// swaggerUI serves the Swagger UI static assets.
func swaggerUI(mux *http.ServeMux) {
	fileServer := http.FileServer(&assetfs.AssetFS{
		Asset:    swagger.Asset,
		AssetDir: swagger.AssetDir,
	})
	prefix := "/swagger-ui/"
	mux.Handle(prefix, http.StripPrefix(prefix, fileServer))
}

server.go is the core of this project and goes through the following steps:

1. Start listening

// Listen on the local network address
address := ":" + Port
conn, err := net.Listen("tcp", address)

2. Load the TLS configuration

tlsConfig := util.GetTLSConfig(CertPemPath, CertKeyPath)

3. Create the gRPC TLS credentials

cred, err := credentials.NewServerTLSFromFile(CertPemPath, CertKeyPath)
if err != nil {
	log.Printf("Failed to create server TLS credentials: %v\n", err)
}

NewServerTLSFromFile builds server-side TLS credentials from a certificate file and a key file:

// NewServerTLSFromFile constructs TLS credentials from the input certificate file and key
// file for server.
func NewServerTLSFromFile(certFile, keyFile string) (TransportCredentials, error) {
	cert, err := tls.LoadX509KeyPair(certFile, keyFile)
	if err != nil {
		return nil, err
	}
	return NewTLS(&tls.Config{Certificates: []tls.Certificate{cert}}), nil
}

4. Create the gRPC server

// grpc server
var opts []grpc.ServerOption
opts = append(opts, grpc.Creds(cred))
server := grpc.NewServer(opts...)

grpc.NewServer(opt ...ServerOption) creates the gRPC server. If no grpc.Creds option is passed in, the server is created without TLS certificate authentication.

5. Register the gRPC server reflection service

// reflection register
reflection.Register(server)

6. Register the gRPC service

// register grpc pb
pb.RegisterHelloWorldServer(server, NewHelloWorldService())

Because the demo has only one helloWorld.proto, there is only one service. If your project has several, register each of them in turn.

7. Create and register the grpc-gateway handlers

// gateway server
ctx := context.Background()
newCred, err := credentials.NewClientTLSFromFile(CertPemPath, CertName)
if err != nil {
	log.Printf("Failed to create client TLS credentials: %v\n", err)
}
dialOpt := []grpc.DialOption{grpc.WithTransportCredentials(newCred)}
gateWayMux := runtime.NewServeMux()

// register grpc-gateway pb
if err := pb.RegisterHelloWorldHandlerFromEndpoint(ctx, gateWayMux, address, dialOpt); err != nil {
	log.Printf("Failed to register gateway server: %v\n", err)
}

mux := http.NewServeMux()
mux.Handle("/", gateWayMux)

This is the core of the reverse proxy. Everything before this point is built into gRPC; this part is where grpc-gateway comes in.

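- context.Background(): creates the base context passed to RegisterHelloWorldHandlerFromEndpoint; the gateway's connection to the gRPC endpoint stays open as long as this context is not done.
- credentials.NewClientTLSFromFile(CertPemPath, CertName): builds client-side TLS credentials for the gateway's own connection to the gRPC server. The first argument is the certificate file to trust; the second overrides the server name used to verify the certificate (CertName, grpc.demo by default).
- grpc.WithTransportCredentials(newCred): configures connection-level security credentials; this is what the certificate created earlier is used for. If you don't want to use a certificate, grpc.WithInsecure() also returns a DialOption (newer grpc-go versions deprecate it in favor of grpc.WithTransportCredentials(insecure.NewCredentials())).
- runtime.NewServeMux: returns a new ServeMux, grpc-gateway's request multiplexer, which matches HTTP requests against the registered patterns and calls the corresponding handler.
- RegisterHelloWorldHandlerFromEndpoint: registers the HTTP handlers of the HelloWorld service on gateWayMux; they forward each request to the gRPC endpoint at address. If there are other services, register each of them the same way.
- http.NewServeMux(): allocates a new standard-library ServeMux; mux.Handle("/", gateWayMux) then mounts gateWayMux at the root path.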

8. Return the http.Server

```go
return &http.Server{
	Addr:      address,
	Handler:   util.GrpcHandlerFunc(server, mux),
	TLSConfig: tlsConfig,
}
```

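This creates a new http.Server and returns it with three things: the listen address, the TLS configuration, and the Handler built by util.GrpcHandlerFunc(server, mux). That Handler decides for each request whether it goes to the gRPC server or to the HTTP mux.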

9. Create a TLS listener with tls.NewListener

```go
newListener := tls.NewListener(conn, tlsConfig)
```

10. Listen for and serve requests

```go
if err = server.Serve(newListener); err != nil {
	log.Printf("Listen and serve error: %v\n", err)
	return err
}
```

2.6 Run the server and the client

2.6.1 Run the server

The next step is to actually run the server. The Server function in server.go uses four variables:

```go
func Server() (err error) {
	log.Println(Port, CertName, CertPemPath, CertKeyPath)
	// ... server setup from the steps above
	return
}
```

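The command line is built with the Cobra package. Cobra is a library for building powerful modern CLI applications, and it is also a tool that generates applications and command files.

Under the cmd directory, create root.go and a server folder, then create server.go inside the server folder.

server.go looks like this: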

```go
package server

import (
	"github.com/spf13/cobra"
	"grpc-gateway-demo/server"
	"log"
)

var StartCmd = &cobra.Command{
	Use:   "server",
	Short: "Run the gRPC gateway server",
	Run: func(cmd *cobra.Command, args []string) {
		defer func() {
			if err := recover(); err != nil {
				log.Printf("Recover error:%v \n", err)
			}
		}()
		err := server.Server()
		if err != nil {
			return
		}
	},
}

func init() {
	StartCmd.Flags().StringVarP(&server.Port, "port", "p", "50001", "server port")
	StartCmd.Flags().StringVarP(&server.CertPemPath, "cert-pem", "", "./certs/server.pem", "cert pem path")
	StartCmd.Flags().StringVarP(&server.CertKeyPath, "cert-key", "", "./certs/server.key", "cert key path")
	StartCmd.Flags().StringVarP(&server.CertName, "cert-name", "", "grpc.demo", "server's hostname")
}
```

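- func init(): calls StartCmd.Flags().StringVarP to give Port, CertPemPath, CertKeyPath and CertName their default values. The same call lets each value be overridden on the command line.
- &cobra.Command:
  - Use: the one-line usage of the command, i.e. the name typed when running it (server).
  - Short: the short description shown in the help output.
  - Run: the function that actually runs. Here it calls the server's Server function.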
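StringVarP binds a flag to an existing variable. The flag carries a long name, a one-letter shorthand, a default value and help text. Once the command line is parsed, Port, CertPemPath, CertKeyPath and CertName hold either the user's value or the default. The sketch below shows the same binding pattern with the standard library flag package instead of cobra/pflag, so it is not cobra's API. The flag package has no shorthand letters, so -p is omitted. A hypothetical serverConfig struct replaces the package variables.

```go
package main

import (
	"flag"
	"fmt"
)

// serverConfig is a hypothetical stand-in for the Port/Cert* package variables.
type serverConfig struct {
	Port, CertPemPath, CertKeyPath, CertName string
}

// parseServerFlags binds each flag to a field, using the same defaults as the cobra command.
func parseServerFlags(args []string) (serverConfig, error) {
	var cfg serverConfig
	fs := flag.NewFlagSet("server", flag.ContinueOnError)
	fs.StringVar(&cfg.Port, "port", "50001", "server port")
	fs.StringVar(&cfg.CertPemPath, "cert-pem", "./certs/server.pem", "cert pem path")
	fs.StringVar(&cfg.CertKeyPath, "cert-key", "./certs/server.key", "cert key path")
	fs.StringVar(&cfg.CertName, "cert-name", "grpc.demo", "server's hostname")
	err := fs.Parse(args)
	return cfg, err
}

func main() {
	cfg, err := parseServerFlags([]string{"--port", "6000"})
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(cfg.Port, cfg.CertName) // 6000 grpc.demo
}
```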

server.go defines StartCmd, and StartCmd now has to be added to the parent command. root.go therefore contains:

```go
package cmd

import (
	"fmt"
	"github.com/spf13/cobra"
	"grpc-gateway-demo/cmd/client"
	"grpc-gateway-demo/cmd/server"
	"os"
)

var rootCmd = &cobra.Command{
	Use:   "grpc",
	Short: "Run the gRPC gateway server",
}

func init() {
	rootCmd.AddCommand(client.StartCmd)
	rootCmd.AddCommand(server.StartCmd)
}

func Execute() {
	// rootCmd is the base command used when no subcommand is given
	if err := rootCmd.Execute(); err != nil {
		fmt.Println(err)
		os.Exit(1)
	}
}
```

The init function adds the server and client start commands, and Execute runs rootCmd.Execute(). As a result, main.go only needs to call Execute.

Create main.go in the project root:

```go
package main

import "grpc-gateway-demo/cmd"

func main() {
	cmd.Execute()
}
```

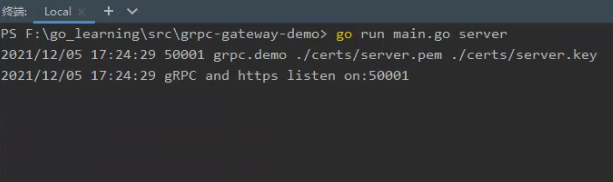

The server can now be started with:

```bash
go run main.go server
```

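2.6.2 Run the client

To check the server's endpoints, requests can be sent with Postman or curl. A small program can also call the server and print the result.

Create a client directory with a client.go file: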

```go
package client

import (
	"context"
	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials"
	"grpc-gateway-demo/proto/pb"
	"log"
)

var (
	Port        string
	CertPemPath string
	CertName    string
)

func Client() error {
	cred, err := credentials.NewClientTLSFromFile(CertPemPath, CertName)
	if err != nil {
		log.Printf("Failed to create TLS credentials %v\n", err)
		return err
	}
	endPoint := ":" + Port
	conn, err := grpc.Dial(endPoint, grpc.WithTransportCredentials(cred))
	if err != nil {
		log.Println(err)
		return err
	}
	// Defer Close only after a successful Dial; conn is nil on error.
	defer conn.Close()
	c := pb.NewHelloWorldClient(conn)
	ctx := context.Background()
	body := &pb.HelloWorldRequest{
		Name: "CXT",
	}
	r, err := c.SayHelloWorld(ctx, body)
	if err != nil {
		log.Println(err)
		return err
	}
	log.Println(r.Message)
	return nil
}
```

Also create a client folder under cmd, with a server.go file inside it. This command is implemented with cobra as well:

```go
package client

import (
	"github.com/spf13/cobra"
	"grpc-gateway-demo/client"
	"log"
)

var StartCmd = &cobra.Command{
	Use:   "client",
	Short: "Run the gRPC gateway client",
	Run: func(cmd *cobra.Command, args []string) {
		defer func() {
			if err := recover(); err != nil {
				log.Printf("Recover error:%v\n", err)
			}
		}()
		err := client.Client()
		if err != nil {
			return
		}
	},
}

func init() {
	StartCmd.Flags().StringVarP(&client.Port, "port", "p", "50001", "server port")
	StartCmd.Flags().StringVarP(&client.CertPemPath, "cert-pem", "", "./certs/server.pem", "cert pem path")
	// CertName is the server name the client expects in the server certificate.
	StartCmd.Flags().StringVarP(&client.CertName, "cert-name", "", "grpc.demo", "server's hostname")
}
```

The client can now be started with:

```bash
go run main.go client
```

Test with curl:

```bash
curl -X POST -k https://127.0.0.1:50001/hello/say -d '{"name":"Chen xiaotao"}'
```
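The same request as the curl command can also be sent from Go with net/http. This sketch assumes the gateway answers with JSON of the form {"message": "..."}, a field name inferred from r.Message in the client code. Like curl -k, it skips certificate verification, which is acceptable only for a self-signed demo certificate. To keep the example runnable on its own, main talks to a fake gateway started with httptest, and that gateway's reply text is made up. The names sayHello, insecureClient and fakeGateway are hypothetical.

```go
package main

import (
	"bytes"
	"crypto/tls"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// sayHello POSTs {"name": name} to baseURL+"/hello/say" and returns the "message" field.
func sayHello(client *http.Client, baseURL, name string) (string, error) {
	body, err := json.Marshal(map[string]string{"name": name})
	if err != nil {
		return "", err
	}
	resp, err := client.Post(baseURL+"/hello/say", "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("unexpected status %s", resp.Status)
	}
	var out struct {
		Message string `json:"message"` // assumed response field
	}
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return "", err
	}
	return out.Message, nil
}

// insecureClient behaves like curl -k: it does not verify the server certificate.
func insecureClient() *http.Client {
	return &http.Client{Transport: &http.Transport{
		TLSClientConfig: &tls.Config{InsecureSkipVerify: true},
	}}
}

// fakeGateway stands in for the real grpc-gateway server in this demo.
func fakeGateway() *httptest.Server {
	return httptest.NewTLSServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var in struct {
			Name string `json:"name"`
		}
		if r.Method != http.MethodPost || r.URL.Path != "/hello/say" || json.NewDecoder(r.Body).Decode(&in) != nil {
			http.Error(w, "bad request", http.StatusBadRequest)
			return
		}
		json.NewEncoder(w).Encode(map[string]string{"message": "hello " + in.Name})
	}))
}

func main() {
	srv := fakeGateway()
	defer srv.Close()
	msg, err := sayHello(insecureClient(), srv.URL, "Chen xiaotao")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(msg) // hello Chen xiaotao
}
```

Against the real server, the call would be sayHello(insecureClient(), "https://127.0.0.1:50001", "Chen xiaotao").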

Test with Postman:

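2.7 Integrating Swagger with grpc-gateway

Sending requests to the server with curl or Postman works fine. However, it only works if we already know which services are available and what their request parameters are.

Swagger describes itself as the world's largest framework of API developer tools for the OpenAPI Specification (OAS). It supports the whole API lifecycle, from design and documentation to testing and deployment.

Swagger is one of the most popular RESTful API documentation tools today, mainly because of:

- Cross-platform and cross-language support

- A strong community

- An ecosystem of Swagger Tools (Swagger Editor, Swagger Codegen, Swagger UI, ...)

- A powerful console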

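2.7.1 Generate swagger.json

The --openapiv2_out option of the protoc command in section 1.1 is all that is needed to generate the *.swagger.json files.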

2.7.2 Download the Swagger UI files

Download the Swagger UI source code from GitHub. Then copy all files under its dist directory into the project's third_party/swagger-ui directory.

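2.7.3 Convert Swagger UI into Go source code

Install go-bindata, which converts arbitrary files into manageable Go source code.

```bash
go get -u github.com/jteeuwen/go-bindata/...
```

Create the pkg/ui/data/swagger directory in the project, then run the command below to produce datafile.go. The generated file is large, about 17 MB. IntelliJ IDEA disables code insight for files of that size unless you raise its file-size limit (the idea.max.intellisense.filesize property).

```bash
go-bindata --nocompress -pkg swagger -o pkg/ui/data/swagger/datafile.go third_party/swagger-ui/...
```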

2.7.4 Serve the swagger.json files

```go
// swaggerFile serves the generated *.swagger.json files.
func swaggerFile(w http.ResponseWriter, r *http.Request) {
	if !strings.HasSuffix(r.URL.Path, "swagger.json") {
		log.Printf("Not Found: %s", r.URL.Path)
		http.NotFound(w, r)
		return
	}
	p := strings.TrimPrefix(r.URL.Path, "/swagger/")
	name := path.Join(SwaggerDir, p)
	log.Printf("Serving swagger-file: %s", name)
	http.ServeFile(w, r, name)
}
```

```go
// register swagger
mux.HandleFunc("/swagger/", swaggerFile)
```

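The code above looks at the request path, which must start with the /swagger/ prefix and end with swagger.json. It then serves the matching file from the project's proto/pb directory, for example helloWorld.swagger.json.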
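The path handling in swaggerFile can be pulled out into a pure function and checked on its own. The sketch below uses a hypothetical swaggerPath helper and assumes that SwaggerDir points at proto/pb.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// swaggerPath is a hypothetical helper holding the path logic of swaggerFile:
// it maps /swagger/<name>swagger.json to <dir>/<name>swagger.json and
// reports false for requests that swaggerFile would answer with 404.
func swaggerPath(dir, urlPath string) (string, bool) {
	if !strings.HasPrefix(urlPath, "/swagger/") || !strings.HasSuffix(urlPath, "swagger.json") {
		return "", false
	}
	return path.Join(dir, strings.TrimPrefix(urlPath, "/swagger/")), true
}

func main() {
	// Assumption: SwaggerDir is "proto/pb", where protoc wrote the *.swagger.json files.
	fmt.Println(swaggerPath("proto/pb", "/swagger/helloWorld.swagger.json")) // proto/pb/helloWorld.swagger.json true
	fmt.Println(swaggerPath("proto/pb", "/swagger/index.html"))
}
```

The original handler checks only the suffix. The prefix is already guaranteed because the handler is registered at "/swagger/".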

2.7.5 Serve swagger-ui

```go
import (
	"net/http"

	assetfs "github.com/elazarl/go-bindata-assetfs"
	"grpc-gateway-demo/pkg/ui/data/swagger"
)

// swaggerUI serves the Swagger UI files embedded by go-bindata.
func swaggerUI(mux *http.ServeMux) {
	fileServer := http.FileServer(&assetfs.AssetFS{
		Asset:    swagger.Asset,
		AssetDir: swagger.AssetDir,
	})
	prefix := "/swagger-ui/"
	mux.Handle(prefix, http.StripPrefix(prefix, fileServer))
}
```

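Two packages are imported: github.com/elazarl/go-bindata-assetfs and grpc-gateway-demo/pkg/ui/data/swagger.

go-bindata-assetfs exposes the files embedded in the generated datafile.go as an http.FileSystem. net/http then serves swagger-ui from it.

The project code has the full details, and it runs as expected.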

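2.7.6 Run

The grpcurl tool relies on server reflection. It offers commands for listing services, inspecting service parameters, calling methods, and more.

grpcui provides a graphical interface: you fill in the parameters and send the request, which is even more convenient.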

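2.8.1 Using the grpcurl command-line tool

1. Installation

```bash
go get -u github.com/fullstorydev/grpcurl
# After go get succeeds, change into github.com/fullstorydev/grpcurl/cmd/grpcurl and run:
go install
# grpcurl.exe is then generated in the bin directory under GOPATH.
# With Go 1.16 or later, one command does the same:
# go install github.com/fullstorydev/grpcurl/cmd/grpcurl@latest
```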

Because grpcurl relies on server reflection, server.go contains this line:

```go
// reflection register
reflection.Register(server)
```

3、命令使用

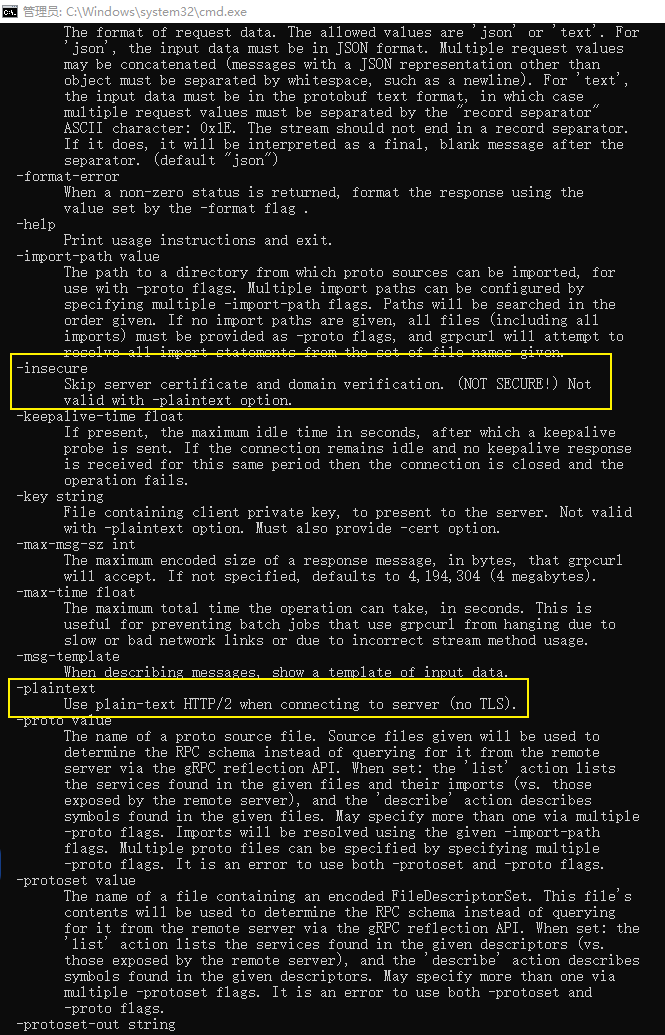

使用 grpcurl -help 查看命令

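Normally -plaintext would be used. This project, however, serves TLS with a self-signed certificate that clients do not trust, so -insecure is needed to skip certificate verification. Connecting with -plaintext instead makes the server log the following error:

```
http: TLS handshake error from 127.0.0.1:52358: tls: first record does not look like a TLS handshake
```

The working commands are:

```bash
# 1. List services
grpcurl -insecure 127.0.0.1:50001 list
# 2. List the methods of a service
grpcurl -insecure 127.0.0.1:50001 list proto.HelloWorld
# 3. Describe the service's methods in more detail
grpcurl -insecure 127.0.0.1:50001 describe proto.HelloWorld
# 4. Describe a method's request type
grpcurl -insecure 127.0.0.1:50001 describe proto.HelloWorldRequest
# 5. Call a method
grpcurl -insecure -d '{"name":"cxt"}' 127.0.0.1:50001 proto.HelloWorld/SayHelloWorld
```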

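2.8.2 Using the grpcui web UI

1. Installation

```bash
go get -u github.com/fullstorydev/grpcui
# After go get succeeds, change into github.com/fullstorydev/grpcui/cmd/grpcui and run:
go install
# grpcui.exe is then generated in the bin directory under GOPATH.
# With Go 1.16 or later, one command does the same:
# go install github.com/fullstorydev/grpcui/cmd/grpcui@latest
```

2. Usage

```bash
# Follow the printed instructions and open the new address in a browser
grpcui -insecure 127.0.0.1:50001
```

3. Interface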

III. References

Many articles helped me get a first understanding of grpc-gateway. The official tutorial and the blog posts I used are listed below.

https://grpc-ecosystem.github.io/grpc-gateway/

https://segmentfault.com/a/1190000013408485?utm_source=sf-similar-article

https://blog.csdn.net/m0_37322399/article/details/117308604

https://www.cnblogs.com/li-peng/p/14201079.html?ivk_sa=1024320u
