High availability for nginx with keepalived

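I. The keepalived service

- What is keepalived?

Keepalived can configure and manage LVS and run health checks on the nodes behind LVS. It can also provide high availability for network services on a system, which prevents single points of failure.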

- How keepalived works

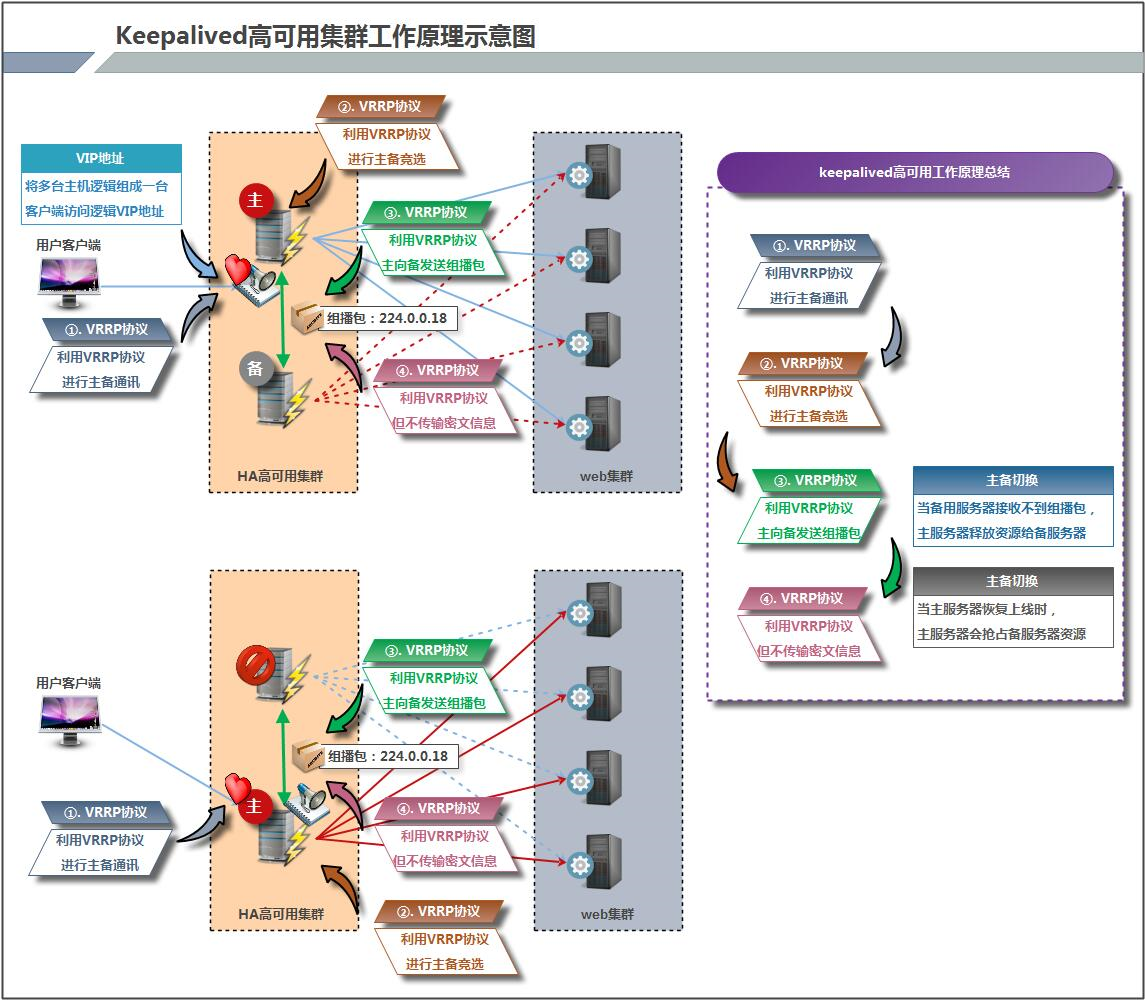

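keepalived is built on the VRRP protocol. VRRP stands for Virtual Router Redundancy Protocol.

VRRP can be regarded as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and several backups. The master holds a VIP that serves external traffic (other machines on the router's LAN use this VIP as their default route) and sends multicast VRRP packets to tell the backups that it is still alive. When the backups stop receiving VRRP packets, they consider the master down, and one backup is elected master according to VRRP priority. This keeps the router highly available and the service continuous; at its fastest, takeover takes less than one second.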
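The election step described above can be sketched in a few lines of shell. This is only an illustration of "the highest priority among the nodes that are still alive wins"; `elect_master` and the node names are hypothetical, and the real protocol also handles timers and tie-breaking, which the sketch ignores.

```shell
#!/bin/bash
# elect_master is a hypothetical helper, not part of keepalived.
# Each argument is "name:priority" for a node that is still advertising.
# It prints the name of the node with the highest priority.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name="${entry%%:*}"     # text before the colon
        prio="${entry##*:}"     # text after the colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_prio="$prio"
            best_name="$name"
        fi
    done
    echo "$best_name"
}

elect_master node-a:100 node-b:90   # both nodes alive
elect_master node-b:90              # node-a no longer sends VRRP packets
```

With both nodes alive the sketch prints `node-a`; once `node-a` drops out of the list it prints `node-b`, which corresponds to the backup taking over the VIP.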


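keepalived has three main modules: core, check, and vrrp.

- The core module is the heart of keepalived. It starts and maintains the main process and loads and parses the global configuration file.

- The check module performs health checks and supports the common check methods.

- The vrrp module implements the VRRP protocol.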

- High availability with keepalived versus ZooKeeper

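- Keepalived:

Advantages: it is simple. High availability and primary/backup failover work with almost no changes at the application level, and the downtime during failover is short.

Disadvantages: the same simplicity. VRRP and primary/backup switching involve no complex logic, so some special cases are not handled. For example, a failure of the communication link between primary and backup leads to split-brain. keepalived is also not well suited to load balancing.

- ZooKeeper:

Advantages: it supports both high availability and load balancing, and it is itself a distributed service.

Disadvantages: it is tightly coupled to the application. The application code must implement the ZooKeeper logic, such as registering a name and looking up the service address that belongs to a name.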

II. Using keepalived

- Installing keepalived

[root@etcd1 ~]# yum install keepalived -y

Installed files:

    /etc/keepalived
    /etc/keepalived/keepalived.conf    # main keepalived configuration file
    /etc/rc.d/init.d/keepalived        # service start script (before CentOS 7 the init.d script is used; later releases start the service through systemd)
    /etc/sysconfig/keepalived
    /usr/bin/genhash
    /usr/libexec/keepalived
    /usr/sbin/keepalived

- Configuration explained

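    [root@etcd1 kubernetes]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {                          # global configuration
        notification_email {               # addresses that receive alert emails
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc   # sender address
        smtp_server 192.168.200.1          # mail server
        smtp_connect_timeout 30            # connection timeout
        # router identifier; unique within the same LAN; a string that
        # identifies this node, usually the hostname
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    # Virtual IP configuration (VRRP)
    vrrp_instance VI_1 {                   # define an instance
        # initial state, MASTER or BACKUP; this is only a hint:
        # MASTER on the primary node, BACKUP on the backup node
        state MASTER
        # interface the virtual IP is bound to; the same interface that
        # carries this machine's IP address (eth0 here)
        interface eth0
        # virtual router ID; must be identical on both nodes; the last octet
        # of the IP is a possible choice; nodes with the same VRID form one
        # group, and the VRID determines the virtual MAC address
        virtual_router_id 51

        mcast_src_ip 192.168.10.50         ## this machine's IP address
        # node priority; decides which node is primary, higher wins;
        # range 1-254; MASTER must be higher than BACKUP
        priority 100

        # set on the higher-priority node so that it does not take the VIP
        # back after recovering from a failure (keepalived honors nopreempt
        # only when the initial state is BACKUP)
        nopreempt
        # interval between VRRP advertisements (the primary/backup heartbeat)
        # in seconds; must be identical on both nodes; default 1
        advert_int 1
        authentication {                   # authentication settings; must be identical on both nodes
            auth_type PASS
            auth_pass 1111
        }

        ## add the track_script block to the instance block
        track_script {
            chk_nginx                      ## run the nginx check
        }

        virtual_ipaddress {                # VIPs shared between the nodes; several can be defined
            192.168.200.16
            192.168.200.17
            192.168.200.18
        }
    }
    ....

- Configuration used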

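Primary load balancer configuration:

    [root@etcd1 vagrant]# vi /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id lvs-01
    }

    ## keepalived runs the script periodically, evaluates its exit status,
    ## and adjusts the priority of the vrrp_instance dynamically.
    # If the script exits with 0 and weight is greater than 0, the priority is increased accordingly.
    # If the script exits with a non-zero status and weight is less than 0, the priority is decreased accordingly.
    # In all other cases the configured priority (the priority value in this file) is kept.
    vrrp_script chk_nginx {
        script "/etc/keepalived/nginx_check.sh"   ## path of the script that checks nginx
        interval 2                                ## check interval in seconds
        weight -20                                ## if the check fails, lower the priority by 20
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth1
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        ## add the track_script block to the instance block
        track_script {
            chk_nginx                             ## run the nginx check
        }
        virtual_ipaddress {
            192.168.10.99
        }
    }
    ...

- Backup load balancer configuration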

    [root@etcd2 vagrant]# vi /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id lvs-02
    }

    vrrp_script chk_nginx {
        script "/etc/keepalived/nginx_check.sh"
        interval 3
        weight -20
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth1
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        ## add the track_script block to the instance block
        track_script {
            chk_nginx                             ## run the nginx check
        }
        virtual_ipaddress {
            192.168.10.99
        }
    }
    ...

- Start

View the systemd unit file:

    [root@etcd2 init.d]# cat /usr/lib/systemd/system/keepalived.service
    [Unit]
    Description=LVS and VRRP High Availability Monitor
    After=syslog.target network-online.target

    [Service]
    Type=forking
    PIDFile=/var/run/keepalived.pid
    KillMode=process
    EnvironmentFile=-/etc/sysconfig/keepalived
    ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS
    ExecReload=/bin/kill -HUP $MAINPID

    [Install]
    WantedBy=multi-user.target

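The relevant commands are as follows:

    chkconfig keepalived on       # start keepalived at boot
    service keepalived start      # start the service
    service keepalived stop       # stop the service
    service keepalived restart    # restart the service

On CentOS 7 and later the same operations are available through systemctl, for example systemctl enable keepalived and systemctl start keepalived.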

Once keepalived is running normally, it starts three processes: a parent process that monitors its children, a vrrp child process, and a checkers child process.

    [root@etcd2 init.d]# ps -ef | grep keepalived
    root      3626     1  0 08:59 ?        00:00:00 /usr/sbin/keepalived -D
    root      3627  3626  0 08:59 ?        00:00:00 /usr/sbin/keepalived -D
    root      3628  3626  0 08:59 ?        00:00:00 /usr/sbin/keepalived -D
    root      3656  3322  0 09:03 pts/0    00:00:00 grep --color=auto keepalived
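With keepalived running, the priority rules quoted in the `vrrp_script` comments of the primary configuration can be worked through with a small sketch. `effective_priority` is a hypothetical helper that only mirrors those three comment lines; it is not how keepalived is implemented, and the numbers are the `priority` and `weight` values from the configurations above.

```shell
#!/bin/bash
# effective_priority is a hypothetical helper, not part of keepalived.
# Arguments: configured priority, weight, exit status of the check script.
effective_priority() {
    local base="$1" weight="$2" exit_code="$3"
    if [ "$exit_code" -eq 0 ] && [ "$weight" -gt 0 ]; then
        echo $((base + weight))   # check passed and weight > 0: raise priority
    elif [ "$exit_code" -ne 0 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))   # check failed and weight < 0: lower priority
    else
        echo "$base"              # all other cases: keep the configured priority
    fi
}

effective_priority 100 -20 0   # primary, nginx check passes
effective_priority 100 -20 1   # primary, nginx check fails
```

The two calls print `100` and `80`. Under these rules a failing check on the primary would put it below the backup's configured priority of 90, so the backup would win the next election.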

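- nginx installation and configuration

- Installation (omitted)

- Configuration: the default configuration is used

- Default nginx index.html page

On machine 10.50 the file is: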

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx! test keepalived 1</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

On machine 10.51 the file is:

    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx! test keepalived 2</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

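III. Testing

- Writing the nginx check script

Write the nginx status check script /etc/keepalived/nginx_check.sh on every node (it is already referenced in keepalived.conf). Requirement: if nginx has stopped, try to start it; if it cannot be started, kill the local keepalived process so that keepalived binds the virtual IP to the BACKUP machine.

The script is as follows: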

    [root@etcd1 keepalived]# vi nginx_check.sh
    #!/bin/bash
    set -x
    # Count the running nginx processes
    A=$(ps -C nginx --no-header | wc -l)
    if [ "$A" -eq 0 ]; then
        # No nginx process found: log it and stop keepalived so the VIP moves away
        echo "$(date): nginx is not healthy, try to killall keepalived" >> /etc/keepalived/keepalived.log
        killall keepalived
    fi
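The script above logs and kills keepalived as soon as no nginx process is found. The restart attempt mentioned in the requirement could be added along the following lines. This is a sketch under assumptions, not the author's script: the function names, `LOG_FILE`, and `RETRY_DELAY` are hypothetical, and `systemctl start nginx` assumes nginx is managed by systemd on the node.

```shell
#!/bin/bash
# Sketch only: all function and variable names below are hypothetical.
LOG_FILE="${LOG_FILE:-/etc/keepalived/keepalived.log}"

# Thin wrappers around the real commands so each step can be swapped out.
count_nginx()     { ps -C nginx --no-header | wc -l; }
start_nginx()     { systemctl start nginx; }   # assumes a systemd-managed nginx
stop_keepalived() { killall keepalived; }

# Returns 0 if nginx is running (possibly after one restart attempt),
# otherwise logs the failure, stops keepalived, and returns 1.
check_nginx() {
    if [ "$(count_nginx)" -gt 0 ]; then
        return 0
    fi
    start_nginx
    sleep "${RETRY_DELAY:-2}"    # give nginx a moment to come up
    if [ "$(count_nginx)" -gt 0 ]; then
        return 0
    fi
    echo "$(date): nginx is not healthy, try to killall keepalived" >> "$LOG_FILE"
    stop_keepalived
    return 1
}

# When saved as the check script, the file would end with a call to check_nginx.
```

Keeping the commands behind small wrapper functions is a design choice for the sketch: it lets the decision logic be exercised on a machine without nginx or keepalived by redefining the wrappers.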

- Start nginx and keepalived on all nodes

- IP information on machine 192.168.10.50

    [root@etcd1 html]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:47:46:52 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0
           valid_lft 60191sec preferred_lft 60191sec
        inet6 fe80::5054:ff:fe47:4652/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 08:00:27:c4:c3:0e brd ff:ff:ff:ff:ff:ff
        inet 192.168.10.50/24 brd 192.168.10.255 scope global eth1
           valid_lft forever preferred_lft forever
        inet 192.168.10.99/32 scope global eth1
           valid_lft forever preferred_lft forever
        inet6 fe80::a00:27ff:fec4:c30e/64 scope link tentative dadfailed
           valid_lft forever preferred_lft forever
    4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
        link/ether 02:42:22:bd:9b:38 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 scope global docker0
           valid_lft forever preferred_lft forever

- IP information on machine 192.168.10.51

    [root@etcd2 vagrant]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:ca:e4:8b brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0
           valid_lft 82271sec preferred_lft 82271sec
        inet6 fe80::5054:ff:feca:e48b/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 08:00:27:ee:7d:7d brd ff:ff:ff:ff:ff:ff
        inet 192.168.10.51/24 brd 192.168.10.255 scope global eth1
           valid_lft forever preferred_lft forever
        inet6 fe80::a00:27ff:feee:7d7d/64 scope link tentative dadfailed
           valid_lft forever preferred_lft forever
    4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
        link/ether 02:42:fa:84:71:b0 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 scope global docker0
           valid_lft forever preferred_lft forever

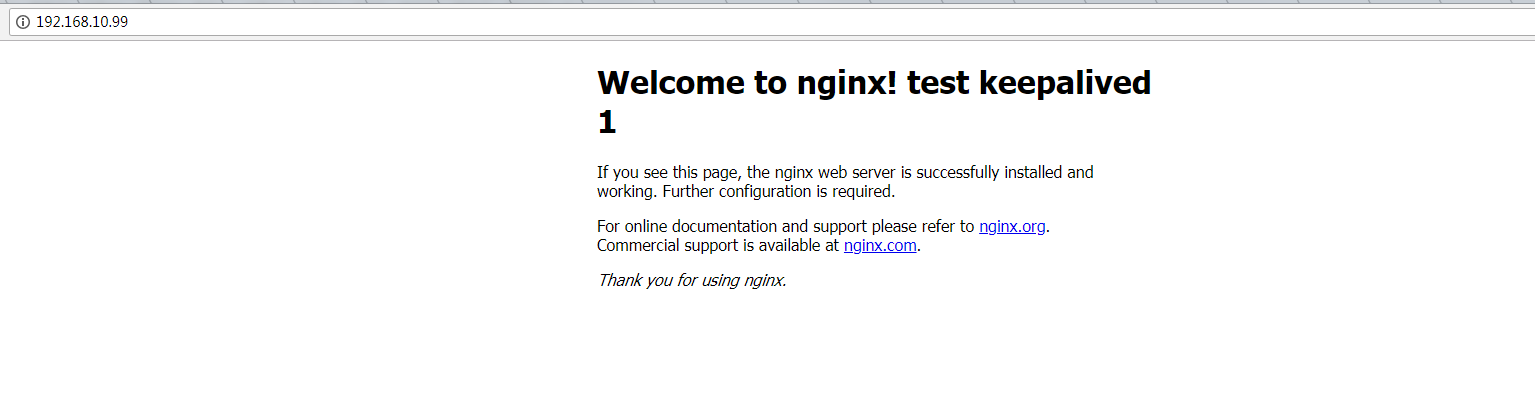
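Instead of reading the full `ip a` listings by eye, the presence of the VIP can be checked with a one-line filter. The sketch below assumes a hypothetical helper named `has_vip` and uses two lines taken from the eth1 section of the 192.168.10.50 listing as sample input.

```shell
#!/bin/bash
# has_vip is a hypothetical helper: it reads `ip a`-style text on stdin and
# succeeds if the given address appears as an IPv4 address on any interface.
has_vip() {
    local vip="$1"
    grep -qF "inet ${vip}/"     # -F: match the address as a fixed string
}

# Two lines from the eth1 section of the listing on 192.168.10.50.
sample='    inet 192.168.10.50/24 brd 192.168.10.255 scope global eth1
    inet 192.168.10.99/32 scope global eth1'

if printf '%s\n' "$sample" | has_vip 192.168.10.99; then
    echo "VIP is bound on this node"
else
    echo "VIP is not bound on this node"
fi
```

On a live node the same helper could be fed from `ip -4 addr show eth1 | has_vip 192.168.10.99`.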

The output above shows that the VIP is active on machine 192.168.10.50.

- Accessing nginx through the VIP (192.168.10.99) gives the following result


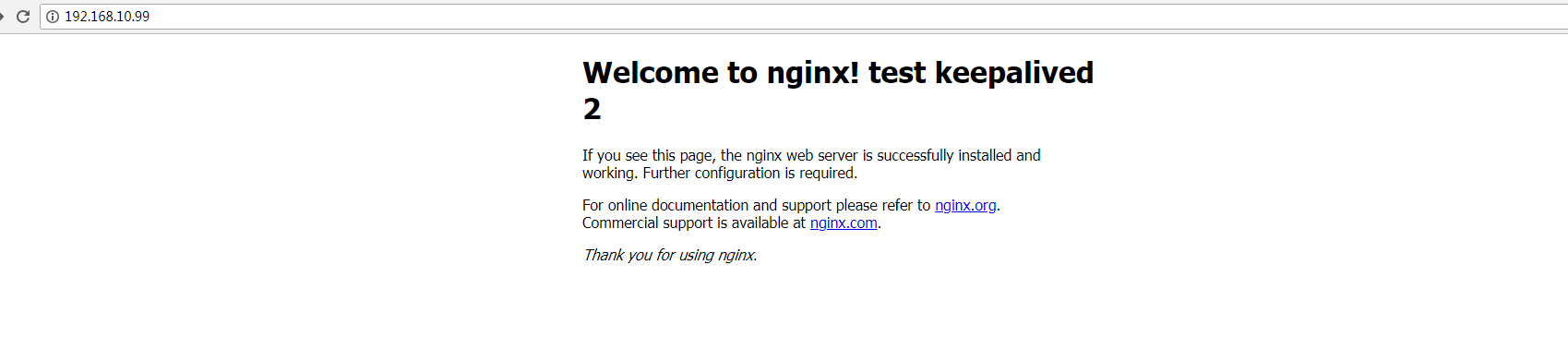

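The page shows that the nginx instance currently serving requests is the one on 10.50. Now stop keepalived on machine 10.50:

    [root@etcd1 html]# systemctl stop keepalived.service

Access the nginx service through the VIP (192.168.10.99) again; the result is as follows: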

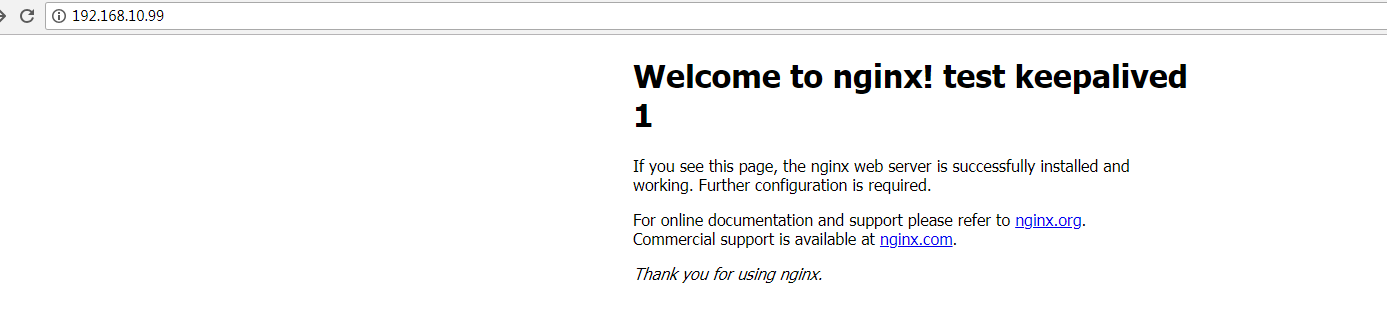

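The page shows that the nginx instance currently serving requests is the one on 10.51. Now start keepalived on machine 10.50 again:

    [root@etcd1 html]# systemctl start keepalived.service

Then access the nginx service through the VIP (192.168.10.99) once more and check the result.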
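The failover checks above can also be repeated from the command line. The sketch below assumes a hypothetical helper named `which_backend` that extracts the number placed after "test keepalived" in the two edited index.html pages; it is demonstrated offline on the `<h1>` line of the page from machine 10.51.

```shell
#!/bin/bash
# which_backend is a hypothetical helper: it reads an HTML page on stdin and
# prints the number that follows "test keepalived" in the edited index.html.
which_backend() {
    sed -n 's/.*test keepalived \([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Offline example using the <h1> line from the page on machine 10.51.
echo '<h1>Welcome to nginx! test keepalived 2</h1>' | which_backend
```

The example prints `2`. Against the running setup, and assuming curl is installed, the page could be fetched with `curl -s http://192.168.10.99/ | which_backend` before and after stopping keepalived on 10.50.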

This completes the setup of high-availability web load balancing with Keepalived + Nginx.
