Common Spark Errors


1.1 ExecutorLostFailure

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0, localhost, executor driver): ExecutorLostFailure (executor driver exited caused by one of the running tasks) Reason: Executor heartbeat timed out after 173901 ms

Driver stacktrace:

2021-06-07 16:36:59,754 INFO --- [ dispatcher-event-loop-5] org.apache.spark.storage.BlockManagerMasterEndpoint (line: 54) : Removing block manager BlockManagerId(driver, 192.168.3.168, 56454, None)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1435)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1423)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1422)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1422)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:802)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:802)

at scala.Option.foreach(Option.scala:257)
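The trace above reports a heartbeat timeout after 173901 ms, which is longer than Spark's default spark.network.timeout of 120s. The original notes do not state the cause. In local mode a long pause in the driver JVM (a long GC, or a debugger breakpoint) is a plausible one, but that is an assumption. If the pause is expected, one possible mitigation is to raise the timeouts. A minimal sketch with illustrative values and a hypothetical application name:

```scala
import org.apache.spark.SparkConf

object HeartbeatTimeoutConf {
  // Illustrative values only; tune them for the actual workload.
  def build(): SparkConf =
    new SparkConf()
      .setAppName("heartbeat-timeout-demo") // hypothetical app name
      // Default is 120s; the heartbeat in the log timed out after about 174s.
      .set("spark.network.timeout", "600s")
      // Default is 10s; keep it well below spark.network.timeout.
      .set("spark.executor.heartbeatInterval", "60s")
}
```

The same two settings can be passed with --conf at submit time. Raising them only hides the symptom if the real problem is memory pressure, so GC logs are worth checking first.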

1.2 Error when Spark writes to a Hive external table

Environment: Spark 2.1.1

Writing from Spark to a Hive external table whose underlying data lives in HBase fails with:

Caused by: java.lang.ClassCastException: org.apache.hadoop.hive.hbase.HiveHBaseTableOutputFormat cannot be cast to org.apache.hadoop.hive.ql.io.HiveOutputFormat

at org.apache.spark.sql.hive.SparkHiveWriterContainer.outputFormat$lzycompute(hiveWriterContainers.scala:82)

The failing code is in org.apache.spark.sql.hive.SparkHiveWriterContainer:

@transient private lazy val outputFormat = conf.value.getOutputFormat.asInstanceOf[HiveOutputFormat[AnyRef, Writable]]

This is the line that throws. Reading the code shows that the variable is not actually used on this path, so it can be set to null when the cast is not possible:

@transient private lazy val outputFormat =
  // conf.value.getOutputFormat.asInstanceOf[HiveOutputFormat[AnyRef, Writable]]
  conf.value.getOutputFormat match {
    case format if format.isInstanceOf[HiveOutputFormat[AnyRef, Writable]] =>
      format.asInstanceOf[HiveOutputFormat[AnyRef, Writable]]
    case _ => null
  }

This resolved the problem. The upstream discussion is at: https://issues.apache.org/jira/browse/SPARK-6628

1.3 Failed to convert a Row to an object

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 3 in stage 3.0 failed 1 times, most recent failure: Lost task 3.0 in stage 3.0 (TID 9, localhost, executor driver): java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.GenericRowWithSchema cannot be cast to com.hangshu.model.SpinData

at com.hangshu.mock.KafkaProducerSpin03$$anonfun$main$1.apply(KafkaProducerSpin03.scala:85)

at com.hangshu.mock.KafkaProducerSpin03$$anonfun$main$1.apply(KafkaProducerSpin03.scala:84)

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 1.0 failed 1 times, most recent failure: Lost task 0.0 in stage 1.0 (TID 1, localhost, executor driver): java.lang.ClassCastException: scala.collection.mutable.WrappedArray$ofRef cannot be cast to com.hangshu.model.SpinData

at com.hangshu.mock.KafkaProducerSpin03$$anonfun$main$1.apply(KafkaProducerSpin03.scala:85)

at com.hangshu.mock.KafkaProducerSpin03$$anonfun$main$1.apply(KafkaProducerSpin03.scala:84)
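Both traces come from casting a value taken from a DataFrame directly to a model class. A Row (or an array column, which comes back as a Seq) is never an instance of the model class, so the cast fails at runtime. The sketch below shows two usual ways to build the object instead. SpinRecord and its fields are hypothetical stand-ins, because the fields of com.hangshu.model.SpinData are not shown in the notes.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical stand-in for com.hangshu.model.SpinData; the real fields are unknown.
case class SpinRecord(deviceId: String, speed: Double)

object RowToObjectDemo {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("row-to-object-demo")
      .getOrCreate()
    import spark.implicits._

    val df = Seq(("d1", 1.5), ("d2", 2.0)).toDF("deviceId", "speed")

    // Option 1: let an encoder map columns to case-class fields by name.
    val typed: Array[SpinRecord] = df.as[SpinRecord].collect()

    // Option 2: build the object by hand from each Row.
    val manual: Array[SpinRecord] = df.collect().map { row =>
      SpinRecord(row.getAs[String]("deviceId"), row.getAs[Double]("speed"))
    }

    // A direct cast such as row.asInstanceOf[SpinRecord] would throw ClassCastException.
    typed.foreach(println)
    manual.foreach(println)
    spark.stop()
  }
}
```

Option 1 requires the column names and types to match the case class. Option 2 is more verbose but works when columns need renaming or conversion.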

1.4 Error serializing an object with fastjson JSON.toJSONString() from Scala

https://www.cnblogs.com/createweb/p/11971351.html

https://blog.csdn.net/FlatTiger/article/details/117248559

1.5 Checkpoint directory not set

Exception in thread "main" java.lang.IllegalArgumentException: requirement failed: The checkpoint directory has not been set. Please set it by StreamingContext.checkpoint().

at scala.Predef$.require(Predef.scala:224)

at org.apache.spark.streaming.dstream.DStream.validateAtStart(DStream.scala:242)
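The message itself names the fix: call StreamingContext.checkpoint() before starting the context. Spark Streaming checks this at start when the job uses operations that need checkpointing, such as updateStateByKey. A minimal sketch follows. The checkpoint path, the socket source, and the word-count logic are placeholders, not taken from the original job.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object CheckpointDemo {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[2]").setAppName("checkpoint-demo")
    val ssc = new StreamingContext(conf, Seconds(5))

    // Must be set before ssc.start() when stateful operations are used.
    // Placeholder path; on a cluster use a fault-tolerant location such as HDFS.
    ssc.checkpoint("/tmp/spark-checkpoint")

    val lines = ssc.socketTextStream("localhost", 9999) // hypothetical source
    val counts = lines
      .flatMap(_.split(" "))
      .map(word => (word, 1))
      // Stateful operation: keeps a running count per word across batches.
      .updateStateByKey[Int] { (values: Seq[Int], state: Option[Int]) =>
        Some(values.sum + state.getOrElse(0))
      }
    counts.print()

    ssc.start()
    ssc.awaitTermination()
  }
}
```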

1.6 DStreams with their functions are not serializable

Exception in thread "main" java.io.NotSerializableException: DStream checkpointing has been enabled but the DStreams with their functions are not serializable

org.apache.spark.streaming.StreamingContext

https://blog.csdn.net/spark_dev/article/details/115441447
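The notes give only the exception and a link, so the cause in the original job is not known. A common cause (an assumption here) is a function passed to a DStream operation that captures an object which is not Serializable, such as a connection or a producer created outside the function. With checkpointing enabled, Spark serializes the DStream graph together with those functions. The sketch below reproduces the effect with plain Java serialization and no Spark dependency. Client is a hypothetical stand-in for such an object.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

// Hypothetical stand-in for a client (connection, producer, ...) that is not Serializable.
class Client { def send(msg: String): Unit = println(msg) }

object ClosureCheck {
  // True if Java serialization accepts the object, false if some part of it is not serializable.
  def isSerializable(obj: AnyRef): Boolean =
    try {
      val out = new ObjectOutputStream(new ByteArrayOutputStream())
      out.writeObject(obj)
      out.close()
      true
    } catch {
      case _: NotSerializableException => false
    }

  // Captures a Client created outside the function: the closure drags it along.
  def capturing(): String => Unit = {
    val client = new Client
    msg => client.send(msg)
  }

  // Creates the Client inside the function: nothing non-serializable is captured.
  def selfContained(): String => Unit =
    msg => new Client().send(msg)
}
```

In a streaming job the same idea usually means creating the client inside foreachRDD or foreachPartition rather than in the enclosing class, or marking the field @transient lazy.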

spark.ui.retainedStages=5000; the total number of stages did not exceed 5000, and the number of jobs did not exceed 1000.

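1. In a Spark standalone cluster that uses ZooKeeper to coordinate service state, the Spark master goes down when the ZooKeeper leader goes down

Problem description:

After the ZooKeeper leader goes down, the Spark master node goes down as well and the standby master takes over as master. If the ZooKeeper leader goes down again before the failed master node has recovered, the whole Spark cluster goes down.

Solution:

1) Add more Spark standby masters as appropriate.

2) Write a shell script that periodically checks the master's status and restarts the master when it goes down.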

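2. After a master failover in a Spark standalone cluster, jobs on the standby master change from running to waiting

Problem description:

When the Spark master goes down, all jobs move to the standby master. Once the move is complete, the master UI shows every job that was previously running as waiting, although the jobs are in fact still running.

Approach:

This is a bug in Spark's status detection. The problem persisted after upgrading to version 2.1.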

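3. Submitting a Spark job in a CDH environment fails with java.lang.IncompatibleClassChangeError: Implementing class

Problem description:

A Spark Streaming job that consumes Kafka data was submitted in a CDH environment. Submission succeeded, but the error above appeared as soon as the job began consuming Kafka data.

Solution:

Investigation showed that the Kafka jar version the job needs differs from the Kafka jar version already loaded by the CDH environment, which causes a class conflict at call time.

By default, a submitted job uses a classloader that inherits from Spark's main-thread classloader. Changing the loader's default strategy so that it loads local classes first, and falls back to the CDH environment's classes only when a local class is not found, resolved the problem.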
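The notes do not show how the loading strategy was changed. Spark offers the experimental settings spark.driver.userClassPathFirst and spark.executor.userClassPathFirst for this purpose. The underlying idea, a child-first classloader, can be sketched as follows. ChildFirstClassLoader is an illustrative name and this is not the author's actual patch.

```scala
import java.net.{URL, URLClassLoader}

// Looks in its own URLs (the job's jars) first and asks the parent only when
// the class is not found there, reversing the usual parent-first delegation.
class ChildFirstClassLoader(urls: Array[URL], parent: ClassLoader)
    extends URLClassLoader(urls, parent) {

  override def loadClass(name: String, resolve: Boolean): Class[_] =
    getClassLoadingLock(name).synchronized {
      var c: Class[_] = findLoadedClass(name)
      if (c == null) {
        c = try {
          findClass(name) // local jars first
        } catch {
          case _: ClassNotFoundException =>
            super.loadClass(name, resolve) // fall back to the environment's classes
        }
      }
      if (resolve) resolveClass(c)
      c
    }
}
```

Classes that exist only in the parent, such as the JDK's, still resolve through the fallback. Classes present in both places now come from the job's own jars, which is what removes the version conflict.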

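4. OOM caused by excessive memory use with accumulators in Spark 1.6

Problem description:

While testing Spark 1.6, memory consumption rose with larger data volumes. The process's memory use grew steadily, and after running for a long time the job hit an OOM. Version 2.0 does not have this problem. The job's stack information showed that data generated by task analysis was held in Spark's task cache and never released. Spark keeps lists of jobs, stages, and tasks in memory by default, and in 1.6 each task entry also caches accumulator data for display, so memory use grows sharply.

Solution:

By default Spark retains 1000 jobs, 1000 stages, and 100000 tasks. Lowering these three limits reduces memory use. Set them when submitting the Spark job: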

--conf spark.ui.retainedJobs=50

--conf spark.ui.retainedStages=100

--conf spark.ui.retainedTasks=400

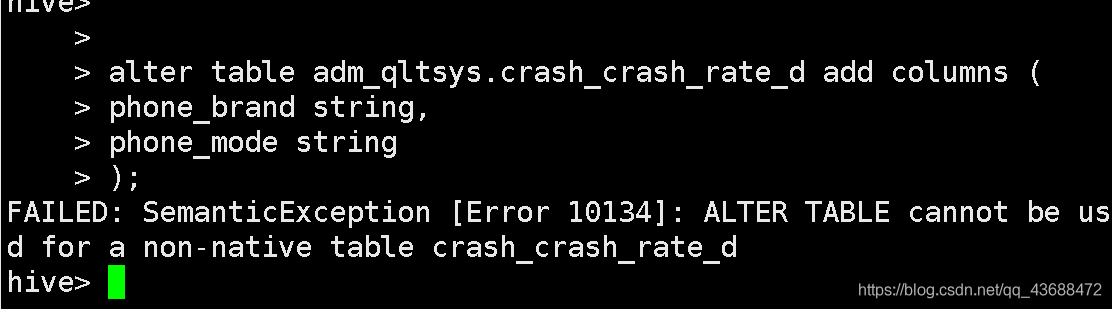

1.7 Hive external tables do not support adding columns

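I did not know this beforehand and could not find it in the official documentation. I only ran into it when executing:

alter table hbase_fct_mcht_item_day add columns(avg_stay_time_bi bigint);

which fails with the following error (note that the message refers to a non-native table, that is, one backed by a storage handler such as HBase):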

FAILED: Error in metadata: Cannot use ALTER TABLE on a non-native table

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask

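Method 1:

Convert the external table to a managed (internal) table, then make the change.

To convert a managed table to an external table:

alter table uuu set tblproperties('EXTERNAL' = 'TRUE');

Note:

alter table t1 set tblproperties('EXTERNAL' = 'true');

TRUE must be uppercase. Lowercase does not raise an error, but the change is not applied.

To convert an external table to a managed table:

alter table uuu set tblproperties('EXTERNAL' = 'FALSE');

alter table log2 set tblproperties('EXTERNAL' = 'false');

FALSE works in either case; both forms apply the change.

Keep in mind that an external table is still a table: ordinary tables can be partitioned, and so can external tables.

So for an external table created over partitioned data, you must run ALTER TABLE table_name ADD PARTITION on the external table.

Otherwise the data cannot be accessed at all.

Method 2:

Drop the external table and recreate it.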

1.8 org.apache.hadoop.security.AccessControlException

Caused by: org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user/hive/warehouse/hs_spin.db/ods_wide_production":admin:hive:drwxr-xr-x

Check the table structure; in this case the error was caused by a problem with the table structure.

1.9 PartialGroupNameException

2021-08-19 15:44:07,371 WARN --- [ main] org.apache.hadoop.security.ShellBasedUnixGroupsMapping (line: 136) : unable to return groups for user hdfs

PartialGroupNameException Does not support partial group name resolution on Windows. GetLocalGroupsForUser error (1332): ?????????????????

Commenting out the following line is enough:

System.setProperty("HADOOP_USER_NAME","hdfs")

1.10 JDWP exit error JVMTI_ERROR_WRONG_PHASE(112): on getting class status [util.c:1285]

FATAL ERROR in native method: JDWP Can't allocate jvmti memory, jvmtiError=JVMTI_ERROR_INVALID_ENVIRONMENT(116)

FATAL ERROR in native method: JDWP on getting class status, jvmtiError=JVMTI_ERROR_WRONG_PHASE(112)

Disconnected from the target VM, address: '127.0.0.1:55244', transport: 'socket'

JDWP exit error JVMTI_ERROR_WRONG_PHASE(112): on getting class status [util.c:1285]

JDWP exit error JVMTI_ERROR_INVALID_ENVIRONMENT(116): Can't allocate jvmti memory [util.c:1799]

ERROR: JDWP unable to dispose of JVMTI environment: JVMTI_ERROR_INVALID_ENVIRONMENT(116)

-server -Xms1024m -Xmx2048m -XX:PermSize=512m -XX:MaxPermSize=512m -XX:+CMSClassUnloadingEnabled -XX:+PrintGCDetails -Xloggc:%M2_HOME%/gc.log -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=%M2_HOME%/java_pid.hproyuan

1.11 ClassNotFoundException org.apache.spark.sql.catalyst.util.CaseInsensitiveMap$

Caused by: java.lang.ClassNotFoundException: org.apache.spark.sql.catalyst.util.CaseInsensitiveMap$

java.lang.NoClassDefFoundError: org/apache/spark/sql/catalyst/util/CaseInsensitiveMap$

at org.apache.spark.sql.hive.HiveExternalCatalog.org$apache$spark$sql$hive$HiveExternalCatalog$$getLocationFromStorageProps(HiveExternalCatalog.scala:494)

at org.apache.spark.sql.hive.HiveExternalCatalog.restoreDataSourceTable(HiveExternalCatalog.scala:732)

at org.apache.spark.sql.hive.HiveExternalCatalog.org$apache$spark$sql$hive$HiveExternalCatalog$$restoreTableMetadata(HiveExternalCatalog.scala:657)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:628)

at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$getTable$1.apply(HiveExternalCatalog.scala:628)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)

at org.apache.spark.sql.hive.HiveExternalCatalog.getTable(HiveExternalCatalog.scala:627)

at org.apache.spark.sql.hive.HiveMetastoreCatalog.lookupRelation(HiveMetastoreCatalog.scala:124)

at org.apache.spark.sql.hive.HiveSessionCatalog.lookupRelation(HiveSessionCatalog.scala:70)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.org$apache$spark$sql$catalyst$analysis$Analyzer$ResolveRelations$$lookupTableFromCatalog(Analyzer.scala:457)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:466)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$$anonfun$apply$8.applyOrElse(Analyzer.scala:464)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan$$anonfun$resolveOperators$1.apply(LogicalPlan.scala:61)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan$$anonfun$resolveOperators$1.apply(LogicalPlan.scala:61)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.resolveOperators(LogicalPlan.scala:60)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:464)

at org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations$.apply(Analyzer.scala:454)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:85)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:82)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:82)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:74)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:74)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:69)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:67)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:50)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:63)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:592)

... 49 elided

Caused by: java.lang.ClassNotFoundException: org.apache.spark.sql.catalyst.util.CaseInsensitiveMap$

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 80 more

Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x


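After installing a Hadoop cluster as root, starting Flink in on-YARN mode kept failing with Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x. The /user directory is owned by hdfs with mode 755, so only hdfs can write to it, and root has no write permission on /user in HDFS. To fix this, switch to the hdfs user with su hdfs, run hadoop fs -chmod 777 /user to grant access, and then rerun the start command.

hadoop fs -chmod -R 777 /warehouse/tablespace/managed/hive opens up all permissions on everything under that directory.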

hadoop fs -chmod 777 /user

<property><name>dfs.permissions.enabled</name><value>false</value><description>Disable permission checking</description></property>

A. Create a folder to hold third-party jar files:

Command: $ mkdir external_jars

B. Modify the Spark configuration:

Command: $ vim conf/spark-env.sh

Change: SPARK_CLASSPATH=$SPARK_CLASSPATH:/opt/cdh-5.3.6/spark/external_jars/*

C. Copy the dependency jar files into the new folder:

Command: $ cp /opt/cdh-5.3.6/hive/lib/mysql-connector-java-5.1.27-bin.jar ./external_jars/
