二维视角下的模板匹配

涉猎了二维视角下的模板匹配,用这篇博客记录一下,顺便再理一理思路,方便向三维的模板匹配扩展。

模板匹配定义:

模板匹配是一种最原始、最基本的模式识别方法,研究某一特定对象物的图案位于图像的什么地方,进而识别对象物,这就是一个匹配问题。

可以这样理解,手中有两张图片,一张是模板图片,另一张是场景图片,要找到模板在场景中的位置,同时确定模板图片到场景图片的变换矩阵,这样的一个任务。

相关算法

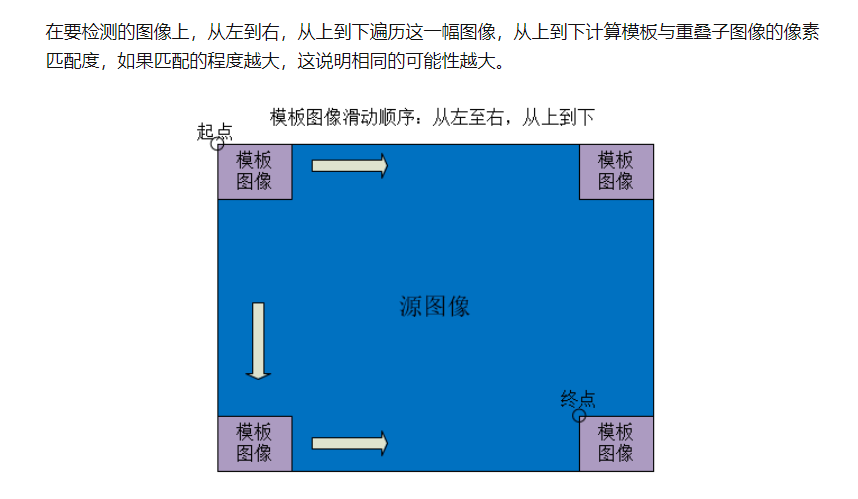

基于灰度值的模板匹配

解释一下相关的原理:

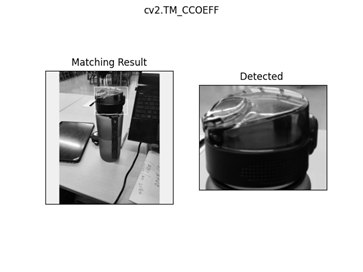

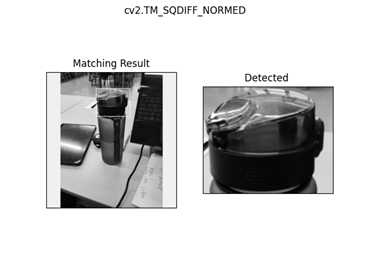

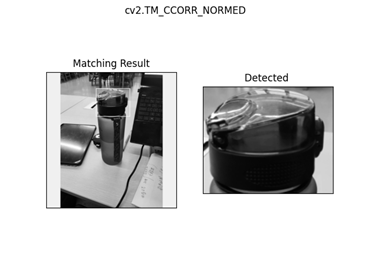

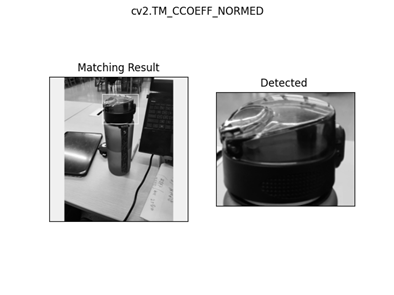

在OpenCV中支持以下6种对比方式:

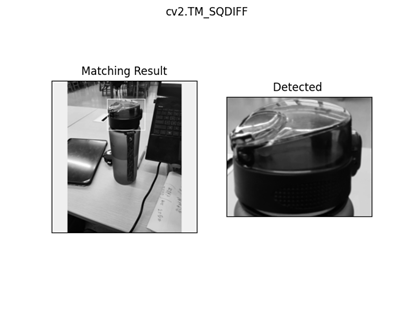

- CV_TM_SQDIFF 平方差匹配法:该方法采用平方差来进行匹配;最好的匹配值为0;匹配越差,匹配值越大。

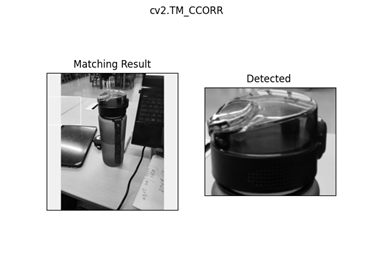

- CV_TM_CCORR 相关匹配法:该方法采用乘法操作;数值越大表明匹配程度越好。

- CV_TM_CCOEFF 相关系数匹配法:1表示完美的匹配;-1表示最差的匹配。

- CV_TM_SQDIFF_NORMED 归一化平方差匹配法

- CV_TM_CCORR_NORMED 归一化相关匹配法

- CV_TM_CCOEFF_NORMED 归一化相关系数匹配法

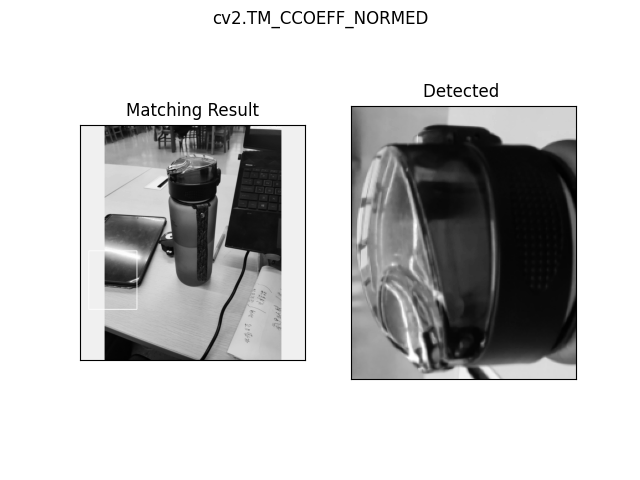

我们看一下匹配的实际情况:

|

|

|

|

|

|

|---|

这个方法其实是很不"智能",结合原理可知。一旦模板发生大幅度的旋转,放缩,错切,则匹配就会很难成功。而且也很难应对相机拍摄角度发生变化的情况。如果有小伙伴有针对这个的优化想法,欢迎留言讨论。

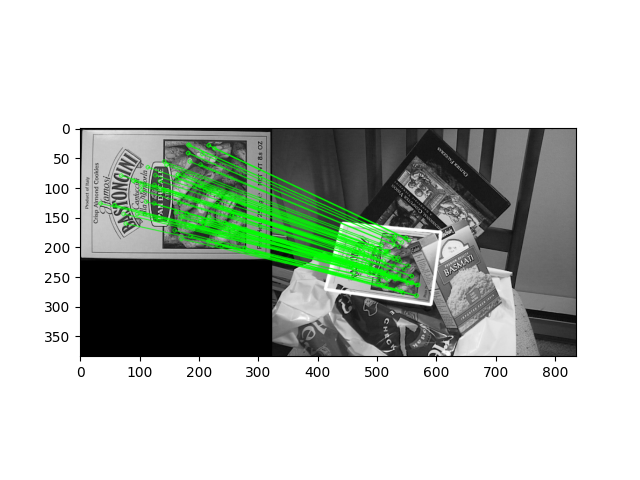

基于描述符的模板匹配

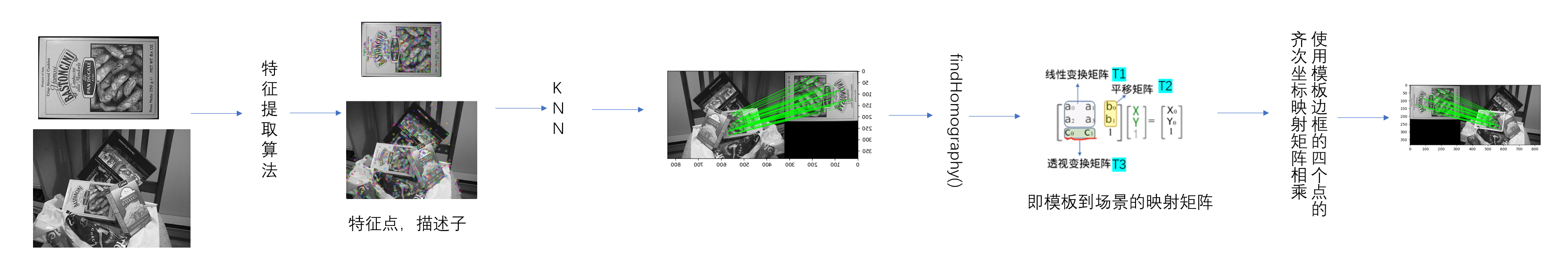

这个是很值得探讨的一种模板匹配的方法,我们可以先看一下流程图:

模板图和场景图分别通过特征提取算法得到它们各自的特征点, 描述子,然后将描述子送入KNN进行匹配,建立起点到点的对应关系。之后再用OpenCV里的findHomography()函数求出点到点的映射矩阵。之后再用模板图片边框四个点的坐标乘以这个映射矩阵,就得到了模板图片在场景图片中的位置。

这个算法最重要的地方是特征提取算法。算法提取到的特征点,描述子是否能够对图片的旋转,放缩,错切等变换具有鲁棒性,将直接决定KNN中能有多少点匹配上,进而影响后面的操作。举个例子,一个模板图片,相对于场景图片发生了一些透视变换,如果导致提取到的描述子的“距离变远了”,那这个算法就不能很好的应对这些变换。

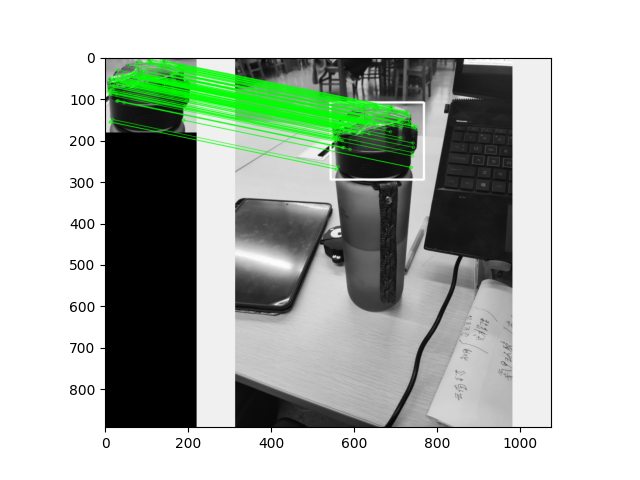

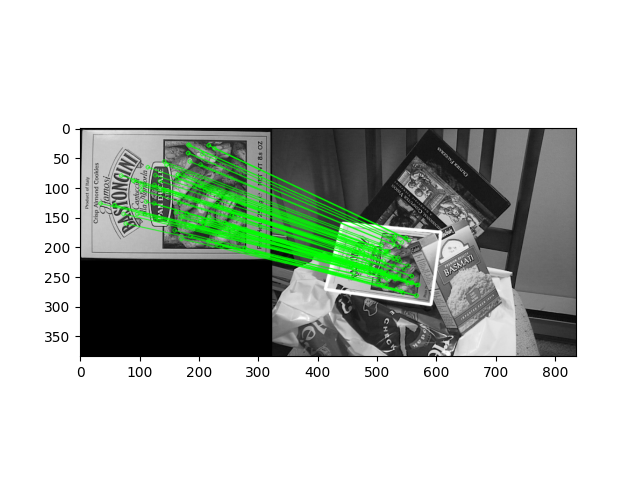

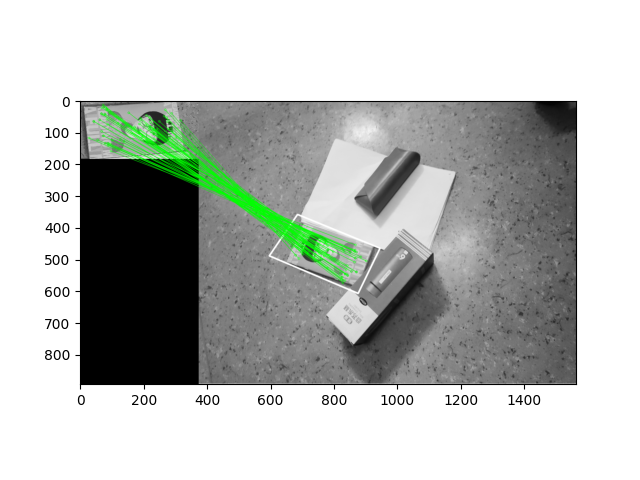

我们以sift举例,表现还不错:

|

|

|

|---|

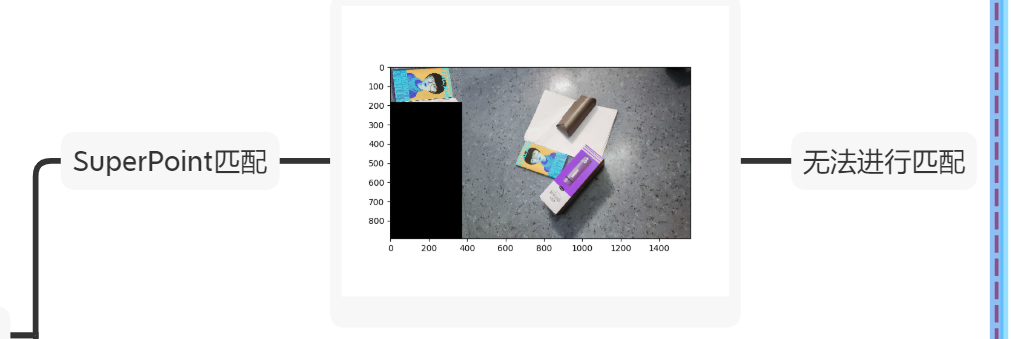

基于学习的特征提取算法与SIFT进行对比

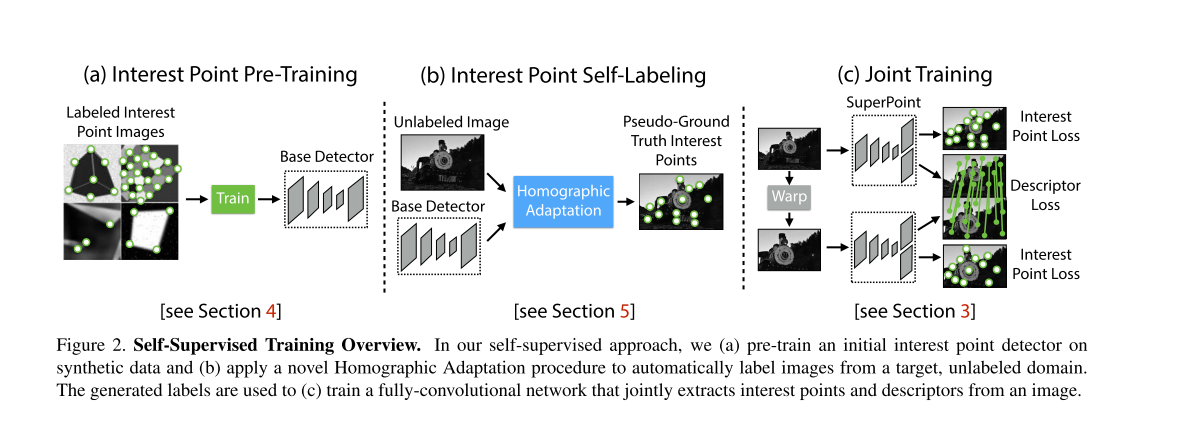

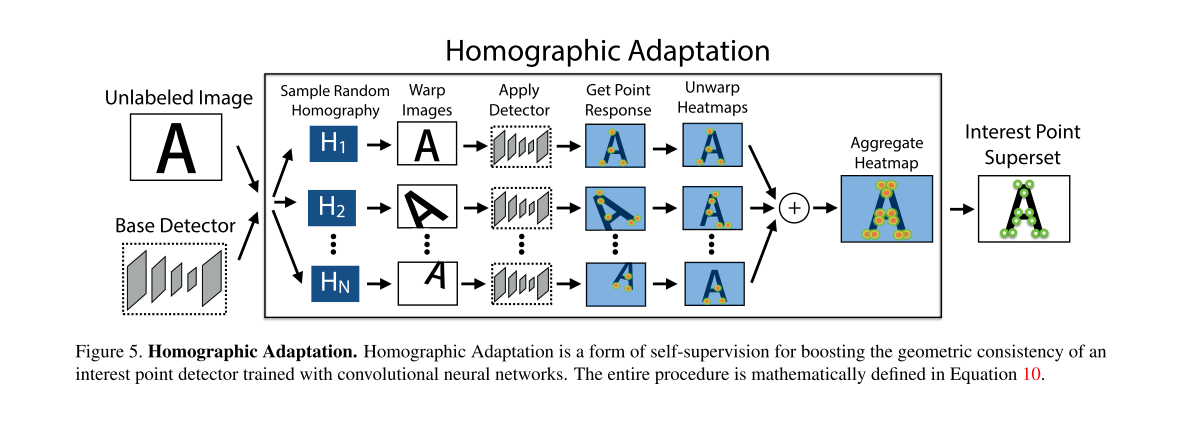

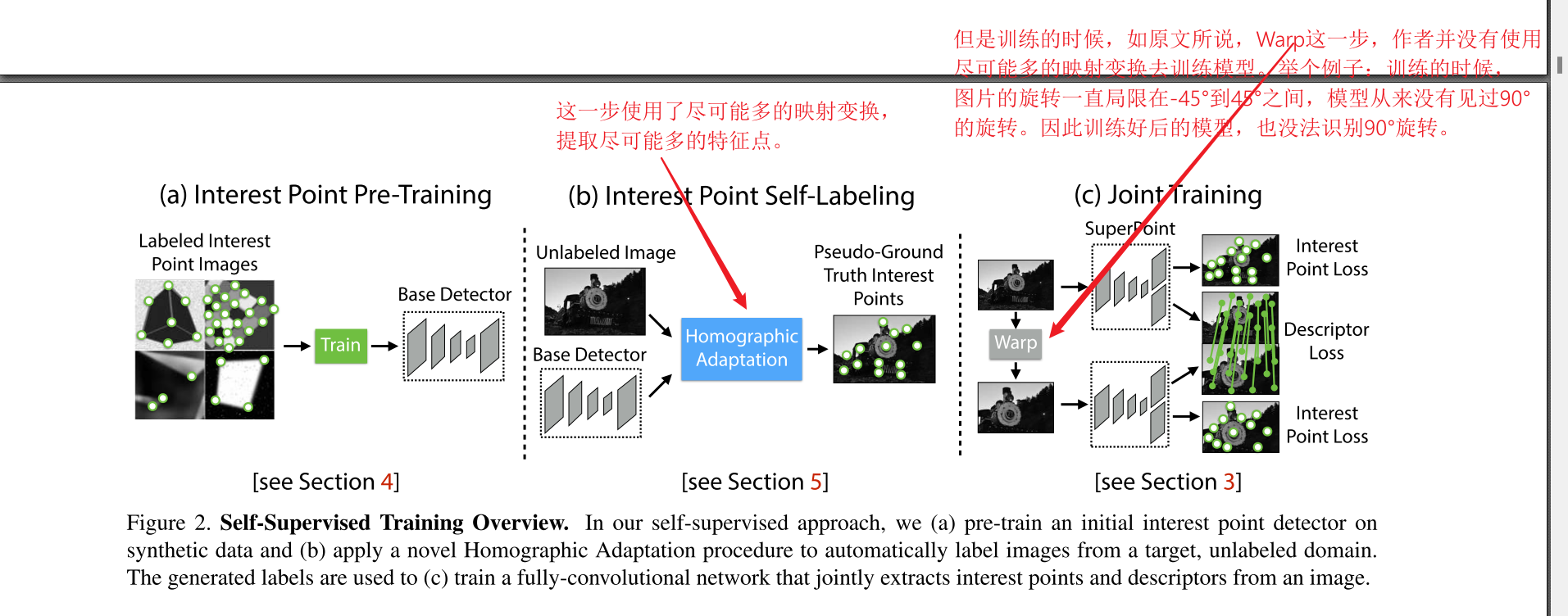

- SuperPoint结构

基于学习的特征提取算法,以SuperPoint为例。

总结如下:

SuperPoint无法匹配较大透视变换的原因推测:

原文:

代码

SIFT.py

import numpy as np

import cv2 as cv

from matplotlib import pyplot as plt

MIN_MATCH_COUNT = 10

#

img1 = cv.imread('data/box.png',0) # queryImage

img2 = cv.imread('data/box_in_scene.png',0) # trainImage

# Initiate SIFT detector

sift = cv.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(img1,None)

kp2, des2 = sift.detectAndCompute(img2,None)

FLANN_INDEX_KDTREE = 1

index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

search_params = dict(checks = 50)

flann = cv.FlannBasedMatcher(index_params, search_params)

matches = flann.knnMatch(des1,des2,k=2)

# store all the good matches as per Lowe's ratio test.

good = []

for m,n in matches:

if m.distance < 0.7*n.distance:

good.append(m)

print("The number of keypoints in image1 is", len(kp1))

print("The number of keypoints in image2 is", len(kp2))

if len(good)>MIN_MATCH_COUNT:

src_pts = np.float32([ kp1[m.queryIdx].pt for m in good ]).reshape(-1,1,2)

dst_pts = np.float32([ kp2[m.trainIdx].pt for m in good ]).reshape(-1,1,2)

M, mask = cv.findHomography(src_pts, dst_pts, cv.RANSAC,5.0)

matchesMask = mask.ravel().tolist()

h,w = img1.shape

pts = np.float32([ [0,0],[0,h-1],[w-1,h-1],[w-1,0] ]).reshape(-1,1,2)

dst = cv.perspectiveTransform(pts,M)

img2 = cv.polylines(img2,[np.int32(dst)],True,255,3, cv.LINE_AA)

else:

print( "Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT) )

matchesMask = None

print("the number of matches is ", len(matchesMask))

draw_params = dict(matchColor = (0,255,0), # draw matches in green color

singlePointColor = None,

matchesMask = matchesMask, # draw only inliers

flags = 2)

img3 = cv.drawMatches(img1,kp1,img2,kp2,good,None,**draw_params)

plt.imshow(img3),plt.show()

SuperPoint.py

import numpy as np

import os

import cv2

import torch

from matplotlib import pyplot as plt

MIN_MATCH_COUNT = 10

# Jet colormap for visualization.

myjet = np.array([[0., 0., 0.5],

[0., 0., 0.99910873],

[0., 0.37843137, 1.],

[0., 0.83333333, 1.],

[0.30044276, 1., 0.66729918],

[0.66729918, 1., 0.30044276],

[1., 0.90123457, 0.],

[1., 0.48002905, 0.],

[0.99910873, 0.07334786, 0.],

[0.5, 0., 0.]])

class SuperPointNet(torch.nn.Module):

""" Pytorch definition of SuperPoint Network. """

def __init__(self):

super(SuperPointNet, self).__init__()

self.relu = torch.nn.ReLU(inplace=True)

self.pool = torch.nn.MaxPool2d(kernel_size=2, stride=2)

c1, c2, c3, c4, c5, d1 = 64, 64, 128, 128, 256, 256

# Shared Encoder.

self.conv1a = torch.nn.Conv2d(1, c1, kernel_size=3, stride=1, padding=1)

self.conv1b = torch.nn.Conv2d(c1, c1, kernel_size=3, stride=1, padding=1)

self.conv2a = torch.nn.Conv2d(c1, c2, kernel_size=3, stride=1, padding=1)

self.conv2b = torch.nn.Conv2d(c2, c2, kernel_size=3, stride=1, padding=1)

self.conv3a = torch.nn.Conv2d(c2, c3, kernel_size=3, stride=1, padding=1)

self.conv3b = torch.nn.Conv2d(c3, c3, kernel_size=3, stride=1, padding=1)

self.conv4a = torch.nn.Conv2d(c3, c4, kernel_size=3, stride=1, padding=1)

self.conv4b = torch.nn.Conv2d(c4, c4, kernel_size=3, stride=1, padding=1)

# Detector Head.

self.convPa = torch.nn.Conv2d(c4, c5, kernel_size=3, stride=1, padding=1)

self.convPb = torch.nn.Conv2d(c5, 65, kernel_size=1, stride=1, padding=0)

# Descriptor Head.

self.convDa = torch.nn.Conv2d(c4, c5, kernel_size=3, stride=1, padding=1)

self.convDb = torch.nn.Conv2d(c5, d1, kernel_size=1, stride=1, padding=0)

def forward(self, x):

""" Forward pass that jointly computes unprocessed point and descriptor

tensors.

Input

x: Image pytorch tensor shaped N x 1 x H x W.

Output

semi: Output point pytorch tensor shaped N x 65 x H/8 x W/8.

desc: Output descriptor pytorch tensor shaped N x 256 x H/8 x W/8.

"""

# Shared Encoder.

x = self.relu(self.conv1a(x))

x = self.relu(self.conv1b(x))

x = self.pool(x)

x = self.relu(self.conv2a(x))

x = self.relu(self.conv2b(x))

x = self.pool(x)

x = self.relu(self.conv3a(x))

x = self.relu(self.conv3b(x))

x = self.pool(x)

x = self.relu(self.conv4a(x))

x = self.relu(self.conv4b(x))

# Detector Head.

cPa = self.relu(self.convPa(x))

semi = self.convPb(cPa)

# Descriptor Head.

cDa = self.relu(self.convDa(x))

desc = self.convDb(cDa)

dn = torch.norm(desc, p=2, dim=1) # Compute the norm.

desc = desc.div(torch.unsqueeze(dn, 1)) # Divide by norm to normalize.

return semi, desc

class SuperPointFrontend(object):

""" Wrapper around pytorch net to help with pre and post image processing. """

def __init__(self, weights_path, nms_dist, conf_thresh, nn_thresh,

cuda=False):

self.name = 'SuperPoint'

self.cuda = cuda

self.nms_dist = nms_dist

self.conf_thresh = conf_thresh

self.nn_thresh = nn_thresh # L2 descriptor distance for good match.

self.cell = 8 # Size of each output cell. Keep this fixed.

self.border_remove = 4 # Remove points this close to the border.

# Load the network in inference mode.

self.net = SuperPointNet()

if cuda:

# Train on GPU, deploy on GPU.

self.net.load_state_dict(torch.load(weights_path))

self.net = self.net.cuda()

else:

# Train on GPU, deploy on CPU.

self.net.load_state_dict(torch.load(weights_path,

map_location=lambda storage, loc: storage))

self.net.eval()

def nms_fast(self, in_corners, H, W, dist_thresh):

"""

Run a faster approximate Non-Max-Suppression on numpy corners shaped:

3xN [x_i,y_i,conf_i]^T

Algo summary: Create a grid sized HxW. Assign each corner location a 1, rest

are zeros. Iterate through all the 1's and convert them either to -1 or 0.

Suppress points by setting nearby values to 0.

Grid Value Legend:

-1 : Kept.

0 : Empty or suppressed.

1 : To be processed (converted to either kept or supressed).

NOTE: The NMS first rounds points to integers, so NMS distance might not

be exactly dist_thresh. It also assumes points are within image boundaries.

Inputs

in_corners - 3xN numpy array with corners [x_i, y_i, confidence_i]^T.

H - Image height.

W - Image width.

dist_thresh - Distance to suppress, measured as an infinty norm distance.

Returns

nmsed_corners - 3xN numpy matrix with surviving corners.

nmsed_inds - N length numpy vector with surviving corner indices.

"""

grid = np.zeros((H, W)).astype(int) # Track NMS data.

inds = np.zeros((H, W)).astype(int) # Store indices of points.

# Sort by confidence and round to nearest int.

inds1 = np.argsort(-in_corners[2, :])

corners = in_corners[:, inds1]

rcorners = corners[:2, :].round().astype(int) # Rounded corners.

# Check for edge case of 0 or 1 corners.

if rcorners.shape[1] == 0:

return np.zeros((3, 0)).astype(int), np.zeros(0).astype(int)

if rcorners.shape[1] == 1:

out = np.vstack((rcorners, in_corners[2])).reshape(3, 1)

return out, np.zeros((1)).astype(int)

# Initialize the grid.

for i, rc in enumerate(rcorners.T):

grid[rcorners[1, i], rcorners[0, i]] = 1

inds[rcorners[1, i], rcorners[0, i]] = i

# Pad the border of the grid, so that we can NMS points near the border.

pad = dist_thresh

grid = np.pad(grid, ((pad, pad), (pad, pad)), mode='constant')

# Iterate through points, highest to lowest conf, suppress neighborhood.

count = 0

for i, rc in enumerate(rcorners.T):

# Account for top and left padding.

pt = (rc[0] + pad, rc[1] + pad)

if grid[pt[1], pt[0]] == 1: # If not yet suppressed.

grid[pt[1] - pad:pt[1] + pad + 1, pt[0] - pad:pt[0] + pad + 1] = 0

grid[pt[1], pt[0]] = -1

count += 1

# Get all surviving -1's and return sorted array of remaining corners.

keepy, keepx = np.where(grid == -1)

keepy, keepx = keepy - pad, keepx - pad

inds_keep = inds[keepy, keepx]

out = corners[:, inds_keep]

values = out[-1, :]

inds2 = np.argsort(-values)

out = out[:, inds2]

out_inds = inds1[inds_keep[inds2]]

return out, out_inds

def run(self, img):

""" Process a numpy image to extract points and descriptors.

Input

img - HxW numpy float32 input image in range [0,1].

Output

corners - 3xN numpy array with corners [x_i, y_i, confidence_i]^T.

desc - 256xN numpy array of corresponding unit normalized descriptors.

heatmap - HxW numpy heatmap in range [0,1] of point confidences.

"""

assert img.ndim == 2, 'Image must be grayscale.'

assert img.dtype == np.float32, 'Image must be float32.'

H, W = img.shape[0], img.shape[1]

inp = img.copy()

inp = (inp.reshape(1, H, W))

inp = torch.from_numpy(inp)

inp = torch.autograd.Variable(inp).view(1, 1, H, W)

if self.cuda:

inp = inp.cuda()

# Forward pass of network.

outs = self.net.forward(inp)

semi, coarse_desc = outs[0], outs[1]

# Convert pytorch -> numpy.

semi = semi.data.cpu().numpy().squeeze()

# --- Process points.

# C = np.max(semi)

# dense = np.exp(semi - C) # Softmax.

# dense = dense / (np.sum(dense)) # Should sum to 1.

dense = np.exp(semi) # Softmax.

dense = dense / (np.sum(dense, axis=0) + .00001) # Should sum to 1.

# Remove dustbin.

nodust = dense[:-1, :, :]

# Reshape to get full resolution heatmap.

Hc = int(H / self.cell)

Wc = int(W / self.cell)

nodust = nodust.transpose(1, 2, 0)

heatmap = np.reshape(nodust, [Hc, Wc, self.cell, self.cell])

heatmap = np.transpose(heatmap, [0, 2, 1, 3])

heatmap = np.reshape(heatmap, [Hc * self.cell, Wc * self.cell])

xs, ys = np.where(heatmap >= self.conf_thresh) # Confidence threshold.

if len(xs) == 0:

return np.zeros((3, 0)), None, None

pts = np.zeros((3, len(xs))) # Populate point data sized 3xN.

pts[0, :] = ys

pts[1, :] = xs

pts[2, :] = heatmap[xs, ys]

pts, _ = self.nms_fast(pts, H, W, dist_thresh=self.nms_dist) # Apply NMS.

inds = np.argsort(pts[2, :])

pts = pts[:, inds[::-1]] # Sort by confidence.

# Remove points along border.

bord = self.border_remove

toremoveW = np.logical_or(pts[0, :] < bord, pts[0, :] >= (W - bord))

toremoveH = np.logical_or(pts[1, :] < bord, pts[1, :] >= (H - bord))

toremove = np.logical_or(toremoveW, toremoveH)

pts = pts[:, ~toremove]

# --- Process descriptor.

D = coarse_desc.shape[1]

if pts.shape[1] == 0:

desc = np.zeros((D, 0))

else:

# Interpolate into descriptor map using 2D point locations.

samp_pts = torch.from_numpy(pts[:2, :].copy())

samp_pts[0, :] = (samp_pts[0, :] / (float(W) / 2.)) - 1.

samp_pts[1, :] = (samp_pts[1, :] / (float(H) / 2.)) - 1.

samp_pts = samp_pts.transpose(0, 1).contiguous()

samp_pts = samp_pts.view(1, 1, -1, 2)

samp_pts = samp_pts.float()

if self.cuda:

samp_pts = samp_pts.cuda()

desc = torch.nn.functional.grid_sample(coarse_desc, samp_pts)

desc = desc.data.cpu().numpy().reshape(D, -1)

desc /= np.linalg.norm(desc, axis=0)[np.newaxis, :]

return pts, desc, heatmap

if __name__ == '__main__':

print('==> Loading pre-trained network.')

# This class runs the SuperPoint network and processes its outputs.

fe = SuperPointFrontend(weights_path="./model/superpoint_v1.pth",

nms_dist=4,

conf_thresh=0.015,

nn_thresh=0.7,

cuda=True)

print('==> Successfully loaded pre-trained network.')

pic1 = "data/wulala.png"

pic2 = "data/viewPoint2.png"

image1_origin = cv2.imread(pic1)

image2_origin = cv2.imread(pic2)

img1 = cv2.imread(pic1, cv2.IMREAD_GRAYSCALE).astype(np.float32)

img2 = cv2.imread(pic2, cv2.IMREAD_GRAYSCALE).astype(np.float32)

img1 = img1 / 255.

img2 = img2 / 255.

if img1 is None or img2 is None:

print('Could not open or find the images!')

exit(0)

# -- Step 1: Detect the keypoints using SURF Detector, compute the descriptors

kp1, des1, h1 = fe.run(img1)

kp2, des2, h2 = fe.run(img2)

## to transfer array ==> KeyPoints

kp1 = [cv2.KeyPoint(kp1[0][i], kp1[1][i], 1)

for i in range(kp1.shape[1])]

kp2 = [cv2.KeyPoint(kp2[0][i], kp2[1][i], 1)

for i in range(kp2.shape[1])]

print("The number of keypoints in image1 is", len(kp1))

print("The number of keypoints in image2 is", len(kp2))

# -- Step 2: Matching descriptor vectors with a FLANN based matcher

# Since SURF is a floating-point descriptor NORM_L2 is used

matcher = cv2.DescriptorMatcher_create(cv2.DescriptorMatcher_FLANNBASED)

knn_matches = matcher.knnMatch(des1.T, des2.T, 2)

# -- Filter matches using the Lowe's ratio test

ratio_thresh = 0.7

good = []

for m, n in knn_matches:

if m.distance < ratio_thresh * n.distance:

good.append(m)

if len(good)>MIN_MATCH_COUNT:

src_pts = np.float32([ kp1[m.queryIdx].pt for m in good ]).reshape(-1,1,2)

dst_pts = np.float32([ kp2[m.trainIdx].pt for m in good ]).reshape(-1,1,2)

M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC,5.0)

matchesMask = mask.ravel().tolist()

h,w = img1.shape

pts = np.float32([ [0,0],[0,h-1],[w-1,h-1],[w-1,0] ]).reshape(-1,1,2)

dst = cv2.perspectiveTransform(pts,M)

img2 = cv2.polylines(image2_origin,[np.int32(dst)],True,255,3, cv2.LINE_AA)

else:

print( "Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT) )

matchesMask = None

print("the number of matches is ", len(matchesMask))

draw_params = dict(matchColor = (0,255,0), # draw matches in green color

singlePointColor = None,

matchesMask = matchesMask, # draw only inliers

flags = 2)

img3 = cv2.drawMatches(image1_origin,kp1,image2_origin,kp2,good,None,**draw_params)

plt.imshow(img3),plt.show()

本文来自博客园,作者:CuriosityWang,转载请注明原文链接:https://www.cnblogs.com/curiositywang/p/15995132.html

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· AI 智能体引爆开源社区「GitHub 热点速览」

· 三行代码完成国际化适配,妙~啊~

· .NET Core 中如何实现缓存的预热?