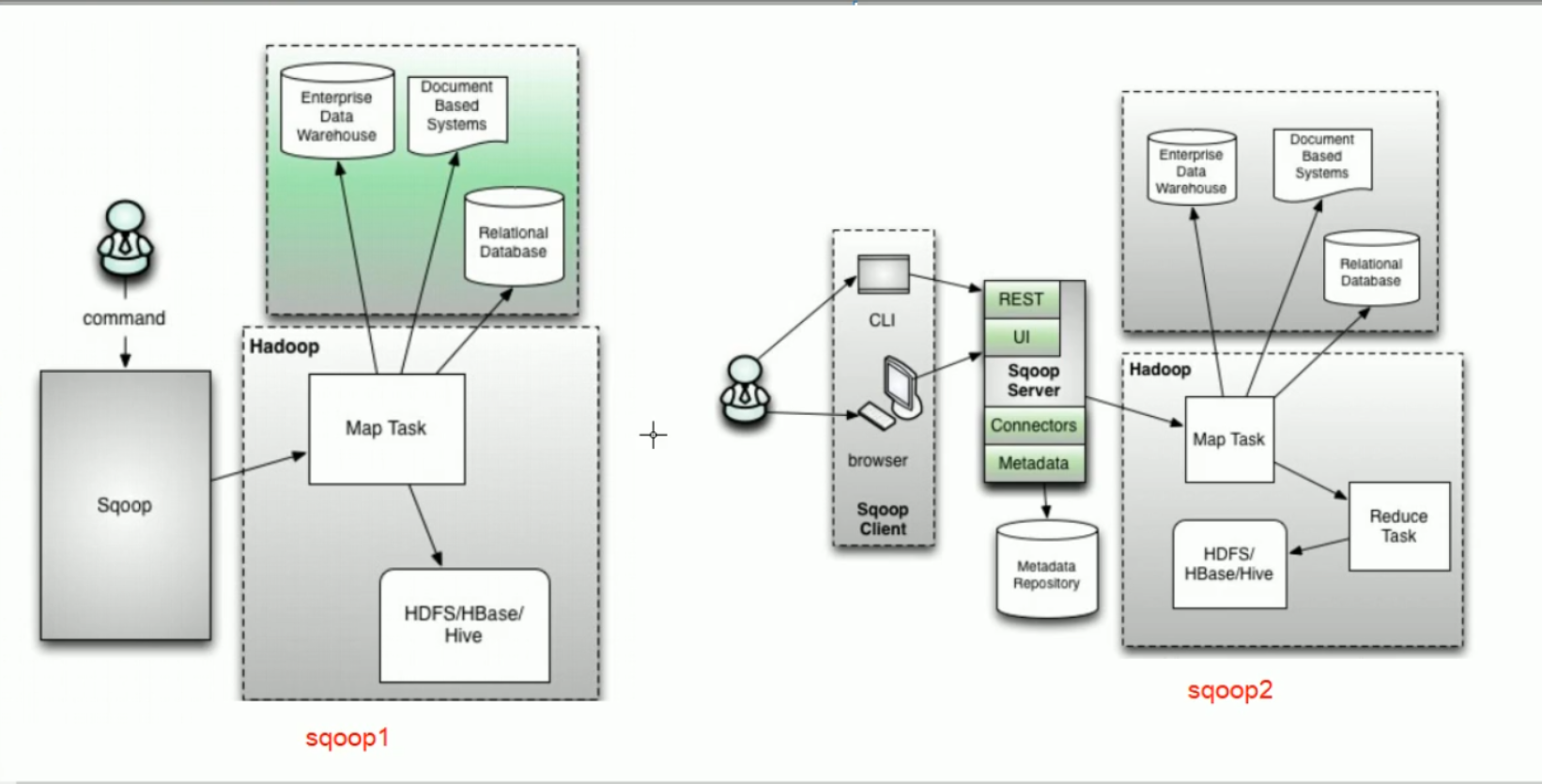

Sqoop(将关系型数据库的数据导入到HDFS)

10-sqoop入门介绍

================================================================================================================================================================

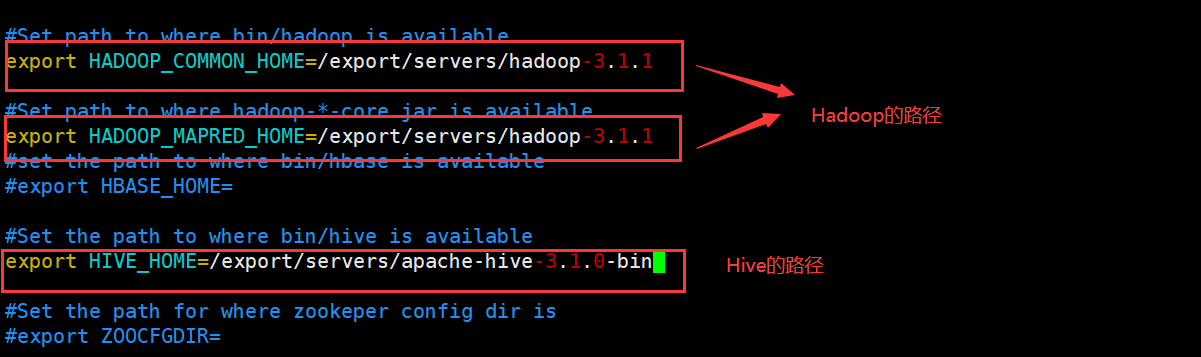

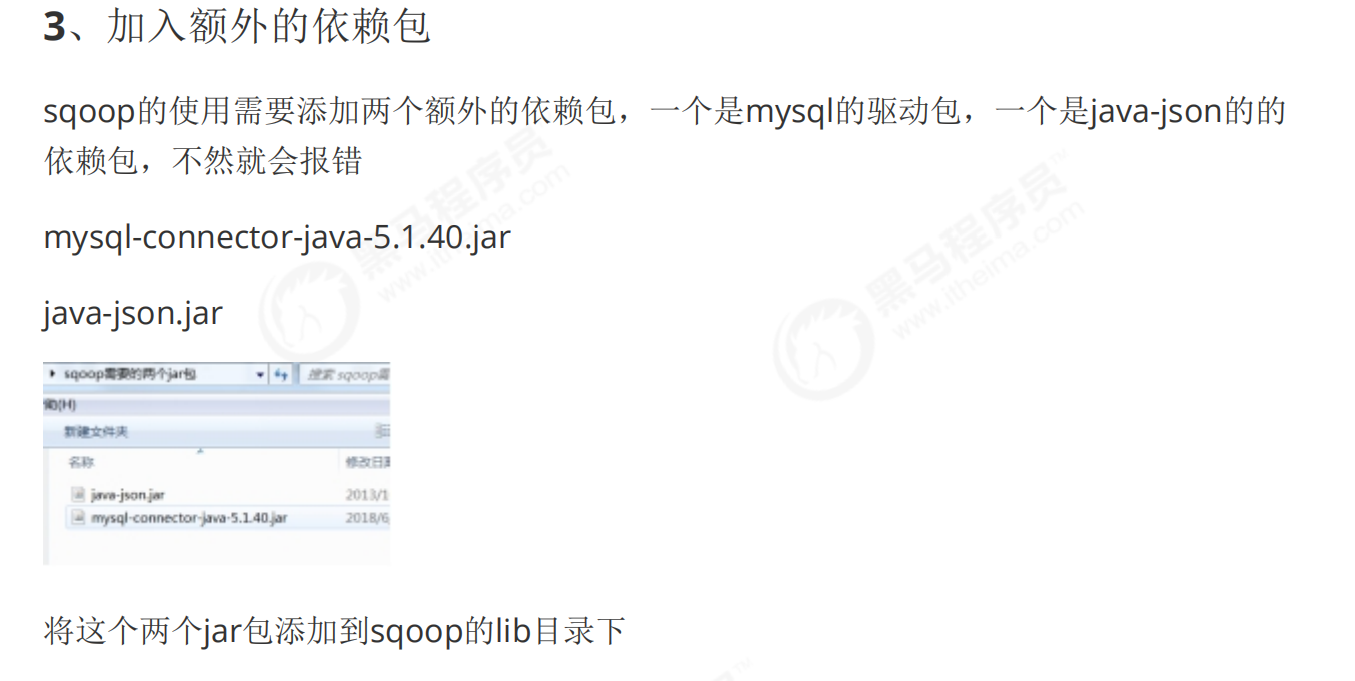

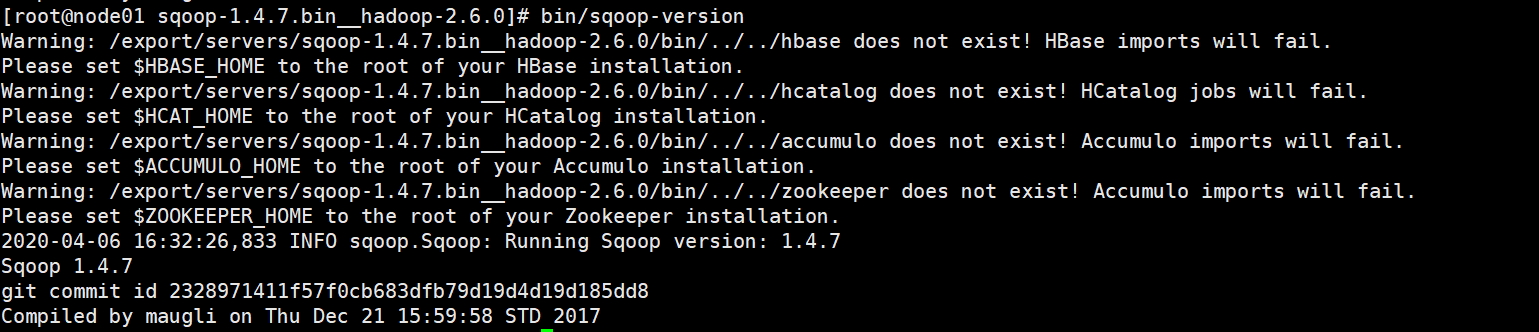

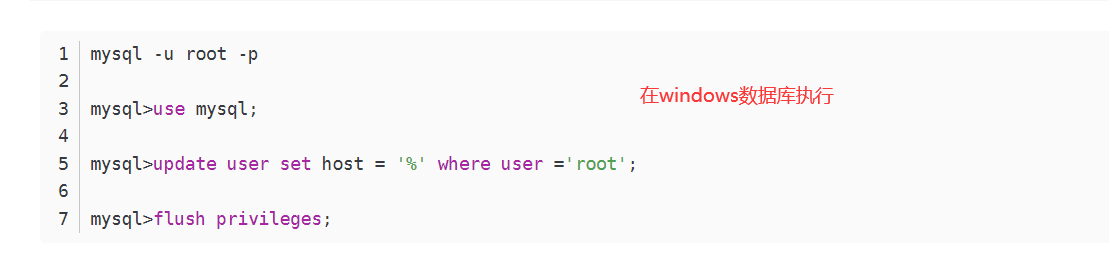

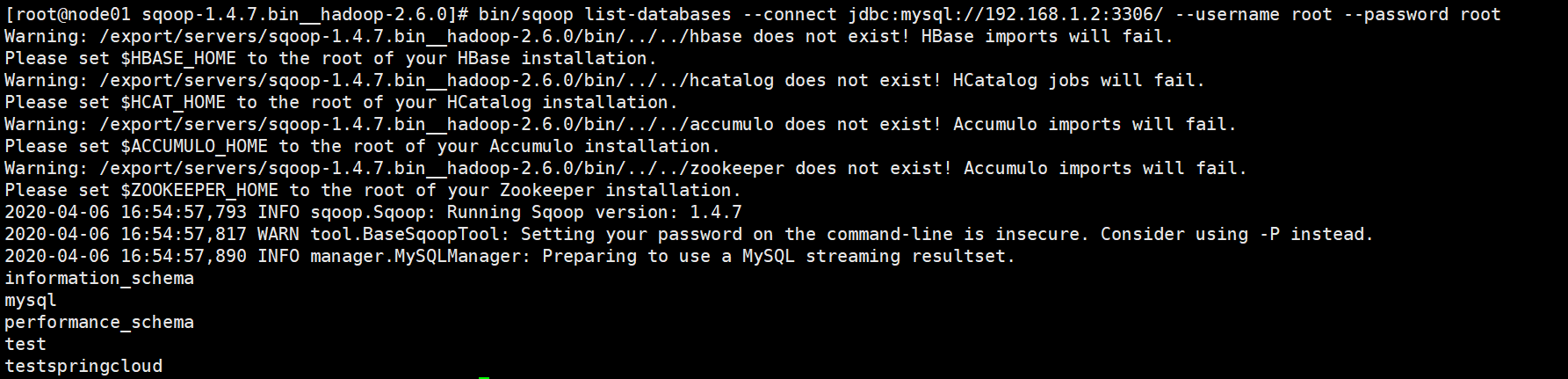

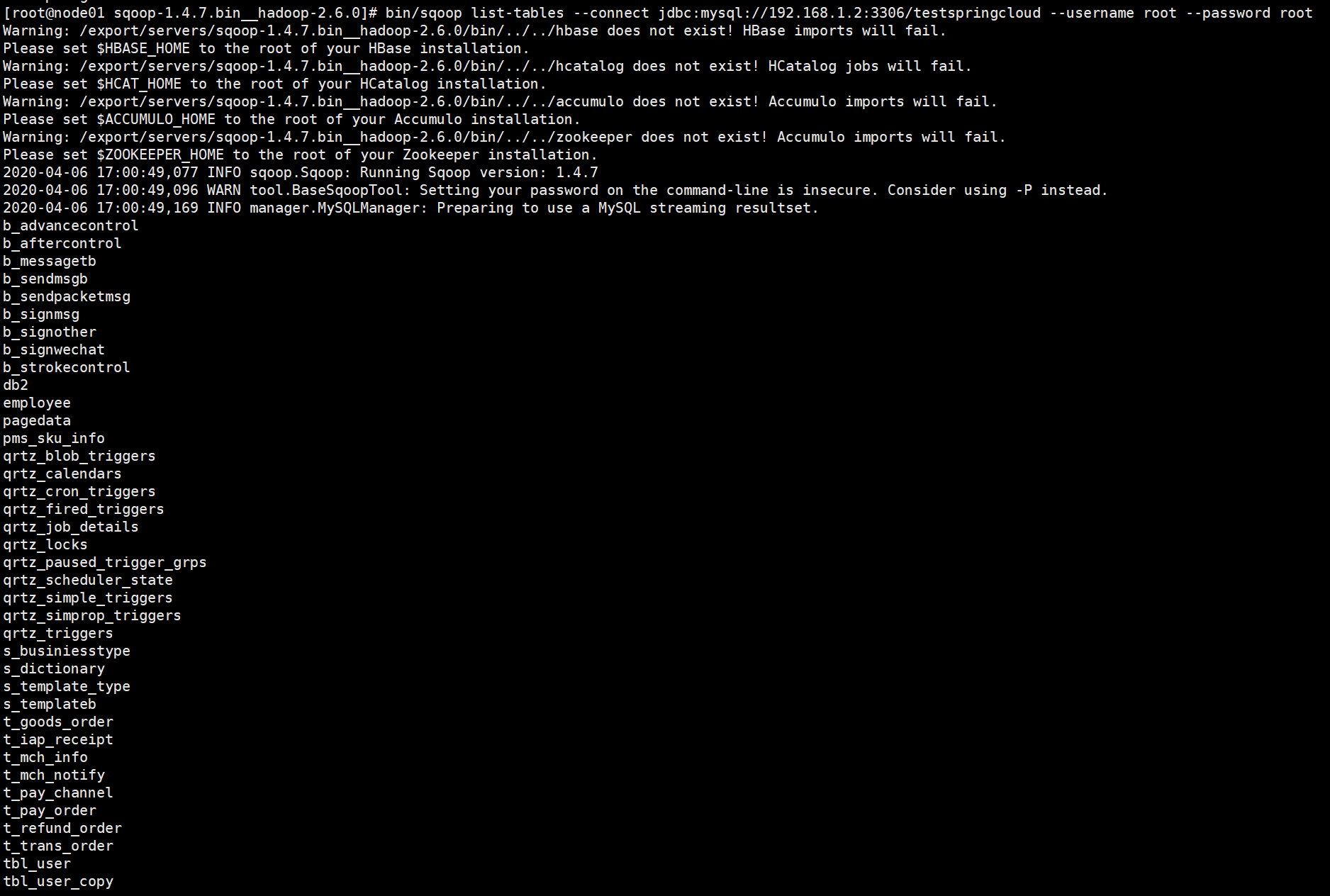

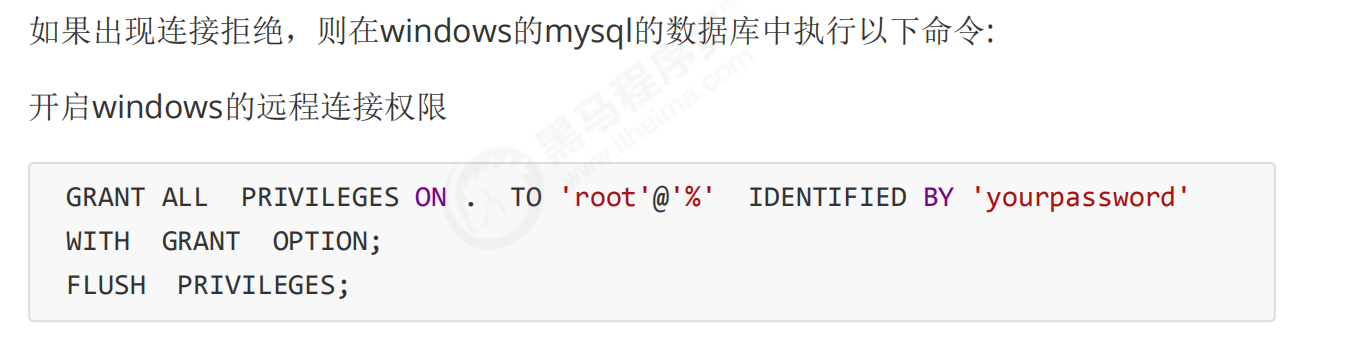

11-sqoop安装

-------------------------------------------------------------------------------------------------------------------------------------

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

===============================================================================================================================================================

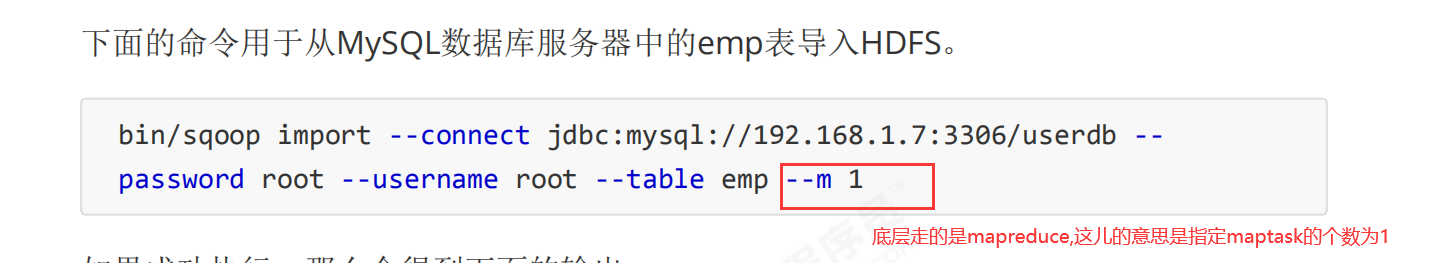

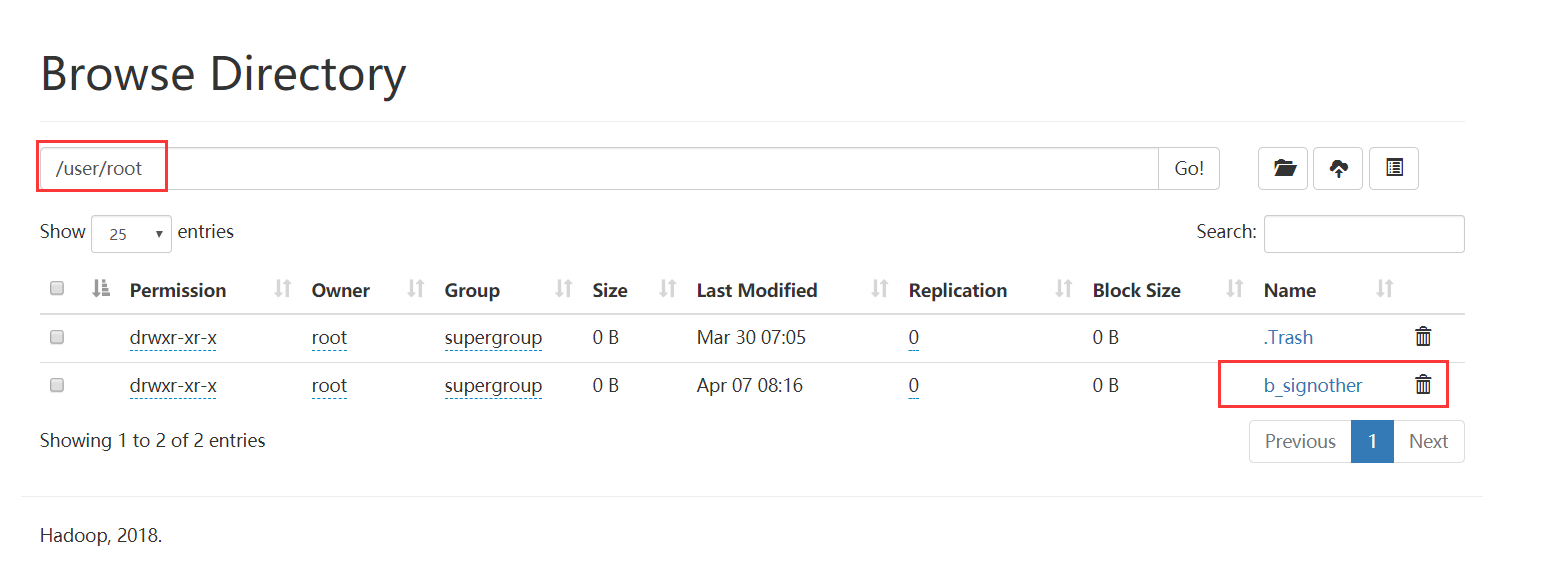

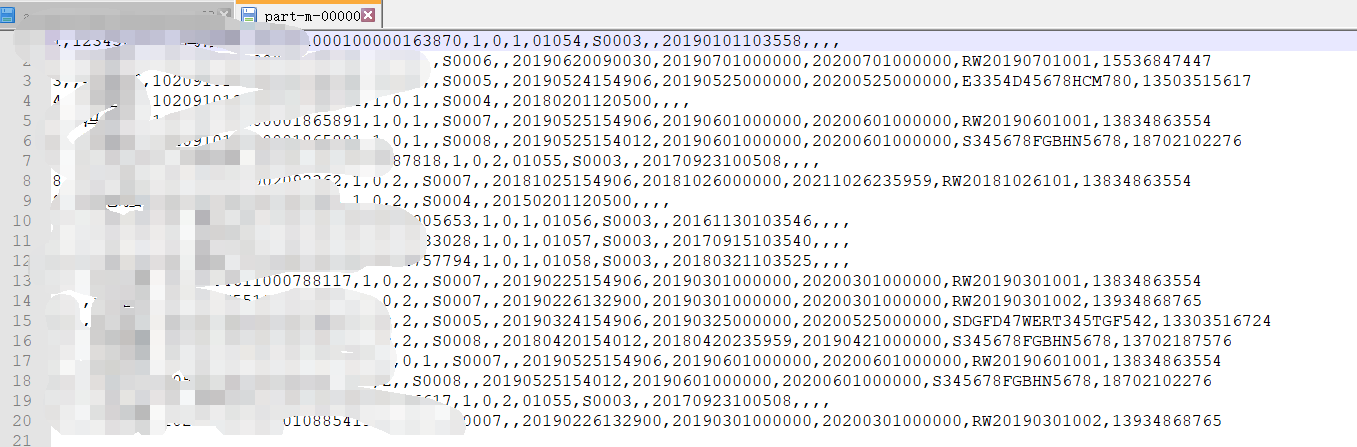

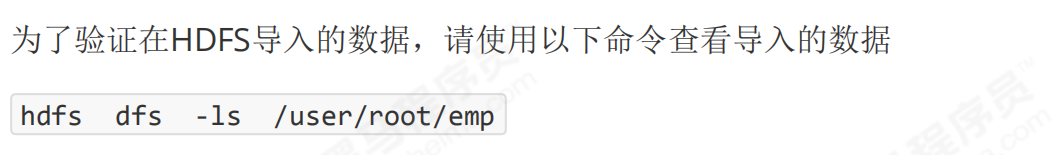

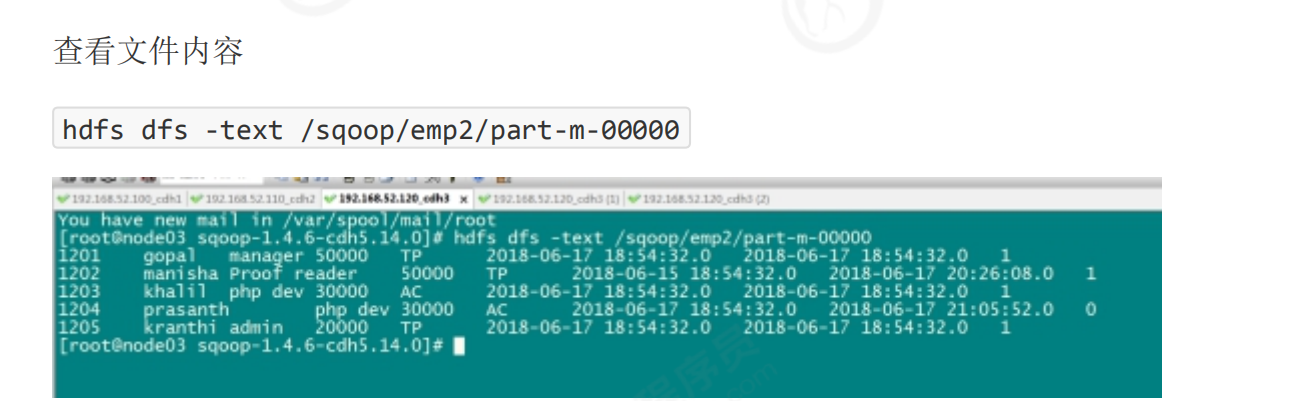

12-sqoop导入-hdfs

bin/sqoop import --connect jdbc:mysql://192.168.1.2:3306/testspringcloud --username root --password root --table b_signother --m 1

请问导入到HDFS的那个位置呢??

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

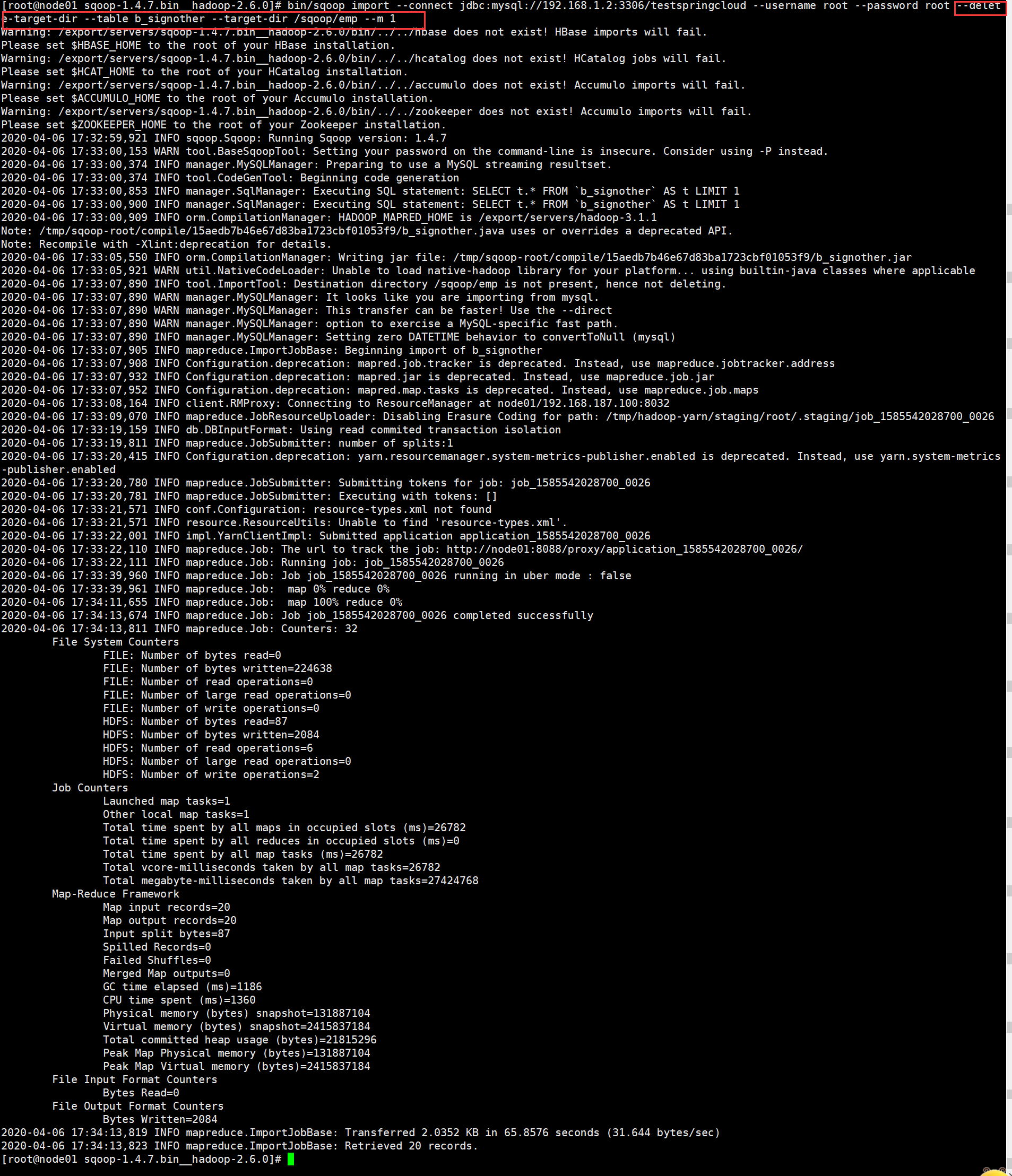

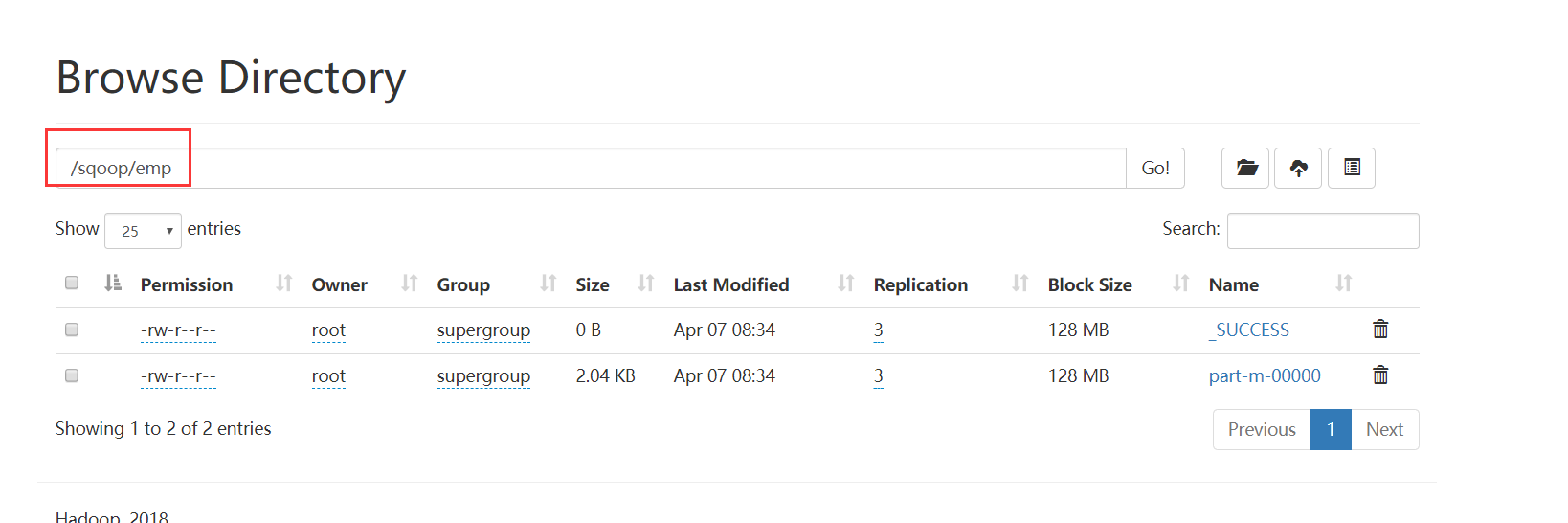

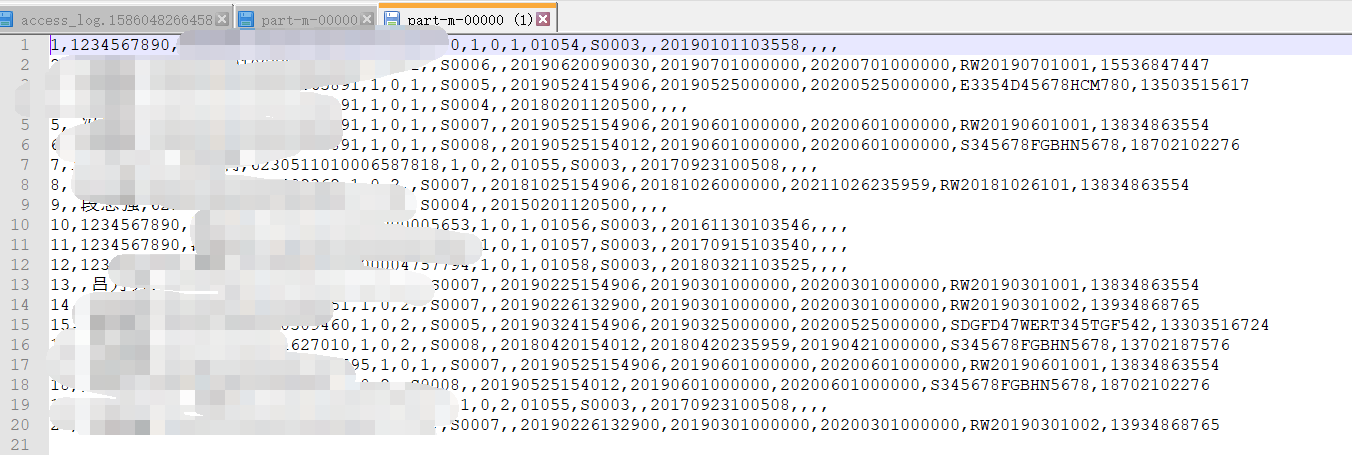

bin/sqoop import --connect jdbc:mysql://192.168.1.2:3306/testspringcloud --username root --password root --delete-target-dir --table b_signother --target-dir /sqoop/emp --m 1

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

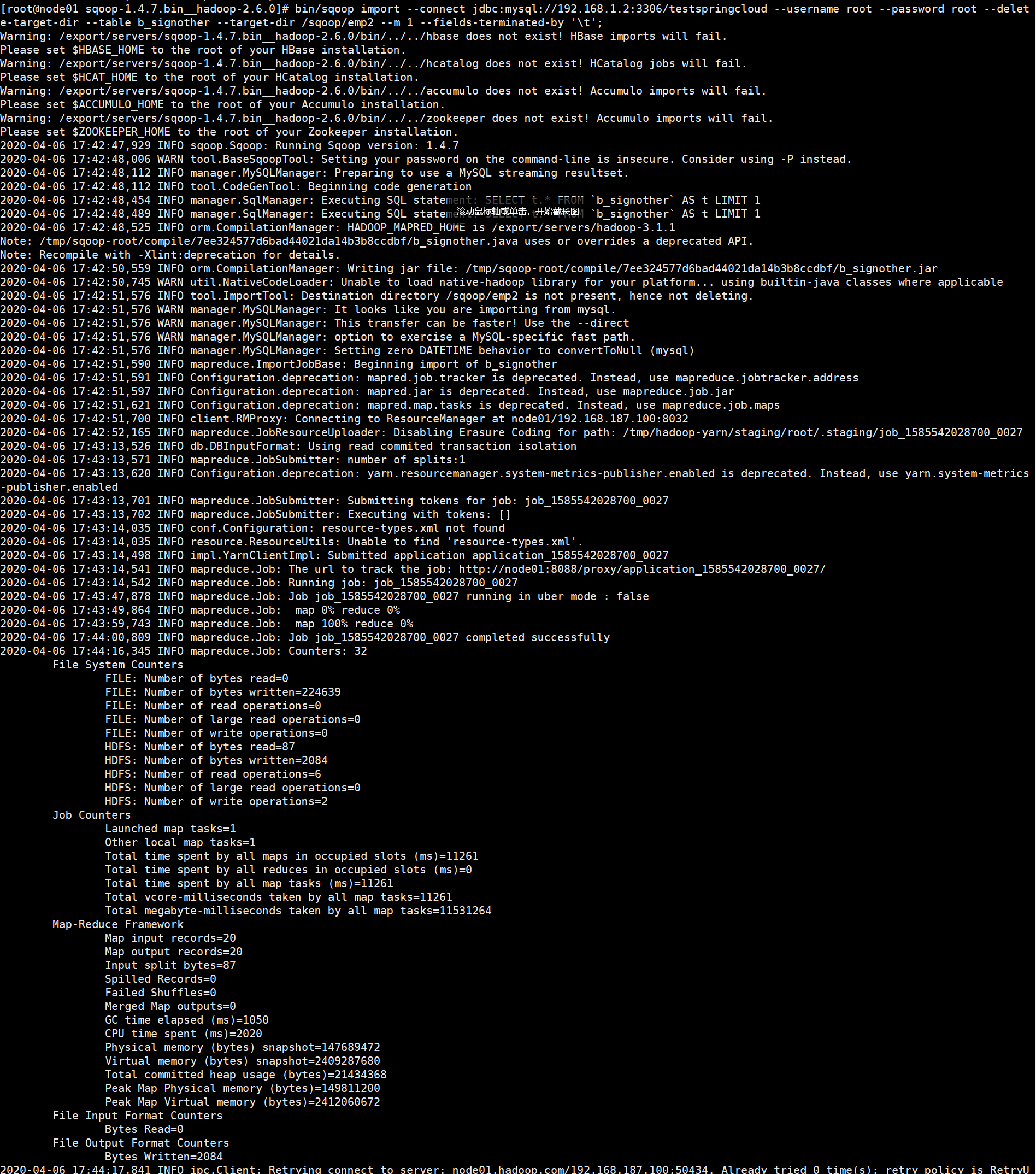

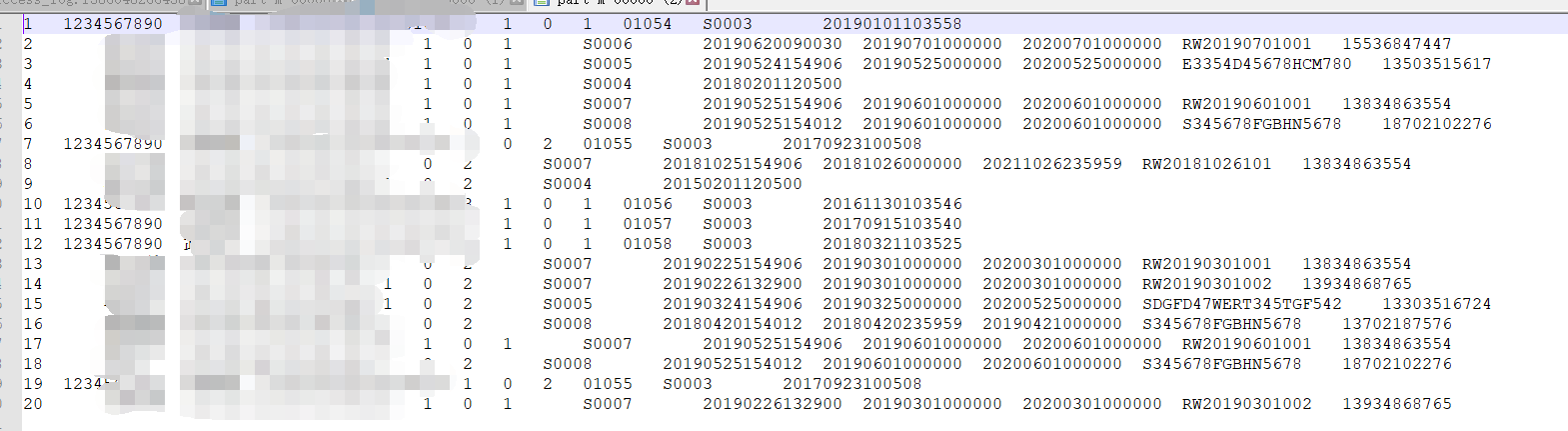

bin/sqoop import --connect jdbc:mysql://192.168.1.2:3306/testspringcloud --username root --password root --delete-target-dir --table b_signother --target-dir /sqoop/emp2 --m 1 --fields-terminated-by '\t';

=========================================================================================================================================================================

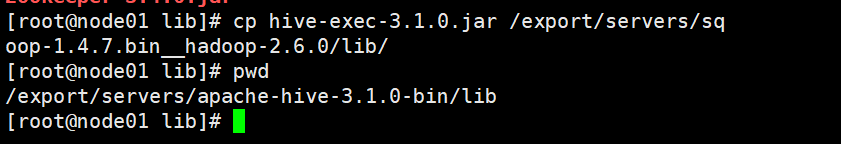

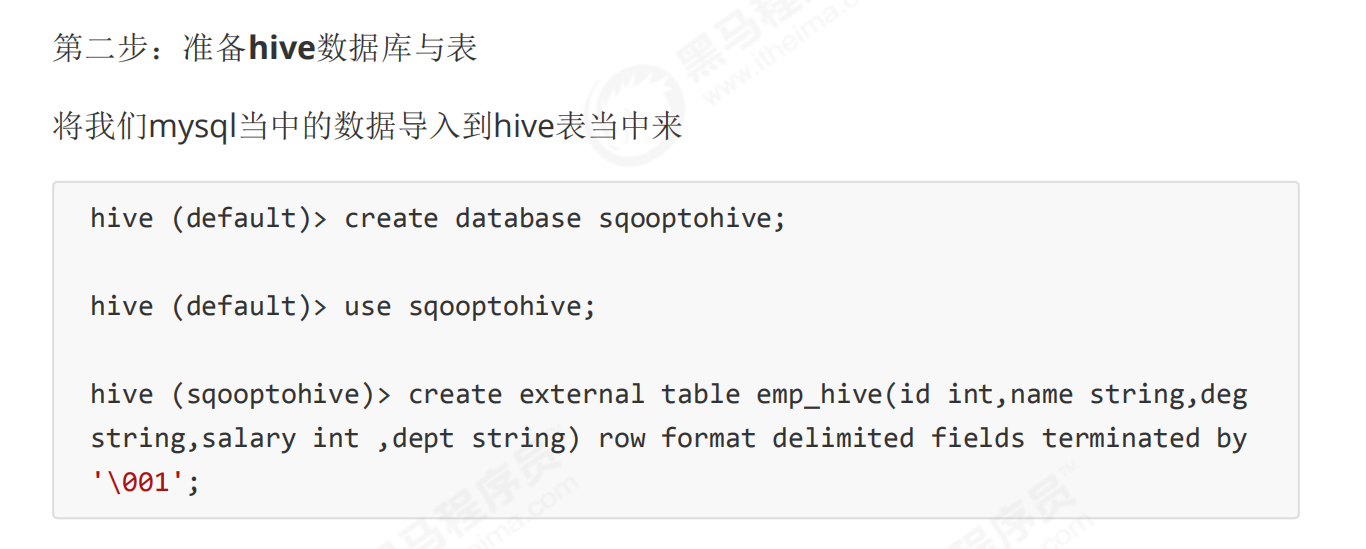

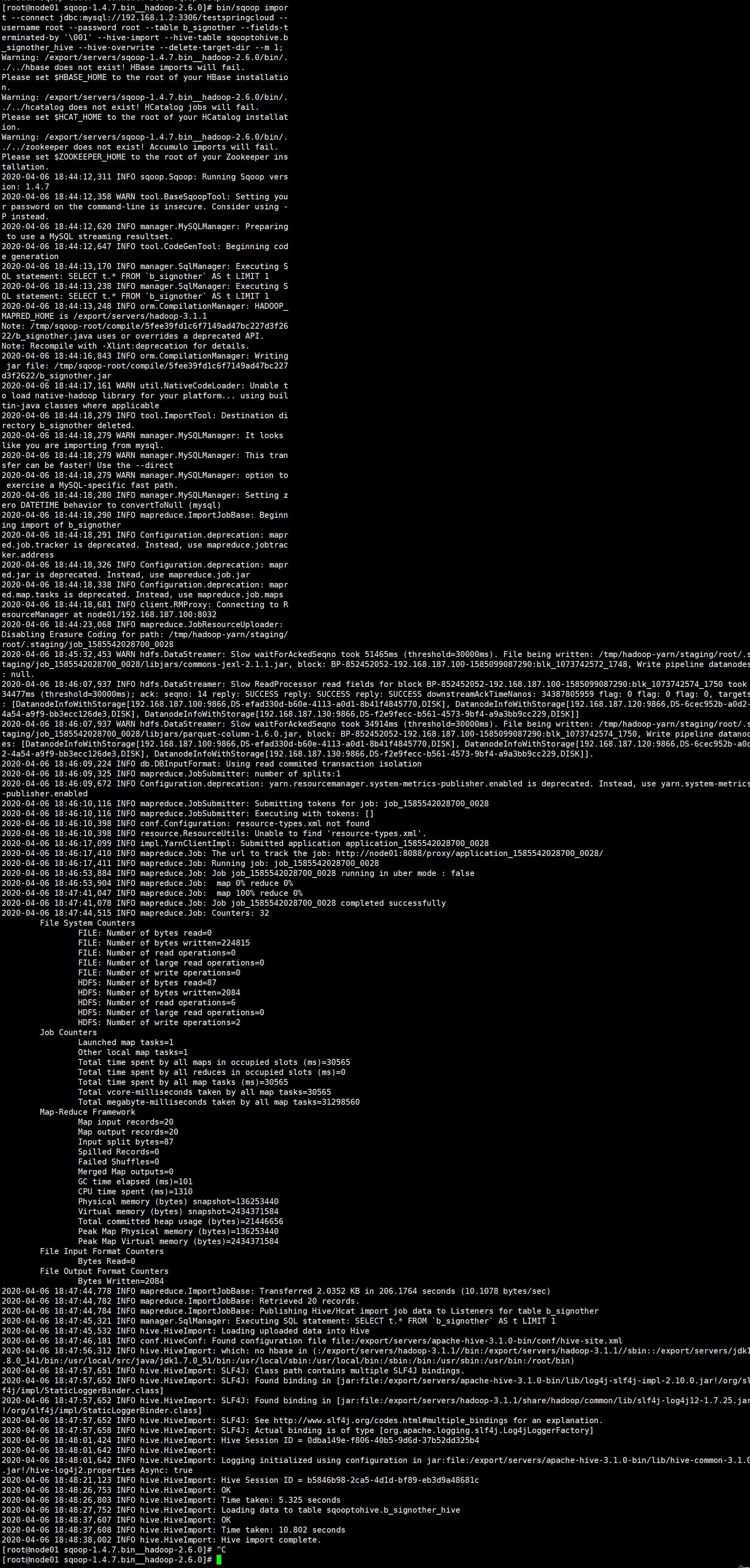

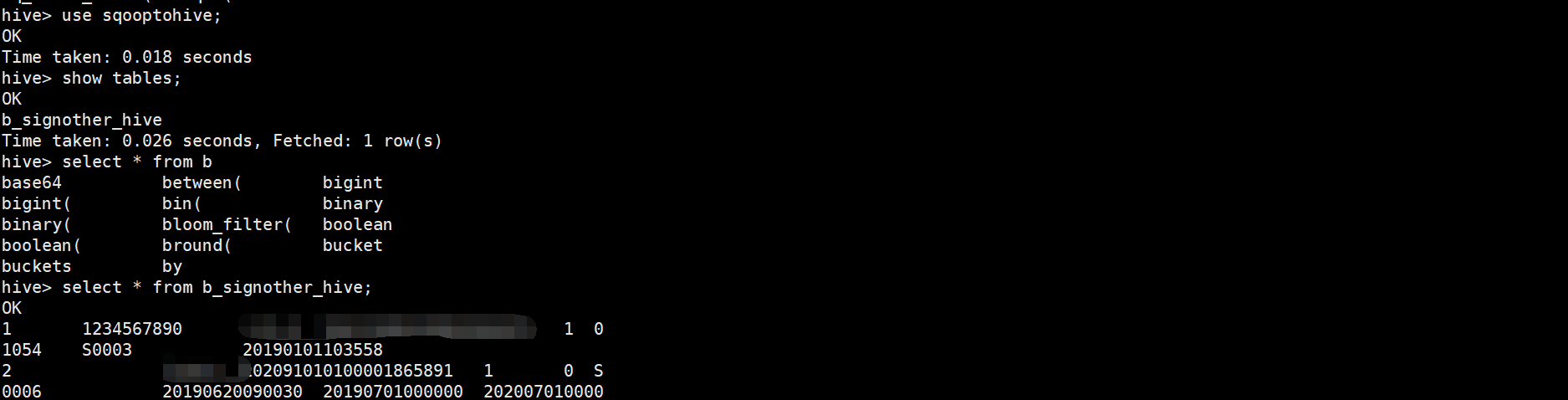

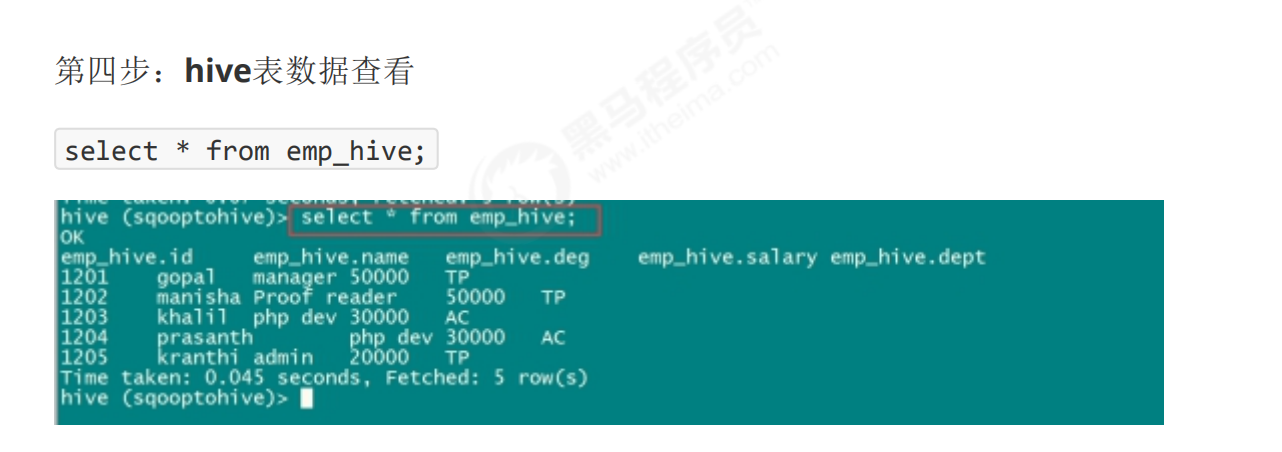

13-sqoop导入-hive

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

CREATE TABLE `b_signother` (

`signOtherId` bigint(20) NOT NULL AUTO_INCREMENT COMMENT '其他签约唯一标识',

`sysCusNum` varchar(32) DEFAULT NULL COMMENT '银行为公积金中心分配的客户编号',

`acctName` varchar(50) DEFAULT NULL COMMENT '户名',

`cardNum` varchar(22) NOT NULL COMMENT '卡号/账号',

`acctType` char(1) DEFAULT NULL COMMENT '账户类型(2-对公 1-对私)',

`cardType` char(1) DEFAULT NULL COMMENT '卡类型(0 存折/借记卡 1 信用卡)',

`aorcFlag` char(1) DEFAULT NULL COMMENT '卡折标志(1折 2卡)',

`opNum` char(6) DEFAULT NULL COMMENT '操作员,公积金有值',

`signType` varchar(10) DEFAULT NULL COMMENT '签约类型\r\n公积金:S0003 房管局:S0004 \r\n电信诈骗:S0005 公安查控:S0006\r\n国安查控:S0007 国监委查控:S0008',

`orgNum` char(6) DEFAULT NULL COMMENT '网点号',

`regTime` varchar(14) DEFAULT NULL COMMENT '签约登记时间YYYYMMDDHHmmss',

`interceptionStartTime` varchar(14) CHARACTER SET utf8mb4 DEFAULT NULL COMMENT '公积金、房管局时该字段无值\r\n电信诈骗、公安查控、国安查控、国监委查控时此字段有值\r\n签约时:该字段代表监控开始时间\r\n解约时:该字段代表为空',

`interceptionEndTime` varchar(14) DEFAULT NULL COMMENT '公积金、房管局时该字段无值\r\n电信诈骗、公安查控、国安查控、国监委查控时此字段有值\r\n签约时:该字段代表监控结束时间\r\n解约时:该字段代表解绑生效时间',

`rwSerialno` varchar(40) DEFAULT NULL COMMENT '电信诈骗、公安查控、国安查控、国监委查控时此字段有值',

`tel` char(11) DEFAULT NULL COMMENT '监控人接收消息手机号',

PRIMARY KEY (`signOtherId`),

KEY `index_signother` (`cardNum`) USING BTREE

) ENGINE=InnoDB AUTO_INCREMENT=21 DEFAULT CHARSET=utf8 COMMENT='公积金、房管局、公安、国安等查控签约表';

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

bin/sqoop import --connect jdbc:mysql://192.168.1.2:3306/testspringcloud --username root --password root --table b_signother --fields-terminated-by '\001' --hive-import --hive-table sqooptohive.b_signother_hive --hive-overwrite --delete-target-dir --m 1;

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

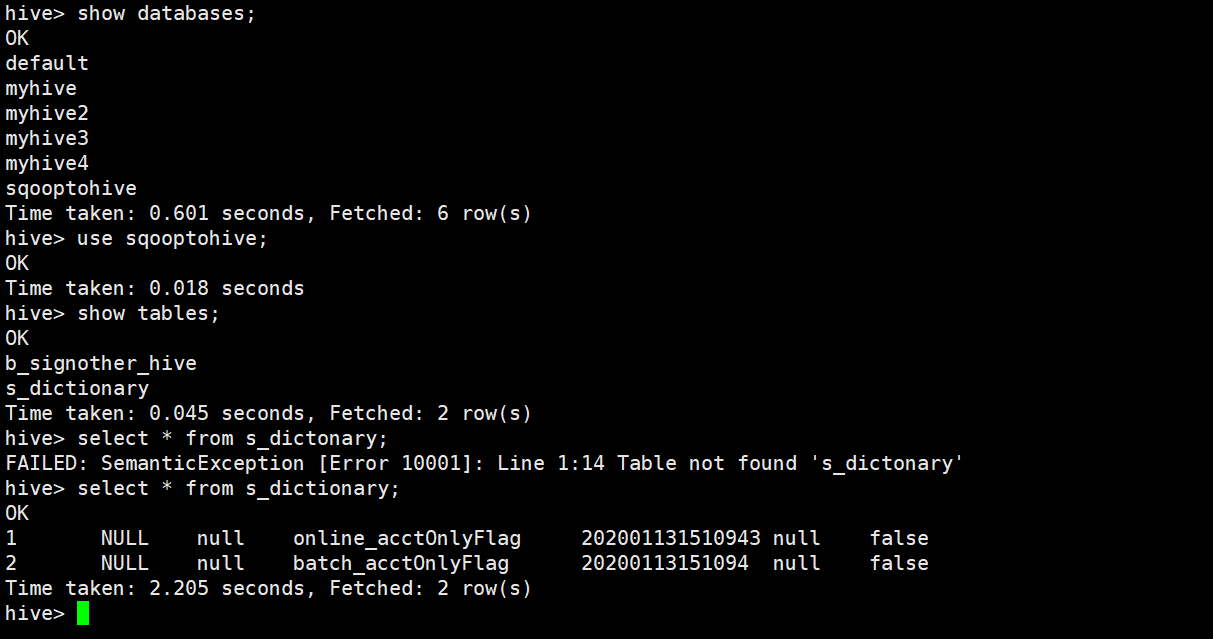

执行上述命令,会自动创建上述的表

bin/sqoop import --connect jdbc:mysql://192.168.1.2:3306/testspringcloud --username root --password root --table s_dictionary --hive-import -m 1 --hive-database sqooptohive;

========================================================================================================================================================

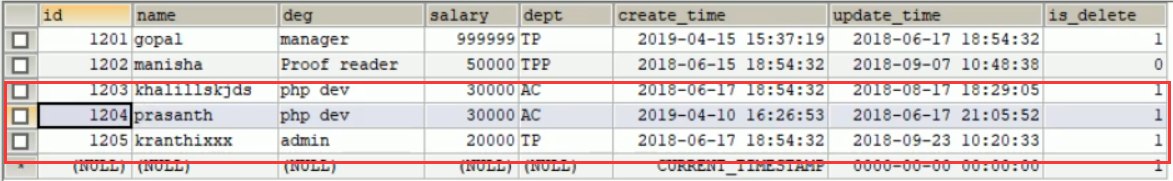

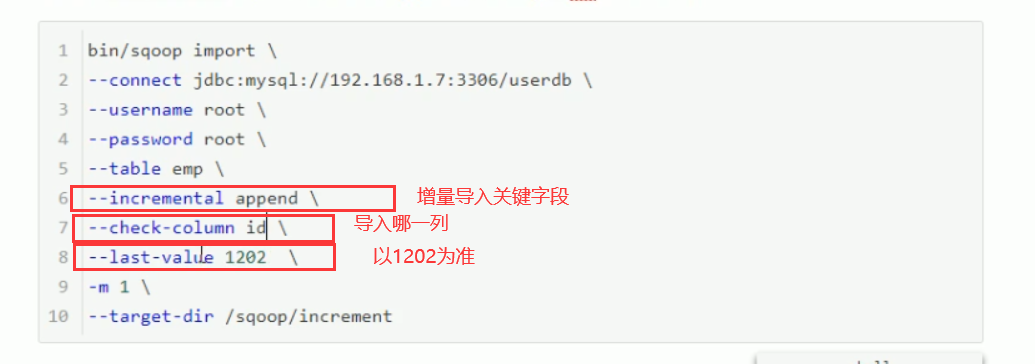

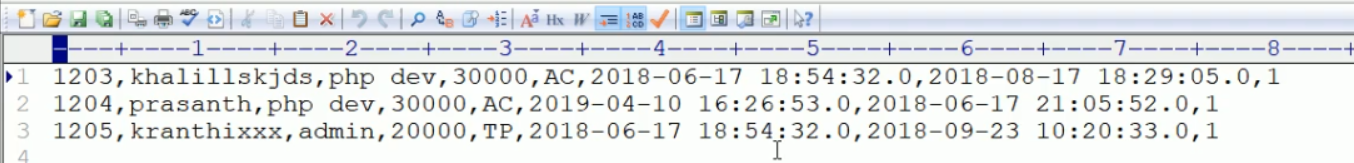

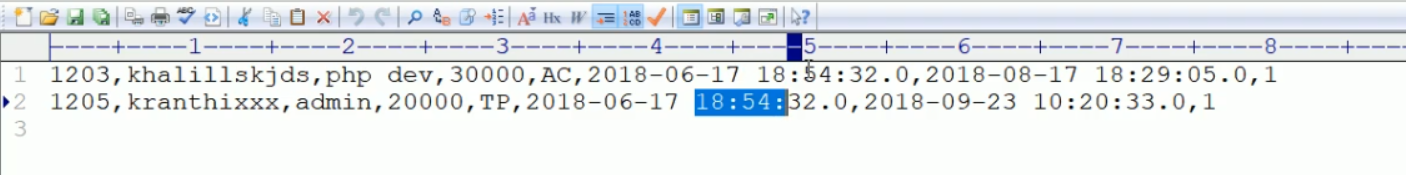

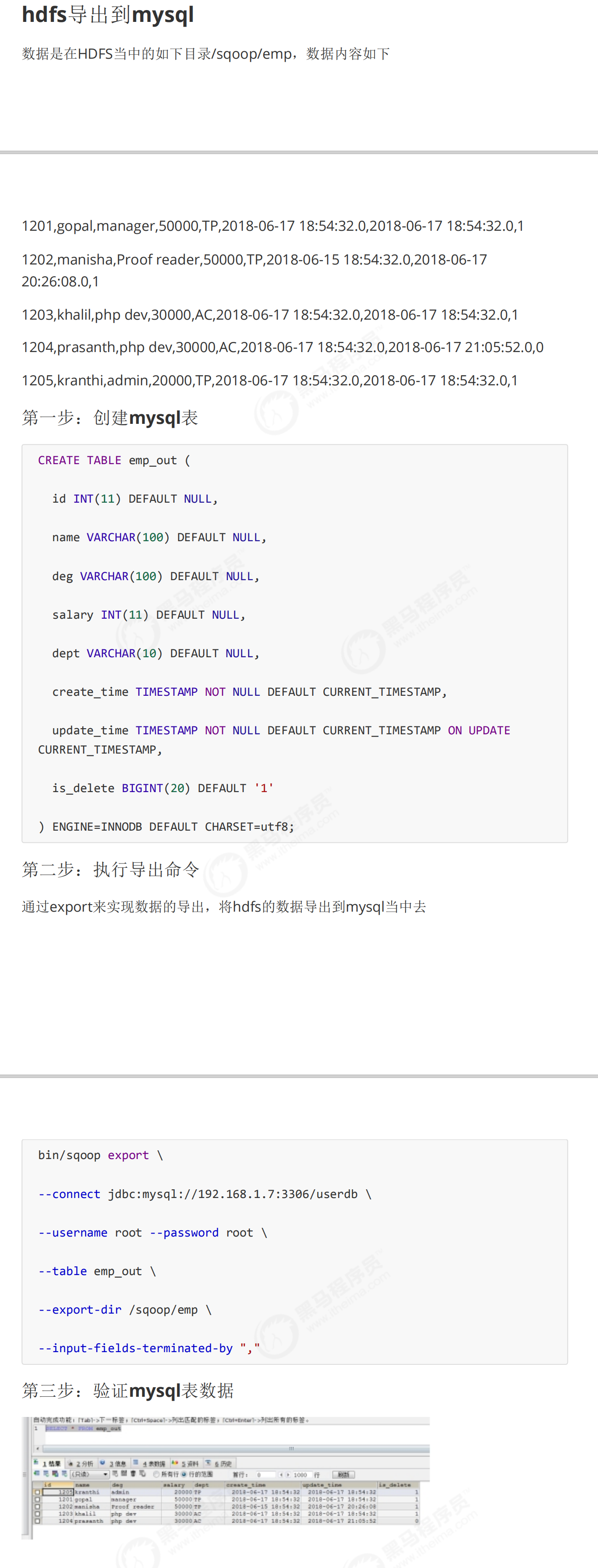

14-sqoop增量导入hdfs和导出

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

=======================================================================================================================================================\

浙公网安备 33010602011771号

浙公网安备 33010602011771号