OpenStack: From Getting Started to In-Depth Study

OpenStack In-Depth Study

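I. Introduction to OpenStack Cloud Computing

(1) Cloud service models

IaaS: Infrastructure as a Service, e.g. cloud hosts (virtual servers);

PaaS: Platform as a Service, e.g. Docker;

SaaS: Software as a Service, e.g. a purchased corporate mailbox service or a CDN;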

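| Layer (traditional IT stack) | IaaS | PaaS | SaaS |
|---|---|---|---|
| 1. Applications | You manage | You manage | Provider manages |
| 2. Data | You manage | You manage | Provider manages |
| 3. Runtime | You manage | Provider manages | Provider manages |
| 4. Middleware | You manage | Provider manages | Provider manages |
| 5. Operating system | Provider manages | Provider manages | Provider manages |
| 6. Virtualization | Provider manages | Provider manages | Provider manages |
| 7. Servers | Provider manages | Provider manages | Provider manages |
| 8. Storage | Provider manages | Provider manages | Provider manages |
| 9. Networking | Provider manages | Provider manages | Provider manages |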

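(2) OpenStack definition and components

OpenStack is an open-source cloud computing management platform project. Through a set of complementary services it provides an Infrastructure-as-a-Service (IaaS) solution, and each service exposes an API for integration.

Each major OpenStack release series is named in alphabetical order (A to Z) and versioned by year plus its sequence within that year. From the first release, Austin (2010.1), to the latest stable release at the time of writing, Rocky (2018.8), there have been 18 major releases; the 19th, Stein, is still under development. Release policy: a new version is published roughly every six months.

Official OpenStack documentation: https://docs.openstack.org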

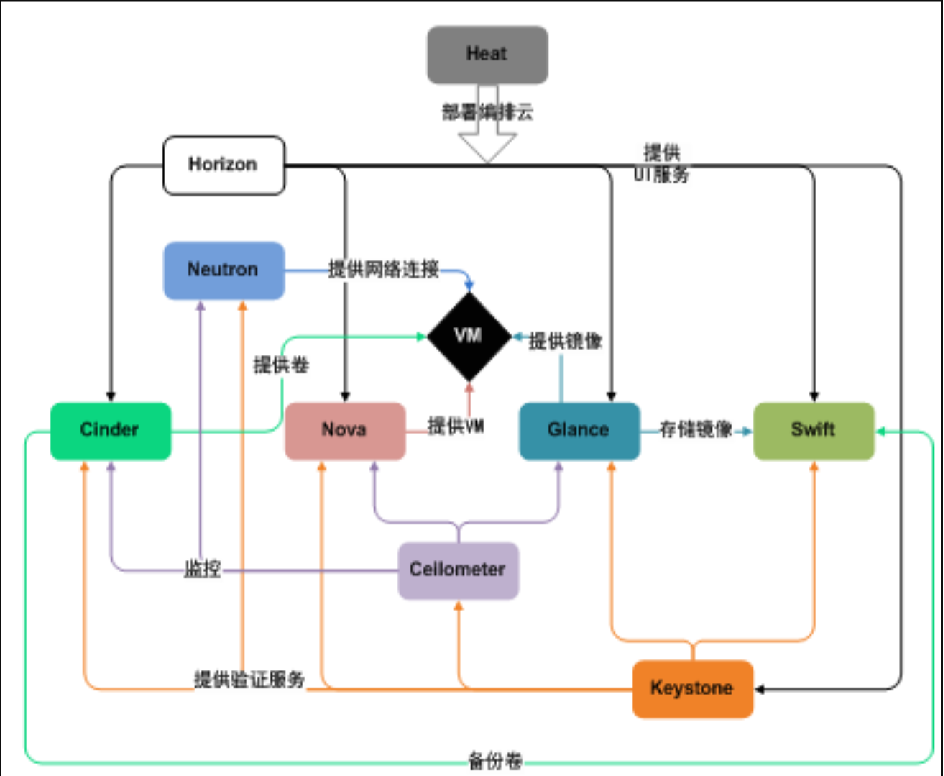

1、OpenStack的架构图:

openstack架构模块中文含义:

horizon:UI界面;Neutron:网络;clinder:硬盘;nova:计算;Glance:镜像;VM:虚拟机;keystone:授权;cellometer:监控;swift:校验;heat:编排

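2. OpenStack core components and their roles:

① Compute: Nova

A set of controllers that manages the whole lifecycle of virtual machine instances for individual users or groups. It handles creating, starting, shutting down, suspending, pausing, resizing, migrating, rebooting, and destroying virtual machines.

② Image service: Glance

A lookup and retrieval system for virtual machine images. It supports multiple image formats (AKI, AMI, ARI, ISO, QCOW2, RAW, VMDK) and can create and upload images, delete images, and edit basic image information.

③ Identity service: Keystone

Provides authentication, service rules, and service tokens to the other OpenStack services, and manages Domains, Projects, Users, Groups, and Roles.

④ Networking and address management: Neutron

Provides network virtualization for the cloud and network connectivity for the other OpenStack services. It gives users an interface for defining Networks, Subnets, and Routers and for configuring DHCP, DNS, load balancing, and L3 services. Supported network types include GRE and VLAN.

⑤ Block storage: Cinder

Provides persistent block storage to running instances. Its pluggable driver architecture simplifies creating and managing block devices, for example creating and deleting volumes and attaching volumes to or detaching them from instances.

⑥ Web UI: Horizon

The web management portal for the OpenStack services. It simplifies user operations such as launching instances, assigning IP addresses, and configuring access control.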

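3. Introduction to SOA architecture

SOA splits the business into separate services so that tens of millions of users can access the site at the same time. Each page is served by its own cluster.

Home page: www.jd.com/index.html (5 servers, nginx+php+mysql)

Flash sale: miaosha.jd.com/index.html (15 servers, nginx+php+mysql)

Coupons: juan.jd.com/index.html (15 servers, nginx+php+mysql)

Membership: plus.jd.com/index.html (15 servers, nginx+php+mysql)

Login: login.jd.com/index.html (15 servers, nginx+php+mysql)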

II. Installing the OpenStack Base Services

(1) Configure the hosts file

On the controller node and the compute nodes:

[root@computer1 /]# cat /etc/hosts

10.0.0.11 controller

10.0.0.31 computer1

10.0.0.32 computer2

[root@computer1 /]#
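Every node needs to resolve controller, computer1, and computer2. As a quick check, the sketch below defines a hypothetical helper, `missing_hosts` (not part of OpenStack or of the original notes), that prints any name absent from a hosts-format file. It assumes entries use the usual `IP name [alias...]` layout.

```shell
# Hypothetical helper: print each given host name that is missing
# from a hosts-format file.
# $1 = hosts file, remaining arguments = names to look for
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Look for the name in any field after the IP address,
        # skipping full-line comments.
        if ! awk -v n="$name" '
            $1 ~ /^#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

Usage on a node: `missing_hosts /etc/hosts controller computer1 computer2` prints nothing when all three names are present.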

(2) Configure the yum repositories

On the controller node and the compute nodes:

[root@computer1 etc]# mount /dev/cdrom /mnt/

[root@computer1 etc]# cat /etc/rc.local

mount /dev/cdrom /mnt/

[root@computer1 etc]# chmod +x /etc/rc.local

Upload the OpenStack RPM archive from the course materials to /opt and extract it:

[root@computer1 opt]# cat /etc/yum.repos.d/local.repo

[local]

name=local

baseurl=file:///mnt

gpgcheck=0

[openstack]

name=openstack

baseurl=file:///opt/repo

gpgcheck=0

[root@controller /]# yum makecache

(3) Install time synchronization (chrony)

Controller node:

[root@controller /]# yum install chrony -y

[root@controller /]# vim /etc/chrony.conf

allow 10.0.0.0/24

Compute nodes:

[root@computer1 /]# yum install chrony -y

[root@computer1 /]# vim /etc/chrony.conf

server 10.0.0.11 iburst

Controller node and compute nodes:

[root@computer1 /]# systemctl restart chronyd.service

(4) Install the OpenStack client and openstack-selinux

On the controller node and the compute nodes:

[root@computer1 /]# yum install python-openstackclient.noarch openstack-selinux.noarch

(5) Install and configure MariaDB

Controller node only:

[root@controller /]# yum install mariadb mariadb-server.x86_64 python2-PyMySQL.noarch

[root@controller /]# cat >> /etc/my.cnf.d/openstack.cnf << EOF

> [mysqld]

> bind-address = 10.0.0.11

> default-storage-engine = innodb

> innodb_file_per_table

> max_connections = 4096

> collation-server = utf8_general_ci

> character-set-server = utf8

> EOF

[root@controller /]#

[root@controller /]# systemctl start mariadb.service

[root@controller /]# systemctl status mariadb.service

[root@controller /]# systemctl enable mariadb

Harden the MySQL (MariaDB) installation:

[root@controller /]# mysql_secure_installation

(6) Install RabbitMQ and create a user

(Controller node only)

[root@controller /]# yum install rabbitmq-server.noarch -y

[root@controller /]# systemctl start rabbitmq-server.service

[root@controller /]# systemctl status rabbitmq-server.service

[root@controller /]# systemctl enable rabbitmq-server.service

[root@controller /]# rabbitmq-plugins enable rabbitmq_management

[root@controller /]# rabbitmqctl add_user openstack RABBIT_PASS

Creating user "openstack" ...

[root@controller /]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...


(7) Install the memcached cache

(Controller node only):

[root@controller /]# yum install memcached.x86_64 python-memcached.noarch -y

[root@controller /]# vim /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS="-l 10.0.0.11,::1"

[root@controller /]# systemctl start memcached.service

[root@controller /]# systemctl enable memcached.service

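III. Installing the OpenStack Identity Service (Keystone)

(1) Keystone concepts

Keystone has three main functions: authentication management, authorization management, and the service catalog.

① Authentication: can also be thought of as account management. All OpenStack users are registered in Keystone.

② Authorization: Glance, Nova, Neutron, Cinder, and the other services all rely on Keystone for account management, much like the many websites that let you log in with a QQ account.

③ Service catalog: every service that is added must be registered in Keystone. Through Keystone, users can find out which services exist and what their URLs are, and can then access those services directly.

(2) Configuring the Keystone identity service

1. Create the database and grant privileges

Format of the database grant statement:

grant <privileges> on <database object> to <user>

grant <privileges> on <database object> to <user> identified by '<password>'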

[root@controller ~]# mysql

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

-> IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

-> IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]>
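The same create-and-grant pattern recurs for every service database in these notes (keystone, glance, nova, neutron). As a minimal sketch, the hypothetical helper `service_db_sql` below only prints the statements so they can be reviewed first; the function name and argument order are assumptions for illustration, not part of any OpenStack tool.

```shell
# Hypothetical helper: print the CREATE DATABASE and GRANT statements
# for one service database. Nothing is executed.
# $1 = database name, $2 = database user, $3 = password
service_db_sql() {
    db=$1 user=$2 pass=$3
    printf 'CREATE DATABASE %s;\n' "$db"
    # One grant for local connections, one for connections from any host.
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}
```

After reviewing the output, it could be piped into the client on the controller, for example `service_db_sql glance glance GLANCE_DBPASS | mysql`.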

2. Install the Keystone packages

For PHP: nginx + fastcgi ---> php  # the fastcgi module lets nginx talk to PHP.

For Python: httpd + wsgi ---> python  # the wsgi module lets httpd talk to Python.

[root@controller ~]# yum install openstack-keystone httpd mod_wsgi -y

3. Edit the configuration file

[root@controller ~]# \cp /etc/keystone/keystone.conf{,.bak}

[root@controller ~]# grep -Ev '^$|#' /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf

[root@controller ~]# vim /etc/keystone/keystone.conf

Method 1: open the configuration file and add the settings by hand

Define the value of the initial admin token:

[DEFAULT]

admin_token = ADMIN_TOKEN

Configure database access:

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

Configure the Fernet token provider (it issues temporary random keys, i.e. tokens):

[token]

provider = fernet

Method 2: set the same values with openstack-config

[root@controller keystone]# yum install openstack-utils -y

openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token ADMIN_TOKEN

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet

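4. Sync the database

su -s /bin/sh -c "keystone-manage db_sync" keystone  # switch to the keystone user and run the command with /bin/sh

-s: the shell (interpreter) to use

-c: the command to run

keystone: the system user the command runs as (not the database user)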

[root@controller keystone]# su -s /bin/sh -c "keystone-manage db_sync" keystone

Check the tables: verify that the sync actually created them

[root@controller keystone]# mysql keystone -e "show tables"

View the sync log:

[root@controller keystone]# vim /var/log/keystone/keystone.log

5. Initialize Fernet

[root@controller keystone]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller keystone]# ll /etc/keystone/

drwx------ 2 keystone keystone 24 Jan 4 22:32 fernet-keys (the key directory created by the initialization)

6. Configure httpd (Apache)

Speed up startup:

[root@controller keystone]# echo "ServerName controller" >>/etc/httpd/conf/httpd.conf

The following file does not exist by default:

[root@controller keystone]# vim /etc/httpd/conf.d/wsgi-keystone.conf

# Listen on two ports

Listen 5000

Listen 35357

# Configure two virtual hosts:

<VirtualHost *:5000>

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

<VirtualHost *:35357>

WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-admin

WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

[root@controller keystone]#

Verify:

[root@controller keystone]# md5sum /etc/httpd/conf.d/wsgi-keystone.conf

8f051eb53577f67356ed03e4550315c2 /etc/httpd/conf.d/wsgi-keystone.conf

7. Start httpd

[root@controller keystone]# systemctl start httpd.service

[root@controller keystone]# systemctl enable httpd.service

netstat -lntp  # check that ports 5000 and 35357 are listening
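Reading the netstat listing by eye is easy to get wrong. As a sketch, the hypothetical helper `ports_not_listening` below reads netstat output on standard input and prints the requested ports that have no listening socket. It assumes the default `netstat -lnt` column layout (local address in column 4, state in column 6).

```shell
# Hypothetical helper: read "netstat -lnt" output on stdin and print every
# requested port that has no socket in the LISTEN state.
ports_not_listening() {
    listing=$(cat)
    for port in "$@"; do
        if ! printf '%s\n' "$listing" |
            awk -v p="$port" '
                $6 == "LISTEN" && $4 ~ (":" p "$") { found = 1 }
                END { exit found ? 0 : 1 }'; then
            echo "$port"
        fi
    done
}
```

Usage: `netstat -lntp | ports_not_listening 5000 35357` prints nothing when both Keystone ports are open.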

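8. Create the service and register the API endpoints

Keystone is not fully set up at this point, so it cannot yet register services and API endpoints for itself or for other services. Use the admin token for this initial registration.

Declare the environment variables: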

[root@controller ~]# export OS_TOKEN=ADMIN_TOKEN

[root@controller ~]# export OS_URL=http://controller:35357/v3

[root@controller ~]# export OS_IDENTITY_API_VERSION=3

env|grep OS

Create the identity service:

[root@controller ~]# openstack service create \

> --name keystone --description "OpenStack Identity" identity

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Identity |

| enabled | True |

| id | b251b397df344ed58b77879709a82340 |

| name | keystone |

| type | identity |

+-------------+----------------------------------+

Register the API endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne \

> identity public http://controller:5000/v3 # URL for public use

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 034a286a309c4d998c2918cb9ad6f161 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b251b397df344ed58b77879709a82340 |

| service_name | keystone |

| service_type | identity |

| url | http://controller:5000/v3 |

+--------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> identity internal http://controller:5000/v3 # URL used by internal components

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | dedefe5fe8424132b9ced6c0ead9291c |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b251b397df344ed58b77879709a82340 |

| service_name | keystone |

| service_type | identity |

| url | http://controller:5000/v3 |

+--------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> identity admin http://controller:35357/v3 # URL used by administrators

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 64af2fb03db945d79d77e3c4b67b75ab |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | b251b397df344ed58b77879709a82340 |

| service_name | keystone |

| service_type | identity |

| url | http://controller:35357/v3 |

+--------------+----------------------------------+

[root@controller ~]#
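Each service is registered with three endpoints (public, internal, admin) that differ only in the interface name and, for Keystone, the admin URL. The sketch below prints the three commands for review rather than running them; `endpoint_cmds` is a hypothetical helper, and RegionOne is taken from the commands above.

```shell
# Hypothetical helper: print the three "openstack endpoint create" commands
# for one service. Nothing is executed.
# $1 = service type, $2 = public/internal URL, $3 = admin URL (defaults to $2)
endpoint_cmds() {
    svc=$1 url=$2 admin_url=${3:-$2}
    for iface in public internal admin; do
        if [ "$iface" = admin ]; then target=$admin_url; else target=$url; fi
        printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
            "$svc" "$iface" "$target"
    done
}
```

Usage: `endpoint_cmds identity http://controller:5000/v3 http://controller:35357/v3`. URLs that contain shell metacharacters, such as the parentheses in the Nova URL, would still need quoting before the printed lines are run.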

9. Create the domain, project, user, and role

[root@controller ~]# openstack domain create --description "Default Domain" default

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Default Domain |

| enabled | True |

| id | 30c30c794d4a4e92ae4474320e75bf47 |

| name | default |

+-------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack project create --domain default \

> --description "Admin Project" admin

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Admin Project |

| domain_id | 30c30c794d4a4e92ae4474320e75bf47 |

| enabled | True |

| id | 17b0da567cc341c7b33205572bd0470b |

| is_domain | False |

| name | admin |

| parent_id | 30c30c794d4a4e92ae4474320e75bf47 |

+-------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack user create --domain default \

> --password ADMIN_PASS admin # --password sets the user's password on the command line, which differs from the official guide

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | 30c30c794d4a4e92ae4474320e75bf47 |

| enabled | True |

| id | a7b53c25b6c94a78a6efe00bc9150c33 |

| name | admin |

+-----------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack role create admin

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 043b3090d03f436eab223f9f1cedf815 |

| name | admin |

+-----------+----------------------------------+

# Associate the project, user, and role

[root@controller ~]# openstack role add --project admin --user admin admin

# Grants the admin role to the admin user on the admin project

[root@controller ~]# openstack project create --domain default \

> --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | 30c30c794d4a4e92ae4474320e75bf47 |

| enabled | True |

| id | 317c63946e484b518dc0d99774ff6772 |

| is_domain | False |

| name | service |

| parent_id | 30c30c794d4a4e92ae4474320e75bf47 |

+-------------+----------------------------------+

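10. Test authentication

[root@controller ~]# unset OS_TOKEN OS_URL # unset the environment variables

# Without environment variables, pass the credentials on the command line: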

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 \

> --os-project-domain-name default --os-user-domain-name default \

> --os-project-name admin --os-username admin --os-password ADMIN_PASS token issue

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 \

> --os-project-domain-name default --os-user-domain-name default \

> --os-project-name admin --os-username admin --os-password ADMIN_PASS user list

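(3) Create an environment variable script

[root@controller ~]# vim admin-openrc # the variables here are uppercase; the matching command-line options are lowercase and have the same effect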

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

[root@controller ~]# source admin-openrc

[root@controller ~]# openstack user list

[root@controller ~]# openstack token issue # check that a token can be issued
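Later steps assume that admin-openrc has been sourced in the current shell. As a sketch, the hypothetical helper `missing_openrc_vars` below lists any of the identity-related variables from that script that are unset or empty. The variable list mirrors the file above and omits OS_IMAGE_API_VERSION, which authentication does not need.

```shell
# Hypothetical helper: print the name of every required OS_* variable
# that is unset or empty in the current shell.
missing_openrc_vars() {
    for var in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
               OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        # Indirect lookup of the variable named in $var.
        eval "value=\${$var:-}"
        [ -n "$value" ] || echo "$var"
    done
}
```

Usage: run `source admin-openrc` and then `missing_openrc_vars`; empty output means all of the variables are set.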

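IV. Image Service (Glance)

(1) Introduction to the image service

1. Concepts

The image service (glance) lets users discover, upload, and download virtual machine images.

Component: glance-api

Accepts Image API calls for image discovery, retrieval, and storage.

Component: glance-registry

Stores, processes, and retrieves image metadata, such as size and type.

(2) Image service configuration

1. General OpenStack service setup steps

a: Create the database and grant privileges

b: Create the service user in Keystone and assign it a role

c: Create the service in Keystone and register its API endpoints

d: Install the service packages

e: Edit the service configuration files

f: Sync the database

g: Start the service

2. Image service configuration steps

① Create the database and grant privileges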

[root@controller ~]# mysql

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

-> IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

-> IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.00 sec)

② Create the glance user in Keystone and assign it a role

[root@controller ~]# openstack user create --domain default --password GLANCE_PASS glance

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | 30c30c794d4a4e92ae4474320e75bf47 |

| enabled | True |

| id | dc68fd42c718411085a1cbc1379a662e |

| name | glance |

+-----------+----------------------------------+

[root@controller ~]# openstack role add --project service --user glance admin

③ Create the service in Keystone and register the API endpoints

[root@controller ~]# openstack service create --name glance \

> --description "OpenStack Image" image


+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | 7f258ec0b235433188c5664c9e710d7c |

| name | glance |

| type | image |

+-------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2b8484d91ec94bd8a5aafd56ea7a1cfe |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 7f258ec0b235433188c5664c9e710d7c |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | aec16a57566a4bccae96f9c63885c0b5 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 7f258ec0b235433188c5664c9e710d7c |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | ceba791635b341d79c1c47182c22c4df |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 7f258ec0b235433188c5664c9e710d7c |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]#

④ Install the service packages

[root@controller ~]# yum install openstack-glance -y

⑤ Edit the service configuration files

[root@controller ~]# cp /etc/glance/glance-api.conf{,.bak}

[root@controller ~]# grep '^[a-Z\[]' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf
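The filter above keeps only lines that start with a letter or `[`, which drops comments and blank lines from the packaged file. The `a-Z` range depends on the locale's collation order, and GNU grep rejects it as an invalid range in the C locale. A locale-independent variant is sketched below; `strip_conf` is a hypothetical helper name, and unlike the original filter it also keeps setting lines that begin with a digit or other character.

```shell
# Hypothetical helper: print a config file without blank lines and
# full-line comments, independent of the current locale.
# $1 = config file to read
strip_conf() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

Usage: `strip_conf /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf`.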

Configure the API configuration file:

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

Configure the registry configuration file:

cp /etc/glance/glance-registry.conf{,.bak}

grep '^[a-Z\[]' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone
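The [keystone_authtoken] settings are identical for every service in these notes apart from the configuration file, user name, and password. The sketch below prints that block of openstack-config commands for review; `authtoken_cmds` is a hypothetical helper, and the fixed values are copied from the commands above.

```shell
# Hypothetical helper: print the openstack-config commands for the
# [keystone_authtoken] section of one service. Nothing is executed.
# $1 = config file, $2 = service user, $3 = service password
authtoken_cmds() {
    conf=$1 user=$2 pass=$3
    while read -r key value; do
        printf 'openstack-config --set %s keystone_authtoken %s %s\n' \
            "$conf" "$key" "$value"
    done <<EOF
auth_uri http://controller:5000
auth_url http://controller:35357
memcached_servers controller:11211
auth_type password
project_domain_name default
user_domain_name default
project_name service
username $user
password $pass
EOF
}
```

Usage: `authtoken_cmds /etc/glance/glance-registry.conf glance GLANCE_PASS` prints nine commands that can be checked before they are run.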

⑥ Sync the database

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

[root@controller ~]# mysql glance -e "show tables"

⑦ Start the services

[root@controller ~]# systemctl start openstack-glance-registry.service openstack-glance-api.service

[root@controller ~]# systemctl enable openstack-glance-registry.service openstack-glance-api.service

[root@controller ~]# systemctl status openstack-glance-registry.service openstack-glance-api.service

⑧ Verify

Upload the image (cirros-0.3.4-x86_64-disk.img) to the root directory.

Verify the image upload:

openstack image create "cirros" \

--file cirros-0.3.4-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

Note: the container format option can also be used to describe a container image.

Confirm that the image was uploaded:

[root@controller images]# pwd

/var/lib/glance/images

[root@controller images]# ll

total 12980

-rw-r----- 1 glance glance 13287936 Jan 4 23:29 456d7600-3bd1-4fb5-aa84-144a61c0eb07

[root@controller images]#

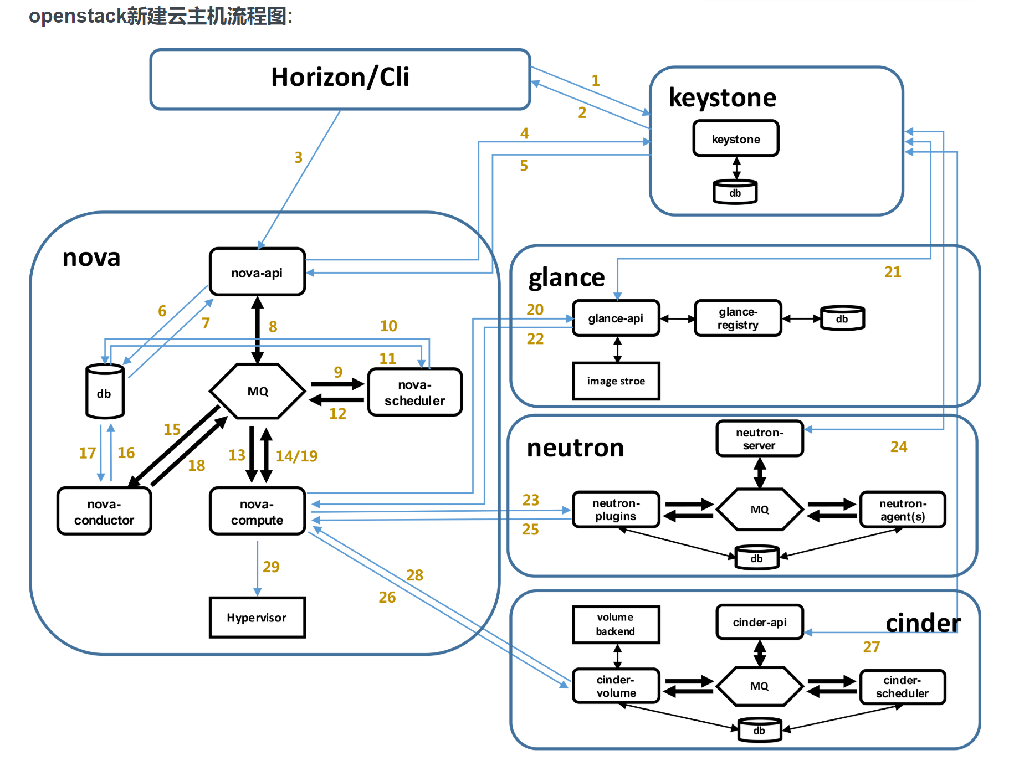

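V. Compute Service (Nova)

(1) Introduction to Nova

Nova is the core service of an OpenStack cloud. Its commonly used components are:

nova-api: accepts and responds to all compute service requests and manages the virtual machine (cloud host) lifecycle

nova-compute (multiple instances): does the actual work of managing the virtual machine lifecycle

nova-scheduler: the Nova scheduler (picks the most suitable nova-compute host on which to create a virtual machine)

nova-conductor: updates virtual machine state in the database on behalf of nova-compute

nova-network: managed virtual machine networking in early OpenStack releases (deprecated, replaced by Neutron)

nova-consoleauth: issues access tokens for the web-based VNC console

nova-novncproxy: the web-based VNC client

nova-api-metadata: accepts metadata requests sent from virtual machines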

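(2) Nova configuration

1. General OpenStack service setup steps

a: Create the database and grant privileges

b: Create the service user in Keystone and assign it a role

c: Create the service in Keystone and register its API endpoints

d: Install the service packages

e: Edit the service configuration files

f: Sync the database

g: Start the service

2. Nova controller node configuration steps

① Create the databases and grant privileges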

[root@controller ~]# mysql

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

② Create the system user in Keystone (one each for glance, nova, and neutron) and assign it a role

openstack user create --domain default \

--password NOVA_PASS nova

openstack role add --project service --user nova admin

③ Create the service in Keystone and register the API endpoints

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s

④ Install the service packages

yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler -y

⑤ Edit the service configuration files

cp /etc/nova/nova.conf{,.bak}

grep '^[a-Z\[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.11

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

Verify:

[root@controller ~]# md5sum /etc/nova/nova.conf

47ded61fdd1a79ab91bdb37ce59ef192 /etc/nova/nova.conf

⑥ Sync the databases

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

⑦ Start the services

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

Check:

[root@controller ~]# openstack compute service list

nova service-list

glance image-list

openstack image list

openstack compute service list

3. Nova compute node configuration steps

① nova-compute calls libvirtd to create virtual machines

Install the required packages:

yum install openstack-nova-compute -y

yum install openstack-utils.noarch -y

② Configure

[root@computer1 ~]# cp /etc/nova/nova.conf{,.bak}

[root@computer1 ~]# grep '^[a-Z\[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.31

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

Verify:

[root@computer1 ~]# md5sum /etc/nova/nova.conf

45cab6030a9ab82761e9f697d6d79e14 /etc/nova/nova.conf

③ Start the services

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

④ Verify (the environment variables from admin-openrc must be loaded)

On the controller node:

[root@controller ~]# openstack compute service list

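VI. Networking Service (Neutron)

(1) Concepts

OpenStack Networking (neutron) lets you create network interface devices that are managed by other OpenStack services and attach them to networks. Its plug-in architecture can accommodate different networking equipment and software, which gives flexibility to OpenStack architecture and deployment.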

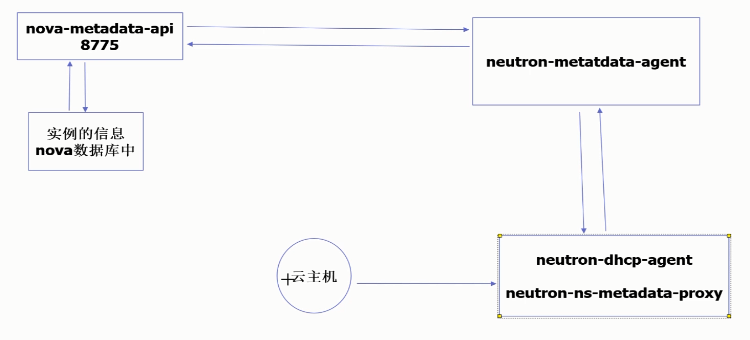

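Commonly used components:

neutron-server: accepts and responds to external network management requests

neutron-linuxbridge-agent: creates the bridge interfaces

neutron-dhcp-agent: assigns IP addresses

neutron-metadata-agent: works with nova-metadata-api to customize virtual machines

L3-agent: implements layer-3 (network layer) networking, e.g. VXLAN

(2) Neutron component configuration

1. Controller node configuration

① Create the database and grant privileges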

[root@controller ~]# mysql

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

② Create the system user in Keystone (one each for glance, nova, and neutron) and assign it a role

openstack user create --domain default --password NEUTRON_PASS neutron

openstack role add --project service --user neutron admin

③ Create the service in Keystone and register the API endpoints

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

④ Install the service packages

yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables -y

⑤ Edit the service configuration files

File: /etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-Z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

Verify:

[root@controller ~]# md5sum /etc/neutron/neutron.conf

e399b7958cd22f47becc6d8fd6d3521a /etc/neutron/neutron.conf
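The backup-then-filter step used for each configuration file can be tried out safely on a throwaway file first. The sketch below is only an illustration: the file names and sample contents are made up, and it uses a POSIX character class instead of the `a-Z` bracket range, because that range is locale-dependent and GNU grep may reject it as invalid in some locales (for example under LC_ALL=C).

```shell
# Work on a throwaway copy so that no real configuration is touched.
workdir=$(mktemp -d)
cat > "$workdir/demo.conf.bak" <<'EOF'
# This is a comment line

[DEFAULT]
#core_plugin = <None>
core_plugin = ml2

[database]
EOF

# Keep only section headers and active options:
# drop blank lines and lines whose first non-blank character is '#'.
grep -Ev '^[[:space:]]*(#|$)' "$workdir/demo.conf.bak" > "$workdir/demo.conf"

cat "$workdir/demo.conf"
```

Only the section headers and the active `core_plugin` line survive, which is the state the `openstack-config --set` commands then build on.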

File: /etc/neutron/plugins/ml2/ml2_conf.ini

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep '^[a-Z\[]' /etc/neutron/plugins/ml2/ml2_conf.ini.bak >/etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan

# tenant_network_types is deliberately left empty (self-service networks are not used)

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset True

Verify:

[root@controller ~]# md5sum /etc/neutron/plugins/ml2/ml2_conf.ini

2640b5de519fafcd675b30e1bcd3c7d5 /etc/neutron/plugins/ml2/ml2_conf.ini
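Instead of comparing checksums by eye, `md5sum -c` can do the comparison. The following is a minimal sketch on a temporary sample file (the file name and contents are assumptions for the demo, not one of the real configuration files): a checksum is recorded once and verified later.

```shell
workdir=$(mktemp -d)
printf '[ml2]\ntype_drivers = flat,vlan\n' > "$workdir/sample.ini"

# Record the checksum once (for example on a node known to be correct) ...
( cd "$workdir" && md5sum sample.ini > sample.ini.md5 )

# ... and later let md5sum compare, instead of reading hashes by eye.
if ( cd "$workdir" && md5sum -c --quiet sample.ini.md5 ); then
    result="match"
else
    result="MISMATCH"
fi
echo "sample.ini: $result"
```

The same pattern would apply to the real files, provided the checksum file is produced from a configuration that is known to be good.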

File: /etc/neutron/plugins/ml2/linuxbridge_agent.ini

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False

Verify:

[root@controller ~]# md5sum /etc/neutron/plugins/ml2/linuxbridge_agent.ini

3f474907a7f438b34563e4d3f3c29538 /etc/neutron/plugins/ml2/linuxbridge_agent.ini

File: /etc/neutron/dhcp_agent.ini

cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak >/etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.BridgeInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata True

Verify:

[root@controller ~]# md5sum /etc/neutron/dhcp_agent.ini

d39579607b2f7d92e88f8910f9213520 /etc/neutron/dhcp_agent.ini

File: /etc/neutron/metadata_agent.ini

cp /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak >/etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

Verify:

[root@controller ~]# md5sum /etc/neutron/metadata_agent.ini

e1166b0dfcbcf4507d50860d124335d6 /etc/neutron/metadata_agent.ini

File: /etc/nova/nova.conf (edited again, this time to add the neutron settings)

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy True

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET

Verify:

[root@controller ~]# md5sum /etc/nova/nova.conf

6334f359655efdbcf083b812ab94efc1 /etc/nova/nova.conf

⑥ Sync the database

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

⑦ Start the services

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service
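After enabling and starting the units it can help to confirm that each one is actually running. The helper below is a hypothetical convenience function (`check_units` is not part of OpenStack or systemd); it only wraps `systemctl is-active --quiet` in a loop.

```shell
# Print one status line per unit; return non-zero if any unit is not active.
check_units() {
    rc=0
    for unit in "$@"; do
        if systemctl is-active --quiet "$unit"; then
            echo "$unit: active"
        else
            echo "$unit: NOT active"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on the controller (unit names taken from the commands above):
# check_units neutron-server.service neutron-linuxbridge-agent.service \
#     neutron-dhcp-agent.service neutron-metadata-agent.service
```

A unit reported as NOT active is usually best investigated with `systemctl status` and the service log before continuing.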

2. Compute node configuration

① Install the required packages

yum install openstack-neutron-linuxbridge ebtables ipset -y

② Configure

File: /etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-Z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

Verify:

[root@computer1 ~]# md5sum /etc/neutron/neutron.conf

77ffab503797be5063c06e8b956d6ed0 /etc/neutron/neutron.conf

File: /etc/neutron/plugins/ml2/linuxbridge_agent.ini

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False

Verify:

[root@computer1 ~]# md5sum /etc/neutron/plugins/ml2/linuxbridge_agent.ini

3f474907a7f438b34563e4d3f3c29538 /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[root@computer1 ~]#

File: /etc/nova/nova.conf (edited again, this time to add the neutron settings)

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

Verify:

[root@computer1 ~]# md5sum /etc/nova/nova.conf

328cd5f0745e26a420e828b0dfc2934e /etc/nova/nova.conf

③ Start the components

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

Then check on the controller node that the new agent is listed:

[root@controller ~]# neutron agent-list

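VII. Dashboard service (horizon)

(I) Overview

The Dashboard (horizon) is a web interface through which cloud administrators and users manage OpenStack resources and services. It is built on the Python Django framework, has no database of its own, and renders its pages entirely by calling the APIs of the other services. In this guide the dashboard is installed on a compute node (the official documentation installs it on the controller node).

(II) Installation and configuration

① Install the required packages

yum install openstack-dashboard python-memcached -y

② Configure

Import the prepared configuration file (local_settings) from the OpenStack materials package:

[root@computer1 ~]# cat local_settings >/etc/openstack-dashboard/local_settings

The active settings in that file (comments and blank lines filtered out):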

[root@computer1 ~]# grep -Ev '^$|#' local_settings

import os

from django.utils.translation import ugettext_lazy as _

from openstack_dashboard import exceptions

from openstack_dashboard.settings import HORIZON_CONFIG

DEBUG = False

TEMPLATE_DEBUG = DEBUG

WEBROOT = '/dashboard/'

ALLOWED_HOSTS = ['*', ]

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

"compute": 2,

}

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'default'

LOCAL_PATH = '/tmp'

SECRET_KEY='65941f1393ea1c265ad7'

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

},

}

EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'

OPENSTACK_HOST = "controller"

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_KEYSTONE_BACKEND = {

'name': 'native',

'can_edit_user': True,

'can_edit_group': True,

'can_edit_project': True,

'can_edit_domain': True,

'can_edit_role': True,

}

OPENSTACK_HYPERVISOR_FEATURES = {

'can_set_mount_point': False,

'can_set_password': False,

'requires_keypair': False,

}

OPENSTACK_CINDER_FEATURES = {

'enable_backup': False,

}

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': False,

'enable_quotas': False,

'enable_ipv6': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

'default_ipv4_subnet_pool_label': None,

'default_ipv6_subnet_pool_label': None,

'profile_support': None,

'supported_provider_types': ['*'],

'supported_vnic_types': ['*'],

}

OPENSTACK_HEAT_STACK = {

'enable_user_pass': True,

}

IMAGE_CUSTOM_PROPERTY_TITLES = {

"architecture": _("Architecture"),

"kernel_id": _("Kernel ID"),

"ramdisk_id": _("Ramdisk ID"),

"image_state": _("Euca2ools state"),

"project_id": _("Project ID"),

"image_type": _("Image Type"),

}

IMAGE_RESERVED_CUSTOM_PROPERTIES = []

API_RESULT_LIMIT = 1000

API_RESULT_PAGE_SIZE = 20

SWIFT_FILE_TRANSFER_CHUNK_SIZE = 512 * 1024

DROPDOWN_MAX_ITEMS = 30

TIME_ZONE = "Asia/Shanghai"

POLICY_FILES_PATH = '/etc/openstack-dashboard'

LOGGING = {

'version': 1,

'disable_existing_loggers': False,

'handlers': {

'null': {

'level': 'DEBUG',

'class': 'logging.NullHandler',

},

'console': {

'level': 'INFO',

'class': 'logging.StreamHandler',

},

},

'loggers': {

'django.db.backends': {

'handlers': ['null'],

'propagate': False,

},

'requests': {

'handlers': ['null'],

'propagate': False,

},

'horizon': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'openstack_dashboard': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'novaclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'cinderclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'keystoneclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'glanceclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'neutronclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'heatclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'ceilometerclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'swiftclient': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'openstack_auth': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'nose.plugins.manager': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'django': {

'handlers': ['console'],

'level': 'DEBUG',

'propagate': False,

},

'iso8601': {

'handlers': ['null'],

'propagate': False,

},

'scss': {

'handlers': ['null'],

'propagate': False,

},

},

}

SECURITY_GROUP_RULES = {

'all_tcp': {

'name': _('All TCP'),

'ip_protocol': 'tcp',

'from_port': '1',

'to_port': '65535',

},

'all_udp': {

'name': _('All UDP'),

'ip_protocol': 'udp',

'from_port': '1',

'to_port': '65535',

},

'all_icmp': {

'name': _('All ICMP'),

'ip_protocol': 'icmp',

'from_port': '-1',

'to_port': '-1',

},

'ssh': {

'name': 'SSH',

'ip_protocol': 'tcp',

'from_port': '22',

'to_port': '22',

},

'smtp': {

'name': 'SMTP',

'ip_protocol': 'tcp',

'from_port': '25',

'to_port': '25',

},

'dns': {

'name': 'DNS',

'ip_protocol': 'tcp',

'from_port': '53',

'to_port': '53',

},

'http': {

'name': 'HTTP',

'ip_protocol': 'tcp',

'from_port': '80',

'to_port': '80',

},

'pop3': {

'name': 'POP3',

'ip_protocol': 'tcp',

'from_port': '110',

'to_port': '110',

},

'imap': {

'name': 'IMAP',

'ip_protocol': 'tcp',

'from_port': '143',

'to_port': '143',

},

'ldap': {

'name': 'LDAP',

'ip_protocol': 'tcp',

'from_port': '389',

'to_port': '389',

},

'https': {

'name': 'HTTPS',

'ip_protocol': 'tcp',

'from_port': '443',

'to_port': '443',

},

'smtps': {

'name': 'SMTPS',

'ip_protocol': 'tcp',

'from_port': '465',

'to_port': '465',

},

'imaps': {

'name': 'IMAPS',

'ip_protocol': 'tcp',

'from_port': '993',

'to_port': '993',

},

'pop3s': {

'name': 'POP3S',

'ip_protocol': 'tcp',

'from_port': '995',

'to_port': '995',

},

'ms_sql': {

'name': 'MS SQL',

'ip_protocol': 'tcp',

'from_port': '1433',

'to_port': '1433',

},

'mysql': {

'name': 'MYSQL',

'ip_protocol': 'tcp',

'from_port': '3306',

'to_port': '3306',

},

'rdp': {

'name': 'RDP',

'ip_protocol': 'tcp',

'from_port': '3389',

'to_port': '3389',

},

}

REST_API_REQUIRED_SETTINGS = ['OPENSTACK_HYPERVISOR_FEATURES',

'LAUNCH_INSTANCE_DEFAULTS']

③ Start the service

[root@computer1 ~]# systemctl start httpd.service

④ Open http://10.0.0.31/dashboard in a browser.

⑤ If an Internal Server Error appears

Fix:

[root@computer1 ~]# vim /etc/httpd/conf.d/openstack-dashboard.conf

Add the following on the line after WSGISocketPrefix run/wsgi:

WSGIApplicationGroup %{GLOBAL}

[root@computer1 ~]# systemctl restart httpd.service

⑥ Log in to the dashboard

Domain: default

User name: admin

Password: ADMIN_PASS

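VIII. Launch an instance

(I) Steps for launching an instance

Launching an instance for the first time requires these steps:

1: Create the OpenStack network

2: Create a flavor (the hardware profile of the instance)

3: Create a key pair (passwordless login from the controller node)

4: Create security group rules

5: Launch an instance (from the command line or from the web page)

(II) Create an instance from the command line

① Create the network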

neutron net-create --shared --provider:physical_network provider \

--provider:network_type flat oldboy

# The physical_network name "provider" must match the flat_networks value in ml2_conf.ini:

#[root@controller ~]# cat /etc/neutron/plugins/ml2/ml2_conf.ini | grep flat_networks

#flat_networks = provider

Create the subnet:

neutron subnet-create --name oldgirl \

--allocation-pool start=10.0.0.101,end=10.0.0.250 \

--dns-nameserver 223.5.5.5 --gateway 10.0.0.254 \

oldboy 10.0.0.0/24
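The allocation pool above bounds how many instances can receive an address on this network. As a quick arithmetic check, the sketch below counts the addresses between the pool start and end; `ip_to_int` is a hypothetical helper written for this example.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

pool_start=10.0.0.101
pool_end=10.0.0.250

# Both ends of the pool are inclusive, hence the +1.
pool_size=$(( $(ip_to_int "$pool_end") - $(ip_to_int "$pool_start") + 1 ))
echo "allocation pool holds $pool_size addresses"
```

With the values used in this guide the pool holds 150 addresses, and the gateway 10.0.0.254 lies outside it.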

② Create a flavor:

List the existing flavors (hardware profiles):

[root@controller ~]# openstack flavor list

Create a flavor:

[root@controller ~]# openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

③ Create a key pair

Generate a key pair, then upload the public key to OpenStack:

[root@controller ~]# ssh-keygen -q -N "" -f ~/.ssh/id_rsa

[root@controller ~]# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

④ Create security group rules

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

# Two rules are opened: ICMP, and TCP port 22

⑤ Launch an instance (command line):

List the available images:

[root@controller ~]# openstack image list

Look up the network ID:

[root@controller ~]# neutron net-list

dd7500f9-1cb1-42df-8025-a232ef90d54c

Create the instance:

openstack server create --flavor m1.nano --image cirros \

--nic net-id=dd7500f9-1cb1-42df-8025-a232ef90d54c --security-group default \

--key-name mykey oldboy

# The instance is named oldboy; the key pair name is the one created above: mykey
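The network ID pasted into `--nic net-id=` differs on every installation, so it can be convenient to extract it by network name rather than copying it by hand. The function below is a hypothetical helper that parses the table printed by `neutron net-list`; it assumes the usual column order of id, name, subnets.

```shell
# Read a "neutron net-list"-style table on stdin and print the id of the
# network whose name equals $1.
net_id_by_name() {
    awk -F'|' -v name="$1" '
        NF > 2 {
            id = $2; nm = $3
            gsub(/^[ \t]+|[ \t]+$/, "", id)   # trim padding around the id
            gsub(/^[ \t]+|[ \t]+$/, "", nm)   # trim padding around the name
            if (nm == name) print id
        }'
}

# Usage (requires admin credentials to be sourced first):
# NET_ID=$(neutron net-list | net_id_by_name oldboy)
# openstack server create --flavor m1.nano --image cirros \
#     --nic net-id="$NET_ID" --security-group default --key-name mykey oldboy
```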

Check:

[root@controller images]# openstack server list

[root@controller images]# nova list

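Install the related tools and list the virtual machines:

yum install libvirt -y

virsh list # list the virtual machines

netstat -lntp # check for the listening port 5900 (VNC console)

Notes:

① If the name controller cannot be resolved, add this line to the hosts file of your own computer: 10.0.0.11 controller

② If the instance hangs at the GRUB screen

Edit the configuration on the compute node: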

vim /etc/nova/nova.conf

[libvirt]

cpu_mode = none

virt_type = qemu

[root@computer1 ~]# systemctl restart openstack-nova-compute
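Setting `virt_type = qemu` is typically needed when the compute node has no hardware virtualization support, for example when it is itself a virtual machine without nested virtualization. A rough way to check is to look for the `vmx` (Intel) or `svm` (AMD) CPU flags. The two function names below are hypothetical and only wrap that check.

```shell
# Count the cpuinfo lines that advertise Intel VT-x (vmx) or AMD-V (svm).
# $1 is the cpuinfo file to inspect; it defaults to /proc/cpuinfo.
hw_virt_count() {
    grep -Ec '(vmx|svm)' "${1:-/proc/cpuinfo}"
}

# Suggest a virt_type value for the [libvirt] section of nova.conf.
suggest_virt_type() {
    if [ "$(hw_virt_count "$1")" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Usage on a compute node:
# suggest_virt_type
```

A result of `qemu` means instances run fully emulated, which is slower but works without hardware acceleration.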

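(III) Steps for creating an instance from the web page

① Click "Compute"

② Click "Instances"

③ Click "Launch Instance" in the upper-right corner

④ Details: Instance Name is the name of the instance; Count is the number of instances to create

⑤ Select the image source and click the plus sign

⑥ Flavor: the hardware profile of the instance; click the plus sign at the end of the row to select one

⑦ Network: select the network that was already created (the default)

⑧ Leave the remaining settings at their defaults

Add 10.0.0.11 controller to the Windows hosts file to avoid errors.

Once the instance is created, its console gives access to the virtual machine.

If opening the console shows this error:

booting from hard disk grub:

Fix:

On the compute node, edit the nova configuration file: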

vim /etc/nova/nova.conf

[libvirt]

cpu_mode=none

virt_type=qemu

systemctl restart openstack-nova-compute

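Then hard-reboot the instance.

IX. Add a compute node

(I) Steps for adding a compute node

1: Configure the yum repositories

2: Synchronize the time

3: Install the OpenStack base packages

4: Install nova-compute

5: Install neutron-linuxbridge-agent

6: Start the nova-compute and linuxbridge-agent services

7: Verify

(II) Configure the yum repositories

mount /dev/cdrom /mnt

Upload openstack_rpm.tar.gz to /opt with rz and extract it

Create the repo configuration file: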

echo '[local]

name=local

baseurl=file:///mnt

gpgcheck=0

[openstack]

name=openstack

baseurl=file:///opt/repo

gpgcheck=0' >/etc/yum.repos.d/local.repo

yum makecache

echo 'mount /dev/cdrom /mnt' >>/etc/rc.local

chmod +x /etc/rc.d/rc.local

(III) Time synchronization and OpenStack base packages

Time synchronization:

vim /etc/chrony.conf

Change line 3 to:

server 10.0.0.11 iburst

systemctl restart chronyd

Install the OpenStack client and openstack-selinux:

yum install python-openstackclient.noarch openstack-selinux.noarch -y

(IV) Install nova-compute and the network agent

yum install openstack-nova-compute -y

yum install openstack-utils.noarch -y

\cp /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.32

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

Install neutron-linuxbridge-agent:

yum install openstack-neutron-linuxbridge ebtables ipset -y

\cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

####

#vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

\cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False

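(V) Start the services

[root@computer2 /]# systemctl enable libvirtd openstack-nova-compute neutron-linuxbridge-agent

[root@computer2 /]# systemctl start libvirtd openstack-nova-compute neutron-linuxbridge-agent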

[root@computer2 /]# systemctl status libvirtd openstack-nova-compute neutron-linuxbridge-agent

Check from the controller node:

nova service-list

neutron agent-list

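(VI) Check the compute node

Create a virtual machine to check that the new compute node is usable:

① Create a host aggregate: Admin - Host Aggregates - Create Host Aggregate - Host Aggregate Information (name; availability zone: oldboyboy) - Manage Hosts within Aggregate (computer2) - Create. # This groups the nova-compute hosts.

② Create an instance: Project - Instances - Launch Instance - Details (for Availability Zone, select oldboyboy, the zone just created with the host aggregate) - Source - Flavor - Networks - Network Ports - Security Groups - Key Pair - Configuration - Metadata - Launch. # Under the Admin panel you can see which host each instance runs on.

Note: if the instance hangs at the GRUB screen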

vim /etc/nova/nova.conf

[libvirt]

cpu_mode = none

virt_type = qemu

systemctl restart openstack-nova-compute

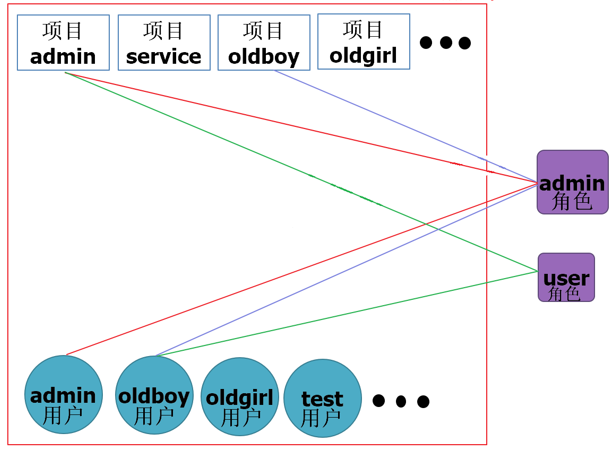

X. Users, projects, and roles in OpenStack

(I) Relationship between projects, users, and roles

1. Create the domain

openstack domain create --description "Default Domain" default

2. Create the project

openstack project create --domain default --description "Admin Project" admin

3. Create the user

openstack user create --domain default --password ADMIN_PASS admin

4. Create the role

openstack role create admin

5. Assign the role (grant access)

openstack role add --project admin --user admin admin

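(II) Create roles (admin, user) under Identity

① Create the roles first

② Create the project

Quotas are adjusted inside the project.

③ Create the user

Ordinary users do not see the Admin panel.

admin role: administrator of all projects

user role: user of a single project

Only administrators can see all instances.

(III) Project quota management

Admin - Projects - Manage Members - Project Quotas: set the quotas as needed.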

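XI. Migrate the glance image service

(I) Background

As OpenStack manages more and more compute nodes, the load on the controller node keeps growing. Because every service is installed on the controller node, all of the OpenStack services there are at risk of going down together at any moment.

OpenStack is designed around an SOA architecture. We have already moved horizon off the controller; next we migrate the glance image service, and the remaining services can be migrated later in the same way. Leaving only the keystone service on the controller node is the SOA best practice.

This time we migrate the glance image service from the controller node to computer2.

(II) Main steps of the glance migration

1: Stop the glance services on the controller node

2: Back up and migrate the glance database

3: Install and configure glance on the new node

4: Migrate the existing glance image files

5: Change the glance API address in keystone

6: Change the glance API address in nova.conf on all nodes

7: Test: upload an image and create an instance

(III) Procedure

① Stop and disable the glance services on the controller node: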

[root@controller ~]# systemctl stop openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl disable openstack-glance-api.service openstack-glance-registry.service

② Dump the glance database on the controller node and copy it to computer2:

[root@controller ~]# mysqldump -uroot -B glance >glance.sql

[root@controller ~]# scp glance.sql 10.0.0.32:/root

(IV) Database migration

On computer2:

yum install mariadb-server.x86_64 python2-PyMySQL -y

systemctl start mariadb

systemctl enable mariadb

mysql_secure_installation

Import the glance database dump taken on the controller node:

mysql < glance.sql

[root@computer2 ~]# mysql

mysql>

show databases;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';

(V) Install the glance service

Install on computer2:

yum install openstack-glance -y

Copy the configuration files from the controller node to computer2:

[root@controller ~]# scp /etc/glance/glance-api.conf 10.0.0.32:/etc/glance/

[root@controller ~]# scp /etc/glance/glance-registry.conf 10.0.0.32:/etc/glance/

Edit the [database] connection in the copied files:

connection = mysql+pymysql://glance:GLANCE_DBPASS@10.0.0.32/glance

Everything else stays as copied; only the database address changes to 10.0.0.32.

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl enable openstack-glance-api.service openstack-glance-registry.service

(VI) Migrate the images

[root@computer2 glance]# scp -rp 10.0.0.11:/var/lib/glance/images/* /var/lib/glance/images/

[root@computer2 images]# chown -R glance:glance /var/lib/glance/images/

Check on the controller node:

source admin-openrc

openstack endpoint list | grep image

The output still shows the previous image endpoint, which is updated in the next step.

(VII) Change the glance API address in keystone

On the controller node:

Inspect the relevant table:

mysql keystone

select * from endpoint;

[root@controller ~]# mysqldump -uroot keystone endpoint >endpoint.sql

[root@controller ~]# cp endpoint.sql /opt/

Rewrite the glance URL in the dump file:

[root@controller ~]# sed -i 's#http://controller:9292#http://10.0.0.32:9292#g' endpoint.sql

Import the modified dump:

[root@controller ~]# mysql keystone < endpoint.sql

Check the glance endpoint address:

[root@controller ~]# openstack endpoint list|grep image

[root@controller ~]# openstack image list
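Before running the `sed` substitution against a real dump, it can be rehearsed on a small sample to confirm that only the glance URL (port 9292) is rewritten. The sample file below is made up for the demonstration and is not the actual contents of the endpoint table.

```shell
workdir=$(mktemp -d)
cat > "$workdir/endpoint_sample.txt" <<'EOF'
http://controller:9292
http://controller:9696
http://controller:8774/v2.1/%(tenant_id)s
EOF

# Same substitution as above, written to a new file instead of in place.
sed 's#http://controller:9292#http://10.0.0.32:9292#g' \
    "$workdir/endpoint_sample.txt" > "$workdir/endpoint_new.txt"

# Show what changed; diff exits non-zero when the files differ, so ignore its status.
diff "$workdir/endpoint_sample.txt" "$workdir/endpoint_new.txt" || true
```

Only the line for port 9292 changes; the neutron (9696) and nova (8774) URLs are left alone.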

(VIII) Update nova.conf on all nodes

sed -i 's#http://controller:9292#http://10.0.0.32:9292#g' /etc/nova/nova.conf

grep '9292' /etc/nova/nova.conf

Restart on the controller node:

systemctl restart openstack-nova-api.service

Restart on each compute node:

systemctl restart openstack-nova-compute.service

Then check on the controller node:

[root@controller ~]# nova service-list

+----+------------------+------------+-----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+------------+-----------+---------+-------+----------------------------+-----------------+

| 1 | nova-conductor | controller | internal | enabled | up | 2020-01-05T16:53:07.000000 | - |

| 2 | nova-consoleauth | controller | internal | enabled | up | 2020-01-05T16:53:10.000000 | - |

| 3 | nova-scheduler | controller | internal | enabled | up | 2020-01-05T16:53:10.000000 | - |

| 6 | nova-compute | computer1 | nova | enabled | up | 2020-01-05T16:53:08.000000 | - |

| 7 | nova-compute | computer2 | oldboyboy | enabled | up | 2020-01-05T16:53:08.000000 | - |

+----+------------------+------------+-----------+---------+-------+----------------------------+-----------------+
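With more nodes the service table gets long, and it is easier to print only the services that are not up. The function below is a hypothetical helper that parses `nova service-list` output; it assumes the column layout shown in the table above (Id, Binary, Host, Zone, Status, State, and so on).

```shell
# Read "nova service-list" output on stdin and print "binary@host" for every
# service whose State column is not "up".
services_not_up() {
    awk -F'|' '
        NF > 2 {
            for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
            if ($2 == "Id") next            # skip the header row
            if ($7 != "up") print $3 "@" $4
        }'
}

# Usage on the controller node:
# nova service-list | services_not_up
```

Empty output means every listed service reports State up, as in the table above.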

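(IX) Test: upload an image and create an instance

1. Upload an image:

Project - Images - Create Image (centos-cloud)

Image storage location: /var/lib/glance/images

Inspect an image: qemu-img info /var/lib/glance/images/...

2. Create an instance:

Project - Instances - Launch Instance

Images can also be uploaded from the Project panel of the web page.

qemu-img info .. # show the image information

While the instance starts, the nova log shows the image being downloaded from glance and converted to another format. The download location is:

/var/lib/nova/instances/_base/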

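XII. Block Storage service (cinder)

(I) Install the cinder block storage service

1. Introduction to cinder

cinder adds disks to cloud instances. The Block Storage service (cinder) provides block storage to instances. How storage is provisioned and consumed is determined by the block storage driver, or by the drivers in a multi-backend configuration. Many drivers are available: NAS/SAN, NFS, LVM, Ceph, and others. Main components:

cinder-api: receives and responds to external block storage requests

cinder-volume: provides the storage space

cinder-scheduler: the scheduler; decides which cinder-volume provides the space to be allocated

cinder-backup: backs up volumes

2. General installation steps for an OpenStack service

a: Create the database and grant privileges

b: Create the service user in keystone and assign it a role

c: Create the service in keystone and register its API endpoints

d: Install the service packages

e: Edit the service configuration files

f: Sync the database

g: Start the services

(II) Install the Block Storage nodes

1. cinder controller node

① Create the database and grant privileges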

[root@controller ~]# mysql

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \

IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \

IDENTIFIED BY 'CINDER_DBPASS';

② Create the service user in keystone (as was done for glance, nova, and neutron; now cinder) and assign it a role

openstack user create --domain default --password CINDER_PASS cinder

openstack role add --project service --user cinder admin

③ Create the services and register the API endpoints in keystone (run source admin-openrc first)

openstack service create --name cinder \

--description "OpenStack Block Storage" volume

openstack service create --name cinderv2 \

--description "OpenStack Block Storage" volumev2

openstack endpoint create --region RegionOne \

volume public http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

volume internal http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

volume admin http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

volumev2 public http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

volumev2 admin http://controller:8776/v2/%\(tenant_id\)s
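The six endpoint commands differ only in service type, API version, and interface, so they can be generated with two nested loops. The sketch below is a dry run that prints the commands instead of executing them; `print_cinder_endpoint_cmds` is a hypothetical name, and the URL is single-quoted in the output so the parentheses need no backslashes.

```shell
# Dry run: print the six "openstack endpoint create" commands.
print_cinder_endpoint_cmds() {
    for pair in volume:v1 volumev2:v2; do
        service=${pair%%:*}     # service type, e.g. volume
        version=${pair##*:}     # API version in the URL, e.g. v1
        for interface in public internal admin; do
            printf '%s\n' "openstack endpoint create --region RegionOne $service $interface 'http://controller:8776/$version/%(tenant_id)s'"
        done
    done
}

print_cinder_endpoint_cmds
```

Reviewing the printed commands before running them makes it easier to spot a wrong port or version.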

④ Install the service packages

[root@controller ~]# yum install openstack-cinder -y

⑤ Edit the service configuration files

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '^$|#' /etc/cinder/cinder.conf.bak >/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 10.0.0.11

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password CINDER_PASS

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf cinder os_region_name RegionOne

⑥ Sync the database

[root@controller ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

⑦ Start the services

systemctl restart openstack-nova-api.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

[root@controller ~]# systemctl status openstack-cinder-api.service openstack-cinder-scheduler.service

⑧ Verify:

[root@controller ~]# cinder service-list

(II) Install the Cinder block storage node

To save resources, the storage node is installed on a compute node. Add two disks to computer1: one of 30 GB and one of 10 GB.

1. How LVM works:

2. Storage node installation steps

① Install the LVM packages on the compute node

yum install lvm2 -y

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

② Create the volume groups

echo '- - -' >/sys/class/scsi_host/host0/scan

# The command above rescans the SCSI host so the newly added disks show up

fdisk -l

Create the physical volumes:

pvcreate /dev/sdb

pvcreate /dev/sdc

Create the volume groups, one for each physical volume:

vgcreate cinder-ssd /dev/sdb

vgcreate cinder-sata /dev/sdc

Check the result:

pvs:

vgs:

lvs:

③ Edit /etc/lvm/lvm.conf

Insert one line below line 130 of the file.

It accepts only sdb and sdc and rejects every other device:

filter = [ "a/sdb/", "a/sdc/","r/.*/"]
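LVM checks a device against the filter patterns in order and the first pattern that matches decides; a device that matches no pattern is accepted. The sketch below imitates that walk so the effect of the line above can be tried without touching lvm.conf. The function name `lvm_filter_decision` is hypothetical, and `grep -E` stands in for LVM's own regex matching, so treat it as an approximation:

```shell
# Hypothetical helper: decide accept/reject for a device path, given filter
# rules in the same "a/regex/" or "r/regex/" form used in lvm.conf.
lvm_filter_decision() {
    local dev rule action pattern
    dev=$1
    shift
    for rule in "$@"; do
        action=${rule%%/*}      # "a" (accept) or "r" (reject)
        pattern=${rule#?/}      # drop the leading "a/" or "r/"
        pattern=${pattern%/}    # drop the trailing "/"
        if printf '%s\n' "$dev" | grep -Eq -- "$pattern"; then
            # First matching rule wins.
            if [ "$action" = a ]; then echo accept; else echo reject; fi
            return 0
        fi
    done
    echo accept                 # no rule matched: the device is accepted
}
```

For example, `lvm_filter_decision /dev/sda 'a/sdb/' 'a/sdc/' 'r/.*/'` falls through to the catch-all reject rule, while /dev/sdb and /dev/sdc are accepted by the first two rules.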

④ Install the Cinder packages

yum install openstack-cinder targetcli python-keystone -y

⑤ Edit the configuration file

[root@computer1 ~]# cat /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.0.0.31

glance_api_servers = http://10.0.0.32:9292

# Unlike the official documentation, two backends (one per disk type) are configured here

enabled_backends = ssd,sata

[BACKEND]

[BRCD_FABRIC_EXAMPLE]

[CISCO_FABRIC_EXAMPLE]

[COORDINATION]

[FC-ZONE-MANAGER]

[KEYMGR]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[ssl]

[ssd]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-ssd

iscsi_protocol = iscsi

iscsi_helper = lioadm

volume_backend_name = ssd

[sata]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-sata

iscsi_protocol = iscsi

iscsi_helper = lioadm

volume_backend_name = sata

⑥ Start the services

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

⑦ Check from the controller node

[root@controller ~]# cinder service-list
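With two backends enabled, the list should contain one cinder-volume row per backend (hosts such as computer1@ssd and computer1@sata). A small filter can make a failed service easier to spot. This is only a sketch: the function name `down_cinder_services` is made up, and it assumes the table columns are ordered Binary, Host, Zone, Status, State, which may differ between client versions:

```shell
# Hypothetical helper: read `cinder service-list` output on stdin and print
# one line per service whose State column reads "down".
# Assumed column order: | Binary | Host | Zone | Status | State | ...
down_cinder_services() {
    awk -F'|' '$6 ~ /down/ {
        gsub(/ /, "", $2)    # strip padding around the binary name
        gsub(/ /, "", $3)    # strip padding around the host name
        print $2 " on " $3
    }'
}
```

Usage on the controller: `cinder service-list | down_cinder_services`. No output means no row was reported as down.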

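⑧ Create a volume to verify:

In the dashboard: Project - Compute - Volumes - Create Volume. Then run lvs on compute node 1 to see the newly created volume.

⑨ Attach the volume:

Step 1: Volumes - Edit Volume - Manage Attachments - attach to the target instance.

Step 2: Check from inside the instance: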

sudo su

fdisk -l

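Step 3: Format the new volume and mount it:

In the web UI: Project - Volumes - Manage Attachments - attach to the created instance.

Click the instance shown next to the volume and open its console: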

mkfs.ext4 /dev/vdb

mount /dev/vdb /mnt

df -h

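⑩ Extend the volume

Step 1: unmount the volume inside the instance:

umount /mnt

Step 2:

Project - Compute - Volumes - Edit Volume - Volume Management - Detach Volume

Project - Compute - Volumes - Edit Volume - Volume Management - Edit Volume - Extend Volume (to 2 GB; verify with lvs on computer1)

Project - Compute - Volumes - Edit Volume - Volume Management - Edit Volume - Manage Attachments - attach to the target instance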

In the instance console:

mount /dev/vdb /mnt

df -h

resize2fs /dev/vdb

df -h

Check the storage usage:

[root@computer1 ~]# vgs

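⑪ Create volume types

The backend names already defined in cinder.conf:

volume_backend_name = ssd

volume_backend_name = sata

Admin - Volumes - Create Volume Type - enter a name - view the created volume type - enter the settings above as key and value (key volume_backend_name, value ssd or sata).

Create a volume and choose its type:

Project - Volumes - Create Volume. During creation you can select one of the volume types created above. Run lvs to check where the volume was created.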
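The same volume types can also be created from the command line instead of the dashboard. A minimal sketch, assuming the `cinder` client is installed on the controller and admin credentials are already loaded in the shell; the wrapper name `create_backend_type` is made up here, while `type-create` and `type-key` are subcommands of the cinder client:

```shell
# Hypothetical wrapper: create a volume type and bind it to the backend whose
# volume_backend_name in cinder.conf has the same name.
create_backend_type() {
    local name
    name=$1
    cinder type-create "$name" &&
        cinder type-key "$name" set "volume_backend_name=$name"
}
```

Running `create_backend_type ssd` and `create_backend_type sata` would mirror the two types set up in the dashboard steps.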

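XIII. Add a flat network

Add one NIC to each of the three machines, attached to a LAN segment, using the 172.16.0.0/24 network.

(I) Why add a flat network

The current OpenStack environment has only one network, bridged on the eth0 NIC. Its usable IP range is limited, so the number of instances cannot exceed the number of available IPs. Once a private cloud grows, a single network is no longer enough, so this section shows how to add another one. The lab runs on VMware Workstation, which cannot simulate a VLAN setup, so the new network also uses the flat type.

(II) Add NIC eth1

Add a NIC to each virtual machine on the LAN segment, 172.16.0.0/24.

Copy ifcfg-eth0 to ifcfg-eth1, change the eth1 address to one in 172.16.0.0/24, and bring the interface up with ifup eth1.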

[root@computer1 network-scripts]# scp ifcfg-eth1 10.0.0.11:`pwd`

(III) Controller node configuration

1: Controller node

a:

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

# Add the network name net172_16

flat_networks = provider,net172_16

b:

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

# Each network name must map to its own NIC

physical_interface_mappings = provider:eth0,net172_16:eth1

c: Restart

systemctl restart neutron-server.service neutron-linuxbridge-agent.service

(IV) Compute node configuration

a:

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0,net172_16:eth1

b: Restart

systemctl restart neutron-linuxbridge-agent.service

Verify on the controller node:

neutron agent-list

(V) Create the network

1. From the command line:

neutron net-create --shared --provider:physical_network net172_16 \
--provider:network_type flat net172_16

neutron subnet-create --name oldgirl \
--allocation-pool start=172.16.0.1,end=172.16.0.250 \
--dns-nameserver 223.5.5.5 --gateway 172.16.0.254 \
net172_16 172.16.0.0/24
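Because the number of instances on a flat network is bounded by the allocation pool, it can be useful to count the addresses a pool provides before creating the subnet. A small sketch using plain shell arithmetic; the helper names `ip_to_int` and `pool_size` are made up for illustration:

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() {
    local IFS=.
    set -- $1    # split the address on dots into $1..$4
    echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Hypothetical helper: number of addresses from start to end, inclusive.
pool_size() {
    echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}
```

For the pool used above, `pool_size 172.16.0.1 172.16.0.250` gives 250 addresses. Some of them are taken by ports other than instances, such as the DHCP port.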

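2. From the web UI:

Admin - Networks - Create Network (Provider, Flat) - Create Subnet

Create an instance: Project - Instances - Launch Instance (the new network can be selected during creation)

Note: set up a Linux machine to act as the router.

For instances on the net172_16 network to reach the Internet, the router machine needs the following configuration:

Configure both eth0 and eth1. Give eth1 the address 172.16.0.254, which is the gateway address of the instances, and do not set a gateway on eth1.

Edit the kernel configuration file to enable forwarding: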

vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

Apply the kernel setting:

sysctl -p

Flush the firewall's filter table:

iptables -F

# Add the NAT rule for traffic coming from the 172.16.0.0/24 network

iptables -t nat -A POSTROUTING -s 172.16.0.0/24 -j MASQUERADE

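XIV. Connect Cinder to NFS shared storage

(I) Background

Here Cinder uses NFS as a storage backend. The Cinder service is designed much like the Nova service:

nova: does not provide virtualization itself; it supports several virtualization technologies: kvm, xen, qemu, lxc

cinder: does not provide storage itself; it supports several storage technologies: lvm, nfs, glusterFS, ceph

Connecting other kinds of backend storage later follows a similar approach.

(II) Configuration

① Prerequisite: install NFS on the controller node

Install: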

[root@controller ~]# yum install nfs-utils.x86_64 -y

Configure:

[root@controller ~]# mkdir /data

[root@controller ~]# vim /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

Start:

[root@controller ~]# systemctl restart rpcbind.socket

[root@controller ~]# systemctl restart nfs

② Configure the storage node

[root@computer1 ~]# yum install nfs-utils -y

Edit /etc/cinder/cinder.conf:

[DEFAULT]

# Add the nfs backend; its [nfs] section is configured below

enabled_backends = sata,ssd,nfs

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

volume_backend_name = nfs

vi /etc/cinder/nfs_shares

10.0.0.11:/data

[root@computer1 ~]# showmount -e 10.0.0.11

Export list for 10.0.0.11:

/data 10.0.0.0/24
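Before restarting cinder-volume it helps to confirm that the share written in nfs_shares really appears in the export list. A small sketch that checks `showmount -e` output for the path part of a share entry; the function name `share_is_exported` is hypothetical, and it assumes the output format shown above (one header line, then one export per line):

```shell
# Hypothetical helper: succeed if the path of a "host:/path" share appears in
# `showmount -e` output supplied on stdin.
share_is_exported() {
    local path
    path=${1#*:}    # keep only the part after "host:"
    awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}
```

Usage on the storage node: `showmount -e 10.0.0.11 | share_is_exported 10.0.0.11:/data && echo exported`.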

Restart cinder-volume:

systemctl restart openstack-cinder-volume.service

Check from the controller node: cinder service-list

③ Troubleshooting

Check the volume log:

[root@computer1 ~]# vim /var/log/cinder/volume.log

If the log reports an error, fix the ownership of the mount directory:

[root@computer1 ~]# chown -R cinder:cinder /var/lib/cinder/mnt/

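④ Create a volume type and attach a volume to an instance

Admin - Volumes - Create Volume Type - View Extra Specs, then set the key and value

Project - Volumes - Create Volume - Manage Attachments - attach to an instance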

[root@computer1 ~]# qemu-img info /var/lib/cinder/mnt/490717a467bd12d34ec324c86a4f35b3/volume-b5f95e9f-7c11-4014-a2a0-26fc756bcdc3