HBase Java API

Add the dependency

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.4.6</version>

</dependency>

</dependencies>

Basic workflow

package com.shujia;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Admin;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import org.apache.hadoop.hbase.client.Table;

import java.io.IOException;

public class Demo01TestAPI {

public static void main(String[] args) throws IOException {

// 1. Create the configuration and set the HBase connection address (the ZooKeeper quorum)

// If you don't know it, look it up in /usr/local/soft/hbase-1.4.6/conf/hbase-site.xml

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

// 2. Open the connection

Connection conn = ConnectionFactory.createConnection(conf);

/**

* 3. Perform operations:

* to operate on table structure, use getAdmin

* to operate on table data, use getTable

*/

Admin admin = conn.getAdmin();

Table test = conn.getTable(TableName.valueOf("test"));

// 4. Close the connection

conn.close();

}

}
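The workflow above closes only the `Connection`; the `Admin` and `Table` handles are never closed. In hbase-client all three types implement `Closeable`, so try-with-resources is one way to release them. The sketch below shows only the shape of that pattern. `Handle` is a hypothetical stand-in, not an HBase class, so the example runs without a cluster; the names `CloseOrderSketch` and `closeOrder` are likewise illustrative.

```java
import java.util.ArrayList;
import java.util.List;

public class CloseOrderSketch {

    // Hypothetical stand-in for an HBase Connection, Admin, or Table.
    // It only records its name when it is closed.
    static final class Handle implements AutoCloseable {
        private final String name;
        private final List<String> log;

        Handle(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add(name);
        }
    }

    // Opens three handles in the same order as the workflow above and
    // returns the order in which try-with-resources closed them.
    static List<String> closeOrder() {
        List<String> log = new ArrayList<>();
        try (Handle conn = new Handle("connection", log);
             Handle admin = new Handle("admin", log);
             Handle table = new Handle("table", log)) {
            // Work with admin and table here.
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(closeOrder()); // prints [table, admin, connection]
    }
}
```

Resources are closed in reverse order of declaration, so the table and admin handles are released before the connection they came from, even if the body throws.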

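Common operation examples

These examples use JUnit 4 annotations (@Before, @Test, @After), so JUnit 4 must be on the classpath in addition to hbase-client.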

package com.shujia;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

public class Demo02API {

Connection conn;

@Before

public void init() throws IOException {

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

conn = ConnectionFactory.createConnection(conf);

}

@Test

// create table

public void createTable() throws IOException {

Admin admin = conn.getAdmin();

// Specify the table name

HTableDescriptor testAPI = new HTableDescriptor(TableName.valueOf("testAPI"));

// Create a column family

HColumnDescriptor cf1 = new HColumnDescriptor("cf1");

// Configure the column family

cf1.setMaxVersions(3);

// Add the column family to the testAPI table

testAPI.addFamily(cf1);

// Create the testAPI table

admin.createTable(testAPI);

}

@Test

// list: show all tables

public void listTables() throws IOException {

Admin admin = conn.getAdmin();

TableName[] tableNames = admin.listTableNames();

for (TableName tableName : tableNames) {

System.out.println(tableName.getNameAsString());

}

}

@Test

// desc: show the table structure

public void getTableDescriptor() throws IOException {

Admin admin = conn.getAdmin();

HTableDescriptor testAPI = admin.getTableDescriptor(TableName.valueOf("testAPI"));

HColumnDescriptor[] cfs = testAPI.getColumnFamilies();

for (HColumnDescriptor cf : cfs) {

System.out.println(cf.getNameAsString());

System.out.println(cf.getMaxVersions());

System.out.println(cf.getTimeToLive());

}

}

@Test

// alter

// For testAPI: set cf1's max versions to 5 and add a new column family cf2

public void AlterTable() throws IOException {

Admin admin = conn.getAdmin();

TableName testAPI = TableName.valueOf("testAPI");

// Before modifying the schema, fetch the table's current descriptor

HTableDescriptor testAPIDesc = admin.getTableDescriptor(testAPI);

// Get the table's existing column families

HColumnDescriptor[] cfs = testAPIDesc.getColumnFamilies();

for (HColumnDescriptor cf : cfs) {

if ("cf1".equals(cf.getNameAsString())) {

cf.setMaxVersions(5);

}

}

// Add a new column family cf2

testAPIDesc.addFamily(new HColumnDescriptor("cf2"));

// After the changes, pass testAPIDesc as the second argument to apply the schema change

admin.modifyTable(testAPI, testAPIDesc);

}

@Test

// drop

public void DropTable() throws IOException {

Admin admin = conn.getAdmin();

TableName tableName = TableName.valueOf("test1");

if (admin.tableExists(tableName)) {

// A table must be disabled before it can be deleted

admin.disableTable(tableName);

admin.deleteTable(tableName);

} else {

System.out.println("Table does not exist!");

}

}

@Test

// put

public void PutData() throws IOException {

Table testAPI = conn.getTable(TableName.valueOf("testAPI"));

// Set the row key

Put put = new Put("001".getBytes());

// When putting data, specify the column family and the column qualifier

put.addColumn("cf1".getBytes(), "name".getBytes(), "张三".getBytes());

put.addColumn("cf1".getBytes(), "age".getBytes(), "18".getBytes());

put.addColumn("cf1".getBytes(), "clazz".getBytes(), "文科一班".getBytes());

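// This overload takes an explicit timestamp (1), so the value is stored as an older version of clazz

put.addColumn("cf1".getBytes(), "clazz".getBytes(), 1, "文科二班".getBytes());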

testAPI.put(put);

}

@Test

// get

// Fetch the latest data

public void GetData() throws IOException {

Table testAPI = conn.getTable(TableName.valueOf("testAPI"));

// Set the row key

Get get = new Get("001".getBytes());

// Set the maximum number of versions that can be returned

get.setMaxVersions(10);

Result rs = testAPI.get(get);

// Get the row key

byte[] row = rs.getRow();

// Get the values (getValue returns the most recent version of each column)

byte[] name = rs.getValue("cf1".getBytes(), "name".getBytes());

byte[] age = rs.getValue("cf1".getBytes(), "age".getBytes());

byte[] clazz = rs.getValue("cf1".getBytes(), "clazz".getBytes());

// HBase provides a utility class for converting byte arrays: Bytes

System.out.println(Bytes.toString(row) + "," + Bytes.toString(name) + "," + Bytes.toString(age) + "," + Bytes.toString(clazz));

}

@Test

// Another way to extract data,

// provided by HBase

public void ListCells() throws IOException {

Table testAPI = conn.getTable(TableName.valueOf("testAPI"));

Get get = new Get("001".getBytes());

get.setMaxVersions(10);

Result rs = testAPI.get(get);

// Get all Cells,

// i.e. all the data stored under this row key (including older versions)

List<Cell> cells = rs.listCells();

for (Cell cell : cells) {

String value = Bytes.toString(CellUtil.cloneValue(cell));

System.out.println(value);

}

}

@Test

/**

* Batch insert

* Create the stu table with one column family, info, and insert all 1000 rows from students.txt

*/

public void PutStu() throws IOException {

TableName stu = TableName.valueOf("stu");

// Create the table if it does not exist yet

Admin admin = conn.getAdmin();

if (!admin.tableExists(stu)) {

admin.createTable(new HTableDescriptor(stu)

.addFamily(new HColumnDescriptor("info")));

}

Table stuTable = conn.getTable(stu);

// Buffer for batched inserts

ArrayList<Put> puts = new ArrayList<>();

// Read the file

BufferedReader br = new BufferedReader(new FileReader("data/students.txt"));

int cnt = 0;

String line;

while ((line = br.readLine()) != null) {

String[] split = line.split(",");

String id = split[0];

String name = split[1];

String age = split[2];

String gender = split[3];

String clazz = split[4];

Put put = new Put(id.getBytes());

put.addColumn("info".getBytes(), "name".getBytes(), name.getBytes());

put.addColumn("info".getBytes(), "age".getBytes(), age.getBytes());

put.addColumn("info".getBytes(), "gender".getBytes(), gender.getBytes());

put.addColumn("info".getBytes(), "clazz".getBytes(), clazz.getBytes());

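// Batch insert

// Write 100 rows at a time. If the row count is not a multiple of 100, the last few rows would never be written,

// so after the loop we check whether the list of Puts still holds rows and flush them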

puts.add(put);

cnt += 1;

if (cnt == 100) {

stuTable.put(puts);

puts.clear(); // empty the buffer

cnt = 0;

}

// Inserting row by row also works, but is less efficient

// stuTable.put(put);

}

// Flush any rows left over from the final partial batch

if (!puts.isEmpty()) {

stuTable.put(puts);

}

br.close();

}

@Test

// delete

// Deletes can also be batched; the idea is the same as above, so it is not demonstrated here

public void DeleteData() throws IOException {

Table stuTable = conn.getTable(TableName.valueOf("stu"));

Delete del = new Delete("1500100001".getBytes());

stuTable.delete(del);

}

@Test

// scan

public void ScanData() throws IOException {

Table stuTable = conn.getTable(TableName.valueOf("stu"));

Scan scan = new Scan();

scan.setLimit(10);

scan.withStartRow("1500100008".getBytes());

scan.withStopRow("1500100020".getBytes());

ResultScanner scanner = stuTable.getScanner(scan);

for (Result rs : scanner) {

String id = Bytes.toString(rs.getRow());

String name = Bytes.toString(rs.getValue("info".getBytes(), "name".getBytes()));

String age = Bytes.toString(rs.getValue("info".getBytes(), "age".getBytes()));

String gender = Bytes.toString(rs.getValue("info".getBytes(), "gender".getBytes()));

String clazz = Bytes.toString(rs.getValue("info".getBytes(), "clazz".getBytes()));

System.out.println(id + "," + name + "," + age + "," + gender + "," + clazz);

}

}

@After

public void close() throws IOException {

conn.close();

}

}
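The batching logic in PutStu (flush every N rows, then flush the remainder) can be factored into a small helper. This is a minimal sketch that runs without HBase: `writeInBatches`, `batchSizes`, and the class name `BatchSketch` are hypothetical names, and the `sink` parameter stands in for a call such as `stuTable.put(puts)`, which would need its `IOException` wrapped to fit a `Consumer`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

public class BatchSketch {

    // Buffers items and hands them to the sink in groups of at most batchSize.
    // The sink is a stand-in for a batched write such as Table.put(List<Put>).
    static <T> void writeInBatches(Iterable<T> items, int batchSize, Consumer<List<T>> sink) {
        List<T> buffer = new ArrayList<>(batchSize);
        for (T item : items) {
            buffer.add(item);
            if (buffer.size() == batchSize) {
                sink.accept(new ArrayList<>(buffer)); // pass a copy, then reuse the buffer
                buffer.clear();
            }
        }
        if (!buffer.isEmpty()) {
            sink.accept(new ArrayList<>(buffer)); // flush the final partial batch
        }
    }

    // Returns the size of each batch produced for `total` items.
    static List<Integer> batchSizes(int total, int batchSize) {
        List<Integer> items = new ArrayList<>();
        for (int i = 0; i < total; i++) {
            items.add(i);
        }
        List<Integer> sizes = new ArrayList<>();
        writeInBatches(items, batchSize, batch -> sizes.add(batch.size()));
        return sizes;
    }

    public static void main(String[] args) {
        System.out.println(batchSizes(250, 100)); // prints [100, 100, 50]
    }
}
```

Using `buffer.size()` instead of a separate counter removes the need to keep `cnt` and the list in sync.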

Requirement example

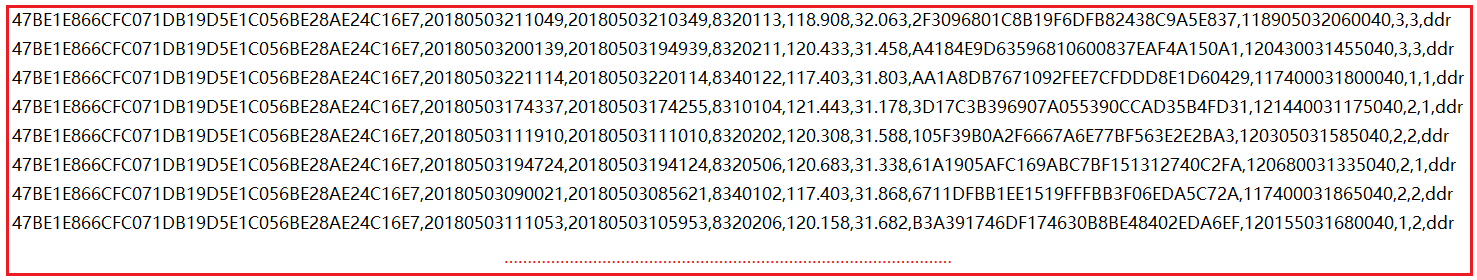

Get a user's three most recent locations

Data:

package com.shujia;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.util.ArrayList;

public class Demo03DianXin {

Connection conn;

TableName dianXin;

@Before

public void init() throws IOException {

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

conn = ConnectionFactory.createConnection(conf);

dianXin = TableName.valueOf("dianXin");

}

@Test

// create table

public void createTable() throws IOException {

Admin admin = conn.getAdmin();

if (!admin.tableExists(dianXin)) {

admin.createTable(new HTableDescriptor(dianXin)

.addFamily(new HColumnDescriptor("cf1")

.setMaxVersions(5)));

} else {

System.out.println("Table already exists!");

}

}

@Test

// Write the data into HBase

public void putALL() throws IOException {

Table dx_tb = conn.getTable(dianXin);

ArrayList<Put> puts = new ArrayList<>();

int cnt = 0;

int batchSize = 1000;

BufferedReader br = new BufferedReader(new FileReader("data/DIANXIN.csv"));

String line;

while ((line = br.readLine()) != null) {

String[] split = line.split(",");

String mdn = split[0];

String start_time = split[1];

// lg: longitude

// lat: latitude

String lg = split[4];

String lat = split[5];

Put put = new Put(mdn.getBytes());

put.addColumn("cf1".getBytes(), "lg".getBytes(), Long.parseLong(start_time), lg.getBytes());

put.addColumn("cf1".getBytes(), "lat".getBytes(), Long.parseLong(start_time), lat.getBytes());

puts.add(put);

cnt += 1;

if (cnt == batchSize) {

dx_tb.put(puts);

puts.clear();

cnt = 0;

}

}

if (!puts.isEmpty()) {

dx_tb.put(puts);

}

br.close();

}

@Test

// Get a user's 3 most recent locations by mdn

public void getPositionByMdn() throws IOException {

Table dx_tb = conn.getTable(dianXin);

String mdn = "48049101CE9FC280703582E667DE3F3D947ABD37";

Get get = new Get(mdn.getBytes());

get.setMaxVersions(3);

Result rs = dx_tb.get(get);

ArrayList<String> lgArr = new ArrayList<>();

ArrayList<String> latArr = new ArrayList<>();

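// listCells() returns null when the row has no data, so check for an empty result first

if (rs.isEmpty()) {

System.out.println("No data found for mdn: " + mdn);

return;

}

for (Cell cell : rs.listCells()) {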

String value = Bytes.toString(CellUtil.cloneValue(cell));

if ("lg".equals(Bytes.toString(CellUtil.cloneQualifier(cell)))) {

lgArr.add(value);

} else if ("lat".equals(Bytes.toString(CellUtil.cloneQualifier(cell)))) {

latArr.add(value);

}

}

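// Print at most 3 positions; the row may hold fewer than 3 versions

for (int i = 0; i < Math.min(3, Math.min(lgArr.size(), latArr.size())); i++) {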

System.out.println(lgArr.get(i) + "," + latArr.get(i));

}

}

@After

public void close() throws IOException {

conn.close();

}

}
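getPositionByMdn pairs the i-th longitude with the i-th latitude, which relies on both columns holding the same versions in the same order. A more defensive variant pairs values by cell timestamp instead. The sketch below shows that grouping in plain Java so it runs without a cluster. `Reading` is a hypothetical stand-in for the fields one would read from each `Cell` (qualifier, timestamp, value), and the sample values in `demo` are invented placeholders, not rows from DIANXIN.csv.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LatestPositionsSketch {

    // Hypothetical stand-in for the fields read from one HBase Cell.
    static final class Reading {
        final String qualifier; // "lg" or "lat"
        final long timestamp;   // cell timestamp (start_time in the example above)
        final String value;

        Reading(String qualifier, long timestamp, String value) {
            this.qualifier = qualifier;
            this.timestamp = timestamp;
            this.value = value;
        }
    }

    // Returns up to `limit` "lg,lat" strings, newest first, using only
    // timestamps that have both a longitude and a latitude.
    static List<String> latestPositions(List<Reading> readings, int limit) {
        // Key: timestamp, sorted newest first. Value: {longitude, latitude}.
        Map<Long, String[]> byTime = new TreeMap<>(Comparator.<Long>reverseOrder());
        for (Reading r : readings) {
            String[] pair = byTime.computeIfAbsent(r.timestamp, t -> new String[2]);
            if ("lg".equals(r.qualifier)) {
                pair[0] = r.value;
            } else if ("lat".equals(r.qualifier)) {
                pair[1] = r.value;
            }
        }
        List<String> result = new ArrayList<>();
        for (String[] pair : byTime.values()) {
            if (result.size() == limit) {
                break;
            }
            if (pair[0] != null && pair[1] != null) {
                result.add(pair[0] + "," + pair[1]);
            }
        }
        return result;
    }

    // Invented sample readings, only to exercise the method.
    // Timestamp 4 has a longitude but no latitude, so it is skipped.
    static List<String> demo() {
        List<Reading> readings = Arrays.asList(
                new Reading("lg", 3L, "lg3"), new Reading("lat", 3L, "lat3"),
                new Reading("lg", 1L, "lg1"), new Reading("lat", 1L, "lat1"),
                new Reading("lg", 2L, "lg2"), new Reading("lat", 2L, "lat2"),
                new Reading("lg", 4L, "lg4"));
        return latestPositions(readings, 3);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [lg3,lat3, lg2,lat2, lg1,lat1]
    }
}
```

With real cells, the three `Reading` fields would presumably come from `CellUtil.cloneQualifier(cell)`, `cell.getTimestamp()`, and `CellUtil.cloneValue(cell)`.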
