HDFS Java API: Basic Workflow

Add the dependency (look it up on the Maven repository site, mvnrepository.com):

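```xml
<dependencies>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.7.6</version>
    </dependency>
    <!-- JUnit 4, needed for the @Before/@Test/@After annotations in the example program -->
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
</dependencies>
```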

Steps

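```java
// 1. Connect
// fs.defaultFS is the property set (in core-site.xml) when configuring Hadoop, and
// hdfs://master:9000 is its value: the address and port of the HDFS NameNode.
// The port can be found in that configuration file and on the HDFS web UI.
Configuration conf = new Configuration();
conf.set("fs.defaultFS", "hdfs://master:9000");
FileSystem fs = FileSystem.get(conf);

// 2. Operate on HDFS by calling methods on the connection object fs,
//    e.g. fs.mkdirs(...), fs.delete(...), fs.open(...), fs.create(...)

// 3. Close the connection
fs.close();
```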
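The three steps above can be sketched as one method that cannot leak the connection. This is a minimal sketch under stated assumptions: hadoop-client from the dependency above is on the classpath, and it uses the local file system (`file:///`) with a scratch directory under `java.io.tmpdir` so it runs without a cluster; passing `hdfs://master:9000` and an HDFS directory would target the cluster instead. The class `FsLifecycleDemo` and method `mkdirsThenDelete` are hypothetical names. Because `FileSystem` implements `Closeable`, try-with-resources closes it even when an operation throws.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.io.UncheckedIOException;

public class FsLifecycleDemo {

    /**
     * 1. connect, 2. mkdirs + recursive delete under baseDir, 3. close.
     * Returns {existsAfterMkdirs, existsAfterDelete}.
     */
    static boolean[] mkdirsThenDelete(String defaultFs, String baseDir) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", defaultFs);
        // 1. Connect. newInstance gives this method its own, uncached FileSystem.
        try (FileSystem fs = FileSystem.newInstance(conf)) {
            // 2. Operate through fs.
            Path top = new Path(baseDir, "e");
            Path leaf = new Path(top, "f/g");
            fs.mkdirs(leaf);                  // creates missing parents, like mkdir -p
            boolean existsAfterMkdirs = fs.exists(leaf);
            fs.delete(top, true);             // recursive, like rm -r
            boolean existsAfterDelete = fs.exists(leaf);
            return new boolean[] {existsAfterMkdirs, existsAfterDelete};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } // 3. fs.close() has run by this point, even if an operation threw
    }

    public static void main(String[] args) {
        // Assumption: local file system and a scratch directory under java.io.tmpdir.
        String base = System.getProperty("java.io.tmpdir") + "/hdfs-demo-" + System.nanoTime();
        boolean[] r = mkdirsThenDelete("file:///", base);
        System.out.println(r[0] + " " + r[1]); // prints "true false"
    }
}
```

The sketch uses `FileSystem.newInstance(conf)` rather than `FileSystem.get(conf)` on purpose: `get` returns an instance cached per scheme, authority and user and shared inside the JVM, so closing it also closes it for any other code holding the same instance. An instance from `newInstance` belongs to the caller alone.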

Example Program

```java
package com.shujia.HDFS;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.io.*;

public class HDFSJavaAPI {

    FileSystem fs;

    // 1. Connect (JUnit runs this before each test method)
    @Before
    public void init() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        fs = FileSystem.get(conf);
    }

    // Create directories (mkdirs creates missing parent directories, like mkdir -p)
    @Test
    public void mkdirs() throws IOException {
        fs.mkdirs(new Path("/e/f/g"));
    }

    // Delete a directory
    @Test
    public void deleteDir() throws IOException {
        // Non-recursive delete, like plain rm; fails if the directory is not empty
        // fs.delete(new Path("/e"), false);

        // Recursive delete, like rm -r
        fs.delete(new Path("/e"), true);
    }

    // Move a file (rename within HDFS); rename returns false rather than throwing
    // if it cannot move the file, e.g. when the target directory /a does not exist
    @Test
    public void moveFile() throws IOException {
        fs.rename(new Path("/CentOS-7-x86_64-DVD-2009.iso"), new Path("/a/CentOS-7-x86_64-DVD-2009.iso"));
    }

    // Upload a file, like put; moveFromLocalFile deletes the local source after uploading
    @Test
    public void putFile() throws IOException {
        fs.moveFromLocalFile(new Path("data/students.txt"), new Path("/a/students.txt"));
    }

    // Download a file, like get; copyToLocalFile keeps the source file in HDFS
    @Test
    public void getFile() throws IOException {
        fs.copyToLocalFile(new Path("/a/students.txt"), new Path("data/"));
    }

    // Read a file line by line
    @Test
    public void readFromHDFS() throws IOException {
        FSDataInputStream fsDataInputStream = fs.open(new Path("/a/students.txt"));
        BufferedReader br = new BufferedReader(new InputStreamReader(fsDataInputStream));
        String line;
        while ((line = br.readLine()) != null) {
            System.out.println(line);
        }
        // Close the streams when done (closing br also closes fsDataInputStream)
        br.close();
        fsDataInputStream.close();
    }

    // Write a file
    @Test
    public void writeFile() throws IOException {
        Path path = new Path("/newFile1.txt");
        FSDataOutputStream fsDataOutputStream;
        // Check whether the file already exists
        if (fs.exists(path)) {
            // Appending directly is problematic and rarely used;
            // HDFS is designed for write-once, read-many files
            fsDataOutputStream = fs.append(path);
        } else {
            fsDataOutputStream = fs.create(path);
        }
        BufferedWriter bw = new BufferedWriter(new OutputStreamWriter(fsDataOutputStream));
        bw.write("hello world");
        bw.newLine();
        bw.write("hadoop hadoop hive hbase");
        bw.newLine();
        bw.flush();
        bw.close();
        fsDataOutputStream.close();
    }

    // 3. Close the connection (JUnit runs this after each test method)
    @After
    public void closed() throws IOException {
        fs.close();
    }
}
```
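The `readFromHDFS` and `writeFile` tests close their streams by hand, so an exception halfway through leaves them open. Below is a minimal sketch of the same write-then-read round trip with try-with-resources. It assumes hadoop-client is on the classpath and uses the local file system (`file:///`) with a scratch file under `java.io.tmpdir` so it runs without a cluster; `HdfsReadWriteSketch` and `writeThenRead` are hypothetical names. It also swaps in `fs.create(path, true)`, which overwrites an existing file, in place of the `append` branch, and names the UTF-8 charset explicitly instead of relying on the platform default.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.*;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class HdfsReadWriteSketch {

    /** Writes lines to file (overwriting it), then reads the file back line by line. */
    static List<String> writeThenRead(String defaultFs, String file, List<String> lines) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", defaultFs);
        Path path = new Path(file);
        try (FileSystem fs = FileSystem.newInstance(conf)) {
            // create(path, true) overwrites an existing file instead of appending to it.
            // Closing the outermost writer also closes the FSDataOutputStream beneath it.
            try (BufferedWriter bw = new BufferedWriter(
                    new OutputStreamWriter(fs.create(path, true), StandardCharsets.UTF_8))) {
                for (String line : lines) {
                    bw.write(line);
                    bw.newLine();
                }
            } // close() flushes the buffer, so no separate flush() is needed

            List<String> result = new ArrayList<>();
            // Closing the BufferedReader also closes the FSDataInputStream from fs.open.
            try (BufferedReader br = new BufferedReader(
                    new InputStreamReader(fs.open(path), StandardCharsets.UTF_8))) {
                String line;
                while ((line = br.readLine()) != null) {
                    result.add(line);
                }
            }
            return result;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Assumption: local file system and a scratch file under java.io.tmpdir.
        String file = System.getProperty("java.io.tmpdir") + "/newFile1-" + System.nanoTime() + ".txt";
        List<String> read = writeThenRead("file:///", file,
                Arrays.asList("hello world", "hadoop hadoop hive hbase"));
        read.forEach(System.out::println);
    }
}
```

Overwriting with `create(path, true)` avoids the append path that the original comment flags as problematic; `append` is worth keeping only when data really must be added to an existing file.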

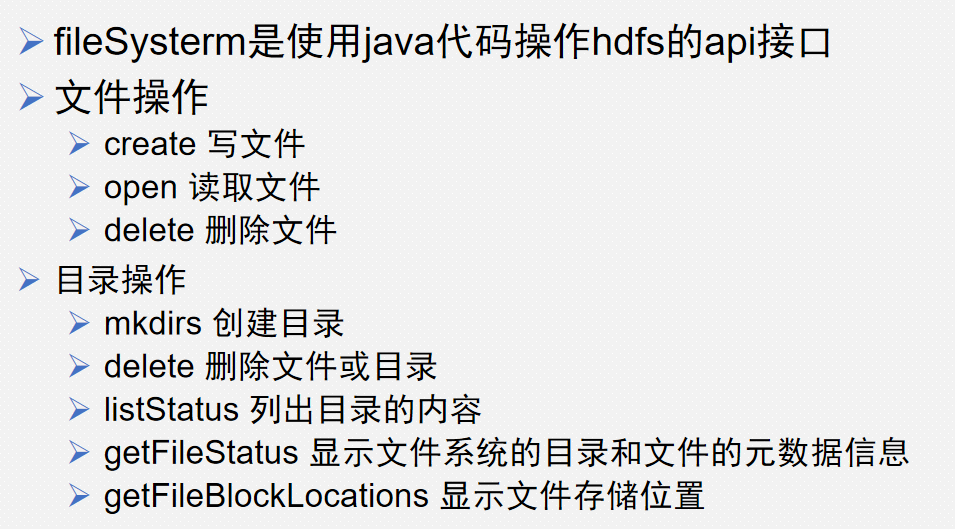

FileSystem


浙公网安备 33010602011771号
