k8s cluster installation, part 2

1. Environment setup

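| IP address | Hostname | Role |
|---|---|---|
| 192.168.23.146 | k8s-master | master and node (the master doubles as a node so that the cluster has three nodes) |
| 192.168.23.145 | docker02 | node |
| 192.168.23.144 | docker01 | node |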

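Environment: CentOS 7.4, with the firewall and SELinux disabled and the EPEL and CentOS Base yum repositories configured.

On every host, add name-resolution entries for all three machines to /etc/hosts.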
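Here is a minimal sketch of the /etc/hosts step. It assumes the three IP/hostname pairs from the table above. The helper name add_cluster_hosts is hypothetical. It takes the hosts file as an optional argument (default /etc/hosts) and appends a line only when that hostname is not already present, so running it twice is harmless.

```shell
#!/bin/bash
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any hostname that is already listed.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry name
    for entry in "192.168.23.146 k8s-master" \
                 "192.168.23.145 docker02" \
                 "192.168.23.144 docker01"; do
        name="${entry#* }"    # hostname = text after the first space
        # -w: whole-word match, -F: fixed string (no regex interpretation)
        if ! grep -qwF "$name" "$hosts_file"; then
            echo "$entry" >> "$hosts_file"
        fi
    done
}
# Usage (as root, on each of the three machines):
#   add_cluster_hosts
```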

2. Installing the master node

The master node runs etcd, kube-apiserver, kube-controller-manager and kube-scheduler.

a. Install the etcd service

[root@k8s-master ~]# yum install etcd -y

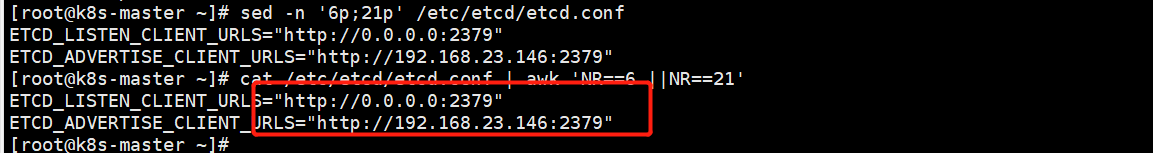

vim /etc/etcd/etcd.conf

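Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379" sets the client listen address. With 0.0.0.0, both local and remote clients can connect; the default only accepts local connections (127.0.0.1).

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://192.168.23.146:2379" is the client URL that etcd advertises. In a multi-member etcd cluster, set this on each member to that member's own IP.

ETCD_DATA_DIR="/var/lib/etcd/default.etcd" is the directory where etcd stores its data.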

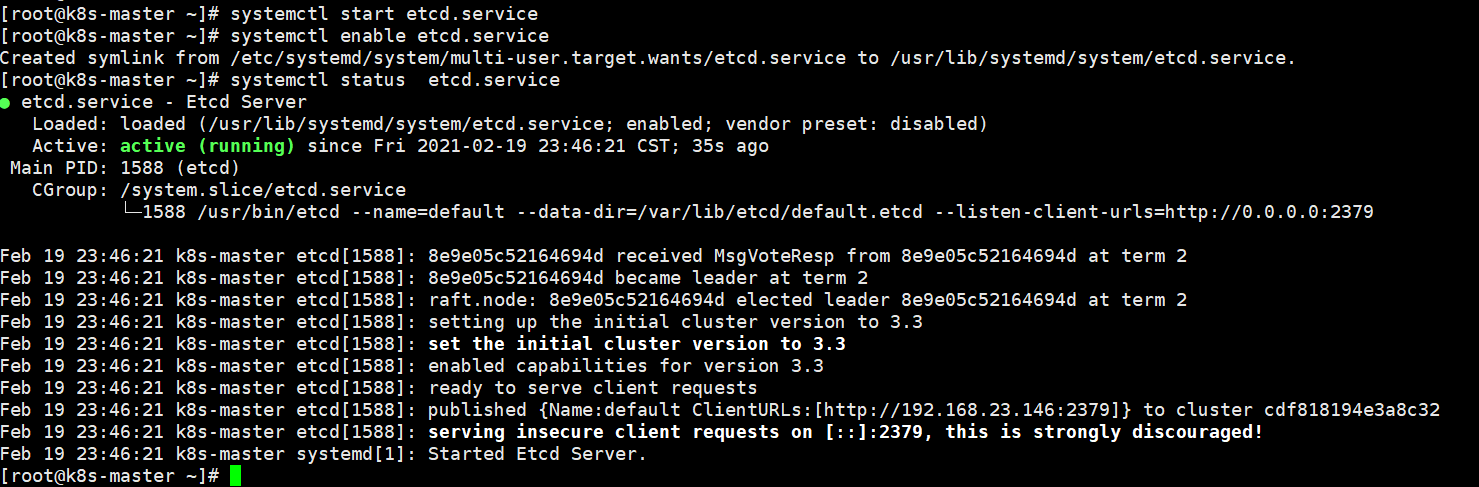
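If you would rather not edit etcd.conf by hand in vim, you can apply the two changes with sed. This is a sketch and not part of the etcd package. set_conf is a hypothetical helper that rewrites an existing KEY= line (commented out or not) or appends the line if the key is missing. It assumes the values contain neither `|` nor `&`, because sed would interpret those characters in the replacement.

```shell
#!/bin/bash
# Hypothetical helper: set KEY="VALUE" in a sysconfig-style file.
set_conf() {
    local file="$1" key="$2" value="$3"
    if grep -qE "^#?${key}=" "$file"; then
        # '|' is the sed delimiter because the values contain '/'
        sed -i -E "s|^#?${key}=.*|${key}=\"${value}\"|" "$file"
    else
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}
# Usage (as root on k8s-master):
#   set_conf /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
#   set_conf /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://192.168.23.146:2379"
```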

systemctl start etcd.service

systemctl enable etcd.service

[root@k8s-master ~]# sed -n '6p;21p' /etc/etcd/etcd.conf

[root@k8s-master ~]# cat /etc/etcd/etcd.conf | awk 'NR==6 ||NR==21'

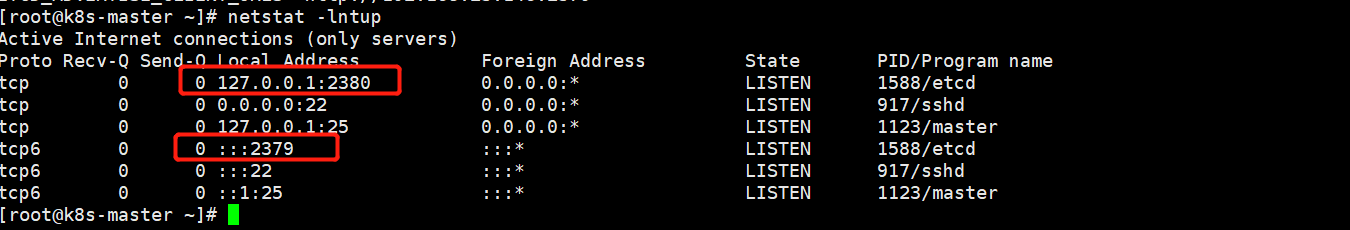

监听任意的2379端口,2379是对外提供服务的,k8s往etcd写内容就是这个端口;etcd集群之间数据同步就是用2380端口

Test:

[root@k8s-master ~]# etcdctl set testdir/testkey0 0

0

[root@k8s-master ~]# etcdctl get testdir/testkey0

0

[root@k8s-master ~]# etcdctl -C http://192.168.23.146:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://192.168.23.146:2379

cluster is healthy

[root@k8s-master ~]#
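You can also script the cluster-health check above. In this sketch, etcd_cluster_ok (a hypothetical name) reads the output of etcdctl cluster-health on stdin. It succeeds only if every member line says "is healthy" and the summary line reads exactly "cluster is healthy", which matches the output format shown above.

```shell
#!/bin/bash
# Hypothetical helper: exit 0 only for a fully healthy etcd cluster report.
etcd_cluster_ok() {
    awk '
        /^member / && !/is healthy/ { bad = 1 }   # unhealthy/unreachable member
        /^cluster is healthy$/      { ok = 1 }    # summary line
        END { exit !(ok && !bad) }
    '
}
# Usage:
#   etcdctl -C http://192.168.23.146:2379 cluster-health | etcd_cluster_ok && echo "etcd OK"
```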

Check the installed version:

[root@k8s-master ~]# etcd --version

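In the v2 API used by these etcdctl commands, etcd organizes keys hierarchically, much like directories in a file system: testdir/testkey0 above is the key testkey0 inside the directory testdir.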

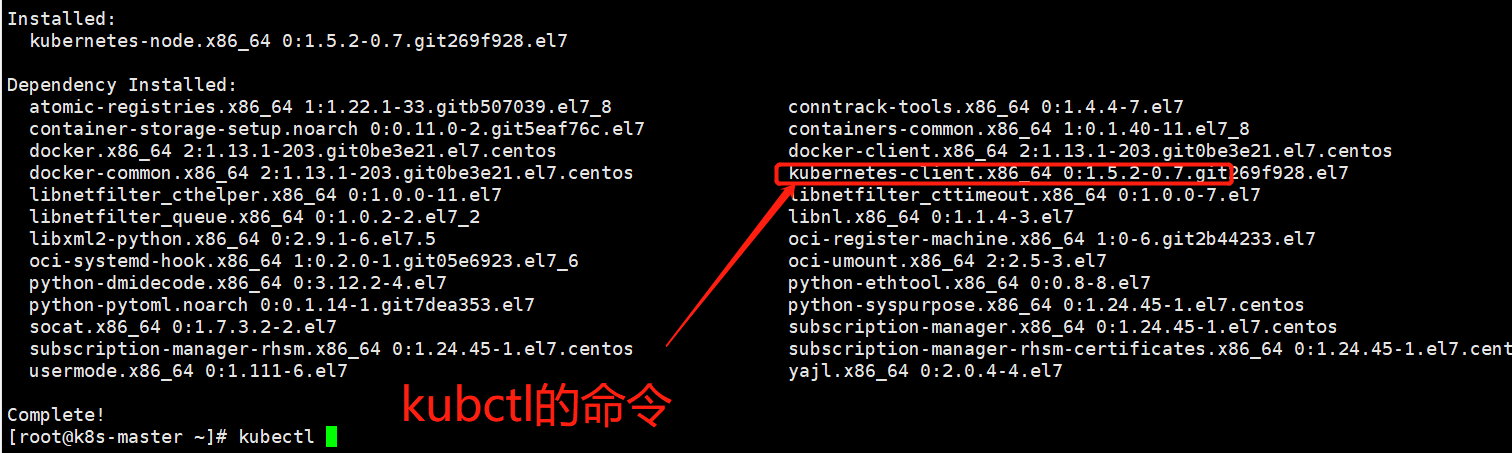

b. Install the Kubernetes master components: kube-apiserver, kube-controller-manager and kube-scheduler

[root@k8s-master ~]# yum install kubernetes-master.x86_64 -y

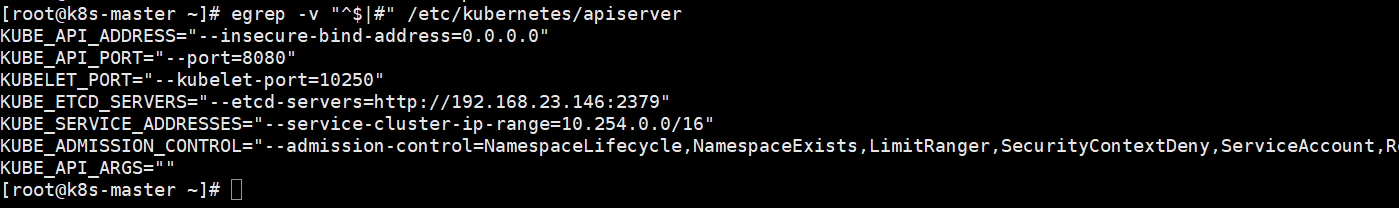

Configure the apiserver:

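vim /etc/kubernetes/apiserver

Line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0" lets Kubernetes clients connect both locally and remotely. To allow local access only, use 127.0.0.1.

Line 11: KUBE_API_PORT="--port=8080" is the apiserver port. The node that runs the apiserver is the master. This is the insecure (unauthenticated) port, so expose it only on a trusted network.

Line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.23.146:2379" tells the apiserver where etcd runs (the IP of the etcd host).

Line 23: KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Port minions listen on ("minion" is the old name for a node)

KUBELET_PORT="--kubelet-port=10250": after kubelet is installed and started on a node, it listens on 10250. The port is configurable, but this value must match the port the kubelets actually use.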

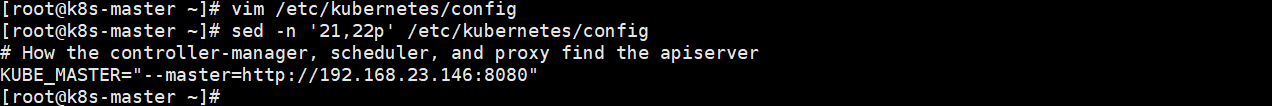
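For reference, here is a sketch of how the edited lines of /etc/kubernetes/apiserver should read after the changes above. Only these values come from this walkthrough. The file contains other settings and comments that stay unchanged. The file consists of plain VAR="value" assignments that the kube-apiserver unit loads as an environment file, so the fragment is also valid shell.

```shell
# /etc/kubernetes/apiserver on k8s-master (only the lines edited above)
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--port=8080"
KUBELET_PORT="--kubelet-port=10250"
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.23.146:2379"
# ServiceAccount is deliberately absent from this list
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
```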

Configure kube-controller-manager and kube-scheduler. Both services read the same config file:

vim /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

This setting is the apiserver address. Whichever host runs the apiserver is the master.

Line 22: KUBE_MASTER="--master=http://192.168.23.146:8080"

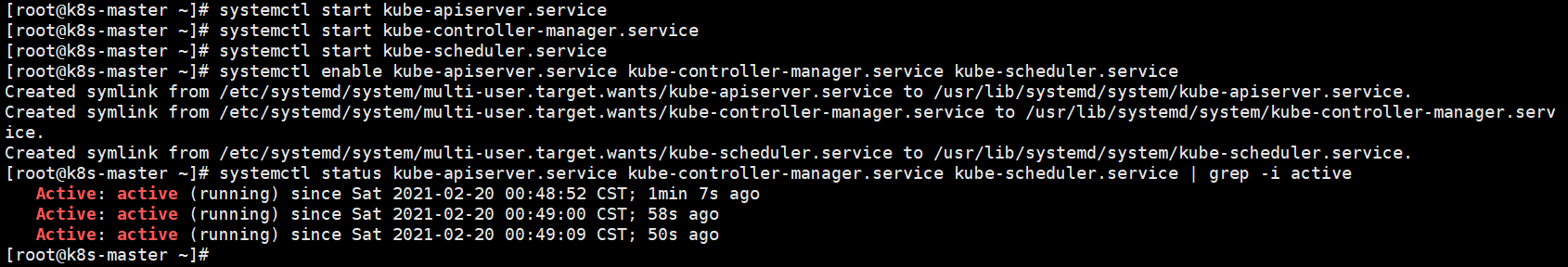

[root@k8s-master ~]# systemctl start kube-apiserver.service

[root@k8s-master ~]# systemctl start kube-controller-manager.service

[root@k8s-master ~]# systemctl start kube-scheduler.service

[root@k8s-master ~]# systemctl enable kube-apiserver.service kube-controller-manager.service kube-scheduler.service

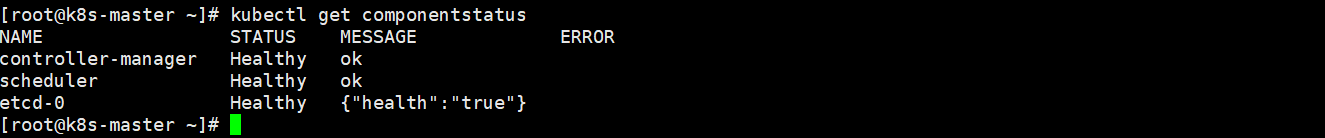

Check the status of the installed components:

[root@k8s-master ~]# kubectl get componentstatus
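kubectl get componentstatus prints one row per component (scheduler, controller-manager, etcd-0). The helper below, all_components_healthy (a hypothetical name), is a sketch for scripting this check. It reads that output on stdin and assumes the usual NAME/STATUS/MESSAGE/ERROR column layout, with STATUS in the second column.

```shell
#!/bin/bash
# Hypothetical helper: exit 0 only if every component row reports "Healthy".
all_components_healthy() {
    awk 'NR > 1 { rows++; if ($2 != "Healthy") bad = 1 }   # skip header row
         END { exit !(rows > 0 && !bad) }'
}
# Usage:
#   kubectl get componentstatus | all_components_healthy && echo "master OK"
```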

三、安装node节点

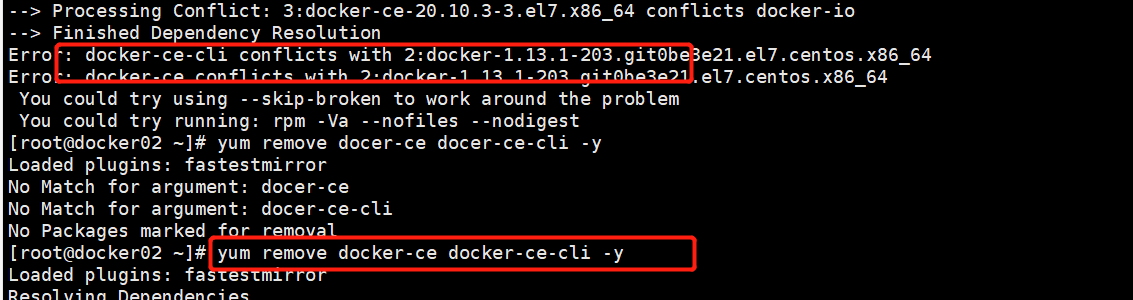

node节点需要安装kubelet和kube-proxy ,安装node节点的时候会自动帮你安装上docker;安装这个的时候不要yum单独安装docker,否则安装node会冲突

[root@k8s-master ~]# yum install kubernetes-node.x86_64 -y

[root@docker02 ~]# yum install kubernetes-node.x86_64 -y

[root@docker01 ~]# yum install kubernetes-node.x86_64 -y

Configure the node services. The master (192.168.23.146) also acts as a node, so configure it first:

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://192.168.23.146:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=192.168.23.146" is the listen address.

Line 8: KUBELET_PORT="--port=10250" must match the --kubelet-port set in the apiserver config.

Line 11: KUBELET_HOSTNAME="--hostname-override=k8s-master" is the name the node registers under, which tells the nodes apart. Either an IP or a hostname works; a hostname must resolve through /etc/hosts.

Line 14: KUBELET_API_SERVER="--api-servers=http://192.168.23.146:8080"

systemctl start kubelet.service

systemctl start kube-proxy.service

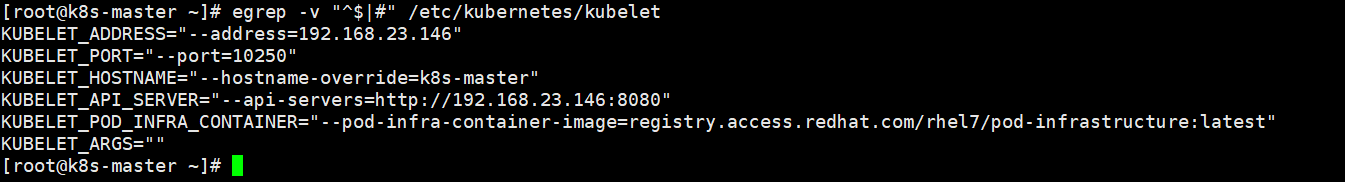
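Every node gets the same four kubelet settings, and only the IP and the name change, so you can script the edit. This is a minimal sketch. It assumes the stock /etc/kubernetes/kubelet from the CentOS package, where each setting sits on its own KEY="..." line and KUBELET_PORT may be commented out as `# KUBELET_PORT=...`. configure_kubelet is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: fill in the kubelet settings for one node.
# Args: kubelet config file, node IP, node name, apiserver URL.
configure_kubelet() {
    local file="$1" node_ip="$2" node_name="$3" master_url="$4"
    # '#? ?' also matches lines commented out as "#KEY=" or "# KEY=";
    # '|' is the sed delimiter because the URL contains '/'
    sed -i -E \
        -e "s|^#? ?KUBELET_ADDRESS=.*|KUBELET_ADDRESS=\"--address=${node_ip}\"|" \
        -e "s|^#? ?KUBELET_PORT=.*|KUBELET_PORT=\"--port=10250\"|" \
        -e "s|^#? ?KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${node_name}\"|" \
        -e "s|^#? ?KUBELET_API_SERVER=.*|KUBELET_API_SERVER=\"--api-servers=${master_url}\"|" \
        "$file"
}
# Usage, e.g. on docker01 (as root):
#   configure_kubelet /etc/kubernetes/kubelet 192.168.23.144 docker01 http://192.168.23.146:8080
```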

Node configuration on the master:

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://192.168.23.146:8080"

[root@k8s-master ~]# egrep -v "^#|^$" /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=192.168.23.146"

KUBELET_HOSTNAME="--hostname-override=k8s-master"

KUBELET_API_SERVER="--api-servers=http://192.168.23.146:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

[root@k8s-master ~]# systemctl start kubelet.service

[root@k8s-master ~]# systemctl start kube-proxy.service

[root@k8s-master ~]# systemctl enable kube-proxy.service kubelet.service

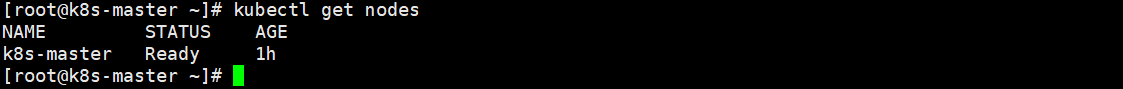

[root@k8s-master ~]# kubectl get nodes

Deploying the node on docker01 (192.168.23.144):

[root@docker01 ~]# vim /etc/kubernetes/config

[root@docker01 ~]# vim /etc/kubernetes/kubelet

[root@docker01 ~]# egrep -v "^#|^$" /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=192.168.23.144"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=docker01"

KUBELET_API_SERVER="--api-servers=http://192.168.23.146:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

[root@docker01 ~]# egrep -v "^#|^$" /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.23.146:8080"

[root@docker01 ~]#

[root@docker01 ~]# systemctl start kubelet.service

[root@docker01 ~]# systemctl start kube-proxy.service

[root@docker01 ~]# systemctl enable kube-proxy.service kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@docker01 ~]# systemctl status kube-proxy.service kubelet.service | grep -i active

Active: active (running) since Mon 2021-02-22 16:39:42 CST; 22s ago

Active: active (running) since Mon 2021-02-22 16:39:38 CST; 25s ago

[root@docker01 ~]#

Deploying the node on docker02 (192.168.23.145):

[root@docker02 ~]# vim /etc/kubernetes/config

[root@docker02 ~]# vim /etc/kubernetes/kubelet

[root@docker02 ~]# systemctl start kubelet.service

[root@docker02 ~]# systemctl start kube-proxy.service

[root@docker02 ~]# systemctl enable kube-proxy.service kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@docker02 ~]# systemctl status kube-proxy.service kubelet.service | grep -i active

Active: active (running) since Mon 2021-02-22 16:45:06 CST; 16s ago

Active: active (running) since Mon 2021-02-22 16:45:02 CST; 20s ago

[root@docker02 ~]# egrep -v "^#|^$" /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.23.146:8080"

[root@docker02 ~]# egrep -v "^#|^$" /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=192.168.23.145"

KUBELET_HOSTNAME="--hostname-override=docker02"

KUBELET_API_SERVER="--api-servers=http://192.168.23.146:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

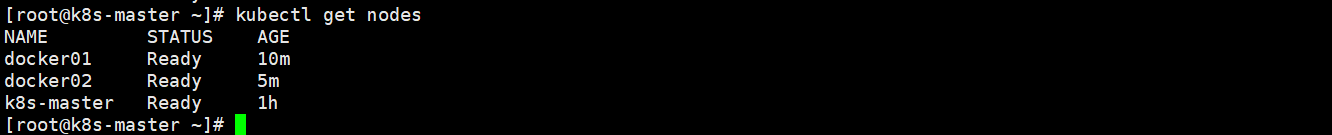

On the master, list the nodes you just installed:

[root@k8s-master ~]# kubectl get nodes
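The same check can be scripted. In this sketch, nodes_ready (a hypothetical name) counts the rows of kubectl get nodes output whose STATUS is Ready and compares the count with an expected number: 3 in this setup (k8s-master, docker01, docker02). It assumes STATUS is the second column, as in the NAME/STATUS/AGE layout of this kubectl version.

```shell
#!/bin/bash
# Hypothetical helper: exit 0 only if exactly $1 nodes are Ready.
nodes_ready() {
    local expected="$1"
    awk -v want="$expected" '
        NR > 1 && $2 == "Ready" { n++ }   # skip header, count Ready rows
        END { exit !(n == want) }
    '
}
# Usage:
#   kubectl get nodes | nodes_ready 3 && echo "all 3 nodes Ready"
```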

To remove a node from the cluster, run the following (replace node-nameA with the node's name):

kubectl delete node node-nameA

Configure the flannel network plugin on all nodes so that containers on different hosts can communicate with each other:

[root@docker02 ~]# yum install flannel -y

[root@docker01 ~]# yum install flannel -y

[root@k8s-master ~]# yum install flannel -y

In /etc/sysconfig/flanneld, the setting under the comment "# etcd url location. Point this to the server where etcd runs" must point flannel at the etcd address:

[root@k8s-master ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.23.146:2379#g' /etc/sysconfig/flanneld

[root@docker01 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.23.146:2379#g' /etc/sysconfig/flanneld

[root@docker02 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.23.146:2379#g' /etc/sysconfig/flanneld

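Next, create a key in etcd. flannel reads its network configuration from etcd, and it also stores the subnets it allocates for container IP addresses there. The key prefix is set in /etc/sysconfig/flanneld:

FLANNEL_ETCD_PREFIX="/atomic.io/network" is the default prefix. Set the key/value pair on the master, because etcd is installed there: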

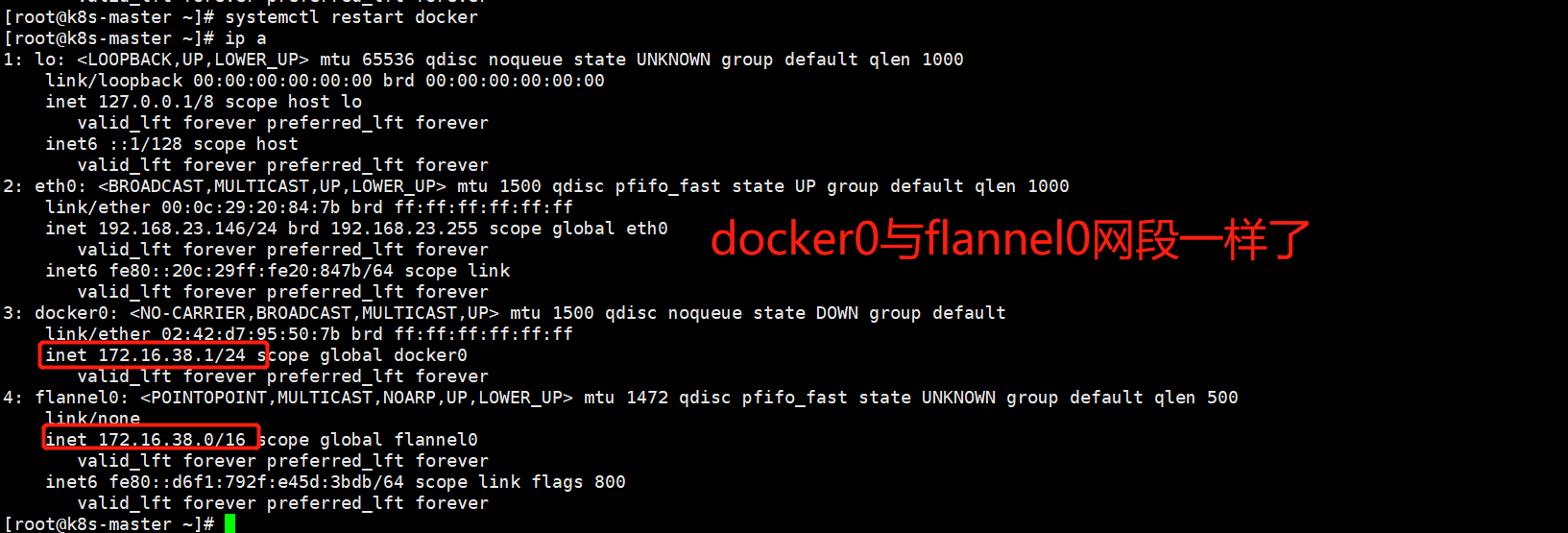

[root@k8s-master ~]# etcdctl set /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'

{ "Network": "172.16.0.0/16" }

[root@k8s-master ~]# systemctl start flanneld.service

[root@k8s-master ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

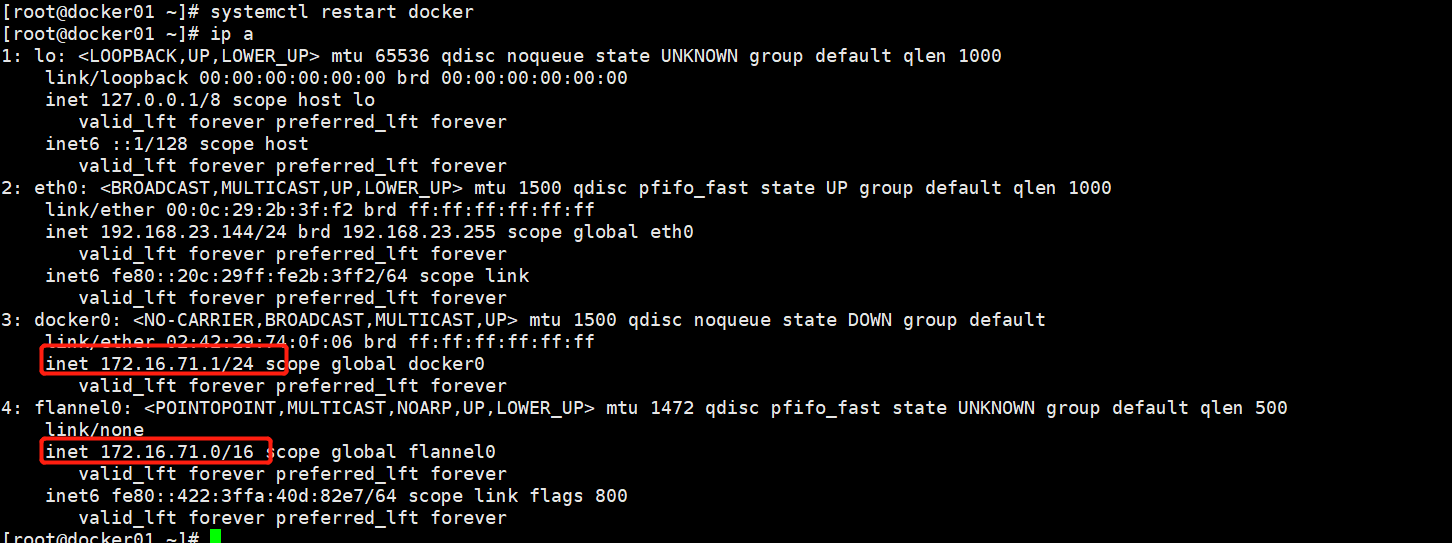

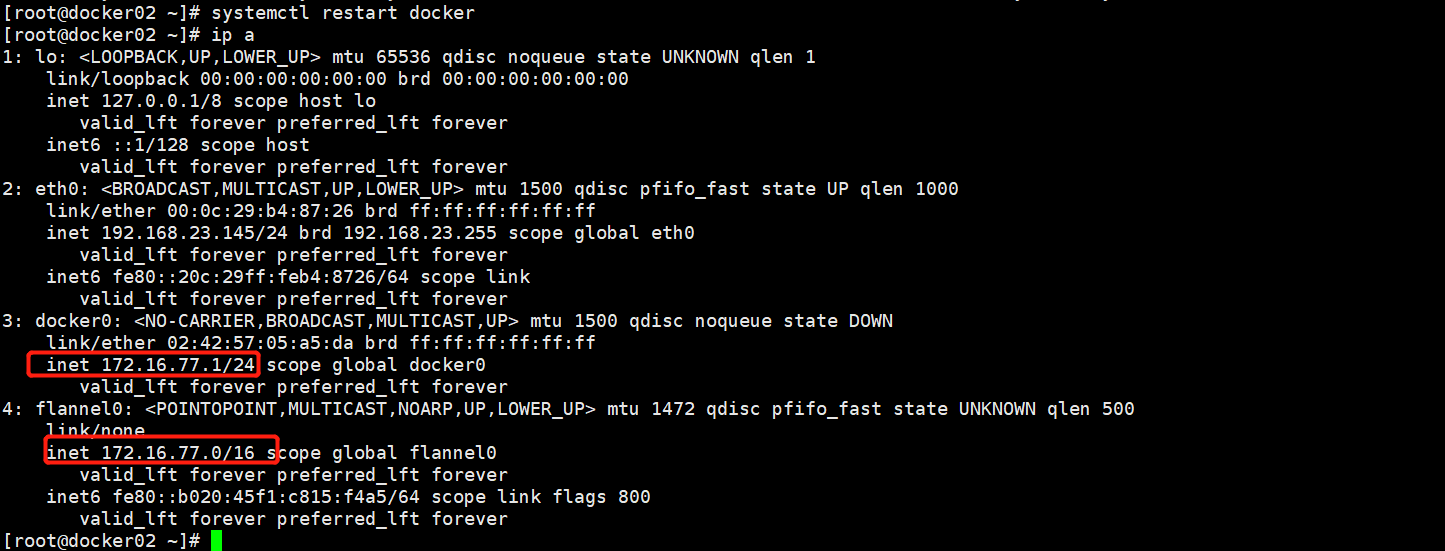

flanneld creates a flannel0 interface. Restart docker so that it picks up the flannel subnet:

[root@k8s-master ~]# systemctl restart docker

On the other nodes:

[root@docker01 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.23.146:2379#g' /etc/sysconfig/flanneld

[root@docker01 ~]# systemctl start flanneld.service

[root@docker01 ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@docker01 ~]# systemctl restart docker

[root@docker02 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.23.146:2379#g' /etc/sysconfig/flanneld

[root@docker02 ~]# systemctl start flanneld.service

[root@docker02 ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@docker02 ~]# systemctl restart docker

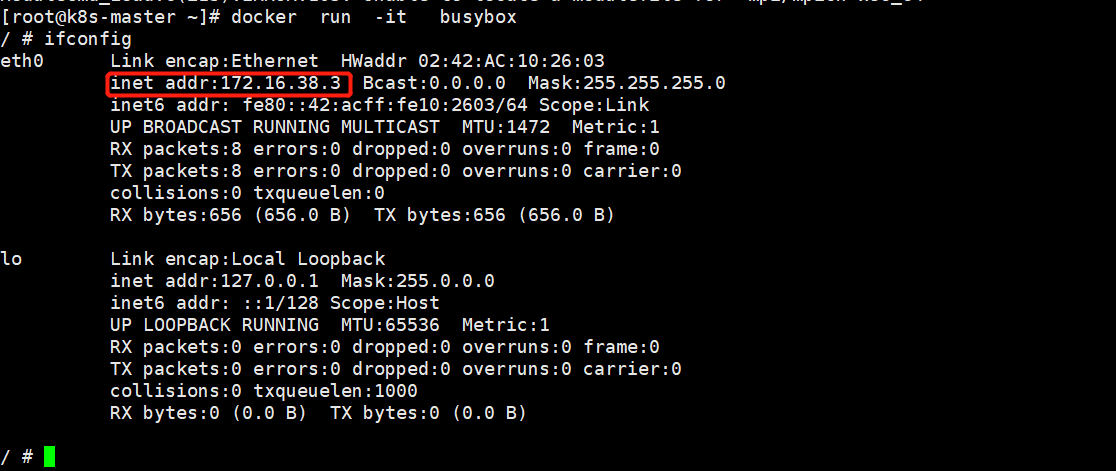

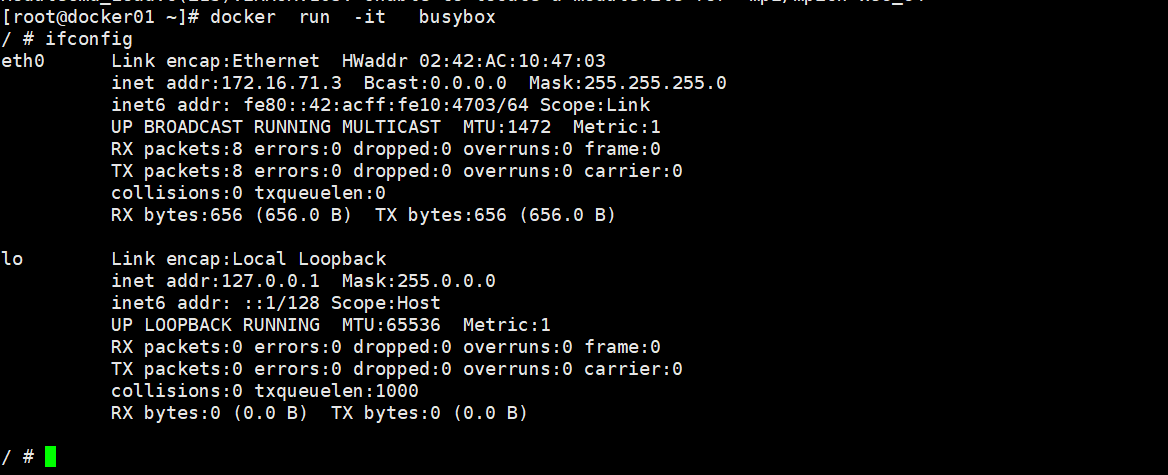

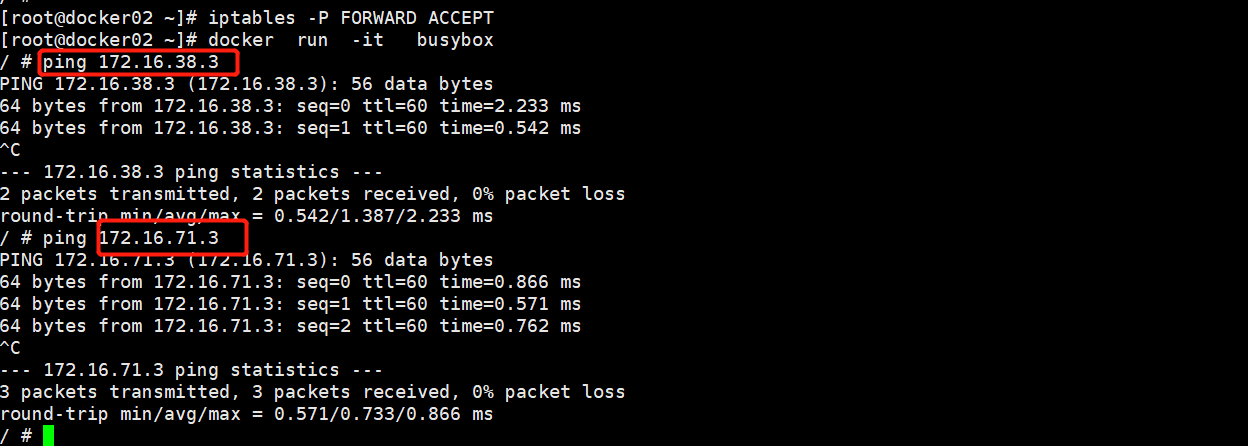

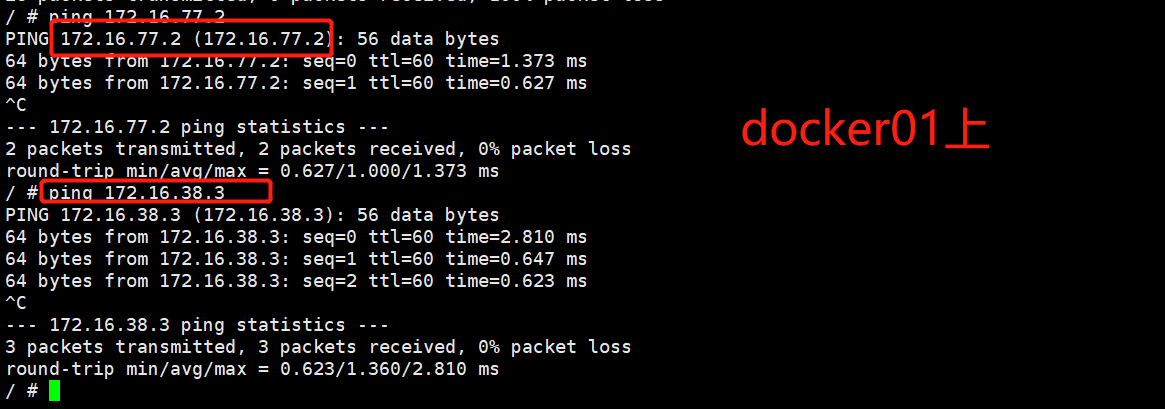

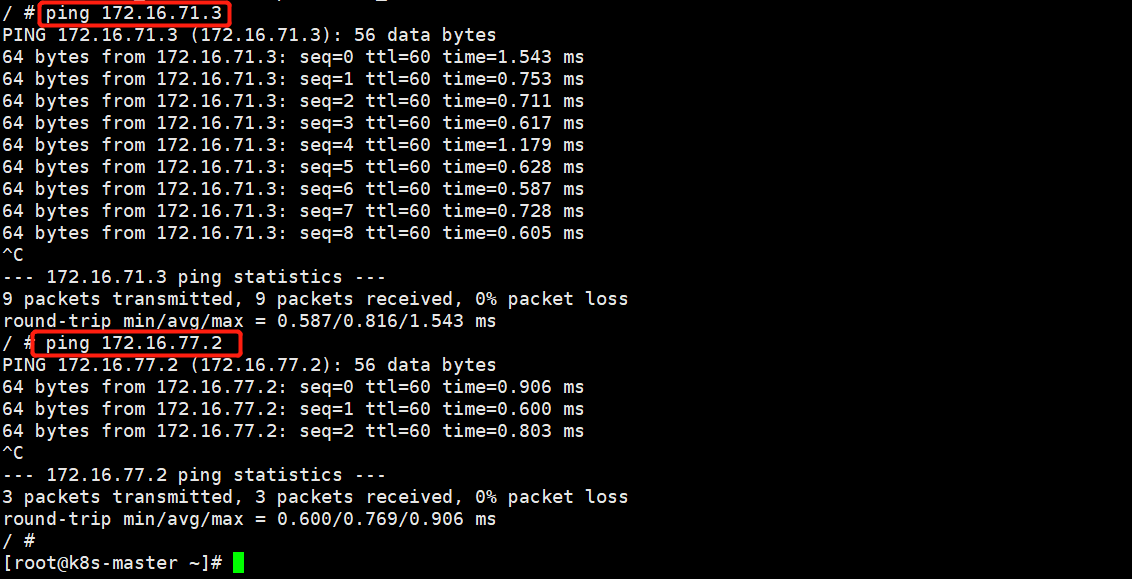

Docker on each of the three nodes now uses a different subnet: 172.16.38.x, 172.16.71.x and 172.16.77.x.
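To see which subnet flanneld leased on a node without reading the interface list, you can read flannel's subnet file. This sketch assumes flanneld writes its lease as FLANNEL_SUBNET=... to /run/flannel/subnet.env, the default --subnet-file path. flannel_subnet is a hypothetical helper. If your path differs, pass it as the first argument.

```shell
#!/bin/bash
# Hypothetical helper: print the subnet flanneld leased for this host.
flannel_subnet() {
    local env_file="${1:-/run/flannel/subnet.env}"
    # Source in a subshell so the FLANNEL_* variables do not leak out
    ( . "$env_file" && echo "$FLANNEL_SUBNET" )
}
# Usage (on each node):
#   flannel_subnet
```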

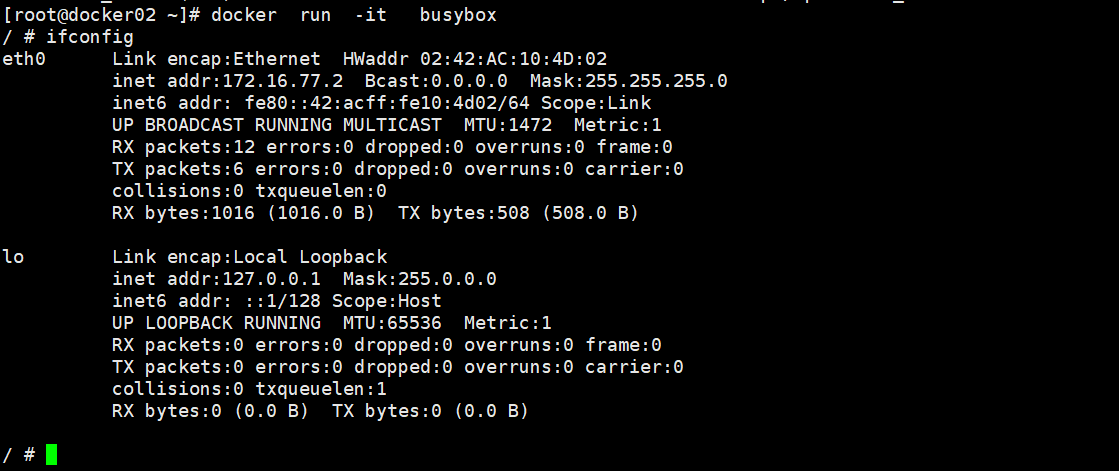

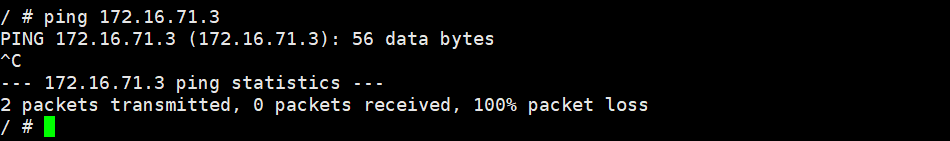

Start a busybox container on each of the three nodes and check its IP address:

docker run -it busybox

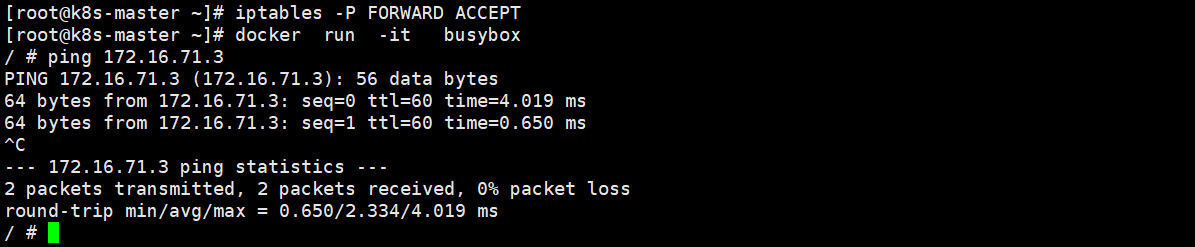

The containers cannot ping each other yet. Apply the following change on all three nodes:

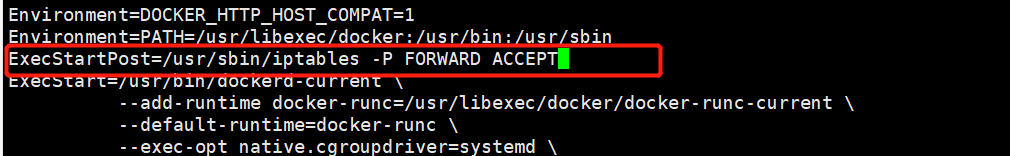

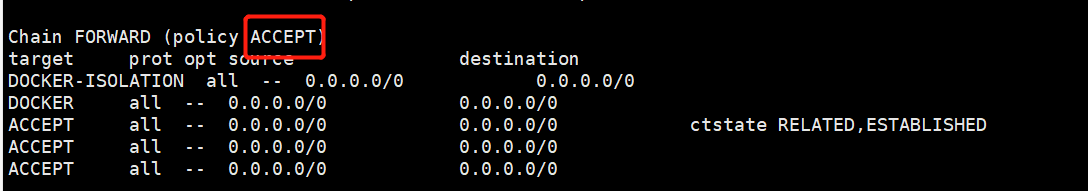

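Starting with version 1.13, Docker changed its default firewall rules: once docker starts, it sets the policy of the FORWARD chain in the iptables filter table to DROP. This prevents pods on different nodes of a Kubernetes cluster from communicating. Run the following command on every Docker node. It takes effect immediately, but it is temporary and is lost when docker restarts or the host reboots:

iptables -P FORWARD ACCEPT

To make the change persistent, add the rule to docker's systemd unit file, which is loaded when the service starts.

The ExecStartPost= directive specifies a command to run after the service has started.

The "Loaded:" line in the output of systemctl status docker shows the path of the unit file; alternatively, systemctl cat docker prints the unit file.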

vim /usr/lib/systemd/system/docker.service

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload

systemctl restart docker
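Here is a variation on the edit above, offered as a sketch. Instead of modifying /usr/lib/systemd/system/docker.service, which belongs to the docker package and may be replaced on upgrade, you can put the same ExecStartPost= line in a systemd drop-in under /etc/systemd/system/docker.service.d/. The file name forward-accept.conf and the function name write_docker_dropin are hypothetical choices.

```shell
#!/bin/bash
# Hypothetical helper: write a docker.service drop-in that re-opens FORWARD.
write_docker_dropin() {
    local dir="${1:-/etc/systemd/system/docker.service.d}"
    mkdir -p "$dir"
    cat > "$dir/forward-accept.conf" <<'EOF'
[Service]
# Docker >= 1.13 sets the FORWARD policy to DROP; reset it after docker starts
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
# Usage (as root, on every node):
#   write_docker_dropin
#   systemctl daemon-reload && systemctl restart docker
#   iptables -S FORWARD | head -1    # should print: -P FORWARD ACCEPT
```

A drop-in is merged into the packaged unit, so the change survives docker package updates.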

Zhejiang Public Network Security Filing No. 33010602011771
