搭建GTID+MHA+Atlas架构多节点

一、IP规划

|

centos74-ren-Node-002 |

192.168.0.248 |

Slave2 |

|

centos74-ren-Node-001 |

192.168.0.2 |

slave1(备用主) |

|

centos74-ren-M |

192.168.0.204 |

主数据库(master) |

|

centos74-ren-MH |

192.168.0.135 |

MHA管理、Atlas |

前提是主数据库、slave1、slave2三台机器mysql均已安装(135也要装,后期部署Atlas测试要用,安装完可以不开启),安装脚本如下:

#! /bin/bash id mysql [ $? -eq 0 ] || useradd -M -s /sbin/nologin mysql yum -y install autoconf libaio* &> /dev/null [ -d /appliaction ] || mkdir -p /application [ -d /root/tools ] || mkdir -p /root/tools if [ -d /root/tools ] ;then wget -P /root/tools https://cdn.mysql.com/archives/mysql-5.6/mysql-5.6.32-linux-glibc2.5-x86_64.tar.gz else mkdir -P /root/tools exit 1 fi ###Mysql Config#### Mysql_Config() { cd /root/tools && tar xf mysql-5.6.32-linux-glibc2.5-x86_64.tar.gz mv /root/tools/mysql-5.6.32-linux-glibc2.5-x86_64 /application/mysql-5.6.32 ln -s /application/mysql-5.6.32 /application/mysql cd /application/mysql chown -R mysql.mysql /application/mysql/data/ /application/mysql/scripts/mysql_install_db --basedir=/application/mysql --datadir=/application/mysql/data/ --user=mysql cp -rf /application/mysql/support-files/mysql.server /etc/init.d/mysqld sed -ri "s#/usr/local#/application#g" /etc/init.d/mysqld /application/mysql/bin/mysqld_safe cp -rf /application/mysql/support-files/my-default.cnf /etc/my.cnf /etc/init.d/mysqld start /application/mysql/bin/mysqladmin -uroot password "Rcs551552?" } Mysql_Config ##导入环境变量 echo 'PATH=/application/mysql/bin/:$PATH' >>/etc/profile source /etc/profile /etc/init.d/mysqld restart

登录:mysql -uroot -pRcs551552?

1、实现1主2从GTID主从复制环境

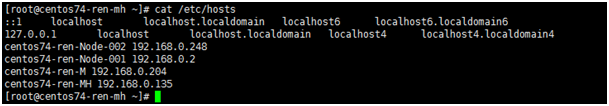

1)主机配置,四台机器均需部署

将ip和域名配置到/etc/hosts文件中

centos74-ren-Node-002 192.168.0.248

centos74-ren-Node-001 192.168.0.2 #备用master

centos74-ren-M 192.168.0.204

centos74-ren-MH 192.168.0.135

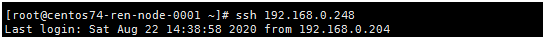

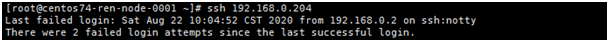

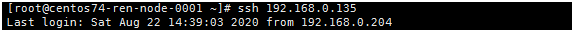

2)实现四台机器的免密登录

用ssh-keygen实现四台主机之间相互免密钥登录

以在192.168.0.2(slave1)部署为例,执行sh ssh.sh

先安装依赖包yum -y install sshpass

#! /bin/bash

[ -s /root/.ssh/ ] && rm -rf /root/.ssh/id_*

ssh-keygen -t dsa -f /root/.ssh/id_dsa -N ""

for ip in 248 204 135

do

sshpass -pRcs551552? ssh-copy-id -i /root/.ssh/id_dsa.pub "-o strictHostKeyChecking=no" 192.168.0.$ip

done

登录验证:ssh IP 不需要输入密码

2、建立master,slave1,slave2之间主从复制

1)master(192.168.0.204)主节点部署

cat >> /etc/my.cnf << EOF [mysqld] basedir=/application/mysql datadir=/application/mysql/data/ socket=/tmp/mysql.sock log-error=/var/log/mysql.log log-bin=/application/mysql/data/mysql-bin binlog_format=row skip-name-resolve server-id=3306 gtid_mode=on enforce-gtid-consistency=on log-slave-updates=1 EOF

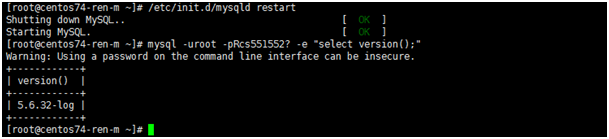

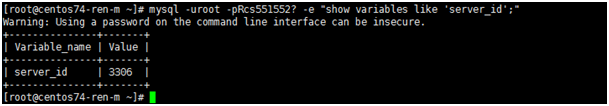

重启mysql服务,并且查看mysql版本和server id

mysql -uroot -pRcs551552? -e "select version();"

[root@centos74-ren-m ~]# mysql -uroot -pRcs551552? -e "show variables like 'server_id';"

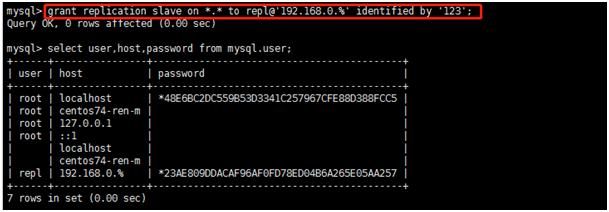

master节点进行账号授权:

grant replication slave on *.* to repl@'192.168.0.%' identified by '123';

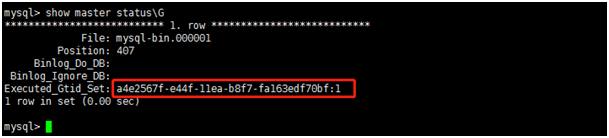

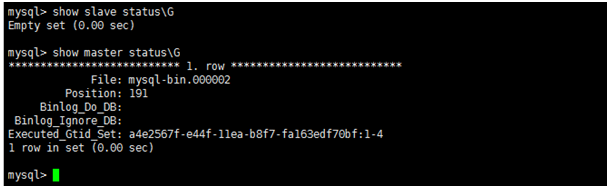

show master status\G --查看主库状态

2)slave1(192.168.0.2)从节点部署

cat >> /etc/my.cnf << EOF [mysqld] basedir=/application/mysql datadir=/application/mysql/data/ socket=/tmp/mysql.sock log-error=/var/log/mysql.log log-bin=/application/mysql/data/mysql-bin binlog_format=row skip-name-resolve server-id=3307 gtid_mode=on enforce-gtid-consistency=on log-slave-updates=1 EOF

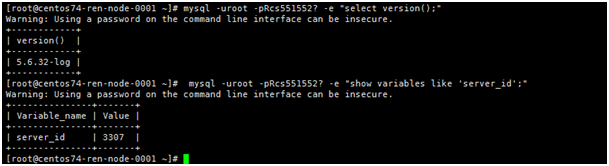

重启mysql服务,并且查看mysql版本和server id

mysql -uroot -pRcs551552? -e "select version();"

mysql -uroot -pRcs551552? -e "show variables like 'server_id';"

在主库进行账号授权之后,从库需要连接主库:

CHANGE MASTER TO MASTER_HOST='192.168.0.204',MASTER_USER='repl',MASTER_PASSWORD='123',MASTER_PORT=3306,MASTER_AUTO_POSITION=1;

mysql> start slave; --开启slave

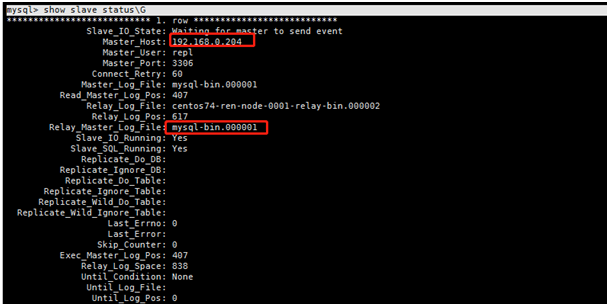

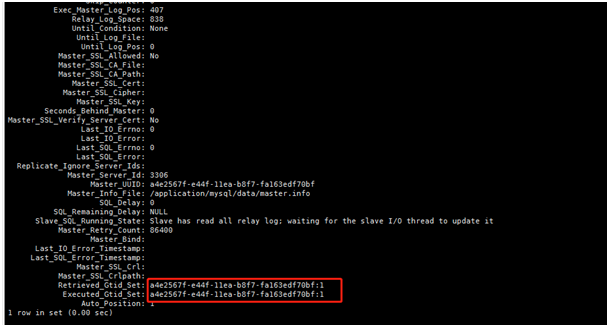

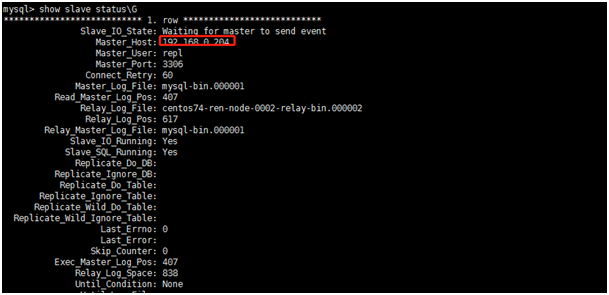

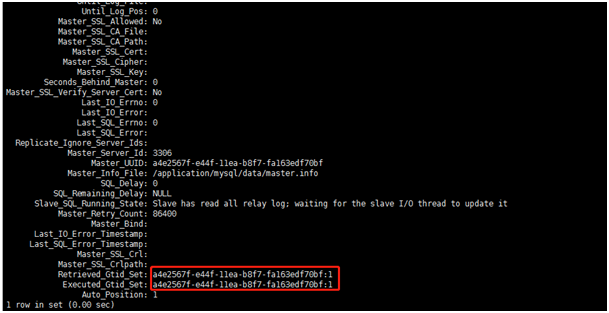

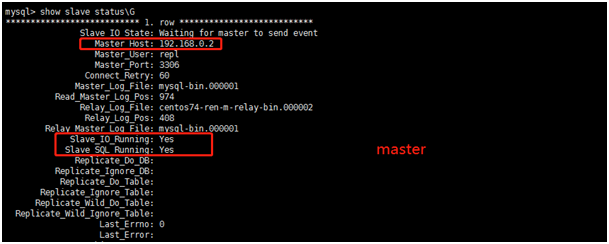

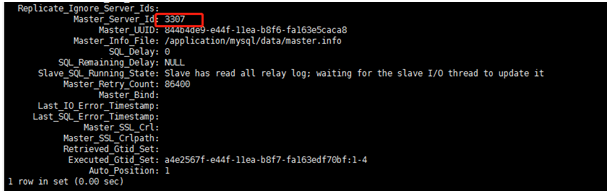

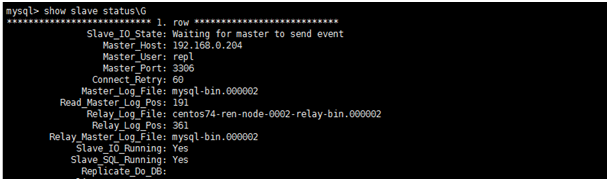

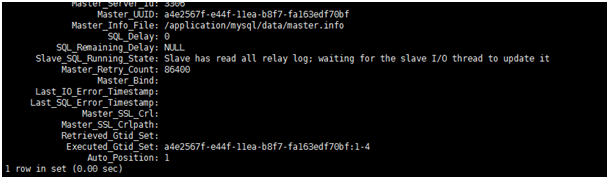

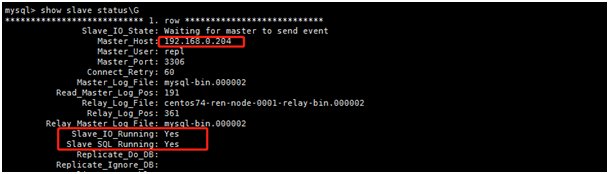

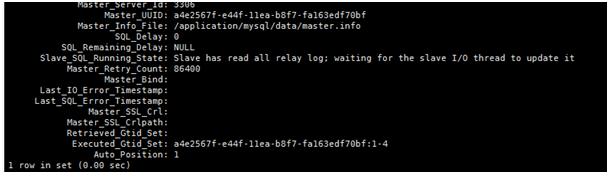

mysql> show slave status\G 查看slave状态

3)slave2(192.168.0.248)从节点部署

cat >> /etc/my.cnf << EOF [mysqld] basedir=/application/mysql datadir=/application/mysql/data/ socket=/tmp/mysql.sock log-error=/var/log/mysql.log log-bin=/application/mysql/data/mysql-bin binlog_format=row skip-name-resolve server-id=3308 gtid_mode=on enforce-gtid-consistency=on log-slave-updates=1 EOF

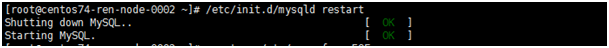

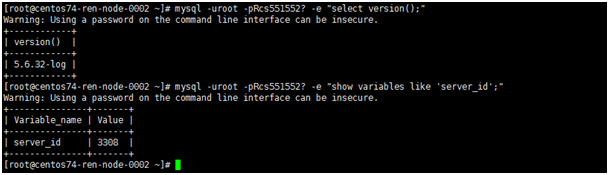

重启mysql服务,并且查看mysql版本和server id

mysql -uroot -pRcs551552? -e "select version();"

mysql -uroot -pRcs551552? -e "show variables like 'server_id';"

在主库进行账号授权之后,从库需要连接主库:

CHANGE MASTER TO MASTER_HOST='192.168.0.204',MASTER_USER='repl',MASTER_PASSWORD='123',MASTER_PORT=3306,MASTER_AUTO_POSITION=1;

mysql> start slave; --开启slave

mysql> show slave status\G 查看slave状态

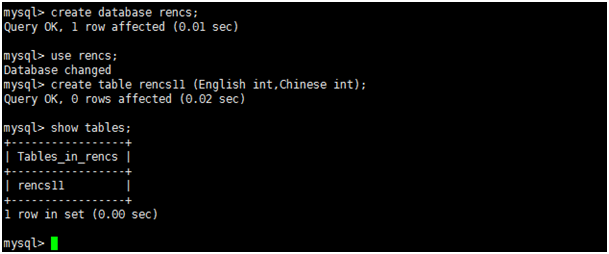

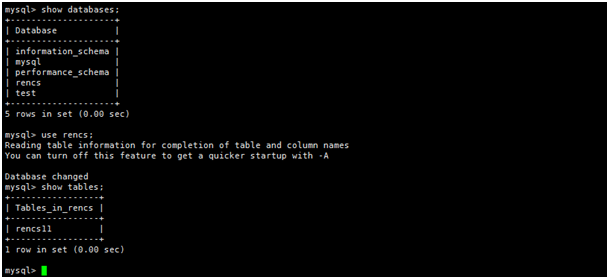

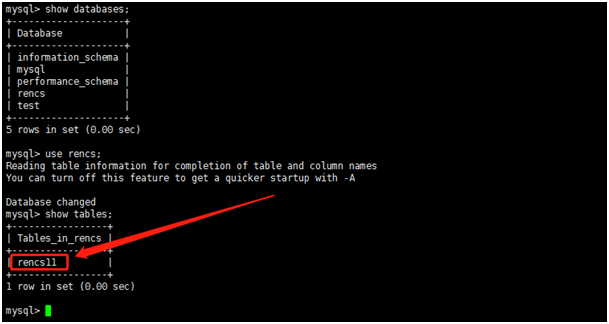

4)进行主从复制验证

先 在master节点创建一个数据库,且创建个表,再插入数据,看slave1、slave2是不是会同步进去

master:

slave1:

slave2:

验证数据都已经复制过来了

2、构建MHA高可用环境

所有节点配置域名解析,实现主机名hostname登录,配置项都是一样,加不加都行,是为了scp拷贝用域名而已

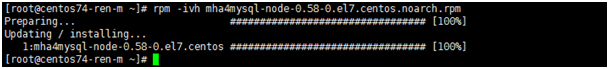

四台机器都安装MHAmha4mysql-node,MH管理节点安装mha4mysql-manager和perl环境包

wget https://qiniu.wsfnk.com/mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

wget https://qiniu.wsfnk.com/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

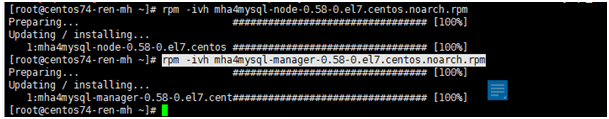

1)MHA管理节点(192.168.0.135)安装

Manage安装依赖包:yum -y install perl-Config-Tiny perl-Log-Dispatch cpan perl-Parallel-ForkManager perl-Time-HiRes

Node安装依赖包:yum -y install perl-DBD-MySQL

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

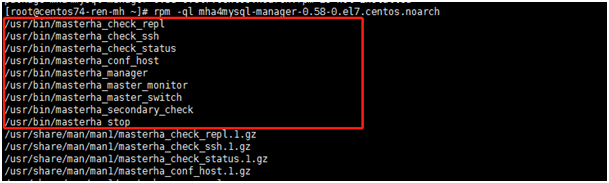

安装位置:

rpm -ql mha4mysql-node-0.58-0.el7.centos.noarch

rpm -ql mha4mysql-manager-0.58-0.el7.centos.noarch

管理机manager上配置MHA文件:

mkdir -p /etc/mha #创建配置文件目录

mkdir -p /var/log/mha/app1 #创建数据文件目录

/etc/mha/app1.cnf --不能配管理节点的IP

cat > /etc/mha/app1.cnf << 'EOF' [server default] manager_log=/var/log/mha/app1/manager manager_workdir=/var/log/mha/app1 master_binlog_dir=/data/binlog user=mha password=mha ping_interval=2 repl_user=repl repl_password=123 ssh_user=root [server1] hostname=192.168.0.204 port=3306 [server2] hostname=192.168.0.2 port=3306 [server3] hostname=192.168.0.248 port=3306 EOF

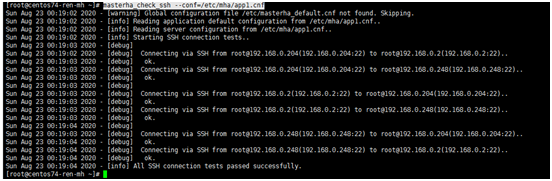

验证可信之前先确保四台机器之间可以免密登录(ssh ip不需要密码能登录)

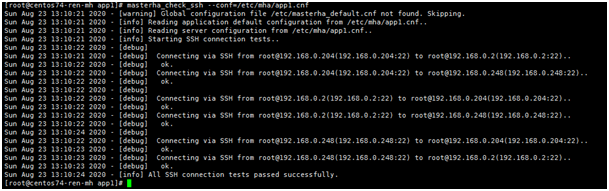

检查互信:

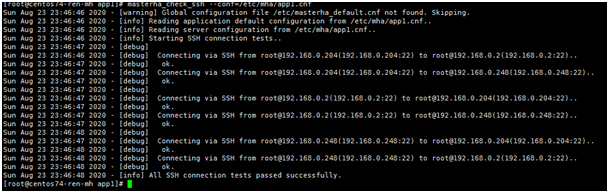

masterha_check_ssh --conf=/etc/mha/app1.cnf

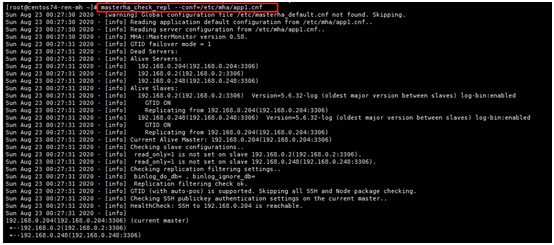

检测主从:

masterha_check_repl工具验证mysql复制是否成功

masterha_check_repl --conf=/etc/mha/app1.cnf

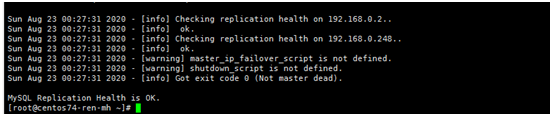

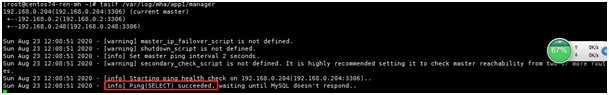

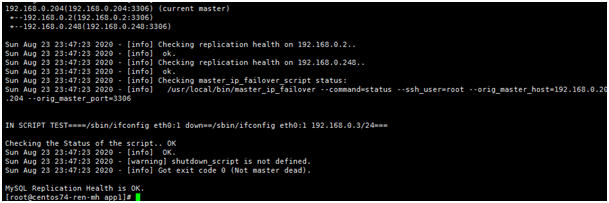

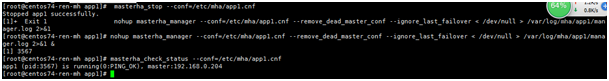

开启MHA manager监控,并监控日志文件

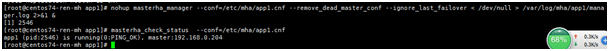

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

--remove_dead_master_conf:当主从切换后,老的主库的IP将会从配置文件中移除

--ignore_last_failover:

tailf /var/log/mha/app1/manager:

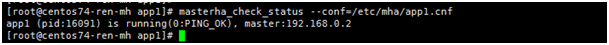

检查MHA Manager状态:

masterha_check_status --conf=/etc/mha/app1.cnf

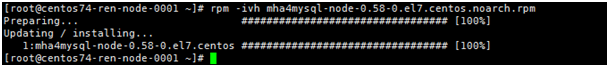

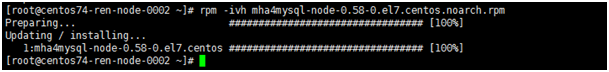

2)master、slave1、slave2节点安装

N安装依赖包:yum -y install perl-DBD-MySQL

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

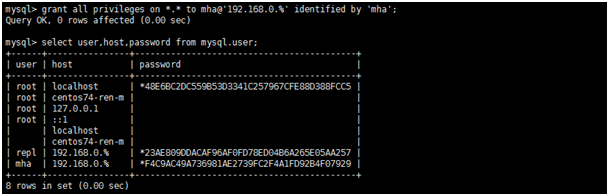

3)master主库中创建mha用户

mysql> grant all privileges on *.* to mha@'192.168.0.%' identified by 'mha';

flush privileges;

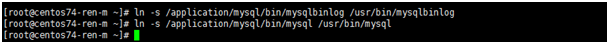

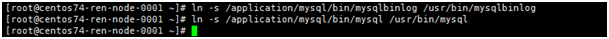

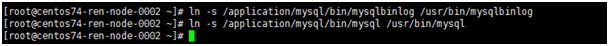

在主从数据库中都要配置软链接

ln -s /application/mysql/bin/mysqlbinlog /usr/bin/mysqlbinlog

ln -s /application/mysql/bin/mysql /usr/bin/mysql

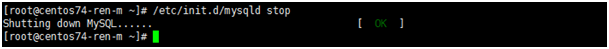

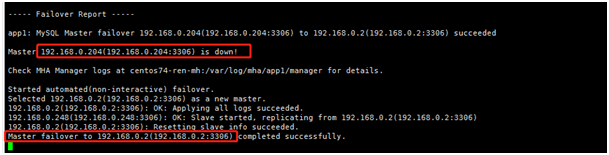

4)模拟主库损坏,自动切换

master节点mysqld停止,在mha节点查看manager日志,发现已自动完成主切换成slave1

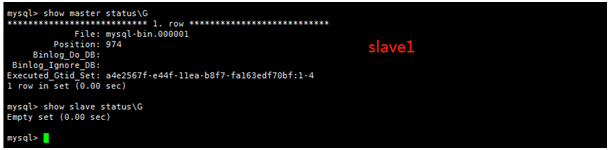

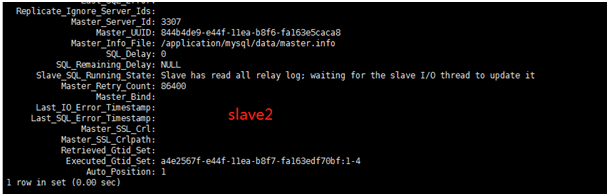

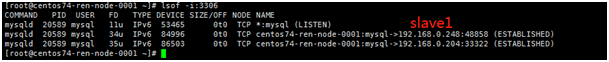

我们查看slave1已成主节点,slave1的从库状态已经被取消了,slaver2同步的数据库的IP也变成slave1

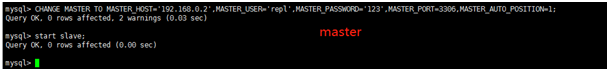

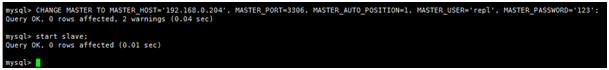

把master节点(192.168.0.204)加入到新的主从复制,新的主是slave1(192.168.0.2)

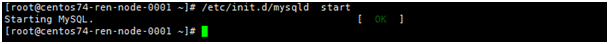

先恢复0.204的mysql

加入新的主从复制:

CHANGE MASTER TO MASTER_HOST='192.168.0.2',MASTER_USER='repl',MASTER_PASSWORD='123',MASTER_PORT=3306,MASTER_AUTO_POSITION=1;

start slave;

这样就构成新的一主(slave1:192.168.0.2)两从(master:192.168.0.204和slave2:192.168.0.248)

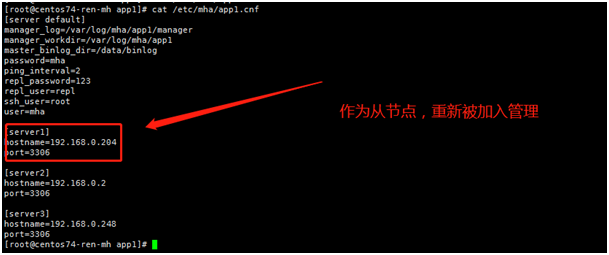

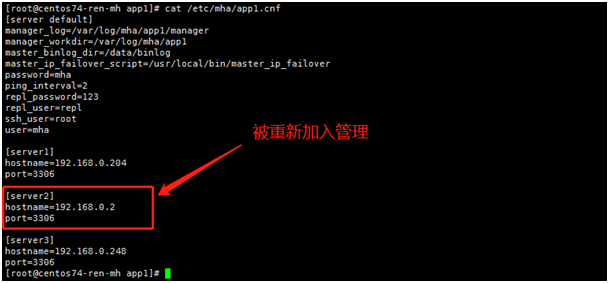

在mha管理节点中的配置文件中从新加入被删的master节点

一旦发生切换管理进程将会退出,无法进行再次测试,需将故障数据库加入到MHA环境中来

重新启动MHA:

检查互信

masterha_check_ssh --conf=/etc/mha/app1.cnf

检查主从

masterha_check_repl --conf=/etc/mha/app1.cnf

开启mha

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

检查状态

masterha_check_status --conf=/etc/mha/app1.cnf

3、实现应用透明(VIP)

vip漂移、应用透明

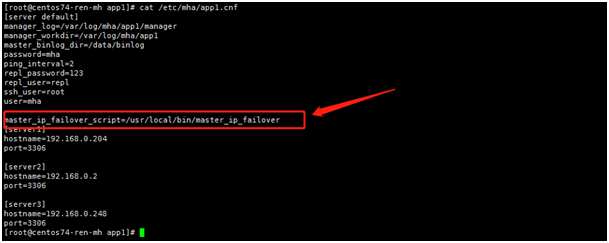

配置VIP:master_ip_failover_script=/usr/local/bin/master_ip_failover。在用虚拟IP的时候,需要在开启MHA程序之前要把虚拟IP先设置到主上去

1)在mha节点上传master_ip_failover脚本

cat > /usr/local/bin/master_ip_failover << 'EOF'

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '192.168.0.3/24';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

sub stop_vip() {

return 0 unless ($ssh_user);

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

EOF

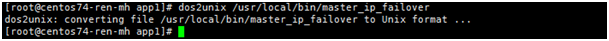

修改master_ip_failover权限,且修改编码格式,以免是Windows编码

2)修改mha的配置文件

在/etc/mha/app1.cnf加入master_ip_failover_script=/usr/local/bin/master_ip_failover

3)重启mha

masterha_stop --conf=/etc/mha/app1.cnf

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

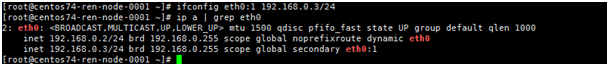

4)手动在目前的主库上绑定VIP(slave1:192.168.0.2)

ifconfig eth0:0 192.168.0.3 测试手动这样添加就行,(如果后期要真实用到数据传输,就要弄个真正的虚拟机,看下虚拟IP能ping通不)

5)主库切换测试

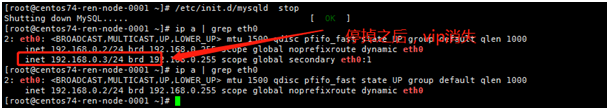

停止主库(slave1:192.168.0.2)

查看节点切换状态和vip漂移状态(节点切换时根据配置文件的server先后顺序)

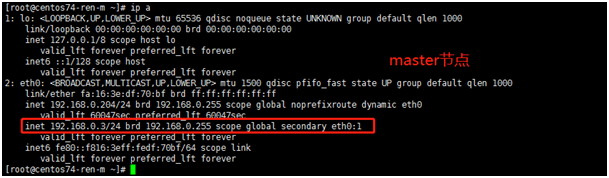

master (192.168.0.204)重新成为主库,从库状态被取消

查看slave2:

恢复slave1(192.168.0.2)主从复制关系,成为新的一主(192.168.0.204)两从(192.168.0.2和192.168.0.248)

CHANGE MASTER TO MASTER_HOST='192.168.0.204', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';

6)将slave1从新加入mha管理

由于主切换,导致/etc/mha/app1.cnf会自动将原先的主删掉

mha进行ssh验证

masterha_check_ssh --conf=/etc/mha/app1.cnf

mha进行主从复制验证

masterha_check_repl --conf=/etc/mha/app1.cnf

开启mha,并检查状态

当经历了一次主库宕机之后,manager程序会被自动干掉

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

masterha_check_status --conf=/etc/mha/app1.cnf

4、实现外部数据补偿(binlog server)

5.6以上支持gtid开启,我们使用slave2(192.168.0.248)

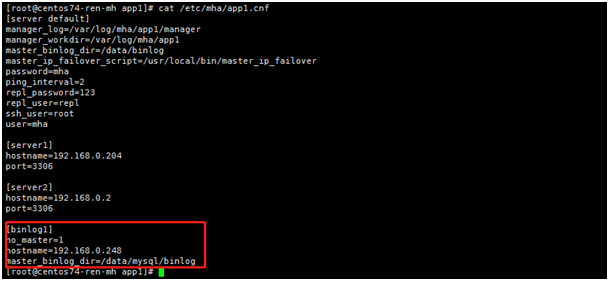

1)在mha配置文件中加入binlogserver配置

[binlog1]

no_master=1 让某一个节点永远不成为新的主节点

hostname=192.168.0.248

master_binlog_dir=/data/mysql/binlog 目录不能和主库binlog一样

2)创建binlog目录,并授权

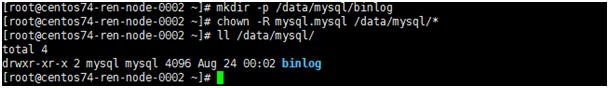

在slave2(192.168.0.248)节点上

mkdir -p /data/mysql/binlog

chown -R mysql.mysql /data/mysql/*

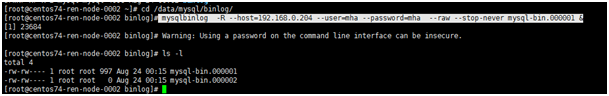

3)拉取主库

在slave2上操作,目前主库是192.168.0.204

看主库是从哪开始的,如果是mysql-bin.000005开始,进主库mysqbin目录看下,那就要写mysql-bin.000005

cd /data/mysql/binlog/

mysqlbinlog -R --host=192.168.0.204 --user=mha --password=mha --raw --stop-never mysql-bin.000001 &

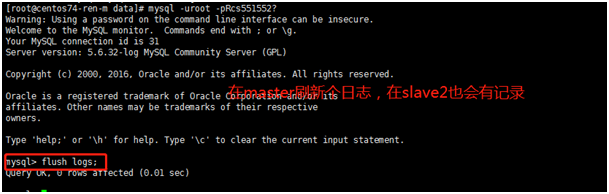

4)在主库模拟个新的

在slave2查看

5)重启mha

masterha_stop --conf=/etc/mha/app1.cnf

nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

masterha_check_status --conf=/etc/mha/app1.cnf

5、基于MHA环境实现Atlas读写分离

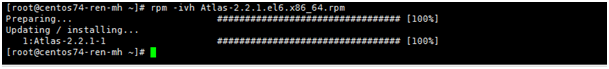

1)安装atlas

wget https://github.com/Qihoo360/Atlas/releases/download/2.2.1/Atlas-2.2.1.el6.x86_64.rpm

rpm -ivh Atlas-2.2.1.el6.x86_64.rpm

2) 配置Atlas配置文件

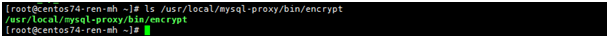

atlas配置文件中的密码需要加密,可以使用,软件自带的加密工具进行加密

/usr/local/mysql-proxy/bin/encrypt 密码 ---->制作加密密码

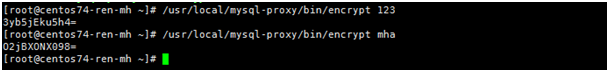

生成密文密码:

/usr/local/mysql-proxy/bin/encrypt 123

/usr/local/mysql-proxy/bin/encrypt mha

编辑配置文件

vim /usr/local/mysql-proxy/conf/test.cnf [mysql-proxy] #管理接口的用户名 admin-username = user #管理接口的密码 admin-password = pwd proxy-backend-addresses = 192.168.0.3:3306 (0.3在主库上,是虚拟机IP,若是弹性云服务器,需要购买个真实的虚拟IP) proxy-read-only-backend-addresses = 192.168.0.2:3306,192.168.0.248:3306 pwds = repl:3yb5jEku5h4=,mha:O2jBXONX098= daemon = true keepalive = true event-threads = 8 log-level = message log-path = /usr/local/mysql-proxy/log sql-log=ON #Atlas监听的工作接口IP和端口,连接这个端口可以正常执行数据库的增删改查sql语句 proxy-address = 0.0.0.0:3307 #Atlas监听的管理接口IP和端口,用于连接增删节点 admin-address = 0.0.0.0:2345 charset=utf8

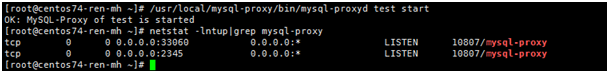

3)启动atlas

/usr/local/mysql-proxy/bin/mysql-proxyd test start #启动

/usr/local/mysql-proxy/bin/mysql-proxyd test stop #停止

/usr/local/mysql-proxy/bin/mysql-proxyd test restart #重启

test是配置文件的名称 应该是3307,,3060是第一次配置,后期改成3307

Atlas:读写分离、从库负载均衡、IP过滤、自动分表、DBA可平滑上下线DB、自动摘除宕机的DB

4)测试读操作

在slave1(192.168.0.2)和slave2(192.168.0.248)之间轮询

通过代理正常操作,疑惑点为啥用主库(mysql -umha -pmha –h192.168.0.3 -P3307就不行)

mysql -umha -pmha -h127.0.0.1 -P3307

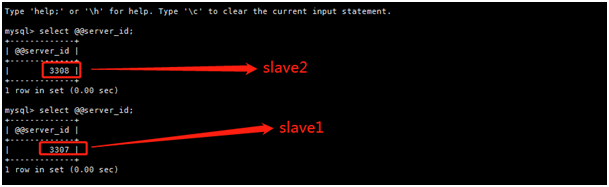

mysql> select @@server_id;或者mysql> show variables like '%server_id%';

每次server_id不一样,分离是2台从库的server_id,说明分离是OK的

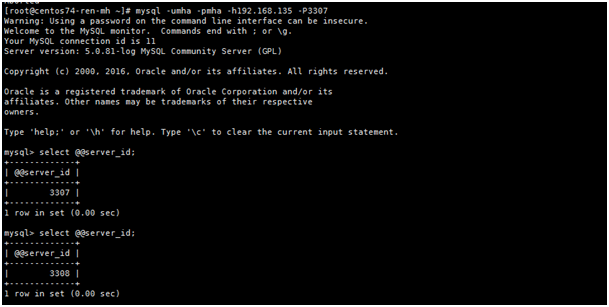

由于mha和Atlas是单独部署的节点(192.168.0.135),应该用

[root@centos74-ren-mh ~]# mysql -umha -pmha -h192.168.0.135(127.0.0.1) -P3307

(注释:如果mha和atlas部署在主slave2节点上,连接就应该用mysql -umha -pmha -h192.168.0.248 -P3307,单独部署的节点会根据命令自动实现读写分离,写的就会自动跑到主库上,读就会在从库轮询)

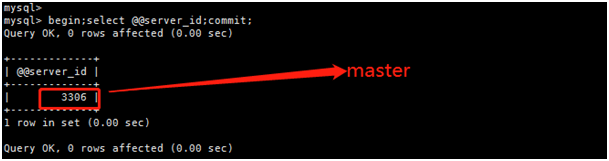

5)测试写操作

mysql> begin;select @@server_id;commit; ,开启事务,数据库就会认为我们是写入操作

6、在线增加删除节点管理Atlas

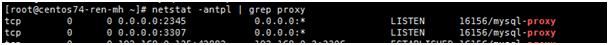

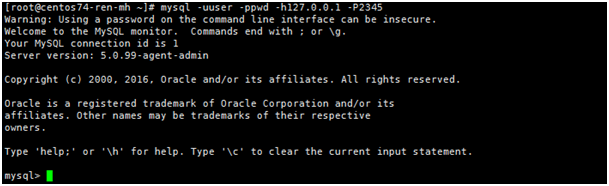

1)连接atlas管理接口

[root@centos74-ren-mh ~]# mysql -uuser -ppwd -h127.0.0.1 -P2345

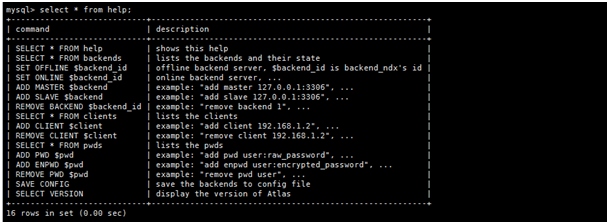

打印帮助

2)查询后端所有节点信息

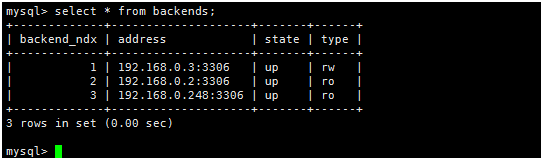

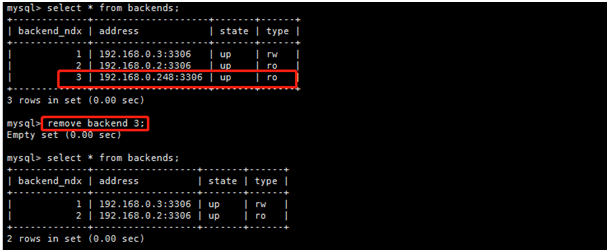

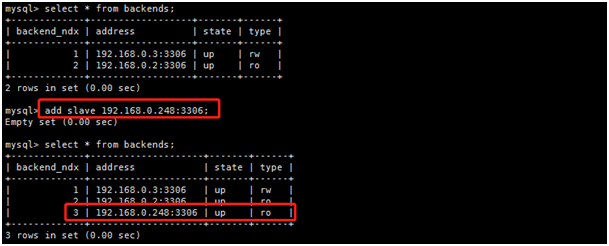

mysql> select * from backends;

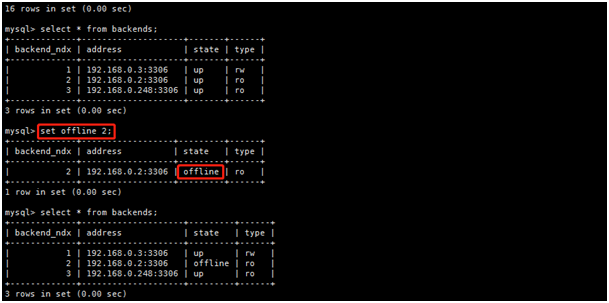

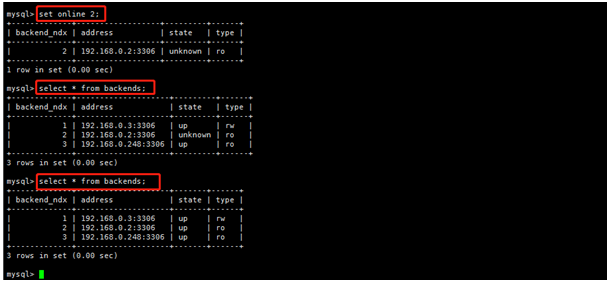

3)上下线节点

set offline backend_ndx---下线节点

set online backend_ndx---上下节点

配置文件就会增删这些节点信息/usr/local/mysql-proxy/conf/test.cnf

4)动态增删节点

动态删除节点

mysql> remove backend 3;

动态增加节点

mysql> add slave 192.168.0.248:3306;

配置文件就会增删这些节点信息/usr/local/mysql-proxy/conf/test.cnf

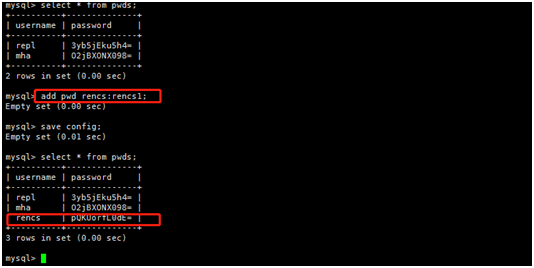

5)添加atlas用户

不需要像之前那样先在主库添加用户,然后利用encrypt加密密码,并把加密写在/usr/local/mysql-proxy/conf/test.cnf

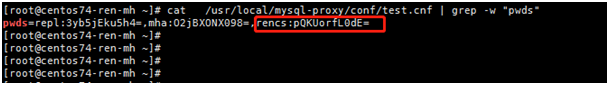

add pwd rencs:rencs1;

cat /usr/local/mysql-proxy/conf/test.cnf | grep -w "pwds" ---发现自动添加的用户,已自动添加到配置文件

浙公网安备 33010602011771号

浙公网安备 33010602011771号