04| Scrapy start_urls and middleware

How the Scrapy engine gets the start URLs from the spider:

1. Call start_requests() and take its return value.
2. v = iter(return value)
3. req1 = v.__next__(), req2 = v.__next__(), req3 = v.__next__(), ...
4. Put all of the requests into the scheduler.
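The four steps can be modelled in plain Python. This is a simplified sketch, not Scrapy's engine code. The names `fake_start_requests` and `pull_into_scheduler` are hypothetical, and a deque stands in for the scheduler. Because start_requests() is a generator, its body does not run until something calls `__next__()` on it.

```python
from collections import deque


def fake_start_requests(start_urls, log):
    """Hypothetical stand-in for Spider.start_requests(): a generator of request dicts."""
    for url in start_urls:
        log.append("built " + url)           # runs only when the engine asks for the next item
        yield {"url": url, "dont_filter": True}


def pull_into_scheduler(start_requests_result):
    """Simplified model of steps 2-4: iter(), repeated __next__(), enqueue each request."""
    scheduler = deque()                      # stand-in for Scrapy's scheduler queue
    v = iter(start_requests_result)          # step 2
    while True:
        try:
            req = v.__next__()               # step 3 (same as next(v))
        except StopIteration:
            break
        scheduler.append(req)                # step 4
    return scheduler


log = []
result = fake_start_requests(["https://dig.chouti.com/", "https://example.com/"], log)  # step 1
print(log)                                   # [] because the generator body has not run yet
scheduler = pull_into_scheduler(result)
print([r["url"] for r in scheduler])
```

The real engine pulls start requests lazily, one at a time whenever it has spare capacity, rather than in a single tight loop. A long or even endless start_requests() therefore does not have to be consumed up front.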

Source code of Spider.start_requests:

def start_requests(self):
    cls = self.__class__
    # Deprecated path: the subclass still overrides make_requests_from_url()
    if method_is_overridden(cls, Spider, 'make_requests_from_url'):
        warnings.warn(
            "Spider.make_requests_from_url method is deprecated; it "
            "won't be called in future Scrapy releases. Please "
            "override Spider.start_requests method instead (see %s.%s)." % (
                cls.__module__, cls.__name__
            ),
        )
        for url in self.start_urls:
            yield self.make_requests_from_url(url)
    else:
        # Default: one Request per start URL; dont_filter=True bypasses the duplicate filter
        for url in self.start_urls:
            yield Request(url, dont_filter=True)

We can override it with our own implementation:

def start_requests(self):
    url = 'http://dig.chouti.com/'
    # The 'cookiejar' meta key selects a separate cookie session in CookiesMiddleware
    yield Request(url=url, callback=self.login, meta={'cookiejar': True})

- Customization: start_requests() can, for example, read the start URLs from Redis.
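Here is a minimal sketch of the Redis idea. It assumes the start URLs were pushed onto a Redis list; the key name `chouti:start_urls` is hypothetical. With redis-py, `redis.Redis(...).lpop(key)` returns the next item as bytes, or None once the list is empty.

To keep the sketch runnable without a Redis server, it accepts any client object that has an `lpop` method, and the in-memory `FakeRedis` (also hypothetical) plays that role. In a real spider, start_requests() would yield `Request(url, callback=self.parse)` for each URL. The third-party scrapy-redis package packages this idea as `RedisSpider`.

```python
from collections import deque


class FakeRedis:
    """In-memory stand-in for redis.Redis that implements only lpop() (hypothetical helper)."""

    def __init__(self, data):
        self._lists = {key: deque(values) for key, values in data.items()}

    def lpop(self, key):
        items = self._lists.get(key)
        return items.popleft() if items else None   # None when missing or empty, like Redis


def start_urls_from_redis(client, key="chouti:start_urls"):
    """Yield start URLs popped from a Redis list until the list is empty."""
    while True:
        raw = client.lpop(key)
        if raw is None:                              # list exhausted
            return
        # redis-py returns bytes unless the client was created with decode_responses=True
        yield raw.decode("utf-8") if isinstance(raw, bytes) else raw


client = FakeRedis({"chouti:start_urls": [b"https://dig.chouti.com/", b"https://example.com/"]})
print(list(start_urls_from_redis(client)))
```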

Depth and priority

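- Depth
  - Starts at 0.
  - Each time a request is yielded, its depth is the depth of the request it came from + 1.
  - Setting: DEPTH_LIMIT caps the depth.
- Priority
  - A request's download priority is lowered by its depth: priority -= depth * DEPTH_PRIORITY.
  - Setting: DEPTH_PRIORITY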
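A worked example of both rules follows. It is a plain-Python sketch that mirrors the arithmetic in DepthMiddleware's `_filter`; the function name `next_depth_and_priority` is hypothetical.

With DEPTH_PRIORITY = 1, each extra level lowers the priority by one, so shallower pages tend to be downloaded first. A negative value favours deeper pages instead. DEPTH_LIMIT = 0, the default, means no limit.

```python
def next_depth_and_priority(parent_depth, priority, depth_priority, depth_limit):
    """Return (depth, priority, kept) for a request yielded from a response at parent_depth.

    Mirrors DepthMiddleware: depth = parent depth + 1,
    priority -= depth * DEPTH_PRIORITY, and the request is dropped
    when DEPTH_LIMIT is non-zero and depth exceeds it.
    """
    depth = parent_depth + 1
    if depth_priority:
        priority -= depth * depth_priority
    kept = not (depth_limit and depth > depth_limit)
    return depth, priority, kept


# Settings assumed for this example: DEPTH_PRIORITY = 1, DEPTH_LIMIT = 2
for parent in range(3):
    print(parent, next_depth_and_priority(parent, 0, 1, 2))
```

A request yielded from a depth-2 response gets depth 3 and priority -3. It is then dropped because 3 > DEPTH_LIMIT.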

Source of DepthMiddleware (from scrapy.spidermiddlewares.depth import DepthMiddleware):

import logging

from scrapy.http import Request

logger = logging.getLogger(__name__)


class DepthMiddleware(object):

    def __init__(self, maxdepth, stats=None, verbose_stats=False, prio=1):
        self.maxdepth = maxdepth
        self.stats = stats
        self.verbose_stats = verbose_stats
        self.prio = prio

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        maxdepth = settings.getint('DEPTH_LIMIT')
        verbose = settings.getbool('DEPTH_STATS_VERBOSE')
        prio = settings.getint('DEPTH_PRIORITY')
        return cls(maxdepth, crawler.stats, verbose, prio)

    # Called every time the spider's output (requests and items) passes through this middleware
    def process_spider_output(self, response, result, spider):
        def _filter(request):
            if isinstance(request, Request):
                depth = response.meta['depth'] + 1
                request.meta['depth'] = depth
                if self.prio:
                    request.priority -= depth * self.prio
                if self.maxdepth and depth > self.maxdepth:
                    logger.debug(
                        "Ignoring link (depth > %(maxdepth)d): %(requrl)s ",
                        {'maxdepth': self.maxdepth, 'requrl': request.url},
                        extra={'spider': spider}
                    )
                    return False
                elif self.stats:
                    if self.verbose_stats:
                        self.stats.inc_value('request_depth_count/%s' % depth,
                                             spider=spider)
                    self.stats.max_value('request_depth_max', depth,
                                         spider=spider)
            # Items (non-Request objects) always pass through
            return True

        # base case (depth=0): a response that carries no depth yet is set to 0
        if self.stats and 'depth' not in response.meta:
            response.meta['depth'] = 0
            if self.verbose_stats:
                self.stats.inc_value('request_depth_count/0', spider=spider)

        return (r for r in result or () if _filter(r))

In scrapy.http.Response we can see that response.meta is simply request.meta:

class Response(object_ref):
    # Excerpt: other methods omitted

    def __init__(self, url, status=200, headers=None, body=b'', flags=None, request=None):
        self.headers = Headers(headers or {})
        self.status = int(status)
        self._set_body(body)
        self._set_url(url)
        self.request = request
        self.flags = [] if flags is None else list(flags)

    @property
    def meta(self):
        try:
            return self.request.meta
        except AttributeError:
            raise AttributeError(
                "Response.meta not available, this response "
                "is not tied to any request"
            )
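Response.meta just returns self.request.meta. So the depth that DepthMiddleware writes into request.meta is exactly what the resulting response reports, and the middleware can read response.meta['depth'] on the next hop.

A tiny model with hypothetical `MiniRequest`/`MiniResponse` classes (not Scrapy's own) shows that both names point at the same dict:

```python
class MiniRequest:
    """Hypothetical stand-in for scrapy.Request: only url and meta."""

    def __init__(self, url, meta=None):
        self.url = url
        self.meta = dict(meta or {})


class MiniResponse:
    """Hypothetical stand-in for scrapy.http.Response with the same meta property."""

    def __init__(self, url, request=None):
        self.url = url
        self.request = request

    @property
    def meta(self):
        try:
            return self.request.meta     # the very same dict object as request.meta
        except AttributeError:           # request is None
            raise AttributeError("Response.meta not available, this response "
                                 "is not tied to any request")


req = MiniRequest("https://dig.chouti.com/", meta={"depth": 1})
resp = MiniResponse(req.url, request=req)
resp.meta["depth"] += 1                  # mutating response.meta mutates request.meta
print(req.meta["depth"])                 # 2
```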

Built-in proxy support

1. Via environment variables: set the proxy in os.environ in advance, before the crawler starts.

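import os

import scrapy
from scrapy.http import Request

# HttpProxyMiddleware reads these variables once, when it is created, so they are
# set at import time here rather than inside start_requests()
os.environ['HTTPS_PROXY'] = "http://root:woshiniba@192.168.11.11:9999/"
os.environ['HTTP_PROXY'] = '19.11.2.32'  # no trailing comma: values must be str, not a tuple


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['https://dig.chouti.com/']
    cookie_dict = {}

    def start_requests(self):
        for url in self.start_urls:
            yield Request(url=url, callback=self.parse)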
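Scrapy's HttpProxyMiddleware picks up environment proxies through urllib's `getproxies()`. That function maps variables such as `HTTPS_PROXY`/`http_proxy` to URL schemes.

The stdlib-only sketch below shows that mapping with `urllib.request.getproxies_environment()`. It uses `unittest.mock.patch.dict` so the real environment is left untouched. The proxy values are the example ones from above.

```python
import os
from unittest import mock
from urllib.request import getproxies_environment

fake_env = {
    "HTTPS_PROXY": "http://root:woshiniba@192.168.11.11:9999/",
    "HTTP_PROXY": "19.11.2.32",
}

# clear=True starts from an empty environment, so stray *_proxy variables don't interfere
with mock.patch.dict(os.environ, fake_env, clear=True):
    proxies = getproxies_environment()   # variable name minus "_proxy", lowercased -> scheme

print(proxies)
```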

2. Via meta: set the proxy on each request with meta['proxy'].

class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['chouti.com']
    start_urls = ['https://dig.chouti.com/']
    cookie_dict = {}

    def start_requests(self):
        for url in self.start_urls:
            # The value is the proxy URL itself, with no extra quotes inside the string
            yield Request(url=url, callback=self.parse,
                          meta={'proxy': 'http://root:woshiniba@192.168.11.11:9999/'})

Custom proxy middleware

# by luffycity.com
import base64
import random

from six.moves.urllib.parse import unquote, urlunparse

try:
    from urllib2 import _parse_proxy          # Python 2
except ImportError:
    from urllib.request import _parse_proxy   # Python 3

from scrapy.utils.python import to_bytes


class XdbProxyMiddleware(object):
    """Pick a random proxy (with credentials) for every request."""

    def _basic_auth_header(self, username, password):
        user_pass = to_bytes(
            '%s:%s' % (unquote(username), unquote(password)),
            encoding='latin-1')
        return base64.b64encode(user_pass).strip()

    def process_request(self, request, spider):
        PROXIES = [
            "http://root:woshiniba@192.168.11.11:9999/",
            "http://root:woshiniba@192.168.11.12:9999/",
            "http://root:woshiniba@192.168.11.13:9999/",
            "http://root:woshiniba@192.168.11.14:9999/",
            "http://root:woshiniba@192.168.11.15:9999/",
            "http://root:woshiniba@192.168.11.16:9999/",
        ]
        url = random.choice(PROXIES)

        orig_type = ""
        proxy_type, user, password, hostport = _parse_proxy(url)
        # Proxy URL without the user:password part
        proxy_url = urlunparse((proxy_type or orig_type, hostport, '', '', '', ''))

        if user:
            creds = self._basic_auth_header(user, password)
        else:
            creds = None
        request.meta['proxy'] = proxy_url
        if creds:
            request.headers['Proxy-Authorization'] = b'Basic ' + creds


class DdbProxyMiddleware(object):
    """Pick a random proxy; send Proxy-Authorization only when user_pass is set."""

    def process_request(self, request, spider):
        PROXIES = [
            {'ip_port': '111.11.228.75:80', 'user_pass': ''},
            {'ip_port': '120.198.243.22:80', 'user_pass': ''},
            {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
            {'ip_port': '101.71.27.120:80', 'user_pass': ''},
            {'ip_port': '122.96.59.104:80', 'user_pass': ''},
            {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
        ]
        proxy = random.choice(PROXIES)
        # meta['proxy'] is a str such as "http://host:port"
        request.meta['proxy'] = "http://%s" % proxy['ip_port']
        if proxy['user_pass']:  # '' means no authentication
            encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))
            request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass
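The core of XdbProxyMiddleware is turning `scheme://user:password@host:port/` into two parts: a bare proxy URL, and a `Proxy-Authorization: Basic ...` header value.

Here is a stdlib-only sketch of that step. It uses the public `urllib.parse.urlsplit` in place of the private `_parse_proxy` helper; that swap is made for this illustration only. `split_proxy` is a hypothetical name, and it assumes the proxy string includes a scheme such as `http://`.

```python
import base64
from urllib.parse import unquote, urlsplit


def split_proxy(proxy):
    """Split 'scheme://user:pass@host:port/' into (proxy_url, auth_header_value or None)."""
    parts = urlsplit(proxy)
    hostport = parts.netloc.rpartition("@")[2]          # drop 'user:pass@' if present
    proxy_url = "%s://%s" % (parts.scheme or "http", hostport)
    if parts.username is None:
        return proxy_url, None
    user_pass = "%s:%s" % (unquote(parts.username), unquote(parts.password or ""))
    creds = base64.b64encode(user_pass.encode("latin-1"))
    return proxy_url, b"Basic " + creds


proxy_url, auth = split_proxy("http://root:woshiniba@192.168.11.11:9999/")
print(proxy_url)   # http://192.168.11.11:9999
print(auth)
```

Inside a downloader middleware you would then set `request.meta['proxy'] = proxy_url`. When `auth` is not None, you would also set `request.headers['Proxy-Authorization'] = auth`, which is the same pair of assignments XdbProxyMiddleware makes.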

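Enable the middleware in settings.py:

DOWNLOADER_MIDDLEWARES = {
    # 'xdb.middlewares.XdbDownloaderMiddleware': 543,
    'xdb.proxy.XdbProxyMiddleware': 751,
    # Enable only one proxy middleware; otherwise the one that runs later overwrites meta['proxy']
    # 'xdb.proxy.DdbProxyMiddleware': 751,
}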
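Scrapy merges DOWNLOADER_MIDDLEWARES with the built-in DOWNLOADER_MIDDLEWARES_BASE, where HttpProxyMiddleware is registered at 750. It then calls each middleware's process_request() in increasing numeric order, and a value of None disables a middleware.

The sketch below reproduces only that merge-and-sort rule, on a small hand-picked subset of the base entries rather than the full dict. It shows why 751 places the custom middleware right after the built-in proxy middleware. `middleware_order` is a hypothetical helper.

```python
from operator import itemgetter

# Small subset of Scrapy's DOWNLOADER_MIDDLEWARES_BASE (values as in Scrapy's defaults)
BASE = {
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": 500,
    "scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750,
}

CUSTOM = {
    "xdb.proxy.XdbProxyMiddleware": 751,
    "xdb.proxy.DdbProxyMiddleware": None,   # None disables a middleware
}


def middleware_order(base, custom):
    """Merge, drop disabled (None) entries, sort by number: the process_request() call order."""
    merged = dict(base)
    merged.update(custom)
    enabled = {path: order for path, order in merged.items() if order is not None}
    return [path for path, _ in sorted(enabled.items(), key=itemgetter(1))]


for path in middleware_order(BASE, CUSTOM):
    print(path)
```

Two middlewares given the same number are not a reliable way to control which one runs first. Distinct values, or enabling just one, avoids the ambiguity.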

Scrapy parsers (selectors)

Using Scrapy's XPath support in a standalone program

from scrapy.http import HtmlResponse
from scrapy.selector import Selector

html = """<!DOCTYPE html>
<html>
<head lang="en">
    <meta charset="UTF-8">
    <title></title>
</head>
<body>
    <ul>
        <li class="item-"><a id='i1' href="link.html">first item</a></li>
        <li class="item-0"><a id='i2' href="llink.html">first item</a></li>
        <li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>
    </ul>
    <div><a href="llink2.html">second item</a></div>
</body>
</html>
"""

response = HtmlResponse(url='http://example.com', body=html, encoding='utf-8')
# Equivalent: hxs = Selector(response); hxs.xpath(...)
# Any XPath expression works here, e.g. the href of every link:
hrefs = response.xpath('//a/@href').extract()

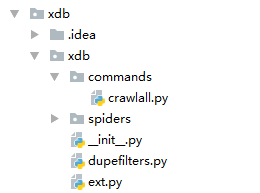

Custom commands

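- Create a directory of any name at the same level as spiders, e.g. commands (with an __init__.py so it can be imported as a package).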

- Inside it, create crawlall.py (the file name becomes the name of the custom command).

from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):
    requires_project = True

    def syntax(self):
        return '[options]'

    def short_desc(self):
        return 'Runs all of the spiders'

    def run(self, args, opts):
        # spider_loader replaces the deprecated crawler_process.spiders attribute
        spider_list = self.crawler_process.spider_loader.list()
        for name in spider_list:
            self.crawler_process.crawl(name, **opts.__dict__)
        self.crawler_process.start()

- In settings.py, add the setting COMMANDS_MODULE = '<project name>.<directory name>'

# Directory holding the custom commands
COMMANDS_MODULE = "xdb.commands"

- Run the command from the project directory: scrapy crawlall

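A command line can also be launched from a Python script (handy inside an IDE); the list below is equivalent to running scrapy github --nolog:

from scrapy.cmdline import execute

if __name__ == '__main__':
    execute(["scrapy", "github", "--nolog"])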

浙公网安备 33010602011771号
