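03 | Scrapy pipelines and custom deduplication

Formatting and processing data with pipelines

If you want to do more with the scraped data, you can use Scrapy's items to give the data a fixed structure and then hand everything to pipelines for processing. A pipeline can, for example, open a database connection when the spider starts and close it when the spider finishes.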

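Usage:

a. Write the pipeline class first

    class XdbPipeline(object):
        def process_item(self, item, spider):
            return item

b. Write the Item class

    class XdbItem(scrapy.Item):
        href = scrapy.Field()
        title = scrapy.Field()

c. Register the pipeline in the settings

    ITEM_PIPELINES = {
        'xdb.pipelines.XdbPipeline': 300,
    }

d. In the spider, yield Item objects. process_item is called once for every item yielded.

    yield XdbItem(title=text, href=href)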
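To make step d concrete, here is a minimal sketch that imitates the flow without importing Scrapy. The `run_pipelines` helper and the two toy pipeline classes are hypothetical stand-ins, not Scrapy's own code. The sketch only assumes the documented behavior: pipelines run in ascending order of their `ITEM_PIPELINES` value, and each `process_item` receives whatever the previous one returned.

```python
# Stand-alone imitation of how yielded items flow through pipelines.
# Nothing here imports Scrapy; run_pipelines is a hypothetical helper.

class StripTitlePipeline:
    def process_item(self, item, spider):
        item["title"] = item["title"].strip()
        return item  # hand the item on to the next pipeline


class RecordPipeline:
    def __init__(self):
        self.titles = []

    def process_item(self, item, spider):
        self.titles.append(item["title"])
        return item


def run_pipelines(items, pipelines, spider=None):
    """Call process_item once per item, lowest priority number first."""
    ordered = [p for p, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]
    results = []
    for item in items:
        for pipeline in ordered:
            item = pipeline.process_item(item, spider)
        results.append(item)
    return results


recorder = RecordPipeline()
# 300 runs before 301, so the recorder sees titles that are already stripped.
pipelines = {recorder: 301, StripTitlePipeline(): 300}
items = [{"title": "  first  ", "href": "/a"}, {"title": "second ", "href": "/b"}]
processed = run_pipelines(items, pipelines)
```

Because the recorder has the higher number, it only ever sees the cleaned titles, which is the reason to choose priorities deliberately when one pipeline depends on another.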

    # items.py
    import scrapy


    class XdbItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        href = scrapy.Field()
        title = scrapy.Field()

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http.response.html import HtmlResponse
    # import sys,os,io
    # sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding='gb18030')
    from xdb.items import XdbItem


    class ChoutiSpider(scrapy.Spider):
        name = 'chouti'
        allowed_domains = ['chouti.com']
        start_urls = ['http://chouti.com/']

        def parse(self, response):
            # Find the div with id="content-list" anywhere in the page,
            # then select its child divs with class="item"
            item_list = response.xpath('//div[@id="content-list"]/div[@class="item"]')
            for item in item_list:
                text = item.xpath('.//a/text()').extract_first()
                href = item.xpath('.//a/@href').extract_first()
                print(text)
                yield XdbItem(title=text, href=href)

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    """
    What the Scrapy source does:
        1. Check whether the XdbPipeline class defines from_crawler
            yes: obj = XdbPipeline.from_crawler(....)
            no:  obj = XdbPipeline()
        2. obj.open_spider()
        3. obj.process_item() / obj.process_item() / ... (once per item)
        4. obj.close_spider()
    """
    from scrapy.exceptions import DropItem


    class FilePipeline(object):

        def __init__(self, path):
            self.f = None
            self.path = path

        @classmethod
        def from_crawler(cls, crawler):
            """
            Called at initialization to create the pipeline object
            :param crawler:
            :return:
            """
            print('File.from_crawler')
            path = crawler.settings.get('HREF_FILE_PATH')
            return cls(path)

        def open_spider(self, spider):
            """
            Called when the spider starts running
            :param spider:
            :return:
            """
            # if spider.name == 'chouti':
            print('File.open_spider')
            self.f = open(self.path, 'a+')

        def process_item(self, item, spider):
            print('File', item['href'])
            print(123)
            self.f.write(item['href'] + '\n')
            return item
            # raise DropItem()  # raise this instead to keep the item from later pipelines

        def close_spider(self, spider):
            """
            Called when the spider closes
            :param spider:
            :return:
            """
            print('File.close_spider')
            self.f.close()


    class DbPipeline(object):

        def __init__(self, path):
            self.f = None
            self.path = path

        @classmethod
        def from_crawler(cls, crawler):
            """
            Called at initialization to create the pipeline object
            :param crawler:
            :return:
            """
            print('DB.from_crawler')
            path = crawler.settings.get('HREF_DB_PATH')
            return cls(path)

        def open_spider(self, spider):
            """
            Called when the spider starts running
            :param spider:
            :return:
            """
            print('Db.open_spider')
            self.f = open(self.path, 'a+')

        def process_item(self, item, spider):
            # f = open('xx.log','a+')
            # f.write(item['href']+'\n')
            # f.close()
            print('Db', item)
            # self.f.write(item['href']+'\n')
            return item

        def close_spider(self, spider):
            """
            Called when the spider closes
            :param spider:
            :return:
            """
            print('Db.close_spider')
            self.f.close()

    # settings.py
    ITEM_PIPELINES = {
        'xdb.pipelines.FilePipeline': 300,
        'xdb.pipelines.DbPipeline': 301,
    }

    # Custom settings read by the pipelines through crawler.settings
    HREF_FILE_PATH = "news.log"
    HREF_DB_PATH = "db.log"
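The call order listed in the pipelines docstring (from_crawler, open_spider, process_item for each item, close_spider) can be imitated in a few lines. This is a simulation under stated assumptions, not Scrapy source: `LoggingPipeline`, `build_pipeline` and the `SimpleNamespace` objects standing in for the crawler and spider are all hypothetical.

```python
from types import SimpleNamespace


class LoggingPipeline:
    """Toy pipeline that records which hooks were called, in order."""

    def __init__(self, path):
        self.path = path
        self.calls = []

    @classmethod
    def from_crawler(cls, crawler):
        # Read the setting the same way the pipelines above do.
        return cls(crawler.settings.get("HREF_FILE_PATH"))

    def open_spider(self, spider):
        self.calls.append("open_spider")

    def process_item(self, item, spider):
        self.calls.append("process_item")
        return item

    def close_spider(self, spider):
        self.calls.append("close_spider")


def build_pipeline(cls, crawler):
    """Prefer from_crawler when the class defines it, else call cls()."""
    if hasattr(cls, "from_crawler"):
        return cls.from_crawler(crawler)
    return cls()


# Hypothetical stand-ins for the crawler and spider objects.
crawler = SimpleNamespace(settings={"HREF_FILE_PATH": "news.log"})
spider = SimpleNamespace(name="chouti")

pipeline = build_pipeline(LoggingPipeline, crawler)
pipeline.open_spider(spider)
for item in [{"href": "/a"}, {"href": "/b"}]:
    pipeline.process_item(item, spider)
pipeline.close_spider(spider)
```

The point of `from_crawler` is that the pipeline never hardcodes its path: the value travels from settings.py to the constructor, so changing the output file is a settings change only.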

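Note: pipelines are shared by all spiders in the project. To customize behavior for one particular spider, inspect the spider argument yourself (for example spider.name) inside the pipeline.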
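One way to act on this note is to branch on `spider.name` inside `process_item`. The sketch below is an illustration only: `ChoutiOnlyPipeline` is a hypothetical class, the spiders are faked with `SimpleNamespace`, and an in-memory buffer replaces the log file so the example runs without Scrapy.

```python
import io
from types import SimpleNamespace


class ChoutiOnlyPipeline:
    """Writes hrefs only for the spider named 'chouti'; others pass through."""

    def __init__(self, stream):
        self.stream = stream  # any file-like object

    def process_item(self, item, spider):
        if spider.name != "chouti":
            return item  # not our spider: leave the item untouched
        self.stream.write(item["href"] + "\n")
        return item


buffer = io.StringIO()
pipeline = ChoutiOnlyPipeline(buffer)
pipeline.process_item({"href": "/from-chouti"}, SimpleNamespace(name="chouti"))
pipeline.process_item({"href": "/from-other"}, SimpleNamespace(name="other"))
```

Returning the item in both branches matters: a pipeline that skips an item for its own purposes should still pass it along to the pipelines that follow.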

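Custom deduplication rules

By default Scrapy deduplicates requests with scrapy.dupefilters.RFPDupeFilter (the module was named scrapy.dupefilter in old releases). The related settings are:

    DUPEFILTER_CLASS = 'scrapy.dupefilters.RFPDupeFilter'
    DUPEFILTER_DEBUG = False
    JOBDIR = "directory where the record of visited requests is saved, e.g. /root/"  # final path: /root/requests.seen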
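Conceptually, the default filter reduces each request to a fingerprint and remembers the fingerprints it has already seen. The following is a simplified stand-in for that idea and not Scrapy's actual algorithm: `simple_fingerprint` is a hypothetical helper that hashes the method, the URL with its query parameters sorted, and the body.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def simple_fingerprint(method, url, body=b""):
    """Hash method + normalised URL + body into a hex digest."""
    parts = urlsplit(url)
    # Sort query parameters so ?a=1&b=2 and ?b=2&a=1 compare equal.
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    normalised = urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))
    digest = hashlib.sha1()
    digest.update(method.upper().encode())
    digest.update(normalised.encode())
    digest.update(body)
    return digest.hexdigest()


seen = set()


def request_seen(method, url, body=b""):
    """Return True if an equivalent request was already recorded."""
    fp = simple_fingerprint(method, url, body)
    if fp in seen:
        return True
    seen.add(fp)
    return False


first = request_seen("GET", "https://dig.chouti.com/all/hot/recent/2?a=1&b=2")
repeat = request_seen("GET", "https://dig.chouti.com/all/hot/recent/2?b=2&a=1")
as_post = request_seen("POST", "https://dig.chouti.com/all/hot/recent/2?a=1&b=2")
```

Hashing a normalised form rather than the raw URL string is what lets two spellings of the same request count as one visit, while a different method still counts as a different request.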

Customizing

Custom class

Configuration (override the default deduplication rule)

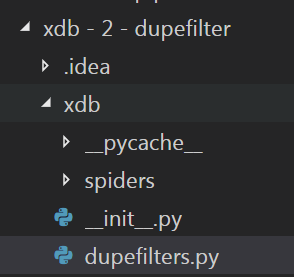

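Project directory structure

Notes:

- Write correct logic in request_seen: return True for a request that has already been seen and False otherwise

- Keep dont_filter=False (the default) on requests; a request sent with dont_filter=True bypasses the filter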
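The two notes interact: the filter is consulted only for requests whose `dont_filter` is false, and such a request is dropped when `request_seen` returns a true value. A minimal sketch of that decision follows, using hypothetical `FakeRequest`, `UrlDupeFilter` and `enqueue` stand-ins rather than Scrapy's scheduler.

```python
from dataclasses import dataclass


@dataclass
class FakeRequest:
    url: str
    dont_filter: bool = False


class UrlDupeFilter:
    """Remembers URLs; request_seen returns True only for repeats."""

    def __init__(self):
        self.visited = set()

    def request_seen(self, request):
        if request.url in self.visited:
            return True
        self.visited.add(request.url)
        return False


def enqueue(request, dupefilter, queue):
    """Queue the request unless it is a filtered duplicate."""
    if not request.dont_filter and dupefilter.request_seen(request):
        return False  # duplicate: dropped
    queue.append(request)
    return True


queue = []
df = UrlDupeFilter()
page = "https://dig.chouti.com/all/hot/recent/2"
results = [
    enqueue(FakeRequest(page), df, queue),                    # new: queued
    enqueue(FakeRequest(page), df, queue),                    # repeat: dropped
    enqueue(FakeRequest(page, dont_filter=True), df, queue),  # filter bypassed
]
```

A `request_seen` that always returns False would queue every request and let the pagination loop revisit the same pages indefinitely, which is why the first note stresses getting its logic right.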

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request
    from scrapy.http.response.html import HtmlResponse
    # import sys,os,io
    # sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding='gb18030')
    from xdb.items import XdbItem


    class ChoutiSpider(scrapy.Spider):
        name = 'chouti'
        allowed_domains = ['chouti.com']
        start_urls = ['https://dig.chouti.com/']

        def parse(self, response):
            print(response.request.url)
            # item_list = response.xpath('//div[@id="content-list"]/div[@class="item"]')
            # for item in item_list:
            #     text = item.xpath('.//a/text()').extract_first()
            #     href = item.xpath('.//a/@href').extract_first()

            # Follow the pagination links; duplicates are left to the dupefilter
            page_list = response.xpath('//div[@id="dig_lcpage"]//a/@href').extract()
            for page in page_list:
                page = "https://dig.chouti.com" + page
                # e.g. https://dig.chouti.com/all/hot/recent/2
                yield Request(url=page, callback=self.parse, dont_filter=False)

    # xdb/dupefilters.py
    from scrapy.dupefilters import BaseDupeFilter
    from scrapy.utils.request import request_fingerprint


    class XdbDupeFilter(BaseDupeFilter):

        def __init__(self):
            self.visited_fd = set()

        @classmethod
        def from_settings(cls, settings):
            return cls()

        def request_seen(self, request):
            # Generate an md5-like unique value (fingerprint) for each request
            fd = request_fingerprint(request=request)
            if fd in self.visited_fd:
                return True
            self.visited_fd.add(fd)
            return False

        def open(self):  # can return deferred
            print('start')

        def close(self, reason):  # can return a deferred
            print('end')

        # def log(self, request, spider):  # log that a request has been filtered
        #     print('log')

    # settings.py: override the default deduplication rule
    # DUPEFILTER_CLASS = 'scrapy.dupefilters.RFPDupeFilter'
    DUPEFILTER_CLASS = 'xdb.dupefilters.XdbDupeFilter'

Limiting depth

Set DEPTH_LIMIT in settings.py to cap how many levels of links the crawl will follow (its "recursion" depth), for example:

DEPTH_LIMIT = 3
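What the setting does can be pictured as follows: start requests have depth 0, every request yielded from a response gets its parent's depth plus one, and requests deeper than the limit are discarded. The sketch below imitates that bookkeeping on a toy link graph. `crawl` and `LINKS` are hypothetical, and neither network access nor Scrapy is involved.

```python
from collections import deque

# Hypothetical site: each page lists the pages it links to.
LINKS = {
    "/": ["/page/1"],
    "/page/1": ["/page/2"],
    "/page/2": ["/page/3"],
    "/page/3": ["/page/4"],
    "/page/4": [],
}


def crawl(start, depth_limit):
    """Breadth-first walk that drops links deeper than depth_limit."""
    visited = {}
    queue = deque([(start, 0)])  # start URLs are at depth 0
    while queue:
        url, depth = queue.popleft()
        if url in visited:
            continue
        visited[url] = depth
        for link in LINKS[url]:
            child_depth = depth + 1
            # A limit of 0 means "no limit", matching Scrapy's default.
            if depth_limit and child_depth > depth_limit:
                continue
            queue.append((link, child_depth))
    return visited


reached = crawl("/", depth_limit=3)
unlimited = crawl("/", depth_limit=0)
```

With a limit of 3 the walk stops at `/page/3`; `/page/4` would be at depth 4 and is never requested.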

Handling cookies

Getting cookies

    from scrapy.http.cookies import CookieJar

    cookie_jar = CookieJar()
    cookie_jar.extract_cookies(response, response.request)

    # Walk the jar and copy the cookies into a plain dict
    for k, v in cookie_jar._cookies.items():
        for i, j in v.items():
            for m, n in j.items():
                self.cookie_dict[m] = n.value
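The three nested loops make more sense once the layout of `_cookies` is visible: domain, then path, then cookie name. The sketch below explores that layout with the standard library's `http.cookiejar.CookieJar`, on the assumption that Scrapy's `CookieJar` wraps the stdlib class and exposes the same structure. `make_cookie` and the cookie names and values are hypothetical.

```python
from http.cookiejar import Cookie, CookieJar


def make_cookie(name, value, domain, path="/"):
    """Build a stdlib Cookie with only the fields this demo needs."""
    return Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=True, domain_initial_dot=False,
        path=path, path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


jar = CookieJar()
jar.set_cookie(make_cookie("token", "abc123", "dig.chouti.com"))
jar.set_cookie(make_cookie("session", "xyz789", "dig.chouti.com"))

# Nested walk, as in the snippet above: domain -> path -> name -> Cookie.
nested = {}
for domain, paths in jar._cookies.items():
    for path, names in paths.items():
        for name, cookie in names.items():
            nested[name] = cookie.value

# Same result through the jar's public iterator, no private attribute needed.
flat = {cookie.name: cookie.value for cookie in jar}
```

Iterating the jar directly avoids depending on the private `_cookies` attribute. Note that both approaches keep only one value per cookie name, so cookies with the same name on different domains or paths overwrite each other.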

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http.response.html import HtmlResponse
    # import sys,os,io
    # sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding='gb18030')
    from xdb.items import XdbItem
    from scrapy.http.cookies import CookieJar
    from scrapy.http import Request
    from urllib.parse import urlencode


    class ChoutiSpider(scrapy.Spider):
        name = 'chouti'
        allowed_domains = ['chouti.com']
        start_urls = ['https://dig.chouti.com/']
        cookie_dict = {}

        def parse(self, response):
            """
            response is what chouti returns on the first visit
            :param response:
            :return:
            """
            # Read the cookies from the response headers into a CookieJar object
            cookie_jar = CookieJar()
            cookie_jar.extract_cookies(response, response.request)

            # Walk the jar and copy the cookies into a plain dict
            for k, v in cookie_jar._cookies.items():
                for i, j in v.items():
                    for m, n in j.items():
                        self.cookie_dict[m] = n.value

            # Log in, sending back the cookies received above
            yield Request(
                url='https://dig.chouti.com/login',
                method='POST',
                # the body could also be built with urlencode({...})
                body="phone=8613121758648&password=woshiniba&oneMonth=1",
                cookies=self.cookie_dict,
                headers={
                    'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8'
                },
                callback=self.check_login
            )

        def check_login(self, response):
            print(response.text)

            yield Request(
                url='https://dig.chouti.com/all/hot/recent/1',
                cookies=self.cookie_dict,
                callback=self.index
            )

        def index(self, response):
            # Upvote every news item on the page
            news_list = response.xpath('//div[@id="content-list"]/div[@class="item"]')
            for new in news_list:
                link_id = new.xpath('.//div[@class="part2"]/@share-linkid').extract_first()
                yield Request(
                    url='http://dig.chouti.com/link/vote?linksId=%s' % (link_id,),
                    method='POST',
                    cookies=self.cookie_dict,
                    callback=self.check_result
                )

            # Follow the pagination links
            page_list = response.xpath('//div[@id="dig_lcpage"]//a/@href').extract()
            for page in page_list:
                page = "https://dig.chouti.com" + page
                # e.g. https://dig.chouti.com/all/hot/recent/2
                yield Request(url=page, callback=self.index)

        def check_result(self, response):
            print(response.text)
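The login request writes its form body by hand, while the spider imports `urlencode` without using it. A small sketch of building the same kind of body with `urllib.parse.urlencode` follows. The field names come from the spider; the values are placeholders chosen for illustration, not real credentials.

```python
from urllib.parse import urlencode

# Placeholder values; only the field names are taken from the spider above.
form = {"phone": "8600000000000", "password": "p@ss word", "oneMonth": 1}

# urlencode escapes reserved characters and joins the pairs with '&'.
body = urlencode(form)
```

Here `body` is the string `phone=8600000000000&password=p%40ss+word&oneMonth=1`. Letting `urlencode` do the escaping keeps passwords that contain characters such as `@`, `&` or spaces from corrupting the form data, which a handwritten string does not guard against.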
