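Cloud Computing Overview and KVM Virtualization

I. Cloud Computing Overview

Why training is still needed even when books exist;

xftp;

Troubleshooting: what was done beforehand?

China's computer industry has already moved into the cloud computing era;

Operations salaries are inflated;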

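1. Problems facing traditional data centers

Why people who give talks present so well: a. they want to change jobs; b. they are promoting their company's products; c. they want to raise their profile;

"Computer people";

a. Cloud computing is a usage model; b. cloud computing is accessed over the network; c. pay-as-you-go billing and elastic computing;

Annual or monthly subscription billing: that is a VPS;

IDC colocation, IDC rental, virtual hosting, VPS, OpenVZ, cloud hosts

With enough machines, automatic scaling is unnecessary;

When machine resources are insufficient, however, automated scheduling is needed, and automatic scaling can be used;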

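2. Cloud computing and virtualization

IaaS: the vendor manages everything below the operating system, not including the operating system itself;

PaaS: the vendor also manages the operating system, the runtime environment, and so on;

SaaS: software as a service; the application and the data are all included; e.g. email (anti-spam, anti-spam alliances);

Cloud computing and virtualization cannot be compared directly: cloud computing is a model, virtualization is a technology;

Nobody knows what a given virtual machine is for? If nobody knows what it does, nobody dares to shut it down

Cloud computing is implemented using virtualization technology; cloud computing obtains resources over the network;

Virtualization categories:

1) Full virtualization: KVM is supported by the kernel and requires CPU support;

2) Paravirtualization: zone;

Server virtualization:

Desktop virtualization:

Application virtualization:

Ctrip and JD.com use OpenStack-based desktop virtualization;

ESXi, XenServer, KVM, RHEV, oVirt, OpenStack, VMware vSphere; EMC was acquired by Dell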

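3. KVM virtualization

Features:

1) Embedded in the Linux kernel

2) Code-level resource calls

3) A virtual machine is just a process;

CentOS 7 is recommended; the open-source software used here currently supports it;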

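II. Common KVM Management Tasks

1. Creating a virtual machine

[root@linux-node1 ~]# yum install qemu-kvm qemu-kvm-tools virt-manager libvirt virt-install -y

A machine consists of: CPU, memory, and I/O (disk, network);

kvm (a kernel module that runs in kernel space and needs a user-space program to manage it) handles: CPU and memory;

qemu (virtualization software in its own right, running in user space) handles: disk and network;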

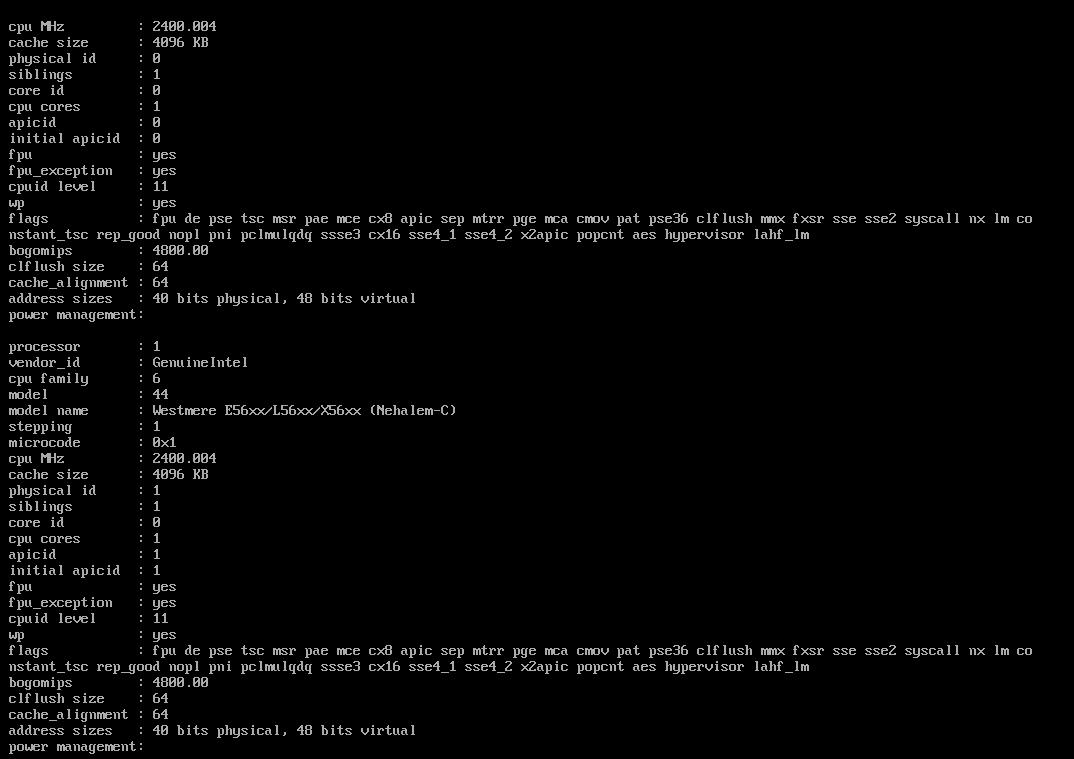

KVM requires hardware virtualization support. To check whether the hardware supports it:

    [root@linux-node3 ~]# grep -E '(vmx|svm)' /proc/cpuinfo
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts nopl xtopology tsc_reliable nonstop_tsc aperfmperf eagerfpu pni pclmulqdq vmx ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx hypervisor lahf_lm ida arat epb pln pts dtherm hwp hwp_noitfy hwp_act_window hwp_epp tpr_shadow vnmi ept vpid xsaveopt xsavec xgetbv1
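As a rough illustration of the check above, the following sketch wraps the grep in a small function. The name virt_flag and its optional file argument are assumptions made for this example, not part of any tool; vmx is the Intel VT-x flag and svm is the AMD-V flag.

```shell
# Report which hardware virtualization flag a cpuinfo-style file advertises.
# virt_flag is a hypothetical helper; the file defaults to /proc/cpuinfo.
virt_flag() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qw vmx "$cpuinfo"; then
        echo "vmx"      # Intel VT-x
    elif grep -qw svm "$cpuinfo"; then
        echo "svm"      # AMD-V
    else
        echo "none"     # no hardware virtualization flag found
        return 1
    fi
}
```

Calling virt_flag with no argument inspects the running host; a result of none usually means the feature is missing or disabled in the BIOS or hypervisor settings.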

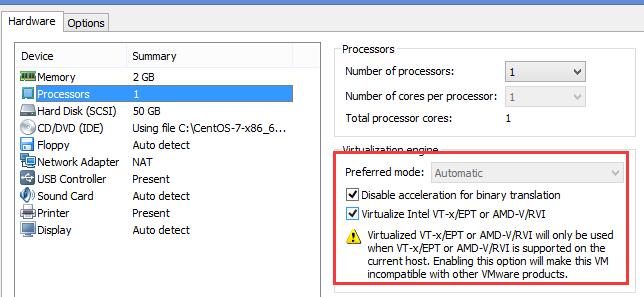

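By default, hardware virtualization is not enabled for the virtual machine that serves as the KVM host; it has to be enabled in the VM settings;

Create the virtual machine's disk:

    [root@linux-node1 ~]# qemu-img create -f raw /opt/CentOS-7.1-x86_64.raw 10G #create a disk image for the virtual machine; -f specifies the file format
    Formatting '/opt/CentOS-7.1-x86_64.raw', fmt=raw size=10737418240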
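The size=10737418240 reported by qemu-img is simply 10 GiB expressed in bytes. A minimal sketch of that arithmetic, using a hypothetical helper named gib_to_bytes:

```shell
# Convert a size in GiB to bytes (1 GiB = 1024 * 1024 * 1024 bytes).
# gib_to_bytes is an illustrative name, not a qemu-img feature.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 10   # prints 10737418240
```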

    [root@linux-node1 opt]# lsmod |grep kvm
    kvm_intel 162153 0
    kvm 525259 1 kvm_intel

Start libvirt:

    [root@linux-node1 ~]# systemctl enable libvirtd.service
    Created symlink from /etc/systemd/system/sockets.target.wants/virtlockd.socket to /usr/lib/systemd/system/virtlockd.socket.
    [root@linux-node1 ~]# systemctl start libvirtd.service
    [root@linux-node1 ~]# systemctl status libvirtd.service
    ● libvirtd.service - Virtualization daemon
    Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; enabled; vendor preset: enabled)
    Active: active (running) since Thu 2017-02-09 15:11:11 CST; 3min 50s ago

Create the ISO image file, then start the installation:

    dd if=/dev/cdrom of=/opt/CentOS-7.1.iso #create the ISO file
    [root@linux-node1 ~]# virt-install --name CentOS-7.1-x86_64 --virt-type kvm --ram 1024 --cdrom=/opt/CentOS-7.1.iso --disk path=/opt/CentOS-7.1-x86_64.raw,bus=sata --network network=default --graphics vnc,listen=0.0.0.0 --noautoconsole
    Starting install...
    Creating domain... | 0 B 00:00:00
    Domain installation still in progress. You can reconnect to the console to complete the installation process.

If you install with virt-manager plus Xmanager, the DISPLAY environment variable must be set:

export DISPLAY=192.168.74.1:0.0

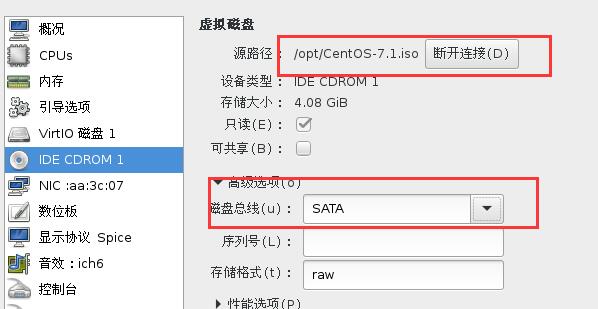

Select the cdrom:

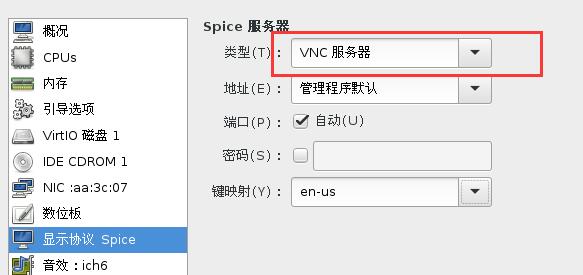

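I installed with virt-manager here. The disk bus defaulted to IDE, which kept producing an "ide-0-0-0 not found" error and made the installation fail, so the disk bus has to be set to SATA during installation. With VNC, the default listening port is 5900;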
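The VNC port follows from the display number: display N listens on TCP port 5900 + N, so the first guest gets 5900, the second 5901, and so on. A small sketch of that mapping (vnc_port is a hypothetical helper name):

```shell
# Map a VNC display number (the N in "-vnc 0.0.0.0:N") to its TCP port.
vnc_port() {
    echo $(( 5900 + $1 ))
}

vnc_port 0   # prints 5900
vnc_port 1   # prints 5901
```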

For the remote connection, choose VNC:

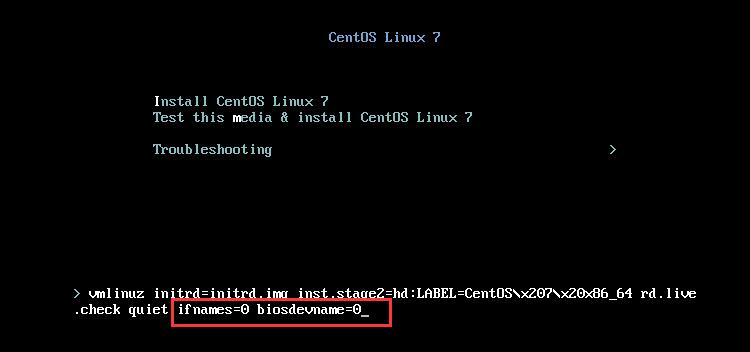

During installation, set the NIC name to eth0:

    [root@linux-node3 ~]# ps aux|grep kvm #a KVM guest is just a process on the host
    root 610 0.0 0.0 0 0 ? S< 06:38 0:00 [kvm-irqfd-clean]
    qemu 4830 1.9 23.3 1730568 438344 ? Sl 08:03 2:28 /usr/libexec/qemu-kvm -name CentOS-7.1-x86_64 -S -machine pc-i440fx-rhel7.0.0,accel=kvm,usb=off -cpu Westmere -m 1024 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid 6201bfeb-ae90-447d-b75a-1c09cbd7f658 -no-user-config -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/domain-8-CentOS-7.1-x86_64/monitor.sock,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc,driftfix=slew -global kvm-pit.lost_tick_policy=discard -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x6.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x6 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x6.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x6.0x2 -device ahci,id=sata0,bus=pci.0,addr=0x5 -device virtio-serial-pci,id=virtio-serial0,bus=pci.0,addr=0x7 -drive file=/opt/CentOS-7.1-x86_64,format=raw,if=none,id=drive-virtio-disk0 -device virtio-blk-pci,scsi=off,bus=pci.0,addr=0x8,drive=drive-virtio-disk0,id=virtio-disk0,bootindex=1 -drive if=none,media=cdrom,id=drive-sata0-0-0,readonly=on -device ide-cd,bus=sata0.0,drive=drive-sata0-0-0,id=sata0-0-0 -netdev tap,fd=26,id=hostnet0,vhost=on,vhostfd=28 -device virtio-net-pci,netdev=hostnet0,id=net0,mac=52:54:00:ae:02:78,bus=pci.0,addr=0x3 -chardev pty,id=charserial0 -device isa-serial,chardev=charserial0,id=serial0 -chardev socket,id=charchannel0,path=/var/lib/libvirt/qemu/channel/target/domain-8-CentOS-7.1-x86_64/org.qemu.guest_agent.0,server,nowait -device virtserialport,bus=virtio-serial0.0,nr=1,chardev=charchannel0,id=channel0,name=org.qemu.guest_agent.0 -chardev spicevmc,id=charchannel1,name=vdagent -device virtserialport,bus=virtio-serial0.0,nr=2,chardev=charchannel1,id=channel1,name=com.redhat.spice.0 -device usb-tablet,id=input0,bus=usb.0,port=1 -vnc 0.0.0.0:0 -k en-us -vga qxl -global qxl-vga.ram_size=67108864 -global qxl-vga.vram_size=67108864 -global qxl-vga.vgamem_mb=16 -device intel-hda,id=sound0,bus=pci.0,addr=0x4 -device hda-duplex,id=sound0-codec0,bus=sound0.0,cad=0 -chardev spicevmc,id=charredir0,name=usbredir -device usb-redir,chardev=charredir0,id=redir0,bus=usb.0,port=2 -chardev spicevmc,id=charredir1,name=usbredir -device usb-redir,chardev=charredir1,id=redir1,bus=usb.0,port=3 -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x9 -msg timestamp=on
    root 4854 0.0 0.0 0 0 ? S 08:03 0:00 [kvm-pit/4830]
    root 7059 0.0 0.0 112668 972 pts/2 R+ 10:09 0:00 grep --color=auto kvm

2. Introduction to libvirt

Supports Xen, KVM, VMware, VirtualBox, and others;

    [root@linux-node3 ~]# virsh list #command-line tool built on the libvirt API
    Id Name State
    ----------------------------------------------------
    1 CentOS-7.1-x86_64 running
    2 CentOS-7.2-x86_64 running
    [root@linux-node3 ~]# virsh list --all
    Id Name State
    ----------------------------------------------------
    1 CentOS-7.1-x86_64 running
    2 CentOS-7.2-x86_64 running

OpenStack uses KVM by default;

libvirt creates a network interface like the following:

    vnet0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    inet6 fe80::fc54:ff:feae:278 prefixlen 64 scopeid 0x20<link>
    ether fe:54:00:ae:02:78 txqueuelen 1000 (Ethernet)
    RX packets 160 bytes 15462 (15.0 KiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 4271 bytes 227026 (221.7 KiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

On the newly created virtual machine, edit the NIC configuration so the interface comes up at boot, then check it with ip ad li. The NIC has an address because of DHCP:

    [root@linux-node3 ~]# ps aux|grep dns
    nobody 1519 0.0 0.0 15544 468 ? S 06:38 0:00 /sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper
    root 1522 0.0 0.0 15516 164 ? S 06:38 0:00 /sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper
    root 7662 0.0 0.0 112668 968 pts/2 S+ 10:36 0:00 grep --color=auto dns

The address range that libvirt hands out can be seen here:

    [root@linux-node3 ~]# cat /var/lib/libvirt/dnsmasq/default.conf
    ##WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
    ##OVERWRITTEN AND LOST. Changes to this configuration should be made using:
    ## virsh net-edit default
    ## or other application using the libvirt API.
    ##
    ## dnsmasq conf file created by libvirt
    strict-order
    pid-file=/var/run/libvirt/network/default.pid
    except-interface=lo
    bind-dynamic
    interface=virbr0
    dhcp-range=192.168.122.2,192.168.122.254 #address range handed out by DHCP
    dhcp-no-override
    dhcp-lease-max=253
    dhcp-hostsfile=/var/lib/libvirt/dnsmasq/default.hostsfile
    addn-hosts=/var/lib/libvirt/dnsmasq/default.addnhosts
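To pull just the DHCP range out of that generated file, something like the following sketch may help. The function name dhcp_range is an assumption for illustration; it only reads the file and, as the file's own warning says, real changes belong in virsh net-edit default.

```shell
# Print the DHCP start and end addresses from a dnsmasq config file.
# dhcp_range is a hypothetical helper; it expects a "dhcp-range=START,END" line.
dhcp_range() {
    local conf="$1"
    # Capture the two comma-separated addresses and ignore anything after them.
    sed -n 's/^dhcp-range=\([0-9.]*\),\([0-9.]*\).*/\1 \2/p' "$conf"
}
```

For the file shown above, dhcp_range /var/lib/libvirt/dnsmasq/default.conf would be expected to print the two addresses 192.168.122.2 and 192.168.122.254 separated by a space.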

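3. CPU hot-add

virsh edit CentOS-7.1-x86_64 #edit the domain's libvirt XML definition

<vcpu placement='auto' current='1'>4</vcpu> #CPU hot-add with a maximum of 4 vCPUs; CPU hot-add is only possible on CentOS 7

This is roughly equivalent to editing the corresponding file directly:

    [root@linux-node3 qemu]# pwd
    /etc/libvirt/qemu
    [root@linux-node3 qemu]# ls
    CentOS-7.1-x86_64.xml CentOS-7.2-x86_64.xml networks

After the XML is edited, the change only takes effect once the virtual machine has been shut down and started again:

    [root@linux-node3 ~]# virsh shutdown CentOS-7.1-x86_64 #after editing the XML, a restart is needed for it to take effect
    Domain CentOS-7.1-x86_64 is being shutdown
    [root@linux-node3 ~]# virsh start CentOS-7.1-x86_64
    Domain CentOS-7.1-x86_64 started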

Change the number of CPUs to 2

[root@linux-node3 ~]# virsh setvcpus CentOS-7.1-x86_64 2 --live

Then run cat /proc/cpuinfo inside the virtual machine; it now shows two CPUs

Check whether a CPU is online

    [root@linux-node3 ~]# cat /sys/devices/system/cpu/cpu0/online
    1

The number of CPUs can only be increased, not decreased

    [root@linux-node3 ~]# virsh setvcpus CentOS-7.1-x86_64 1 --live #can only add, not remove
    error: unsupported configuration: failed to find appropriate hotpluggable vcpus to reach the desired target vcpu count

Note: CPU hot-add cannot exceed the maximum number of CPUs;
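The two rules above (the count can only grow, and never beyond the maximum in the vcpu element) can be checked before calling virsh. The sketch below is only an illustration: can_hotadd_vcpus is a hypothetical helper, and DOMAIN in its output is a placeholder for the real domain name.

```shell
# Decide whether a live vCPU change is allowed, given the values from
# <vcpu current='CURRENT'>MAX</vcpu>. Illustrative helper, not a virsh feature.
can_hotadd_vcpus() {
    local current="$1" max="$2" target="$3"
    if [ "$target" -lt "$current" ]; then
        echo "refused: vCPUs can only be added, not removed"
        return 1
    fi
    if [ "$target" -gt "$max" ]; then
        echo "refused: target exceeds the maximum of $max"
        return 1
    fi
    # The check passed; print the command that would apply the change.
    echo "ok: virsh setvcpus DOMAIN $target --live"
}
```

With current=1 and a maximum of 4, a target of 2 passes, while a target of 5 is refused, as is going from 2 back down to 1.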

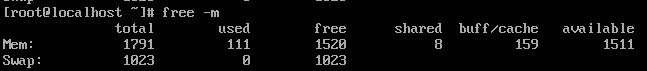

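4. Memory hot-add

<memory unit='KiB'>2048576</memory> #maximum memory; the memory size can be changed, but never above this maximum;

After this change, the virtual machine has to be restarted;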

[root@linux-node3 ~]# virsh qemu-monitor-command --help

NAME

qemu-monitor-command - QEMU Monitor Command

SYNOPSIS

qemu-monitor-command <domain> [--hmp] [--pretty] {[--cmd] <string>}...

DESCRIPTION

QEMU Monitor Command

OPTIONS

[--domain] <string> domain name, id or uuid

--hmp command is in human monitor protocol

--pretty pretty-print any qemu monitor protocol output

[--cmd] <string> command

[root@linux-node3 ~]# virsh qemu-monitor-command CentOS-7.1-x86_64 --hmp --cmd info

info balloon -- show balloon information

info block -- show the block devices

info block-jobs -- show progress of ongoing block device operations

info blockstats -- show block device statistics

info capture -- show capture information

info chardev -- show the character devices

info cpus -- show infos for each CPU

info history -- show the command line history

info irq -- show the interrupts statistics (if available)

info jit -- show dynamic compiler info

info kvm -- show KVM information

info mem -- show the active virtual memory mappings

info mice -- show which guest mouse is receiving events

info migrate -- show migration status

info migrate_cache_size -- show current migration xbzrle cache size

info migrate_capabilities -- show current migration capabilities

info mtree -- show memory tree

info name -- show the current VM name

info network -- show the network state

info numa -- show NUMA information

info pci -- show PCI info

info pcmcia -- show guest PCMCIA status

info pic -- show i8259 (PIC) state

info profile -- show profiling information

info qdm -- show qdev device model list

info qtree -- show device tree

info registers -- show the cpu registers

info roms -- show roms

info snapshots -- show the currently saved VM snapshots

info spice -- show the spice server status

info status -- show the current VM status (running|paused)

info tlb -- show virtual to physical memory mappings

info tpm -- show the TPM device

info trace-events -- show available trace-events & their state

info usb -- show guest USB devices

info usbhost -- show host USB devices

info usernet -- show user network stack connection states

info uuid -- show the current VM UUID

info version -- show the version of QEMU

info vnc -- show the vnc server status

Set the virtual machine's memory size; memory can be both increased and decreased

    [root@linux-node3 ~]# virsh qemu-monitor-command CentOS-7.1-x86_64 --hmp --cmd balloon 600 #set memory to 600 MB
    [root@linux-node3 ~]# virsh qemu-monitor-command CentOS-7.1-x86_64 --hmp --cmd info balloon
    balloon: actual=600
    [root@linux-node3 ~]# virsh qemu-monitor-command CentOS-7.1-x86_64 --hmp --cmd balloon 2000 #set memory to roughly 2 GB (2000 MB)
    [root@linux-node3 ~]# virsh qemu-monitor-command CentOS-7.1-x86_64 --hmp --cmd info balloon
    balloon: actual=2000
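The balloon command takes megabytes while the XML maximum is in KiB, so it can be handy to convert before picking a target. A minimal sketch, with max_balloon_mib and balloon_fits as hypothetical helper names:

```shell
# Largest whole-MiB balloon target allowed by <memory unit='KiB'>N</memory>.
max_balloon_mib() {
    echo $(( $1 / 1024 ))   # integer division: KiB to whole MiB
}

# Succeed only if the requested balloon size (MiB) fits under the maximum (KiB).
balloon_fits() {
    local target_mib="$1" max_kib="$2"
    [ "$target_mib" -le "$(max_balloon_mib "$max_kib")" ]
}

max_balloon_mib 2048576   # prints 2000
```

This matches the transcript above: with a maximum of 2048576 KiB, a balloon target of 2000 is the largest whole-megabyte value that fits.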

5. Disks

Fully allocated image mode: raw; sparse mode: qcow2 (the preferred format for OpenStack)

View the disk information

    [root@linux-node3 opt]# ll
    total 14763012
    -rw-r--r--. 1 qemu qemu 4379901952 Feb 12 00:03 CentOS-7.1.iso
    -rw-------. 1 qemu qemu 10737418240 Feb 12 15:06 CentOS-7.1-x86_64
    drwxr-xr-x. 2 root root 6 Mar 26 2015 rh
    [root@linux-node3 opt]# qemu-img info CentOS-7.1-x86_64
    image: CentOS-7.1-x86_64
    file format: raw
    virtual size: 10G (10737418240 bytes)
    disk size: 10G

6. Networking

The default is NAT

How can NAT be changed to bridged mode?

View the bridges

[root@linux-node3 ~]# brctl show

bridge name bridge id STP enabled interfaces

virbr0 8000.5254003ca721 yes virbr0-nic

vnet0

vnet1

Add a bridge and attach eth0 to it

    [root@linux-node3 ~]# brctl addbr br0 #add a bridge
    [root@linux-node3 ~]# brctl show
    bridge name bridge id STP enabled interfaces
    br0 8000.000000000000 no
    virbr0 8000.5254003ca721 yes virbr0-nic
    vnet0
    [root@linux-node3 ~]# brctl addif br0 eth0 #attach eth0 to the bridge (the network connection drops at this point)

Remove the IP address from eth0 and assign it to br0; after that, the connection can be re-established
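Because attaching eth0 to the bridge cuts the SSH session, the whole sequence is usually run in one go from a script or the console. The sketch below only prints such a sequence instead of executing it. bridge_plan is a hypothetical helper, and the gateway address 192.168.74.2 is an assumed placeholder that does not come from these notes; substitute the real default gateway.

```shell
# Print (not run) the commands that move an address from a NIC to a bridge.
# All four arguments are placeholders supplied by the caller.
bridge_plan() {
    local nic="$1" bridge="$2" cidr="$3" gateway="$4"
    cat <<EOF
brctl addbr $bridge
brctl addif $bridge $nic
ip addr del $cidr dev $nic
ip addr add $cidr dev $bridge
ip link set $bridge up
ip route add default via $gateway
EOF
}

# Example with the host address from these notes and an assumed gateway.
bridge_plan eth0 br0 192.168.74.22/24 192.168.74.2
```

Printing first makes it easy to review the plan; the output can then be saved to a file and run as a single script so that a dropped connection does not leave the host half-configured.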

[root@linux-node3 ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000c29385f8a no eth0

virbr0 8000.5254003ca721 yes virbr0-nic

vnet0

vnet1

The NIC is now bridged

[root@linux-node3 ~]# ifconfig

br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.74.22 netmask 255.255.255.0 broadcast 192.168.74.255

inet6 fe80::20c:29ff:fe38:5f8a prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:38:5f:8a txqueuelen 1000 (Ethernet)

RX packets 105 bytes 15245 (14.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 80 bytes 11974 (11.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::38c2:5066:2880:ac5f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:38:5f:8a txqueuelen 1000 (Ethernet)

RX packets 323111 bytes 35975466 (34.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 304017 bytes 436428151 (416.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 130 bytes 13769 (13.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 130 bytes 13769 (13.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:3c:a7:21 txqueuelen 1000 (Ethernet)

RX packets 538 bytes 36160 (35.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 411 bytes 35200 (34.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vnet0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::fc54:ff:feae:278 prefixlen 64 scopeid 0x20<link>

ether fe:54:00:ae:02:78 txqueuelen 1000 (Ethernet)

RX packets 523 bytes 41742 (40.7 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8501 bytes 456432 (445.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vnet1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::fc54:ff:feaa:3c07 prefixlen 64 scopeid 0x20<link>

ether fe:54:00:aa:3c:07 txqueuelen 1000 (Ethernet)

RX packets 15 bytes 1950 (1.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 819 bytes 43640 (42.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

7. Deleting a virtual machine

    virsh undefine CentOS-7.1-x86_64 #once undefined, the domain can no longer be found; back up the XML first
    [root@linux-node3 ~]# virsh suspend CentOS-7.1-x86_64 #suspend
    Domain CentOS-7.1-x86_64 suspended
    [root@linux-node3 ~]# virsh list
    Id Name State
    ----------------------------------------------------
    13 CentOS-7.1-x86_64 paused
    [root@linux-node3 ~]# virsh resume CentOS-7.1-x86_64 #resume
    Domain CentOS-7.1-x86_64 resumed
    [root@linux-node3 ~]# virsh list
    Id Name State
    ----------------------------------------------------
    13 CentOS-7.1-x86_64 running
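One way to follow the advice about backing up the XML is to dump it right before undefining. This is a sketch under assumptions: backup_and_undefine, the default backup directory, and the VIRSH override variable (which allows a dry run) are all made up for this example; virsh dumpxml, virsh undefine, and virsh define are the real subcommands involved.

```shell
# Save a domain's XML definition, then undefine the domain.
# VIRSH may be set to another command (for example echo) for a dry run.
backup_and_undefine() {
    local domain="$1" backup_dir="${2:-/root/kvm-xml-backup}"
    local virsh_cmd="${VIRSH:-virsh}"
    mkdir -p "$backup_dir" || return 1
    # dumpxml prints the current definition; keep it so it can be restored later.
    "$virsh_cmd" dumpxml "$domain" > "$backup_dir/$domain.xml" || return 1
    "$virsh_cmd" undefine "$domain"
}
```

The saved file can later be fed back with virsh define to recreate the domain. Undefining does not touch the disk image, so the guest's data stays in place.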

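III. KVM Performance Tuning

1. CPU tuning

The CPU model seen by the guest is emulated by qemu;

x86 has four privilege levels: ring0 through ring3

ring0 is kernel mode (can access hardware directly); ring3 is user mode (cannot access hardware directly);

When a process needs to use hardware, it has to switch to ring0; these notes refer to that switch as a context switch;

In practice only ring0 and ring3 are used;

VT-x: hardware support that implements this switching for virtual machines;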

View the CPU status

    [root@linux-node3 /]# lscpu #view the CPU status
    Architecture: x86_64
    CPU op-mode(s): 32-bit, 64-bit
    Byte Order: Little Endian
    CPU(s): 1
    On-line CPU(s) list: 0
    Thread(s) per core: 1
    Core(s) per socket: 1
    Socket(s): 1
    NUMA node(s): 1
    Vendor ID: GenuineIntel
    CPU family: 6
    Model: 78
    Model name: Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz
    Stepping: 3
    CPU MHz: 2400.000
    BogoMIPS: 4800.01
    Virtualization: VT-x
    Hypervisor vendor: VMware
    Virtualization type: full
    L1d cache: 32K #L1 data cache
    L1i cache: 32K #L1 instruction cache
    L2 cache: 256K
    L3 cache: 3072K
    NUMA node0 CPU(s): 0

Reduce cache misses

CPU pinning in the libvirt XML, or taskset: bind a process to a specific CPU;
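A minimal sketch of the taskset approach, assuming a Linux host with util-linux installed. The helper names cpu_affinity and pin_to_cpu are made up for this example; taskset -cp takes a CPU list followed by a PID.

```shell
# Show which CPUs a process may run on, read from /proc (Linux only).
cpu_affinity() {
    local pid="${1:-$$}"
    awk '/^Cpus_allowed_list:/ {print $2}' "/proc/$pid/status"
}

# Pin a process to a single CPU: -c means "CPU list", -p means "existing PID".
pin_to_cpu() {
    local pid="$1" cpu="$2"
    taskset -cp "$cpu" "$pid"
}
```

For a guest, the PID would be that of its qemu-kvm process on the host, for example 4830 in the earlier ps listing. On the XML side, libvirt expresses the same idea with vcpupin entries inside a cputune element.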

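2. Memory tuning

Memory addressing: EPT

Memory merging: KSM

Huge pages: khugepaged merges contiguous 4 KB pages into 2 MB pages;

1. Host virtual memory --> host physical memory

Shadow page tables: guest virtual memory -> guest physical memory

    [root@linux-node3 /]# cat /sys/kernel/mm/transparent_hugepage/enabled
    [always] madvise never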
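To put the 4 KB versus 2 MB figures in perspective, the arithmetic below shows how many base pages one huge page replaces. pages_per_hugepage is a hypothetical helper name; both sizes are given in KiB.

```shell
# How many base pages make up one huge page (both sizes in KiB).
pages_per_hugepage() {
    local base_kib="$1" huge_kib="$2"
    echo $(( huge_kib / base_kib ))
}

pages_per_hugepage 4 2048   # prints 512
```

One 2 MB huge page therefore stands in for 512 separate 4 KB pages, which means far fewer page-table entries and TLB entries for the same amount of memory.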

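3. I/O tuning

Kernel tuning is rarely done in general; trimming the kernel is an option;

Virtio (a virtualized queue interface): virtio-net, virtio-blk; paravirtualization, specifically I/O paravirtualization

Linux I/O scheduling algorithms:

cfq: completely fair queuing I/O scheduler; noop (a simple FIFO queue): used for SSDs;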

View the I/O schedulers

    [root@linux-node3 /]# dmesg |grep -i scheduler
    [ 1.264325] io scheduler noop registered
    [ 1.264327] io scheduler deadline registered (default)
    [ 1.264344] io scheduler cfq registered

Change the I/O scheduler

    [root@linux-node3 /]# cat /sys/block/sda/queue/scheduler
    noop [deadline] cfq
    [root@linux-node3 /]# echo cfq >/sys/block/sda/queue/scheduler #change the I/O scheduler; to make it permanent, set a kernel boot parameter
    [root@linux-node3 /]# cat /sys/block/sda/queue/scheduler
    noop deadline [cfq]

elevator=noop #kernel boot parameter that makes the scheduler choice permanent
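In these sysfs files the active choice is the entry in square brackets. A small sketch for extracting it, with active_choice as a hypothetical helper name:

```shell
# Print the bracketed (active) entry from a sysfs choice file,
# for example "deadline" from a line reading "noop [deadline] cfq".
active_choice() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p' "$1"
}
```

The same function works on /sys/block/sda/queue/scheduler and on /sys/kernel/mm/transparent_hugepage/enabled, since both use the bracket convention.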

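IV. oVirt (the Open-Source Version of RHEV) and Summary:

1. Building an image: when partitioning, create only a single / partition; a swap partition is not recommended

2. Remove the UUID and MAC address from the virtual machine's NIC configuration

3. Install the base packages: net-tools lrzsz screen tree vim wget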
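Step 2 above can be scripted. The sketch below assumes a CentOS-style ifcfg file in which the identifiers appear as UUID= and HWADDR= lines; strip_nic_ids is a hypothetical helper, and the in-place sed -i form assumes GNU sed.

```shell
# Remove host-specific identifiers from an ifcfg file before templating an image.
# Deletes every line that starts with UUID= or HWADDR= and leaves the rest alone.
strip_nic_ids() {
    local ifcfg="$1"
    sed -i -e '/^UUID=/d' -e '/^HWADDR=/d' "$ifcfg"
}
```

On CentOS 7 the file would typically be /etc/sysconfig/network-scripts/ifcfg-eth0. Without these lines, clones of the image do not inherit the template's NIC identity.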

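KVM management platforms:

OpenStack

CloudStack: Cloud.com --> Citrix --> Apache Foundation; written in Java

OpenNebula

oVirt: the open-source version of RHEV;

oVirt: consists of a management side plus a client side

oVirt Engine (comparable to vCenter); oVirt host/node (comparable to ESXi)

Open-source mail servers:

iRedMail, ExtMail, Zimbra (open-source edition); nowadays: Tencent enterprise mail; ZStack; SEO: search engine optimization;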

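Homework:

1. Try out oVirt

2. Prepare the OpenStack environment: two CentOS 7.1 systems with 2 GB of memory each: linux-node1.oldboy.com linux-node2.oldboy.com

3. 50 GB disk

4. TightVNC Viewer as the VNC client

5. SOA, message queues, REST API, distributed storage and object storage

Dubbo: Alibaba's open-source SOA framework, currently used by JD.com and Dangdang among others; it is RPC-based and uses ZooKeeper as its registry;

Summary:

Server virtualization, desktop virtualization, application virtualization; hardware virtualization: Intel VT-x/EPT, AMD AMD-V/RVI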