Spark Performance Tuning

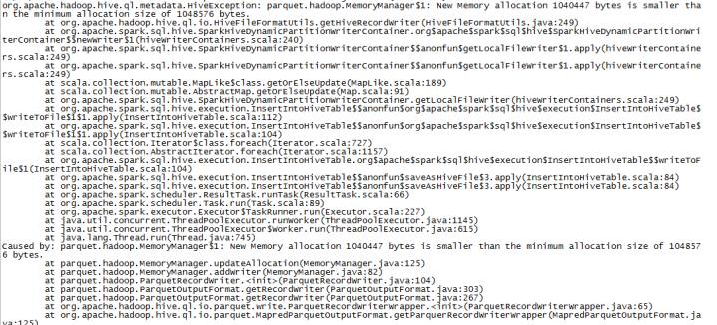

1. Spark aggregation job fails

Cause: the default Parquet and dynamic-partition settings in Hive are too small for this job.

hiveContext.setConf("parquet.memory.min.chunk.size", "100000")

hiveContext.setConf("hive.exec.max.dynamic.partitions", "100000")
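A minimal sketch of where these two settings could sit in a job, assuming the Spark 1.x `HiveContext` API that the post uses. The object name and app name are placeholders for illustration, and the `getConf` read-back is only a sanity check, not part of the original fix.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.hive.HiveContext

object HiveConfSketch {
  def main(args: Array[String]): Unit = {
    // App name is a placeholder; the master is expected to come from spark-submit.
    val sc = new SparkContext(new SparkConf().setAppName("hive-conf-sketch"))
    val hiveContext = new HiveContext(sc)

    // Values taken from the post; tune them for your own data volume.
    hiveContext.setConf("parquet.memory.min.chunk.size", "100000")
    hiveContext.setConf("hive.exec.max.dynamic.partitions", "100000")

    // Read one value back to confirm it was applied to this session.
    println(hiveContext.getConf("hive.exec.max.dynamic.partitions"))

    sc.stop()
  }
}
```

Setting the values before running the first write keeps them in effect for every query issued through this `hiveContext`.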

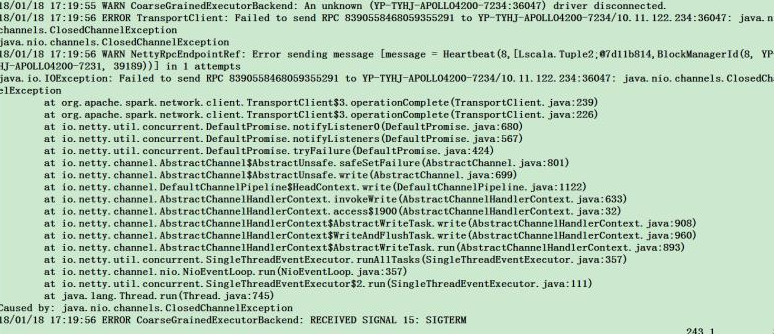

2. Errors when loading Hive data into HBase

Cause:

executor_memory and driver_memory were too small. After increasing the memory, a connection timeout error still appeared.

Fix for the connection timeout: spark.network.timeout=1400s
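The timeout and executor memory can be set in code as roughly sketched here. The `1400s` value is from the post; the `8g` memory size, the object name, and the app name are hypothetical placeholders, since the post does not say what values were used.

```scala
import org.apache.spark.SparkConf

object TimeoutConfSketch {
  // Builds a SparkConf carrying the network timeout from the post.
  // The memory size below is a hypothetical placeholder, not a value from the post.
  def buildConf(): SparkConf =
    new SparkConf()
      .setAppName("hive-to-hbase-sketch") // placeholder name
      .set("spark.network.timeout", "1400s")
      .set("spark.executor.memory", "8g")

  def main(args: Array[String]): Unit = {
    // Print the settings so they can be checked before the job is submitted.
    buildConf().getAll.foreach { case (k, v) => println(s"$k=$v") }
  }
}
```

Driver memory is left out on purpose: in client mode the driver JVM is already running by the time this code executes, so it is normally passed on the command line with `--driver-memory` instead.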

With that resolved, the following errors still appeared:

18/01/19 10:46:44 WARN NettyRpcEndpointRef: Error sending message [message = Heartbeat(14,[Lscala.Tuple2;@3ce02577,BlockManagerId(14, YP-TYHJ-APOLLO4200-7232, 46653))] in 1 attempts

org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [10 seconds]. This timeout is controlled by spark.executor.heartbeatInterval

at org.apache.spark.rpc.RpcTimeout.org$apache$spark$rpc$RpcTimeout$$createRpcTimeoutException(RpcTimeout.scala:48)

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:59)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:33)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:76)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

at org.apache.spark.executor.Executor.org$apache$spark$executor$Executor$$reportHeartBeat(Executor.scala:476)

at org.apache.spark.executor.Executor$$anon$1$$anonfun$run$1.apply$mcV$sp(Executor.scala:505)

at org.apache.spark.executor.Executor$$anon$1$$anonfun$run$1.apply(Executor.scala:505)

at org.apache.spark.executor.Executor$$anon$1$$anonfun$run$1.apply(Executor.scala:505)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1801)

at org.apache.spark.executor.Executor$$anon$1.run(Executor.scala:505)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:304)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [10 seconds]

at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:219)

at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)

at scala.concurrent.Await$$anonfun$result$1.apply(package.scala:107)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

at scala.concurrent.Await$.result(package.scala:107)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

... 14 more

18/01/19 10:46:46 WARN CoarseGrainedExecutorBackend: An unknown (YP-TYHJ-APOLLO4200-8028:40221) driver disconnected.

18/01/19 10:46:47 ERROR TransportClient: Failed to send RPC 5730830365128558454 to YP-TYHJ-APOLLO4200-8028/10.11.123.28:40221: java.nio.channels.ClosedChannelException

java.nio.channels.ClosedChannelException

18/01/19 10:46:47 WARN NettyRpcEndpointRef: Error sending message [message = Heartbeat(14,[Lscala.Tuple2;@3ce02577,BlockManagerId(14, YP-TYHJ-APOLLO4200-7232, 46653))] in 2 attempts

java.io.IOException: Failed to send RPC 5730830365128558454 to YP-TYHJ-APOLLO4200-8028/10.11.123.28:40221: java.nio.channels.ClosedChannelException

at org.apache.spark.network.client.TransportClient$3.operationComplete(TransportClient.java:239)

at org.apache.spark.network.client.TransportClient$3.operationComplete(TransportClient.java:226)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:680)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:567)

at io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:424)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetFailure(AbstractChannel.java:801)

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(AbstractChannel.java:699)

at io.netty.channel.DefaultChannelPipeline$HeadContext.write(DefaultChannelPipeline.java:1122)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite(AbstractChannelHandlerContext.java:633)

at io.netty.channel.AbstractChannelHandlerContext.access$1900(AbstractChannelHandlerContext.java:32)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.write(AbstractChannelHandlerContext.java:908)

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:960)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:893)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:357)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:357)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.nio.channels.ClosedChannelException

18/01/19 10:46:47 ERROR CoarseGrainedExecutorBackend: RECEIVED SIGNAL 15: SIGTERM

18/01/19 10:46:47 INFO DiskBlockManager: Shutdown hook called

18/01/19 10:46:47 INFO ShutdownHookManager: Shutdown hook called

The errors above were caused by insufficient resources. The fix is to size the JVM permanent generation (PermGen):

spark.driver.extraJavaOptions="-XX:PermSize=2g -XX:MaxPermSize=2g"
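To check whether the PermGen flags actually reached a JVM, something like the following could be run inside the driver. It is only a sketch: the object and method names are made up for illustration, and it uses the standard `java.lang.management` API rather than anything Spark-specific. Two caveats worth keeping in mind: in client mode `spark.driver.extraJavaOptions` has to come from the default properties file or `--driver-java-options`, because the driver JVM has already started when application code runs; and PermGen was removed in Java 8, so these flags only matter on Java 7 and earlier.

```scala
import java.lang.management.ManagementFactory
import scala.collection.JavaConverters._

object JvmFlagCheck {
  // Keeps only the PermGen-related flags from a list of JVM arguments.
  def permGenFlags(jvmArgs: Seq[String]): Seq[String] =
    jvmArgs.filter(a => a.startsWith("-XX:PermSize") || a.startsWith("-XX:MaxPermSize"))

  def main(args: Array[String]): Unit = {
    // Arguments the current JVM was actually started with.
    val jvmArgs = ManagementFactory.getRuntimeMXBean.getInputArguments.asScala.toSeq
    val found = permGenFlags(jvmArgs)
    if (found.isEmpty) println("No PermGen flags found on this JVM")
    else found.foreach(println)
  }
}
```

If the flags do not show up, the option was most likely set too late or in the wrong place for the deploy mode in use.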
