Setting up minikube on an ECS instance

OS version: CentOS 8.2

Docker version: 20.10.17

minikube version: v1.26.1

Kubernetes version: v1.24.3

Install Docker

sudo yum remove docker docker-common docker-selinux docker-engine

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

sudo yum makecache

sudo yum -y install docker-ce

# Start Docker

systemctl enable docker --now

# Verify the version

docker version

Configure your own registry mirror, or use the configuration below:

# Docker registry mirror configuration

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://ke9h1pt4.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

Download minikube

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

Start minikube

Before running minikube start, take note of the following options:

--base-image string The base image to use for docker/podman drivers. Intended for local development. (default "gcr.io/k8s-minikube/kicbase-builds:v0.0.33-1659486857-14721@sha256:98c8007234ca882b63abc707dc184c585fcb5372828b49a4b639961324d291b3")

--binary-mirror string Location to fetch kubectl, kubelet, & kubeadm binaries from.

--image-mirror-country string Country code of the image mirror to be used. Leave empty to use the global one. For Chinese mainland users, set it to cn.

--image-repository string Alternative image repository to pull docker images from. This can be used when you have limited access to gcr.io. Set it to "auto" to let minikube decide one for you. For Chinese mainland users, you may use local gcr.io mirrors such as registry.cn-hangzhou.aliyuncs.com/google_containers

--registry-mirror strings Registry mirrors to pass to the Docker daemon

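Note: running minikube as a regular (non-root) user is recommended.

Run:

$ minikube start \

--image-mirror-country=cn \

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

Running it as root fails with the docker driver:

[root@VM-4-9-centos ~]# minikube start \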

> --image-mirror-country=cn \

> --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

😄 minikube v1.26.1 on Centos 8.2.2004 (amd64)

✨ Automatically selected the docker driver. Other choices: ssh, none

🛑 The "docker" driver should not be used with root privileges. If you wish to continue as root, use --force.

💡 If you are running minikube within a VM, consider using --driver=none:

📘 https://minikube.sigs.k8s.io/docs/reference/drivers/none/

❌ Exiting due to DRV_AS_ROOT: The "docker" driver should not be used with root privileges.

When installing as root, you can add the --force option.

Run again:

[root@VM-4-9-centos ~]# minikube start --force --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

😄 minikube v1.26.1 on Centos 8.2.2004 (amd64)

❗ minikube skips various validations when --force is supplied; this may lead to unexpected behavior

✨ Using the docker driver based on existing profile

🛑 The "docker" driver should not be used with root privileges. If you wish to continue as root, use --force.

💡 If you are running minikube within a VM, consider using --driver=none:

📘 https://minikube.sigs.k8s.io/docs/reference/drivers/none/

💡 Tip: To remove this root owned cluster, run: sudo minikube delete

👍 Starting control plane node minikube in cluster minikube

🚜 Pulling base image ...

🏃 Updating the running docker "minikube" container ...

🐳 Preparing Kubernetes v1.24.3 on Docker 20.10.17 ...

🔎 Verifying Kubernetes components...

▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner:v5

🌟 Enabled addons: storage-provisioner, default-storageclass

💡 kubectl not found. If you need it, try: 'minikube kubectl -- get pods -A'

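🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

If something goes wrong during installation, check the logs:

$ minikube logs

To make kubectl easier to use afterwards, you can define an alias and add it to .bashrc:

alias kubectl="minikube kubectl --"

However, this does not work well. Aliases are only expanded in interactive shells, so scripts and build tools cannot see them, and during operator development you will keep getting "kubectl not found" errors.

For convenience, it is better to install a standalone kubectl.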

Install kubectl

Configure the yum repository:

# Configure the Kubernetes yum repository; the Aliyun mirror is used here

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubectl (Kubernetes v1.24.3 was installed above, so use the matching version):

yum -y install kubectl-1.24.3

Basic operations

List pods:

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f74c56694-227v8 1/1 Running 0 6m16s

kube-system etcd-minikube 1/1 Running 3 (6m36s ago) 7m20s

kube-system kube-apiserver-minikube 1/1 Running 3 (6m36s ago) 7m10s

kube-system kube-controller-manager-minikube 1/1 Running 3 (6m36s ago) 7m1s

kube-system kube-proxy-vk786 1/1 Running 0 6m16s

kube-system kube-scheduler-minikube 1/1 Running 2 (6m36s ago) 7m4s

kube-system storage-provisioner 1/1 Running 1 (5m55s ago) 6m26s

Install the dashboard

$ minikube dashboard --url

🔌 Enabling dashboard ...

▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-scraper:v1.0.8

▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/dashboard:v2.6.0

🤔 Verifying dashboard health ...

🚀 Launching proxy ...

🤔 Verifying proxy health ...

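http://127.0.0.1:37777/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/

This URL is only reachable from inside the host. Because the host is an ECS instance, the address cannot be opened directly from outside it.

Running kubectl proxy, bound to all interfaces, solves this:

$ kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'

Notes:

1. A firewall rule allowing traffic on port 8001 must be added on the ECS instance.

2. Other approaches to access from outside the ECS instance also work, such as Ingress plus nginx.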
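With the proxy listening on port 8001, the dashboard should be reachable from outside by replacing the local address in the URL printed by minikube dashboard with the instance's public IP. The sketch below only performs that substitution. The function name dashboard_url and the sample address 203.0.113.10 are illustrative assumptions, not part of the original setup. Since kubectl proxy forwards requests using the local kubeconfig credentials and does not authenticate callers itself, it is worth restricting the firewall rule to trusted source addresses.

```shell
# Hypothetical helper: build the dashboard URL served through kubectl proxy.
# $1 = public IP or host name of the ECS instance (assumed value in the example)
# $2 = proxy port, defaults to 8001 (the kubectl proxy default seen in this post)
dashboard_url() {
    host="$1"
    port="${2:-8001}"
    printf 'http://%s:%s/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/\n' "$host" "$port"
}

# Example with a documentation-only address:
dashboard_url 203.0.113.10
```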

To uninstall the dashboard:

$ minikube addons disable dashboard

minikube also provides a rich set of addons:

$ minikube addons list

|-----------------------------|--------------------------------|

| ADDON NAME | MAINTAINER |

|-----------------------------|--------------------------------|

| ambassador | 3rd party (Ambassador) |

| auto-pause | Google |

| csi-hostpath-driver | Kubernetes |

| dashboard | Kubernetes |

| default-storageclass | Kubernetes |

| efk | 3rd party (Elastic) |

| freshpod | Google |

| gcp-auth | Google |

| gvisor | Google |

| headlamp | 3rd party (kinvolk.io) |

| helm-tiller | 3rd party (Helm) |

| inaccel | 3rd party (InAccel |

| | [info@inaccel.com]) |

| ingress | Kubernetes |

| ingress-dns | Google |

| istio | 3rd party (Istio) |

| istio-provisioner | 3rd party (Istio) |

| kong | 3rd party (Kong HQ) |

| kubevirt | 3rd party (KubeVirt) |

| logviewer | 3rd party (unknown) |

| metallb | 3rd party (MetalLB) |

| metrics-server | Kubernetes |

| nvidia-driver-installer | Google |

| nvidia-gpu-device-plugin | 3rd party (Nvidia) |

| olm | 3rd party (Operator Framework) |

| pod-security-policy | 3rd party (unknown) |

| portainer | 3rd party (Portainer.io) |

| registry | Google |

| registry-aliases | 3rd party (unknown) |

| registry-creds | 3rd party (UPMC Enterprises) |

| storage-provisioner | Google |

| storage-provisioner-gluster | 3rd party (Gluster) |

| volumesnapshots | Kubernetes |

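|-----------------------------|--------------------------------|

Install Ingress

$ minikube addons enable ingress

What gets installed here is actually the Ingress controller. It continuously watches the API server's Ingress resources (the /ingress endpoint, that is, the user-defined rules for forwarding to backend services). When the services behind those rules change, the Ingress controller automatically updates its forwarding rules.

When Ingress is used for service routing, the Ingress controller forwards client requests, based on the Ingress rules, directly to the backend Endpoints (Pods) of the Service. This bypasses the forwarding rules set up by kube-proxy and improves forwarding efficiency.

Kubernetes combines an Ingress policy definition with an Ingress controller that performs the actual forwarding. Together they provide service routing driven by flexible Ingress rules. When serving clients outside the Kubernetes cluster, the Ingress controller plays a role similar to an edge router.

By default it is installed into the "ingress-nginx" namespace: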

$ kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-qhmzt 0/1 Completed 0 32m

pod/ingress-nginx-admission-patch-zkbnb 0/1 Completed 1 32m

pod/ingress-nginx-controller-5b486bcc4b-2tlzk 1/1 Running 0 32m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller NodePort 10.97.194.74 <none> 80:32760/TCP,443:30787/TCP 32m

service/ingress-nginx-controller-admission ClusterIP 10.105.64.43 <none> 443/TCP 32m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 32m

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-5b486bcc4b 1 1 1 32m

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 7s 32m

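job.batch/ingress-nginx-admission-patch 1/1 8s 32m

The controller is deployed here as a Deployment, although in practice deploying it as a DaemonSet is more common. The addon creates the corresponding Deployment and Service.

Show the pod details: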

$ kubectl get pods -n ingress-nginx -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-qhmzt 0/1 Completed 0 34m 172.17.0.13 minikube <none> <none>

ingress-nginx-admission-patch-zkbnb 0/1 Completed 1 34m 172.17.0.12 minikube <none> <none>

ingress-nginx-controller-5b486bcc4b-2tlzk 1/1 Running 0 34m 172.17.0.9 minikube <none> <none>

Show the IngressClass:

$ kubectl get ingressclass

NAME CONTROLLER PARAMETERS AGE

nginx k8s.io/ingress-nginx <none> 8h

An IngressClass named nginx is created by default, with the controller k8s.io/ingress-nginx.

Inspect "service/ingress-nginx-controller":

$ kubectl describe service/ingress-nginx-controller -n ingress-nginx

Name: ingress-nginx-controller

Namespace: ingress-nginx

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/name=ingress-nginx

Annotations: <none>

Selector: app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.97.194.74

IPs: 10.97.194.74

Port: http 80/TCP

TargetPort: http/TCP

NodePort: http 32760/TCP

Endpoints: 172.17.0.9:80

Port: https 443/TCP

TargetPort: https/TCP

NodePort: https 30787/TCP

Endpoints: 172.17.0.9:443

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

Try accessing it from inside the minikube container:

root@minikube:/# curl localhost:80

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

root@minikube:/#

After installing the dashboard above, we used kubectl proxy to reach it from outside the ECS instance. The same can be achieved with Ingress plus nginx.

Create the Ingress

Look up the dashboard Service:

$ kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.96.116.15 <none> 8000/TCP 12d

kubernetes-dashboard ClusterIP 10.104.80.205 <none> 80/TCP 12d

Create the Ingress:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  rules:
  - host: kubernetes-dashboard.org
    http:
      paths:
      - path: /
        pathType: ImplementationSpecific
        backend:
          service:
            name: kubernetes-dashboard
            port:
              number: 80

Notes:

- host is user-defined

- service.name is the name of the dashboard Service

- service.port is the port of the dashboard Service
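The post does not show how the manifest is submitted to the cluster. One possible way, sketched under assumptions: the helper name write_dashboard_ingress and the file name dashboard-ingress.yaml are made up for illustration, while kubectl apply and kubectl get ingress are standard kubectl commands.

```shell
# Hypothetical helper: write the Ingress manifest from this section to the file given as $1.
write_dashboard_ingress() {
    cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  rules:
  - host: kubernetes-dashboard.org
    http:
      paths:
      - path: /
        pathType: ImplementationSpecific
        backend:
          service:
            name: kubernetes-dashboard
            port:
              number: 80
EOF
}

# Usage against a running cluster (file name is an assumption):
#   write_dashboard_ingress dashboard-ingress.yaml
#   kubectl apply -f dashboard-ingress.yaml
#   kubectl get ingress -n kubernetes-dashboard
```

Keeping the manifest in a file rather than piping it straight into kubectl makes it easy to re-apply after the cluster is recreated.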

Inside an ingress-nginx controller Pod, the generated configuration can be found under /etc/nginx/ (or /etc/nginx/conf.d). It contains the forwarding rules for kubernetes-dashboard.org:

$ kubectl exec -it ingress-nginx-controller-5b486bcc4b-2tlzk -n ingress-nginx -- cat /etc/nginx/nginx.conf

...

## start server kubernetes-dashboard.org

server {

server_name kubernetes-dashboard.org ;

listen 80 ;

listen 443 ssl http2 ;

set $proxy_upstream_name "-";

ssl_certificate_by_lua_block {

certificate.call()

}

location / {

set $namespace "kubernetes-dashboard";

set $ingress_name "kubernetes-dashboard";

set $service_name "kubernetes-dashboard";

set $service_port "80";

set $location_path "/";

set $global_rate_limit_exceeding n;

rewrite_by_lua_block {

lua_ingress.rewrite({

force_ssl_redirect = false,

ssl_redirect = true,

force_no_ssl_redirect = false,

preserve_trailing_slash = false,

use_port_in_redirects = false,

global_throttle = { namespace = "", limit = 0, window_size = 0, key = { }, ignored_cidrs = { } },

})

balancer.rewrite()

plugins.run()

}

# be careful with `access_by_lua_block` and `satisfy any` directives as satisfy any

# will always succeed when there's `access_by_lua_block` that does not have any lua code doing `ngx.exit(ngx.DECLINED)`

# other authentication method such as basic auth or external auth useless - all requests will be allowed.

#access_by_lua_block {

#}

header_filter_by_lua_block {

lua_ingress.header()

plugins.run()

}

body_filter_by_lua_block {

plugins.run()

}

log_by_lua_block {

balancer.log()

monitor.call()

plugins.run()

}

port_in_redirect off;

set $balancer_ewma_score -1;

set $proxy_upstream_name "kubernetes-dashboard-kubernetes-dashboard-80";

set $proxy_host $proxy_upstream_name;

set $pass_access_scheme $scheme;

set $pass_server_port $server_port;

set $best_http_host $http_host;

set $pass_port $pass_server_port;

set $proxy_alternative_upstream_name "";

client_max_body_size 1m;

proxy_set_header Host $best_http_host;

# Pass the extracted client certificate to the backend

# Allow websocket connections

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_set_header X-Request-ID $req_id;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header X-Forwarded-Host $best_http_host;

proxy_set_header X-Forwarded-Port $pass_port;

proxy_set_header X-Forwarded-Proto $pass_access_scheme;

proxy_set_header X-Forwarded-Scheme $pass_access_scheme;

proxy_set_header X-Scheme $pass_access_scheme;

# Pass the original X-Forwarded-For

proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

# mitigate HTTPoxy Vulnerability

# https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/

proxy_set_header Proxy "";

# Custom headers to proxied server

proxy_connect_timeout 5s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

proxy_buffering off;

proxy_buffer_size 4k;

proxy_buffers 4 4k;

proxy_max_temp_file_size 1024m;

proxy_request_buffering on;

proxy_http_version 1.1;

proxy_cookie_domain off;

proxy_cookie_path off;

# In case of errors try the next upstream server before returning an error

proxy_next_upstream error timeout;

proxy_next_upstream_timeout 0;

proxy_next_upstream_tries 3;

proxy_pass http://upstream_balancer;

proxy_redirect off;

}

}

## end server kubernetes-dashboard.org

...

Once the Ingress has been created, the Ingress controller picks up its routing rules and writes them into the nginx configuration file, where they take effect.
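Reading the whole generated file is tedious when the only question is whether a host has been picked up. A small sketch that lists the server_name values from an nginx configuration read on standard input; the helper name list_server_names is an assumption, and the pod name in the usage comment is the one from this post and will differ elsewhere.

```shell
# Hypothetical helper: print every server_name value found in an nginx config on stdin.
# Handles both "server_name host ;" (as generated by ingress-nginx) and "server_name host;".
list_server_names() {
    awk '$1 == "server_name" { sub(/;$/, "", $2); print $2 }'
}

# Usage against the controller pod (pod name taken from the output above):
#   kubectl exec -n ingress-nginx ingress-nginx-controller-5b486bcc4b-2tlzk -- \
#     cat /etc/nginx/nginx.conf | list_server_names
```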

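Install and configure nginx on the host

Install nginx:

$ yum -y install nginx

Set up the proxy by editing /etc/nginx/conf.d/nginx.conf on the host:

server {
    listen 8080;
    server_name kubernetes-dashboard.org;

    location / {
        proxy_pass http://kubernetes-dashboard.org;
    }
}

Start or reload nginx afterwards so that the configuration takes effect.

Add a hosts entry on the host:

echo $(minikube ip) kubernetes-dashboard.org | sudo tee -a /etc/hosts

Test it on the host; the page loads correctly: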

$ curl kubernetes-dashboard.org

<!--

Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

--><!DOCTYPE html><html lang="en" dir="ltr"><head>

<meta charset="utf-8">

<title>Kubernetes Dashboard</title>

<link rel="icon" type="image/png" href="assets/images/kubernetes-logo.png">

<meta name="viewport" content="width=device-width">

<style>html,body{height:100%;margin:0}*::-webkit-scrollbar{background:transparent;height:8px;width:8px}</style><link rel="stylesheet" href="styles.243e6d874431c8e8.css" media="print" onload="this.media='all'"><noscript><link rel="stylesheet" href="styles.243e6d874431c8e8.css"></noscript></head>

<body>

<kd-root></kd-root>

<script src="runtime.4e6659debd894e59.js" type="module"></script><script src="polyfills.5c84b93f78682d4f.js" type="module"></script><script src="scripts.2c4f58d7c579cacb.js" defer></script><script src="en.main.0d231cb1cda9c4cf.js" type="module"></script>

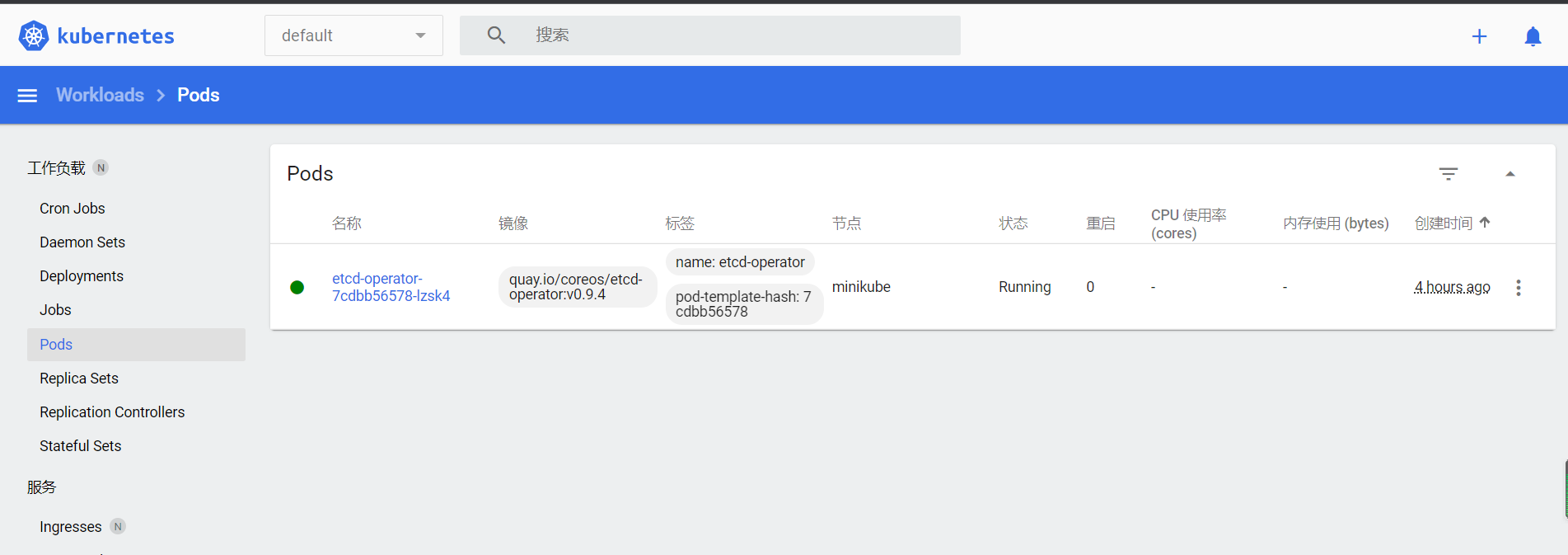

</body></html>在宿主机之外浏览器访问:http://弹性公网IP:8080/#/workloads?namespace=default
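One detail of the hosts mapping used above: the echo ... | tee -a command appends a new line every time it is run, so repeating the setup leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant follows; the helper name add_hosts_entry is an assumption, and writing to /etc/hosts requires root.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
# $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts).
add_hosts_entry() {
    ip="$1"
    name="$2"
    file="${3:-/etc/hosts}"
    # Look for NAME as an exact host field on any non-comment line.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        echo "$name is already mapped in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage as root on the host:
#   add_hosts_entry "$(minikube ip)" kubernetes-dashboard.org
```

The check only looks at the host name, so if the minikube IP changes after the cluster is recreated, the stale line still has to be edited by hand.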

Additional notes

The Docker process list shows that minikube is simply a Docker container running on the host:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b85acc8f48b0 registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.33 "/usr/local/bin/entr…" 9 hours ago Up 9 hours 127.0.0.1:49157->22/tcp, 127.0.0.1:49156->2376/tcp, 127.0.0.1:49155->5000/tcp, 127.0.0.1:49154->8443/tcp, 127.0.0.1:49153->32443/tcp minikube

Several ports are mapped:

$ docker inspect minikube

...

"Ports": {

"22/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "49157"

}

],

"2376/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "49156"

}

],

"32443/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "49153"

}

],

"5000/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "49155"

}

],

"8443/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "49154"

}

]

},

...

minikube's configuration is stored in the .minikube directory under the user's home directory. A quick look:

$ tree .minikube/

.minikube/
├── addons
├── cache
│   ├── images
│   │   └── amd64
│   │       └── registry.cn-hangzhou.aliyuncs.com    # downloaded Kubernetes component images are stored here
│   │           └── google_containers
│   │               ├── coredns_v1.8.6
│   │               ├── etcd_3.5.3-0
│   │               ├── kube-apiserver_v1.24.3
│   │               ├── kube-controller-manager_v1.24.3
│   │               ├── kube-proxy_v1.24.3
│   │               ├── kube-scheduler_v1.24.3
│   │               ├── pause_3.7
│   │               └── storage-provisioner_v5
│   ├── kic
│   │   └── amd64    # the main (base) image
│   │       └── kicbase_v0.0.33@sha256_73b259e144d926189cf169ae5b46bbec4e08e4e2f2bd87296054c3244f70feb8.tar
│   └── linux
│       └── amd64
│           └── v1.24.3    # other Kubernetes binaries; kubectl was installed via yum above, but this copy can be used directly
│               ├── kubeadm
│               ├── kubectl
│               └── kubelet
├── ca.crt
├── ca.key
├── ca.pem
├── cert.pem
├── certs
│   ├── ca-key.pem
│   ├── ca.pem
│   ├── cert.pem
│   └── key.pem
├── config
├── files
├── key.pem
├── logs
│   ├── audit.json
│   └── lastStart.txt
├── machine_client.lock
├── machines
│   ├── minikube
│   │   ├── config.json
│   │   ├── id_rsa
│   │   └── id_rsa.pub
│   ├── server-key.pem
│   └── server.pem
├── profiles
│   └── minikube    # holds the authentication certificates
│       ├── apiserver.crt
│       ├── apiserver.crt.dd3b5fb2
│       ├── apiserver.key
│       ├── apiserver.key.dd3b5fb2
│       ├── client.crt
│       ├── client.key
│       ├── config.json
│       ├── events.json
│       ├── proxy-client.crt
│       └── proxy-client.key
├── proxy-client-ca.crt
└── proxy-client-ca.key

19 directories, 41 files

The configuration file that kubectl uses to access the cluster is stored under ~/.kube:

$ cat ~/.kube/config

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /home/vagrant/.minikube/ca.crt
    extensions:
    - extension:
        last-update: Tue, 30 Aug 2022 10:39:36 CST
        provider: minikube.sigs.k8s.io
        version: v1.26.1
      name: cluster_info
    server: https://192.168.49.2:8443    # the cluster's API server address
  name: minikube
contexts:
- context:
    cluster: minikube
    extensions:
    - extension:
        last-update: Tue, 30 Aug 2022 10:39:36 CST
        provider: minikube.sigs.k8s.io
        version: v1.26.1
      name: context_info
    namespace: default
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:    # certificate files used when accessing the cluster
    client-certificate: /home/vagrant/.minikube/profiles/minikube/client.crt
    client-key: /home/vagrant/.minikube/profiles/minikube/client.key

This file will be used later in kubebuilder or operator development.
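When checking which cluster a tool is about to talk to, it helps to pull the API server address out of this file. The sketch below does that with awk; the helper name kubeconfig_server is an assumption, and it simply returns the first server: entry rather than resolving the current context. On a machine with kubectl installed, kubectl config view --minify should give the context-aware answer.

```shell
# Hypothetical helper: print the first "server:" value found in a kubeconfig file.
# $1 = path to the kubeconfig (defaults to ~/.kube/config).
kubeconfig_server() {
    awk '$1 == "server:" { print $2; exit }' "${1:-$HOME/.kube/config}"
}

# Usage:
#   kubeconfig_server                  # reads ~/.kube/config
#   kubeconfig_server /path/to/config  # reads another kubeconfig
```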

Start a proxy to access the Kubernetes API

# Make the proxy to the API server accept requests from all hosts:

$ kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'

Starting to serve on [::]:8001

Browse the API:

$ curl -X GET -L http://127.0.0.1:8001

{

"paths": [

"/.well-known/openid-configuration",

"/api",

"/api/v1",

"/apis",

"/apis/",

"/apis/admissionregistration.k8s.io",

"/apis/admissionregistration.k8s.io/v1",

"/apis/apiextensions.k8s.io",

"/apis/apiextensions.k8s.io/v1",

"/apis/apiregistration.k8s.io",

"/apis/apiregistration.k8s.io/v1",

"/apis/apps",

"/apis/apps/v1",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/autoscaling/v2",

"/apis/autoscaling/v2beta1",

"/apis/autoscaling/v2beta2",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v1beta1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1",

"/apis/coordination.k8s.io",

"/apis/coordination.k8s.io/v1",

"/apis/discovery.k8s.io",

"/apis/discovery.k8s.io/v1",

"/apis/discovery.k8s.io/v1beta1",

"/apis/events.k8s.io",

"/apis/events.k8s.io/v1",

"/apis/events.k8s.io/v1beta1",

"/apis/flowcontrol.apiserver.k8s.io",

"/apis/flowcontrol.apiserver.k8s.io/v1beta1",

"/apis/flowcontrol.apiserver.k8s.io/v1beta2",

"/apis/myapp.demo.kubebuilder.io",

"/apis/myapp.demo.kubebuilder.io/v1",

"/apis/networking.k8s.io",

"/apis/networking.k8s.io/v1",

"/apis/node.k8s.io",

"/apis/node.k8s.io/v1",

"/apis/node.k8s.io/v1beta1",

"/apis/policy",

"/apis/policy/v1",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1",

"/apis/scheduling.k8s.io",

"/apis/scheduling.k8s.io/v1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/autoregister-completion",

"/healthz/etcd",

"/healthz/log",

"/healthz/ping",

"/healthz/poststarthook/aggregator-reload-proxy-client-cert",

"/healthz/poststarthook/apiservice-openapi-controller",

"/healthz/poststarthook/apiservice-openapiv3-controller",

"/healthz/poststarthook/apiservice-registration-controller",

"/healthz/poststarthook/apiservice-status-available-controller",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/crd-informer-synced",

"/healthz/poststarthook/generic-apiserver-start-informers",

"/healthz/poststarthook/kube-apiserver-autoregistration",

"/healthz/poststarthook/priority-and-fairness-config-consumer",

"/healthz/poststarthook/priority-and-fairness-config-producer",

"/healthz/poststarthook/priority-and-fairness-filter",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/healthz/poststarthook/scheduling/bootstrap-system-priority-classes",

"/healthz/poststarthook/start-apiextensions-controllers",

"/healthz/poststarthook/start-apiextensions-informers",

"/healthz/poststarthook/start-cluster-authentication-info-controller",

"/healthz/poststarthook/start-kube-aggregator-informers",

"/healthz/poststarthook/start-kube-apiserver-admission-initializer",

"/livez",

"/livez/autoregister-completion",

"/livez/etcd",

"/livez/log",

"/livez/ping",

"/livez/poststarthook/aggregator-reload-proxy-client-cert",

"/livez/poststarthook/apiservice-openapi-controller",

"/livez/poststarthook/apiservice-openapiv3-controller",

"/livez/poststarthook/apiservice-registration-controller",

"/livez/poststarthook/apiservice-status-available-controller",

"/livez/poststarthook/bootstrap-controller",

"/livez/poststarthook/crd-informer-synced",

"/livez/poststarthook/generic-apiserver-start-informers",

"/livez/poststarthook/kube-apiserver-autoregistration",

"/livez/poststarthook/priority-and-fairness-config-consumer",

"/livez/poststarthook/priority-and-fairness-config-producer",

"/livez/poststarthook/priority-and-fairness-filter",

"/livez/poststarthook/rbac/bootstrap-roles",

"/livez/poststarthook/scheduling/bootstrap-system-priority-classes",

"/livez/poststarthook/start-apiextensions-controllers",

"/livez/poststarthook/start-apiextensions-informers",

"/livez/poststarthook/start-cluster-authentication-info-controller",

"/livez/poststarthook/start-kube-aggregator-informers",

"/livez/poststarthook/start-kube-apiserver-admission-initializer",

"/logs",

"/metrics",

"/openapi/v2",

"/openapi/v3",

"/openapi/v3/",

"/openid/v1/jwks",

"/readyz",

"/readyz/autoregister-completion",

"/readyz/etcd",

"/readyz/informer-sync",

"/readyz/log",

"/readyz/ping",

"/readyz/poststarthook/aggregator-reload-proxy-client-cert",

"/readyz/poststarthook/apiservice-openapi-controller",

"/readyz/poststarthook/apiservice-openapiv3-controller",

"/readyz/poststarthook/apiservice-registration-controller",

"/readyz/poststarthook/apiservice-status-available-controller",

"/readyz/poststarthook/bootstrap-controller",

"/readyz/poststarthook/crd-informer-synced",

"/readyz/poststarthook/generic-apiserver-start-informers",

"/readyz/poststarthook/kube-apiserver-autoregistration",

"/readyz/poststarthook/priority-and-fairness-config-consumer",

"/readyz/poststarthook/priority-and-fairness-config-producer",

"/readyz/poststarthook/priority-and-fairness-filter",

"/readyz/poststarthook/rbac/bootstrap-roles",

"/readyz/poststarthook/scheduling/bootstrap-system-priority-classes",

"/readyz/poststarthook/start-apiextensions-controllers",

"/readyz/poststarthook/start-apiextensions-informers",

"/readyz/poststarthook/start-cluster-authentication-info-controller",

"/readyz/poststarthook/start-kube-aggregator-informers",

"/readyz/poststarthook/start-kube-apiserver-admission-initializer",

"/readyz/shutdown",

"/version"

]

}

List pods:

$ curl -X GET -L http://127.0.0.1:8001/api/v1/pods

List deployments:

$ curl -X GET -L http://127.0.0.1:8001/apis/apps/v1/deployments

Common problems during installation

The start fails during the [kubelet-check] phase because pulling the "k8s.gcr.io/pause:3.6" image times out:

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///var/run/cri-dockerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///var/run/cri-dockerd.sock logs CONTAINERID'

stderr:

W0829 02:16:52.980341 1499 initconfiguration.go:120] Usage of CRI endpoints without URL scheme is deprecated and can cause kubelet errors in the future. Automatically prepending scheme "unix" to the "criSocket" with value "/var/run/cri-dockerd.sock". Please update your configuration!

[WARNING FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

▪ Generating certificates and keys ...

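▪ Booting up control plane ...

Note: because minikube runs as a container on the host, the diagnostic commands above must be run inside that container:

systemctl status kubelet

journalctl -xeu kubelet

The installation log can also be viewed on the host with minikube logs: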

Aug 29 02:20:24 minikube kubelet[1610]: E0829 02:20:24.539096 1610 kuberuntime_sandbox.go:70] "Failed to create sandbox for pod" err="rpc error: code = Unknown desc = failed pulling image \"k8s.gcr.io/pause:3.6\": Error response from daemon: Get \"https://k8s.gcr.io/v2/\": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" pod="kube-system/kube-controller-manager-minikube"

Pulling "k8s.gcr.io/pause:3.6" timed out here, so the image has to be pulled manually. Note that it must be pulled inside the minikube container.

List the Docker containers on the host (alternatively, minikube ssh goes straight into the node):

[root@VM-4-9-centos ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0dd5203d291e registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.33 "/usr/local/bin/entr…" 10 minutes ago Up 10 minutes 127.0.0.1:49157->22/tcp, 127.0.0.1:49156->2376/tcp, 127.0.0.1:49155->5000/tcp, 127.0.0.1:49154->8443/tcp, 127.0.0.1:49153->32443/tcp minikube

Enter the container:

[root@VM-4-9-centos ~]# docker exec -it minikube bash

root@minikube:/# cat /etc/*release*

DISTRIB_ID=Ubuntu

DISTRIB_RELEASE=20.04

DISTRIB_CODENAME=focal

DISTRIB_DESCRIPTION="Ubuntu 20.04.4 LTS"

NAME="Ubuntu"

VERSION="20.04.4 LTS (Focal Fossa)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 20.04.4 LTS"

VERSION_ID="20.04"

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

VERSION_CODENAME=focal

UBUNTU_CODENAME=focal

root@minikube:/#

The container is based on Ubuntu.

List the images already present inside the container:

root@minikube:/# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.24.3 d521dd763e2e 6 weeks ago 130MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.24.3 3a5aa3a515f5 6 weeks ago 51MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.24.3 586c112956df 6 weeks ago 119MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.24.3 2ae1ba6417cb 6 weeks ago 110MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.5.3-0 aebe758cef4c 4 months ago 299MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.7 221177c6082a 5 months ago 711kB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7 10 months ago 46.8MB

registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner v5 6e38f40d628d 17 months ago 31.5MB

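root@minikube:/#

A pause image is already present, but it is version 3.7, whereas the startup asks for 3.6. This may be a minor bug in this version of Kubernetes.

So pull version 3.6 manually. Note that the pull must happen inside the container, not on the host: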

$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

3.6: Pulling from google_containers/pause

fbe1a72f5dcd: Pull complete

Digest: sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

Retag it as k8s.gcr.io/pause:3.6:

# Retag the image

root@minikube:/# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6

# Remove the registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 tag

root@minikube:/# docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause@sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db

# Check the retagged k8s.gcr.io/pause image

root@minikube:/# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.24.3 d521dd763e2e 6 weeks ago 130MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.24.3 2ae1ba6417cb 6 weeks ago 110MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.24.3 586c112956df 6 weeks ago 119MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.24.3 3a5aa3a515f5 6 weeks ago 51MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.5.3-0 aebe758cef4c 4 months ago 299MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.7 221177c6082a 5 months ago 711kB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7 10 months ago 46.8MB

k8s.gcr.io/pause 3.6 6270bb605e12 12 months ago 683kB

registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner v5 6e38f40d628d 17 months ago 31.5MB

root@minikube:/#

Running minikube start again on the host now succeeds.
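The manual fix boils down to two steps: pull the image from the Aliyun mirror, then tag it with the name the kubelet asks for. A minimal sketch that derives both commands from the wanted image name; the helper names mirror_image and retag_commands and the MIRROR variable are assumptions made for illustration. The commands are only printed, so they can be reviewed before being run inside the minikube container.

```shell
# Mirror repository used throughout this post.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Hypothetical helper: map an image such as k8s.gcr.io/pause:3.6 to its name on the mirror.
mirror_image() {
    printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# Hypothetical helper: print the docker commands that pull from the mirror and retag.
retag_commands() {
    src="$(mirror_image "$1")"
    printf 'docker pull %s\n' "$src"
    printf 'docker tag %s %s\n' "$src" "$1"
}

retag_commands k8s.gcr.io/pause:3.6

# The printed commands must run inside the minikube container, not on the host,
# for example from the shell opened with: docker exec -it minikube bash
```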
