HBase Installation

1. Download and extract the JDK on CentOS

The key is to pass --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie", which accepts Oracle's license agreement so that wget can download the archive.

wget --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" https://download.oracle.com/otn-pub/java/jdk/12.0.1+12/69cfe15208a647278a19ef0990eea691/jdk-12.0.1_linux-x64_bin.tar.gz

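2. Set the environment variables

(1) Extract the JDK into /root/jdk (the JAVA_HOME below assumes this location):

mkdir -p /root/jdk && tar -xzf jdk-12.0.1_linux-x64_bin.tar.gz -C /root/jdk

(2) vi /etc/profile and add:

JAVA_HOME=/root/jdk/jdk-12.0.1
CLASSPATH=.:$JAVA_HOME/lib
PATH=$PATH:$JAVA_HOME/bin
export JAVA_HOME CLASSPATH PATH

(3) source /etc/profile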
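To make step 2 repeatable, the edit to /etc/profile can be scripted. The following is a minimal bash sketch; the function name add_java_env is hypothetical, and it assumes that an existing JAVA_HOME= line means the profile has already been configured.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the JDK variables from step 2 to a profile file.
# Usage: add_java_env PROFILE_FILE JDK_DIR
add_java_env() {
  local profile="$1" jdk_dir="$2"
  # Assumption: an existing JAVA_HOME= line means the file is already configured
  if grep -q '^JAVA_HOME=' "$profile" 2>/dev/null; then
    echo "JAVA_HOME already set in $profile, leaving it unchanged" >&2
    return 0
  fi
  {
    echo "JAVA_HOME=$jdk_dir"
    # Quoted delimiter: $JAVA_HOME and $PATH are written literally and are
    # expanded later, when the profile is sourced
    cat <<'EOF'
CLASSPATH=.:$JAVA_HOME/lib
PATH=$PATH:$JAVA_HOME/bin
export JAVA_HOME CLASSPATH PATH
EOF
  } >> "$profile"
}
```

Usage: `add_java_env /etc/profile /root/jdk/jdk-12.0.1 && source /etc/profile && java -version`.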

3. Download and extract ZooKeeper

wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz

mkdir -p /root/zookeeper && tar -xzf zookeeper-3.4.14.tar.gz -C /root/zookeeper

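4. Edit the ZooKeeper configuration

The configuration file is /root/zookeeper/zookeeper-3.4.14/conf/zoo.cfg. The release ships only conf/zoo_sample.cfg, so copy it to zoo.cfg first, then change dataDir and add dataLogDir: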

dataDir=/root/zookeeper/zkdata

dataLogDir=/root/zookeeper/zkdatalog
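Step 4 can be scripted as well. A minimal bash sketch, assuming GNU sed (as on CentOS) and a hypothetical helper name configure_zk; it copies zoo_sample.cfg only when zoo.cfg does not exist yet, and creates both data directories:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create conf/zoo.cfg and set dataDir/dataLogDir.
# Usage: configure_zk ZK_HOME DATA_DIR DATA_LOG_DIR
configure_zk() {
  local zk_home="$1" data_dir="$2" log_dir="$3"
  local cfg="$zk_home/conf/zoo.cfg"
  # zkServer.sh reads conf/zoo.cfg; the release only ships zoo_sample.cfg
  [ -f "$cfg" ] || cp "$zk_home/conf/zoo_sample.cfg" "$cfg" || return 1
  # Drop any existing dataDir/dataLogDir lines (GNU sed -i), then append ours
  sed -i -e '/^dataDir=/d' -e '/^dataLogDir=/d' "$cfg"
  printf 'dataDir=%s\ndataLogDir=%s\n' "$data_dir" "$log_dir" >> "$cfg"
  mkdir -p "$data_dir" "$log_dir"
}
```

Usage: `configure_zk /root/zookeeper/zookeeper-3.4.14 /root/zookeeper/zkdata /root/zookeeper/zkdatalog`.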

5. Start ZooKeeper

cd /root/zookeeper/zookeeper-3.4.14/bin

./zkServer.sh start
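To check that ZooKeeper actually came up, `./zkServer.sh status` reports the server mode; a single server configured as above should report standalone. The helper below is a hypothetical sketch that extracts that `Mode:` line and fails when it is missing, for example when the server is not running.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the mode from `zkServer.sh status` output on stdin.
# Returns non-zero when no "Mode:" line is found.
zk_mode() {
  awk -F': *' '/^Mode:/ { print $2; found = 1 } END { exit (found ? 0 : 1) }'
}
```

Usage: `./zkServer.sh status 2>&1 | zk_mode`.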

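6. Download and extract Hadoop

wget http://mirrors.hust.edu.cn/apache/hadoop/common/hadoop-2.7.6/hadoop-2.7.6.tar.gz

mkdir -p /root/hadoop && tar -xzf hadoop-2.7.6.tar.gz -C /root/hadoop

For why this Hadoop version was chosen, see the prerequisites section of the HBase reference guide: https://hbase.apache.org/2.1/book.html#basic.prerequisites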

7. Edit the Hadoop configuration

The main change is setting JAVA_HOME in /root/hadoop/hadoop-2.7.6/etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/root/jdk/jdk-12.0.1
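The stock hadoop-env.sh contains `export JAVA_HOME=${JAVA_HOME}`. Setting the path explicitly is commonly recommended because the daemons are launched over SSH, where /etc/profile may not have been sourced. A hedged bash sketch of the edit, assuming GNU sed; set_hadoop_java_home is a hypothetical name, and the JDK path must not contain a `|` character because it is used as the sed delimiter:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set JAVA_HOME explicitly in hadoop-env.sh.
# Usage: set_hadoop_java_home HADOOP_ENV_SH JDK_DIR
set_hadoop_java_home() {
  local env_file="$1" jdk_dir="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Replace the existing line in place (GNU sed -i)
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk_dir|" "$env_file"
  else
    echo "export JAVA_HOME=$jdk_dir" >> "$env_file"
  fi
}
```

Usage: `set_hadoop_java_home /root/hadoop/hadoop-2.7.6/etc/hadoop/hadoop-env.sh /root/jdk/jdk-12.0.1`.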

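8. Test with a Hadoop MapReduce example

Run the following from /root/hadoop. Without further configuration, Hadoop runs in standalone (local) mode and reads and writes the local file system.

(1) mkdir input

(2) cp hadoop-2.7.6/etc/hadoop/*.xml input

(3) Run: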

hadoop-2.7.6/bin/hadoop jar hadoop-2.7.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar grep input output 'dfs[a-z.]+'
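If you rerun this example, the job fails because the output directory from the previous run already exists. Below is a hypothetical wrapper (run_grep_example is not part of Hadoop) that removes it first, runs the same examples jar and prints the result; it assumes it is called from /root/hadoop, where input and output live:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the grep example from step 8.
# Usage: run_grep_example HADOOP_DIR VERSION   (run from the directory holding input/)
run_grep_example() {
  local hadoop_dir="$1" version="$2"
  # MapReduce refuses to write into an existing output directory
  rm -rf output
  "$hadoop_dir/bin/hadoop" jar \
    "$hadoop_dir/share/hadoop/mapreduce/hadoop-mapreduce-examples-$version.jar" \
    grep input output 'dfs[a-z.]+' || return 1
  # Print the result files written by the job
  cat output/*
}
```

Usage: `cd /root/hadoop && run_grep_example hadoop-2.7.6 2.7.6`.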

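9. Install Hadoop in pseudo-distributed mode

HBase needs HDFS, so we install Hadoop in pseudo-distributed mode and start HDFS. In pseudo-distributed mode, the HDFS NameNode and DataNode and the YARN ResourceManager and NodeManager all run as separate Java processes on a single node.

Reference for pseudo-distributed mode: https://www.cnblogs.com/ee900222/p/hadoop_1.html

The commands in this section are run from the Hadoop installation directory, /root/hadoop/hadoop-2.7.6.

9.2.1 Edit the configuration files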

# vi etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

# vi etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
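Because each of these files holds a single property, they can also be generated non-interactively. A minimal sketch with a hypothetical write_site_xml helper; it overwrites the target file and inserts the value verbatim (no XML escaping), so it only suits the stock, otherwise empty configuration files:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml containing exactly one property.
# Usage: write_site_xml FILE NAME VALUE   (overwrites FILE)
write_site_xml() {
  local file="$1" name="$2" value="$3"
  # Unquoted delimiter so that $name and $value are expanded
  cat > "$file" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>$name</name>
    <value>$value</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_site_xml etc/hadoop/core-site.xml fs.defaultFS hdfs://localhost:9000` and `write_site_xml etc/hadoop/hdfs-site.xml dfs.replication 1`. The same call shape also fits mapred-site.xml and yarn-site.xml in 9.2.5.1.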

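9.2.2 Set up passwordless SSH login to localhost

start-dfs.sh and start-yarn.sh start the daemons over SSH, so the current user must be able to log in to localhost without a password:

# ssh-keygen -t rsa
# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# chmod 600 ~/.ssh/authorized_keys
# chmod 710 ~/.ssh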
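The same setup as a repeatable bash sketch. The function name is hypothetical; it assumes the default key path and an empty passphrase, so that the start scripts can log in without prompting. The optional directory argument exists only for testing, since sshd reads ~/.ssh by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: passwordless SSH to localhost, safe to run repeatedly.
# Usage: setup_local_ssh [SSH_DIR]   (defaults to ~/.ssh)
setup_local_ssh() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" || return 1
  chmod 710 "$ssh_dir"
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    # -N '' sets an empty passphrase; -q suppresses the progress output
    ssh-keygen -t rsa -N '' -f "$ssh_dir/id_rsa" -q || return 1
  fi
  # Append the public key only once, so re-running does not duplicate it
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys" 2>/dev/null; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

After running it, log in once with `ssh localhost` to accept the host key; otherwise the first start-dfs.sh run stops at the host key prompt.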

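9.2.3 Run Hadoop jobs

Below, the same example job is run twice: first with the default local MapReduce job runner, reading from and writing to HDFS (9.2.4), then on YARN (9.2.5).

9.2.4 Run a MapReduce job

9.2.4.1 Format the file system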

# hdfs namenode -format

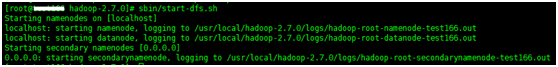

9.2.4.2 启动名称节点和数据节点后台进程

# sbin/start-dfs.sh

在localhost启动一个1个NameNode和1个DataNode,在0.0.0.0启动第二个NameNode

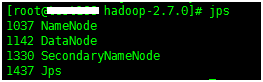

9.2.4.3 确认

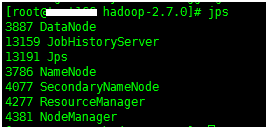

# jps

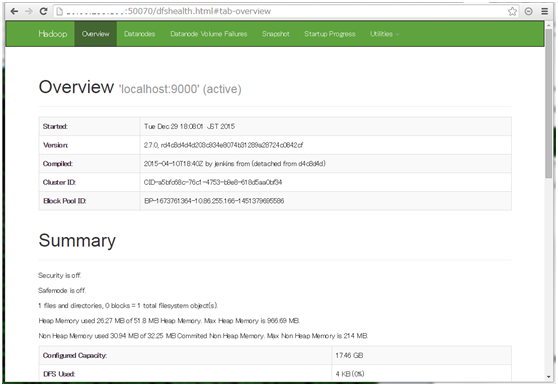
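Instead of reading the jps output by eye, the check can be scripted. A hypothetical sketch that compares the class-name column exactly, so that a running SecondaryNameNode is not mistaken for the NameNode:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that every named daemon appears in jps output (stdin).
# Usage: jps | check_daemons NAME...
check_daemons() {
  local running missing=0 d
  # jps prints "<pid> <MainClass>"; keep only the class names
  running=$(awk '{ print $2 }')
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "not running: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `jps | check_daemons NameNode DataNode SecondaryNameNode`; after starting YARN in 9.2.5.2, `jps | check_daemons ResourceManager NodeManager`.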

9.2.4.4 Open the NameNode web UI

http://localhost:50070/

9.2.4.5 Create directories in HDFS

# hdfs dfs -mkdir /user
# hdfs dfs -mkdir /user/test

9.2.4.6 Copy the input files into HDFS

# hdfs dfs -put etc/hadoop /user/test/input

Verify:

# hadoop fs -ls /user/test/input

9.2.4.7 Run the Hadoop job

# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar grep /user/test/input output 'dfs[a-z.]+'

9.2.4.8 确认执行结果

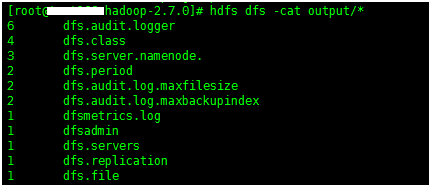

# hdfs dfs -cat output/*

或者从HDFS拷贝到本地查看

# bin/hdfs dfs -get output output # cat output/*

9.2.4.9 停止daemon

# sbin/stop-dfs.sh

9.2.5 执行YARN job

MapReduce V2框架叫YARN

9.2.5.1 Edit the configuration files

# cp etc/hadoop/mapred-site.xml.template etc/hadoop/mapred-site.xml

# vi etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

# vi etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

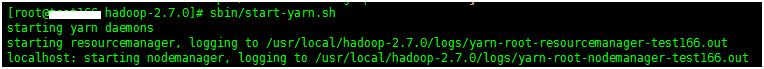

9.2.5.2 启动ResourceManger和NodeManager后台进程

# sbin/start-yarn.sh

9.2.5.3 确认

# jps

9.2.5.4 访问ResourceManger的web页面

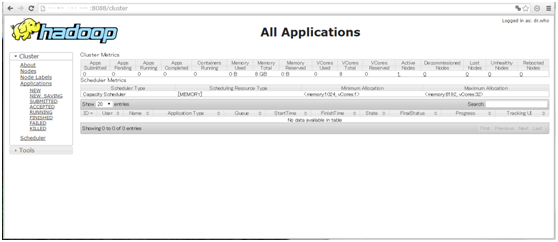

http://localhost:8088/

9.2.5.5 执行hadoop job

# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar grep /user/test/input output 'dfs[a-z.]+'

9.2.5.6 Check the results

# hdfs dfs -cat output/*

The result is the same as for the MapReduce job.

9.2.5.7 Stop the daemons

# sbin/stop-yarn.sh

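9.2.5.8 Issues

1. In the single-node test with the same input, the YARN job seemed much slower than the MapReduce job. The logs showed frequent GC on the DataNode, possibly because the test VM had a low-spec configuration.

2. The following warning appears because the job history server is not running: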

java.io.IOException: java.net.ConnectException: Call From test166/10.86.255.166 to 0.0.0.0:10020 failed on connection exception: java.net.ConnectException: Connection refused;

Start the JobHistory daemon:

# sbin/mr-jobhistory-daemon.sh start historyserver

Verify:

# jps

Job History Server web UI:

http://localhost:19888/

3. The following warning appeared along with errors in the DataNode log; it went away after restarting the services:

java.io.IOException: java.io.IOException: Unknown Job job_1451384977088_0005

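9.3 Start/stop

Alternatively, the commands below start or stop everything at once and are equivalent to start/stop-dfs.sh plus start/stop-yarn.sh (in Hadoop 2 these scripts are deprecated and print a warning):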

# sbin/start-all.sh

# sbin/stop-all.sh

9.4 Logs

Logs are written to the logs directory under the Hadoop installation directory.
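When investigating problems such as the DataNode errors above, you usually want the newest log of one daemon. Hadoop 2.x names these files `<prefix>-<user>-<daemon>-<hostname>.log`, for example hadoop-root-datanode-<hostname>.log or yarn-root-nodemanager-<hostname>.log. The helper below is a hypothetical sketch that relies on that naming convention:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest .log file for one daemon.
# Usage: latest_daemon_log LOG_DIR DAEMON   (e.g. datanode, namenode, resourcemanager)
latest_daemon_log() {
  local log_dir="$1" daemon="$2"
  # -t sorts by modification time, newest first; .out files are ignored
  ls -t "$log_dir"/*-"$daemon"-*.log 2>/dev/null | head -n 1
}
```

Usage, from the Hadoop installation directory: `tail -n 200 "$(latest_daemon_log logs datanode)"`.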

10. Download HBase

wget http://mirrors.hust.edu.cn/apache/hbase/2.1.3/hbase-2.1.3-bin.tar.gz

11. Install HBase

See the article "HBase Pseudo-Distributed Installation".