LSTM (Long Short-Term Memory) networks

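# Imports used throughout this example
import numpy as np
import torch
from torch import nn
import matplotlib.pyplot as plt

# Define LSTM
class Lstm(nn.Module):
    def __init__(self, input_size, hidden_size=2, output_size=1, num_layers=1):
        super().__init__()
        # Recurrent layer; expects input of shape (seq_len, batch, input_size)
        self.layer1 = nn.LSTM(input_size, hidden_size, num_layers)
        # Linear readout from the hidden state to the output
        self.layer2 = nn.Linear(hidden_size, output_size)

    def forward(self, _x):
        x, _ = self.layer1(_x)  # x: (seq_len, batch, hidden_size)
        s, b, h = x.shape
        x = x.view(s*b, h)      # flatten time and batch for the linear layer
        x = self.layer2(x)
        x = x.view(s, b, -1)    # restore (seq_len, batch, output_size)
        return x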

# Generate data
n = 100
t = np.linspace(0, 10.0*np.pi, n)
X = np.sin(t)
X = X.astype('float32')

# Set window of past points for LSTM model
input_N = 5
output_N = 1
batch = 5

# Split into train/test data
last = int(n/2.5)          # hold out the last 40 points for testing
Xtrain = X[:-last]
Xtest = X[-last-input_N:]  # keep input_N extra points so the first test window is complete

# Store each window of input_N past points as one input sequence,
# with the point that follows it as the target
xin = []
next_X = []
for i in range(input_N, len(Xtrain)):
    xin.append(Xtrain[i-input_N:i])
    next_X.append(Xtrain[i])
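The windowing loop above can also be written without an explicit Python loop. The following is a minimal sketch, assuming NumPy 1.20 or later for `sliding_window_view`; the helper name `make_windows` is hypothetical and not part of the original script.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_windows(series, window):
    """Split a 1-D series into (inputs, targets) pairs.

    Each input row holds `window` consecutive points and the matching
    target is the point that immediately follows them.
    (Hypothetical helper, equivalent to the loop over Xtrain.)
    """
    # Each row holds window + 1 consecutive points: inputs plus the target.
    rows = sliding_window_view(series, window + 1)
    return rows[:, :-1].copy(), rows[:, -1].copy()

# Small stand-in series so the result is easy to inspect by hand.
series = np.arange(8, dtype='float32')
inputs, targets = make_windows(series, 3)
print(inputs)
print(targets)
```

For the script's data, `make_windows(Xtrain, input_N)` should give the same arrays as `np.array(xin)` and `np.array(next_X)`.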

# Reshape data to format for LSTM
xin, next_X = np.array(xin), np.array(next_X)
xin = xin.reshape(-1, 1, input_N)
train_x = torch.from_numpy(xin)
train_y = torch.from_numpy(next_X)
# nn.LSTM defaults to batch_first=False, so dimension 1 is the batch dimension
train_x_tensor = train_x.reshape(-1, batch, input_N)   # set batch size to 5
train_y_tensor = train_y.reshape(-1, batch, output_N)  # set batch size to 5
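The reshapes above only succeed because the number of windows happens to be a multiple of `batch`. The sketch below works through that arithmetic in plain Python using the constants from the script; the helper `lstm_shape` is a hypothetical name introduced for illustration. Because `nn.LSTM` defaults to `batch_first=False`, it reads the resulting shape as (sequence length, batch, input size).

```python
# Values copied from the script above.
n, input_N, output_N, batch = 100, 5, 1, 5

last = int(n / 2.5)                          # points held out for testing
n_train = n - last                           # points left for training
n_train_windows = n_train - input_N          # one window per predictable point
n_test_windows = (last + input_N) - input_N  # Xtest carries input_N extra points

def lstm_shape(n_windows, batch, features):
    """Shape produced by reshape(-1, batch, features).

    Raises ValueError when the windows cannot be split evenly,
    which is the case where the reshape itself would fail.
    (Hypothetical helper for illustration.)
    """
    if n_windows % batch != 0:
        raise ValueError(f"{n_windows} windows cannot be split into groups of {batch}")
    return (n_windows // batch, batch, features)

train_x_shape = lstm_shape(n_train_windows, batch, input_N)
train_y_shape = lstm_shape(n_train_windows, batch, output_N)
test_x_shape = lstm_shape(n_test_windows, batch, input_N)
print(train_x_shape, train_y_shape, test_x_shape)
```

If `n`, `input_N`, or `batch` is changed so that the window count is no longer divisible by `batch`, one option is to drop the leftover windows before reshaping.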

model = Lstm(input_N, 5, output_N, 1)  # 5 hidden units, 1 layer
loss_function = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

max_epochs = 1000
for epoch in range(max_epochs):
    output = model(train_x_tensor)
    loss = loss_function(output, train_y_tensor)

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    if loss.item() < 1e-4:
        # Stop early once the training loss is small enough
        print('Epoch [{}/{}], Loss: {:.5f}'.format(epoch+1, max_epochs, loss.item()))
        print("Target loss reached")
        break
    elif (epoch+1) % 100 == 0:
        print('Epoch [{}/{}], Loss: {:.5f}'.format(epoch+1, max_epochs, loss.item()))

# Prediction on training dataset
with torch.no_grad():  # no gradients needed for inference
    predictive_y_for_training = model(train_x_tensor)
predictive_y_for_training = predictive_y_for_training.view(-1, 1).numpy()

# ----------------- plot -------------------

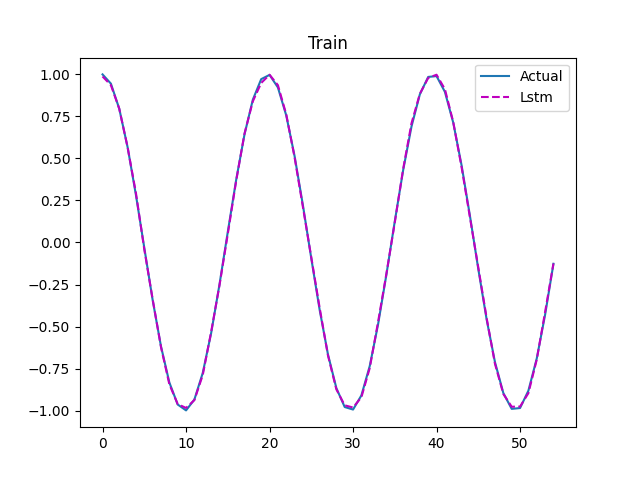

plt.figure()

plt.plot(train_y, label='Actual')

plt.plot(predictive_y_for_training, 'm--', label='Lstm')

plt.title('Train')

plt.legend(loc=1)

# Build test windows from Xtest, the same way as the training windows
x_test_in = []
test_X = []
for i in range(input_N, len(Xtest)):
    x_test_in.append(Xtest[i-input_N:i])
    test_X.append(Xtest[i])

x_test_in = np.array(x_test_in)
test_x_tensor = x_test_in.reshape(-1, batch, input_N)  # set batch size to 5
test_x_tensor = torch.from_numpy(test_x_tensor)

with torch.no_grad():  # no gradients needed for inference
    predictive_y_for_testing = model(test_x_tensor)
predictive_y_for_testing = predictive_y_for_testing.view(-1, 1).numpy()

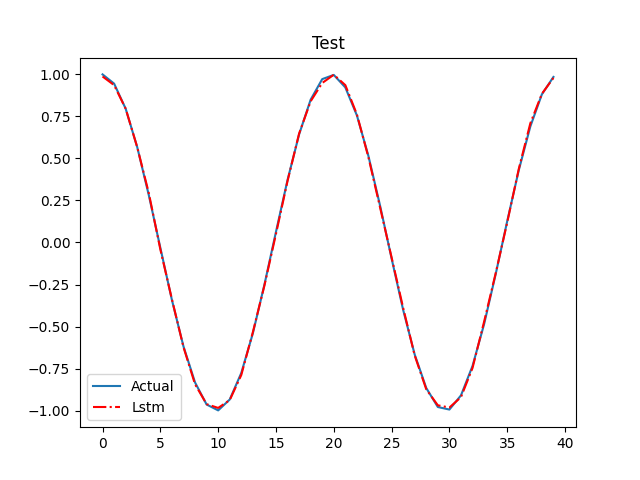

plt.figure()

plt.plot(test_X,label = 'Actual')

plt.plot(predictive_y_for_testing,'r-.',label='Lstm')

plt.title('Test')

plt.legend(loc=0)

plt.show()
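The plots give a visual comparison only. A single error number can complement them. The following is a minimal sketch, assuming mean squared error is the metric of interest (it matches the `nn.MSELoss` used for training); the helper name `mean_squared_error` and the demo arrays are hypothetical stand-ins, not results from the model.

```python
import numpy as np

def mean_squared_error(predicted, actual):
    """Mean squared error between two arrays after flattening both."""
    predicted = np.asarray(predicted, dtype='float64').ravel()
    actual = np.asarray(actual, dtype='float64').ravel()
    if predicted.shape != actual.shape:
        raise ValueError("predicted and actual must have the same number of points")
    return float(np.mean((predicted - actual) ** 2))

# Stand-in values for illustration only. In the script above you would pass
# predictive_y_for_testing and test_X instead.
demo_pred = np.array([[0.0], [0.5], [1.0]])  # column vector, like view(-1, 1)
demo_true = [0.0, 1.0, 1.0]                  # plain list, like test_X
demo_mse = mean_squared_error(demo_pred, demo_true)
print(demo_mse)
```

Flattening both inputs first avoids accidental broadcasting between a column of predictions and a flat list of targets.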
